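Preface: The last time I read a paper by Kaiming He was two years ago, when the design of ResNet completely won me over. Two years on, the field has moved so fast that I have been left far behind.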

Momentum Contrast for Unsupervised Visual Representation Learning

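Abstract

We propose MoCo, a method for unsupervised visual representation learning. Viewing contrastive learning as dictionary look-up, we build a dynamic dictionary with a queue and a moving-averaged encoder. This makes it possible to build a large and consistent dictionary on the fly, which helps contrastive unsupervised learning. Under the common linear protocol (a linear classification head), MoCo gives competitive classification results on ImageNet, and the learned features transfer well to downstream tasks: on 7 detection/segmentation tasks MoCo outperforms its supervised pre-training counterpart, sometimes by a large margin. This suggests that the gap between unsupervised and supervised representation learning is closing in many vision tasks.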

We present Momentum Contrast (MoCo) for unsupervised visual representation learning. From a perspective on contrastive learning as dictionary look-up, we build a dynamic dictionary with a queue and a moving-averaged encoder. This enables building a large and consistent dictionary on-the-fly that facilitates contrastive unsupervised learning. MoCo provides competitive results under the common linear protocol on ImageNet classification. More importantly, the representations learned by MoCo transfer well to downstream tasks. MoCo can outperform its supervised pre-training counterpart in 7 detection/segmentation tasks on PASCAL VOC, COCO, and other datasets, sometimes surpassing it by large margins. This suggests that the gap between unsupervised and supervised representation learning has been largely closed in many vision tasks.

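Introduction

Unsupervised learning has been very successful in NLP, but in vision supervised pre-training is still the mainstream and unsupervised methods lag behind.

The main reason is probably the difference in signal spaces. Language tasks have a discrete signal space made up of words, so tokenized dictionaries can be built and unsupervised learning can be based on them. In vision, the dictionary itself still has to be built, because the raw signal lives in a continuous, high-dimensional space and is not structured the way human language is.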

Unsupervised representation learning is highly successful in natural language processing, e.g., as shown by GPT and BERT [12]. But supervised pre-training is still dominant in computer vision, where unsupervised methods generally lag behind. The reason may stem from differences in their respective signal spaces. Language tasks have discrete signal spaces (words, sub-word units, etc.) for building tokenized dictionaries, on which unsupervised learning can be based. Computer vision, in contrast, further concerns dictionary building [54, 9, 5], as the raw signal is in a continuous, high-dimensional space and is not structured for human communication (e.g., unlike words).

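Several studies have obtained promising results on unsupervised learning with contrastive methods. Whatever their motivation, these methods can all be seen as building dynamic dictionaries. The keys in the dictionary are sampled from the data and represented by an encoder network. Unsupervised learning trains the encoder to perform dictionary look-up: an encoded query should be similar to its matching key and dissimilar to the others. The learning is formulated as minimizing a contrastive loss.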

Several recent studies [61, 46, 36, 66, 35, 56, 2] present promising results on unsupervised visual representation learning using approaches related to the contrastive loss [29]. Though driven by various motivations, these methods can be thought of as building dynamic dictionaries. The “keys” (tokens) in the dictionary are sampled from data (e.g., images or patches) and are represented by an encoder network. Unsupervised learning trains encoders to perform dictionary look-up: an encoded “query” should be similar to its matching key and dissimilar to others. Learning is formulated as minimizing a contrastive loss [29].

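From this angle, we hypothesize that a good dictionary should be large and consistent. Intuitively, a larger dictionary samples the continuous, high-dimensional visual space better, and the keys in the dictionary should be encoded by the same or a similar encoder so that their comparisons with the query are consistent.

However, existing methods are limited in one of these two aspects.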

From this perspective, we hypothesize that it is desirable to build dictionaries that are: (i) large and (ii) consistent as they evolve during training. Intuitively, a larger dictionary may better sample the underlying continuous, high dimensional visual space, while the keys in the dictionary should be represented by the same or similar encoder so that their comparisons to the query are consistent. However, existing methods that use contrastive losses can be limited in one of these two aspects (discussed later in context).

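We propose MoCo (Momentum Contrast), shown in Figure 1. We keep the dictionary samples in a queue: the encoded keys of the newest mini-batch are enqueued and the oldest keys are dequeued. The queue decouples the dictionary size from the batch size, so the dictionary can be much larger than the batch size that fits on the machine.

In addition, the keys in the dictionary come from the preceding several mini-batches, so the key encoder is made to change slowly, as a momentum-based moving average of the query encoder. This keeps the whole queue consistent.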

We present Momentum Contrast (MoCo) as a way of building large and consistent dictionaries for unsupervised learning with a contrastive loss (Figure 1). We maintain the dictionary as a queue of data samples: the encoded representations of the current mini-batch are enqueued, and the oldest are dequeued. The queue decouples the dictionary size from the mini-batch size, allowing it to be large. Moreover, as the dictionary keys come from the preceding several mini-batches, a slowly progressing key encoder, implemented as a momentum-based moving average of the query encoder, is proposed to maintain consistency.

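MoCo is a mechanism for building dynamic dictionaries for contrastive learning, and it can be used with various pretext tasks. In this paper we follow a simple instance discrimination task: a query matches a key if they are encoded views of the same image. With this pretext task, MoCo shows very competitive results.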

MoCo is a mechanism for building dynamic dictionaries for contrastive learning, and can be used with various pretext tasks. In this paper, we follow a simple instance discrimination task [61, 63, 2]: a query matches a key if they are encoded views (e.g., different crops) of the same image. Using this pretext task, MoCo shows competitive results under the common protocol of linear classification in the ImageNet dataset [11].

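A main purpose of unsupervised learning is to pre-train representations for downstream tasks. On 7 downstream detection and segmentation tasks, MoCo unsupervised pre-training beats its ImageNet supervised counterpart, in some cases by a clear margin. The experiments also pre-train MoCo on a one-billion-image Instagram set, showing that it works well in a more real-world, billion-image, relatively uncurated setting. These results show that, in several vision applications, unsupervised pre-training can serve as an alternative to ImageNet supervised pre-training.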

A main purpose of unsupervised learning is to pre-train representations (i.e., features) that can be transferred to downstream tasks by fine-tuning. We show that in 7 downstream tasks related to detection or segmentation, MoCo unsupervised pre-training can surpass its ImageNet supervised counterpart, in some cases by nontrivial margins. In these experiments, we explore MoCo pre-trained on ImageNet or on a one-billion Instagram image set, demonstrating that MoCo can work well in a more real-world, billion image scale, and relatively uncurated scenario. These results show that MoCo largely closes the gap between unsupervised and supervised representation learning in many computer vision tasks, and can serve as an alternative to ImageNet supervised pre-training in several applications.

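Related Work

Unsupervised/self-supervised learning involves two aspects: pretext tasks and loss functions. "Pretext" means that solving the task is not the real goal; what we actually want is the good data representation learned while solving it.

Loss functions can often be studied independently of the pretext task. MoCo focuses on the loss function aspect. Related work is discussed below along these two aspects.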

Unsupervised/self-supervised learning methods generally involve two aspects: pretext tasks and loss functions. The term “pretext” implies that the task being solved is not of genuine interest, but is solved only for the true purpose of learning a good data representation. Loss functions can often be investigated independently of pretext tasks. MoCo focuses on the loss function aspect. Next we discuss related studies with respect to these two aspects.

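Loss functions

A common loss measures the difference between a prediction and a fixed target, for example L1 or L2 losses for reconstructing the input, or cross-entropy or margin-based losses for classifying the input into pre-defined categories. Other alternatives, discussed next, are also possible.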

Loss functions. A common way of defining a loss function is to measure the difference between a model’s prediction and a fixed target, such as reconstructing the input pixels (e.g., auto-encoders) by L1 or L2 losses, or classifying the input into pre-defined categories (e.g., eight positions [13], color bins [64]) by cross-entropy or margin-based losses. Other alternatives, as described next, are also possible.

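Contrastive losses measure the similarity of sample pairs in a representation space. Instead of matching the input to a fixed target, the target in a contrastive loss can vary on the fly during training and is defined by the representation computed by a network. Contrastive learning is at the core of several recent works on unsupervised learning, and it is what we use in Sec. 3.1.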

Contrastive losses [29] measure the similarities of sample pairs in a representation space. Instead of matching an input to a fixed target, in contrastive loss formulations the target can vary on-the-fly during training and can be defined in terms of the data representation computed by a network [29]. Contrastive learning is at the core of several recent works on unsupervised learning [61, 46, 36, 66, 35, 56, 2], which we elaborate on later in context (Sec. 3.1).

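Adversarial losses measure the difference between probability distributions. They are a widely successful technique for unsupervised data generation. Adversarial methods for representation learning have also been explored in the literature, and the relation between generative adversarial networks and noise-contrastive estimation (NCE) has been pointed out as well.

Adversarial losses [24] measure the difference between probability distributions. It is a widely successful technique for unsupervised data generation. Adversarial methods for representation learning are explored in [15, 16]. There are relations (see [24]) between generative adversarial networks and noise-contrastive estimation (NCE) [28].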

Pretext tasks. A wide range of pretext tasks have been proposed, for example recovering the input under some corruption, such as …

Other pretext tasks form pseudo-labels in some way, such as …

Pretext tasks. A wide range of pretext tasks have been proposed. Examples include recovering the input under some corruption, e.g., denoising auto-encoders [58], context autoencoders [48], or cross-channel auto-encoders (colorization) [64, 65]. Some pretext tasks form pseudo-labels by, e.g., transformations of a single (“exemplar”) image [17], patch orderings [13, 45], tracking [59] or segmenting objects [47] in videos, or clustering features [3, 4].

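Contrastive learning vs. pretext tasks. Various pretext tasks can be based on some form of contrastive loss function. For example:

The instance discrimination method is related to the exemplar-based task [17] and NCE. The pretext task in CPC is a form of context auto-encoding [48], and in contrastive multiview coding (CMC) [56] it is related to colorization [64].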

Contrastive learning vs. pretext tasks. Various pretext tasks can be based on some form of contrastive loss functions. The instance discrimination method [61] is related to the exemplar-based task [17] and NCE [28]. The pretext task in contrastive predictive coding (CPC) [46] is a form of context auto-encoding [48], and in contrastive multiview coding (CMC) [56] it is related to colorization [64].

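Method

3.1 Contrastive Learning as Dictionary Look-up

Contrastive learning and its recent developments can be seen as training an encoder for a dictionary look-up task, as described next.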

Contrastive learning [29], and its recent developments, can be thought of as training an encoder for a dictionary look-up task, as described next.

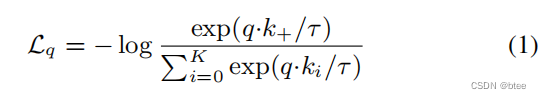

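Take an encoded query q and a set of encoded keys {k0, k1, k2, …} in the dictionary, and assume that exactly one key, k+, matches q. The contrastive loss is low when q is similar to the positive key k+ and dissimilar to all other (negative) keys. With similarity measured by dot product, this gives the InfoNCE loss of Eqn.(1) in the paper.

In that loss, τ is a temperature hyper-parameter and the sum runs over one positive and K negative samples. Intuitively, it is the log loss of a (K+1)-way softmax classifier that tries to classify q as k+. Contrastive losses can also take other forms, such as margin-based losses and variants of NCE losses.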

Consider an encoded query q and a set of encoded samples {k0, k1, k2, …} that are the keys of a dictionary. Assume that there is a single key (denoted as k+) in the dictionary that q matches. A contrastive loss [29] is a function whose value is low when q is similar to its positive key k+ and dissimilar to all other keys (considered negative keys for q). With similarity measured by dot product, a form of a contrastive loss function, called InfoNCE [46], is considered in this paper:

$$\mathcal{L}_q = -\log \frac{\exp(q \cdot k_+ / \tau)}{\sum_{i=0}^{K} \exp(q \cdot k_i / \tau)} \qquad (1)$$

where τ is a temperature hyper-parameter per [61]. The sum is over one positive and K negative samples. Intuitively, this loss is the log loss of a (K+1)-way softmax-based classifier that tries to classify q as k+. Contrastive loss functions can also be based on other forms [29, 59, 61, 36], such as margin-based losses and variants of NCE losses.
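To make the InfoNCE description concrete, here is a minimal sketch in plain Python. It treats the loss as cross-entropy over (1 + K) logits with the positive key at index 0. The helper names (`l2_normalize`, `info_nce`) and the toy 2-D vectors are made up for illustration; a real implementation would work on batches of tensors.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def info_nce(q, k_pos, k_negs, tau=0.07):
    """InfoNCE for one query: -log softmax over (1 + K) logits, positive at index 0."""
    logits = [dot(q, k_pos) / tau] + [dot(q, k) / tau for k in k_negs]
    top = max(logits)  # subtract the max for numerical stability
    log_sum_exp = top + math.log(sum(math.exp(l - top) for l in logits))
    return log_sum_exp - logits[0]

# Toy vectors (illustrative values only).
q = l2_normalize([1.0, 0.2])
k_pos = l2_normalize([0.9, 0.3])
k_negs = [l2_normalize([-1.0, 0.1]), l2_normalize([0.0, -1.0])]

loss_match = info_nce(q, k_pos, k_negs)                      # positive key is close to q
loss_mismatch = info_nce(q, k_negs[0], [k_pos, k_negs[1]])   # "positive" is far from q
print(loss_match, loss_mismatch)
```

The loss is small when the query is close to its positive key and far from the negatives, and large in the opposite case. If every key were identical, the loss would equal log(K + 1), the value for a uniform (K+1)-way guess.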

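The contrastive loss serves as the unsupervised objective for training the encoder networks that represent q and k. In general, the query representation is q = fq(xq), the output of the encoder fq, and likewise k = fk(xk). How they are instantiated depends on the specific pretext task. The inputs xq and xk can be images, patches, or a context made of a set of patches, and the networks that encode q and k can be identical, partially shared, or different.

The contrastive loss serves as an unsupervised objective function for training the encoder networks that represent the queries and keys [29]. In general, the query representation is q = fq(xq) where fq is an encoder network and xq is a query sample (likewise, k = fk(xk)). Their instantiations depend on the specific pretext task. The input xq and xk can be images [29, 61, 63], patches [46], or context consisting of a set of patches [46]. The networks fq and fk can be identical [29], partially shared [46, 36, 2], or different [56].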

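Momentum Contrast

From the perspective above, contrastive learning builds a discrete dictionary on high-dimensional continuous inputs such as images. The dictionary is dynamic: the keys are randomly sampled, and the key encoder keeps evolving during training. Our hypothesis is that good features are learned from a large dictionary that covers a rich set of negative samples, while the encoder for the keys stays as consistent as possible across training iterations. With this motivation we designed Momentum Contrast, discussed next.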

From the above perspective, contrastive learning is a way of building a discrete dictionary on high-dimensional continuous inputs such as images. The dictionary is dynamic in the sense that the keys are randomly sampled, and that the key encoder evolves during training. Our hypothesis is that good features can be learned by a large dictionary that covers a rich set of negative samples, while the encoder for the dictionary keys is kept as consistent as possible despite its evolution. Based on this motivation, we present Momentum Contrast as described next.

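Dictionary as a queue.

The core of the method is to maintain the dictionary as a queue of data samples, which lets us reuse the encoded keys from the immediately preceding mini-batches. The queue decouples the dictionary size from the batch size: the dictionary can be much larger than a batch and is set flexibly and independently as a hyper-parameter.

The samples in the dictionary are replaced progressively. The newest batch is enqueued and the oldest batch is dequeued. The queue always holds a sampled subset of all the data, so the extra cost of maintaining the dictionary is manageable. Removing the oldest batch also helps, because its keys were encoded by the most outdated encoder and are therefore the least consistent with the newest ones.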

Dictionary as a queue. At the core of our approach is maintaining the dictionary as a queue of data samples. This allows us to reuse the encoded keys from the immediate preceding mini-batches. The introduction of a queue decouples the dictionary size from the mini-batch size. Our dictionary size can be much larger than a typical mini-batch size, and can be flexibly and independently set as a hyper-parameter.

The samples in the dictionary are progressively replaced. The current mini-batch is enqueued to the dictionary, and the oldest mini-batch in the queue is removed. The dictionary always represents a sampled subset of all data, while the extra computation of maintaining this dictionary is manageable. Moreover, removing the oldest mini-batch can be beneficial, because its encoded keys are the most outdated and thus the least consistent with the newest ones.
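The queue behaviour described above can be sketched with Python's `collections.deque`. The class name `KeyQueue` and the string placeholders standing in for encoded keys are assumptions for illustration only; the point is just that the capacity is chosen independently of the batch size and that the oldest keys leave first.

```python
from collections import deque

class KeyQueue:
    """FIFO dictionary of encoded keys with a fixed capacity K."""

    def __init__(self, capacity):
        # A deque with maxlen discards items from the left (oldest) when full.
        self.keys = deque(maxlen=capacity)

    def enqueue(self, batch_keys):
        """Add the keys of the current mini-batch; the oldest keys fall out."""
        self.keys.extend(batch_keys)

    def negatives(self):
        """Return the current dictionary contents, oldest first."""
        return list(self.keys)

queue = KeyQueue(capacity=6)           # dictionary size, independent of batch size
for step in range(5):
    batch = [f"key_step{step}_{i}" for i in range(2)]  # batch size 2
    queue.enqueue(batch)

print(queue.negatives())
```

After five batches of two keys each, only the keys from the three most recent batches remain in the dictionary.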

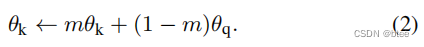

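Momentum update

A queue lets the dictionary be large (decoupled from the batch), but it also makes updating the key encoder by back-propagation intractable, because the gradient would have to propagate to every sample in the queue. A naive fix is to copy the key encoder's weights from the query encoder and ignore this gradient, but that gives poor results. We think it fails because the encoder changes too quickly, which makes the key representations in the queue inconsistent. We address this with a momentum update.

Only the parameters of the query encoder are updated by back-propagation. The momentum update makes the key encoder evolve much more slowly and smoothly than the query encoder. So although the keys in the queue are still encoded by different encoders, the differences among those encoders are small. In experiments a relatively large momentum (0.999) works much better than a smaller one (0.9), which suggests that a slowly evolving key encoder is central to making the queue work.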

Momentum update. Using a queue can make the dictionary large, but it also makes it intractable to update the key encoder by back-propagation (the gradient should propagate to all samples in the queue). A naive solution is to copy the key encoder fk from the query encoder fq, ignoring this gradient. But this solution yields poor results in experiments (Sec. 4.1). We hypothesize that such failure is caused by the rapidly changing encoder that reduces the key representations’ consistency. We propose a momentum update to address this issue.

Formally, denoting the parameters of fk as θk and those of fq as θq, we update θk by:

$$\theta_k \leftarrow m\,\theta_k + (1 - m)\,\theta_q \qquad (2)$$

Here m ∈ [0, 1) is a momentum coefficient. Only the parameters θq are updated by back-propagation. The momentum update in Eqn.(2) makes θk evolve more smoothly than θq. As a result, though the keys in the queue are encoded by different encoders (in different mini-batches), the difference among these encoders can be made small. In experiments, a relatively large momentum (e.g., m = 0.999, our default) works much better than a smaller value (e.g., m = 0.9), suggesting that a slowly evolving key encoder is a core to making use of a queue.
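A small sketch of the momentum update, θk ← m·θk + (1 − m)·θq, may help show why a large m keeps the key encoder stable. The parameters are plain lists of floats here, and θq is held fixed for simplicity (in training it would itself change every step); the function name `momentum_update` is an assumption.

```python
def momentum_update(theta_k, theta_q, m=0.999):
    """Return theta_k moved a fraction (1 - m) of the way toward theta_q."""
    return [m * pk + (1.0 - m) * pq for pk, pq in zip(theta_k, theta_q)]

theta_q = [1.0, -2.0]        # query encoder parameters (held fixed in this toy example)
theta_k_slow = [0.0, 0.0]    # key encoder updated with m = 0.999
theta_k_fast = [0.0, 0.0]    # key encoder updated with m = 0.9

for _ in range(100):
    theta_k_slow = momentum_update(theta_k_slow, theta_q, m=0.999)
    theta_k_fast = momentum_update(theta_k_fast, theta_q, m=0.9)

print(theta_k_slow, theta_k_fast)
```

With θq fixed, after n steps the key parameters equal (1 − mⁿ)·θq. So after 100 steps the m = 0.999 encoder has covered under 10% of the distance to θq, while the m = 0.9 encoder has essentially caught up. Keys encoded a few hundred steps apart therefore come from nearly the same encoder only in the first case.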

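Relations to previous mechanisms

MoCo is a general mechanism for using contrastive losses. We compare it with two existing general mechanisms in Figure 2. They differ mainly in dictionary size and consistency.

One natural mechanism is the end-to-end update by back-propagation (Figure 2a). It uses the samples in the current batch as the dictionary, so all keys are encoded by the same encoder. But the dictionary size is coupled to the batch size, which is limited by GPU memory, and training with very large batches is challenging in itself. Some recent methods rely on pretext tasks driven by local positions, where multiple positions make the dictionary larger. These pretext tasks, however, may need special network designs, such as patchifying the input or customizing the receptive field size, which complicates transfer to downstream tasks.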

Relations to previous mechanisms. MoCo is a general mechanism for using contrastive losses. We compare it with two existing general mechanisms in Figure 2. They exhibit different properties on the dictionary size and consistency.

The end-to-end update by back-propagation is a natural mechanism (e.g., [29, 46, 36, 63, 2, 35], Figure 2a). It uses samples in the current mini-batch as the dictionary, so the keys are consistently encoded (by the same set of encoder parameters). But the dictionary size is coupled with the mini-batch size, limited by the GPU memory size. It is also challenged by large mini-batch optimization [25]. Some recent methods [46, 36, 2] are based on pretext tasks driven by local positions, where the dictionary size can be made larger by multiple positions. But these pretext tasks may require special network designs such as patchifying the input [46] or customizing the receptive field size [2], which may complicate the transfer of these networks to downstream tasks.

The other mechanism is the memory bank. A memory bank holds the representations of all samples in the dataset. The dictionary for each batch is randomly sampled from the memory bank with no back-propagation, so a large dictionary is possible. However, the representation of a sample in the memory bank was updated when that sample was last seen, so the sampled keys come from encoders at many different steps over the past epoch and are less consistent. The memory bank method does use a momentum update, but it is applied to the representations of the same sample, not to the encoder. That momentum update is unrelated to ours, because MoCo does not keep track of every sample. MoCo is also more memory-efficient and can be trained on billion-scale data, which can be intractable for a memory bank.

Another mechanism is the memory bank approach proposed by [61] (Figure 2b). A memory bank consists of the representations of all samples in the dataset. The dictionary for each mini-batch is randomly sampled from the memory bank with no back-propagation, so it can support a large dictionary size. However, the representation of a sample in the memory bank was updated when it was last seen, so the sampled keys are essentially about the encoders at multiple different steps all over the past epoch and thus are less consistent. A momentum update is adopted on the memory bank in [61]. Its momentum update is on the representations of the same sample, not the encoder. This momentum update is irrelevant to our method, because MoCo does not keep track of every sample. Moreover, our method is more memory-efficient and can be trained on billion-scale data, which can be intractable for a memory bank.

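Pretext task

Contrastive learning can drive a variety of pretext tasks. Since the focus of the paper is not on designing a new pretext task, we use a simple one that mainly follows the instance discrimination task, to which some recent works are related.

Following [61], a query and a key form a positive pair if they come from the same image, and a negative pair otherwise. Following [63, 2], we take two random views of the same image under random data augmentation to form a positive pair. The queries and keys are encoded by their respective encoders, which can be any convolutional neural network. Algorithm 1 gives the pseudo-code of MoCo for this pretext task: for the current batch we encode the queries and their keys, which form the positive pairs, while the negative samples come from the queue.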

Contrastive learning can drive a variety of pretext tasks. As the focus of this paper is not on designing a new pretext task, we use a simple one mainly following the instance discrimination task in [61], to which some recent works [63, 2] are related.

Following [61], we consider a query and a key as a positive pair if they originate from the same image, and otherwise as a negative sample pair. Following [63, 2], we take two random “views” of the same image under random data augmentation to form a positive pair. The queries and keys are respectively encoded by their encoders, fq and fk. The encoder can be any convolutional neural network [39].

Algorithm 1 provides the pseudo-code of MoCo for this pretext task. For the current mini-batch, we encode the queries and their corresponding keys, which form the positive sample pairs. The negative samples are from the queue.
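Algorithm 1 itself is not reproduced in these notes, so the following is only a rough sketch of one iteration as the text describes it, not the paper's pseudo-code. Everything concrete here is an assumption made so the example runs with the standard library: the toy linear `encode`, the noise-based `augment`, and the tiny sizes. The gradient step on the query encoder is left out (marked in a comment), so only the forward pass, the momentum update, and the queue update are shown.

```python
import math
import random
from collections import deque

def encode(weights, x):
    """Toy linear encoder + L2 normalization (a stand-in for a ResNet)."""
    out = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    norm = math.sqrt(sum(o * o for o in out)) or 1.0
    return [o / norm for o in out]

def augment(x, rng):
    """Toy random 'view': small Gaussian noise instead of crop/jitter/flip."""
    return [xi + rng.gauss(0.0, 0.05) for xi in x]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def moco_step(f_q, f_k, batch, queue, rng, tau=0.07, m=0.999):
    """One forward pass of the loop; returns the mean loss and the updated f_k."""
    losses, new_keys = [], []
    for x in batch:
        q = encode(f_q, augment(x, rng))   # query view through f_q
        k = encode(f_k, augment(x, rng))   # key view through f_k (treated as a constant)
        logits = [dot(q, k) / tau] + [dot(q, neg) / tau for neg in queue]
        top = max(logits)
        log_sum_exp = top + math.log(sum(math.exp(l - top) for l in logits))
        losses.append(log_sum_exp - logits[0])  # cross-entropy with label 0
        new_keys.append(k)
    # An SGD step on f_q from the mean loss belongs here; it is omitted in this sketch.
    f_k = [[m * wk + (1.0 - m) * wq for wk, wq in zip(rk, rq)]
           for rk, rq in zip(f_k, f_q)]    # momentum update of the key encoder
    queue.extend(new_keys)                 # enqueue; deque(maxlen=K) drops the oldest
    return sum(losses) / len(losses), f_k

rng = random.Random(0)
f_q = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)]  # 4-D input, 3-D feature
f_k = [row[:] for row in f_q]                                     # f_k starts as a copy of f_q
queue = deque(maxlen=8)                                           # dictionary size K = 8
for step in range(3):
    batch = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(4)]
    loss, f_k = moco_step(f_q, f_k, batch, queue, rng)
    print(step, round(loss, 4), len(queue))
```

Note that the negatives for the current batch are taken from the queue before the new keys are enqueued, so a query is never compared against its own key as a negative.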

Training details

We use a ResNet-50 as the encoder, whose last fully-connected layer has a fixed-dimensional output. The output vector is L2-normalized; this is the representation of q or k. The temperature is set to 0.07. The data augmentation consists of a random 224×224 crop, random color jittering, random horizontal flip, and random grayscale conversion.

We adopt a ResNet [33] as the encoder, whose last fully-connected layer (after global average pooling) has a fixed-dimensional output (128-D [61]). This output vector is normalized by its L2-norm [61]. This is the representation of the query or key. The temperature τ in Eqn.(1) is set as 0.07 [61]. The data augmentation setting follows [61]: a 224×224-pixel crop is taken from a randomly resized image, and then undergoes random color jittering, random horizontal flip, and random grayscale conversion, all available in PyTorch’s torchvision package.

Shuffling BN.

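Both fq and fk use Batch Normalization (BN), as in the standard ResNet. In experiments, however, we found that using BN keeps the model from learning good representations. The model seems to cheat on the pretext task and easily finds a low-loss solution, probably because BN lets the samples within a batch communicate with each other and leak information.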

Our encoders fq and fk both have Batch Normalization (BN) [37] as in the standard ResNet [33]. In experiments, we found that using BN prevents the model from learning good representations, as similarly reported in [35] (which avoids using BN). The model appears to “cheat” the pretext task and easily finds a low-loss solution. This is possibly because the intra-batch communication among samples (caused by BN) leaks information.

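We fix this with shuffling BN. We train on multiple GPUs and perform BN independently on the samples of each GPU. For the key encoder fk, we shuffle the sample order of the current batch before distributing it among the GPUs (and shuffle back after encoding); the sample order for fq is left unchanged. This ensures that the batch statistics used to compute a query and its positive key come from two different subsets. It effectively stops the cheating and lets training benefit from BN.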

We resolve this problem by shuffling BN. We train with multiple GPUs and perform BN on the samples independently for each GPU (as done in common practice). For the key encoder fk, we shuffle the sample order in the current mini-batch before distributing it among GPUs (and shuffle back after encoding); the sample order of the mini-batch for the query encoder fq is not altered. This ensures the batch statistics used to compute a query and its positive key come from two different subsets. This effectively tackles the cheating issue and allows training to benefit from BN.

We use shuffled BN in both our method and its end-to-end ablation counterpart (Figure 2a). It is irrelevant to the memory bank counterpart (Figure 2b), which does not suffer from this issue because the positive keys are from different mini-batches in the past.
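The bookkeeping behind shuffling BN (shuffle, split across GPUs, encode, shuffle back) can be sketched without any GPU at all. The function `shuffled_key_pass` below is hypothetical and performs no real encoding or normalization; it only records which simulated GPU would have processed each key, and it assumes the batch size is divisible by the number of GPUs.

```python
import random

def shuffled_key_pass(batch, num_gpus, rng):
    """Shuffle the batch, split it across GPUs, then restore the original order."""
    n = len(batch)
    per_gpu = n // num_gpus                  # assumes n is divisible by num_gpus
    perm = list(range(n))
    rng.shuffle(perm)                        # random sample order for the key encoder
    shuffled = [batch[i] for i in perm]
    encoded = []
    for gpu in range(num_gpus):
        chunk = shuffled[gpu * per_gpu:(gpu + 1) * per_gpu]
        # f_k (with per-GPU BN statistics) would run on `chunk` here;
        # this sketch only records which GPU handled each sample.
        encoded.extend((sample, gpu) for sample in chunk)
    restored = [None] * n
    for pos, original_index in enumerate(perm):
        restored[original_index] = encoded[pos]  # shuffle back to the original order
    return restored

rng = random.Random(0)
batch = [f"img{i}" for i in range(8)]
keys = shuffled_key_pass(batch, num_gpus=4, rng=rng)
query_gpu = [i // 2 for i in range(8)]       # queries keep their order: 2 samples per GPU
for (sample, key_gpu), q_gpu in zip(keys, query_gpu):
    print(sample, "query on GPU", q_gpu, "key on GPU", key_gpu)
```

After the pass the keys line up with their queries again, but each key was generally normalized together with a different group of samples than its query, which is what removes the shared batch statistics.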
