文章目录

连载系列

https://blog.csdn.net/bryant_meng/article/details/79263542

3.5 Keras Demos(★★★★★)

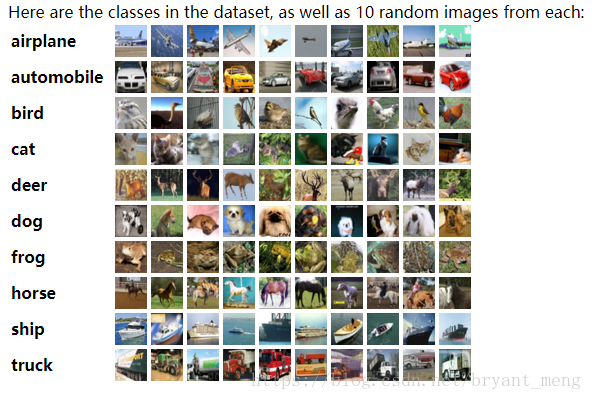

CIFAR-101.(Canadian Institute for Advanced Research)是由 Alex Krizhevsky、Vinod Nair 与 Geoffrey Hinton 收集的一个用于图像识别的数据集,60000个32*32的彩色图像,50000个training data,10000个 test data 有10类,飞机、汽车、鸟、猫、鹿、狗、青蛙、马、船、卡车,每类6000张图。与MNIST相比,色彩、颜色噪点较多,同一类物体大小不一、角度不同、颜色不同。

1 Data preprocessing

1.1 下载和读入数据集

import numpy

from keras.datasets import cifar10

import numpy as np

np.random.seed(10)

# 导入数据集,如果没有就会自动下载

(x_img_train,y_label_train),(x_img_test, y_label_test)=cifar10.load_data()

print('train:',len(x_img_train))

print('test :',len(x_img_test))

print('train_image :',x_img_train.shape)

print('train_label :',y_label_train.shape)

print('test_image :',x_img_test.shape)

print('test_label :',y_label_test.shape)

Output

Using TensorFlow backend.

Downloading data from https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

170500096/170498071 [==============================] - 3835s 22us/step

train: 50000

test : 10000

train_image : (50000, 32, 32, 3)

train_label : (50000, 1)

test_image : (10000, 32, 32, 3)

test_label : (10000, 1)

看看图片的像素

print(x_img_test[0])

Output

[[[158 112 49]

[159 111 47]

[165 116 51]

...

[137 95 36]

[126 91 36]

[116 85 33]]

...

[[ 54 107 160]

[ 56 105 149]

[ 45 89 132]

...

[ 24 77 124]

[ 34 84 129]

[ 21 67 110]]]

32个32*3,一行是一个RGB

1.2 可视化部分训练集

label_dict={0:"airplane",1:"automobile",2:"bird",3:"cat",4:"deer",

5:"dog",6:"frog",7:"horse",8:"ship",9:"truck"}

import matplotlib.pyplot as plt

def plot_images_labels_prediction(images,labels,prediction,idx,num=10):

fig = plt.gcf()

fig.set_size_inches(12, 14) # 控制图片大小

if num>25: num=25 #最多显示25张

for i in range(0, num):

ax=plt.subplot(5,5, 1+i)

ax.imshow(images[idx],cmap='binary')

title=str(i)+','+label_dict[labels[i][0]]# i-th张图片对应的类别

if len(prediction)>0:

title+='=>'+label_dict[prediction[i]]

ax.set_title(title,fontsize=10)

ax.set_xticks([]);

ax.set_yticks([])

idx+=1

plt.savefig('1.png')

plt.show()

Output

Note,0-9类

print(y_label_train[0])

print(y_label_train[0][0])

Output

[6]

6

1.3 Image normalize

print(x_img_train[0][0][0]) #(50000,32,32,3)

x_img_train_normalize = x_img_train.astype('float32') / 255.0

x_img_test_normalize = x_img_test.astype('float32') / 255.0

print(x_img_train_normalize[0][0][0])

Output

[59 62 63]

[0.23137255 0.24313726 0.24705882]

1.4 One-Hot Encoding

print(y_label_train.shape)

print(y_label_train[:5])

Output

(50000, 1)

[[6]

[9]

[9]

[4]

[1]]

One-Hot Encoding

from keras.utils import np_utils

y_label_train_OneHot = np_utils.to_categorical(y_label_train)

y_label_test_OneHot = np_utils.to_categorical(y_label_test)

print(y_label_train_OneHot.shape)

print(y_label_train_OneHot[:5])

Output

(50000, 10)

[[0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

[0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]

[0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]]

2 two convolutional layer network

2.1 Build Model

第一节的数据预处理流程合起来如下

from keras.datasets import cifar10

import numpy as np

np.random.seed(10)

# 载入数据集

(x_img_train,y_label_train),(x_img_test,y_label_test)=cifar10.load_data()

print("train data:",'images:',x_img_train.shape,

" labels:",y_label_train.shape)

print("test data:",'images:',x_img_test.shape ,

" labels:",y_label_test.shape)

# 归一化

x_img_train_normalize = x_img_train.astype('float32') / 255.0

x_img_test_normalize = x_img_test.astype('float32') / 255.0

# One-Hot Encoding

from keras.utils import np_utils

y_label_train_OneHot = np_utils.to_categorical(y_label_train)

y_label_test_OneHot = np_utils.to_categorical(y_label_test)

y_label_test_OneHot.shape

Output

Using TensorFlow backend.

train data: images: (50000, 32, 32, 3) labels: (50000, 1)

test data: images: (10000, 32, 32, 3) labels: (10000, 1)

(10000, 10)

建立模型 input - 卷积 - relu - drop out - 池化 - FC - drop out - output

from keras.models import Sequential

from keras.layers import Dense, Dropout, Activation, Flatten

from keras.layers import Conv2D, MaxPooling2D, ZeroPadding2D

# 搭面包架子

model = Sequential()

# 加面包:卷积层1 和 池化层1

model.add(Conv2D(filters=32,kernel_size=(3,3),

input_shape=(32, 32,3),

activation='relu',

padding='same'))

model.add(Dropout(rate=0.25))

model.add(MaxPooling2D(pool_size=(2, 2))) # 16* 16

# 加面包:卷积层2 和 池化层2

model.add(Conv2D(filters=64, kernel_size=(3, 3),

activation='relu', padding='same'))

model.add(Dropout(0.25))

model.add(MaxPooling2D(pool_size=(2, 2))) # 8 * 8

#Step3 建立神經網路(平坦層、隱藏層、輸出層)

model.add(Flatten()) # FC1,64个8*8转化为1维向量

model.add(Dropout(rate=0.25))

model.add(Dense(1024, activation='relu')) # FC2 1024

model.add(Dropout(rate=0.25))

model.add(Dense(10, activation='softmax')) # Output 10

print(model.summary())

Output

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_1 (Conv2D) (None, 32, 32, 32) 896

_________________________________________________________________

dropout_1 (Dropout) (None, 32, 32, 32) 0

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 16, 16, 32) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 16, 16, 64) 18496

_________________________________________________________________

dropout_2 (Dropout) (None, 16, 16, 64) 0

_________________________________________________________________

max_pooling2d_2 (MaxPooling2 (None, 8, 8, 64) 0

_________________________________________________________________

flatten_1 (Flatten) (None, 4096) 0

_________________________________________________________________

dropout_3 (Dropout) (None, 4096) 0

_________________________________________________________________

dense_1 (Dense) (None, 1024) 4195328

_________________________________________________________________

dropout_4 (Dropout) (None, 1024) 0

_________________________________________________________________

dense_2 (Dense) (None, 10) 10250

=================================================================

Total params: 4,224,970

Trainable params: 4,224,970

Non-trainable params: 0

_________________________________________________________________

None

参数计算

con1 滤波器大小 3*3,输入3通道,32个滤波器,输出32通道

3 × 3 × 3 × 32 + 32 = 896

con2 滤波器大小 3*3,输入32通道,64个滤波器,输出64通道

32 × 3 × 3 × 64 + 64 = 18496

FC1

输入8864,输出 1024

8864*1024 + 1024 = 4195328

FC2(output layer)

输入

1024,输出10

1024*10+10 = 10250

2.2 Training process

训练前设置下需要使用的GPU号

import os

os.environ["CUDA_DEVICE_ORDER"]="PCI_BUS_ID"

os.environ["CUDA_VISIBLE_DEVICES"]="0"

0 表示0号GPU

model.compile(loss='categorical_crossentropy',

optimizer='adam', metrics=['accuracy'])

train_history=model.fit(x_img_train_normalize, y_label_train_OneHot,

validation_split=0.2,

epochs=10, batch_size=128, verbose=1)

参数说明参考【Keras-MLP】MNIST 5.3 节

verbose:日志显示,0为不在标准输出流输出日志信息,1为输出进度条记录,2为每个epoch输出一行记录

Output

Train on 40000 samples, validate on 10000 samples

Epoch 1/10

40000/40000 [==============================] - 12s 290us/step - loss: 1.4855 - acc: 0.4641 - val_loss: 1.2901 - val_acc: 0.5711

Epoch 2/10

40000/40000 [==============================] - 5s 134us/step - loss: 1.1340 - acc: 0.5968 - val_loss: 1.1208 - val_acc: 0.6347

Epoch 3/10

40000/40000 [==============================] - 5s 127us/step - loss: 0.9778 - acc: 0.6561 - val_loss: 1.0021 - val_acc: 0.6681

Epoch 4/10

40000/40000 [==============================] - 5s 121us/step - loss: 0.8719 - acc: 0.6945 - val_loss: 0.9609 - val_acc: 0.6908

Epoch 5/10

40000/40000 [==============================] - 5s 133us/step - loss: 0.7807 - acc: 0.7255 - val_loss: 0.8714 - val_acc: 0.7039

Epoch 6/10

40000/40000 [==============================] - 5s 131us/step - loss: 0.6964 - acc: 0.7564 - val_loss: 0.8542 - val_acc: 0.7130

Epoch 7/10

40000/40000 [==============================] - 5s 127us/step - loss: 0.6222 - acc: 0.7831 - val_loss: 0.8217 - val_acc: 0.7238

Epoch 8/10

40000/40000 [==============================] - 5s 132us/step - loss: 0.5545 - acc: 0.8055 - val_loss: 0.8121 - val_acc: 0.7255

Epoch 9/10

40000/40000 [==============================] - 5s 136us/step - loss: 0.4914 - acc: 0.8285 - val_loss: 0.7473 - val_acc: 0.7467

Epoch 10/10

40000/40000 [==============================] - 5s 125us/step - loss: 0.4284 - acc: 0.8515 - val_loss: 0.7794 - val_acc: 0.7354

2.3 可视化训练过程

import matplotlib.pyplot as plt

def show_train_history(train_acc,test_acc):

plt.plot(train_history.history[train_acc])

plt.plot(train_history.history[test_acc])

plt.title('Train History')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.savefig("1.png")

plt.show()

Output

show_train_history('acc','val_acc')

show_train_history('loss','val_loss')

2.4 模型评估

print(model.metrics_names)

scores = model.evaluate(x_img_test_normalize,

y_label_test_OneHot, verbose=0)

print(scores)

print(scores[1])

Output

['loss', 'acc']

[0.7902285717010498, 0.7278]

0.7278

2.5 预测结果

前10个为例,predict_classes(预测类别,也即是softmax输出的最大值)

prediction=model.predict_classes(x_img_test_normalize)

prediction[:10]

Output

array([3, 8, 8, 0, 6, 6, 1, 6, 3, 1])

可视化部分预测结果

label_dict={0:"airplane",1:"automobile",2:"bird",3:"cat",4:"deer",

5:"dog",6:"frog",7:"horse",8:"ship",9:"truck"}

import matplotlib.pyplot as plt

def plot_images_labels_prediction(images,labels,prediction,

idx,num=10):

fig = plt.gcf()

fig.set_size_inches(12, 14)

if num>25: num=25

for i in range(0, num):

ax=plt.subplot(5,5, 1+i)

ax.imshow(images[idx],cmap='binary')

title=str(i)+','+label_dict[labels[i][0]]

if len(prediction)>0:

title+='=>'+label_dict[prediction[i]]

ax.set_title(title,fontsize=10)

ax.set_xticks([]);ax.set_yticks([])

idx+=1

plt.show()

调用

plot_images_labels_prediction(x_img_test,y_label_test,prediction,0,10)

Output

2.6 查看预测概率

model.predict,结果为每个样本的 softmax 输出的10个值,预测结果为10个值中最大值对应的那个类别

Predicted_Probability = model.predict(x_img_test_normalize)

label_dict={0:"airplane",1:"automobile",2:"bird",3:"cat",4:"deer",

5:"dog",6:"frog",7:"horse",8:"ship",9:"truck"}

def show_Predicted_Probability(y,prediction,x_img,Predicted_Probability,i):

print('label:',label_dict[y[i][0]],'predict:',label_dict[prediction[i]])

plt.figure(figsize=(2,2))

plt.imshow(np.reshape(x_img_test[i],(32, 32,3)))

plt.show()

for j in range(10):

print(label_dict[j]+' Probability:%1.9f'%(Predicted_Probability[i][j]))

调用

show_Predicted_Probability(y_label_test,prediction,x_img_test,Predicted_Probability,0)

Output

调用

show_Predicted_Probability(y_label_test,prediction,x_img_test,Predicted_Probability,3)

Output

2.7 显示混淆矩阵

print(y_label_test)

print(y_label_test.reshape(-1))# 转化为1维数组

Output

[[3]

[8]

[8]

...

[5]

[1]

[7]]

[3 8 8 ... 5 1 7]

混淆矩阵

import pandas as pd

print(label_dict)

pd.crosstab(y_label_test.reshape(-1),prediction,rownames=['label'],colnames=['predict'])

分析:

对角线

frog 预测最准确,856/1000,说明最不容易被混淆

cat 预测最不准,458/1000,说明最容易被混淆

非对角线

把 cat 预测成 dog 最多,190

把 dog 预测成 cat 第三多,108 ,猫狗很容易混淆

CIFAR-10大致分为两类

- 动物类:2,3,4,5,6,7

- 交通工具类:0,1,8,9

表中第3列,第11列可以看出

动物很少被错分为 car 和 track

3 six convolutional layer network

第二节建立的神经网络准确率才0.7278,不太好,我们希望能通过更多次的卷积运算提高准确率

3.1 data preprocessing

# 选GPU

import os

os.environ["CUDA_DEVICE_ORDER"]="PCI_BUS_ID"

os.environ["CUDA_VISIBLE_DEVICES"]="0"

# Simple CNN model for the CIFAR-10 Dataset

import numpy

from keras.datasets import cifar10

import numpy as np

np.random.seed(10)

#载入数据集

(X_img_train, y_label_train), (X_img_test, y_label_test) = cifar10.load_data()

print("train data:",'images:',X_img_train.shape," labels:",y_label_train.shape)

print("test data:",'images:',X_img_test.shape ," labels:",y_label_test.shape)

#标准化

X_img_train_normalize = X_img_train.astype('float32') / 255.0

X_img_test_normalize = X_img_test.astype('float32') / 255.0

# One-Hot Encoding

from keras.utils import np_utils

y_label_train_OneHot = np_utils.to_categorical(y_label_train)

y_label_test_OneHot = np_utils.to_categorical(y_label_test)

Output

Using TensorFlow backend.

train data: images: (50000, 32, 32, 3) labels: (50000, 1)

test data: images: (10000, 32, 32, 3) labels: (10000, 1)

y_label_test_OneHot.shape

Output

(10000, 10)

3.2 building model

from keras.models import Sequential

from keras.layers import Dense, Dropout, Activation, Flatten

from keras.layers import Conv2D, MaxPooling2D, ZeroPadding2D

#蛋糕架子

model = Sequential()

#卷積層1與池化層1,开始加蛋糕

model.add(Conv2D(filters=32,kernel_size=(3, 3),input_shape=(32, 32,3),

activation='relu', padding='same'))

model.add(Dropout(0.3))

model.add(Conv2D(filters=32, kernel_size=(3, 3),

activation='relu', padding='same'))

model.add(MaxPooling2D(pool_size=(2, 2)))

#卷積層2與池化層2

model.add(Conv2D(filters=64, kernel_size=(3, 3),

activation='relu', padding='same'))

model.add(Dropout(0.3))

model.add(Conv2D(filters=64, kernel_size=(3, 3),

activation='relu', padding='same'))

model.add(MaxPooling2D(pool_size=(2, 2)))

#卷積層3與池化層3

model.add(Conv2D(filters=128, kernel_size=(3, 3),

activation='relu', padding='same'))

model.add(Dropout(0.3))

model.add(Conv2D(filters=128, kernel_size=(3, 3),

activation='relu', padding='same'))

model.add(MaxPooling2D(pool_size=(2, 2)))

#建立神經網路(平坦層、隱藏層、輸出層)

model.add(Flatten())

model.add(Dropout(0.3))

model.add(Dense(2500, activation='relu'))

model.add(Dropout(0.3))

model.add(Dense(1500, activation='relu'))

model.add(Dropout(0.3))

model.add(Dense(10, activation='softmax'))

print(model.summary())

Output

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_1 (Conv2D) (None, 32, 32, 32) 896

_________________________________________________________________

dropout_1 (Dropout) (None, 32, 32, 32) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 32, 32, 32) 9248

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 16, 16, 32) 0

_________________________________________________________________

conv2d_3 (Conv2D) (None, 16, 16, 64) 18496

_________________________________________________________________

dropout_2 (Dropout) (None, 16, 16, 64) 0

_________________________________________________________________

conv2d_4 (Conv2D) (None, 16, 16, 64) 36928

_________________________________________________________________

max_pooling2d_2 (MaxPooling2 (None, 8, 8, 64) 0

_________________________________________________________________

conv2d_5 (Conv2D) (None, 8, 8, 128) 73856

_________________________________________________________________

dropout_3 (Dropout) (None, 8, 8, 128) 0

_________________________________________________________________

conv2d_6 (Conv2D) (None, 8, 8, 128) 147584

_________________________________________________________________

max_pooling2d_3 (MaxPooling2 (None, 4, 4, 128) 0

_________________________________________________________________

flatten_1 (Flatten) (None, 2048) 0

_________________________________________________________________

dropout_4 (Dropout) (None, 2048) 0

_________________________________________________________________

dense_1 (Dense) (None, 2500) 5122500

_________________________________________________________________

dropout_5 (Dropout) (None, 2500) 0

_________________________________________________________________

dense_2 (Dense) (None, 1500) 3751500

_________________________________________________________________

dropout_6 (Dropout) (None, 1500) 0

_________________________________________________________________

dense_3 (Dense) (None, 10) 15010

=================================================================

Total params: 9,176,018

Trainable params: 9,176,018

Non-trainable params: 0

_________________________________________________________________

None

输入

conv - drop out - conv - maxpooling(3次)

fc - drop out(2次)

输出

参数计算

con1 滤波器大小 3*3,输入3通道,32个滤波器,输出32通道

3 × 3 × 3 × 32 + 32 = 896

con2 滤波器大小 3*3,输入32通道,32个滤波器,输出32通道

32 × 3 × 3 × 32 + 32 = 9248

con3 滤波器大小 3*3,输入32通道,64个滤波器,输出64通道

32 × 3 × 3 × 64 + 64 = 18496

con4 滤波器大小 3*3,输入64通道,64个滤波器,输出64通道

64 × 3 × 3 × 64 + 64 = 36928

con5 滤波器大小 3*3,输入64通道,128个滤波器,输出128通道

64 × 3 × 3 × 128 + 128 = 73856

con6 滤波器大小 3*3,输入128通道,128个滤波器,输出128通道

128 × 3 × 3 × 128 + 128 = 147584

FC1

输入

4

∗

4

∗

128

=

2048

4*4*128 = 2048

4∗4∗128=2048,输出 2500

2048* 2048 + 2500 = 5122500

FC2

输入 2500,输出1500

2500*1500 + 1500 = 3751500

FC3(output layer)

输入1500,输出10

1500*10+10 = 15010

3.3 training process

model.compile(loss='categorical_crossentropy', optimizer='adam',

metrics=['accuracy'])

train_history=model.fit(X_img_train_normalize, y_label_train_OneHot,

validation_split=0.2,

epochs=50, batch_size=300, verbose=1)

参数说明参考【Keras-MLP】MNIST 5.3 节

或者 Keras中文文档

verbose:日志显示,0为不在标准输出流输出日志信息,1为输出进度条记录,2为每个epoch输出一行记录

epochs = 50,训练时间会比较长,我用一张TITAN xp训练,一个epochs要5-6s

Output(截取部分)

Train on 40000 samples, validate on 10000 samples

Epoch 1/50

40000/40000 [==============================] - 6s 140us/step - loss: 0.6132 - acc: 0.7841 - val_loss: 0.6855 - val_acc: 0.7628

Epoch 2/50

40000/40000 [==============================] - 5s 130us/step - loss: 0.5631 - acc: 0.8018 - val_loss: 0.6670 - val_acc: 0.7720

Epoch 3/50

40000/40000 [==============================] - 5s 126us/step - loss: 0.5222 - acc: 0.8134 - val_loss: 0.6741 - val_acc: 0.7752

Epoch 4/50

40000/40000 [==============================] - 5s 136us/step - loss: 0.4852 - acc: 0.8264 - val_loss: 0.6401 - val_acc: 0.7815

Epoch 5/50

40000/40000 [==============================] - 5s 130us/step - loss: 0.4547 - acc: 0.8364 - val_loss: 0.6573 - val_acc: 0.7816

………………

………………

………………

Epoch 45/50

40000/40000 [==============================] - 5s 131us/step - loss: 0.1225 - acc: 0.9586 - val_loss: 0.7927 - val_acc: 0.7978

Epoch 46/50

40000/40000 [==============================] - 5s 133us/step - loss: 0.1185 - acc: 0.9609 - val_loss: 0.7982 - val_acc: 0.8025

Epoch 47/50

40000/40000 [==============================] - 6s 140us/step - loss: 0.1280 - acc: 0.9565 - val_loss: 0.8781 - val_acc: 0.7910

Epoch 48/50

40000/40000 [==============================] - 5s 132us/step - loss: 0.1241 - acc: 0.9581 - val_loss: 0.7653 - val_acc: 0.8053

Epoch 49/50

40000/40000 [==============================] - 5s 134us/step - loss: 0.1256 - acc: 0.9562 - val_loss: 0.7894 - val_acc: 0.7962

Epoch 50/50

40000/40000 [==============================] - 5s 136us/step - loss: 0.1190 - acc: 0.9596 - val_loss: 0.8189 - val_acc: 0.8019

用第二节的show_train_history函数,查看下训练过程

import matplotlib.pyplot as plt

def show_train_history(train_acc,test_acc):

plt.plot(train_history.history[train_acc])

plt.plot(train_history.history[test_acc])

plt.title('Train History')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

调用

show_train_history('acc','val_acc')

Output

show_train_history('loss','val_loss')

哈哈。过拟合了,说明网络设计的相对复杂。自己去DIY吧

3.4 模型的评估

scores = model.evaluate(X_img_test_normalize,

y_label_test_OneHot,verbose=0)

scores[1]

结果

0.7882

4 模型的保存

第三节中,程序训练需要花费很长时间,跑更复杂的模型和更大的图片数据集时候,往往需要十几个小时或者数天的时间,有时候可能因为某些原因导致计算机宕机,这样之前的训练就前功尽弃了,解决的方法是,每次程序执行完训练后,将模型保存一下。下次程序训练之前,先加载模型权重,再继续训练。

4.1 h5

build model以后,载入与训练模型,第一次没有保存与训练模型肯定报错

try:

model.load_weights("SaveModel/cifarCnnModel.h5")

print("载入模型成果!继续训练模型")

except :

print("载入模型失败!开始训练一个新模型")

Output

载入模型失败!开始训练一个新模型

开始训练

model.compile(loss='categorical_crossentropy',

optimizer='adam', metrics=['accuracy'])

train_history=model.fit(x_img_train_normalize, y_label_train_OneHot,

validation_split=0.2,

epochs=5, batch_size=128, verbose=1)

保存模型,在当前目录下,新建一个文件夹,然后把模型保存进去

import os

os.mkdir("SaveModel")

model.save_weights("SaveModel/cifarCnnModel.h5")

print("Saved model to disk")

Output

Saved model to disk

这样就把训练模型保存为 h5 格式了,当然也可以保存为其它格式,例如 json 和 yaml 格式

下次训练的时候,就可以先载入模型的权重了

try:

model.load_weights("SaveModel/cifarCnnModel.h5")

print("载入模型成果!继续训练模型")

except :

print("载入模型失败!开始训练一个新模型")

Output

载入模型成果!继续训练模型

记得训练完了要保存哟

model.save_weights("SaveModel/cifarCnnModel.h5")

print("Saved model to disk")

4.2 json

model_json = model.to_json()

with open("SaveModel/cifarCnnModelnew.json", "w") as json_file:

json_file.write(model_json)

4.3 yaml

model_yaml = model.to_yaml()

with open("SaveModel/cifarCnnModelnew.yaml", "w") as yaml_file:

yaml_file.write(model_yaml)

672

672

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?