    import tensorflow as tf
    from tensorflow.python.framework import graph_util

    filedir = "./models/model10.ckpt"
    output_graph = "./models/frozen_model.pb"
    saver = tf.train.import_meta_graph("./models/model10.ckpt.meta", clear_devices=True)

    graph = tf.get_default_graph()
    input_graph_def = graph.as_graph_def()

    with tf.Session() as sess:
        # Restore the trained weights into the imported graph
        saver.restore(sess, filedir)
        for x in tf.global_variables():
            print(x)
        # Convert the variables needed by the listed output nodes into constants
        output_graph_def = graph_util.convert_variables_to_constants(
            sess,
            input_graph_def,
            ["M", "rnn/lstm_cell/w_f_diag"])
        test = tf.get_default_graph().get_tensor_by_name("rnn/lstm_cell/biases/read:0").eval()
        print(test)

        # Serialize the frozen graph to disk
        with tf.gfile.GFile(output_graph, "wb") as f:
            f.write(output_graph_def.SerializeToString())

1. Train the model

    import tensorflow as tf

    sess = tf.Session()
    matrix_1 = tf.constant([3., 3.], name='input')
    add = tf.add(matrix_1, matrix_1, name='output')
    sess.run(add)

    # Freeze the graph, keeping only what the 'output' node needs
    output_graph_def = tf.graph_util.convert_variables_to_constants(sess, sess.graph_def, output_node_names=['output'])

    # Save the model into the "model" folder under the current directory
    with tf.gfile.FastGFile('./model/tensorflow_matrix_graph.pb', mode='wb') as f:
        f.write(output_graph_def.SerializeToString())

    sess.close()

    # coding=utf8
    import tensorflow as tf
    from tensorflow.python.framework import graph_util

    # 1. Saving a .pb file.
    v1 = tf.Variable(tf.constant(1.0, shape=[1]), name="v1")
    v2 = tf.Variable(tf.constant(2.0, shape=[1]), name="v2")
    result = v1 + v2

    init_op = tf.global_variables_initializer()
    with tf.Session() as sess:
        sess.run(init_op)
        graph_def = tf.get_default_graph().as_graph_def()
        output_graph_def = graph_util.convert_variables_to_constants(sess, graph_def, ['add'])
        with tf.gfile.GFile("Saved_model/combined_model.pb", "wb") as f:
            f.write(output_graph_def.SerializeToString())

    # 2. Loading the .pb file.
    from tensorflow.python.platform import gfile

    with tf.Session() as sess:
        model_filename = "Saved_model/combined_model.pb"

        with gfile.FastGFile(model_filename, 'rb') as f:
            graph_def = tf.GraphDef()
            graph_def.ParseFromString(f.read())

        result = tf.import_graph_def(graph_def, return_elements=["add:0"])
        print(sess.run(result))

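The one thing to watch out for is that the model must be saved as a .pb file:

- Do not save the model with tf.train.write_graph() alone: it saves only the structure of the model, not the trained parameter values.

- Do not save the model with tf.train.Saver() alone: it writes the parameter values to checkpoint files, separate from the graph structure, so no single file holds both.

We need one file that holds both the structure of the model and the value of every parameter, so neither approach above is enough. Instead, save the model as follows:

    # Convert the variables in the session to constants; output_node_names selects the outputs
    graph_util.convert_variables_to_constants

    # Specify the output path and the file mode
    tf.gfile.FastGFile('model/test.pb', mode='wb')

    # Write the frozen model to the file
    f.write(output_graph_def.SerializeToString())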

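Machine learning has two main phases: training and prediction. This tutorial freezes a trained model and saves it. Freezing means combining the model's graph structure and its weights into a single unit, so a frozen model is ready to use as soon as it is loaded.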
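To make the idea of freezing concrete, here is a toy sketch in plain Python. It does not use TensorFlow: the dict-based graph, the `freeze` and `evaluate` helpers, and the node names are all hypothetical, chosen only to show variables being replaced by constants that carry their trained values.

```python
# Toy illustration only: plain dicts stand in for graph nodes.
# None of these names come from TensorFlow.

def freeze(nodes, variable_values):
    """Return a copy of `nodes` with every Variable replaced by a Const."""
    frozen = {}
    for name, node in nodes.items():
        if node["op"] == "Variable":
            # Bake the trained value into the graph itself.
            frozen[name] = {"op": "Const", "inputs": [], "value": variable_values[name]}
        else:
            frozen[name] = dict(node)
    return frozen


def evaluate(nodes, name):
    """Evaluate a node of a frozen toy graph (supports Const and Add only)."""
    node = nodes[name]
    if node["op"] == "Const":
        return node["value"]
    if node["op"] == "Add":
        return sum(evaluate(nodes, i) for i in node["inputs"])
    raise ValueError("cannot evaluate op %r without extra state" % node["op"])


graph = {
    "v1": {"op": "Variable", "inputs": []},
    "v2": {"op": "Variable", "inputs": []},
    "add": {"op": "Add", "inputs": ["v1", "v2"]},
}
trained = {"v1": 1.0, "v2": 2.0}  # values that would otherwise live in a checkpoint

frozen = freeze(graph, trained)
print(evaluate(frozen, "add"))
```

Once frozen, the toy graph can be evaluated without any separate store of variable values, which is the property the tutorial relies on.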

The simple demo below walks through the process in detail.

I. First, run the script tiny_model.py

    import tensorflow as tf
    import numpy as np

    # Placeholders for the inputs and the labels
    with tf.variable_scope('Placeholder'):
        inputs_placeholder = tf.placeholder(tf.float32, name='inputs_placeholder', shape=[None, 10])
        labels_placeholder = tf.placeholder(tf.float32, name='labels_placeholder', shape=[None, 1])

    # Two single-layer branches whose outputs are averaged
    with tf.variable_scope('NN'):
        W1 = tf.get_variable('W1', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b1 = tf.get_variable('b1', shape=[1], initializer=tf.constant_initializer(0.1))
        W2 = tf.get_variable('W2', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b2 = tf.get_variable('b2', shape=[1], initializer=tf.constant_initializer(0.1))

        a = tf.nn.relu(tf.matmul(inputs_placeholder, W1) + b1)
        a2 = tf.nn.relu(tf.matmul(inputs_placeholder, W2) + b2)

        y = tf.div(tf.add(a, a2), 2)

    with tf.variable_scope('Loss'):
        loss = tf.reduce_sum(tf.square(y - labels_placeholder) / 2)

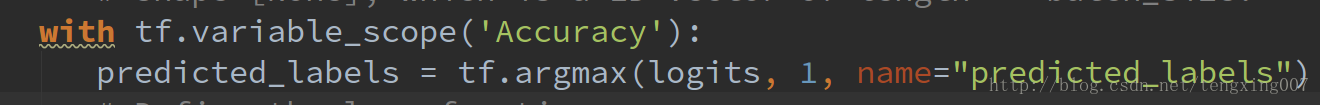

    with tf.variable_scope('Accuracy'):
        predictions = tf.greater(y, 0.5, name="predictions")
        correct_predictions = tf.equal(predictions, tf.cast(labels_placeholder, tf.bool), name="correct_predictions")
        accuracy = tf.reduce_mean(tf.cast(correct_predictions, tf.float32))

    adam = tf.train.AdamOptimizer(learning_rate=1e-3)
    train_op = adam.minimize(loss)

    # Training data: the label is 1 when the ten digits sum to more than 45
    inputs = np.random.choice(10, size=[10000, 10])
    labels = (np.sum(inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('inputs.shape:', inputs.shape)
    print('labels.shape:', labels.shape)

    # Test data, generated the same way
    test_inputs = np.random.choice(10, size=[100, 10])
    test_labels = (np.sum(test_inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('test_inputs.shape:', test_inputs.shape)
    print('test_labels.shape:', test_labels.shape)

    batch_size = 32
    epochs = 10

    batches = []
    print("%d items in batches of %d gives us %d full batches and %d batches of %d items" % (
        len(inputs),
        batch_size,
        len(inputs) // batch_size,
        1 if len(inputs) % batch_size else 0,
        len(inputs) % batch_size)
    )
    for i in range(len(inputs) // batch_size):
        batch = [ inputs[batch_size*i:batch_size*i+batch_size], labels[batch_size*i:batch_size*i+batch_size] ]
        batches.append(list(batch))
    # Add the leftover items as a final, smaller batch
    if (i + 1) * batch_size < len(inputs):
        batch = [ inputs[batch_size*(i + 1):], labels[batch_size*(i + 1):] ]
        batches.append(list(batch))
    print("Number of batches: %d" % len(batches))
    print("Size of full batch: %d" % len(batches[0][0]))
    print("Size of final batch: %d" % len(batches[-1][0]))

    global_count = 0

    with tf.Session() as sess:
        # tf.initialize_all_variables() is the deprecated name of tf.global_variables_initializer()
        sess.run(tf.initialize_all_variables())
        for i in range(epochs):
            for batch in batches:
                train_loss, _ = sess.run([loss, train_op], feed_dict={
                    inputs_placeholder: batch[0],
                    labels_placeholder: batch[1]
                })

                # Report test accuracy every 100 steps
                if global_count % 100 == 0:
                    acc = sess.run(accuracy, feed_dict={
                        inputs_placeholder: test_inputs,
                        labels_placeholder: test_labels
                    })
                    print('accuracy: %f' % acc)
                global_count += 1

        acc = sess.run(accuracy, feed_dict={
            inputs_placeholder: test_inputs,
            labels_placeholder: test_labels
        })
        print("final accuracy: %f" % acc)

        # Save the checkpoint while the session is still open
        saver = tf.train.Saver()
        last_chkp = saver.save(sess, 'results/graph.chkp')

    # List the names of all operations in the graph
    for op in tf.get_default_graph().get_operations():
        print(op.name)

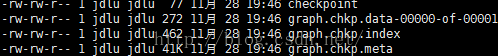

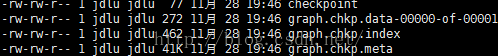
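The batching arithmetic in tiny_model.py can be checked in isolation. The sketch below is a hypothetical helper, `split_into_batches`, which is not part of the original script; it computes the slice boundaries for the same sizes the script uses (10000 items, batches of 32).

```python
def split_into_batches(n_items, batch_size):
    """Return (start, end) index pairs that cover range(n_items) in order."""
    bounds = []
    for start in range(0, n_items, batch_size):
        # The last batch is clipped to the number of items that remain.
        bounds.append((start, min(start + batch_size, n_items)))
    return bounds


bounds = split_into_batches(10000, 32)
full = [b for b in bounds if b[1] - b[0] == 32]
print(len(bounds), len(full), bounds[-1])
```

With these sizes there are 312 full batches plus one final batch of 16 items, 313 batches in total.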

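Note: saver.save must be called inside the session, because the graph is only live there and only there can the parameter values be written out. Calling save produces the following files:

Notes:

.data: holds the weight parameters

.meta: holds the graph and the metadata (the metadata is other configuration data)

To freeze the model so that others can use it, we only need the graph and the parameters; the metadata is not needed.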
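As a rough guide to what to look for on disk, the sketch below lists the file names a checkpoint prefix is expected to produce. It assumes the default single-shard V2 checkpoint format of TensorFlow 1.x, which also writes an .index file alongside .data and .meta; the helper name `expected_checkpoint_files` is hypothetical and the function only builds strings, it does not touch the file system.

```python
def expected_checkpoint_files(prefix, num_shards=1):
    """File names expected for a checkpoint prefix (assumed V2 format)."""
    files = [prefix + ".meta", prefix + ".index"]
    for shard in range(num_shards):
        # Data shards are numbered with five digits: data-00000-of-00001, ...
        files.append("%s.data-%05d-of-%05d" % (prefix, shard, num_shards))
    return files


for name in expected_checkpoint_files("results/graph.chkp"):
    print(name)
```

If the files in your results folder are named differently, your TensorFlow version or Saver settings probably differ from the assumption made here.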

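II. Putting these files together, the steps to produce a usable model are:

1. Restore the saved graph

2. Open a Session and load the weights the graph requires

3. Remove the metadata that is irrelevant to prediction

4. Serialize the processed model and save it

Run freeze.py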

    import os, argparse
    import tensorflow as tf
    from tensorflow.python.framework import graph_util

    dir = os.path.dirname(os.path.realpath(__file__))

    def freeze_graph(model_folder):
        # Retrieve the full path of the latest checkpoint
        checkpoint = tf.train.get_checkpoint_state(model_folder)
        input_checkpoint = checkpoint.model_checkpoint_path

        # Build the full path of the frozen graph file
        absolute_model_folder = "/".join(input_checkpoint.split('/')[:-1])
        output_graph = absolute_model_folder + "/frozen_model.pb"

        # Output nodes to keep, as a comma-separated string
        output_node_names = "Accuracy/predictions"

        # Clear device placements so the graph can be loaded on any machine
        clear_devices = True

        # Import the meta graph into the default graph
        saver = tf.train.import_meta_graph(input_checkpoint + '.meta', clear_devices=clear_devices)

        graph = tf.get_default_graph()
        input_graph_def = graph.as_graph_def()

        # Start a session and restore the weights
        with tf.Session() as sess:
            saver.restore(sess, input_checkpoint)

            # Convert variables to constants, keeping only the subgraph the outputs need
            output_graph_def = graph_util.convert_variables_to_constants(
                sess,
                input_graph_def,
                output_node_names.split(",")
            )

            # Serialize the frozen graph and write it to disk
            with tf.gfile.GFile(output_graph, "wb") as f:
                f.write(output_graph_def.SerializeToString())
            print("%d ops in the final graph." % len(output_graph_def.node))

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument("--model_folder", type=str, help="Model folder to export")
        args = parser.parse_args()

        freeze_graph(args.model_folder)
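freeze.py builds the output path by splitting the checkpoint path on '/'. One possible alternative, sketched below with a hypothetical helper `frozen_graph_path`, is to use os.path so that the platform's separator is respected. Note one behavioral difference: for a checkpoint path with no directory part, the original expression yields "/frozen_model.pb" (the file system root), while this sketch yields a path in the current directory.

```python
import os


def frozen_graph_path(input_checkpoint, filename="frozen_model.pb"):
    """Place the frozen graph next to the checkpoint it was built from."""
    return os.path.join(os.path.dirname(input_checkpoint), filename)


print(frozen_graph_path(os.path.join("results", "graph.chkp")))
```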

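Notes:

A freeze operation needs the names of the output nodes. A network can be fairly complex; given the output node names, freezing keeps only the subgraph required to compute those nodes and discards everything unrelated. Since the purpose of freezing a model is to run predictions afterwards, output_node_names is usually the prediction target.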
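The pruning described in the note above can be pictured as a walk backwards from the output nodes. The sketch below is a plain-Python illustration, not TensorFlow's implementation: the `required_nodes` helper and the toy graph (a dict from node name to input names) are hypothetical.

```python
def required_nodes(graph, output_node_names):
    """Return the set of nodes needed to compute the given outputs.

    `graph` maps each node name to the list of its input node names.
    """
    keep = set()
    stack = list(output_node_names)
    while stack:
        name = stack.pop()
        if name in keep:
            continue
        keep.add(name)
        # Everything a kept node reads from must be kept as well.
        stack.extend(graph[name])
    return keep


toy_graph = {
    "inputs": [],
    "labels": [],
    "W1": [],
    "y": ["inputs", "W1"],
    "predictions": ["y"],
    "loss": ["y", "labels"],
    "train_op": ["loss"],
}
kept = required_nodes(toy_graph, ["predictions"])
print(sorted(kept))
```

In this toy graph, asking for "predictions" drops "labels", "loss", and "train_op", which mirrors why a frozen prediction graph does not carry the training ops.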

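III. Load the frozen model. It already contains both the graph and the corresponding parameters, so there is no need to load the parameters separately; the model is ready to use as soon as it is loaded.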

    import argparse
    import tensorflow as tf

    def load_graph(frozen_graph_filename):
        # Read the protobuf file and parse it into a GraphDef
        with tf.gfile.GFile(frozen_graph_filename, "rb") as f:
            graph_def = tf.GraphDef()
            graph_def.ParseFromString(f.read())

        # Import the GraphDef into a new Graph; op names get the "prefix/" prefix
        with tf.Graph().as_default() as graph:
            tf.import_graph_def(
                graph_def,
                input_map=None,
                return_elements=None,
                name="prefix",
                op_dict=None,
                producer_op_list=None
            )
        return graph

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument("--frozen_model_filename", default="results/frozen_model.pb", type=str, help="Frozen model file to import")
        args = parser.parse_args()

        graph = load_graph(args.frozen_model_filename)

        # List the operations in the loaded graph
        for op in graph.get_operations():
            print(op.name, op.values())

        # Look up the input and output tensors by name
        x = graph.get_tensor_by_name('prefix/Placeholder/inputs_placeholder:0')
        y = graph.get_tensor_by_name('prefix/Accuracy/predictions:0')

        with tf.Session(graph=graph) as sess:
            y_out = sess.run(y, feed_dict={
                x: [[3, 5, 7, 4, 5, 1, 1, 1, 1, 1]]
            })
            print(y_out)
        print("finish")

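Notes:

1. At prediction time, once the frozen model is loaded, we only need to identify the input tensor and the target tensor.

2. Note the difference between tensor names and op names here:

prefix/Placeholder/inputs_placeholder is only the name of the operation; prefix/Placeholder/inputs_placeholder:0 is the name of the tensor.

x = graph.get_tensor_by_name('prefix/Placeholder/inputs_placeholder:0') must be given the tensor name.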
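The naming rule above (a tensor name is an op name plus ":" and an output index) can be captured in two small helpers. This is a plain-Python sketch; `split_tensor_name` and `tensor_name` are hypothetical names, not TensorFlow functions.

```python
def split_tensor_name(name):
    """Split 'op_name:index' into (op_name, index); reject bare op names."""
    op_name, sep, index = name.rpartition(":")
    if not sep or not index.isdigit():
        raise ValueError("%r is an op name, not a tensor name" % name)
    return op_name, int(index)


def tensor_name(op_name, output_index=0):
    """Build the name of the given output of an op."""
    return "%s:%d" % (op_name, output_index)


print(split_tensor_name("prefix/Placeholder/inputs_placeholder:0"))
print(tensor_name("prefix/Accuracy/predictions"))
```

A check like this can catch the common mistake of passing an op name where a tensor name is expected before the graph lookup is attempted.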

3. To list the names of the ops in the graph and their corresponding tensors, use the following code:

    for op in graph.get_operations():
        print(op.name, op.values())

=============================================================================================================================

The example above saves the model with Saver(); you can also save it with sv = tf.train.Supervisor():

    import tensorflow as tf
    import numpy as np

    # Placeholders for the inputs and the labels
    with tf.variable_scope('Placeholder'):
        inputs_placeholder = tf.placeholder(tf.float32, name='inputs_placeholder', shape=[None, 10])
        labels_placeholder = tf.placeholder(tf.float32, name='labels_placeholder', shape=[None, 1])

    with tf.variable_scope('NN'):
        W1 = tf.get_variable('W1', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b1 = tf.get_variable('b1', shape=[1], initializer=tf.constant_initializer(0.1))
        W2 = tf.get_variable('W2', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b2 = tf.get_variable('b2', shape=[1], initializer=tf.constant_initializer(0.1))

        a = tf.nn.relu(tf.matmul(inputs_placeholder, W1) + b1)
        a2 = tf.nn.relu(tf.matmul(inputs_placeholder, W2) + b2)

        y = tf.div(tf.add(a, a2), 2)

    with tf.variable_scope('Loss'):
        loss = tf.reduce_sum(tf.square(y - labels_placeholder) / 2)

    with tf.variable_scope('Accuracy'):
        predictions = tf.greater(y, 0.5, name="predictions")
        correct_predictions = tf.equal(predictions, tf.cast(labels_placeholder, tf.bool), name="correct_predictions")
        accuracy = tf.reduce_mean(tf.cast(correct_predictions, tf.float32))

    adam = tf.train.AdamOptimizer(learning_rate=1e-3)
    train_op = adam.minimize(loss)

    # Training data: the label is 1 when the ten digits sum to more than 45
    inputs = np.random.choice(10, size=[10000, 10])
    labels = (np.sum(inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('inputs.shape:', inputs.shape)
    print('labels.shape:', labels.shape)

    # Test data, generated the same way
    test_inputs = np.random.choice(10, size=[100, 10])
    test_labels = (np.sum(test_inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('test_inputs.shape:', test_inputs.shape)
    print('test_labels.shape:', test_labels.shape)

    batch_size = 32
    epochs = 10

    batches = []
    print("%d items in batches of %d gives us %d full batches and %d batches of %d items" % (
        len(inputs),
        batch_size,
        len(inputs) // batch_size,
        1 if len(inputs) % batch_size else 0,
        len(inputs) % batch_size)
    )
    for i in range(len(inputs) // batch_size):
        batch = [ inputs[batch_size*i:batch_size*i+batch_size], labels[batch_size*i:batch_size*i+batch_size] ]
        batches.append(list(batch))
    # Add the leftover items as a final, smaller batch
    if (i + 1) * batch_size < len(inputs):
        batch = [ inputs[batch_size*(i + 1):], labels[batch_size*(i + 1):] ]
        batches.append(list(batch))
    print("Number of batches: %d" % len(batches))
    print("Size of full batch: %d" % len(batches[0][0]))
    print("Size of final batch: %d" % len(batches[-1][0]))

    global_count = 0

    # The Supervisor initializes the variables itself
    sv = tf.train.Supervisor()
    with sv.managed_session() as sess:
        for i in range(epochs):
            for batch in batches:
                train_loss, _ = sess.run([loss, train_op], feed_dict={
                    inputs_placeholder: batch[0],
                    labels_placeholder: batch[1]
                })

                # Report test accuracy every 100 steps
                if global_count % 100 == 0:
                    acc = sess.run(accuracy, feed_dict={
                        inputs_placeholder: test_inputs,
                        labels_placeholder: test_labels
                    })
                    print('accuracy: %f' % acc)
                global_count += 1

        acc = sess.run(accuracy, feed_dict={
            inputs_placeholder: test_inputs,
            labels_placeholder: test_labels
        })
        print("final accuracy: %f" % acc)

        # Save the checkpoint through the Supervisor's own saver
        sv.saver.save(sess, 'results/graph.chkp')

    for op in tf.get_default_graph().get_operations():
        print(op.name)

Note: with sv = tf.train.Supervisor(), explicit initialization is no longer needed. Comment out sess.run(tf.initialize_all_variables()); otherwise an error is raised.

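In TensorFlow, the graph is at the core of training. Once a model has been trained it needs to be saved, and a common way to do so is:

    variables = tf.all_variables()
    saver = tf.train.Saver(variables)
    saver.save(sess, "data/data.ckpt")
    tf.train.write_graph(sess.graph_def, 'graph', 'model.ph', False)

This saves the model in the file model.ph. To use it, however, you must load both the model file model.ph and the saved data file data.ckpt. This keeps the data separate from the model, which is a reasonable approach.

When a fully trained model goes into production, the scenario we expect is: feed in an image and get a result. Loading two files this way is then unnecessary overhead, and it is especially troublesome when it is unclear which variables are involved. A better approach in that case is to freeze the variables (biases, weights, and so on) into the model data.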

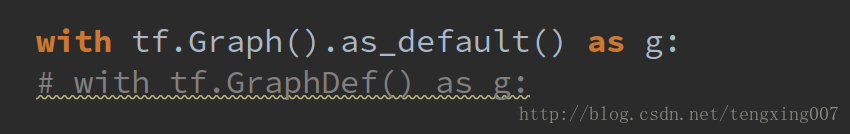

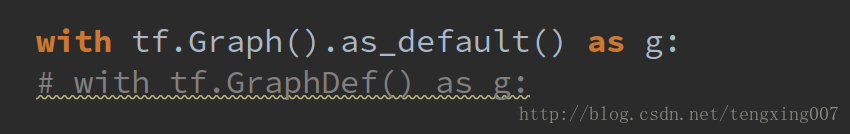

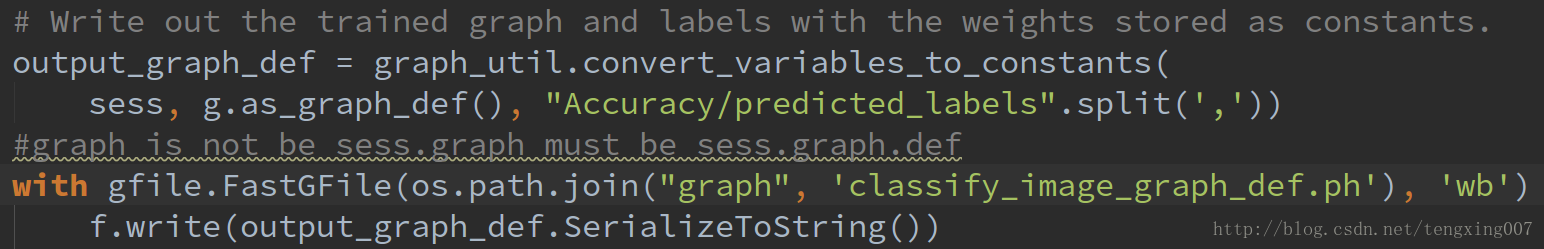

Create the graph

Add the following code at the top of the file

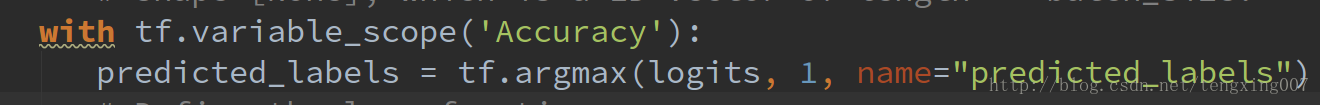

Declare the tensors

Add names to the operations you need

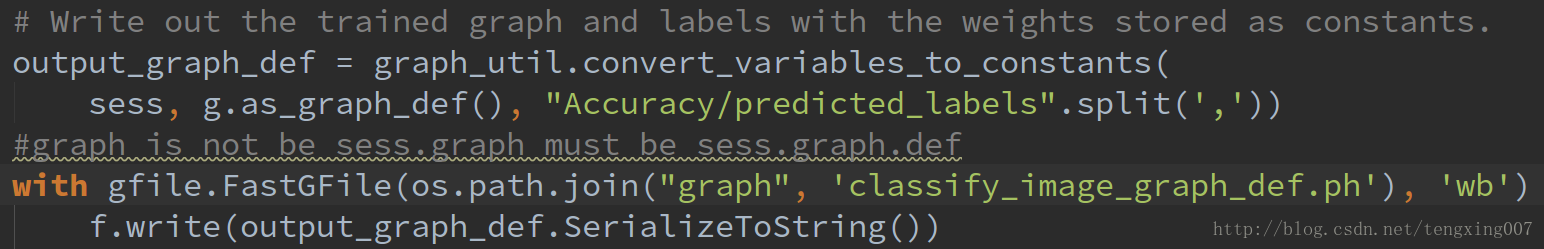

Freeze and save

The most important function in the freeze step is:

tf.graph_util.convert_variables_to_constants(sess, input_graph_def, output_node_names, variable_names_whitelist=None, variable_names_blacklist=None)

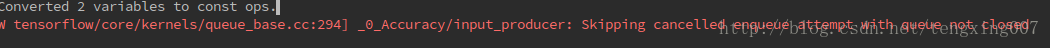

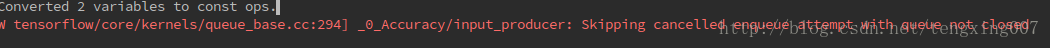

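After the code runs, the console prints:

This way, there is no need to restore the data from data.ckpt when using the model. Calling

sess.graph.get_tensor_by_name()

returns a tensor directly, which is very convenient.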

Minor errors:
