Segmentation Fault When Reproducing EDVR on Vid4, and a Baidu Pan Download for the Vimeo-90K Dataset

Introduction to EDVR

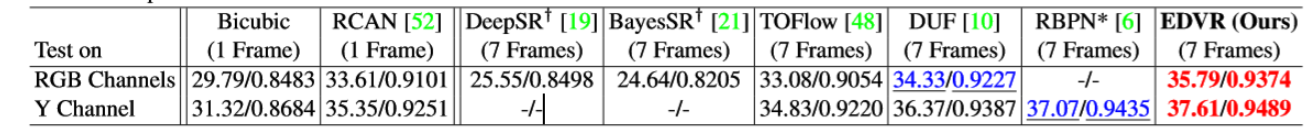

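EDVR stands for "Video Restoration with Enhanced Deformable Convolutional Networks". It won the NTIRE 2019 challenge held as a CVPR 2019 Workshop. On the Vid4 video super-resolution dataset, its results are second only to this year's iSeeBetter, and only by a small margin.

EDVR proposes a PCD alignment module and a temporal and spatial attention fusion module, TSA.

See the paper for a detailed description.

This post uses the officially provided code, model, and Vid4 data.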

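Segmentation Fault

The first build and run ended in a Segmentation Fault. Tracing it showed that the Deformable Convolution module was the cause; see the issue for details. This post was using gcc 4.8, and the fix is to upgrade to gcc 5.3.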
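Before rebuilding, it can help to confirm which gcc the build will actually pick up. The following is a minimal sketch, assuming `gcc` is on the PATH and prints an `x.y.z` version number in its `--version` output. The helper names and the `MIN_GCC` threshold are hypothetical; the threshold comes from this post's experience, not from an official requirement.

```python
import re
import subprocess

# Assumption: threshold taken from this post (gcc 4.8 crashed, gcc 5.3 worked).
MIN_GCC = (5, 3)


def parse_gcc_version(text):
    """Return (major, minor) from the first x.y[.z] version number in `text`."""
    match = re.search(r"(\d+)\.(\d+)(?:\.\d+)?", text)
    if match is None:
        raise ValueError("no version number found")
    return int(match.group(1)), int(match.group(2))


def gcc_is_new_enough(version_text, minimum=MIN_GCC):
    """True if the parsed (major, minor) is at least `minimum`."""
    return parse_gcc_version(version_text) >= minimum


def installed_gcc_version_text():
    """Return the raw output of `gcc --version` (requires gcc on the PATH)."""
    result = subprocess.run(["gcc", "--version"], stdout=subprocess.PIPE,
                            universal_newlines=True, check=True)
    return result.stdout
```

Calling `gcc_is_new_enough(installed_gcc_version_text())` inside the same shell that will run the build (for example inside `scl enable`) shows whether the newer compiler is really the active one.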

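Upgrading gcc

The system used here is CentOS 7, where devtoolset-4 provides gcc 5.3 without having to rebuild gcc from source. However, devtoolset-4 is no longer officially maintained. devtoolset-6 was tried as a fallback, but the error persisted. After some searching, usable devtoolset-4 packages were found here: download devtoolset-4-gcc-5.3.1-6.1.el7.x86_64.rpm and devtoolset-4-gcc-c++-5.3.1-6.1.el7.x86_64.rpm, then install them with yum localinstall *.rpm.

Installing gcc, however, reports that devtoolset-4-runtime is required. It eventually turned up in another package: download devtoolset-4-runtime-4.1-3.el7.x86_64.rpm, install it, and then install gcc.

Finally, installing g++ reports that devtoolset-4-libstdc++ must be installed. Download devtoolset-4-libstdc++-devel-5.3.1-6.1.el7.x86_64.rpm from the same site and install it; the g++ 5.3.1 installation can then proceed.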

Rebuilding and Rerunning

Rebuild dcn under scl:

scl enable devtoolset-4 -- python setup.py develop

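If a sysroot error appears, refer to this article for the fix.

Test Results: Vid4

Download the Vid4 data mentioned above and extract it into the datasets folder, then download EDVR_Vimeo90K_SR_L.pth into experiments/pretrained_models/ (see the paths in test_Vid4_REDS4_with_GT.py for the exact locations). With the environment configured, run it directly: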

python test_Vid4_REDS4_with_GT.py
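If the script fails because it cannot find its inputs, a quick existence check can narrow things down. This is a minimal sketch: `missing_paths` is a hypothetical helper, and the two entries in `REQUIRED` are assumed locations relative to the directory the script is run from. Adjust them to whatever paths test_Vid4_REDS4_with_GT.py actually uses.

```python
import os.path as osp


def missing_paths(paths):
    """Return the entries of `paths` that do not exist on disk."""
    return [p for p in paths if not osp.exists(p)]


# Assumed locations; check them against the paths in the test script.
REQUIRED = [
    "../experiments/pretrained_models/EDVR_Vimeo90K_SR_L.pth",
    "../datasets/Vid4",
]

for path in missing_paths(REQUIRED):
    print("missing:", path)
```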

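A screenshot of the results is shown below:

Judging from the output, the Vid4 numbers are the same as those in the paper, as shown in the table below:

Test Results: Vimeo-90K

To test on Vimeo-90K, the Vimeo-90K data must be downloaded first. The dataset is 82 GB and is hosted on an MIT server. If the download fails, the author keeps a backup copy on Baidu Cloud. (It will be removed on request if it infringes.)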

Link: https://pan.baidu.com/s/16lANG3EStS6sE7Ir1-AI8g

Extraction code: q4fi

If you use this data in your work, please cite:

@article{xue2019video,
  title={Video Enhancement with Task-Oriented Flow},
  author={Xue, Tianfan and Chen, Baian and Wu, Jiajun and Wei, Donglai and Freeman, William T},
  journal={International Journal of Computer Vision (IJCV)},
  volume={127},
  number={8},
  pages={1106--1125},
  year={2019},
  publisher={Springer}
}

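According to this issue, the model above can still reproduce the Vimeo-90K test results.

test_Vid4_REDS4_with_GT.py needs a small change so that it can test on Vimeo-90K. The modified code is below; the main change is how the test folders are located. Note that the low-resolution data vimeo_septuplet_matlabLRx4 is generated with the code in data_scripts/generate_LR_Vimeo90K.m (see the Vimeo-90K data preparation section linked here). Adjust the Vimeo-90K paths to match your own setup: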

'''
Test Vimeo90 (SR) and REDS4 (SR-clean, SR-blur, deblur-clean, deblur-compression) datasets
'''
import os
import os.path as osp
import glob
import logging
import numpy as np
import cv2
import torch

import utils.util as util
import data.util as data_util
import models.archs.EDVR_arch as EDVR_arch


def main():
    #################
    # configurations
    #################
    device = torch.device('cuda')
    os.environ['CUDA_VISIBLE_DEVICES'] = '2'  # GPU index; change to match your machine
    data_mode = 'Vimeo90'  # Vimeo90 | sharp_bicubic | blur_bicubic | blur | blur_comp
    # Vimeo90: SR
    # REDS4: sharp_bicubic (SR-clean), blur_bicubic (SR-blur);
    #        blur (deblur-clean), blur_comp (deblur-compression).
    stage = 1  # 1 or 2, use two stage strategy for REDS dataset.
    flip_test = False
    ############################################################################
    #### model
    if data_mode == 'Vimeo90':
        if stage == 1:
            model_path = '../experiments/pretrained_models/EDVR_Vimeo90K_SR_L.pth'
        else:
            raise ValueError('Vimeo90 does not support stage 2.')
    elif data_mode == 'sharp_bicubic':
        if stage == 1:
            model_path = '../experiments/pretrained_models/EDVR_REDS_SR_L.pth'
        else:
            model_path = '../experiments/pretrained_models/EDVR_REDS_SR_Stage2.pth'
    elif data_mode == 'blur_bicubic':
        if stage == 1:
            model_path = '../experiments/pretrained_models/EDVR_REDS_SRblur_L.pth'
        else:
            model_path = '../experiments/pretrained_models/EDVR_REDS_SRblur_Stage2.pth'
    elif data_mode == 'blur':
        if stage == 1:
            model_path = '../experiments/pretrained_models/EDVR_REDS_deblur_L.pth'
        else:
            model_path = '../experiments/pretrained_models/EDVR_REDS_deblur_Stage2.pth'
    elif data_mode == 'blur_comp':
        if stage == 1:
            model_path = '../experiments/pretrained_models/EDVR_REDS_deblurcomp_L.pth'
        else:
            model_path = '../experiments/pretrained_models/EDVR_REDS_deblurcomp_Stage2.pth'
    else:
        raise NotImplementedError

    if data_mode == 'Vimeo90':
        N_in = 7  # use N_in images to restore one HR image
    else:
        N_in = 5

    predeblur, HR_in = False, False
    back_RBs = 40
    if data_mode == 'blur_bicubic':
        predeblur = True
    if data_mode == 'blur' or data_mode == 'blur_comp':
        predeblur, HR_in = True, True
    if stage == 2:
        HR_in = True
        back_RBs = 20
    model = EDVR_arch.EDVR(128, N_in, 8, 5, back_RBs, predeblur=predeblur, HR_in=HR_in)

    #### dataset
    if data_mode == 'Vimeo90':
        test_dataset_folder = '../../dataset/VideoSR/vimeo_septuplet_matlabLRx4/sequences'
        GT_dataset_folder = '../../dataset/VideoSR/vimeo_septuplet/sequences'
    else:
        if stage == 1:
            test_dataset_folder = '../datasets/REDS4/{}'.format(data_mode)
        else:
            test_dataset_folder = '../results/REDS-EDVR_REDS_SR_L_flipx4'
            print('You should modify the test_dataset_folder path for stage 2')
        GT_dataset_folder = '../datasets/REDS4/GT'

    #### evaluation
    crop_border = 0
    border_frame = N_in // 2  # border frames when evaluate
    # temporal padding mode
    if data_mode == 'Vimeo90' or data_mode == 'sharp_bicubic':
        padding = 'new_info'
    else:
        padding = 'replicate'
    save_imgs = True

    save_folder = '../results/{}'.format(data_mode)
    util.mkdirs(save_folder)
    util.setup_logger('base', save_folder, 'test', level=logging.INFO, screen=True, tofile=True)
    logger = logging.getLogger('base')

    #### log info
    logger.info('Data: {} - {}'.format(data_mode, test_dataset_folder))
    logger.info('Padding mode: {}'.format(padding))
    logger.info('Model path: {}'.format(model_path))
    logger.info('Save images: {}'.format(save_imgs))
    logger.info('Flip test: {}'.format(flip_test))

    #### set up the models
    model.load_state_dict(torch.load(model_path), strict=True)
    model.eval()
    model = model.to(device)

    avg_psnr_l, avg_psnr_center_l, avg_psnr_border_l = [], [], []
    subfolder_name_l = []

    if data_mode == 'Vimeo90':
        #### read the Vimeo-90K test list; each non-empty line is a clip such as 00001/0266
        testfilename = '../../dataset/VideoSR/vimeo_septuplet/sep_testlist.txt'
        with open(testfilename, 'r') as testf:
            clips = [line.strip() for line in testf if line.strip()]
        subfolder_l = sorted(osp.join(test_dataset_folder, c) for c in clips)
        subfolder_GT_l = sorted(osp.join(GT_dataset_folder, c) for c in clips)
    else:
        #### other datasets: every subfolder is one clip
        subfolder_l = sorted(glob.glob(osp.join(test_dataset_folder, '*')))
        subfolder_GT_l = sorted(glob.glob(osp.join(GT_dataset_folder, '*')))

    # for each subfolder
    for subfolder, subfolder_GT in zip(subfolder_l, subfolder_GT_l):
        # last 10 characters, i.e. 'xxxxx/yyyy' for Vimeo-90K clips
        subfolder_name = subfolder[-10:]
        subfolder_name_l.append(subfolder_name)
        save_subfolder = osp.join(save_folder, subfolder_name)

        img_path_l = sorted(glob.glob(osp.join(subfolder, '*')))
        max_idx = len(img_path_l)
        if save_imgs:
            util.mkdirs(save_subfolder)

        #### read LQ and GT images
        imgs_LQ = data_util.read_img_seq(subfolder)
        img_GT_l = []
        for img_GT_path in sorted(glob.glob(osp.join(subfolder_GT, '*'))):
            img_GT_l.append(data_util.read_img(None, img_GT_path))

        avg_psnr, avg_psnr_border, avg_psnr_center, N_border, N_center = 0, 0, 0, 0, 0

        # process each image
        for img_idx, img_path in enumerate(img_path_l):
            img_name = osp.splitext(osp.basename(img_path))[0]
            select_idx = data_util.index_generation(img_idx, max_idx, N_in, padding=padding)
            imgs_in = imgs_LQ.index_select(0, torch.LongTensor(select_idx)).unsqueeze(0).to(device)

            if flip_test:
                output = util.flipx4_forward(model, imgs_in)
            else:
                output = util.single_forward(model, imgs_in)
            output = util.tensor2img(output.squeeze(0))

            if save_imgs:
                cv2.imwrite(osp.join(save_subfolder, '{}.png'.format(img_name)), output)

            # calculate PSNR
            output = output / 255.
            GT = np.copy(img_GT_l[img_idx])
            # For REDS, evaluate on RGB channels; for Vimeo90, evaluate on the Y channel
            if data_mode == 'Vimeo90':  # bgr2y, [0, 1]
                GT = data_util.bgr2ycbcr(GT, only_y=True)
                output = data_util.bgr2ycbcr(output, only_y=True)

            output, GT = util.crop_border([output, GT], crop_border)
            crt_psnr = util.calculate_psnr(output * 255, GT * 255)
            logger.info('{:3d} - {:25} \tPSNR: {:.6f} dB'.format(img_idx + 1, img_name, crt_psnr))

            if img_idx >= border_frame and img_idx < max_idx - border_frame:  # center frames
                avg_psnr_center += crt_psnr
                N_center += 1
            else:  # border frames
                avg_psnr_border += crt_psnr
                N_border += 1

        avg_psnr = (avg_psnr_center + avg_psnr_border) / (N_center + N_border)
        avg_psnr_center = avg_psnr_center / N_center
        avg_psnr_border = 0 if N_border == 0 else avg_psnr_border / N_border
        avg_psnr_l.append(avg_psnr)
        avg_psnr_center_l.append(avg_psnr_center)
        avg_psnr_border_l.append(avg_psnr_border)

        logger.info('Folder {} - Average PSNR: {:.6f} dB for {} frames; '
                    'Center PSNR: {:.6f} dB for {} frames; '
                    'Border PSNR: {:.6f} dB for {} frames.'.format(subfolder_name, avg_psnr,
                                                                   (N_center + N_border),
                                                                   avg_psnr_center, N_center,
                                                                   avg_psnr_border, N_border))

    logger.info('################ Tidy Outputs ################')
    for subfolder_name, psnr, psnr_center, psnr_border in zip(subfolder_name_l, avg_psnr_l,
                                                              avg_psnr_center_l, avg_psnr_border_l):
        logger.info('Folder {} - Average PSNR: {:.6f} dB. '
                    'Center PSNR: {:.6f} dB. '
                    'Border PSNR: {:.6f} dB.'.format(subfolder_name, psnr, psnr_center,
                                                     psnr_border))
    logger.info('################ Final Results ################')
    logger.info('Data: {} - {}'.format(data_mode, test_dataset_folder))
    logger.info('Padding mode: {}'.format(padding))
    logger.info('Model path: {}'.format(model_path))
    logger.info('Save images: {}'.format(save_imgs))
    logger.info('Flip test: {}'.format(flip_test))
    logger.info('Total Average PSNR: {:.6f} dB for {} clips. '
                'Center PSNR: {:.6f} dB. Border PSNR: {:.6f} dB.'.format(
                    sum(avg_psnr_l) / len(avg_psnr_l), len(subfolder_l),
                    sum(avg_psnr_center_l) / len(avg_psnr_center_l),
                    sum(avg_psnr_border_l) / len(avg_psnr_border_l)))


if __name__ == '__main__':
    main()
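The key change, locating clips from sep_testlist.txt rather than by globbing a folder, can also be tried in isolation. Below is a minimal sketch, assuming the list file has one clip per line in the form `00001/0266`. `read_clip_list` is a hypothetical helper and is not part of the EDVR repository.

```python
import os.path as osp


def read_clip_list(list_path, lq_root, gt_root):
    """Pair LQ and GT clip folders for every non-empty line of a list file."""
    with open(list_path, "r") as f:
        # strip() drops the trailing newline and also copes with a last
        # line that has no newline at all; blank lines are skipped.
        clips = sorted(line.strip() for line in f if line.strip())
    return [(osp.join(lq_root, c), osp.join(gt_root, c)) for c in clips]
```

Returning the pairs together, instead of sorting two separate lists, makes it harder for the low-resolution and ground-truth folders to get out of step.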

The results do match the Y-channel PSNR reported in the paper.
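For readers who want to see what a Y-channel PSNR involves, here is a minimal NumPy sketch. It assumes the BT.601 studio-range luma conversion (the convention used by MATLAB's `rgb2ycbcr`) and float BGR images in [0, 1]. `bgr_to_y` and `psnr` are illustrative helpers, not the repository's `data_util.bgr2ycbcr` or `util.calculate_psnr`, so small numerical differences from the script are possible.

```python
import numpy as np


def bgr_to_y(img):
    """Luma (Y of YCbCr, BT.601 studio range) of a float BGR image in [0, 1]."""
    weights = np.array([24.966, 128.553, 65.481])  # order: B, G, R
    # Scale to [0, 255], apply the weights, add the offset of 16,
    # then map the result back to [0, 1].
    return (np.dot(img * 255.0, weights) / 255.0 + 16.0) / 255.0


def psnr(img1, img2, peak=255.0):
    """PSNR in dB between two arrays that live on a [0, peak] scale."""
    diff = np.asarray(img1, dtype=np.float64) - np.asarray(img2, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 20.0 * np.log10(peak / np.sqrt(mse))
```

With an output `out` and ground truth `gt`, both float BGR in [0, 1], the metric would be `psnr(bgr_to_y(out) * 255, bgr_to_y(gt) * 255)`.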
