I Cluster Plan

| Hostname | IP | Installed software | Running processes |
| --- | --- | --- | --- |
| master | 192.168.0.110 | jdk1.8, hadoop1.2.1 | NameNode, JobTracker, SecondaryNameNode |
| slave1 | 192.168.0.111 | jdk1.8, hadoop1.2.1 | DataNode, TaskTracker |
| slave2 | 192.168.0.112 | jdk1.8, hadoop1.2.1 | DataNode, TaskTracker |
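The hostnames in the plan have to resolve on every node, because the configuration files refer to master, slave1, and slave2 by name. The original does not show how this was done; the sketch below assumes plain /etc/hosts entries, and the function name `cluster_hosts` is made up for illustration.

```shell
#!/usr/bin/env bash
# Print /etc/hosts entries for the three nodes in the cluster plan.
# Assumption: name resolution goes through /etc/hosts (not shown in the original).
cluster_hosts() {
  cat <<'EOF'
192.168.0.110 master
192.168.0.111 slave1
192.168.0.112 slave2
EOF
}

# Review the output first, then append it on every node as root, for example:
#   cluster_hosts >> /etc/hosts
cluster_hosts
```

If the nodes already resolve each other through DNS, this step is unnecessary.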

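II Installation Steps

1 Install and configure the Hadoop cluster

Six configuration files are modified in total: hadoop-env.sh, core-site.xml, hdfs-site.xml, mapred-site.xml, masters, and slaves.

1.1 Upload and extract the Hadoop package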

tar -zxvf hadoop-1.2.1.tar.gz

1.2 Edit the configuration files on the master node

All Hadoop 1.2.1 configuration files live in the hadoop-1.2.1/conf directory.

cd /opt/hadoop-1.2.1/conf

1.2.1 Edit hadoop-env.sh

export JAVA_HOME=/opt/jdk1.8

1.2.2 Edit core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/hadoop</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>/hadoop/name</value>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000</value>

</property>

</configuration>

1.2.3 Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

1.2.4 Edit mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>master:9001</value>

</property>

</configuration>
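Before copying the configuration to the other nodes, it can help to read the values back from the three site files and confirm there are no typos. The helper below is only a sketch: `get_prop` is a name invented here, and it assumes each `<name>` and `<value>` element sits on its own line, as in the files above.

```shell
#!/usr/bin/env bash
# Print the <value> that follows a given <name> in a Hadoop *-site.xml file.
# Assumes <name> and <value> are on separate lines (blank lines in between are fine).
get_prop() {
  local file=$1 name=$2
  awk -v n="$name" '
    index($0, "<name>" n "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, "")      # drop everything up to the opening tag
      sub(/<\/value>.*/, "")    # drop the closing tag and anything after it
      print
      exit
    }
  ' "$file"
}

# Example calls, using the paths from this walkthrough:
#   get_prop /opt/hadoop-1.2.1/conf/core-site.xml   fs.default.name
#   get_prop /opt/hadoop-1.2.1/conf/hdfs-site.xml   dfs.replication
#   get_prop /opt/hadoop-1.2.1/conf/mapred-site.xml mapred.job.tracker
```

The function prints nothing when the property is absent, which makes a missing entry easy to spot.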

1.2.5 Edit the masters file (it names the host that runs the SecondaryNameNode) and add the following line

master

1.2.6 Edit the slaves file and add the following lines

slave1

slave2

1.3 Copy the configured Hadoop directory to the other nodes

[root@master conf]# scp -r /opt/hadoop-1.2.1/ root@slave1:/opt

[root@master conf]# scp -r /opt/hadoop-1.2.1/ root@slave2:/opt
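With more than a couple of slaves, the two scp commands above could be driven from the slaves file instead of being typed by hand. This is a sketch only: `sync_to_slaves`, `HADOOP_DIR`, and `DRY_RUN` are names chosen for illustration, not Hadoop settings, and the dry-run default just prints the commands.

```shell
#!/usr/bin/env bash
# Copy the configured Hadoop directory to every host listed in conf/slaves.
# HADOOP_DIR and DRY_RUN are illustrative variables, not part of Hadoop.
HADOOP_DIR=${HADOOP_DIR:-/opt/hadoop-1.2.1}
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands; 0 = actually run scp

sync_to_slaves() {
  local slaves_file=$1 host
  # The "|| [ -n ... ]" part handles a last line without a trailing newline.
  while read -r host || [ -n "$host" ]; do
    # Skip blank lines and comment lines.
    case $host in ''|'#'*) continue ;; esac
    if [ "$DRY_RUN" = 1 ]; then
      echo "scp -r $HADOOP_DIR/ root@$host:/opt"
    else
      # Redirect stdin so scp cannot swallow the rest of the slaves file.
      scp -r "$HADOOP_DIR/" "root@$host:/opt" < /dev/null
    fi
  done < "$slaves_file"
}

# Usage: sync_to_slaves /opt/hadoop-1.2.1/conf/slaves
```

Running it once with `DRY_RUN=1` and checking the printed commands before switching to `DRY_RUN=0` avoids copying to a mistyped hostname.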

III Starting the Cluster

1 Format HDFS

Run the following command on the HDFS NameNode machine (master):

[root@master conf]# hadoop namenode -format

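2 Start HDFS and MapReduce

start-all.sh starts both the HDFS and the MapReduce daemons. In the output below, the "Stop it first." lines show that the NameNode, SecondaryNameNode, and JobTracker on master were already running when the command was issued.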

[root@master conf]# start-all.sh

Warning: $HADOOP_HOME is deprecated.

namenode running as process 22989. Stop it first.

slave1: \S

slave1: Kernel \r on an \m

slave2: \S

slave2: Kernel \r on an \m

slave1: starting datanode, logging to /opt/hadoop-1.2.1/libexec/../logs/hadoop-root-datanode-slave1.out

slave2: starting datanode, logging to /opt/hadoop-1.2.1/libexec/../logs/hadoop-root-datanode-slave2.out

master: \S

master: Kernel \r on an \m

master: secondarynamenode running as process 23161. Stop it first.

jobtracker running as process 23249. Stop it first.

slave2: \S

slave2: Kernel \r on an \m

slave1: \S

slave1: Kernel \r on an \m

3 Verify the daemons after a successful start

On the master node:

[root@master conf]# jps

23249 JobTracker

23161 SecondaryNameNode

22989 NameNode

24238 Jps

On the slave1 node:

[root@#localhost conf]# jps

11513 Jps

11214 TaskTracker

11407 DataNode

On the slave2 node:

[root@#localhost conf]# jps

10708 DataNode

10806 Jps

10510 TaskTracker
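The jps listings above can also be checked mechanically against the cluster plan. The function below is a sketch with an invented name (`has_daemons`); it reads jps-style output on standard input and fails if any expected daemon is missing.

```shell
#!/usr/bin/env bash
# Succeed only if every expected daemon name appears in jps-style output on stdin.
has_daemons() {
  local listing name
  listing=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <ClassName>"; compare the second field exactly,
    # so "NameNode" does not match "SecondaryNameNode".
    printf '%s\n' "$listing" |
      awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }' || {
        echo "missing: $name" >&2
        return 1
      }
  done
}

# On master:  jps | has_daemons NameNode SecondaryNameNode JobTracker
# On a slave: jps | has_daemons DataNode TaskTracker
```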

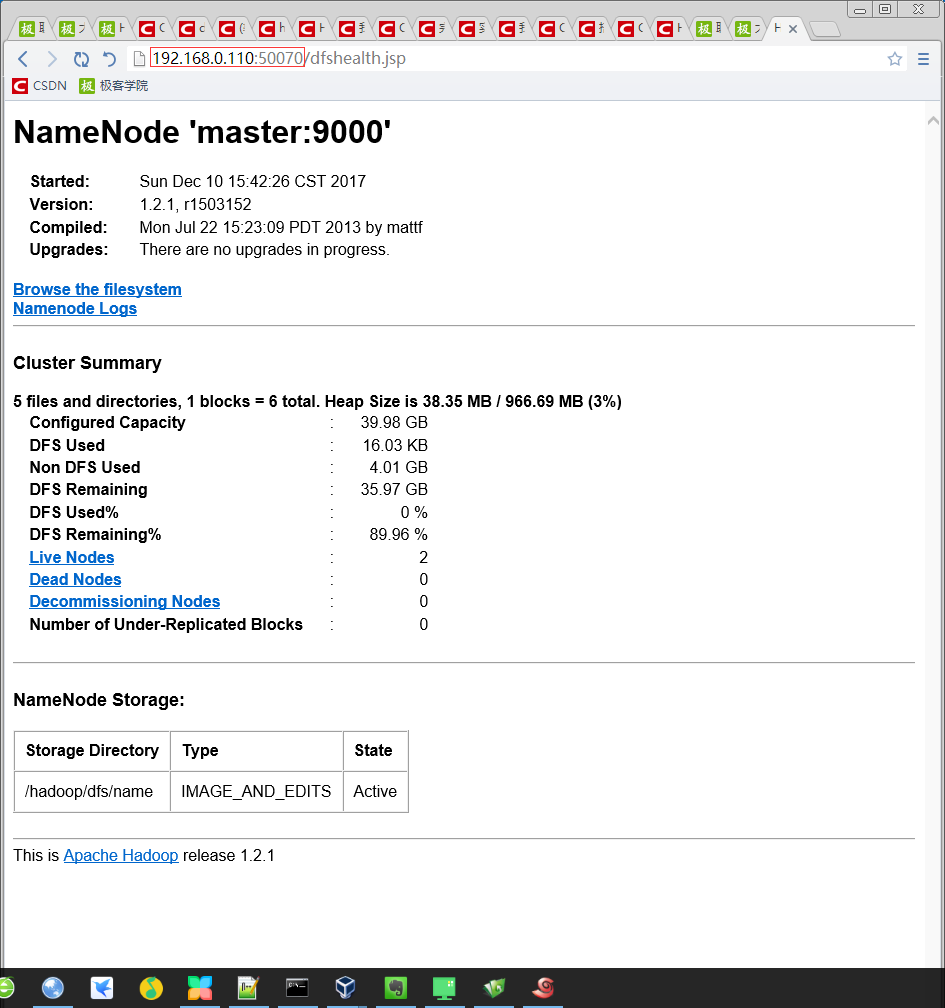

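IV Testing the Running Cluster

1 Access the web UI in a browser

With the default Hadoop 1.x ports, the NameNode page is served at http://master:50070 and the JobTracker page at http://master:50030.

2 Run the wordcount test

2.1 Create a directory on HDFS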

[root@#localhost conf]# hadoop fs -mkdir /in

Warning: $HADOOP_HOME is deprecated.

2.2 Upload a test data file from the local Linux filesystem to HDFS

[root@#localhost ~]# hadoop fs -put test.txt /in

Warning: $HADOOP_HOME is deprecated.

2.3 Run the example job

[root@#localhost ~]# hadoop jar /opt/hadoop-1.2.1/hadoop-examples-1.2.1.jar wordcount /in/ /out

Warning: $HADOOP_HOME is deprecated.

17/12/10 16:05:21 INFO input.FileInputFormat: Total input paths to process : 1

17/12/10 16:05:21 INFO util.NativeCodeLoader: Loaded the native-hadoop library

17/12/10 16:05:21 WARN snappy.LoadSnappy: Snappy native library not loaded

17/12/10 16:05:22 INFO mapred.JobClient: Running job: job_201712101542_0001

17/12/10 16:05:23 INFO mapred.JobClient: map 0% reduce 0%

17/12/10 16:05:28 INFO mapred.JobClient: map 100% reduce 0%

17/12/10 16:05:36 INFO mapred.JobClient: map 100% reduce 33%

17/12/10 16:05:37 INFO mapred.JobClient: map 100% reduce 100%

17/12/10 16:05:38 INFO mapred.JobClient: Job complete: job_201712101542_0001

17/12/10 16:05:38 INFO mapred.JobClient: Counters: 29

17/12/10 16:05:38 INFO mapred.JobClient: Map-Reduce Framework

17/12/10 16:05:38 INFO mapred.JobClient: Spilled Records=50

17/12/10 16:05:38 INFO mapred.JobClient: Map output materialized bytes=325

17/12/10 16:05:38 INFO mapred.JobClient: Reduce input records=25

17/12/10 16:05:38 INFO mapred.JobClient: Virtual memory (bytes) snapshot=3889389568

17/12/10 16:05:38 INFO mapred.JobClient: Map input records=28

17/12/10 16:05:38 INFO mapred.JobClient: SPLIT_RAW_BYTES=95

17/12/10 16:05:38 INFO mapred.JobClient: Map output bytes=269

17/12/10 16:05:38 INFO mapred.JobClient: Reduce shuffle bytes=325

17/12/10 16:05:38 INFO mapred.JobClient: Physical memory (bytes) snapshot=242126848

17/12/10 16:05:38 INFO mapred.JobClient: Reduce input groups=25

17/12/10 16:05:38 INFO mapred.JobClient: Combine output records=25

17/12/10 16:05:38 INFO mapred.JobClient: Reduce output records=25

17/12/10 16:05:38 INFO mapred.JobClient: Map output records=25

17/12/10 16:05:38 INFO mapred.JobClient: Combine input records=25

17/12/10 16:05:38 INFO mapred.JobClient: CPU time spent (ms)=1070

17/12/10 16:05:38 INFO mapred.JobClient: Total committed heap usage (bytes)=177016832

17/12/10 16:05:38 INFO mapred.JobClient: File Input Format Counters

17/12/10 16:05:38 INFO mapred.JobClient: Bytes Read=172

17/12/10 16:05:38 INFO mapred.JobClient: FileSystemCounters

17/12/10 16:05:38 INFO mapred.JobClient: HDFS_BYTES_READ=267

17/12/10 16:05:38 INFO mapred.JobClient: FILE_BYTES_WRITTEN=104686

17/12/10 16:05:38 INFO mapred.JobClient: FILE_BYTES_READ=325

17/12/10 16:05:38 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=219

17/12/10 16:05:38 INFO mapred.JobClient: Job Counters

17/12/10 16:05:38 INFO mapred.JobClient: Launched map tasks=1

17/12/10 16:05:38 INFO mapred.JobClient: Launched reduce tasks=1

17/12/10 16:05:38 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=9083

17/12/10 16:05:38 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0

17/12/10 16:05:38 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=5050

17/12/10 16:05:38 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0

17/12/10 16:05:38 INFO mapred.JobClient: Data-local map tasks=1

17/12/10 16:05:38 INFO mapred.JobClient: File Output Format Counters

17/12/10 16:05:38 INFO mapred.JobClient: Bytes Written=219

[root@#localhost ~]# hadoop fs -ls /out

Warning: $HADOOP_HOME is deprecated.

Found 3 items

-rw-r--r-- 2 root supergroup 0 2017-12-10 16:05 /out/_SUCCESS

drwxr-xr-x - root supergroup 0 2017-12-10 16:05 /out/_logs

-rw-r--r-- 2 root supergroup 219 2017-12-10 16:05 /out/part-r-00000

[root@#localhost ~]# hadoop fs -cat /out/part-r-00000

Warning: $HADOOP_HOME is deprecated.

12345 1

192.108.4.2 1

194.6.3.98 1

2016-02-14 1

211599 1

5678 1

6aaaaaaaaaaaa 1

7832 1

a 1

aa 1

aaa 1

aaaa 1

aaaaa 1

aabb 1

ab 1

bbbbbb45bbbb 1

c 1

cc 1

said 1

soid. 1

soooooooooood. 1

suud 1

xcccccu 1

xccccu 1

xcccu 1
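To cross-check the job output, the same count can be reproduced locally on the original test.txt. This sketch assumes the bundled wordcount example splits words on whitespace only, which matches the output above (punctuation such as the trailing dot in "soid." is kept); `local_wordcount` is a name invented here.

```shell
#!/usr/bin/env bash
# Count whitespace-separated words in a local file and print "word<TAB>count",
# sorted in byte order so the listing can be compared with the job output.
local_wordcount() {
  tr -s '[:space:]' '\n' < "$1" |
    grep -v '^$' |
    LC_ALL=C sort |
    LC_ALL=C uniq -c |
    awk '{ print $2 "\t" $1 }'
}

# Usage, with the file uploaded in step 2.2:
#   local_wordcount test.txt
#   hadoop fs -cat /out/part-r-00000
```

If the two listings differ, the uploaded file and the local copy are probably not the same version.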
