Our job, at Mapado, is collecting all “things to do”, all around the planet.

To gather this huge amount of information, we crawl the web, like Google does, searching for content related to concerts, shows, visits, attractions and so on. When we find an interesting page, we try to extract the “good” data from it.

One of our major challenges is to separate the content we are interested in (title, description, photos, dates, …) from all the crap around it (advertising, navigation bar, footer content, related content, …).

Part of that challenge is to group together content that is visually close. Usually, the elements that make up the main content of a web page are close to each other.

When we began working on this task, we innocently thought that we could rely on the HTML DOM. In the DOM, elements are stored as a hierarchy, so elements with the same parent have a good chance of being related.
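As a rough illustration of this DOM-only idea, the sketch below groups elements by the XPath of their direct parent. The helper name `group_by_parent` and the sample XPaths are made up for the example; they do not come from our crawler.

```python
from collections import defaultdict


def group_by_parent(xpaths):
    """Group element XPaths by the XPath of their direct parent."""
    groups = defaultdict(list)
    for xpath in xpaths:
        # Everything before the last "/" is the parent's XPath
        parent = xpath.rsplit("/", 1)[0]
        groups[parent].append(xpath)
    return dict(groups)


# Hypothetical XPaths, for illustration only
sample_xpaths = [
    "/html/body/div[1]/h1",
    "/html/body/div[1]/p",
    "/html/body/div[2]/ul",
]
siblings = group_by_parent(sample_xpaths)
```

Here the `h1` and the `p` end up in the same group because they share `/html/body/div[1]` as a parent, which is exactly the assumption that breaks down once CSS and JavaScript get involved.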

A very interesting paper covering page segmentation is “Page Segmentation by Web Content Clustering”.

Using the DOM hierarchy is a good starting point, but in many cases things get a lot more complicated:

- CSS stylesheets can move elements: elements that are close in the DOM hierarchy can be positioned anywhere, including outside the browser window

- CSS stylesheets can hide or show elements: several pieces of content can share the same visual position, with CSS and JavaScript moving (or removing) them

- JavaScript code can display things that are not even in the DOM

So we started considering using WebKit as a visual renderer in order to get visual features. There are a bunch of headless browser packages such as PhantomJS, zombie.js or CasperJS. A WebKit-based one such as PhantomJS can render a web page and expose the computed properties of each element on the page.

A few features are useful for clustering things together visually:

- position of the element in the page (from top and left)

- width and height of the element
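To make these features concrete, here is a minimal sketch of one element record and the bounding box derived from it. The dictionary keys mirror the ones used in the clustering code further down; the function name `bounding_box` and the sample values are assumptions for the example.

```python
def bounding_box(element):
    """Return (left, top, right, bottom) in pixels for one element record."""
    left, top = element["left"], element["top"]
    return (left, top, left + element["width"], top + element["height"])


# Hypothetical element, as a headless browser might report it
sample_element = {
    "xpath": "/html/body/div[1]/h1",
    "left": 40,
    "top": 120,
    "width": 600,
    "height": 48,
}
box = bounding_box(sample_element)
```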

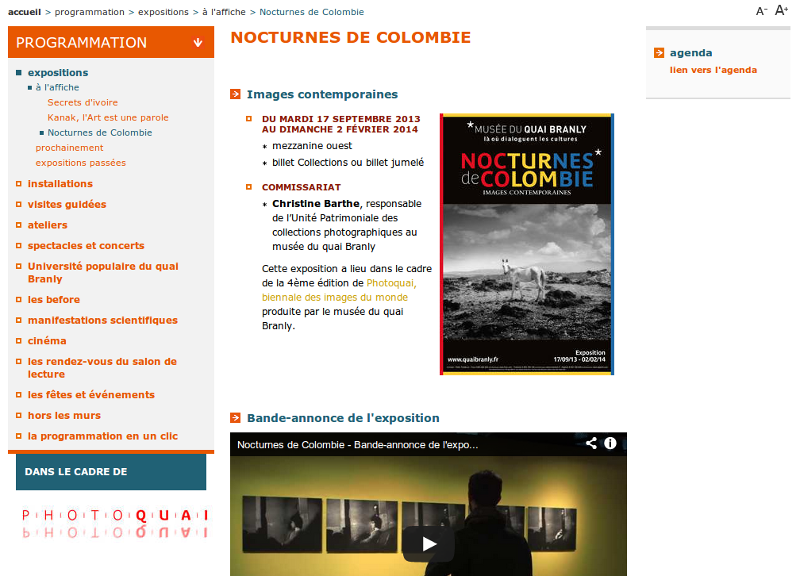

Below is a screenshot of the Quai Branly Museum page whose elements we want to cluster.

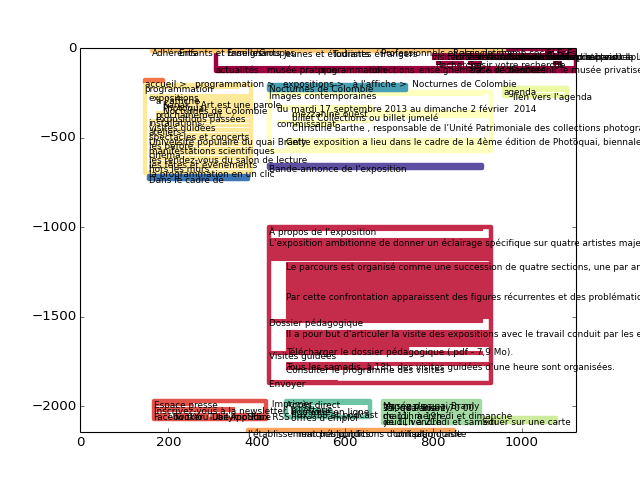

When building the clustering model, we found that one of the main features is the position of the leftmost and rightmost pixels of each block. Indeed, if you look at web pages, different content blocks are very often separated by a vertical gap, that is, an empty vertical strip between columns.
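The intuition about vertical gaps can be sketched on its own, without any clustering library: sort the blocks by left edge and start a new column whenever the next block begins clearly to the right of everything seen so far. This is only an illustration of the idea, not what our model does; `split_into_columns`, the `min_gap` threshold and the sample blocks are all assumptions.

```python
def split_into_columns(elements, min_gap=20):
    """Group elements into columns separated by at least min_gap empty pixels."""
    columns = []
    current_right = None  # rightmost pixel of the column being built
    for element in sorted(elements, key=lambda e: e["left"]):
        right = element["left"] + element["width"]
        if current_right is None or element["left"] - current_right >= min_gap:
            # Wide enough empty strip: this element starts a new column
            columns.append([element])
            current_right = right
        else:
            columns[-1].append(element)
            current_right = max(current_right, right)
    return columns


# Hypothetical blocks, for illustration only
sample_elements = [
    {"name": "nav", "left": 0, "width": 200},
    {"name": "title", "left": 240, "width": 600},
    {"name": "description", "left": 240, "width": 580},
    {"name": "ads", "left": 900, "width": 250},
]
columns = split_into_columns(sample_elements)
column_names = [[e["name"] for e in column] for column in columns]
```

With these sample values the title and description land in the same column, while the navigation bar and the ads each get their own.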

Adding the position of the center of each block and its DOM depth improves the quality of the clustering.
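A small sketch of these two extra features, assuming the same element record layout as in the clustering code (`left`, `top`, `width`, `height`, `xpath`). Counting the slashes in the XPath is one simple way to approximate DOM depth; the function name and sample values are hypothetical.

```python
def center_and_depth(element):
    """Return (center_x, center_y, dom_depth) for one element record."""
    center_x = element["left"] + element["width"] / 2
    center_y = element["top"] + element["height"] / 2
    # Each "/" in an absolute XPath corresponds to one level of the DOM
    dom_depth = element["xpath"].count("/")
    return center_x, center_y, dom_depth


# Hypothetical element, for illustration only
sample_element = {
    "xpath": "/html/body/div[1]/h1",
    "left": 40,
    "top": 120,
    "width": 600,
    "height": 48,
}
extra_features = center_and_depth(sample_element)
```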

Below is a first implementation of these concepts in Python, using scikit-learn to perform the clustering.

```python
import numpy as np
from sklearn.cluster import DBSCAN  # Use scikit-learn to perform clustering

# ElementList contains one entry for each element we want to cluster, with its
# top and left position, width, height and XPath


def find(text, char):
    # find() was not defined in the original snippet; this helper is an
    # assumption: it returns the index of every occurrence of char in text
    return [i for i, c in enumerate(text) if c == char]


# Build a list of every XPath prefix (ancestor path) found in the elements
xpath_dict = set()
for item in ElementList:
    path_split_idx = find(item["xpath"], "/")
    for idx in path_split_idx:
        xpath_dict.add(item["xpath"][:idx])
xpath_dict = list(xpath_dict)

# Build the feature matrix, one row per element
features = []  # Will store the features of each element to cluster
for item in ElementList:
    # Keep only elements inside the browser visual boundary
    if item["left"] > 0 and item["top"] > 0 and item["left"] + item["width"] < 1200:
        visual_features = [
            item["left"],
            item["left"] + item["width"],
            item["top"],
            item["top"] + item["height"],
            (item["left"] + item["width"] + item["left"]) / 2,
            (item["top"] + item["top"] + item["height"]) / 2,
        ]
        # Use DOM ancestor presence as a feature. Default is 0
        dom_features = [0] * len(xpath_dict)
        path_split_idx = find(item["xpath"], "/")
        for i, idx in enumerate(path_split_idx):
            # Give an empirical distance weight to each level of the DOM
            # (far from perfect implementation)
            dom_features[xpath_dict.index(item["xpath"][:idx])] = 800 / (i + 1)
        # Create the feature vector combining visual and DOM features
        features.append(visual_features + dom_features)

features = np.asarray(features)  # Convert to a numpy array to make DBSCAN work

# DBSCAN is a good general clustering algorithm
eps_value = 900  # maximum distance between two elements considered neighbours
db = DBSCAN(eps=eps_value, min_samples=1, metric='cityblock').fit(features)

# DBSCAN returns a label for each row of the input array
labels = db.labels_
```

This algorithm is far from perfect, but it is a good starting point when trying to cluster things visually.
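For readers who want to try the idea without a crawler, here is a self-contained toy run, a sketch under simplifying assumptions: three hand-written elements, only the four edge positions as features, and an `eps` of 400 chosen to suit these sample values. It also shows one way to map the DBSCAN labels back to the elements.

```python
from collections import defaultdict

import numpy as np
from sklearn.cluster import DBSCAN

# Hypothetical elements: (name, left, top, width, height), in pixels
elements = [
    ("title", 240, 100, 600, 40),
    ("description", 240, 150, 600, 200),
    ("ads", 1000, 100, 150, 300),
]

# One row per element: left edge, right edge, top edge, bottom edge
features = np.asarray(
    [
        [left, left + width, top, top + height]
        for _, left, top, width, height in elements
    ]
)

# min_samples=1 means every element belongs to a cluster (no noise points)
labels = DBSCAN(eps=400, min_samples=1, metric="cityblock").fit(features).labels_

# Map each cluster label back to the names of its elements
clusters = defaultdict(list)
for (name, *_), label in zip(elements, labels):
    clusters[int(label)].append(name)
cluster_names = sorted(clusters.values())
```

The title and description are 260 pixels apart in cityblock distance, so they fall into one cluster, while the ads block is more than 1000 pixels away from both and stays on its own.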

Below is the result of the clustering on one page of the Quai Branly Museum site, corresponding to the screenshot above.
