Overview

Storm makes it easy to define the level of parallelism for each spout and bolt across the cluster, so a topology can be scaled out as load grows.

Terms

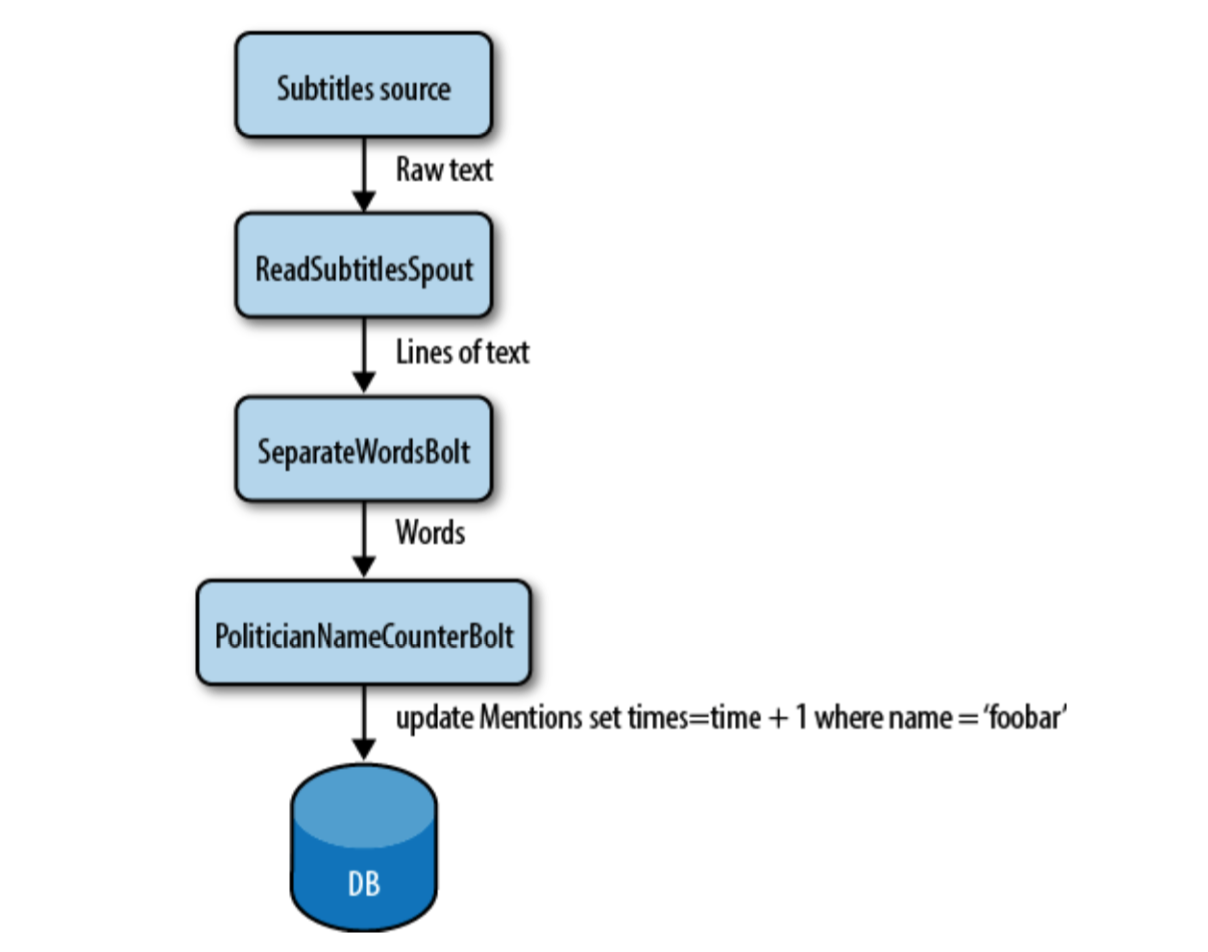

- Spout: the component that handles a topology's input stream. It reads data from a source and emits it as tuples.

- Bolt: the component that receives data from a spout (or from another bolt) and transforms it in some way.

- Topology: the arrangement of all components (spouts and bolts) and the connections between them.
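The three terms above can be pictured with a plain-Java analogy. This is only a sketch with no Storm dependency: `TopologySketch`, `spout`, `normalize`, and `run` are hypothetical names chosen for illustration, and real Storm components run distributed across a cluster rather than in a single loop.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.Locale;
import java.util.function.Function;

public class TopologySketch {
    // "Spout": a source that hands out one tuple (here, a line of text) at a time.
    public static Iterator<String> spout(List<String> lines) {
        return lines.iterator();
    }

    // "Bolt": transforms one input tuple into zero or more output tuples.
    public static List<String> normalize(String line) {
        List<String> words = new ArrayList<>();
        for (String w : line.trim().split("\\s+")) {
            if (!w.isEmpty()) {
                words.add(w.toLowerCase(Locale.ROOT));
            }
        }
        return words;
    }

    // "Topology": the wiring that feeds the spout's output into the bolt.
    public static List<String> run(Iterator<String> source, Function<String, List<String>> bolt) {
        List<String> out = new ArrayList<>();
        while (source.hasNext()) {
            out.addAll(bolt.apply(source.next()));
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("Hello Storm", "hello world");
        // prints [hello, storm, hello, world]
        System.out.println(run(spout(lines), TopologySketch::normalize));
    }
}
```

In Storm itself the wiring step is declared with a `TopologyBuilder`, and the framework decides where each component instance runs.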

Node

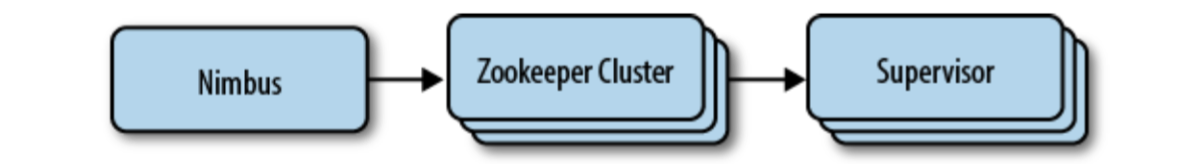

There are two types of nodes in a Storm cluster:

- master node: runs a daemon called Nimbus, which is responsible for

  - distributing the code across the cluster

  - assigning tasks to each node

  - monitoring for failures

- worker node: runs a daemon called Supervisor, which executes a portion of a topology.

Underneath, Storm uses ZeroMQ for messaging between workers; releases from the 0.9 line onward can use Netty instead.

Sample

Spout

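    import java.io.FileNotFoundException;
    import java.io.FileReader;
    import java.io.IOException;
    import java.util.Map;

    // Storm 1.0+ packages; releases before 1.0 use backtype.storm.* instead
    import org.apache.storm.spout.SpoutOutputCollector;
    import org.apache.storm.task.TopologyContext;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.base.BaseRichSpout;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Values;

    public class WordReader extends BaseRichSpout {
        private SpoutOutputCollector collector;
        private FileReader fileReader;

        // the first method called by the Storm framework
        public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {
            // context: contains all Topology data
            // conf: is created in the Topology definition (the key must match the one set in main)
            try {
                this.fileReader = new FileReader(conf.get("wordsFile").toString());
            } catch (FileNotFoundException e) {
                throw new RuntimeException("Error reading file " + conf.get("wordsFile"), e);
            }
            // collector: enables us to emit the data that will be processed by bolts
            this.collector = collector;
        }

        // declare the output fields
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("field_name"));
        }

        public void close() {
        }

        // emits values that will be processed by bolts
        public void nextTuple() {
            try {
                // ...
                fileReader.read();
                // ...
                // the field name is declared in declareOutputFields
                collector.emit(new Values("field_value"));
                // ...
            } catch (IOException e) {
                throw new RuntimeException("Error reading tuple", e);
            }
        }

        // called when a tuple emitted with this msgId has been fully processed
        public void ack(Object msgId) {
        }

        // called when a tuple emitted with this msgId has failed
        public void fail(Object msgId) {
        }
    }

Bolt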

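    import java.util.Map;

    // Storm 1.0+ packages; releases before 1.0 use backtype.storm.* instead
    import org.apache.storm.task.TopologyContext;
    import org.apache.storm.topology.BasicOutputCollector;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.base.BaseBasicBolt;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Tuple;
    import org.apache.storm.tuple.Values;

    public class WordNormalizer extends BaseBasicBolt {
        // BaseBasicBolt.prepare takes no collector; one is passed to execute instead
        public void prepare(Map conf, TopologyContext context) {
        }

        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("field_name"));
        }

        public void cleanup() {
        }

        public void execute(Tuple input, BasicOutputCollector collector) {
            // read by field name (getStringByField) or by index (getString(0));
            // the name must match a field declared by the upstream component
            String str = input.getStringByField("spout_output_field_name");
            // process str
            // ...
            // emit the value or persist the message
            collector.emit(new Values("value"));
            // no explicit ack: BaseBasicBolt acknowledges the tuple when execute returns
            // and fails it if execute throws FailedException
        }
    }

Main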

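    // Storm 1.0+ packages; releases before 1.0 use backtype.storm.* instead
    import org.apache.storm.Config;
    import org.apache.storm.LocalCluster;
    import org.apache.storm.topology.TopologyBuilder;
    import org.apache.storm.tuple.Fields;

    public class TopologyMain {
        public static void main(String[] args) throws Exception {
            // Configuration
            Config conf = new Config();
            conf.setDebug(true);
            conf.put("wordsFile", args[0]);
            conf.put(Config.TOPOLOGY_MAX_SPOUT_PENDING, 1);

            // Topology definition
            TopologyBuilder builder = new TopologyBuilder();
            builder.setSpout("word-reader", new WordReader());
            builder.setBolt("word-normalizer", new WordNormalizer()).shuffleGrouping("word-reader");
            // WordCounter (not shown here) is a second bolt; the trailing 1 is its parallelism hint,
            // and "word" must be a field declared by word-normalizer
            builder.setBolt("word-counter", new WordCounter(), 1).fieldsGrouping("word-normalizer", new Fields("word"));

            // Define the cluster (in-process, for local testing)
            LocalCluster cluster = new LocalCluster();

            // Run the topology
            cluster.submitTopology("Getting-Started-Toplogie", conf, builder.createTopology());
            Thread.sleep(1000);

            // Shutdown
            cluster.shutdown();
        }
    }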
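The `shuffleGrouping` and `fieldsGrouping` calls in the topology definition control which bolt task receives each tuple. Shuffle grouping spreads tuples evenly across tasks, while fields grouping sends tuples with equal values in the named fields to the same task, which is what a word counter needs. The plain-Java sketch below illustrates the idea only. `GroupingSketch`, `fieldsTask`, and `shuffleTask` are hypothetical names, the round robin is a simple stand-in for shuffling, and none of this is Storm's actual routing code.

```java
import java.util.Arrays;
import java.util.List;

public class GroupingSketch {
    // Fields-grouping idea: hash the grouping values so that equal values
    // always select the same task index in [0, numTasks).
    public static int fieldsTask(List<Object> groupingValues, int numTasks) {
        return Math.floorMod(groupingValues.hashCode(), numTasks);
    }

    // Shuffle-grouping stand-in: plain round robin over the tasks.
    private int next = 0;

    public int shuffleTask(int numTasks) {
        int task = next;
        next = (next + 1) % numTasks;
        return task;
    }

    public static void main(String[] args) {
        int tasks = 4;
        GroupingSketch shuffle = new GroupingSketch();
        for (String word : Arrays.asList("storm", "bolt", "storm")) {
            // Both "storm" tuples get the same fields task but different shuffle tasks.
            System.out.println(word
                    + " -> fields task " + fieldsTask(Arrays.<Object>asList(word), tasks)
                    + ", shuffle task " + shuffle.shuffleTask(tasks));
        }
    }
}
```

With a parallelism hint of 1, as in the `word-counter` line, every tuple reaches the same task regardless of grouping; the distinction matters once the hint is raised.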
