- ECCV 2016

- paper

- poster

- Authors: Chi Su, Shiliang Zhang, Junliang Xing, Wen Gao, and Qi Tian

Intuition

- Low-level features are sensitive to viewpoint, body pose, etc., and behave differently under different metric learning methods.

- Attributes are mid-level features.

- Problem: it is difficult to acquire enough training data for a large set of attributes.

- Related work: deep attribute learning works.

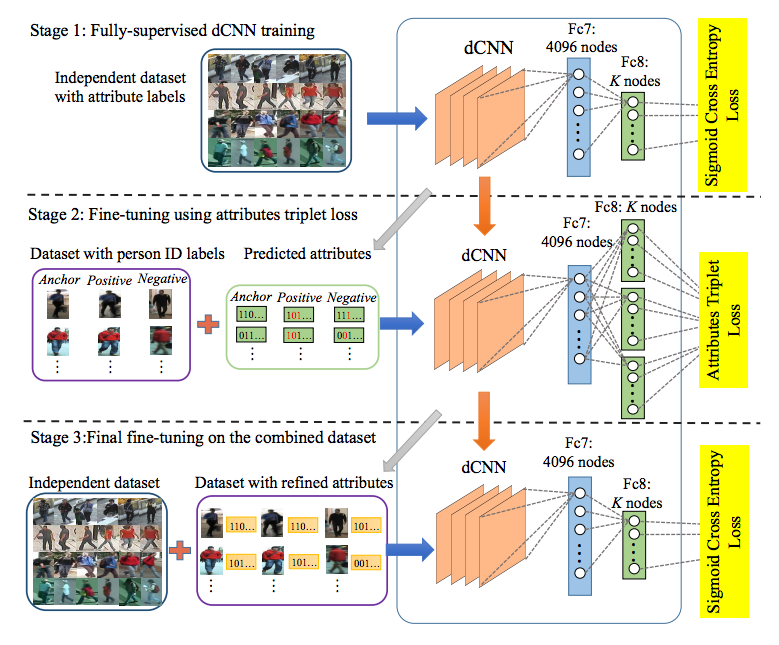

Three-stage model

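- Goal: learn an attribute prediction model for person ReID through dCNN training, i.e. a detector $\mathcal{O}$ that maps an input image $I$ to its attribute labels $A_I=\mathcal{O}(I)$.

- First stage: train on an attribute dataset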

- AlexNet

- Dataset $T=\{t_1,t_2,\dots,t_N\}$, with each sample labeled with binary attributes.

- Get the trained model $S_1$, which can predict attributes for any test sample but lacks discriminative power.

- Second stage: fine-tuning on person ID dataset

- Dataset $U=\{u_1,u_2,\dots,u_M\}$ has person ID labels.

- For each sample, only the $p=10$ attributes with the highest confidence scores are set to 1; all others are set to 0.

- Triplet loss

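$$L=\sum_{e}^{E}\left\{\max\left(0,\ D\left(A^{(e)}_{(a)},A^{(e)}_{(p)}\right)+\theta-D\left(A^{(e)}_{(a)},A^{(e)}_{(n)}\right)\right)+\gamma\times\varepsilon\right\}$$

$$\varepsilon=D\left(A^{(e)}_{(a)},\tilde{A}^{(e)}_{(a)}\right)+D\left(A^{(e)}_{(p)},\tilde{A}^{(e)}_{(p)}\right)+D\left(A^{(e)}_{(n)},\tilde{A}^{(e)}_{(n)}\right)$$

where $(a)$, $(p)$, and $(n)$ denote the anchor, positive, and negative samples of triplet $e$, and $\theta=1$, $\gamma=0.01$ in the experimental setting.

- Get model $S_2$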
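The second-stage objective can be sketched in a few lines of NumPy. This is only an illustration, not the authors' implementation: it assumes that $D$ is a squared Euclidean distance and that $\tilde{A}$ holds the top-$p$ binarized reference attributes, neither of which is stated in these notes. The function names `binarize_top_p`, `sq_dist`, and `triplet_attribute_loss` are hypothetical.

```python
import numpy as np

def binarize_top_p(scores, p=10):
    """Set the p highest-scoring attributes to 1 and all others to 0."""
    scores = np.asarray(scores, dtype=float)
    binary = np.zeros_like(scores)
    top = np.argsort(scores)[-p:]  # indices of the p largest scores
    binary[top] = 1.0
    return binary

def sq_dist(x, y):
    """Squared Euclidean distance (assumed choice for D)."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.dot(diff, diff))

def triplet_attribute_loss(triplets, references, theta=1.0, gamma=0.01):
    """Sum over triplets of the hinge term plus gamma times the deviation term.

    triplets:   list of (A_anchor, A_positive, A_negative) attribute vectors
    references: list of matching (A~_anchor, A~_positive, A~_negative) vectors
    """
    total = 0.0
    for (anc, pos, neg), (r_anc, r_pos, r_neg) in zip(triplets, references):
        # Pull anchor and positive together, push the negative at least theta away.
        hinge = max(0.0, sq_dist(anc, pos) + theta - sq_dist(anc, neg))
        # Penalize drift of each attribute vector from its reference.
        eps = sq_dist(anc, r_anc) + sq_dist(pos, r_pos) + sq_dist(neg, r_neg)
        total += hinge + gamma * eps
    return total
```

With the default $\gamma=0.01$ the deviation term acts as a weak regularizer, so the hinge term dominates whenever a triplet violates the margin.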

- Third stage: fine-tuning on the Combined Dataset

- First predict attributes for dataset $U$ using $S_2$; these predictions act as ground-truth attributes.

- Combine $T$ and $U$ into one large attribute dataset and train on it to get the final deep attribute extractor.
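The data preparation for the third stage amounts to pseudo-labeling. The sketch below assumes a hypothetical callable `predict_s2` that returns a binary attribute vector for one sample of $U$; the helper name `build_combined_dataset` and the in-memory list representation are also illustrative assumptions, not details from the paper.

```python
import numpy as np

def build_combined_dataset(t_samples, t_labels, u_samples, predict_s2):
    """Label U with S2's predictions and append it to the attribute dataset T."""
    # Predictions of S2 on U are treated as ground-truth attribute labels.
    u_labels = np.stack([predict_s2(u) for u in u_samples])
    samples = list(t_samples) + list(u_samples)
    labels = np.concatenate([np.asarray(t_labels), u_labels], axis=0)
    return samples, labels
```

The returned pair can then be fed to the same attribute training procedure used in the first stage.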

Experiment

- Attribute dataset for the first stage: PETA, with each multi-class attribute split into binary attributes.

- Tracking dataset for the second stage: MOT Challenge
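Splitting a multi-class attribute into binary attributes is a one-hot expansion: one binary attribute per class. A minimal sketch follows; the attribute name and class list in the usage line are made up for illustration and are not taken from PETA's annotation files.

```python
def split_multiclass(name, classes, value):
    """Expand one multi-class attribute into one binary attribute per class."""
    return {f"{name}={c}": int(c == value) for c in classes}

# Hypothetical attribute and classes, for illustration only.
binary_attrs = split_multiclass("upper_color", ["red", "blue", "black"], "blue")
```

Here `binary_attrs` marks only the `upper_color=blue` entry as 1 and leaves the other two at 0.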

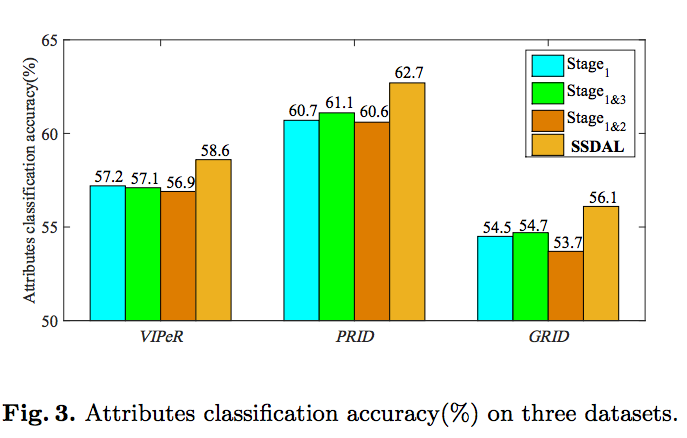

- Attribute accuracy

- Evaluation datasets: VIPeR, PRID, GRID, Market-1501 (VIPeR, GRID, and PRID are included in the PETA dataset, so they are excluded from PETA during training)

- Applying the XQDA metric learning method gives a further improvement

- Results: VIPeR 43.5% (state of the art: 63.9%), PRID 450S 22.6% (state of the art: 60%+), Market single query 39.4%, multiple query 49.0% (state of the art: 78%)

- The model does not use these three datasets for training, so it cannot be compared directly with supervised re-ID models.

- Additional experiments

- Combining hand-crafted features with the deep attributes improves results by about 8% on VIPeR.

- Compared with directly fine-tuning the FC7 features of AlexNet, the deep attributes perform better.
