1. [Blog] Hello, Gradient Descent

Summary:

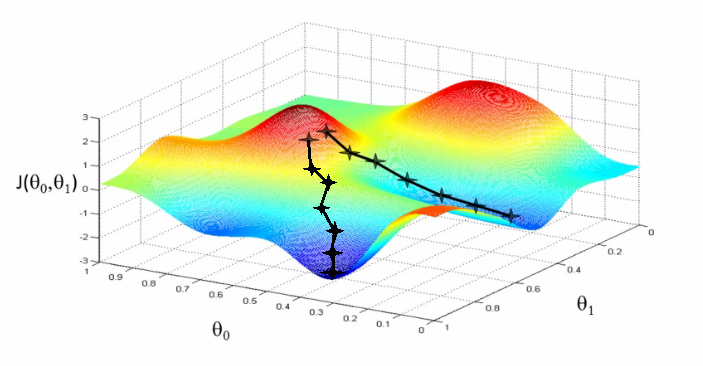

Hi there! This article is part of a series called “Hello, <algorithm>”. In this series we give some insight into how different AI algorithms work, and we have fun implementing them. Today we are going to talk about Gradient Descent, a simple yet powerful optimization algorithm for finding a (local) minimum of a given function.

Original link: https://medium.com/ai-society/hello-gradient-descent-ef74434bdfa5#.ffcmstu3x
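
The core idea of gradient descent is to step repeatedly against the gradient, x ← x − η·f′(x), until the iterate settles near a (local) minimum. Below is a minimal Python sketch, assuming a one-dimensional function whose derivative we can write by hand. The function name `gradient_descent`, the learning rate η = 0.1, and the fixed 100-step budget are illustrative choices, not taken from the linked article.

```python
def gradient_descent(grad, x0, learning_rate=0.1, n_steps=100):
    """Minimize a 1-D differentiable function, given its derivative `grad`."""
    x = x0
    for _ in range(n_steps):
        # Move a small step in the direction of steepest descent.
        x = x - learning_rate * grad(x)
    return x


# Example: f(x) = (x - 3)^2 has derivative 2(x - 3) and its minimum at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 4))  # prints 3.0
```

With this learning rate, each step shrinks the distance to the minimum by a factor of 0.8, so 100 steps are more than enough here. A learning rate that is too large (above 1.0 for this particular f) would make the iterates diverge instead.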

2. [Paper & Blog] Variational auto-encoder for "Frey faces" using Keras

Summary:

How can we perform efficient inference and learning in directed probabilistic models, in the presence of continuous latent variables with intractable posterior distributions, and large datasets? We introduce a stochastic variational inference and learning algorithm that scales to large datasets and, under some mild differentiability conditions, even works in the intractable case. Our contributions are two-fold. First, we show that a reparameterization of the variational lower bound yields a lower bound estimator that can be straightforwardly optimized using standard stochastic gradient methods. Second, we show that for i.i.d. datasets with continuous latent variables per datapoint, posterior inference can be made especially efficient by fitting an approximate inference model (also called a recognition model) to the intractable posterior using the proposed lower bound estimator. Theoretical advantages are reflected in experimental results.

Original link: http://dohmatob.github.io/research/2016/10/22/VAE.html

Paper link: https://arxiv.org/pdf/1312.6114.pdf

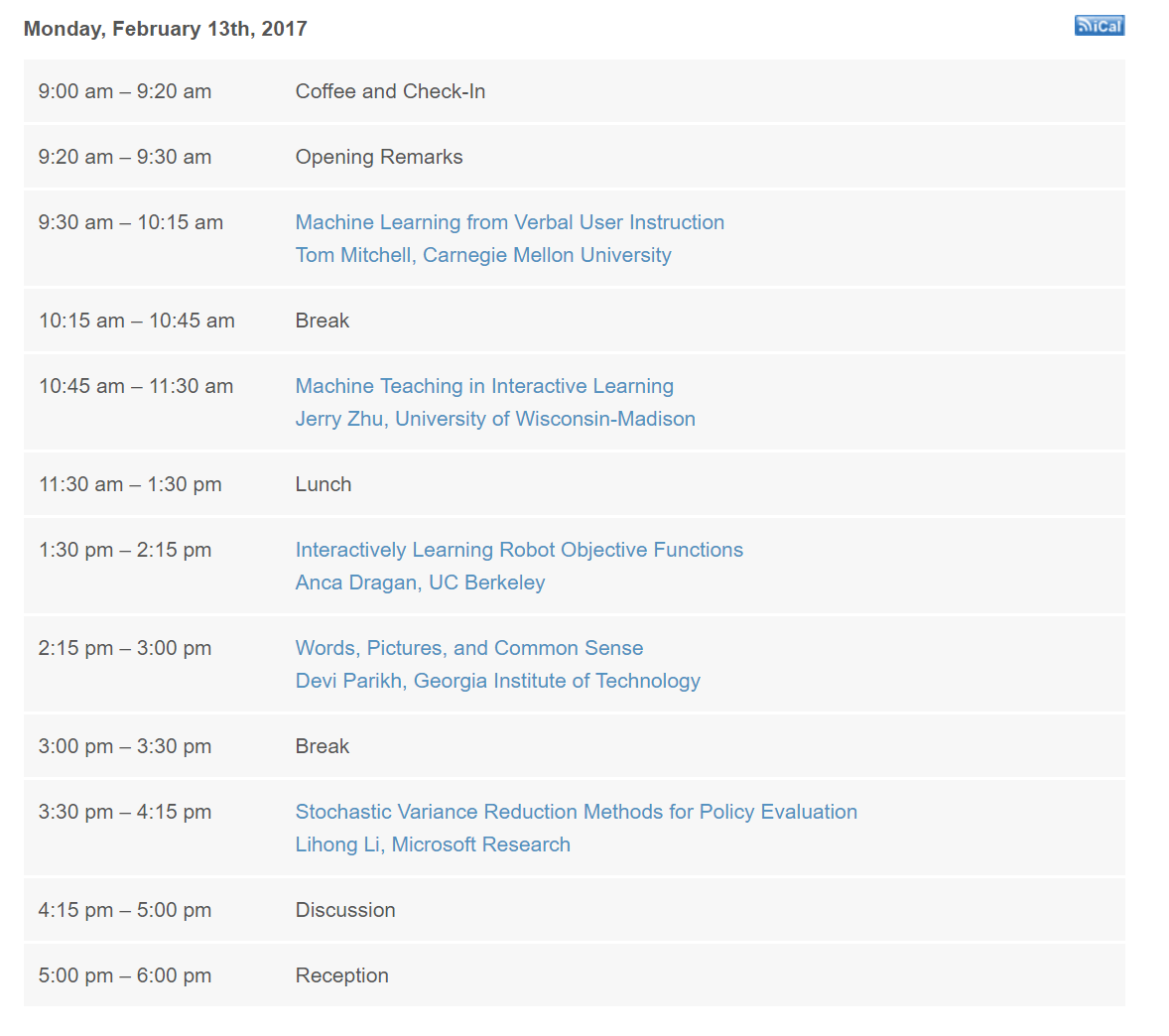
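
The "reparameterization of the variational lower bound" mentioned above rewrites a sample z ~ N(μ, σ²) as z = μ + σ·ε with ε ~ N(0, 1). The randomness then no longer depends on the encoder's outputs, so gradients can flow through μ and σ. Below is a minimal pure-Python sketch, assuming a diagonal Gaussian encoder that outputs μ and log σ², together with a standard normal prior. For this case, the KL term of the lower bound has the closed form −½ Σ (1 + log σ² − μ² − σ²). The function names are hypothetical and are not taken from the linked Keras post.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Draw z ~ N(mu, diag(exp(log_var))) as z = mu + sigma * eps.

    eps is sampled independently of (mu, log_var), so z is a deterministic,
    differentiable function of the encoder outputs given the noise.
    """
    z = []
    for m, lv in zip(mu, log_var):
        eps = rng.gauss(0.0, 1.0)          # parameter-free noise
        sigma = math.exp(0.5 * lv)         # sigma = exp(log_var / 2)
        z.append(m + sigma * eps)
    return z


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) )."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


rng = random.Random(0)
z = reparameterize([0.0, 2.0], [0.0, math.log(0.25)], rng)
print(z)                                    # one random 2-D latent sample
print(kl_to_standard_normal([0.0], [0.0]))  # prints 0.0 (posterior equals prior)
```

In a real VAE, μ and log σ² come from the encoder network, and an autodiff framework backpropagates through `mu + sigma * eps`. This sketch only shows the arithmetic.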

3. [Video] Interactive Learning

Summary:

Original link: https://simons.berkeley.edu/workshops/schedule/3749

4. [Code] Code for TensorFlow Machine Learning Cookbook

Summary:

Ch 1: Getting Started with TensorFlow

Ch 2: The TensorFlow Way

Ch 3: Linear Regression

Ch 4: Support Vector Machines

Ch 5: Nearest Neighbor Methods

Ch 6: Neural Networks

Ch 7: Natural Language Processing

Ch 8: Convolutional Neural Networks

Ch 9: Recurrent Neural Networks

Ch 10: Taking TensorFlow to Production

Ch 11: More with TensorFlow

Original link: https://github.com/nfmcclure/tensorflow_cookbook

5. [Blog] Recurrent Neural Networks for Beginners

Summary:

In this post I discuss the basics of Recurrent Neural Networks (RNNs) which are deep learning models that are becoming increasingly popular. I don’t intend to get too heavily into the math and proofs behind why these work and am aiming for a more abstract understanding.

Original link: https://medium.com/@camrongodbout/recurrent-neural-networks-for-beginners-7aca4e933b82#.spi0ozxux
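
What makes an RNN "recurrent" is that the hidden state from the previous step is fed back in: h_t = tanh(W_xh·x_t + W_hh·h_{t−1} + b_h). Below is a minimal pure-Python sketch of that forward pass, assuming a vanilla (Elman-style) RNN with a tanh activation and no output layer. The function name and the toy weights are hypothetical and are not taken from the linked post.

```python
import math


def rnn_forward(inputs, W_xh, W_hh, b_h, h0):
    """Run a vanilla RNN over a sequence and return the hidden state at each step.

    inputs: list of input vectors x_t
    W_xh:   hidden_size x input_size matrix (input -> hidden)
    W_hh:   hidden_size x hidden_size matrix (previous hidden -> hidden)
    b_h:    hidden bias vector
    h0:     initial hidden state
    """
    h = h0
    states = []
    for x in inputs:
        # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), computed unit by unit;
        # the comprehension reads the previous h before it is reassigned.
        h = [math.tanh(sum(W_xh[i][j] * x[j] for j in range(len(x)))
                       + sum(W_hh[i][k] * h[k] for k in range(len(h)))
                       + b_h[i])
             for i in range(len(h))]
        states.append(h)
    return states


# Toy example: 1-D input, 2 hidden units. The second input is zero, yet the
# second hidden state is non-zero because it "remembers" the first input.
states = rnn_forward(
    inputs=[[1.0], [0.0]],
    W_xh=[[0.5], [-0.5]],
    W_hh=[[0.9, 0.0], [0.0, 0.9]],
    b_h=[0.0, 0.0],
    h0=[0.0, 0.0],
)
print(states)
```

The same weights are reused at every time step. This weight sharing is what lets an RNN process sequences of arbitrary length.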
