I. MNIST handwritten digit recognition

1. About the dataset

MNIST is a classic dataset of 70,000 samples: 60,000 training samples and 10,000 test samples.

2. Download: http://yann.lecun.com/exdb/mnist/

3. File description

train-images-idx3-ubyte.gz: training set images (9912422 bytes)

train-labels-idx1-ubyte.gz: training set labels (28881 bytes)

t10k-images-idx3-ubyte.gz: test set images (1648877 bytes)

t10k-labels-idx1-ubyte.gz: test set labels (4542 bytes)
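Each of these files is gzip-compressed and stored in the IDX format: a 4-byte magic number (two zero bytes, a data-type code, and the number of dimensions), followed by one big-endian 32-bit size per dimension, then the raw bytes. The sketch below reads only that header using the standard library. The helper name `read_idx_header` is made up for illustration, and a tiny in-memory file stands in for a real download.

```python
import gzip
import io
import struct


def read_idx_header(fileobj):
    """Return (data_type_code, dims) from the start of an IDX stream."""
    # Magic number: two zero bytes, a data-type code, the number of dimensions.
    zero, dtype_code, ndim = struct.unpack(">HBB", fileobj.read(4))
    if zero != 0:
        raise ValueError("not an IDX file")
    # One big-endian unsigned 32-bit integer per dimension.
    dims = struct.unpack(">" + "I" * ndim, fileobj.read(4 * ndim))
    return dtype_code, dims


# Build a tiny fake image file (2 images of 28x28) instead of downloading one.
payload = struct.pack(">HBBIII", 0, 0x08, 3, 2, 28, 28) + bytes(2 * 28 * 28)
fake_gz = gzip.compress(payload)

with gzip.open(io.BytesIO(fake_gz), "rb") as f:
    dtype_code, dims = read_idx_header(f)

print(dtype_code, dims)  # 8 (2, 28, 28)
```

For a real file such as train-images-idx3-ubyte.gz, passing its path to `gzip.open` in place of the `io.BytesIO` object should work the same way.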

4. Feature values

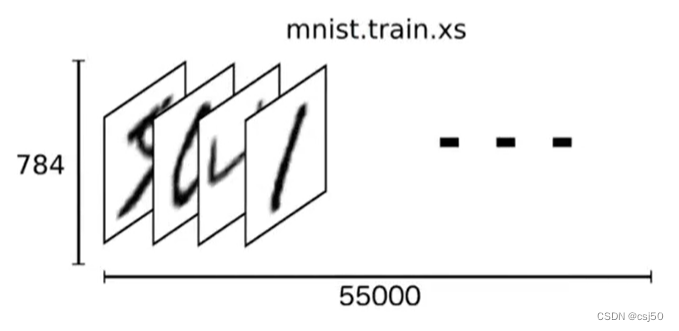

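Each MNIST data unit has two parts: an image of a handwritten digit and the corresponding label. We call the images "xs" and the labels "ys". Both the training set and the test set contain xs and ys.

For example, the training images are mnist.train.images and the training labels are mnist.train.labels. (These names come from the legacy TensorFlow 1.x tutorial reader; the Keras loader used later returns plain arrays instead.)

The images are grayscale, 28 x 28 pixels each. We flatten this array into a vector of length 28 x 28 = 784. In the MNIST training set, the training images (mnist.train.images) therefore form a tensor of shape [60000, 784].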

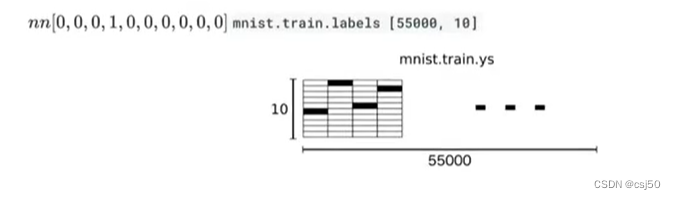
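The flattening step can be sketched with NumPy as follows. The zero-filled array is only a stand-in for real image data, and the variable names are chosen for illustration.

```python
import numpy as np

# Stand-in for a batch of 5 grayscale images, 28x28 pixels each.
images = np.zeros((5, 28, 28), dtype=np.uint8)
images[0, 1, 2] = 255  # mark one pixel so we can find it after flattening

# Keep the sample axis, merge the two pixel axes into one of length 784.
flat = images.reshape(images.shape[0], 28 * 28)

print(flat.shape)           # (5, 784)
print(flat[0, 1 * 28 + 2])  # 255: row 1, column 2 lands at index 30
```

Rows are laid out one after another, so the pixel at (row, column) ends up at index row * 28 + column.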

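5. Target values

Every MNIST image has a corresponding label, a digit from 0 to 9 indicating which digit is drawn in the image. The labels are represented with one-hot encoding: a length-10 vector with a 1 at the position of the digit and 0 everywhere else.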
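As a small illustration of one-hot encoding, the sketch below converts integer labels with NumPy by picking rows of an identity matrix. The sample labels are made up.

```python
import numpy as np

labels = np.array([5, 0, 9])                    # integer class labels
one_hot = np.eye(10, dtype=np.float32)[labels]  # one identity row per label

print(one_hot.shape)           # (3, 10)
print(one_hot[0])              # [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
print(one_hot.argmax(axis=1))  # [5 0 9]
```

Taking `argmax` along the class axis recovers the original integer labels.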

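II. API for loading the MNIST data

1. TensorFlow ships with an interface for fetching this dataset, so there is no need to read the files yourself.

2. Loading the dataset

import tensorflow as tf

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

All four returned arrays are numpy.ndarray objects. The images are not flattened yet (x_train has shape (60000, 28, 28)) and the labels are plain integers from 0 to 9, not one-hot vectors.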

III. Worked example

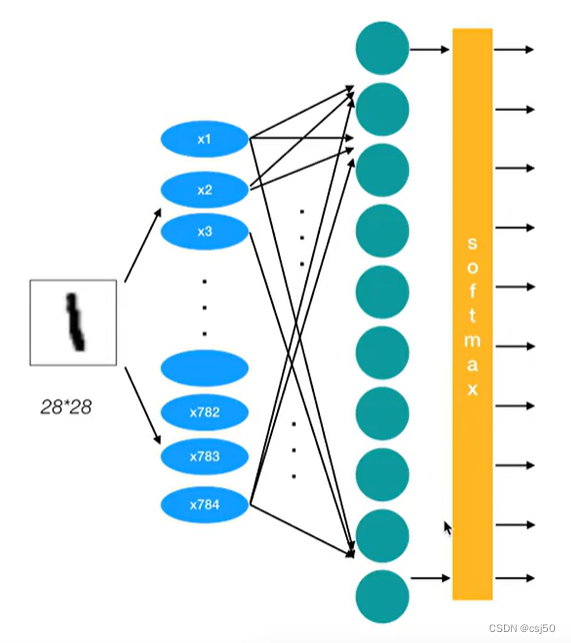

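1. Network design

We use a neural network with just one layer, the final output layer. This is also called a fully connected layer network.

A sample has 784 features. For each of the 10 classes, every feature has its own weight; the weighted sum plus a bias gives the logits. Applying softmax to the logits yields a set of probabilities, and the class with the largest probability is the prediction.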
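The forward pass described here can be sketched in NumPy. The random input and weights are placeholders rather than trained values, and the `softmax` helper is written out by hand for illustration.

```python
import numpy as np


def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
x = rng.random(784)                      # one flattened image (placeholder values)
w = rng.normal(0, 0.01, size=(784, 10))  # one weight per feature per class
b = np.zeros(10)                         # one bias per class

logits = x @ w + b       # weighted sum plus bias
probs = softmax(logits)  # 10 probabilities that sum to 1
predicted = int(probs.argmax())

print(probs.shape, predicted)
```

Because softmax preserves ordering, the predicted class is the same whether `argmax` is taken over the logits or the probabilities.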

2. Fully connected layer computation

What a fully connected layer does is a matrix multiplication:

tf.experimental.numpy.matmul(x1, x2) + bias

Notes:

(1) Return value: the fully connected output (the logits), which is passed to the cross-entropy loss.

tf.keras.optimizers.SGD(learning_rate)

Notes:

(1) Gradient descent

(2) learning_rate: the learning rate
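To make the roles of the cross-entropy loss and the learning rate concrete, here is a hand-written sketch of a single gradient descent step for a softmax layer in NumPy. It is not how `tf.keras.optimizers.SGD` is implemented internally; the random batch, the zero initialisation, and the helper names are assumptions made for illustration.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(probs, targets):
    # Mean negative log-probability of the true class.
    return float(-np.mean(np.sum(targets * np.log(probs + 1e-12), axis=1)))


rng = np.random.default_rng(0)
x = rng.random((32, 784))                     # placeholder batch of 32 samples
y = np.eye(10)[rng.integers(0, 10, size=32)]  # random one-hot targets
w = np.zeros((784, 10))
b = np.zeros(10)
learning_rate = 0.001

probs = softmax(x @ w + b)
loss_before = cross_entropy(probs, y)

# For softmax + cross-entropy, the gradient w.r.t. the logits is (probs - y) / batch.
grad_logits = (probs - y) / len(x)
w -= learning_rate * (x.T @ grad_logits)
b -= learning_rate * grad_logits.sum(axis=0)

loss_after = cross_entropy(softmax(x @ w + b), y)
print(loss_before, loss_after)
```

With all-zero parameters every class starts at probability 0.1, so the initial loss is ln(10); one small step in the negative gradient direction lowers it slightly.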

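3. Shapes

x[None, 784] @ w[784, 10] + bias[10] = y[None, 10]

x has None rows (the batch size, unknown in advance) and 784 columns, and y has None rows and 10 columns. The weight matrix must therefore have 784 rows and 10 columns. The bias is a vector of length 10 that is broadcast across every row, which is equivalent to adding a [None, 10] array whose rows are all identical.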
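The shape arithmetic can be checked quickly with NumPy. In this sketch the bias is stored as a length-10 vector and broadcast over the batch, which is how a Keras Dense layer stores its bias; the constant values are arbitrary and only make the result easy to verify.

```python
import numpy as np

for batch in (1, 32):  # "None" means any batch size works
    x = np.ones((batch, 784))
    w = np.ones((784, 10))
    bias = np.arange(10.0)  # shape (10,), shared by every row
    y = x @ w + bias
    print(x.shape, "@", w.shape, "+", bias.shape, "->", y.shape)
```

Each row of `y` equals 784 plus the bias vector, confirming that the same bias is added to every sample in the batch.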

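IV. Using a fully connected neural network

The original code was hard to port, so here is a version I found that is implemented with the Keras framework ((。・_・。)ノ). Note that it stacks two hidden layers in front of the output layer, rather than the single layer described above.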

import os

# Hide TensorFlow's C++ info and warning logs (must be set before TensorFlow is imported)
os.environ['TF_CPP_MIN_LOG_LEVEL']='2'

from tensorflow.keras.utils import to_categorical
from tensorflow.keras import models, layers, regularizers
from tensorflow.keras.optimizers import RMSprop, SGD
from tensorflow.keras.datasets import mnist
import matplotlib.pyplot as plt

# Load the dataset
(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# Flatten each 2-D image into a 1-D vector of 784 values
train_images = train_images.reshape((60000, 28*28)).astype('float')
test_images = test_images.reshape((10000, 28*28)).astype('float')

# Convert the integer labels to one-hot vectors
train_labels = to_categorical(train_labels)
test_labels = to_categorical(test_labels)

# Build the neural network
network = models.Sequential()
network.add(layers.Dense(units=128, activation='relu', input_shape=(28*28, ),
                         kernel_regularizer=regularizers.l1(0.0001)))
network.add(layers.Dropout(0.01))
network.add(layers.Dense(units=32, activation='relu',
                         kernel_regularizer=regularizers.l1(0.0001)))
network.add(layers.Dropout(0.01))
network.add(layers.Dense(units=10, activation='softmax'))

# Compile step: choose the optimizer, the loss function and the metrics
network.compile(optimizer=SGD(learning_rate=0.001), loss='categorical_crossentropy', metrics=['accuracy'])

# Train with fit: epochs is the number of passes over the training set,
# batch_size is the number of samples used per update
network.fit(train_images, train_labels, epochs=100, batch_size=128, verbose=2)

# Evaluate the model on the test set
test_loss, test_accuracy = network.evaluate(test_images, test_labels)
print("test_loss:", test_loss, " test_accuracy:", test_accuracy)

The optimizer is still SGD and training runs for 100 epochs. Output:

Epoch 1/100

469/469 - 2s - loss: 3.0283 - accuracy: 0.4604

Epoch 2/100

469/469 - 1s - loss: 1.6157 - accuracy: 0.6435

Epoch 3/100

469/469 - 1s - loss: 1.4475 - accuracy: 0.6979

Epoch 4/100

469/469 - 1s - loss: 1.3438 - accuracy: 0.7312

Epoch 5/100

469/469 - 1s - loss: 1.2487 - accuracy: 0.7737

Epoch 6/100

469/469 - 1s - loss: 1.1552 - accuracy: 0.7911

Epoch 7/100

469/469 - 1s - loss: 1.0796 - accuracy: 0.8014

Epoch 8/100

469/469 - 1s - loss: 1.0300 - accuracy: 0.8191

Epoch 9/100

469/469 - 1s - loss: 0.9780 - accuracy: 0.8377

Epoch 10/100

469/469 - 1s - loss: 0.9479 - accuracy: 0.8526

Epoch 11/100

469/469 - 1s - loss: 0.9112 - accuracy: 0.8642

Epoch 12/100

469/469 - 1s - loss: 0.8805 - accuracy: 0.8732

Epoch 13/100

469/469 - 1s - loss: 0.8512 - accuracy: 0.8813

Epoch 14/100

469/469 - 1s - loss: 0.8243 - accuracy: 0.8887

Epoch 15/100

469/469 - 1s - loss: 0.8009 - accuracy: 0.8946

Epoch 16/100

469/469 - 1s - loss: 0.7770 - accuracy: 0.9017

Epoch 17/100

469/469 - 1s - loss: 0.7567 - accuracy: 0.9067

Epoch 18/100

469/469 - 1s - loss: 0.7454 - accuracy: 0.9101

Epoch 19/100

469/469 - 1s - loss: 0.7297 - accuracy: 0.9143

Epoch 20/100

469/469 - 1s - loss: 0.7174 - accuracy: 0.9175

Epoch 21/100

469/469 - 1s - loss: 0.7022 - accuracy: 0.9208

Epoch 22/100

469/469 - 1s - loss: 0.6973 - accuracy: 0.9218

Epoch 23/100

469/469 - 1s - loss: 0.6852 - accuracy: 0.9249

Epoch 24/100

469/469 - 1s - loss: 0.6768 - accuracy: 0.9276

Epoch 25/100

469/469 - 1s - loss: 0.6664 - accuracy: 0.9302

Epoch 26/100

469/469 - 1s - loss: 0.6624 - accuracy: 0.9301

Epoch 27/100

469/469 - 1s - loss: 0.6568 - accuracy: 0.9326

Epoch 28/100

469/469 - 1s - loss: 0.6479 - accuracy: 0.9340

Epoch 29/100

469/469 - 1s - loss: 0.6417 - accuracy: 0.9362

Epoch 30/100

469/469 - 1s - loss: 0.6381 - accuracy: 0.9361

Epoch 31/100

469/469 - 1s - loss: 0.6304 - accuracy: 0.9391

Epoch 32/100

469/469 - 1s - loss: 0.6277 - accuracy: 0.9395

Epoch 33/100

469/469 - 1s - loss: 0.6216 - accuracy: 0.9416

Epoch 34/100

469/469 - 1s - loss: 0.6169 - accuracy: 0.9431

Epoch 35/100

469/469 - 1s - loss: 0.6134 - accuracy: 0.9429

Epoch 36/100

469/469 - 1s - loss: 0.6095 - accuracy: 0.9442

Epoch 37/100

469/469 - 1s - loss: 0.6080 - accuracy: 0.9446

Epoch 38/100

469/469 - 1s - loss: 0.6001 - accuracy: 0.9468

Epoch 39/100

469/469 - 1s - loss: 0.5983 - accuracy: 0.9471

Epoch 40/100

469/469 - 1s - loss: 0.5961 - accuracy: 0.9477

Epoch 41/100

469/469 - 1s - loss: 0.5902 - accuracy: 0.9484

Epoch 42/100

469/469 - 1s - loss: 0.5862 - accuracy: 0.9495

Epoch 43/100

469/469 - 1s - loss: 0.5848 - accuracy: 0.9505

Epoch 44/100

469/469 - 1s - loss: 0.5785 - accuracy: 0.9505

Epoch 45/100

469/469 - 1s - loss: 0.5777 - accuracy: 0.9518

Epoch 46/100

469/469 - 1s - loss: 0.5727 - accuracy: 0.9531

Epoch 47/100

469/469 - 1s - loss: 0.5711 - accuracy: 0.9533

Epoch 48/100

469/469 - 1s - loss: 0.5684 - accuracy: 0.9535

Epoch 49/100

469/469 - 1s - loss: 0.5653 - accuracy: 0.9548

Epoch 50/100

469/469 - 1s - loss: 0.5645 - accuracy: 0.9546

Epoch 51/100

469/469 - 1s - loss: 0.5602 - accuracy: 0.9556

Epoch 52/100

469/469 - 1s - loss: 0.5563 - accuracy: 0.9569

Epoch 53/100

469/469 - 1s - loss: 0.5558 - accuracy: 0.9569

Epoch 54/100

469/469 - 1s - loss: 0.5548 - accuracy: 0.9568

Epoch 55/100

469/469 - 1s - loss: 0.5525 - accuracy: 0.9569

Epoch 56/100

469/469 - 1s - loss: 0.5486 - accuracy: 0.9585

Epoch 57/100

469/469 - 1s - loss: 0.5464 - accuracy: 0.9588

Epoch 58/100

469/469 - 1s - loss: 0.5466 - accuracy: 0.9589

Epoch 59/100

469/469 - 1s - loss: 0.5449 - accuracy: 0.9598

Epoch 60/100

469/469 - 1s - loss: 0.5379 - accuracy: 0.9607

Epoch 61/100

469/469 - 1s - loss: 0.5393 - accuracy: 0.9606

Epoch 62/100

469/469 - 1s - loss: 0.5360 - accuracy: 0.9614

Epoch 63/100

469/469 - 1s - loss: 0.5346 - accuracy: 0.9613

Epoch 64/100

469/469 - 1s - loss: 0.5316 - accuracy: 0.9623

Epoch 65/100

469/469 - 1s - loss: 0.5311 - accuracy: 0.9626

Epoch 66/100

469/469 - 1s - loss: 0.5305 - accuracy: 0.9623

Epoch 67/100

469/469 - 1s - loss: 0.5260 - accuracy: 0.9629

Epoch 68/100

469/469 - 1s - loss: 0.5231 - accuracy: 0.9642

Epoch 69/100

469/469 - 1s - loss: 0.5241 - accuracy: 0.9637

Epoch 70/100

469/469 - 1s - loss: 0.5203 - accuracy: 0.9653

Epoch 71/100

469/469 - 1s - loss: 0.5182 - accuracy: 0.9653

Epoch 72/100

469/469 - 1s - loss: 0.5173 - accuracy: 0.9650

Epoch 73/100

469/469 - 1s - loss: 0.5148 - accuracy: 0.9663

Epoch 74/100

469/469 - 1s - loss: 0.5122 - accuracy: 0.9671

Epoch 75/100

469/469 - 1s - loss: 0.5124 - accuracy: 0.9660

Epoch 76/100

469/469 - 1s - loss: 0.5113 - accuracy: 0.9667

Epoch 77/100

469/469 - 1s - loss: 0.5129 - accuracy: 0.9665

Epoch 78/100

469/469 - 1s - loss: 0.5080 - accuracy: 0.9677

Epoch 79/100

469/469 - 1s - loss: 0.5068 - accuracy: 0.9679

Epoch 80/100

469/469 - 1s - loss: 0.5060 - accuracy: 0.9685

Epoch 81/100

469/469 - 1s - loss: 0.5025 - accuracy: 0.9692

Epoch 82/100

469/469 - 1s - loss: 0.5015 - accuracy: 0.9691

Epoch 83/100

469/469 - 1s - loss: 0.5017 - accuracy: 0.9689

Epoch 84/100

469/469 - 1s - loss: 0.5011 - accuracy: 0.9690

Epoch 85/100

469/469 - 1s - loss: 0.4996 - accuracy: 0.9687

Epoch 86/100

469/469 - 1s - loss: 0.4965 - accuracy: 0.9696

Epoch 87/100

469/469 - 1s - loss: 0.4971 - accuracy: 0.9694

Epoch 88/100

469/469 - 1s - loss: 0.4927 - accuracy: 0.9701

Epoch 89/100

469/469 - 1s - loss: 0.4923 - accuracy: 0.9704

Epoch 90/100

469/469 - 1s - loss: 0.4917 - accuracy: 0.9707

Epoch 91/100

469/469 - 1s - loss: 0.4929 - accuracy: 0.9709

Epoch 92/100

469/469 - 1s - loss: 0.4880 - accuracy: 0.9717

Epoch 93/100

469/469 - 1s - loss: 0.4865 - accuracy: 0.9717

Epoch 94/100

469/469 - 1s - loss: 0.4850 - accuracy: 0.9719

Epoch 95/100

469/469 - 1s - loss: 0.4871 - accuracy: 0.9712

Epoch 96/100

469/469 - 1s - loss: 0.4851 - accuracy: 0.9721

Epoch 97/100

469/469 - 1s - loss: 0.4830 - accuracy: 0.9721

Epoch 98/100

469/469 - 1s - loss: 0.4811 - accuracy: 0.9731

Epoch 99/100

469/469 - 1s - loss: 0.4810 - accuracy: 0.9728

Epoch 100/100

469/469 - 1s - loss: 0.4789 - accuracy: 0.9732

313/313 [==============================] - 1s 1ms/step - loss: 0.6132 - accuracy: 0.9521

test_loss: 0.613166332244873 test_accuracy: 0.9520999789237976

References:

https://cloud.tencent.com/developer/article/2055005
