I'm moving some of the older WebForms portions of my site that still run on bare metal to ASP.NET Core and Azure App Services, and while doing that I realized that I want to make sure my staging sites don't get indexed by Google/Bing.

I already have a robots.txt, but I want one that's specific to production and others that are specific to development or staging. I thought about a number of ways to solve this. I could have a static robots.txt and another robots-staging.txt and conditionally copy one over the other during my Azure DevOps CI/CD pipeline.

Then I realized the simplest possible thing would be to just make robots.txt dynamic. I thought about writing custom middleware, but that sounded like a hassle and more code than needed. I wanted to see just how simple this could be.

- You could do this as a single inline middleware, and just lambda and func and linq the heck out of it all on one line.

- You could write your own middleware with lots of options, then activate it based on env.IsStaging(), etc.

- You could make a single Razor Page with environment tag helpers.
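
For comparison, here is a rough sketch of what the first option (an inline middleware) might look like. The `UseInlineRobotsTxt` extension method and its `isProduction` parameter are hypothetical names made up for illustration; only `Map`, `Run`, and `WriteAsync` are existing ASP.NET Core calls. Treat it as a sketch under those assumptions rather than a finished implementation.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;

public static class InlineRobotsTxtSketch
{
    // Hypothetical extension method: the name and the bool parameter are
    // illustrative and not part of ASP.NET Core or the original post.
    public static IApplicationBuilder UseInlineRobotsTxt(
        this IApplicationBuilder app, bool isProduction)
    {
        // Production hides a few paths; every other environment blocks everything.
        var body = isProduction
            ? "User-agent: *\nDisallow: /blog/private\nDisallow: /blog/secret\nDisallow: /blog/somethingelse\n"
            : "User-agent: *\nDisallow: /\n";

        // Map branches the pipeline for requests whose path starts with /robots.txt.
        return app.Map("/robots.txt", branch => branch.Run(async context =>
        {
            context.Response.ContentType = "text/plain";
            await context.Response.WriteAsync(body);
        }));
    }
}
```

It would be wired up in `Configure` with something like `app.UseInlineRobotsTxt(env.IsProduction());`, placed before MVC is added to the pipeline. It works, but the rules now live in compiled code instead of an editable file.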

The last one seemed easiest and would also mean I could change the cshtml without a full recompile, so I made a single Razor Page called RobotsTxt.cshtml. No page model, no code-behind. Then I used the built-in environment tag helper to conditionally generate parts of the file. Note also that I forced the MIME type to text/plain and I don't use a Layout page, as this needs to stand alone.

    @page
    @{
        Layout = null;
        this.Response.ContentType = "text/plain";
    }
    # /robots.txt file for http://www.hanselman.com/
    User-agent: *
    <environment include="Development,Staging">Disallow: /</environment>
    <environment include="Production">Disallow: /blog/private
    Disallow: /blog/secret
    Disallow: /blog/somethingelse</environment>

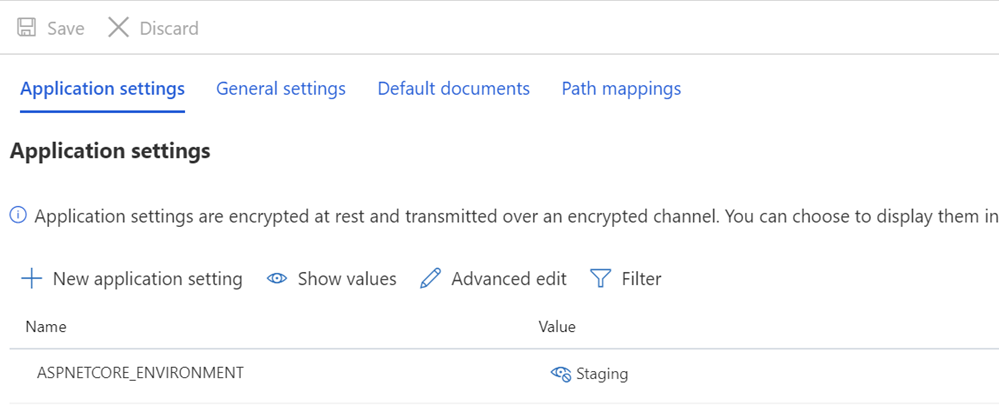

I then make sure that my Staging and/or Production systems have ASPNETCORE_ENVIRONMENT variables set appropriately.

I also want to point out what may look like odd spacing and how some text is butted up against the TagHelpers. Remember that a TagHelper's tag sometimes "disappears" (is elided) when it's done its thing, but the whitespace around it remains. So I want User-agent: * to have a line, and then Disallow to show up immediately on the next line. While it might be prettier source code to have that start on another line, it's not a correct file then. I want the result to be tight and above all, correct. This is for staging:

    User-agent: *
    Disallow: /

This now gives me a robots.txt at /robotstxt but not at /robots.txt. See the issue? Robots.txt is a file (or a fake one) so I need to map a route from the request for /robots.txt to the Razor page called RobotsTxt.cshtml.

Here I add a RazorPagesOptions in my Startup.cs with a custom PageRoute that maps /robots.txt to /robotstxt. (I've always found this API annoying as the parameters should, IMHO, be reversed like ("from","to"), so watch out for that, lest you waste ten minutes like I just did.)

    public void ConfigureServices(IServiceCollection services)
    {
        services.AddMvc()
            .AddRazorPagesOptions(options =>
            {
                // Page name first, then the URL route that should map to it.
                options.Conventions.AddPageRoute("/robotstxt", "/Robots.Txt");
            });
    }

And that's it! Simple and clean.

You could also add caching if you wanted, either as a larger middleware, or even in the cshtml Page, like

    context.Response.Headers.Add("Cache-Control", $"max-age=SOMELARGENUMBEROFSECONDS");

but I'll leave that small optimization as an exercise to the reader.
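
If you do want to try it, one way to set that header is through the typed headers API, which saves hand-formatting the string. This is only a sketch: the `RobotsTxtCachingSketch` class and `AllowCaching` method are hypothetical names, and the one-day lifetime in the usage note is an arbitrary assumption, not a recommendation from this post.

```csharp
using System;
using Microsoft.AspNetCore.Http;
using Microsoft.Net.Http.Headers;

public static class RobotsTxtCachingSketch
{
    // Hypothetical helper (not part of ASP.NET Core): marks the response
    // as publicly cacheable for the given duration.
    public static void AllowCaching(HttpResponse response, TimeSpan maxAge)
    {
        // Serialized as "Cache-Control: public, max-age=<seconds>".
        response.GetTypedHeaders().CacheControl = new CacheControlHeaderValue
        {
            Public = true,
            MaxAge = maxAge
        };
    }
}
```

From the `@{ ... }` block of RobotsTxt.cshtml this could be called as `RobotsTxtCachingSketch.AllowCaching(Response, TimeSpan.FromDays(1));`. Keep the lifetime short on staging if you expect to change the rules often.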

UPDATE: After I was done I found this robots.txt middleware and NuGet package up on GitHub. I'm still happy with my code and I don't mind not having an external dependency, but it's nice to file this one away for more sophisticated future needs and projects.

How do you handle your robots.txt needs? Do you even have one?
