motivation:这篇文章是在模型训练阶段添加满足DP的噪声从而达到隐私保护的目的,在之前读的论文中,不同的数据集大小,优化器,激活函数的不同都会影响整个模型的性能。看的比较多的就是在裁剪阈值C上进行优化,过大过小都不利于模型训练,所以需要找一个合适的阈值C。

在联邦学习(FL)设置中,使用用户级差分隐私(例如DP联邦平均)训练神经网络的现有方法涉及到通过将每个用户的模型更新裁剪为某个常数值来限制其贡献。

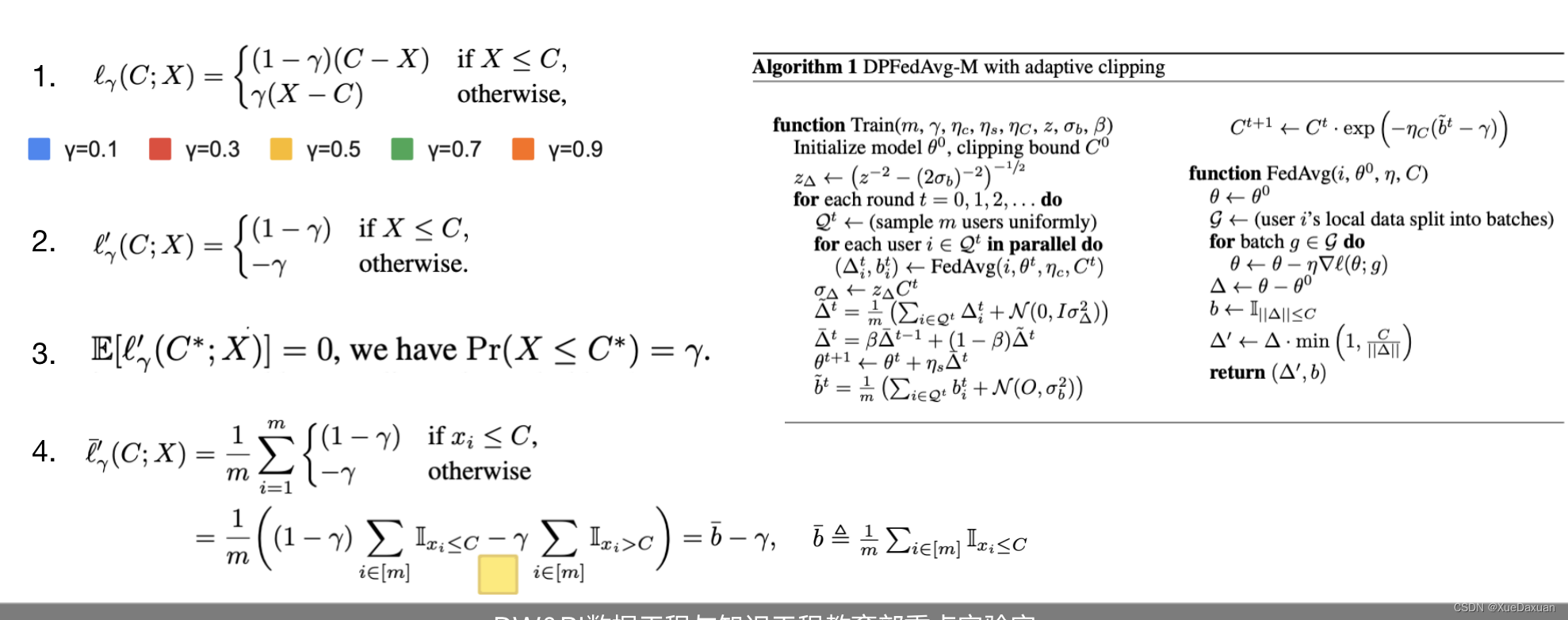

method:基于这样的前提,文章提出了一种分位数的思想,用分位数去找一个合适的裁剪临界值。左边第一个公式中的参数伽马就是分位数,通过令导数的期望为0可以找到一个与分位数相关的的C*(是X的γ分位数)。

公式4是假设在某轮中有m个X的样本值(x1,…xm)。这一轮损失的平均导数是公式4,其中参数b代表最大值为C时样本的平均概率分数;根据梯度下降对裁剪阈值C进行迭代更新。

公式4是假设在某轮中有m个X的样本值(x1,…xm)。这一轮损失的平均导数是公式4,其中参数b代表最大值为C时样本的平均概率分数;根据梯度下降对裁剪阈值C进行迭代更新。

右边是文章算法的一个流程,对于sample出来的每一个用户进行客户端训练,通过梯度下降和梯度裁剪得到返回值模型参数delta和参数b;因为模型参数和参数b是文章保护对象,所以添加噪声进行保护,通过贝塔参数对模型参数进行更新,最后得到塞塔;并且对参数b进行加噪,利用分位数和b来控制裁剪阈值的大小。

该方法密切跟踪分位数,使用的隐私预算可以忽略不计,与其他联邦学习技术(如压缩和安全聚合)兼容,并与DP- fedavg有一个直接的联合DP分析。实验表明,中值更新规范的自适应剪切在一系列现实的联邦学习任务中都能很好地工作,有时甚至优于事后选择的最佳固定剪切,而且不需要调整任何剪切超参数。

729

729

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?