```python
        for i in cards:
            if "title" not in i:
                # Cards without a title list their users under card_group[1]["users"]
                for j in i["card_group"][1]["users"]:
                    user_name = j["screen_name"]  # user name
                    user_id = j["id"]  # user id
                    fans_count = j["followers_count"]  # follower count
                    sex, add = get_user_info(user_id)
                    # Record fields: user name, gender, location, follower count
                    info = {
                        "用户名": user_name,
                        "性别": sex,
                        "所在地": add,
                        "粉丝数": fans_count,
                    }
                    info_list.append(info)
            else:
                # Titled cards wrap each user under the "user" key
                for j in i["card_group"]:
                    user_name = j["user"]["screen_name"]  # user name
                    user_id = j["user"]["id"]  # user id
                    fans_count = j["user"]["followers_count"]  # follower count
                    sex, add = get_user_info(user_id)
                    info = {
                        "用户名": user_name,
                        "性别": sex,
                        "所在地": add,
                        "粉丝数": fans_count,
                    }
                    info_list.append(info)
        if "followers" in url:
            print("Finished crawling page 1 of the following list...")
        else:
            print("Finished crawling page 1 of the fan list...")
        save_info(info_list)
    except Exception as e:
        print(e)
```
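The two branches above build the same four-field record and differ only in where the user object sits: directly in `card_group[1]["users"]` for cards without a `title`, or under each entry's `"user"` key otherwise. As a sketch (not the author's code), that duplication could be folded into one helper. `build_info`, `iter_users` and `parse_cards` are hypothetical names, the sample cards are made up, and the `lookup` callable stands in for `get_user_info` so the example runs without network access.

```python
from typing import Callable, Dict, Iterator, List, Tuple

Lookup = Callable[[int], Tuple[str, str]]  # uid -> (gender, location)


def build_info(user: Dict, lookup: Lookup) -> Dict:
    """Build one record with the same four fields the crawler stores."""
    sex, add = lookup(user["id"])
    return {
        "用户名": user["screen_name"],  # user name
        "性别": sex,  # gender
        "所在地": add,  # location
        "粉丝数": user["followers_count"],  # follower count
    }


def iter_users(card: Dict) -> Iterator[Dict]:
    """Yield bare user objects from either card layout."""
    if "title" not in card:
        # Untitled card: users listed under card_group[1]["users"]
        yield from card["card_group"][1]["users"]
    else:
        # Titled card: each card_group entry wraps its user under "user"
        for entry in card["card_group"]:
            yield entry["user"]


def parse_cards(cards: List[Dict], lookup: Lookup) -> List[Dict]:
    return [build_info(user, lookup) for card in cards for user in iter_users(card)]


# Made-up sample in both shapes; the lambda replaces the real profile lookup
sample_cards = [
    {"card_group": [{}, {"users": [{"id": 1, "screen_name": "alice", "followers_count": 10}]}]},
    {"title": "demo", "card_group": [{"user": {"id": 2, "screen_name": "bob", "followers_count": 20}}]},
]
records = parse_cards(sample_cards, lambda uid: ("f", "Shanghai"))
print(records)
```

Passing the lookup in as a parameter also makes the parsing testable offline, since only `build_info` ever triggers a profile request.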

The code that crawls and parses the remaining pages is as follows:

```python
def get_and_parse2(url, data):
    # The query string in `url` already carries containerid and page/since_id;
    # `data` is only used below to choose the progress message.
    res = requests.get(url, headers=get_random_ua())
    sleep(3)  # pause between requests
    info_list = []
    try:
        payload = res.json()["data"]
        # The card list appears under one of two layouts
        if "cards" in payload:
            card_group = payload["cards"][0]["card_group"]
        else:
            card_group = payload["cardlistInfo"]["cards"][0]["card_group"]
        for card in card_group:
            user_name = card["user"]["screen_name"]  # user name
            user_id = card["user"]["id"]  # user id
            fans_count = card["user"]["followers_count"]  # follower count
            sex, add = get_user_info(user_id)
            # Record fields: user name, gender, location, follower count
            info = {
                "用户名": user_name,
                "性别": sex,
                "所在地": add,
                "粉丝数": fans_count,
            }
            info_list.append(info)
        if "page" in data:
            print("Finished crawling page {} of the following list...".format(data["page"]))
        else:
            print("Finished crawling page {} of the fan list...".format(data["since_id"]))
        save_info(info_list)
    except Exception as e:
        print(e)
```
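`get_and_parse2` looks for the card list first under `data["cards"]` and then under `data["cardlistInfo"]["cards"]`, and an empty or unexpected response ends in an exception that is only printed. A defensive variant of that lookup is sketched below; `extract_card_group` is a hypothetical helper, the payloads are made up to mirror the two layouts the code handles, and returning an empty list (rather than raising) when neither layout is present is a design choice, not the author's behavior.

```python
from typing import Any, Dict, List


def extract_card_group(payload: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the card_group list from either response layout, or [] if absent.

    Checks data["cards"] first, then data["cardlistInfo"]["cards"],
    in the same order as get_and_parse2.
    """
    data = payload.get("data") or {}
    cards = data.get("cards")
    if cards is None:
        cards = (data.get("cardlistInfo") or {}).get("cards")
    if not cards:
        return []  # empty page or unknown layout: nothing to parse
    return cards[0].get("card_group", [])


# Made-up payloads shaped like the two layouts
layout1 = {"data": {"cards": [{"card_group": [{"user": {"id": 1}}]}]}}
layout2 = {"data": {"cardlistInfo": {"cards": [{"card_group": [{"user": {"id": 2}}]}]}}}
print(extract_card_group(layout1), extract_card_group(layout2), extract_card_group({"data": {}}))
```

With this shape, an empty page simply yields no records, so the caller can distinguish "nothing returned" from a genuine parsing bug.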

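At run time you may run into all kinds of errors: sometimes the response is empty, sometimes parsing fails. Even so, most of the data can be crawled successfully. Here are the three images generated at the end.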
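Because empty responses and parse failures are intermittent, one common mitigation is to retry with a growing delay. The sketch below is not part of the original scripts: `fetch_with_retry` is a hypothetical helper, and `fetch` is any zero-argument callable, for example `lambda: requests.get(url, headers=get_random_ua()).json()["data"]`. An exception and an empty result both count as a failed attempt, and the wait doubles after each failure (exponential backoff).

```python
import time
from typing import Any, Callable


def fetch_with_retry(fetch: Callable[[], Any], retries: int = 3, delay: float = 3.0) -> Any:
    """Call fetch() until it returns a truthy result or the attempts run out.

    Waits delay, 2*delay, 4*delay, ... between attempts.
    """
    last_error = None
    for attempt in range(retries):
        try:
            result = fetch()
            if result:
                return result
        except Exception as e:  # e.g. network errors, bad JSON, missing keys
            last_error = e
        if attempt < retries - 1:
            time.sleep(delay * 2 ** attempt)
    raise RuntimeError("all {} attempts failed".format(retries)) from last_error


# Simulated flaky source: fails twice, then returns data
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ValueError("empty response")
    return {"data": {"cards": []}}


result = fetch_with_retry(flaky, retries=3, delay=0)
print(result, calls["n"])
```

Raising after the last attempt (instead of returning `None`) keeps a persistently failing page visible rather than silently producing an empty batch.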

login.py

```python
import base64
import binascii
import json
import time

import requests
import rsa  # third-party package: pip install rsa


class WeiBoLogin:
    def __init__(self, username, password):
        self.username = username
        self.password = password
        self.session = requests.Session()
        self.cookie_file = "Cookie.json"
        self.nonce, self.pubkey, self.rsakv = "", "", ""
        self.headers = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.36"}

    def save_cookie(self, cookie):
        """
        Save the cookie to a JSON file
        :param cookie: the cookie jar
        :return:
        """
        with open(self.cookie_file, "w") as f:
            json.dump(requests.utils.dict_from_cookiejar(cookie), f)

    def load_cookie(self):
        """
        Load the cookie from the JSON file
        :return: Cookie
        """
        with open(self.cookie_file, "r") as f:
            cookie = requests.utils.cookiejar_from_dict(json.load(f))
        return cookie

    def pre_login(self):
        """
        Pre-login: fetch the values of the nonce, pubkey and rsakv fields
        :return:
        """
        url = "https://login.sina.com.cn/sso/prelogin.php?entry=weibo&su=&rsakt=mod&client=ssologin.js(v1.4.19)&_={}".format(int(time.time() * 1000))
        res = requests.get(url)
        # The response is JSONP: strip the callback wrapper to get plain JSON
        js = json.loads(res.text.replace("sinaSSOController.preloginCallBack(", "").rstrip(")"))
        self.nonce, self.pubkey, self.rsakv = js["nonce"], js["pubkey"], js["rsakv"]

    def sso_login(self, sp, su):
        """
        Send the encrypted username and password
        :param sp: the RSA-encrypted password
        :param su: the Base64-encoded username
        :return:
        """
        data = {
            "encoding": "UTF-8",
            "entry": "weibo",
            "from": "",
            "gateway": "1",
            "nonce": self.nonce,
            "pagerefer": "https://login.sina.com.cn/crossdomain2.php?action=logout&r=https%3A%2F%2Fweibo.com%2Flogout.php%3Fbackurl%3D%252F",
            "prelt": "22",
            "pwencode": "rsa2",
            "qrcode_flag": "false",
            "returntype": "META",
            "rsakv": self.rsakv,
            "savestate": "7",
            "servertime": int(time.time()),
            "service": "miniblog",
            "sp": sp,
            "sr": "1920*1080",
            "su": su,
            "url": "https://weibo.com/ajaxlogin.php?framelogin=1&callback=parent.sinaSSOController.feedBackUrlCallBack",
            "useticket": "1",
            "vsnf": "1"}
        url = "https://login.sina.com.cn/sso/login.php?client=ssologin.js(v1.4.19)&_={}".format(int(time.time() * 1000))
        self.session.post(url, headers=self.headers, data=data)

    def login(self):
        """
        Main simulated-login routine
        :return:
        """
        # Base64-encode the username
        def encode_username(usr):
            # b64encode appends no newline, so the whole result is used
            return base64.b64encode(usr.encode("utf-8")).decode("ascii")

        # RSA-encrypt the password
        def encode_password(code_str):
            pub_key = rsa.PublicKey(int(self.pubkey, 16), 65537)
            crypto = rsa.encrypt(code_str.encode("utf8"), pub_key)
            return binascii.b2a_hex(crypto)  # convert to hexadecimal

        # Fetch nonce, pubkey and rsakv
        self.pre_login()
        # Encode the username
        su = encode_username(self.username)
        # Encrypt the password; the plaintext is "servertime\tnonce\npassword"
        text = str(int(time.time())) + "\t" + str(self.nonce) + "\n" + str(self.password)
        sp = encode_password(text)
        # Send the parameters and save the cookie
        self.sso_login(sp, su)
        self.save_cookie(self.session.cookies)
        self.session.close()

    def cookie_test(self):
        """
        Check whether the cookie is valid; replace url with the URL of your own profile page
        :return:
        """
        session = requests.Session()
        session.cookies = self.load_cookie()
        url = ""  # your profile page URL
        res = session.get(url, headers=self.headers)
        print(res.text)


if __name__ == "__main__":
    user_name = ""
    pass_word = ""
    wb = WeiBoLogin(user_name, pass_word)
    wb.login()
    wb.cookie_test()
```
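The pre-login step of `login.py` unwraps a JSONP response, and the login step sends a Base64-encoded username plus an RSA-encrypted string built as `servertime`, tab, `nonce`, newline, password. The sketch below isolates those string-handling steps so they can be checked offline; RSA encryption itself is left out. `parse_prelogin`, `encode_su` and `build_sp_plaintext` are hypothetical names, and the sample response is made up (its `pubkey` is a placeholder, not a real key). The regex variant is an assumption-level alternative to `replace` + `rstrip`: it also tolerates trailing whitespace or a semicolon after the callback, should the wrapper vary.

```python
import base64
import json
import re


def parse_prelogin(text: str) -> dict:
    """Extract the JSON object from a JSONP body like callback({...})."""
    match = re.search(r"\((\{.*\})\)\s*;?\s*$", text, re.S)
    if match is None:
        raise ValueError("unexpected prelogin response")
    return json.loads(match.group(1))


def encode_su(username: str) -> str:
    """Base64-encode the username; b64encode adds no newline to strip."""
    return base64.b64encode(username.encode("utf-8")).decode("ascii")


def build_sp_plaintext(servertime: int, nonce: str, password: str) -> str:
    """Assemble the string that gets RSA-encrypted: servertime\\tnonce\\npassword."""
    return "{}\t{}\n{}".format(servertime, nonce, password)


# Made-up response in the same wrapper format the script strips
sample = ('sinaSSOController.preloginCallBack({"servertime":1600000000,'
          '"nonce":"ABCDEF","pubkey":"EB2A","rsakv":"1330428213"})')
js = parse_prelogin(sample)
print(js["nonce"], encode_su("user"), repr(build_sp_plaintext(js["servertime"], js["nonce"], "pw")))
```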

test.py

```python
import random
from multiprocessing import Pool
from time import sleep

import matplotlib.pyplot as plt
import pymongo
import requests


# Return a random User-Agent header
def get_random_ua():
    # Every entry needs a trailing comma; otherwise adjacent strings are silently concatenated
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    ]
    return {"User-Agent": random.choice(user_agent_list)}


# Return the number of accounts followed and the number of followers
def get():
    res = requests.get("https://m.weibo.cn/profile/info?uid=5720474518")
    user = res.json()["data"]["user"]
    return user["follow_count"], user["followers_count"]


# Fetch a page and parse it (first page)
def get_and_parse1(url):
    res = requests.get(url)
    cards = res.json()["data"]["cards"]
    info_list = []
    try:
        for i in cards:
            if "title" not in i:
                # Cards without a title list their users under card_group[1]["users"]
                for j in i["card_group"][1]["users"]:
                    user_name = j["screen_name"]  # user name
                    user_id = j["id"]  # user id
                    fans_count = j["followers_count"]  # follower count
                    sex, add = get_user_info(user_id)
                    # Record fields: user name, gender, location, follower count
                    info = {
                        "用户名": user_name,
                        "性别": sex,
                        "所在地": add,
                        "粉丝数": fans_count,
                    }
                    info_list.append(info)
            else:
                # Titled cards wrap each user under the "user" key
                for j in i["card_group"]:
                    user_name = j["user"]["screen_name"]  # user name
                    user_id = j["user"]["id"]  # user id
                    fans_count = j["user"]["followers_count"]  # follower count
                    sex, add = get_user_info(user_id)
                    info = {
                        "用户名": user_name,
                        "性别": sex,
                        "所在地": add,
                        "粉丝数": fans_count,
                    }
                    info_list.append(info)
        if "followers" in url:
            print("Finished crawling page 1 of the following list...")
        else:
            print("Finished crawling page 1 of the fan list...")
        save_info(info_list)
    except Exception as e:
        print(e)


# Crawl the first page of the following list and the fan list
def get_first_page():
    url1 = "https://m.weibo.cn/api/container/getIndex?containerid=231051_-followers-_5720474518"  # following
    url2 = "https://m.weibo.cn/api/container/getIndex?containerid=231051_-fans-_5720474518"  # fans
    get_and_parse1(url1)
    get_and_parse1(url2)


# Fetch a page and parse it (remaining pages)
def get_and_parse2(url, data):
    # The query string in `url` already carries containerid and page/since_id;
    # `data` is only used below to choose the progress message.
    res = requests.get(url, headers=get_random_ua())
    sleep(3)  # pause between requests
    info_list = []
    try:
        payload = res.json()["data"]
        # The card list appears under one of two layouts
        if "cards" in payload:
            card_group = payload["cards"][0]["card_group"]
        else:
            card_group = payload["cardlistInfo"]["cards"][0]["card_group"]
        for card in card_group:
            user_name = card["user"]["screen_name"]  # user name
            user_id = card["user"]["id"]  # user id
            fans_count = card["user"]["followers_count"]  # follower count
            sex, add = get_user_info(user_id)
            info = {
                "用户名": user_name,
                "性别": sex,
                "所在地": add,
                "粉丝数": fans_count,
            }
            info_list.append(info)
        if "page" in data:
            print("Finished crawling page {} of the following list...".format(data["page"]))
        else:
            print("Finished crawling page {} of the fan list...".format(data["since_id"]))
        save_info(info_list)
    except Exception as e:
        print(e)


# Crawl the users being followed
def get_follow(num):
    url1 = "https://m.weibo.cn/api/container/getIndex?containerid=231051_-followers-_5720474518&page={}".format(num)
    data1 = {
        "containerid": "231051_-followers-_5720474518",
        "page": num
    }
    get_and_parse2(url1, data1)


# Crawl the fans
def get_followers(num):
    url2 = "https://m.weibo.cn/api/container/getIndex?containerid=231051_-fans-_5720474518&since_id={}".format(num)
    data2 = {
        "containerid": "231051_-fans-_5720474518",
        "since_id": num
    }
    get_and_parse2(url2, data2)


# Crawl a user's basic profile (gender and location)
def get_user_info(uid):
    uid_str = "230283" + str(uid)
    url2 = "https://m.weibo.cn/api/container/getIndex?containerid={}_-_INFO&title=%E5%9F%BA%E6%9C%AC%E8%B5%84%E6%96%99&luicode=10000011&lfid={}&featurecode=10000326".format(
        uid_str, uid_str)
    data2 = {
        "containerid": "{}_-_INFO".format(uid_str),
        "title": "基本资料",  # "Basic profile"
        "luicode": 10000011,
        "lfid": int(uid_str),
        "featurecode": 10000326
    }
```
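`get_user_info` hard-codes the percent-encoded `title` (`%E5%9F%BA%E6%9C%AC%E8%B5%84%E6%96%99` is the UTF-8 encoding of `基本资料`), and the page URLs are assembled with `format`. As a sketch, the same URLs can be built from parameter dicts with the standard library's `urllib.parse.urlencode`, which performs the UTF-8 percent-encoding and leaves `_` and `-` untouched. `follow_url`, `fans_url` and `user_info_url` are hypothetical helpers; the parameter names and the `230283` prefix are taken from the listing above. Passing the same dicts as `params=` to `requests.get` should produce an equivalent query string.

```python
from urllib.parse import urlencode

BASE = "https://m.weibo.cn/api/container/getIndex"


def follow_url(uid: int, page: int) -> str:
    """One page of the accounts a user follows (same params as get_follow)."""
    return BASE + "?" + urlencode({"containerid": "231051_-followers-_{}".format(uid), "page": page})


def fans_url(uid: int, since_id: int) -> str:
    """One page of a user's fans (same params as get_followers)."""
    return BASE + "?" + urlencode({"containerid": "231051_-fans-_{}".format(uid), "since_id": since_id})


def user_info_url(uid: int) -> str:
    """A user's basic-profile page (same params as get_user_info)."""
    uid_str = "230283" + str(uid)
    return BASE + "?" + urlencode({
        "containerid": "{}_-_INFO".format(uid_str),
        "title": "基本资料",  # percent-encoded as UTF-8 by urlencode
        "luicode": 10000011,
        "lfid": uid_str,
        "featurecode": 10000326,
    })


print(follow_url(5720474518, 2))
print(user_info_url(5720474518))
```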

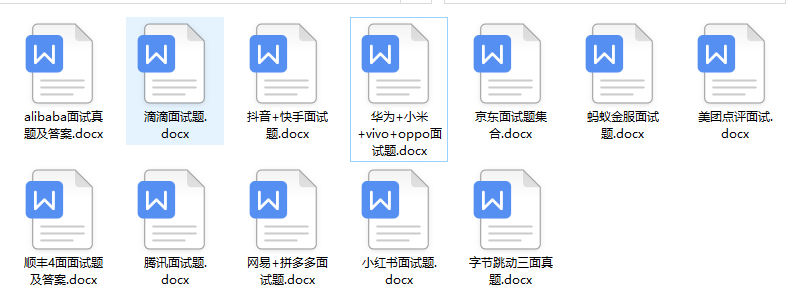

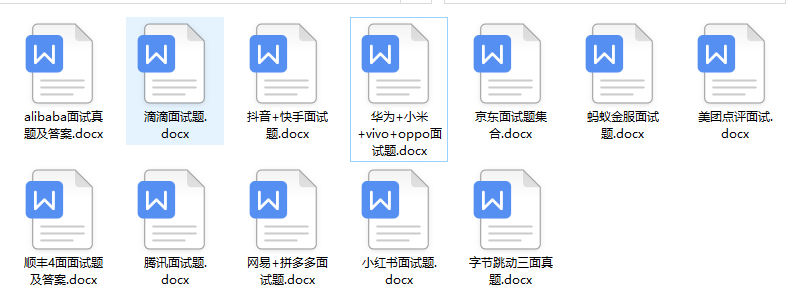

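A quick self-introduction: I graduated from Shanghai Jiao Tong University in 2013, worked at a small company and at large companies such as Huawei and OPPO, and joined Alibaba in 2018, where I have been ever since.

I know that most Python engineers who want to improve their skills either figure things out on their own or sign up for courses, and training courses costing several thousand yuan are a real financial burden. Unstructured self-study is slow and inefficient, and it is easy to hit a ceiling and stall!

So I collected and organized a set of "Complete 2024 Python Development Learning Materials". The goal is simple: to help friends who want to improve through self-study but don't know where to start, and to lighten everyone's load.

It includes zero-foundation material for beginners as well as advanced courses for those with more than three years of experience, covering over 95% of front-end development knowledge points in a truly systematic way!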

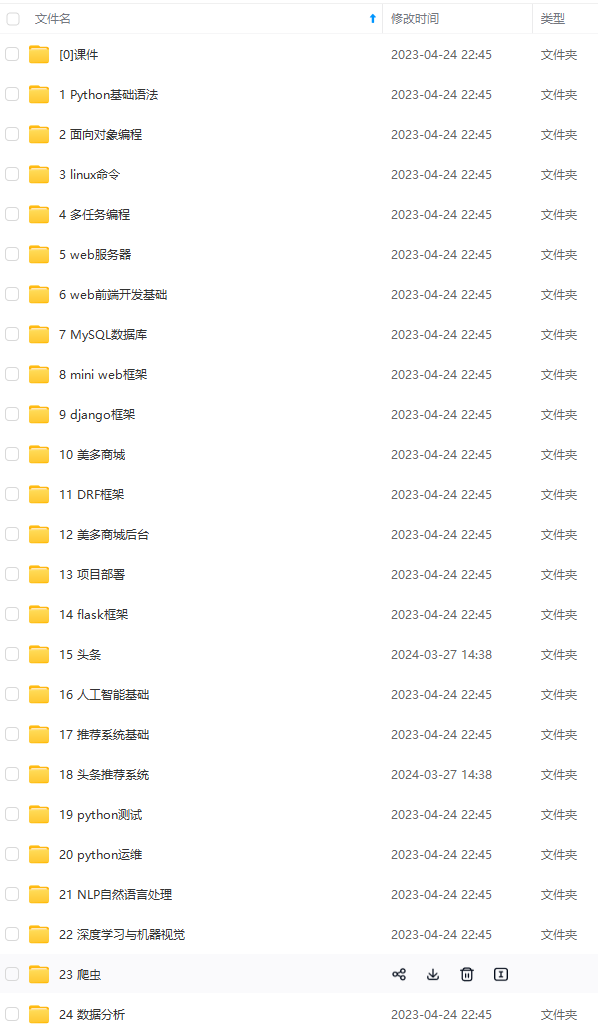

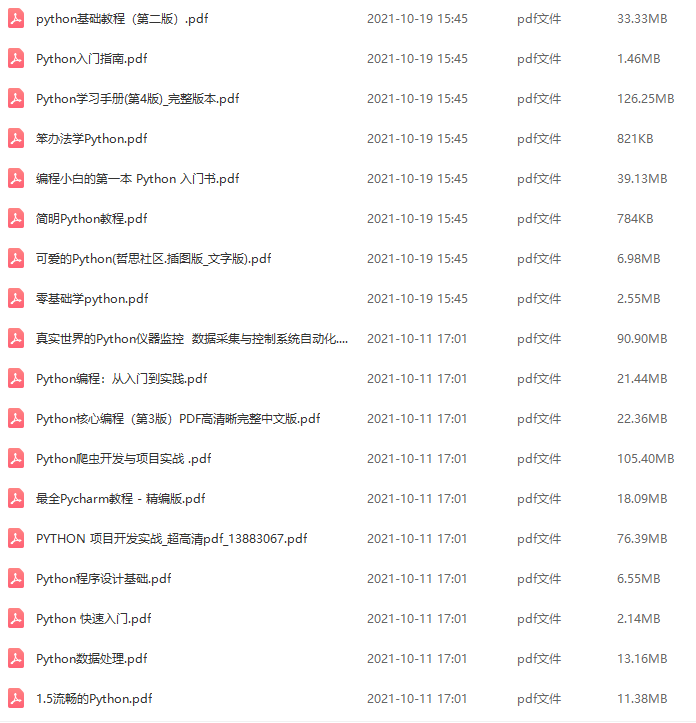

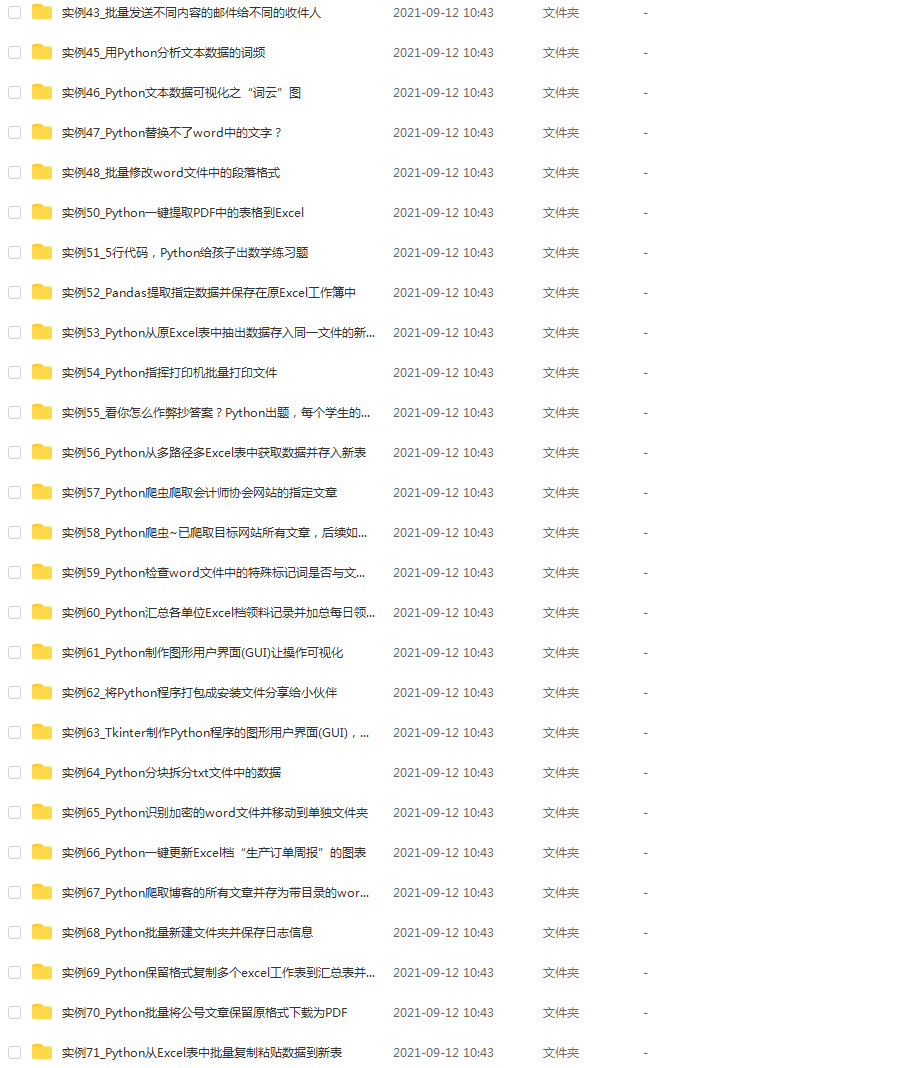

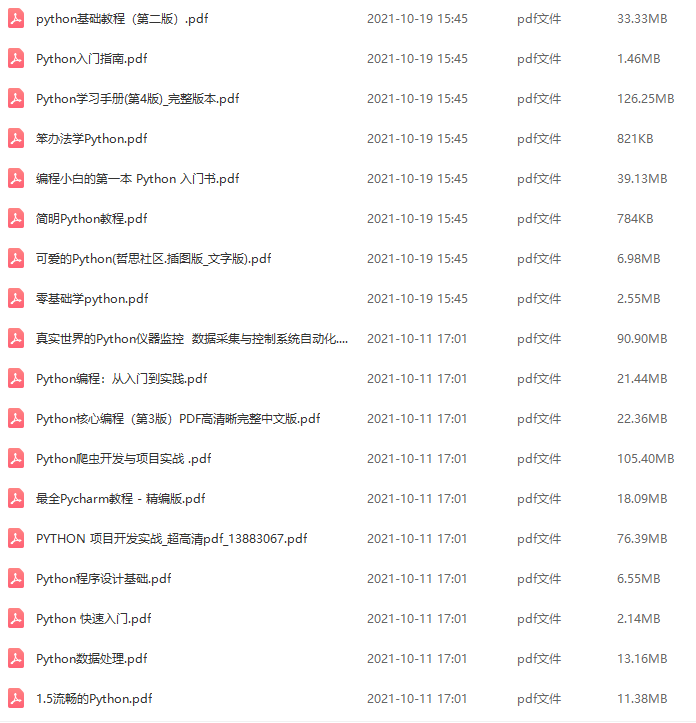

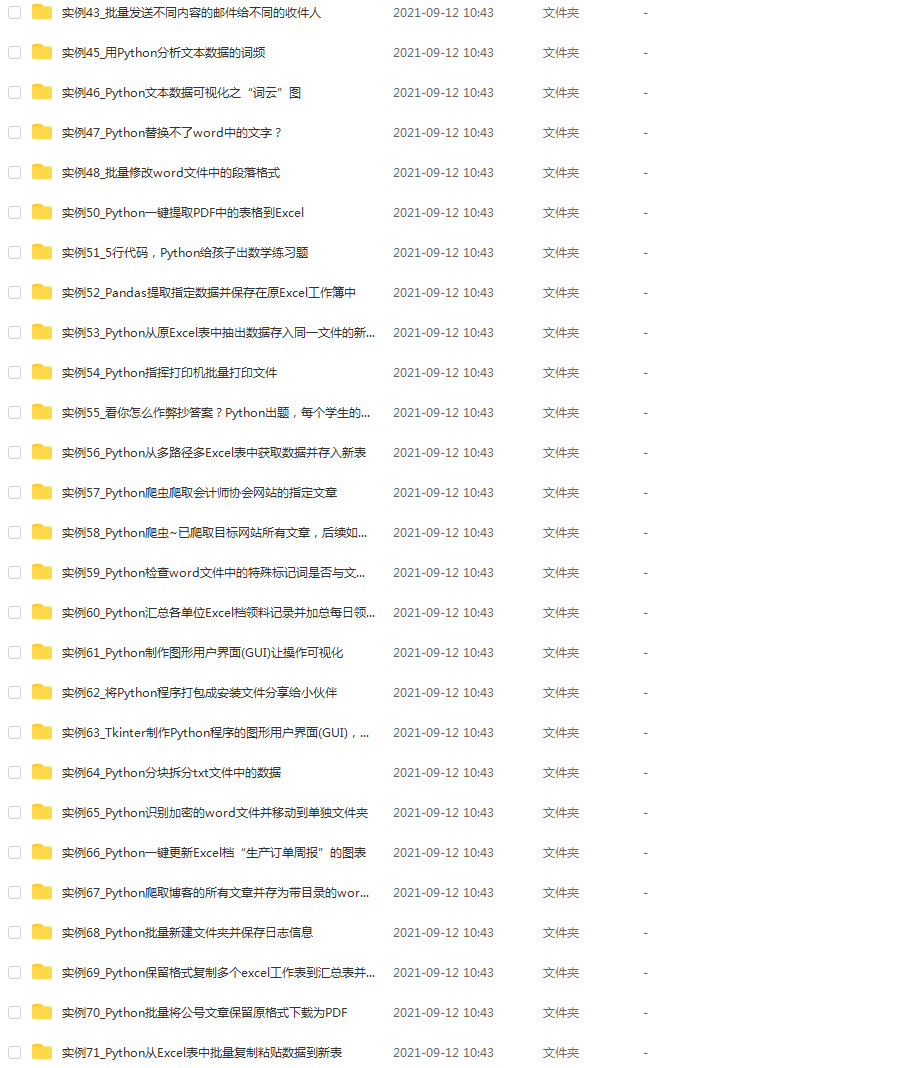

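Because the files are large, only screenshots of part of the outline are shown here. Each section includes interview experiences from large companies, study notes, source-code handouts, hands-on projects and explanatory videos, and will continue to be updated.

If you find this content helpful, scan the QR code to get it (mention "Python" in the note)!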


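This article presents a Python script that fetches Weibo users' following and fan information through API calls, including user name, gender, location and follower count, with support for crawling multiple pages and handling errors that may occur.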
