Hive SQL

1 数据库实例操作

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DDL

2 Hive 数据类型

| data_type : primitive_type | array_type 数组 | map_type 键值对 | struct_type 结构体类型(比如只有属性没有方法的类) | union_type -- (Note: Available in Hive 0.7.0 and later) primitive_type : TINYINT | SMALLINT | INT | BIGINT | BOOLEAN | FLOAT | DOUBLE | DOUBLE PRECISION -- (Note: Available in Hive 2.2.0 and later) | STRING | BINARY -- (Note: Available in Hive 0.8.0 and later) | TIMESTAMP -- (Note: Available in Hive 0.8.0 and later) | DECIMAL -- (Note: Available in Hive 0.11.0 and later) | DECIMAL(precision, scale) -- (Note: Available in Hive 0.13.0 and later) | DATE -- (Note: Available in Hive 0.12.0 and later) | VARCHAR -- (Note: Available in Hive 0.12.0 and later) | CHAR -- (Note: Available in Hive 0.13.0 and later) array_type : ARRAY < data_type > map_type : MAP < primitive_type, data_type > struct_type : STRUCT < col_name : data_type [COMMENT col_comment], ...> union_type : UNIONTYPE < data_type, data_type, ... > -- (Note: Available inHive 0.7.0 and later) |

3 表相关操作

3.1 表基础知识

完整的 DDL 建表语法规则

|

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

基本数据类型

| 类型名称 | 描述 | 举例 |

| boolean | true/false | true |

| tinyint | 1 字节的有符号整数 | 1 |

| smallint | 2 字节的有符号整数 | 1 |

| == int== | 4 字节的有符号整数 | 1 |

| ==bigint== | 8 字节的有符号整数 | 1 |

| float | 4 字节单精度浮点数 | 1.0 |

| ==double== | 8 字节单精度浮点数 | 1.0 |

| ==string== | 字符串(不设长度) | “gtjin” |

| varchar | 字符串(1-65355 长度,超长截断) | “geekmooc.cn” |

| timestamp | 时间戳 | 161243654 |

| date | 日期 | 20200416 |

复合数据类型

| 类型名称 | 描述 | 举例 |

| array | 一组有序的字段,字段类型必须相同 array(元素 1,元素 2) | Array(1,2,3) |

| map | 一组无序的键值对 map(k1,v1,k2,v2) | Map(‘a’,1,'b',2) |

| struct | 一组命名的字段,字段类型可以不同 struct(元素 1,元素 2) | Struct('a',1,2,0) |

- array 字段的元素访问方式:

- 下标获取元素,下标从 0 开始

- 获取第一个元素

- array[0]

- map 字段的元素访问方式

- 通过键获取值

- 获取 a 这个 key 对应的 value

- map['a']

- struct 字段的元素获取方式

- 定义一个字段 c 的类型为 struct{a int;b string}

- 获取 a 和 b 的值

- 使用 c.a 和 c.b 获取其中的元素值

- 这里可以把这种类型看成是一个对象

create table complex(

col1 array<int>,

col2 map<string,int>,

col3 struct<a:string,b:int,c:double>

)Hive 建表案例

create table person(

id int,

name string,

likes array<string>,

address map<string,string>

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

COLLECTION ITEMS TERMINATED BY '-'

MAP KEYS TERMINATED BY ':'

LINES TERMINATED BY '\n';Hive 查看表描述

DESC [EXTENDED|FORMATTED] table_name

desc [extended|formatted] table_name

| hive> desc formatted psn; id int # Detailed Table Information # Storage Information 存储信息 |

删除表:

hive> drop table psn;| hive> desc formatted person; # Storage Information |

数据:

id,姓名,爱好,地址

| 1,小明 1,lol-book-movie,beijing:xisanqi-shanghai:pudong 2,小明 2,lol-book-movie,beijing:xisanqi-shanghai:pudong 3,小明 3,lol-book-movie,beijing:xisanqi-shanghai:pudong 4,小明 4,lol-book-movie,beijing:xisanqi-shanghai:pudong 5,小明 5,lol-movie,beijing:xisanqi-shanghai:pudong 6,小明 6,lol-book-movie,beijing:xisanqi-shanghai:pudong 7,小明 7,lol-book,beijing:xisanqi-shanghai:pudong 8,小明 8,lol-book,beijing:xisanqi-shanghai:pudong 9,小明 9,lol-book-movie,beijing:xisanqi-shanghai:pudong |

Loading files into tables

Hive does not do any transformation while loading data into tables. Load operations are currently pure copy/move

operations that move datafiles into locations corresponding to Hive tables.

LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE tablename [PARTITION (partcol1=val1,partcol2=val2 ...)]

复制 node4 连接窗口,并执行以下操作:

[root@node4 ~]# mkdir data

[root@node4 ~]# cd data

[root@node4 data]# vim person01.txt

1,小明 1,lol-book-movie,beijing:xisanqi-shanghai:pudong

2,小明 2,lol-book-movie,beijing:xisanqi-shanghai:pudong

3,小明 3,lol-book-movie,beijing:xisanqi-shanghai:pudong

4,小明 4,lol-book-movie,beijing:xisanqi-shanghai:pudong

5,小明 5,lol-movie,beijing:xisanqi-shanghai:pudong

6,小明 6,lol-book-movie,beijing:xisanqi-shanghai:pudong

7,小明 7,lol-book,beijing:xisanqi-shanghai:pudong

8,小明 8,lol-book,beijing:xisanqi-shanghai:pudong

9,小明 9,lol-book-movie,beijing:xisanqi-shanghai:pudong去含有 hive 客户端的 node4 窗口中执行:

| hive> load data local inpath '/root/data/person01.txt' into tableperson; Loading data to table default.person Table default.person stats: [numFiles=1, totalSize=496] OK Time taken: 0.439 seconds hive> select * from person; OK 39 1 小明 1 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 2 小明 2 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 3 小明 3 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 4 小明 4 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 5 小明 5 ["lol","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 6 小明 6 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 7 小明 7 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 8 小明 8 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 9 小明 9 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} Time taken: 0.086 seconds, Fetched: 9 row(s) |

| select id,name,likes[0],address['beijing'] from person; +-----+-------+------+----------+--+ | id | name | _c2 | _c3 | +-----+-------+------+----------+--+ | 1 | 小明 1 | lol | xisanqi | | 2 | 小明 2 | lol | xisanqi | | 3 | 小明 3 | lol | xisanqi | |

默认分隔符演示:

node4 hive>

create table person2

(

id int,

name string,

likes array<string>,

address map<string,string>

);[root@node4 data]#

[root@node4 data]# cp person01.txt person02.txt

[root@node4 data]# vim person02.txt

1^A 小明 1^Alol^Bbook^Bmovie^Abeijing^Cxisanqi^Bshanghai^Cpudong注意:^A 输入:Ctrl+V Ctrl A,^B 和^C 同理。

node4 hive>

hive> load data local inpath '/root/data/person02.txt' into table

person2;

Loading data to table default.person2

Table default.person2 stats: [numFiles=1, totalSize=57]

OK

Time taken: 0.604 seconds

hive> select * from person2;

OK

1 小明 1 ["lol","book","movie"]

{"beijing":"xisanqi","shanghai":"pudong"}node4 hive>

| create table person3 hive> desc formatted person3; # Storage Information hive> load data local inpath '/root/data/person02.txt' into table person3; |

3.2 内部表与外部表

(1) Hive 内部表

CREATE TABLE [IF NOT EXISTS] table_name

删除表时,元数据与数据都会被删除

Table Type: MANAGED_TABLE 内部表

(2) Hive 外部表

CREATE EXTERNAL TABLE [IF NOT EXISTS] table_name LOCATION hdfs_path

删除外部表只删除 metastore 的元数据,不删除 hdfs 中的表数据

Table Type: EXTERNAL_TABLE external

首先将 person01.txt 文件上传到 hdfs 的/usr 目录

[root@node4 ~]# hdfs dfs -mkdir /usr

[root@node4 ~]# hdfs dfs -put /root/data/person01.txt /usr

创建外部表

| create external table person4

|

内部表和外部表的区别:

1、创建表的时候,内部表直接存储在默认的 hdfs 路径,外部表需要自己指定路径

2、删除表的时候,内部表会将数据和元数据全部删除,外部表只删除元数据,数据不删除

| hive> drop table person4; |

注意:hive:读时检查(实现解耦,提高数据加载的效率)

关系型数据库:写时检查

演示:

| create table person(

|

3.3 表分区

Hive 分区 partition (订单介绍)

必须在表定义时指定对应的 partition 字段

a、单分区建表语句:

create table day_table (id int, content string) partitioned by (dt string);单分区表,按天分区,在表结构中存在 id,content,dt 三列。

以 dt 为文件夹区分

b、 双分区建表语句:

create table day_hour_table (id int, content string) partitioned by

(dt string, hour string);双分区表,按天和小时分区,在表结构中新增加了 dt 和 hour 两列。

先以 dt 为文件夹,再以 hour 子文件夹区分

理论上分区的个数可以任意多,但是常用的为单分区和双分区。

注意:定义分区和格式化语句的位置。

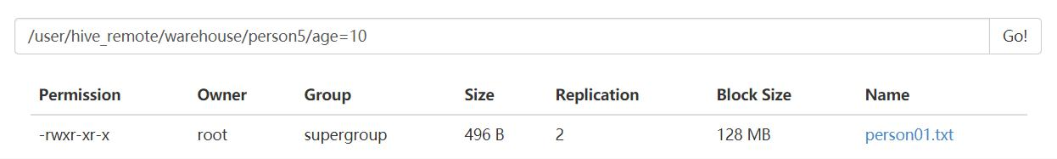

3.3.1 单分区

| create table person5

hive> create table person5

# Partition Information |

注意:分区字段不能出现在建表脚本中。

添加数据:

| hive> load data local inpath '/root/data/person01.txt' into table person5 partition(age=10); Loading data to table default.person5 partition (age=10) Partition default.person5{age=10} stats: [numFiles=1, numRows=0, totalSize=496, rawDataSize=0] OK Time taken: 0.892 seconds hive> select * from person5; OK 1 小明 1 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 2 小明 2 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 3 小明 3 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 4 小明 4 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 5 小明 5 ["lol","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 6 小明 6 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 7 小明 7 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 10 8 小明 8 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 10 9 小明 9 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 Time taken: 0.305 seconds, Fetched: 9 row(s) |

| hive> load data local inpath '/root/data/person01.txt' into table person5 partition(age=20); Loading data to table default.person5 partition (age=20) Partition default.person5{age=20} stats: [numFiles=1, numRows=0, totalSize=496, rawDataSize=0] OK Time taken: 0.609 seconds hive> select * from person5; OK 1 小明 1 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 2 小明 2 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 3 小明 3 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 4 小明 4 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 5 小明 5 ["lol","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 6 小明 6 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 7 小明 7 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 10 47 8 小明 8 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 10 9 小明 9 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 1 小明 1 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 2 小明 2 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 3 小明 3 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 4 小明 4 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 5 小明 5 ["lol","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 6 小明 6 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 7 小明 7 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 20 8 小明 8 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 20 9 小明 9 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 |

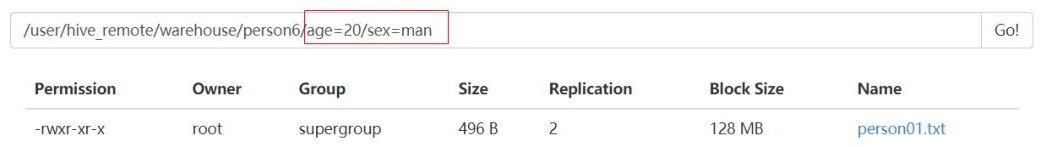

3.3.2 双分区

| create table person6 hive> create table person6

# Partition Information

|

| hive> load data local inpath '/root/data/person01.txt' into table person6 partition(sex='man',age=10); Loading data to table default.person6 partition (age=10, sex=man) Partition default.person6{age=10, sex=man} stats: [numFiles=1, numRows=0, totalSize=496, rawDataSize=0] OK Time taken: 0.704 seconds hive> select * from person6; OK 1 小明 1 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 2 小明 2 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 3 小明 3 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 4 小明 4 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 5 小明 5 ["lol","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 6 小明 6 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 7 小明 7 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 8 小明 8 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 9 小明 9 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 10 man 1 小明 1 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 2 小明 2 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 3 小明 3 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 4 小明 4 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 5 小明 5 ["lol","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 6 小明 6 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 7 小明 7 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 8 小明 8 ["lol","book"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man 9 小明 9 ["lol","book","movie"] {"beijing":"xisanqi","shanghai":"pudong"} 20 man Time taken: 0.122 seconds, Fetched: 18 row(s) |

加载数据时,分区先写谁都可以,但是属性名称不能写错了。

| hive> load data local inpath '/root/data/person01.txt' into table person6 partition(sex='man',age=10);。 |

该行命令共做了三件事情:

1. 在 hdfs 上创建对应的目录。

2. 将数据文件上传到对应的目录上。

3. 在元数据的数据库实例 hive_remote 的表 PARTITIONS 中添加分区信息。

3.3.3 Hive 表添加分区语法

(表已创建,在此基础上添加分区,注意只能添加在表创建时定义好的分区的值):

| ALTER TABLE table_name ADD [IF NOT EXISTS] PARTITION partition_spec

|

例:

| hive> ALTER TABLE person6 ADD PARTITION (age=30); FAILED: ValidationFailureSemanticException partition spec {age=30} doesn't contain all (2) partition columns hive> ALTER TABLE person6 ADD PARTITION (age=30,sex='man'); OK Time taken: 0.309 second |

表在创建时定义的时双分区,条件分区值时,不能只指定一个,需要同时指定两个分区的值。

只建目录没有数据。如果添加的分区已经存在,则抛出 AlreadyExistsException。

3.3.4 删除分区

| ALTER TABLE table_name DROP [IF EXISTS] PARTITION partition_spec[, PARTITION partition_spec, ...] [IGNORE PROTECTION] [PURGE]; |

双分区表,可以通过指定两个分区值进行删除:

| hive> alter table person6 drop partition(age=30,sex='man'); Dropped the partition age=30/sex=man OK Time taken: 0.85 seconds |

也可以通过指定一个分区值进行删除:

| alter table person6 drop if exists partition(age=30); Dropped the partition age=30/sex=man OK |

| hive> alter table person6 drop partition(sex='man'); Dropped the partition age=10/sex=man Dropped the partition age=20/sex=man OK Time taken: 0.178 seconds |

3.3.5 修复分区

先将 hdfs 上/usr 目录下的内容删除干净

| [root@node4 ~]# hdfs dfs -ls /usr Found 1 items -rw-r--r-- 2 root supergroup 496 2020-02-03 10:39 /usr/person01.txt [root@node4 ~]# hdfs dfs -rm /usr/person01.txt Deleted /usr/person01.txt |

创建目录/usr/age=10

| [root@node4 ~]# hdfs dfs -mkdir /usr/age=10 |

将 person01.txt 文件上传到/usr/age=10 目录下

| [root@node4 ~]# hdfs dfs -put /root/data/person01.txt /usr/age=10 |

创建外部表 person7

| create external table person7 ( id int, name string, likes array<string>, address map<string,string> ) partitioned by(age int) row format delimited fields terminated by ',' collection items terminated by '-' map keys terminated by ':' location '/usr/'; |

创建完表后,并不会将对应的分区信息同步到 MySQL 数据库的元数据中

Recover Partitions (MSCK REPAIR TABLE)

Hive stores a list of partitions for each table in its metastore. If, however,

new partitions are directly added to HDFS (say by using hadoop fs -put command)

or removed from HDFS, the metastore (and hence Hive) will not be aware of these

changes to partition information unless the user runs ALTER TABLE table_name

ADD/DROP PARTITION commands on each of the newly added or removed partitions,

respectively.

However, users can run a metastore check command with the repair table option:

语法格式:

| MSCK [REPAIR] TABLE table_name [ADD/DROP/SYNC PARTITIONS]; |

| hive> msck repair table person7; OK Partitions not in metastore: person7:age=10 Repair: Added partition to metastore person7:age=10 Time taken: 0.206 seconds, Fetched: 2 row(s) |

3.4 使用已有表建表(扩展)

a. Create Table Like:

| CREATE TABLE empty_key_value_store LIKE key_value_store; |

只创建相同结构的空表,没有具体的表中的数据。

| hive> select * from person3; id int # Detailed Table Information

|

b. Create Table As Select (CTAS)

| CREATE TABLE new_key_value_store AS SELECT columA, columB FROM key_value_store; |

| hive> create table person9 as select id,name from person3; id int # Detailed Table Information # Storage Information |

3.5 删除数据

| hive> delete from person;

|

delete 经过事务,truncate 不经过事务。

3.6 添加数据

3.6.1 Loading files into tables

Hive does not do any transformation while loading data into tables.

Load operations are currently pure copy/move operations that move

datafiles into locations corresponding to Hive tables.

Syntax

| LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE tablename [PARTITION (partcol1=val1, partcol2=val2 ...)] |

copy:load 的是本地(LOCAL)的文件

move:load 的是 hdfs 上的文件,不适用 local。如果也 copy 的话,hdfs 上将会产生不必要的文件。

| [root@node4 hive]# hdfs dfs -mkdir /test [root@node4 hive]# hdfs dfs -put person1.txt /test/ |

| hive> LOAD DATA INPATH '/test/person1.txt' INTO TABLE person5 PARTITION (age=30); |

3.6.2 Inserting data into Hive Tables from queries

Query Results can be inserted into tables by using the insert clause.

Syntax

Standard syntax:

| INSERT OVERWRITE TABLE tablename1 [PARTITION (partcol1=val1,

|

以上方式不建议使用。

将大表中的数据拆分到多个小表中。

Hive extension (multiple inserts):

| FROM from_statement INSERT OVERWRITE TABLE tablename1 [PARTITION (partcol1=val1, partcol2=val2 ...) [IF NOT EXISTS]] select_statement1 [INSERT OVERWRITE TABLE tablename2 [PARTITION ... [IF NOT EXISTS]] select_statement2] [INSERT INTO TABLE tablename2 [PARTITION ...] select_statement2] ...; |

只查询一次,建议使用。

INSERT OVERWRITE:表示覆盖

INSERT INTO:表示追加。

| create table person2_1(

|

使用场景,将一个复杂的表 person(加入它有 50 个列),将其中一些列的数据添加到person2 中,将另外一些列的数据添加到 person3 中。仅需要查询一次,减少磁盘 IO。

update 了解,基本不用。

3.7 Hive SerDe

Hive SerDe - Serializer and Deserializer

SerDe 用于做序列化和反序列化。

构建在数据存储和执行引擎之间,对两者实现解耦。

Hive 通过 ROW FORMAT DELIMITED 以及 SERDE 进行内容的读写。

| row_format : DELIMITED [FIELDS TERMINATED BY char [ESCAPED BY char]] [COLLECTION ITEMS TERMINATED BY char] [MAP KEYS TERMINATED BY char] [LINES TERMINATED BY char] :SERDE serde_name [WITH SERDEPROPERTIES (property_name= property_value,property_name=property_value, ...)] |

Hive 正则匹配

| CREATE TABLE logtbl ( host STRING, identity STRING, t_user STRING, time STRING, request STRING, referer STRING, agent STRING) ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.RegexSerDe' WITH SERDEPROPERTIES ( "input.regex" = "([^ ]*) ([^ ]*) ([^ ]*) \\[(.*)\\] \"(.*)\" (-|[0-9]*) (-|[0-9]*)" ) STORED AS TEXTFILE; |

使用“01 资料/localhost_access_log.txt”导入。

| 192.168.57.4 - - [29/Feb/2016:18:14:35 +0800] "GET /bg-upper.png HTTP/1.1" 304 - |

将该文件上传到 node4 的/root/data 目录

| [root@node4 data]# pwd /root/data [root@node4 data]# ls localhost_access_log.2016-02-29.txt person01.txt person02.txt [root@node4 data]# mv localhost_access_log.2016-02-29.txt logtbl.txt [root@node4 data]# ls logtbl.txt person01.txt person02.txt |

Node4 hive load 数据到对应的表中

| hive> load data local inpath '/root/data/logtbl.txt' into table logtbl; Loading data to table default.logtbl Table default.logtbl stats: [numFiles=1, totalSize=1759] OK Time taken: 0.463 seconds hive> select * from logtbl; |

4Hive Beeline

Beeline 要与 HiveServer2 配合使用

https://cwiki.apache.org/confluence/display/Hive/Home#Home-UserDocumentation

HiveServer2(HS2)是一种使客户端能够对 Hive 执行查询的服务。HiveServer2 是已被废弃的 HiveServer1(仅支持单客户端访问)的继承者。HS2 支持多客户端并发和身份验证。它旨在为 JDBC 和 ODBC 等开放 API 客户端提供更好的支持。HS2 是一个作为复合服务运行的进程,它包括基于 Thrift 的 Hive 服务(TCP 或 HTTP)和 WebUI 的 Jetty Web 服务器。

服务端启动 hiveserver2

如果让让该进程在后台执行:

| nohup hiveserver2 & #关闭的话通过 kill -9 pid |

客户的通过 beeline 两种方式连接到 hive

1、方式一:

| beeline -u jdbc:hive2://node3:10000/default [-n hiveuser -p pass] [root@node4 ~]# beeline -u jdbc:hive2://node3:10000/default -n root -p 123 0: jdbc:hive2://node3:10000/default> !quit #退出 |

2、方式二:

| % bin/beeline

|

默认 用户名、密码不验证,可以是任意内容,但是不能不写。

The Beeline Shell 工作在嵌入式模式和远程模式.在嵌入式模式下,它运行嵌入式 Hive(类似于 HiveCLI),而远程模式是用于在 Thrift 上连接到单独的 HiveServer2 进程。从 Hive0.14 开始,当 Beeline 与 HiveServer2 一起使用时,它还会打印来自

HiveServer2 的日志消息,以供它执行到 STDERR 的查询。远程 HiveServer2 模式推荐用于生产使用,因为它更安全,不需要为用户授予直接 HDFS/metastore 访问权限。

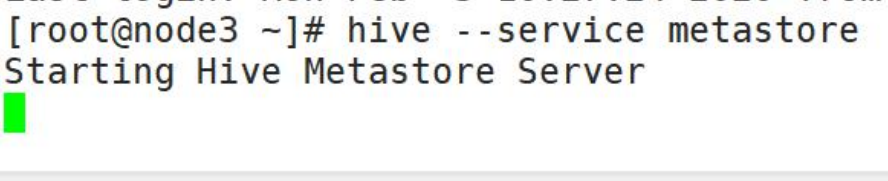

生产环境启动方式:

1. 结束掉 node3 上的 hiveserver2 进程,通过如下命令启动 hive 元数据服务

| hive --service metastore |

2. 在 node4 上启动 hiveserver2

3. 分为开发人员和运营人员:

(1) 开发人员:再打开一个 xshell 连接到 node4 上,然后通过 hive 命令启动,通过命令行模式进行开发与调试。

(2) 运营人员:使用开发好的项目(可以通过 javaweb 项目,使用 jdbc 方法 node4上提供的接口,设计成 BS 架构的项目),通过浏览器进行操作。

扩展案例:node2 上如何通过 beeline 向表中 load 本地文件中的数据?

假设文件中的数据如下:

| 11,小明 11,lol-book-movie,beijing:xisanqi-shanghai:pudong 12,小明 12,lol-book-movie,beijing:xisanqi-shanghai:pudong 13,小明 13,lol-book-movie,beijing:xisanqi-shanghai:pudong 14,小明 14,lol-book-movie,beijing:xisanqi-shanghai:pudong 15,小明 15,lol-movie,beijing:xisanqi-shanghai:pudong |

操作步骤:

A. node2 上的数据准备工作

| [root@node2 ~]# mkdir data [root@node2 ~]# cd data [root@node2 data]# vim data3 将数据放入该文件 |

B. Beeline 连接

| [root@node2 data]# beeline -u jdbc:hive2://node4:10000/default -n root -p 123 |

C. Load 本地数据:

| 0: jdbc:hive2://node4:10000/default> load data local inpath '/root/data/data3' into table person; Error: Error while compiling statement: FAILED: SemanticException Line 1:23 Invalid path ''/root/data/data3'': No files matching path file:/root/data/data3 (state=42000,code=40000) |

明明存在/root/data/data3 啊,为何会有这样的提示呢?这是因为 beeline 通常被用来执行 sql 操作,如果非要通过它实现加载本地文件,需要再对应的 hiveserver2 服务器(也就是 node4)上,存在/root/data/data3 文件。

D. 将 data3 文件从 node2 拷贝到 node4 对应目录下,然后在 node2 上再次执行 load操作。

先拷贝文件

| [root@node2 ~]# scp /root/data/data3 node4:/root/data/data3 |

避免 node2 上文件困扰,也可以将 node2 上的/root/data/data3 删除

再次执行 load

E. 再次执行 load,抛出以下异常:

| Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security. AccessControlException): Permission denied: user=anonymous, access=WRITE, inode="/user/hive_remote/warehouse/person":root:supergroup:drwxr-xr-x |

这是因为默认通过匿名用户登录的,权限不足。解决办法,beeline 连接断开,然后以 root用户登录,再次执行 load 即可。

| [root@node2 data]# beeline -u jdbc:hive2://node4:10000/default -n root 0: jdbc:hive2://node4:10000/default> load data local inpath '/root/data/data3' into table person; INFO : Loading data to table default.person from file:/root/data/data3 INFO : Table default.person stats: [numFiles=1, numRows=0, totalSize=290, rawDataSize=0] No rows affected (1.419 seconds) 0: jdbc:hive2://node4:10000/default> select * from person; +------------+--------------+-------------------------+--------------------------------------------+--+ | person.id | person.name | person.likes | person.address | +------------+--------------+-------------------------+--------------------------------------------+--+ | 11 | 小明 11 | ["lol","book","movie"] | {"beijing":"xisanqi","shanghai":"pudong"} | | 12 | 小明 12 | ["lol","book","movie"] | {"beijing":"xisanqi","shanghai":"pudong"} | | 13 | 小明 13 | ["lol","book","movie"] | {"beijing":"xisanqi","shanghai":"pudong"} | | 14 | 小明 14 | ["lol","book","movie"] | {"beijing":"xisanqi","shanghai":"pudong"} | | 15 | 小明 15 | ["lol","movie"] | {"beijing":"xisanqi","shanghai":"pudong"} | +------------+--------------+-------------------------+--------------------------------------------+--+ 5 rows selected (0.178 seconds) |

5 Hive JDBC

服务端启动hiveserver2后,在java代码中通过调用hive的jdbc访问默认端口10000进行连接、访问。

演示 hive 项目(hive_demo_idea)

177

177

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?