TCD-LoRA is versatile and can be combined with other adapter types such as ControlNet, IP-Adapter, and AnimateDiff.

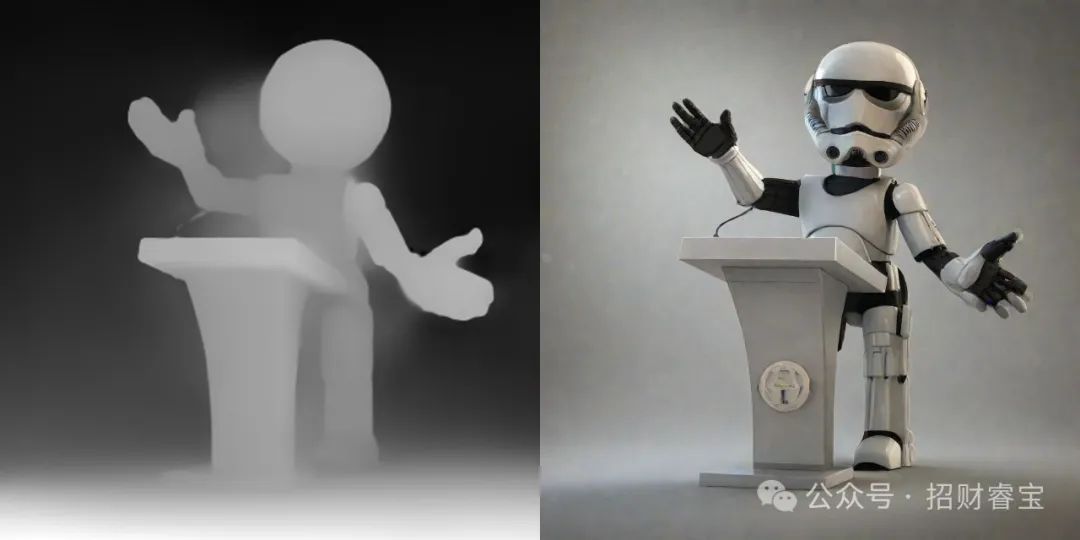

The following example combines TCD-LoRA with a Depth ControlNet:

    import os

    # Route Hugging Face Hub downloads through a mirror.
    # Set this before importing transformers/diffusers so it takes effect.
    os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"

    import torch
    import numpy as np
    from PIL import Image
    from transformers import DPTFeatureExtractor, DPTForDepthEstimation
    from diffusers import ControlNetModel, StableDiffusionXLControlNetPipeline
    from diffusers.utils import load_image, make_image_grid

    # scheduling_tcd.py is a local file taken from the TCD repository.
    # Recent diffusers releases also provide `from diffusers import TCDScheduler`.
    from scheduling_tcd import TCDScheduler

    device = "cuda"

    depth_estimator = DPTForDepthEstimation.from_pretrained("Intel/dpt-hybrid-midas").to(device)
    feature_extractor = DPTFeatureExtractor.from_pretrained("Intel/dpt-hybrid-midas")


    def get_depth_map(image):
        """Estimate depth for a PIL image and return it as a 1024x1024 RGB PIL image."""
        image = feature_extractor(images=image, return_tensors="pt").pixel_values.to(device)
        with torch.no_grad(), torch.autocast(device):
            depth_map = depth_estimator(image).predicted_depth

        # Upsample to the SDXL working resolution.
        depth_map = torch.nn.functional.interpolate(
            depth_map.unsqueeze(1),
            size=(1024, 1024),
            mode="bicubic",
            align_corners=False,
        )

        # Min-max normalize each map to [0, 1].
        depth_min = torch.amin(depth_map, dim=[1, 2, 3], keepdim=True)
        depth_max = torch.amax(depth_map, dim=[1, 2, 3], keepdim=True)
        depth_map = (depth_map - depth_min) / (depth_max - depth_min)

        # Replicate the single channel to three channels and convert to uint8.
        image = torch.cat([depth_map] * 3, dim=1)
        image = image.permute(0, 2, 3, 1).cpu().numpy()[0]
        image = Image.fromarray((image * 255.0).clip(0, 255).astype(np.uint8))
        return image


    base_model_id = "stabilityai/stable-diffusion-xl-base-1.0"
    controlnet_id = "diffusers/controlnet-depth-sdxl-1.0"
    tcd_lora_id = "h1t/TCD-SDXL-LoRA"

    controlnet = ControlNetModel.from_pretrained(
        controlnet_id,
        torch_dtype=torch.float16,
        variant="fp16",
    )
    pipe = StableDiffusionXLControlNetPipeline.from_pretrained(
        base_model_id,
        controlnet=controlnet,
        torch_dtype=torch.float16,
        variant="fp16",
    )
    # CPU offload manages device placement itself, so no explicit .to(device) is needed.
    pipe.enable_model_cpu_offload()

    # Swap in the TCD scheduler, then load and fuse the TCD-LoRA weights.
    pipe.scheduler = TCDScheduler.from_config(pipe.scheduler.config)
    pipe.load_lora_weights(tcd_lora_id)
    pipe.fuse_lora()

    prompt = "stormtrooper lecture, photorealistic"

    image = load_image("https://hf-mirror.com/lllyasviel/sd-controlnet-depth/resolve/main/images/stormtrooper.png")
    depth_image = get_depth_map(image)

    controlnet_conditioning_scale = 0.5  # recommended for good generalization

    image = pipe(
        prompt,
        image=depth_image,
        num_inference_steps=4,
        guidance_scale=0,
        eta=0.3,
        controlnet_conditioning_scale=controlnet_conditioning_scale,
        generator=torch.Generator(device=device).manual_seed(0),
    ).images[0]

    # Save the depth map and the generated image side by side.
    grid_image = make_image_grid([depth_image, image], rows=1, cols=2)
    grid_image.save("infer.jpg")
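The post-processing inside `get_depth_map` can be hard to follow when it is mixed in with the model calls. The snippet below is a minimal NumPy sketch of just that step on a toy array: min-max normalization to [0, 1], replication to three channels, and conversion to `uint8`. The helper name `depth_to_rgb_uint8` and the guard for a constant depth map are assumptions added for illustration; neither is part of the original script.

```python
import numpy as np


def depth_to_rgb_uint8(depth):
    """Min-max normalize a 2D depth array and return an HxWx3 uint8 array."""
    depth = np.asarray(depth, dtype=np.float32)
    d_min, d_max = depth.min(), depth.max()
    scale = d_max - d_min
    if scale == 0:
        # Assumption: map a constant depth map to all zeros instead of dividing by zero.
        normalized = np.zeros_like(depth)
    else:
        normalized = (depth - d_min) / scale
    # Replicate the single channel three times so the result can be saved as RGB.
    rgb = np.stack([normalized] * 3, axis=-1)
    return (rgb * 255.0).clip(0, 255).astype(np.uint8)


toy_depth = np.array([[0.0, 5.0], [10.0, 2.5]])
rgb = depth_to_rgb_uint8(toy_depth)
print(rgb.shape, rgb.dtype)
```

The smallest depth value maps to 0 and the largest to 255, so the ControlNet always receives a full-contrast conditioning image regardless of the absolute depth range of the scene.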
