import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.layers import Dense, BatchNormalization, Activation, Dropout

Load the data

(x_train,y_train),(x_test,y_test) = keras.datasets.mnist.load_data()

display(x_train.shape, y_train.shape)

(60000, 28, 28)
(60000,)

Either one-hot encode y_train and y_test, or keep the integer labels and use sparse_categorical_crossentropy as the loss when compiling the model.
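Both options optimize the same objective: categorical cross-entropy on one-hot targets and sparse categorical cross-entropy on integer targets produce the same value. The NumPy sketch below illustrates this. `one_hot`, `categorical_crossentropy` and `sparse_categorical_crossentropy` here are hypothetical helpers written for illustration, not the Keras functions, and the labels and predicted probabilities are made up.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Integer labels -> one-hot rows, the same layout to_categorical produces."""
    return np.eye(num_classes, dtype=np.float32)[labels]

def categorical_crossentropy(y_onehot, probs, eps=1e-7):
    """Mean cross-entropy with one-hot targets: -sum(y * log(p)) per sample."""
    probs = np.clip(probs, eps, 1.0)
    return float(np.mean(-np.sum(y_onehot * np.log(probs), axis=1)))

def sparse_categorical_crossentropy(y_int, probs, eps=1e-7):
    """Mean cross-entropy with integer targets: -log(p[true class]) per sample."""
    probs = np.clip(probs, eps, 1.0)
    return float(np.mean(-np.log(probs[np.arange(len(y_int)), y_int])))

labels = np.array([3, 0, 9])                  # three made-up integer labels
probs = np.full((3, 10), 0.05, dtype=np.float32)
probs[np.arange(3), labels] = 0.55            # 0.55 on the true class, each row sums to 1
y = one_hot(labels, 10)

print(y[0])  # a single 1.0 at index 3
print(categorical_crossentropy(y, probs), sparse_categorical_crossentropy(labels, probs))
```

The one-hot version needs 10 floats per label, while the sparse version stores one integer. For MNIST both are cheap, so the choice is mostly a matter of convenience.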

# One-hot encoding: each label becomes a length-10 vector with a single 1
y_train = tf.keras.utils.to_categorical(y_train, 10)
y_test = tf.keras.utils.to_categorical(y_test, 10)

Build the network

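class NetWork(keras.models.Model):

    def __init__(self, **kwargs):
        '''Define the network layers.'''
        super().__init__(**kwargs)
        # Flatten turns each 28x28 input image into a 784-element vector
        self.flatten = keras.layers.Flatten()
        # Hidden layer 1. A BN layer follows, and BN's learned shift (beta) makes a
        # bias redundant, so use_bias=False
        self.hidden1 = Dense(units=64, use_bias=False)
        # Hidden layer 2
        self.hidden2 = Dense(units=128, use_bias=False)
        # ReLU has no weights, so a single Activation layer can be reused
        self.activation = Activation('relu')
        # BN standardizes each unit over the mini-batch, then applies a learned scale and shift
        self.bn1 = BatchNormalization(momentum=0.99, epsilon=0.001)
        self.bn2 = BatchNormalization(momentum=0.99, epsilon=0.001)
        # Dropout is a regularizer: during training it randomly zeroes a fraction of the units
        self.dropout1 = Dropout(0.2)
        self.dropout2 = Dropout(0.4)
        # Output layer (named output_layer to avoid clashing with the Model.outputs attribute)
        self.output_layer = Dense(units=10)
        self.softmax = Activation('softmax')

    def call(self, inputs, training=None):
        '''Define the forward pass, i.e. wire the layers together.'''
        x = self.flatten(inputs)
        x = self.hidden1(x)
        # BN before the activation, as in the original BN paper. Putting BN after the
        # activation is also common in practice, but the two orders are not equivalent
        x = self.bn1(x, training=training)
        x = self.activation(x)
        x = self.dropout1(x, training=training)
        x = self.hidden2(x)
        x = self.bn2(x, training=training)
        x = self.activation(x)
        x = self.dropout2(x, training=training)
        x = self.output_layer(x)
        output = self.softmax(x)
        return output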
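To make the BN comments concrete, here is a NumPy sketch of what a BatchNormalization layer computes, using the same momentum=0.99 and epsilon=0.001 as NetWork. The helper names are hypothetical, and details such as how Keras computes the variance may differ. The sketch shows the idea and does not reproduce the layer exactly.

In training mode the layer normalizes with the current batch's mean and variance and nudges its moving averages towards them. At inference it uses the moving averages instead. The last lines check that BN followed by ReLU is not the same as ReLU followed by BN.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, moving_mean, moving_var, momentum=0.99, epsilon=0.001):
    """Training-mode BN: normalize with batch statistics, then update the moving averages."""
    mean = x.mean(axis=0)            # per-unit mean over the batch
    var = x.var(axis=0)              # per-unit (biased) variance over the batch
    x_hat = (x - mean) / np.sqrt(var + epsilon)
    new_mean = momentum * moving_mean + (1 - momentum) * mean
    new_var = momentum * moving_var + (1 - momentum) * var
    return gamma * x_hat + beta, new_mean, new_var

def batch_norm_infer(x, gamma, beta, moving_mean, moving_var, epsilon=0.001):
    """Inference-mode BN: use the stored moving statistics instead of the batch's."""
    return gamma * (x - moving_mean) / np.sqrt(moving_var + epsilon) + beta

def relu(z):
    return np.maximum(z, 0.0)

rng = np.random.default_rng(0)
x = rng.normal(loc=2.0, scale=3.0, size=(32, 64))   # batch of 32 samples, 64 hidden units
gamma, beta = np.ones(64), np.zeros(64)              # initial scale and shift
moving_mean, moving_var = np.zeros(64), np.ones(64)  # initial moving statistics

y, moving_mean, moving_var = batch_norm_train(x, gamma, beta, moving_mean, moving_var)
print(y.mean(axis=0)[:3], y.std(axis=0)[:3])  # about 0 and 1 for every unit

# After one update the moving statistics are still near their initial values,
# so inference-mode output is far from standardized at this point
y_eval = batch_norm_infer(x, gamma, beta, moving_mean, moving_var)

# Order matters: BN -> ReLU and ReLU -> BN give different outputs
a = relu(batch_norm_train(x, gamma, beta, np.zeros(64), np.ones(64))[0])
b = batch_norm_train(relu(x), gamma, beta, np.zeros(64), np.ones(64))[0]
print(np.allclose(a, b))  # False: a is never negative, b has negative entries
```

With momentum=0.99 each batch moves the running statistics only 1% of the way. Many training steps are therefore needed before inference-mode BN matches the data.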

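Create the model

model = NetWork()

Use the build method to declare the shape of the input that call() receives (None stands for the batch dimension)

model.build(input_shape=(None, 28, 28))

Inspect the network structure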

model.summary()

Model: "net_work_13"
_________________________________________________________________
Layer (type)                                 Output Shape    Param #
=================================================================
flatten_16 (Flatten)                         multiple        0
dense_46 (Dense)                             multiple        50176
dense_47 (Dense)                             multiple        8192
activation_26 (Activation)                   multiple        0
batch_normalization_18 (BatchNormalization)  multiple        256
batch_normalization_19 (BatchNormalization)  multiple        512
dropout_28 (Dropout)                         multiple        0
dropout_29 (Dropout)                         multiple        0
dense_48 (Dense)                             multiple        1290
activation_27 (Activation)                   multiple        0
=================================================================
Total params: 60,426
Trainable params: 60,042
Non-trainable params: 384
_________________________________________________________________
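The parameter counts in the summary can be checked by hand. In this small sketch the helper functions are illustrative and not part of Keras. A Dense layer has n_in × n_out kernel weights, plus n_out biases when use_bias=True. Each BatchNormalization layer stores four vectors with one entry per unit: gamma and beta are trainable, while moving_mean and moving_variance are not. Those moving statistics are where the 384 non-trainable parameters come from.

```python
def dense_params(n_in, n_out, use_bias=True):
    """Weights of a Dense layer: an n_in x n_out kernel plus an optional bias vector."""
    return n_in * n_out + (n_out if use_bias else 0)

def bn_params(n):
    """BatchNormalization keeps 4 vectors of length n: gamma and beta are trained by
    gradients, moving_mean and moving_variance are updated by averaging."""
    return {"trainable": 2 * n, "non_trainable": 2 * n}

flat = 28 * 28                                    # Flatten: 28x28 image -> 784 features
hidden1 = dense_params(flat, 64, use_bias=False)  # 50176
hidden2 = dense_params(64, 128, use_bias=False)   # 8192
output = dense_params(128, 10)                    # 1290 (this layer keeps its bias)
bn1, bn2 = bn_params(64), bn_params(128)          # 256 and 512 in total

trainable = hidden1 + hidden2 + output + bn1["trainable"] + bn2["trainable"]
non_trainable = bn1["non_trainable"] + bn2["non_trainable"]
print(trainable + non_trainable, trainable, non_trainable)  # 60426 60042 384
```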

Configure the network

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])

Train the network

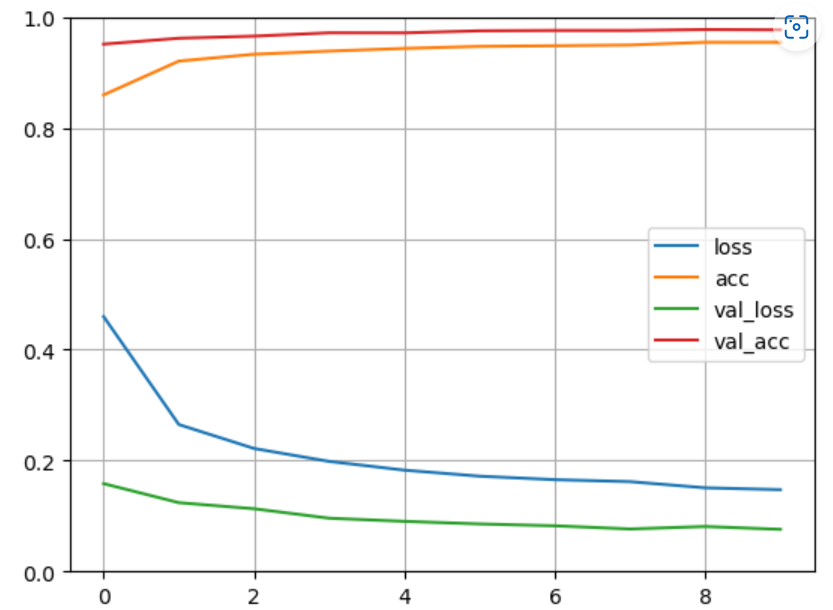

history = model.fit(x_train, y_train, epochs=10, batch_size=32, validation_data=(x_test, y_test))

Epoch 1/10

1875/1875 [==============================] - 13s 6ms/step - loss: 0.4596 - acc: 0.8595 - val_loss: 0.1583 - val_acc: 0.9511

Epoch 2/10

1875/1875 [==============================] - 5s 3ms/step - loss: 0.2649 - acc: 0.9205 - val_loss: 0.1240 - val_acc: 0.9617

Epoch 3/10

1875/1875 [==============================] - 5s 2ms/step - loss: 0.2217 - acc: 0.9329 - val_loss: 0.1130 - val_acc: 0.9655

Epoch 4/10

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1984 - acc: 0.9385 - val_loss: 0.0960 - val_acc: 0.9715

Epoch 5/10

1875/1875 [==============================] - 5s 2ms/step - loss: 0.1826 - acc: 0.9434 - val_loss: 0.0903 - val_acc: 0.9714

Epoch 6/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1718 - acc: 0.9470 - val_loss: 0.0856 - val_acc: 0.9751

Epoch 7/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1655 - acc: 0.9482 - val_loss: 0.0823 - val_acc: 0.9757

Epoch 8/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1619 - acc: 0.9495 - val_loss: 0.0766 - val_acc: 0.9757

Epoch 9/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1508 - acc: 0.9545 - val_loss: 0.0810 - val_acc: 0.9774

Epoch 10/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1474 - acc: 0.9545 - val_loss: 0.0759 - val_acc: 0.9769
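This concerns the acc and val_acc columns in the log above. With one-hot labels and categorical_crossentropy, Keras resolves the 'acc' string to categorical accuracy: the fraction of samples whose highest predicted probability falls on the true class. A NumPy sketch follows, where the function name and the toy predictions are made up for illustration.

```python
import numpy as np

def categorical_accuracy(y_onehot, probs):
    """Fraction of samples whose highest-probability class matches the one-hot label."""
    return float(np.mean(np.argmax(probs, axis=1) == np.argmax(y_onehot, axis=1)))

y_onehot = np.eye(10)[[7, 2, 1, 0]]        # true classes 7, 2, 1, 0
probs = np.full((4, 10), 0.01)
probs[[0, 1, 2, 3], [7, 2, 3, 0]] = 0.91   # third sample is predicted as 3 instead of 1
print(categorical_accuracy(y_onehot, probs))  # 0.75
```

Accuracy only looks at the argmax, so it can stay flat while the loss keeps falling as the model becomes more confident in predictions it already gets right.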

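Plot the learning curves

import pandas as pd
import matplotlib.pyplot as plt

def plot_training_curve(history):
    # history.history maps each metric name to its list of per-epoch values;
    # DataFrame.plot draws one line per column and adds the legend itself
    pd.DataFrame(history.history).plot()
    plt.grid(True)
    plt.gca().set_ylim(0, 1)
    plt.show()

plot_training_curve(history)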
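history.history is a plain dict keyed by 'loss', 'acc', 'val_loss' and 'val_acc' here, so it can also be inspected without plotting. The sketch below copies the per-epoch validation values from the training log above instead of reading a live history object. `best_epoch` is a hypothetical helper. Note also that set_ylim(0, 1) fits this run, where every value lies in [0, 1], but would cut off any loss above 1.

```python
# Per-epoch validation metrics copied from the BN + dropout training log above;
# in the notebook they would come from history.history["val_acc"] and ["val_loss"]
history_dict = {
    "val_loss": [0.1583, 0.1240, 0.1130, 0.0960, 0.0903, 0.0856, 0.0823, 0.0766, 0.0810, 0.0759],
    "val_acc": [0.9511, 0.9617, 0.9655, 0.9715, 0.9714, 0.9751, 0.9757, 0.9757, 0.9774, 0.9769],
}

def best_epoch(values, mode="max"):
    """Return (1-based epoch, value) of the best entry: max for accuracy, min for loss."""
    pick = max if mode == "max" else min
    best = pick(values)
    return values.index(best) + 1, best

print(best_epoch(history_dict["val_acc"], "max"))   # (9, 0.9774)
print(best_epoch(history_dict["val_loss"], "min"))  # (10, 0.0759)
```

The best validation accuracy and the lowest validation loss land on different epochs, which is worth keeping in mind when picking a checkpoint.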

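Because MNIST handwritten digits are an easy task, even this small fully connected network trains to good accuracy. For harder image classification problems a few dense layers are far from enough; the natural next step is a convolutional neural network (CNN), which usually reaches higher accuracy.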

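The selu activation acts roughly like relu + BN: it pushes activations towards zero mean and unit variance on its own, so the model below has no BN layers. This self-normalizing behavior assumes standardized inputs and LeCun-normal weight initialization (kernel_initializer='lecun_normal'). The model below keeps the raw 0-255 pixel values and Dense's default glorot_uniform initializer, so the comparison is only approximate.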
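Here is a quick numerical check of the idea behind SELU, as a NumPy sketch. The constants come from the SELU paper (Klambauer et al., 2017, "Self-Normalizing Neural Networks"), and Keras's selu uses the same values. Fed standard-normal pre-activations, SELU outputs stay close to mean 0 and variance 1. ReLU instead shifts the mean to about 0.40 and shrinks the variance to about 0.34, and BN exists to correct exactly this kind of drift. The check covers a single activation with standardized inputs only; it says nothing about how a full network trains.

```python
import numpy as np

# Constants from the SELU paper; Keras's selu activation uses the same values
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805

def selu(x):
    """scale * x for x > 0, scale * alpha * (exp(x) - 1) otherwise."""
    return SCALE * np.where(x > 0, x, ALPHA * np.expm1(np.minimum(x, 0.0)))

def relu(x):
    return np.maximum(x, 0.0)

rng = np.random.default_rng(0)
z = rng.standard_normal(1_000_000)  # standardized pre-activations

for name, f in [("selu", selu), ("relu", relu)]:
    out = f(z)
    print(name, round(float(out.mean()), 3), round(float(out.var()), 3))
# selu stays near mean 0 / variance 1; relu moves to roughly 0.40 / 0.34
```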

# Same layer sizes as above; SELU replaces the Dense + BN + ReLU combination, and there is no dropout
model = keras.Sequential()
model.add(keras.layers.Flatten())
model.add(Dense(64, activation='selu'))
model.add(Dense(128, activation='selu'))
model.add(Dense(10, activation='softmax'))

# Configure the network
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])

# Train
history = model.fit(x_train, y_train, epochs=10, batch_size=32, validation_data=(x_test, y_test))

Epoch 1/10

1875/1875 [==============================] - 6s 2ms/step - loss: 1.4172 - acc: 0.7984 - val_loss: 0.5034 - val_acc: 0.8724

Epoch 2/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.4686 - acc: 0.8854 - val_loss: 0.4361 - val_acc: 0.9047

Epoch 3/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.4069 - acc: 0.9040 - val_loss: 0.3437 - val_acc: 0.9185

Epoch 4/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.3341 - acc: 0.9219 - val_loss: 0.3073 - val_acc: 0.9271

Epoch 5/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.2781 - acc: 0.9312 - val_loss: 0.2853 - val_acc: 0.9366

Epoch 6/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.2317 - acc: 0.9395 - val_loss: 0.2633 - val_acc: 0.9313

Epoch 7/10

1875/1875 [==============================] - 5s 3ms/step - loss: 0.2044 - acc: 0.9448 - val_loss: 0.2136 - val_acc: 0.9472

Epoch 8/10

1875/1875 [==============================] - 5s 2ms/step - loss: 0.1818 - acc: 0.9508 - val_loss: 0.2016 - val_acc: 0.9477

Epoch 9/10

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1627 - acc: 0.9543 - val_loss: 0.2252 - val_acc: 0.9444

Epoch 10/10

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1569 - acc: 0.9571 - val_loss: 0.1991 - val_acc: 0.9563
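The two logs behave differently. With BN and dropout, training acc ends at 0.9545 while val_acc reaches 0.9769. The SELU model has no dropout and ends at 0.9571 and 0.9563. One likely contributor is that dropout is only active during training. Keras's Dropout zeroes each input with probability rate during training, scales the survivors by 1 / (1 - rate), and passes inputs through unchanged at evaluation. The training metrics are therefore computed on a handicapped network. Training metrics are also averaged over the epoch while the weights are still improving.

The sketch below implements this inverted dropout in NumPy. The `dropout` function is illustrative, not the Keras implementation.

```python
import numpy as np

def dropout(x, rate, training, rng):
    """Inverted dropout: in training, zero each unit with probability `rate` and scale
    the survivors by 1 / (1 - rate) so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return x                      # evaluation: identity
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(42)
x = np.ones((1000, 128))

train_out = dropout(x, 0.4, training=True, rng=rng)
print((train_out == 0).mean())  # close to 0.4: fraction of dropped units
print(train_out.mean())         # close to 1.0: rescaling preserves the expectation
print(np.array_equal(dropout(x, 0.4, training=False, rng=rng), x))  # True
```

Because the rescaling happens during training, nothing needs to change at inference. This is why a Dropout layer can simply be skipped when evaluating.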

For a simple network like this one, the Sequential style is more concise than subclassing Model.

Save the model

model.save('./model.h5')

Load the model

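model2 = keras.models.load_model('./model.h5')

The reloaded model should perform exactly like the original. Note, however, that evaluate() needs the labels too. The calls below pass only x_test, so Keras has no targets to score against, and the 0.0 loss and accuracy it reports for both models say nothing about their performance.

model2.evaluate(x_test)

313/313 [==============================] - 1s 1ms/step - loss: 0.0000e+00 - acc: 0.0000e+00
[0.0, 0.0]

model.evaluate(x_test)

313/313 [==============================] - 0s 1ms/step - loss: 0.0000e+00 - acc: 0.0000e+00
[0.0, 0.0]

To actually compare the two models, pass the test labels as well:

model2.evaluate(x_test, y_test)
model.evaluate(x_test, y_test)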
