A. Establishing the communication link

We use frameworks/av/media/mediaserver/main_mediaserver.cpp as an example to explain the Binder communication mechanism:

int main(int argc __unused, char **argv __unused)

{

// Some code omitted;

sp<ProcessState> proc(ProcessState::self());

sp<IServiceManager> sm(defaultServiceManager());

ALOGI("ServiceManager: %p", sm.get());

// Some code omitted;

::android::hardware::configureRpcThreadpool(16, false);

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

::android::hardware::joinRpcThreadpool();

}

1. ProcessState

From the code we can see that:

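- ProcessState::self() creates the process-wide singleton ProcessState instance, opens the binder driver and sets up communication between kernel and user space by mmap()-ing a buffer from the driver.

- The mapped Binder buffer is slightly smaller than 1 MB: BINDER_VM_SIZE is 1 MB minus two pages.

- mThreadPoolSeq starts counting at 1, and mDriverFD holds the file descriptor of the opened driver node ("/dev/binder", or "/dev/vndbinder" in VNDK builds);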
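The first two points (a lazily created per-process singleton, and a mapping of 1 MB minus two pages) can be sketched in standard C++. FakeProcessState and binderVmSize() are hypothetical names; std::call_once stands in for gProcessOnce, and the sketch neither opens a driver nor calls mmap().

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical stand-in for ProcessState: one instance per process, created lazily.
class FakeProcessState {
public:
    static std::shared_ptr<FakeProcessState> self() {
        static std::once_flag once;  // plays the role of gProcessOnce
        static std::shared_ptr<FakeProcessState> instance;
        std::call_once(once, [] {
            // The real constructor opens the driver and mmap()s BINDER_VM_SIZE bytes.
            instance.reset(new FakeProcessState("/dev/binder"));
        });
        return instance;
    }
    const std::string& driverName() const { return driverName_; }
    int threadPoolSeq() const { return threadPoolSeq_; }

private:
    explicit FakeProcessState(std::string driver) : driverName_(std::move(driver)) {}
    std::string driverName_;
    int threadPoolSeq_ = 1;  // mThreadPoolSeq starts at 1
};

// Same formula as BINDER_VM_SIZE, with the page size passed in explicitly
// instead of calling sysconf(_SC_PAGE_SIZE).
constexpr std::size_t binderVmSize(std::size_t pageSize) {
    return 1 * 1024 * 1024 - pageSize * 2;
}
```

With 4 KiB pages, binderVmSize(4096) is 1,040,384 bytes; with 16 KiB pages it is 1,015,808 bytes.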

#ifdef __ANDROID_VNDK__

const char* kDefaultDriver = "/dev/vndbinder";

#else

const char* kDefaultDriver = "/dev/binder";

#endif

sp<ProcessState> ProcessState::self()

{

return init(kDefaultDriver, false /*requireDefault*/);

}

sp<ProcessState> ProcessState::init(const char* driver, bool requireDefault) {

// Some code omitted;

[[clang::no_destroy]] static std::once_flag gProcessOnce;

std::call_once(gProcessOnce, [&](){

// Some code omitted;

// we must install these before instantiating the gProcess object,

// otherwise this would race with creating it, and there could be the

// possibility of an invalid gProcess object forked by another thread

// before these are installed

int ret = pthread_atfork(ProcessState::onFork, ProcessState::parentPostFork,

ProcessState::childPostFork);

LOG_ALWAYS_FATAL_IF(ret != 0, "pthread_atfork error %s", strerror(ret));

std::lock_guard<std::mutex> l(gProcessMutex);

gProcess = sp<ProcessState>::make(driver);

});

// Some code omitted;

verifyNotForked(gProcess->mForked);

return gProcess;

}

#define BINDER_VM_SIZE ((1 * 1024 * 1024) - sysconf(_SC_PAGE_SIZE) * 2)

#define DEFAULT_MAX_BINDER_THREADS 15

ProcessState::ProcessState(const char* driver)

: mDriverName(String8(driver)),

mDriverFD(-1),

mVMStart(MAP_FAILED),

mMaxThreads(DEFAULT_MAX_BINDER_THREADS),

mThreadPoolSeq(1),

mCallRestriction(CallRestriction::NONE) {

base::unique_fd opened = open_driver(driver);

if (opened.ok()) {

mVMStart = mmap(nullptr, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE,

opened.get(), 0);

// Some code omitted;

}

// Some code omitted;

if (opened.ok()) {

mDriverFD = opened.release();

}

}

2. defaultServiceManager

This code is in frameworks/native/libs/binder/IServiceManager.cpp. It returns an IServiceManager instance, and this instance is also unique within a process (gDefaultServiceManager). A few details deserve a closer look:
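defaultServiceManager() combines two ideas: a once-only initialization and a retry loop that polls until the context object is available. A minimal sketch, assuming hypothetical FakeServiceManager and ServiceManagerCache types, with the lookup and the one-second sleep injected as callbacks so the loop can be exercised without a device:

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <mutex>
#include <utility>

struct FakeServiceManager {};  // hypothetical stand-in for IServiceManager

// Block until lookup() returns non-null, then cache the result for the
// lifetime of the object (compare gSmOnce / gDefaultServiceManager).
class ServiceManagerCache {
public:
    using Lookup = std::function<std::shared_ptr<FakeServiceManager>()>;
    using Sleep = std::function<void()>;

    ServiceManagerCache(Lookup lookup, Sleep sleepOneSecond)
        : lookup_(std::move(lookup)), sleep_(std::move(sleepOneSecond)) {}

    std::shared_ptr<FakeServiceManager> get() {
        std::call_once(once_, [this] {
            std::shared_ptr<FakeServiceManager> sm;
            while (sm == nullptr) {
                sm = lookup_();
                if (sm == nullptr) sleep_();  // the original logs and calls sleep(1)
            }
            cached_ = sm;
        });
        return cached_;
    }

private:
    Lookup lookup_;
    Sleep sleep_;
    std::once_flag once_;
    std::shared_ptr<FakeServiceManager> cached_;
};
```

Later calls to get() return the cached object without calling the lookup again, which is why every caller in a process shares one IServiceManager.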

using AidlServiceManager = android::os::IServiceManager;

static sp<IServiceManager> gDefaultServiceManager;

sp<IServiceManager> defaultServiceManager()

{

std::call_once(gSmOnce, []() {

#if defined(__BIONIC__) && !defined(__ANDROID_VNDK__)

/* wait for service manager */ {

// Some code omitted;

}

#endif

sp<AidlServiceManager> sm = nullptr;

while (sm == nullptr) {

★ sm = interface_cast<AidlServiceManager>(ProcessState::self()->getContextObject(nullptr));

if (sm == nullptr) {

ALOGE("Waiting 1s on context object on %s.", ProcessState::self()->getDriverName().c_str());

sleep(1);

}

}

gDefaultServiceManager = sp<ServiceManagerShim>::make(sm);

});

return gDefaultServiceManager;

}

2.1 getContextObject

Returns a BpBinder instance for handle 0, i.e. a proxy to the context manager (servicemanager);
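getStrongProxyForHandle() keeps one proxy per handle in a table that grows on demand, and reuses a cached proxy only while it is still alive. The sketch below models that with std::weak_ptr in place of attemptIncWeak(); FakeProxy and HandleTable are hypothetical, and the PING_TRANSACTION sent for handle 0 is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <mutex>
#include <vector>

// Hypothetical proxy type standing in for BpBinder.
struct FakeProxy {
    explicit FakeProxy(int32_t h) : handle(h) {}
    int32_t handle;
};

// Handle -> proxy cache that stores only weak references.
class HandleTable {
public:
    std::shared_ptr<FakeProxy> getStrongProxyForHandle(int32_t handle) {
        std::lock_guard<std::mutex> lock(mutex_);
        const auto index = static_cast<std::size_t>(handle);
        if (entries_.size() <= index) {
            entries_.resize(index + 1);  // like lookupHandleLocked(): add handle+1-N slots
        }
        std::weak_ptr<FakeProxy>& slot = entries_[index];
        if (auto existing = slot.lock()) {  // analogous to attemptIncWeak() succeeding
            return existing;
        }
        auto created = std::make_shared<FakeProxy>(handle);  // like creating a new BpBinder
        slot = created;
        return created;
    }
    std::size_t size() const { return entries_.size(); }

private:
    std::mutex mutex_;
    std::vector<std::weak_ptr<FakeProxy>> entries_;
};
```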

sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)

{

sp<IBinder> context = getStrongProxyForHandle(0);

// Some code omitted;

return context;

}

ProcessState::handle_entry* ProcessState::lookupHandleLocked(int32_t handle)

{

const size_t N=mHandleToObject.size();

if (N <= (size_t)handle) {

handle_entry e;

e.binder = nullptr;

e.refs = nullptr;

status_t err = mHandleToObject.insertAt(e, N, handle+1-N);

if (err < NO_ERROR) return nullptr;

}

return &mHandleToObject.editItemAt(handle);

}

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)

{

sp<IBinder> result;

std::unique_lock<std::mutex> _l(mLock);

// Some code omitted;

handle_entry* e = lookupHandleLocked(handle);

if (e != nullptr) {

IBinder* b = e->binder;

if (b == nullptr || !e->refs->attemptIncWeak(this)) {

if (handle == 0) {

☆ IPCThreadState* ipc = IPCThreadState::self();

CallRestriction originalCallRestriction = ipc->getCallRestriction();

ipc->setCallRestriction(CallRestriction::NONE);

Parcel data;

status_t status = ipc->transact(

0, IBinder::PING_TRANSACTION, data, nullptr, 0);

ipc->setCallRestriction(originalCallRestriction);

if (status == DEAD_OBJECT)

return nullptr;

}

sp<BpBinder> b = BpBinder::PrivateAccessor::create(handle);

e->binder = b.get();

if (b) e->refs = b->getWeakRefs();

result = b;

}

// Some code omitted;

}

return result;

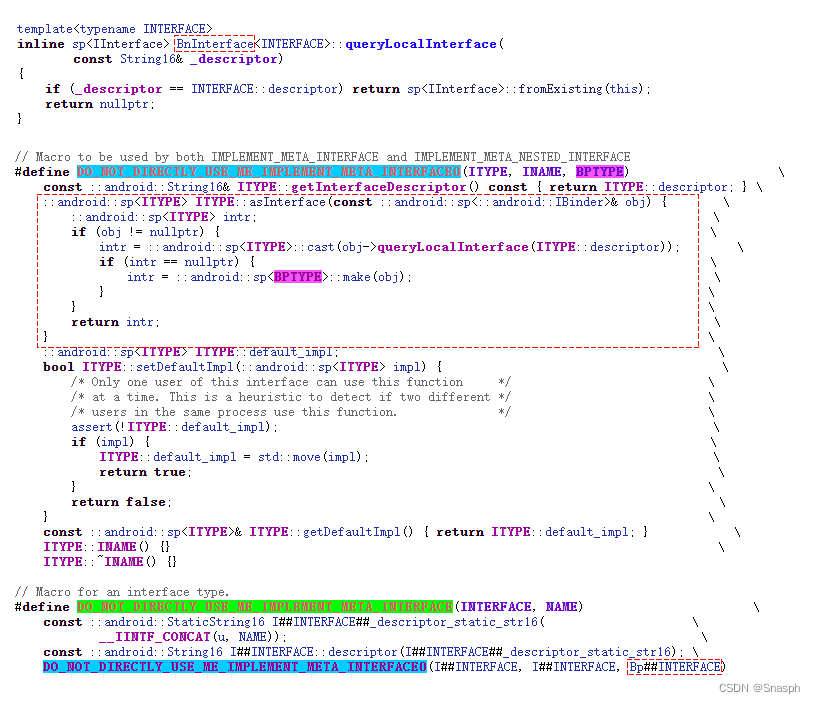

}

2.2 interface_cast

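If obj is a local object (a BBinder/BnInterface living in the same process) whose descriptor matches, interface_cast returns that object, which implements IServiceManager directly. Otherwise it wraps obj in the interface's proxy class (for AidlServiceManager, the AIDL-generated android::os::BpServiceManager around the BpBinder) without checking the interface descriptor, which means later calls may fail with BAD_TYPE: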
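The rule described in the comment below (return the local object when the descriptor matches, otherwise wrap the binder in a proxy without checking) can be sketched with simplified stand-in types. FakeBinder, IDemo, LocalDemo and ProxyDemo are hypothetical and are not the libbinder classes:

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct IDemo;

// Stand-in for IBinder: only local objects answer queryLocalInterface().
struct FakeBinder : std::enable_shared_from_this<FakeBinder> {
    virtual ~FakeBinder() = default;
    virtual std::shared_ptr<IDemo> queryLocalInterface(const std::string&) { return nullptr; }
};

struct IDemo {
    static constexpr const char* kDescriptor = "com.example.IDemo";  // hypothetical
    virtual ~IDemo() = default;
    virtual bool isProxy() const = 0;
    static std::shared_ptr<IDemo> asInterface(const std::shared_ptr<FakeBinder>& obj);
};

// Local implementation (plays the role of a Bn class).
struct LocalDemo : FakeBinder, IDemo {
    std::shared_ptr<IDemo> queryLocalInterface(const std::string& d) override {
        if (d != kDescriptor) return nullptr;
        return std::static_pointer_cast<LocalDemo>(shared_from_this());
    }
    bool isProxy() const override { return false; }
};

// Proxy that would forward calls through the binder (plays the role of a Bp class).
struct ProxyDemo : IDemo {
    explicit ProxyDemo(std::shared_ptr<FakeBinder> r) : remote(std::move(r)) {}
    bool isProxy() const override { return true; }
    std::shared_ptr<FakeBinder> remote;
};

std::shared_ptr<IDemo> IDemo::asInterface(const std::shared_ptr<FakeBinder>& obj) {
    if (!obj) return nullptr;
    if (auto local = obj->queryLocalInterface(kDescriptor)) return local;  // same process
    return std::make_shared<ProxyDemo>(obj);  // no descriptor check: later calls may fail
}
```

In the same process the caller talks to the object directly with no IPC; only when the binder is remote does every call go through the proxy.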

/**

* If this is a local object and the descriptor matches, this will return the

* actual local object which is implementing the interface. Otherwise, this will

* return a proxy to the interface without checking the interface descriptor.

* This means that subsequent calls may fail with BAD_TYPE.

*/

template<typename INTERFACE>

inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)

{

return INTERFACE::asInterface(obj);

}

3. startThreadPool

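Starts the binder threads. Binder threads are named binder:pid_callingCnt, i.e. the driver name without its "/dev/" prefix, the process ID, and a per-process sequence number printed in hexadecimal;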
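The thread-name format used by makeBinderThreadName() can be reproduced with std::snprintf. ThreadNamer is a hypothetical helper; std::atomic::fetch_add plays the role of android_atomic_add, which likewise returns the value before the increment:

```cpp
#include <atomic>
#include <cassert>
#include <cstdio>
#include <string>
#include <string_view>
#include <utility>

// Sketch of makeBinderThreadName(): "<driver without /dev/>:<pid>_<seq in hex>".
class ThreadNamer {
public:
    explicit ThreadNamer(std::string driver) : driver_(std::move(driver)) {}

    std::string next(int pid) {
        int seq = seq_.fetch_add(1);  // previous value, so the first name uses 1
        std::string_view name = driver_;
        constexpr std::string_view kPrefix = "/dev/";
        if (name.substr(0, kPrefix.size()) == kPrefix) name.remove_prefix(kPrefix.size());
        char buf[64];
        std::snprintf(buf, sizeof(buf), "%.*s:%d_%X",
                      static_cast<int>(name.size()), name.data(), pid, seq);
        return buf;
    }

private:
    std::string driver_;
    std::atomic<int> seq_{1};  // mThreadPoolSeq(1)
};
```

This is why the first pool thread of a process typically appears as binder:<pid>_1, and later threads continue in hexadecimal (..._A follows ..._9).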

void ProcessState::startThreadPool()

{

std::unique_lock<std::mutex> _l(mLock);

if (!mThreadPoolStarted) {

// Some code omitted;

mThreadPoolStarted = true;

spawnPooledThread(true);

}

}

void ProcessState::spawnPooledThread(bool isMain)

{

if (mThreadPoolStarted) {

// name: binder:pid_callingCnt;

String8 name = makeBinderThreadName();

ALOGV("Spawning new pooled thread, name=%s\n", name.c_str());

sp<Thread> t = sp<PoolThread>::make(isMain);

t->run(name.c_str());

pthread_mutex_lock(&mThreadCountLock);

mKernelStartedThreads++;

pthread_mutex_unlock(&mThreadCountLock);

}

// TODO: if startThreadPool is called on another thread after the process

// starts up, the kernel might think that it already requested those

// binder threads, and additional won't be started. This is likely to

// cause deadlocks, and it will also cause getThreadPoolMaxTotalThreadCount

// to return too high of a value.

}

String8 ProcessState::makeBinderThreadName() {

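// s is the sequence number of this call: android_atomic_add returns the previous value, so the first thread gets 1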

int32_t s = android_atomic_add(1, &mThreadPoolSeq);

pid_t pid = getpid();

std::string_view driverName = mDriverName.c_str();

android::base::ConsumePrefix(&driverName, "/dev/");

String8 name;

name.appendFormat("%.*s:%d_%X", static_cast<int>(driverName.length()), driverName.data(), pid, s);

return name;

}

4. joinThreadPool

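The main body executed by each binder thread:

- it reads from and writes to the driver node through ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr);

- it reads the next command returned by the driver and uses it to decide which action to take;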
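The overall shape of the joinThreadPool() loop (fetch a command, execute it, stop on a fatal driver error, and let non-main threads leave on a timeout) can be simulated with a scripted "driver". The Status values, DriverRead and runLooper() are hypothetical, and each simulated driver read carries exactly one command for simplicity:

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical status values for this sketch only (not libbinder's status_t codes).
enum Status : int32_t { kOk = 0, kTimedOut = -1, kConnRefused = -2, kBadFd = -3 };

// One simulated talkWithDriver() result: a status and the command it returned.
struct DriverRead {
    Status status;
    uint32_t cmd;
};

// Mirrors the shape of joinThreadPool(): loop until a fatal driver error,
// and let non-main threads leave the pool on a timeout.
std::vector<uint32_t> runLooper(std::deque<DriverRead> driver, bool isMain) {
    std::vector<uint32_t> executed;  // commands handed to "executeCommand"
    Status result = kOk;
    do {
        if (driver.empty()) {  // script exhausted: treat it like a closed fd
            result = kBadFd;
            break;
        }
        DriverRead r = driver.front();
        driver.pop_front();
        result = r.status;
        if (result == kOk) executed.push_back(r.cmd);
        if (result == kTimedOut && !isMain) break;
    } while (result != kConnRefused && result != kBadFd);
    return executed;  // the real code now writes BC_EXIT_LOOPER
}
```

The main thread survives a timeout and keeps serving, while a spawned worker leaves the pool at the same point.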

void IPCThreadState::joinThreadPool(bool isMain)

{

pthread_mutex_lock(&mProcess->mThreadCountLock);

mProcess->mCurrentThreads++;

pthread_mutex_unlock(&mProcess->mThreadCountLock);

mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER);

mIsLooper = true;

status_t result;

do {

processPendingDerefs();

// now get the next command to be processed, waiting if necessary

★ result = getAndExecuteCommand();

// Some code omitted

// Let this thread exit the thread pool if it is no longer

// needed and it is not the main process thread.

if(result == TIMED_OUT && !isMain) {

break;

}

} while (result != -ECONNREFUSED && result != -EBADF);

mOut.writeInt32(BC_EXIT_LOOPER);

mIsLooper = false;

talkWithDriver(false);

pthread_mutex_lock(&mProcess->mThreadCountLock);

mProcess->mCurrentThreads--;

pthread_mutex_unlock(&mProcess->mThreadCountLock);

}

status_t IPCThreadState::getAndExecuteCommand()

{

status_t result;

int32_t cmd;

result = talkWithDriver();

if (result >= NO_ERROR) {

size_t IN = mIn.dataAvail();

if (IN < sizeof(int32_t)) return result;

cmd = mIn.readInt32();

pthread_mutex_lock(&mProcess->mThreadCountLock);

mProcess->mExecutingThreadsCount++;

if (mProcess->mExecutingThreadsCount >= mProcess->mMaxThreads &&

mProcess->mStarvationStartTimeMs == 0) {

mProcess->mStarvationStartTimeMs = uptimeMillis();

}

pthread_mutex_unlock(&mProcess->mThreadCountLock);

result = executeCommand(cmd);

pthread_mutex_lock(&mProcess->mThreadCountLock);

mProcess->mExecutingThreadsCount--;

if (mProcess->mExecutingThreadsCount < mProcess->mMaxThreads &&

mProcess->mStarvationStartTimeMs != 0) {

int64_t starvationTimeMs = uptimeMillis() - mProcess->mStarvationStartTimeMs;

if (starvationTimeMs > 100) {

ALOGE("binder thread pool (%zu threads) starved for %" PRId64 " ms",

mProcess->mMaxThreads, starvationTimeMs);

}

mProcess->mStarvationStartTimeMs = 0;

}

// Cond broadcast can be expensive, so don't send it every time a binder

// call is processed. b/168806193

if (mProcess->mWaitingForThreads > 0) {

pthread_cond_broadcast(&mProcess->mThreadCountDecrement);

}

pthread_mutex_unlock(&mProcess->mThreadCountLock);

}

return result;

}

The implementations of talkWithDriver and executeCommand(cmd) follow. cmd is a BR_* command returned by the driver, such as BR_TRANSACTION, BR_DEAD_BINDER or BR_FINISHED.
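The write_consumed/read_consumed bookkeeping in talkWithDriver() can be illustrated with a fake ioctl that consumes the whole write buffer and hands back some pending bytes. FakeBinderWriteRead mirrors the field layout of struct binder_write_read; fakeIoctl() and talkSketch() are hypothetical, and the sketch simply leaves a partially consumed write buffer in place, whereas IPCThreadState handles that case in code omitted from the excerpt.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Same field layout as binder_write_read with 64-bit binder_size_t/binder_uintptr_t.
struct FakeBinderWriteRead {
    uint64_t write_size, write_consumed, write_buffer;
    uint64_t read_size, read_consumed, read_buffer;
};

// Hypothetical driver: consume all written bytes, copy pending bytes into the read buffer.
int fakeIoctl(FakeBinderWriteRead& bwr, const std::vector<uint8_t>& pending) {
    bwr.write_consumed = bwr.write_size;
    std::size_t n = std::min<std::size_t>(pending.size(), static_cast<std::size_t>(bwr.read_size));
    if (n > 0) {
        std::memcpy(reinterpret_cast<void*>(static_cast<std::uintptr_t>(bwr.read_buffer)),
                    pending.data(), n);
    }
    bwr.read_consumed = n;
    return 0;
}

// Caller side, mirroring what talkWithDriver() does around the ioctl.
void talkSketch(std::vector<uint8_t>& out, std::vector<uint8_t>& in,
                const std::vector<uint8_t>& pending) {
    in.assign(256, 0);
    FakeBinderWriteRead bwr{};
    bwr.write_size = out.size();
    bwr.write_buffer = reinterpret_cast<std::uintptr_t>(out.data());
    bwr.read_size = in.size();
    bwr.read_buffer = reinterpret_cast<std::uintptr_t>(in.data());
    if (fakeIoctl(bwr, pending) < 0) return;
    if (bwr.write_consumed > 0 && bwr.write_consumed == out.size()) {
        out.clear();  // like mOut.setDataSize(0)
    }
    in.resize(static_cast<std::size_t>(bwr.read_consumed));  // like mIn.setDataSize(read_consumed)
}
```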

struct binder_write_read {

binder_size_t write_size; /* bytes to write */

binder_size_t write_consumed; /* bytes consumed by driver */

binder_uintptr_t write_buffer;

binder_size_t read_size; /* bytes to read */

binder_size_t read_consumed; /* bytes consumed by driver */

binder_uintptr_t read_buffer;

};

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

// Some code omitted; see frameworks/native/libs/binder/IPCThreadState.cpp

binder_write_read bwr;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

#if defined(__ANDROID__)

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

if (mProcess->mDriverFD < 0) {

err = -EBADF;

}

} while (err == -EINTR);

if (err >= NO_ERROR) {

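if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize()) {

// Some code omitted: the driver did not consume the whole write buffer;

} else {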

mOut.setDataSize(0);

processPostWriteDerefs();

}

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

return NO_ERROR;

}

return err;

}

status_t IPCThreadState::executeCommand(int32_t cmd)

{

BBinder* obj;

RefBase::weakref_type* refs;

status_t result = NO_ERROR;

switch ((uint32_t)cmd) {

/**

* Case bodies omitted; see frameworks/native/libs/binder/IPCThreadState.cpp

* For the data types involved, see external/kernel-headers/original/uapi/linux/android/binder.h

*/

case BR_ACQUIRE:

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION:

case BR_DEAD_BINDER:

case BR_CLEAR_DEATH_NOTIFICATION_DONE:

case BR_FINISHED:

default:

break;

}

if (result != NO_ERROR) {

mLastError = result;

}

return result;

}

B. A brief introduction to how user-space ioctl interacts with the kernel

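The AOSP kernel's drivers/android/binder.c initializes the binder devices and binds their file operations. As the kernel code below shows, an ioctl issued from user space on a binder device ends up in binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg).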
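The file_operations table is essentially a set of function pointers that the VFS calls on the driver's behalf. Here is a hypothetical user-space model of that dispatch, written in C++ rather than kernel C. FakeFile, FakeFileOperations and fakeVfsIoctl() are made-up names, and the real VFS path does considerably more. As in binder_fops, both entries point to one handler:

```cpp
#include <cassert>
#include <cerrno>
#include <string>

struct FakeFile;

// Only the two ioctl entry points of file_operations are modelled.
struct FakeFileOperations {
    long (*unlocked_ioctl)(FakeFile*, unsigned int, unsigned long);
    long (*compat_ioctl)(FakeFile*, unsigned int, unsigned long);
};

struct FakeFile {
    const FakeFileOperations* f_op;
    std::string lastCall;  // records which handler ran, for the demo
};

long demoIoctl(FakeFile* f, unsigned int cmd, unsigned long arg) {
    f->lastCall = "demo_ioctl(" + std::to_string(cmd) + "," + std::to_string(arg) + ")";
    return 0;
}

// Like binder_fops, both entry points share a single handler.
const FakeFileOperations kDemoFops = {demoIoctl, demoIoctl};

// Stand-in for the VFS: 32-bit callers on a 64-bit kernel use compat_ioctl,
// everyone else uses unlocked_ioctl.
long fakeVfsIoctl(FakeFile* f, unsigned int cmd, unsigned long arg, bool compatCaller) {
    auto handler = compatCaller ? f->f_op->compat_ioctl : f->f_op->unlocked_ioctl;
    if (handler == nullptr) return -ENOTTY;
    return handler(f, cmd, arg);
}
```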

const struct file_operations binder_fops = {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.compat_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

static int __init init_binder_device(const char *name)

{

int ret;

struct binder_device *binder_device;

binder_device = kzalloc(sizeof(*binder_device), GFP_KERNEL);

if (!binder_device)

return -ENOMEM;

binder_device->miscdev.fops = &binder_fops;

// Some code omitted;

ret = misc_register(&binder_device->miscdev);

if (ret < 0) {

kfree(binder_device);

return ret;

}

hlist_add_head(&binder_device->hlist, &binder_devices);

return ret;

}

static int __init binder_init(void) {

// Some code omitted;

while ((device_name = strsep(&device_tmp, ","))) {

ret = init_binder_device(device_name);

if (ret)

goto err_init_binder_device_failed;

}

}

device_initcall(binder_init);

1. The ioctl entries of struct file_operations

// Source: include/linux/fs.h (abridged)

struct file_operations {

struct module *owner;

__poll_t (*poll) (struct file *, struct poll_table_struct *);

long (*unlocked_ioctl) (struct file *, unsigned int, unsigned long);

long (*compat_ioctl) (struct file *, unsigned int, unsigned long);

int (*mmap) (struct file *, struct vm_area_struct *);

int (*open) (struct inode *, struct file *);

int (*flush) (struct file *, fl_owner_t id);

int (*release) (struct inode *, struct file *);

} __randomize_layout;

In the Linux kernel, when a user-space application calls ioctl, whether the kernel ends up invoking unlocked_ioctl or compat_ioctl from the file_operations structure depends on two factors:

① whether the kernel is 32-bit or 64-bit;

② whether the calling application is 32-bit or 64-bit.

unlocked_ioctl:

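unlocked_ioctl is the standard handler for the ioctl system call in the Linux kernel. It handles calls whose caller has the same word size as the kernel: on a 64-bit kernel, ioctl calls from 64-bit applications go to unlocked_ioctl, and on a 32-bit kernel every ioctl call does.

The "unlocked" in unlocked_ioctl means the function is called without holding the Big Kernel Lock (BKL), which was once used to prevent concurrent access to critical sections of the kernel. For scalability and performance reasons, modern Linux kernels no longer use the BKL.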

compat_ioctl:

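compat_ioctl provides compatibility for 32-bit applications running on a 64-bit kernel. It lets the kernel handle their ioctl calls correctly, because data-structure alignment and the sizes of some types (such as long and pointers) can differ between 32-bit and 64-bit environments. In short, when a 32-bit application issues an ioctl call on a 64-bit system, compat_ioctl handles it. Binder can point both entries at binder_ioctl because its UAPI structures use fixed-width types such as binder_size_t and binder_uintptr_t (see struct binder_write_read above), so their layout does not depend on the caller's word size.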
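The layout question can be checked with sizeof and offsetof. The fixed-width struct below has the same shape as binder_write_read with 64-bit binder_size_t/binder_uintptr_t, so 32-bit and 64-bit callers agree on it. NaiveWriteRead is a hypothetical counter-example built from long and pointers, and its size depends on the ABI.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Fixed-width layout: identical for 32-bit and 64-bit user space.
struct PortableWriteRead {
    uint64_t write_size;
    uint64_t write_consumed;
    uint64_t write_buffer;
    uint64_t read_size;
    uint64_t read_consumed;
    uint64_t read_buffer;
};
static_assert(sizeof(PortableWriteRead) == 48, "same size for every ABI");
static_assert(offsetof(PortableWriteRead, read_buffer) == 40, "same offsets for every ABI");

// Hypothetical "naive" layout using long and pointers: 24 bytes on ILP32,
// 48 bytes on LP64, so a 64-bit kernel would need compat translation for it.
struct NaiveWriteRead {
    unsigned long write_size;
    unsigned long write_consumed;
    void* write_buffer;
    unsigned long read_size;
    unsigned long read_consumed;
    void* read_buffer;
};

constexpr std::size_t naiveSize() { return sizeof(NaiveWriteRead); }
```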

C. Example

1. Creating a service instance in the native layer

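The native-layer service is registered with the system through ::android::hardware::details::registerAsServiceInternal() (implemented in libhidl's ServiceManagement.cpp):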
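At its core, registration maps a fully qualified interface name plus an instance name to a binder object that clients can later look up. The sketch below is a hypothetical in-process registry, not hwservicemanager's actual implementation. FakeService and FakeServiceRegistry are made-up names, and VINTF checks and cross-process transport are left out:

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <mutex>
#include <string>
#include <utility>

struct FakeService { virtual ~FakeService() = default; };

// Services are keyed by "<fqName>/<instance>", e.g. "android.hardware.demo@1.0::IDemo/default".
class FakeServiceRegistry {
public:
    bool add(const std::string& fqName, const std::string& instance,
             std::shared_ptr<FakeService> service) {
        if (!service) return false;
        std::lock_guard<std::mutex> lock(mutex_);
        services_[fqName + "/" + instance] = std::move(service);  // in this sketch, re-registration replaces
        return true;
    }

    std::shared_ptr<FakeService> get(const std::string& fqName, const std::string& instance) {
        std::lock_guard<std::mutex> lock(mutex_);
        auto it = services_.find(fqName + "/" + instance);
        return it == services_.end() ? nullptr : it->second;
    }

private:
    std::mutex mutex_;
    std::map<std::string, std::shared_ptr<FakeService>> services_;
};
```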

int main(int /* argc */, char* /* argv */[]) {

sp<IDemo> demo= new Demo();

configureRpcThreadpool(1, true /* will join */);

if (demo->registerAsService() != OK) {

ALOGE("Could not register demo service.");

return 1;

}

joinRpcThreadpool();

ALOGE("Service exited!");

return 1;

}

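// Registers the service with the service manager. On Treblized devices, the service must also be listed in the VINTF manifest.

// Generated code under out/soong/.intermediates/hardware/interfaces/demo/../

::android::status_t IDemo::registerAsService(const std::string &serviceName/* = "default"*/) {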

return ::android::hardware::details::registerAsServiceInternal(this, serviceName);

}

2. Defining an interface in an AIDL file

interface IDemo {

boolean getRecordAudio(int cameraID);

}

3. The relevant code in the generated .class file (decompiled)
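The generated proxy method follows a marshal, transact, unmarshal pattern, and the server-side stub must read the bytes back in the same order. Here is a minimal C++ sketch of both ends. It assumes a hypothetical MiniParcel (the real Parcel::writeInterfaceToken also writes additional header fields before the descriptor) and hypothetical service logic inside onTransact():

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical minimal parcel: a byte buffer with sequential reads.
class MiniParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof v); }
    void writeString(const std::string& s) {
        writeInt32(static_cast<int32_t>(s.size()));
        append(s.data(), s.size());
    }
    int32_t readInt32() {
        int32_t v = 0;
        take(&v, sizeof v);
        return v;
    }
    std::string readString() {
        int32_t n = readInt32();
        if (n < 0) throw std::out_of_range("negative string length");
        std::string s(static_cast<std::size_t>(n), '\0');
        take(&s[0], s.size());
        return s;
    }

private:
    void append(const void* p, std::size_t n) {
        const auto* b = static_cast<const uint8_t*>(p);
        data_.insert(data_.end(), b, b + n);
    }
    void take(void* p, std::size_t n) {
        if (n == 0) return;
        if (pos_ + n > data_.size()) throw std::out_of_range("parcel underflow");
        std::memcpy(p, data_.data() + pos_, n);
        pos_ += n;
    }
    std::vector<uint8_t> data_;
    std::size_t pos_ = 0;
};

constexpr const char* kDescriptor = "com.example.IDemo";  // as in the decompiled proxy
constexpr uint32_t kGetRecordAudio = 15;                   // transaction code used there

// Client side: what the proxy's getRecordAudio() writes (simplified token).
MiniParcel marshalGetRecordAudio(int32_t cameraId) {
    MiniParcel data;
    data.writeString(kDescriptor);
    data.writeInt32(cameraId);
    return data;
}

// Server side: what a stub's onTransact() would do with the same bytes.
bool onTransact(uint32_t code, MiniParcel& data, MiniParcel& reply) {
    if (code != kGetRecordAudio) return false;
    if (data.readString() != kDescriptor) return false;  // interface check
    int32_t cameraId = data.readInt32();
    bool result = (cameraId == 0);                        // hypothetical service logic
    reply.writeInt32(0);                                  // "no exception" marker
    reply.writeInt32(result ? 1 : 0);
    return true;
}
```

On the client, _reply.readException() and _reply.readInt() consume the two values the stub wrote, in the same order.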

public boolean getRecordAudio(int cameraID) throws RemoteException {

Parcel _data = Parcel.obtain();

Parcel _reply = Parcel.obtain();

boolean _result;

try {

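// Write the RPC header: the interface descriptor string;

_data.writeInterfaceToken("com.example.IDemo");

// Write the input arguments

_data.writeInt(cameraID);

// ★ Start the transaction: flags=0 means a synchronous call, flags=1 (IBinder.FLAG_ONEWAY) means a one-way asynchronous call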

boolean _status = this.mRemote.transact(15, _data, _reply, 0);

if (!_status && IDemo.Stub.getDefaultImpl() != null) {

boolean var6 = IDemo.Stub.getDefaultImpl().getRecordAudio(cameraID);

return var6;

}

_reply.readException();

_result = 0 != _reply.readInt();

} finally {

_reply.recycle();

_data.recycle();

}

return _result;

}

3.1 The mRemote field

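From the .class file we can see that mRemote is the IBinder that asInterface(IBinder) stores in the Proxy after the app has obtained the service from ServiceManager. For a service in another process, this object is an android.os.BinderProxy backed by a native BpBinder.

getService() itself first reaches ServiceManager through ProcessState::getContextObject() (handle 0); the binder it returns for the requested service is a proxy that refers to an object living in a different process.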

// App-side code

IBinder binder = ServiceManager.getService("android.hardware.demo@1.0::IDemo");

IDemo mDvrService = IDemo.Stub.asInterface(binder);

// IDemo.class

public static IDemo asInterface(IBinder obj) {

if (obj == null) {

return null;

} else {

IInterface iin = obj.queryLocalInterface("android.hardware.demo.IDemo");

return (IDemo)(iin != null && iin instanceof IDemo? (IDemo)iin : new Proxy(obj));

}

}

3.2 this.mRemote.transact(15, _data, _reply, 0);

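As 3.1 shows, mRemote is backed by a BpBinder (the Java BinderProxy forwards transact() to native code through JNI), so this call ends up in BpBinder::transact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags):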
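The comment "Once a binder has died, it will never come back to life" in BpBinder::transact() boils down to a one-way latch on mAlive. A minimal sketch with a hypothetical ProxySketch class, where the lower layer (IPCThreadState::transact in the real code) is injected as a callback:

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <functional>
#include <utility>

constexpr int32_t kNoError = 0;
constexpr int32_t kDeadObject = -EPIPE;  // libutils defines DEAD_OBJECT as -EPIPE

class ProxySketch {
public:
    explicit ProxySketch(std::function<int32_t(uint32_t)> sendToDriver)
        : send_(std::move(sendToDriver)) {}

    int32_t transact(uint32_t code) {
        if (!alive_) return kDeadObject;  // fail fast, never touch the driver again
        int32_t status = send_(code);     // IPCThreadState::self()->transact(...) in the real code
        if (status == kDeadObject) alive_ = false;
        return status;
    }
    bool alive() const { return alive_; }

private:
    std::function<int32_t(uint32_t)> send_;
    bool alive_ = true;  // the real mAlive is atomic
};
```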

// Source: frameworks/native/libs/binder/BpBinder.cpp

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Some code omitted;

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status;

if (isRpcBinder()) [[unlikely]] {

status = rpcSession()->transact(sp<IBinder>::fromExisting(this), code, data, reply,

flags);

} else {

if constexpr (!kEnableKernelIpc) {

LOG_ALWAYS_FATAL("Binder kernel driver disabled at build time");

return INVALID_OPERATION;

}

// ☆

status = IPCThreadState::self()->transact(binderHandle(), code, data, reply, flags);

}

if (data.dataSize() > LOG_TRANSACTIONS_OVER_SIZE) {

RpcMutexUniqueLock _l(mLock);

ALOGW("Large outgoing transaction of %zu bytes, interface descriptor %s, code %d",

data.dataSize(), String8(mDescriptorCache).c_str(), code);

}

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

As the code above shows, for a kernel binder the call is forwarded to IPCThreadState::transact():

// Source: frameworks/native/libs/binder/IPCThreadState.cpp

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

// Some code omitted;

flags |= TF_ACCEPT_FDS;

// ★

status_t err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);

if (err != NO_ERROR) {

if (reply) reply->setError(err);

return (mLastError = err);

}

// ☆ waitForResponse

if ((flags & TF_ONE_WAY) == 0) {

if (reply) {

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

} else {

err = waitForResponse(nullptr, nullptr);

}

return err;

}

Next, writeTransactionData() writes the transaction into the mOut Parcel.
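writeTransactionData() does not copy the payload into mOut. binder_transaction_data carries the size and address of the Parcel's data (tr.data.ptr.buffer), and only the command word plus that fixed-size struct are appended to mOut. The sketch below shows the append-and-parse round trip. It uses a hypothetical, reduced MiniTransactionData and a placeholder command value of 1 instead of the real BC_TRANSACTION code:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical subset of binder_transaction_data (the real struct also has the
// target union, cookie, sender fields and the buffer/offsets pointers).
struct MiniTransactionData {
    uint32_t handle;
    uint32_t code;
    uint32_t flags;
    uint64_t data_size;
    uint64_t offsets_size;
};

// Mirrors the last two writes of writeTransactionData():
// mOut.writeInt32(cmd); mOut.write(&tr, sizeof(tr));
void writeTransaction(std::vector<uint8_t>& out, uint32_t cmd, const MiniTransactionData& tr) {
    auto put = [&out](const void* p, std::size_t n) {
        const auto* b = static_cast<const uint8_t*>(p);
        out.insert(out.end(), b, b + n);
    };
    put(&cmd, sizeof cmd);
    put(&tr, sizeof tr);
}

// Reader's view: the command word, then the fixed-size struct right after it.
bool readTransaction(const std::vector<uint8_t>& in, uint32_t& cmd, MiniTransactionData& tr) {
    if (in.size() < sizeof cmd + sizeof tr) return false;
    std::memcpy(&cmd, in.data(), sizeof cmd);
    std::memcpy(&tr, in.data() + sizeof cmd, sizeof tr);
    return true;
}
```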

// Source: frameworks/native/libs/binder/IPCThreadState.cpp

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

tr.data.ptr.offsets = data.ipcObjects();

}

// Some code omitted;

mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

After that, IPCThreadState::waitForResponse() and talkWithDriver() exchange data with the driver through the kernel's binder_ioctl:
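The switch in waitForResponse() decides when a call is finished. A one-way call is complete at BR_TRANSACTION_COMPLETE, while a synchronous call keeps reading until BR_REPLY or an error reply arrives. The sketch below models that decision logic. A deque stands in for the commands read by talkWithDriver(), and the Br/Result enums are made-up stand-ins for the real BR_* and status_t values:

```cpp
#include <cassert>
#include <deque>

enum class Br { TransactionComplete, Reply, DeadReply, FailedReply, Noop };
enum class Result { Ok, DeadObject, FailedTransaction, NoMoreData };

Result waitForResponseSketch(std::deque<Br>& incoming, bool expectReply) {
    while (!incoming.empty()) {  // each pop stands in for talkWithDriver() + readInt32()
        Br cmd = incoming.front();
        incoming.pop_front();
        switch (cmd) {
            case Br::TransactionComplete:
                if (!expectReply) return Result::Ok;  // one-way call is done here
                break;
            case Br::Reply:
                return Result::Ok;                    // the real code fills the reply Parcel
            case Br::DeadReply:
                return Result::DeadObject;
            case Br::FailedReply:
                return Result::FailedTransaction;
            case Br::Noop:
                break;                                // the real code hands this to executeCommand()
        }
    }
    return Result::NoMoreData;  // the real loop would block in the driver instead
}
```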

// Source: frameworks/native/libs/binder/IPCThreadState.cpp

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

switch (cmd) {

// Details omitted; in AOSP, the cases that complete the call leave the loop with goto finish;

case BR_ONEWAY_SPAM_SUSPECT:

case BR_TRANSACTION_COMPLETE:

case BR_TRANSACTION_PENDING_FROZEN:

case BR_DEAD_REPLY:

case BR_FAILED_REPLY:

case BR_FROZEN_REPLY:

case BR_ACQUIRE_RESULT:

case BR_REPLY:

default:

break;

}

}

return err;

}
