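The simplest way to build a multitasking embedded system is to make the application tasks independent of one another. Most embedded systems today use an operating system, and the more advanced ones rely on a hardware memory management unit (MMU). One of the key services an MMU provides is managing tasks as independent programs, each running in its own private memory space. Under such an operating system, a task does not need to know the memory requirements of unrelated tasks.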

Chapter 13 introduced the MPU. With an MPU, two programs that were compiled and linked to overlapping addresses cannot be in memory and run at the same time.

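The MMU simplifies the programming of application tasks because it provides the resources needed to enable virtual memory, an additional memory space that is independent of the physical memory attached to the system. The MMU acts as a translator: it converts the addresses of programs and data that are compiled to run in virtual memory into the actual physical addresses where they are stored. This allows programs that run at the same virtual address to be held at different locations in physical memory.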

This gives rise to two kinds of address:

1. Virtual address: assigned by the compiler and linker when locating a program in memory.

2. Physical address: used to access the actual hardware components of main memory where the programs are physically located.

ARM provides several processors with built-in MMU hardware that efficiently support multitasking environments using virtual memory.

This chapter covers the following:

| Topic | Description |
|---|---|
| Relocation register | Holds the conversion data used to translate a virtual memory address to a physical memory address |
| Translation Lookaside Buffer (TLB) | A cache of recent address relocations |
| How to configure the behavior of the relocation registers | Using pages and page tables |
| How to create regions | By configuring blocks of pages in virtual memory |
| Details of configuring the MMU hardware | Page tables, the Translation Lookaside Buffer (TLB), access permission, caches and write buffer, the CP15:c1 control register, and the Fast Context Switch Extension (FCSE) |
| Demonstration software | A working example showing how to set up an embedded system that uses virtual memory. The core topics are configuring the MMU to translate virtual addresses to physical addresses, and switching between tasks. |

14.1 Moving from an MPU to an MMU

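In an MPU, regions have two states: active and dormant. An active region contains code or data currently in use by the system. A dormant region contains code or data that is not currently in use but is likely to become active within a short time.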

The main difference between an MPU and an MMU is that the MMU adds hardware support for virtual memory. The MMU also increases the number of available regions.

14.2 How virtual memory works

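With an MMU, tasks can run even if they were compiled and linked to run in regions with overlapping addresses; with an MPU they cannot. Because the MMU supports virtual memory, the system can have multiple virtual memory maps and a single physical memory map.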

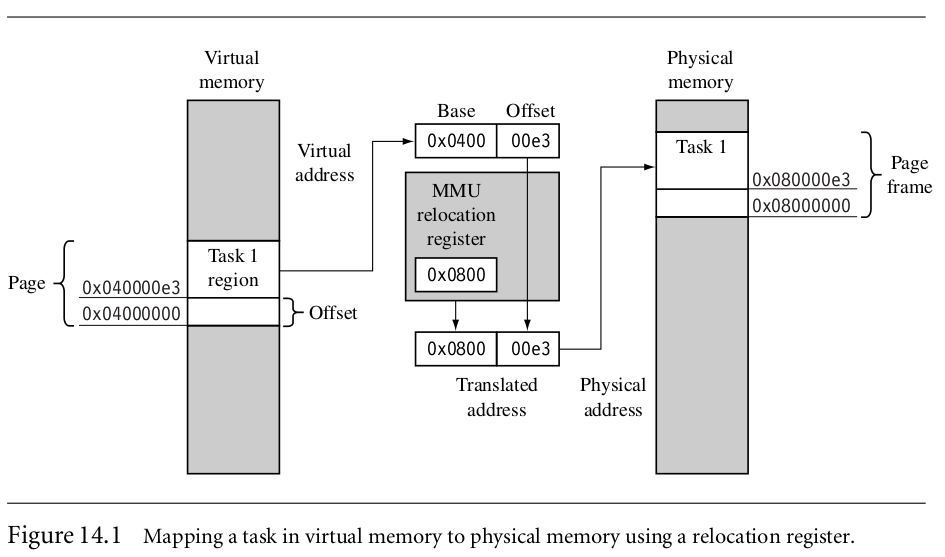

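To give each task its own private virtual memory map, the MMU hardware performs address relocation: it translates the memory address output by the processor core before main memory is accessed. The easiest way to understand the translation process is to imagine a relocation register located in the MMU between the core and main memory.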

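As the figure shows, when the processor generates a virtual address, the MMU replaces the upper bits of the virtual address with the contents of the relocation register to create the physical address. The lower part of the virtual address is an offset that carries over unchanged into the physical address. The size of the area that one relocation register can translate is limited by the maximum size of this offset portion.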

The figure also shows how two tasks that use the same virtual address are translated to different physical addresses.

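Task 1 and task 2 both use virtual address 0x040000e3. Replacing the upper bits with task 1's relocation register value gives 0x080000e3; doing the same with task 2's value gives 0x000100e3, so the two tasks end up at different physical addresses. Each physical start address is a multiple of 0x10000 (64 KB); for example, task 1's physical area starts at 0x08000000.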
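As a minimal sketch of the relocation-register model (an illustration, not real MMU code), the C below assumes a 32-bit address with a 16-bit offset, so each page is 64 KB. The names `relocate`, `OFFSET_BITS`, and `OFFSET_MASK` are hypothetical. Plugging in the two tasks' relocation values from the example reproduces their different physical addresses.

```c
#include <stdint.h>

/* Hypothetical model of one relocation register: the upper 16 bits of an
 * address are the page number, the lower 16 bits the offset (64 KB pages). */
#define OFFSET_BITS 16u
#define OFFSET_MASK ((1u << OFFSET_BITS) - 1u)

/* Replace the upper bits of the virtual address with the relocation
 * register contents; the offset passes through unchanged. */
uint32_t relocate(uint32_t virtualAddr, uint16_t relocationReg)
{
    return ((uint32_t)relocationReg << OFFSET_BITS) | (virtualAddr & OFFSET_MASK);
}
```

With `relocate(0x040000e3, 0x0800)` the result is 0x080000e3 (task 1), and with `relocate(0x040000e3, 0x0001)` it is 0x000100e3 (task 2).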

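A single relocation register can translate only a single area of memory. That area of virtual memory is called a page, and the area of physical memory it is translated to is called a page frame.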

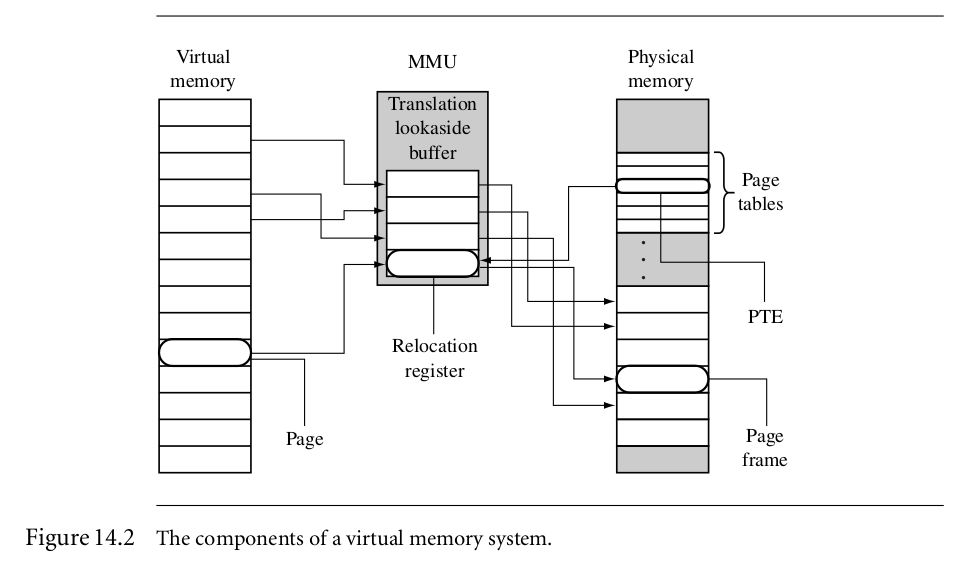

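The figure shows the relationship between pages, the MMU, and page frames. The ARM MMU hardware needs many relocation registers to support translation. The set of relocation registers that temporarily holds translation values in an ARM MMU is really a cache of 64 relocation registers, known as the Translation Lookaside Buffer (TLB). The TLB caches the translations of recently accessed pages.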

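The MMU uses tables in main memory to store the data that describes the virtual memory maps. These tables are called page tables. Each page table entry (PTE) holds all the information needed to translate a page in virtual memory to a page frame in physical memory.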

A PTE contains the following information:

| PTE Contents |
|---|
| The physical base address |
| The page's access permission |
| The cache and write buffer configuration for the page |

In an MMU, regions are created in software by grouping blocks of virtual pages in memory.

14.2.1 Defining regions using pages

In the MPU, regions are a hardware component. In the MMU, regions are defined as groups of page tables and are controlled completely in software as sequential pages in virtual memory.

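The location and size of each region can be stored in a software data structure, while the actual translation data and attribute information are stored in the page tables.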

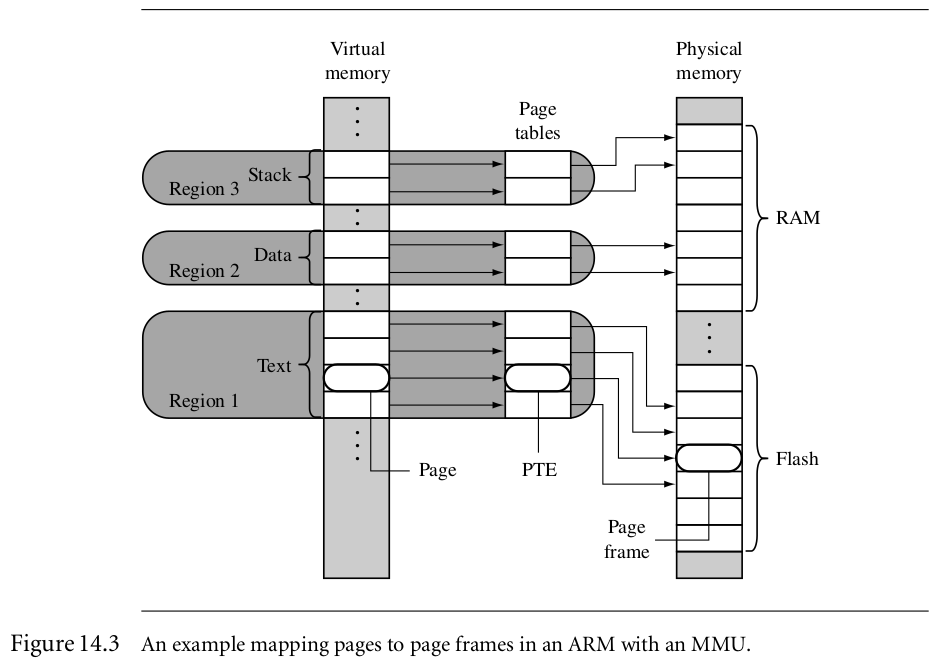

The figure shows an example of a single task that has three regions.

Locating the code in flash memory is a typical way for an operating system to support sharing code between tasks.

With the exception of the master level 1 (L1) page table, every page table represents a 1 MB area of virtual memory. A region larger than 1 MB must therefore use a list of page tables.

14.2.2 Multitasking and the MMU

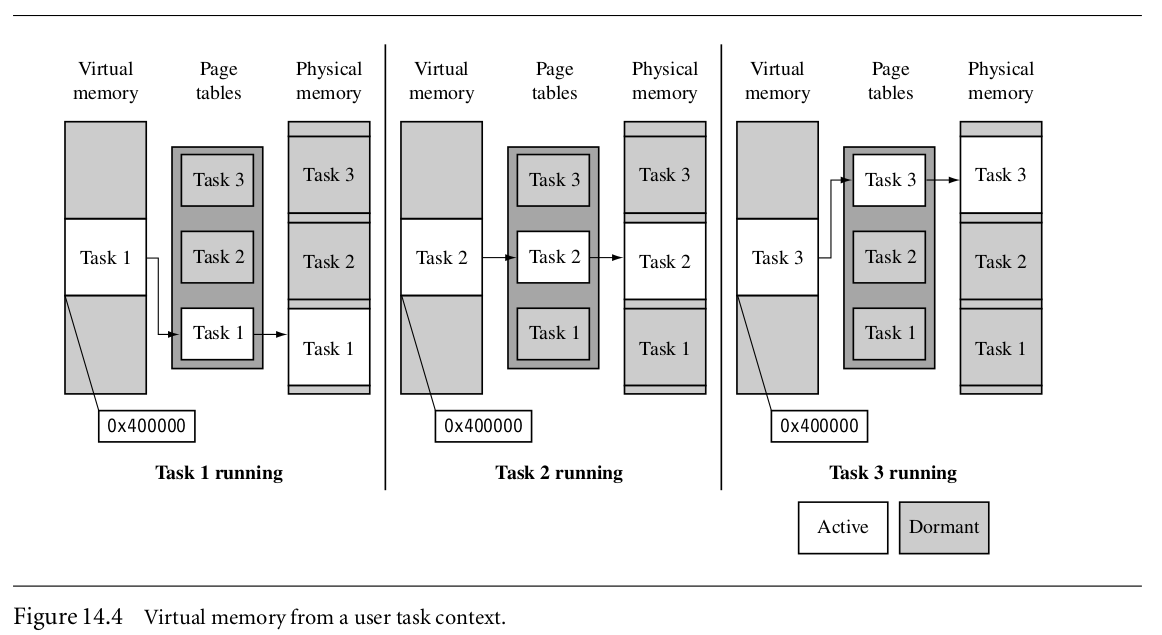

Page tables can reside in memory and not be mapped to MMU hardware. One way to build a multitasking system is to create separate sets of page tables, each mapping a unique virtual memory space for a task. To activate a task, the set of page tables for the specific task and its virtual memory space are mapped into use by the MMU. The other sets of inactive page tables represent dormant tasks. This approach allows all tasks to remain resident in physical memory and still be available immediately when a context switch occurs to activate it.

In the figure, three tasks each have their own set of page tables, and all of them run at the same virtual address, 0x04000000.

A dormant task's page tables cannot be accessed by the running task. This fully protects dormant tasks, because there is no mapping route from virtual memory to a dormant task.

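When page tables are switched between active and dormant, the caches that hold the previous task's data must be cleaned and flushed. The TLB must also be flushed, because it caches the old translation data.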

During a context switch, the page table data is not moved in physical memory; only the pointer to the page tables changes.

To switch between tasks requires the following steps:

1. Save the active task context and place the task in a dormant state.

2. Flush the caches; possibly clean the D-cache if using a writeback policy.

3. Flush the TLB to remove translations for the retiring task.

4. Configure the MMU to use new page tables translating the virtual memory execution area to the awakening task’s location in physical memory.

5. Restore the context of the awakening task.

6. Resume execution of the restored task.
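The six steps can be modeled in software to make their order explicit. This is a hypothetical sketch: `MmuModel`, `Task`, and `contextSwitch` are illustrative names, and the caches and TLB are reduced to flags instead of being driven through CP15 as real code would. Step 4 only swaps a pointer; the page tables themselves never move.

```c
#include <stdint.h>
#include <stdbool.h>
#include <string.h>

#define TLB_LINES 64

/* Hypothetical software model of the MMU state touched by a context switch. */
typedef struct {
    const uint32_t *ttb;          /* pointer to the active L1 page table */
    bool tlbValid[TLB_LINES];     /* valid flag for each TLB line */
    bool dcacheDirty;             /* writeback D-cache holds dirty lines */
    bool cacheValid;              /* caches hold (virtually tagged) data */
} MmuModel;

typedef struct {
    uint32_t regs[16];            /* saved register context */
    const uint32_t *pageTable;    /* this task's L1 page table; it stays in place */
} Task;

/* 'cpuRegs' stands in for the core's register file. */
void contextSwitch(MmuModel *mmu, uint32_t cpuRegs[16], Task *retiring,
                   const Task *awakening, bool writeback)
{
    memcpy(retiring->regs, cpuRegs, sizeof retiring->regs);    /* 1. save context */
    if (writeback)
        mmu->dcacheDirty = false;                               /* 2. clean D-cache */
    mmu->cacheValid = false;                                    /* 2. flush caches */
    memset(mmu->tlbValid, 0, sizeof mmu->tlbValid);             /* 3. flush TLB */
    mmu->ttb = awakening->pageTable;                            /* 4. new page tables */
    memcpy(cpuRegs, awakening->regs, sizeof awakening->regs);   /* 5. restore context */
    /* 6. on real hardware, execution resumes in the restored task here */
}
```

With a writethrough data cache, `writeback` would be false and the clean in step 2 disappears, which is the performance argument made below.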

By configuring the data cache to use a writethrough policy, there is no need to clean the data cache during a context switch, which will provide better context switch performance.

If, after some performance analysis, the efficiency of a writethrough system is not adequate, then performance can be improved using a writeback cache. If you are using a disk drive or other very slow secondary storage, a writeback policy is almost mandatory.

This argument applies only to ARM cores that use logical caches (for the difference between logical and physical caches, see Section 3.1 of the Chapter 12 caches notes: http://blog.csdn.net/feather_wch/article/details/50560647#t5). If a physical cache is present, as in the ARM11 family, the information in the cache remains valid when the MMU changes its virtual memory map. Using a physical cache eliminates the need to perform cache management activities when changing virtual memory addresses.

14.2.3 Memory organization in a Virtual Memory System

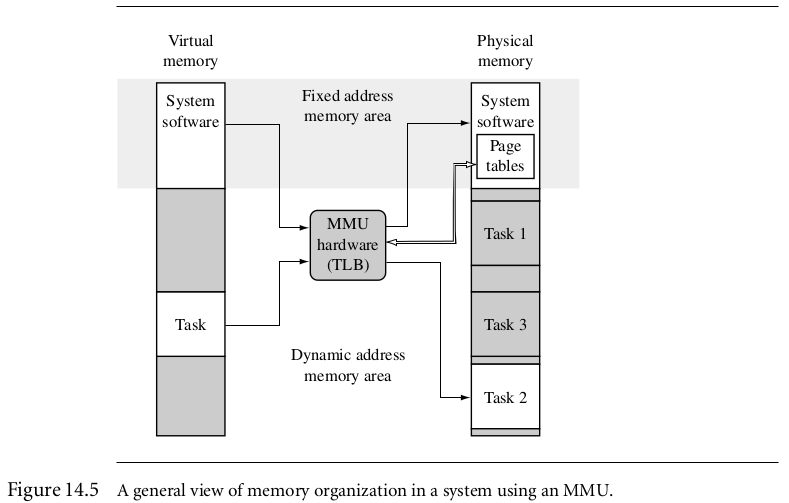

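As the figure shows, fixed areas of physical memory hold the operating system kernel and other processes. The MMU, which contains the TLB, is hardware that operates outside the virtual and physical memory spaces; its function is to translate addresses between the two.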

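Fixing the system software in virtual memory eliminates some memory management tasks and avoids pipeline effects that could disrupt program execution (see p. 499, Section 14.2.3 of the book, for details). We recommend activating page tables while executing system code at a fixed address; this ensures a safe switch between user tasks.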

Many embedded systems do not use complex virtual memory but simply create a “fixed” virtual memory map to consolidate the use of physical memory. These systems usually collect blocks of physical memory spread over a large address space into a contiguous block of virtual memory. They commonly create the “fixed” map during initialization, and the map remains the same during system operation.

14.3 Details of the ARM MMU

The ARM MMU performs several tasks:

1. It translates virtual addresses into physical addresses,

2. it controls memory access permission,

3. it determines the individual behavior of the cache and write buffer for each page in memory.

When the MMU is disabled, all virtual addresses map one-to-one to the same physical address. If the MMU is unable to translate an address, it generates an abort exception. The MMU will only abort on translation, permission, and domain faults.

The main software configuration and control components in the MMU are

■ Page tables

■ The Translation Lookaside Buffer (TLB)

■ Domains and access permission

■ Caches and write buffer

■ The CP15:c1 control register

■ The Fast Context Switch Extension

We provide the details of operation and how to configure these components in the following sections.

14.4 Page tables

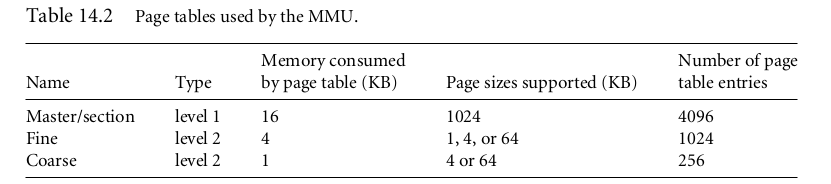

The ARM MMU hardware has a multilevel page table architecture with two levels of page table: level 1 (L1) and level 2 (L2).

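The L1 page table holds two main kinds of page table entry: entries that point to L2 page tables, and entries that translate 1 MB pages directly. The L1 master table, also called the section page table, divides the 4 GB address space into 1 MB sections, so it contains 4096 page table entries.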

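As the figure shows, a coarse L2 page table has 256 entries and occupies 1 KB. Each PTE translates a 4 KB block of memory. A coarse table supports 4 KB and 64 KB pages; a 64 KB page needs 16 identical PTEs (16 × 4 KB = 64 KB).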

A fine L2 page table has 1024 entries and occupies 4 KB. Each PTE translates a 1 KB block of memory. A fine table supports 1, 4, and 64 KB pages; a 64 KB page needs 64 identical consecutive PTEs.
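The table sizes follow from the entry counts, since every entry is one 32-bit word. The sketch below, with hypothetical helper names, shows which virtual address bits index each table: VA[31:20] selects one of the 4096 L1 entries, VA[19:12] one of the 256 coarse entries, and VA[19:10] one of the 1024 fine entries.

```c
#include <stdint.h>

/* Index into the 4096-entry L1 master table: one entry per 1 MB section. */
uint32_t l1Index(uint32_t va)     { return va >> 20; }

/* Index into a 256-entry coarse L2 table: VA[19:12], one entry per 4 KB. */
uint32_t coarseIndex(uint32_t va) { return (va >> 12) & 0xFFu; }

/* Index into a 1024-entry fine L2 table: VA[19:10], one entry per 1 KB. */
uint32_t fineIndex(uint32_t va)   { return (va >> 10) & 0x3FFu; }

/* Table sizes in bytes: each entry is a 32-bit word. */
enum {
    L1_TABLE_BYTES     = 4096 * 4,   /* 16 KB */
    COARSE_TABLE_BYTES = 256 * 4,    /* 1 KB */
    FINE_TABLE_BYTES   = 1024 * 4    /* 4 KB */
};
```

For example, virtual address 0x04012345 uses L1 entry 0x040, coarse entry 0x12, or fine entry 0x48.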

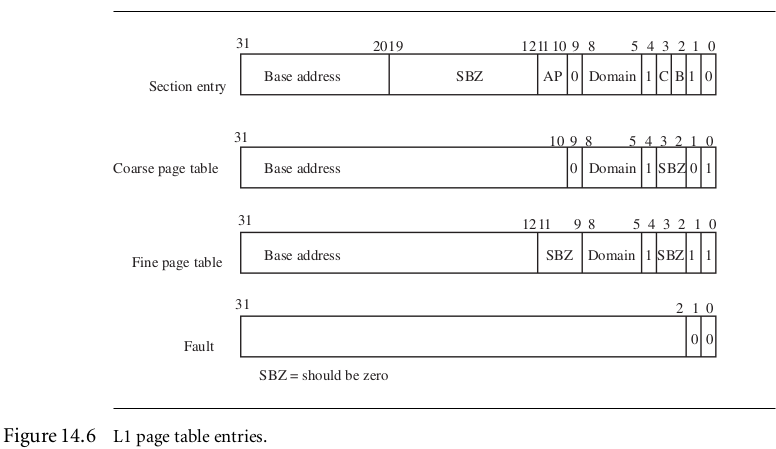

14.4.1 L1 page table entries

An L1 page table contains four types of entry:

■ A 1 MB section translation entry

■ A directory entry that points to a fine L2 page table

■ A directory entry that points to a coarse L2 page table

■ A fault entry that generates an abort exception

The system identifies the type of entry by the lower two bits [1:0] in the entry field. The format of the PTE requires the address of an L2 page table to be aligned on a multiple of its table size (1 KB for coarse tables, 4 KB for fine tables). Figure 14.6 shows the format of each entry in the L1 page table.

| L1 entry | Description |
|---|---|
| A section page table entry points to a 1 MB section of memory. | The upper 12 bits of the page table entry replace the upper 12 bits of the virtual address to generate the physical address. A section entry also contains the domain, cached, buffered, and access permission attributes, which we discuss in Section 14.6. |
| A coarse page entry contains a pointer to the base address of a second-level coarse page table. | It also contains domain information for the 1 MB section of virtual memory represented by the L1 table entry. For coarse pages, the tables must be aligned on an address multiple of 1 KB. |
| A fine page table entry contains a pointer to the base address of a second-level fine page table. | It also contains domain information for the 1 MB section of virtual memory represented by the L1 table entry. Fine page tables must be aligned on an address multiple of 4 KB. |
| A fault page table entry generates a memory page fault. | The fault condition results in either a prefetch or data abort, depending on the type of memory access attempted. |
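To make the L1 entry formats concrete, here is a sketch that decodes the entry type from bits [1:0] and assembles a section entry. The field positions (base at [31:20], AP at [11:10], domain at [8:5], C at bit 3, B at bit 2) follow the ARMv4/v5 section format; setting bit 4 to 1 is an assumption based on cores such as the ARM920T and should be checked against your core's manual. `l1EntryType` and `makeSectionEntry` are hypothetical names.

```c
#include <stdint.h>

/* L1 descriptor types, selected by bits [1:0] of the entry. */
typedef enum { L1_FAULT = 0, L1_COARSE = 1, L1_SECTION = 2, L1_FINE = 3 } L1Type;

L1Type l1EntryType(uint32_t entry) { return (L1Type)(entry & 0x3u); }

/* Build a 1 MB section entry from its fields. */
uint32_t makeSectionEntry(uint32_t physBase, uint32_t ap, uint32_t domain, uint32_t cb)
{
    uint32_t pte = physBase & 0xFFF00000u;  /* upper 12 bits of the physical address */
    pte |= (ap & 0x3u) << 10;               /* access permission */
    pte |= (domain & 0xFu) << 5;            /* one of 16 domains */
    pte |= (cb & 0x3u) << 2;                /* cached (C) and buffered (B) bits */
    pte |= 0x10u | L1_SECTION;              /* bit 4 (assumed 1) and type 10 */
    return pte;
}
```

For example, `makeSectionEntry(0x08000000, 3, 1, 3)` yields 0x08000C3E: a section at 0x08000000, AP = 11, domain 1, cached and buffered.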

The location of the L1 master page table in memory is set by writing to the CP15:c2 register.

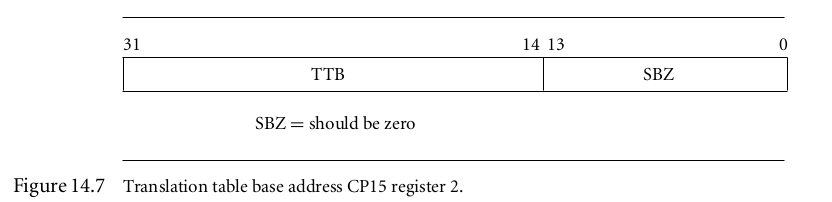

14.4.2 The L1 Translation Table Base Address

The CP15:c2 register holds the translation table base address (TTB), the address of the master L1 table in physical memory. Figure 14.7 shows the format of the CP15:c2 register.

Example

该例程能设置L1 page table的TTB。使用了指令MRC来写入CP15:c2。

原型如下:

void ttbSet(unsigned int ttb);唯一的参数必须以16KB进行对齐。

函数定义如下:

void ttbSet(unsigned int ttb)

{

ttb &= 0xffffc000;

__asm{MRC p15, 0, ttb, c2, c0, 0 } /* set translation table base */

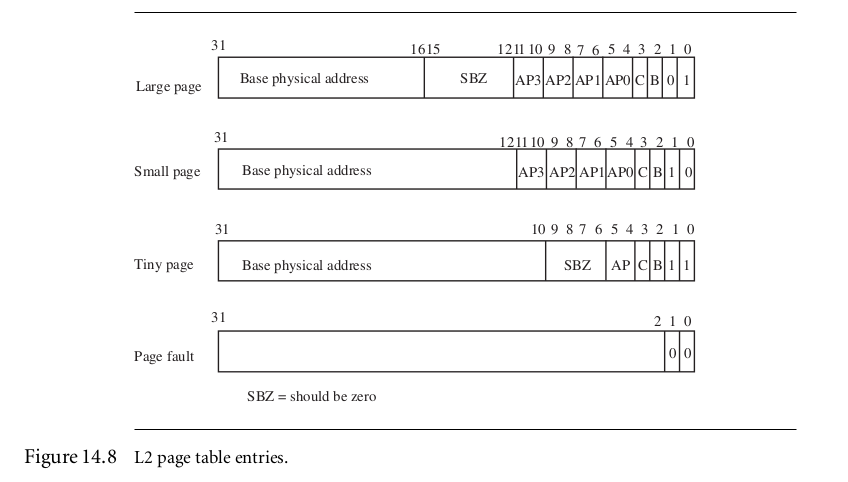

}14.4.3 Level 2 page table entries

An L2 page table contains four types of entry:

■ A large page entry defines the attributes for a 64 KB page frame.

■ A small page entry defines a 4 KB page frame.

■ A tiny page entry defines a 1 KB page frame.

■ A fault page entry generates a page fault abort exception when accessed.

The MMU identifies the type of an L2 page table entry by the lower two bits [1:0] of the entry field.

| L2 page table entry | Description |
|---|---|
| A large PTE includes the base address of a 64 KB block of physical memory. | It also has four sets of permission bit fields, as well as the cache and write buffer attributes for the page. Each set of access permission bit fields represents one-fourth of the page in virtual memory. These entries may be thought of as 16 KB subpages providing finer control of access permission within the 64 KB page. |
| A small PTE holds the base address of a 4 KB block of physical memory. | It also includes four sets of permission bit fields and the cache and write buffer attributes for the page. Each set of permission bit fields represents one-fourth of the page in virtual memory. These entries may be thought of as 1 KB subpages providing finer control of access permission within the 4 KB page. |
| A tiny PTE provides the base address of a 1 KB block of physical memory. | It also includes a single access permission bit field and the cache and write buffer attributes for the page. The tiny page has not been incorporated in the ARMv6 architecture. If you are planning to create a system that is easily portable to future architectures, we recommend avoiding the use of tiny 1 KB pages in your system. |
| A fault PTE generates a memory page access fault. | The fault condition results in either a prefetch or data abort, depending on the type of memory access. |
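A similar sketch for L2 entries, again with hypothetical names, decodes the page size from bits [1:0] and picks the subpage access permission field. It assumes the ARMv4/v5 layout, in which large and small pages hold four 2-bit AP fields in bits [11:4], with AP0 (bits [5:4]) covering the lowest quarter of the page.

```c
#include <stdint.h>

/* L2 descriptor types, selected by bits [1:0] of the entry. */
typedef enum { L2_FAULT = 0, L2_LARGE = 1, L2_SMALL = 2, L2_TINY = 3 } L2Type;

/* Size in bytes of the page frame described by an L2 entry (0 for a fault). */
uint32_t l2PageSize(uint32_t entry)
{
    switch ((L2Type)(entry & 0x3u)) {
    case L2_LARGE: return 64u * 1024u;
    case L2_SMALL: return 4u * 1024u;
    case L2_TINY:  return 1u * 1024u;   /* not available from ARMv6 onward */
    default:       return 0u;           /* fault entry */
    }
}

/* Returns the 2-bit AP field covering 'va'; valid only for large and small entries. */
uint32_t subpageAP(uint32_t entry, uint32_t va)
{
    uint32_t size = ((entry & 0x3u) == L2_LARGE) ? 64u * 1024u : 4u * 1024u;
    uint32_t quarter = (va & (size - 1u)) / (size / 4u);   /* subpage 0..3 */
    return (entry >> (4u + 2u * quarter)) & 0x3u;
}
```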

14.4.4 Selecting a page size for your embedded system

Here are some tips and suggestions for choosing a page size:

■ The smaller the page size, the more page frames there are for a given amount of memory.

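■ The smaller the page size, the less internal fragmentation there is. Internal fragmentation is the unused memory inside allocated pages. For example, a task needing 9 KB fits in three 4 KB pages or in one 64 KB page; the former wastes 3 KB, the latter as much as 55 KB.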

■ The larger the page size, the more likely it is that the system has already loaded the code and data a task references.

■ Large pages become more efficient as the access time to secondary storage increases.

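■ As the page size increases, each TLB entry represents more memory. The system can therefore cache more translation data, and the TLB is loaded with all the translation data for a task more quickly.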

■ Each page table consumes 1 KB of memory if you use L2 coarse pages. Each L2 fine page table consumes 4 KB. Each L2 page table translates 1 MB of address space.

Your maximum page table memory use, per task, is

((task size/1 megabyte) + 1) ∗ (L2 page table size)
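The fragmentation example and the page table formula above are simple arithmetic; this sketch (hypothetical function names) reproduces them so other task and page sizes can be tried. The formula counts L2 tables only; it does not include the 16 KB L1 master table.

```c
#include <stdint.h>

/* Unused bytes when 'taskBytes' is rounded up to whole pages of 'pageBytes'. */
uint32_t internalFragmentation(uint32_t taskBytes, uint32_t pageBytes)
{
    uint32_t pages = (taskBytes + pageBytes - 1u) / pageBytes;   /* round up */
    return pages * pageBytes - taskBytes;
}

/* Worst-case L2 page table memory for one task, per the formula above:
 * ((task size / 1 MB) + 1) * (L2 page table size), using integer division. */
uint32_t maxL2TableBytes(uint32_t taskBytes, uint32_t l2TableBytes)
{
    return (taskBytes / (1024u * 1024u) + 1u) * l2TableBytes;
}
```

For a 3 MB task this gives 4 KB of coarse tables (1 KB each) or 16 KB of fine tables (4 KB each).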

14.5 The Translation Lookaside Buffer

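The TLB is a special cache that holds recently used page translations. It maps a virtual page to an active page frame and stores control data restricting access to the page. Because the TLB is a cache, it has a victim pointer and a TLB line replacement policy; in the ARM, the TLB uses a round-robin replacement policy. The TLB supports only two operations: flushing the TLB and locking translations in the TLB. During a memory access, the MMU compares a portion of the virtual address with all the values cached in the TLB. If the requested translation is available, it is a TLB hit; if no valid translation is found, it is a TLB miss. The MMU handles TLB misses automatically in hardware: it searches the page tables in main memory for a valid translation and loads it into one of the 64 lines of the TLB.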
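The hit/miss check and round-robin replacement can be modeled in a few lines. This is a simplified, hypothetical sketch: it caches only 1 MB section translations, ignores access permissions and lockdown, and the names `Tlb`, `tlbLookup`, `tlbLoad`, and `tlbFlush` are illustrative rather than any ARM interface.

```c
#include <stdint.h>
#include <stdbool.h>

#define TLB_LINES 64

/* 64-line, fully associative TLB caching section translations. */
typedef struct {
    uint32_t vpage[TLB_LINES];   /* virtual section number (VA >> 20) */
    uint32_t frame[TLB_LINES];   /* physical section number (PA >> 20) */
    bool     valid[TLB_LINES];
    unsigned victim;             /* round-robin victim pointer */
} Tlb;

/* Compare the virtual page with every line; true on a TLB hit. */
bool tlbLookup(const Tlb *tlb, uint32_t va, uint32_t *pa)
{
    for (unsigned i = 0; i < TLB_LINES; i++) {
        if (tlb->valid[i] && tlb->vpage[i] == (va >> 20)) {
            *pa = (tlb->frame[i] << 20) | (va & 0xFFFFFu);
            return true;
        }
    }
    return false;   /* TLB miss: the hardware would now walk the page tables */
}

/* Load a translation found by a page table walk into the victim line. */
void tlbLoad(Tlb *tlb, uint32_t va, uint32_t pa)
{
    tlb->vpage[tlb->victim] = va >> 20;
    tlb->frame[tlb->victim] = pa >> 20;
    tlb->valid[tlb->victim] = true;
    tlb->victim = (tlb->victim + 1u) % TLB_LINES;   /* round-robin replacement */
}

/* Flush: invalidate every line. */
void tlbFlush(Tlb *tlb)
{
    for (unsigned i = 0; i < TLB_LINES; i++)
        tlb->valid[i] = false;
}
```

After 64 further loads, the round-robin pointer wraps around and the oldest translation is evicted, so the next access to it misses.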

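The search for a valid translation in the page tables is known as a page table walk. If there is a valid PTE, the hardware copies the translation from the PTE into the TLB and generates the physical address to access main memory. If the search ends at a fault entry in the page table, the MMU hardware generates an abort exception.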

If the search ends in the L1 master page table, it is a single-stage page table walk. If the search ends in an L2 page table, it is a two-stage page table walk.

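A TLB miss may take many extra cycles if the MMU generates an abort exception. The ARM720T has a single TLB because it has a unified bus architecture. The ARM920T and ARM922T use a Harvard architecture, so they have two TLBs: one for instruction translation and one for data translation.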

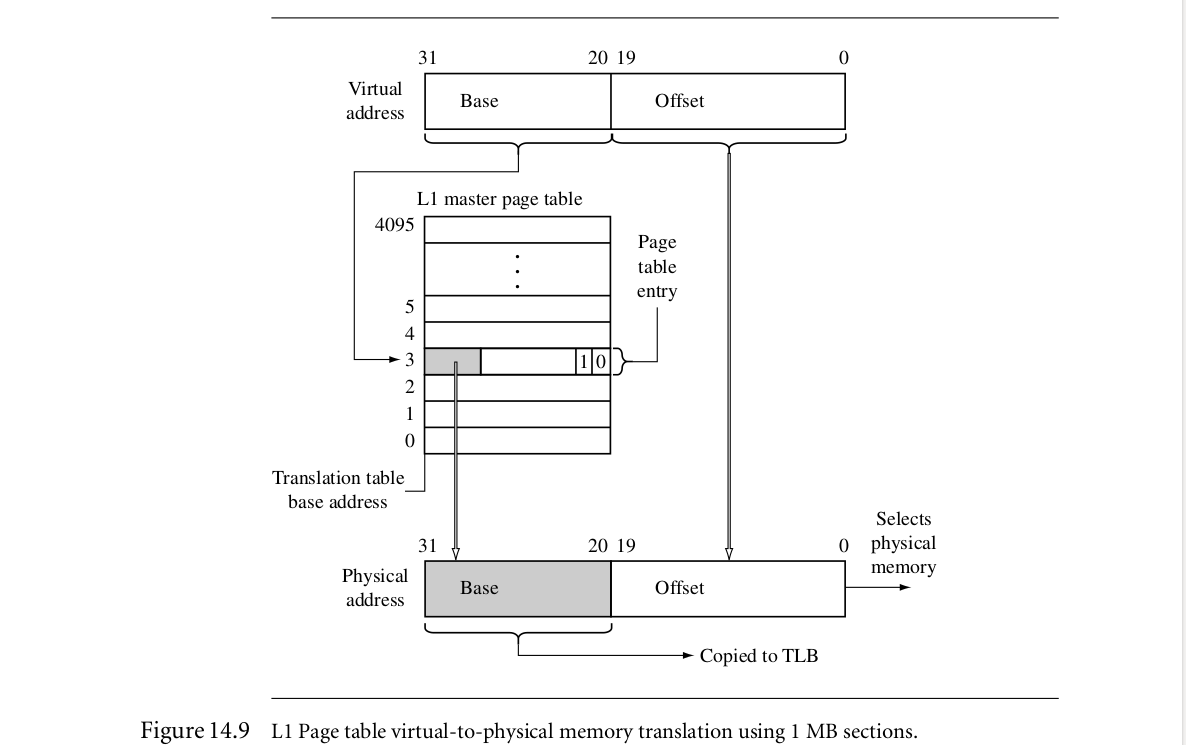

14.5.1 Single-Step Page table walk

The figure shows the table walk of an L1 table for a 1 MB section page translation.

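If bits [1:0] are 10, the PTE describes a 1 MB section page. The data in the PTE is transferred to the TLB, and the physical address is generated by combining that data with the offset portion of the virtual address.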

If the lower two bits are 00, a fault is generated. Either of the other two values (01 or 11) tells the MMU to perform a two-stage search.

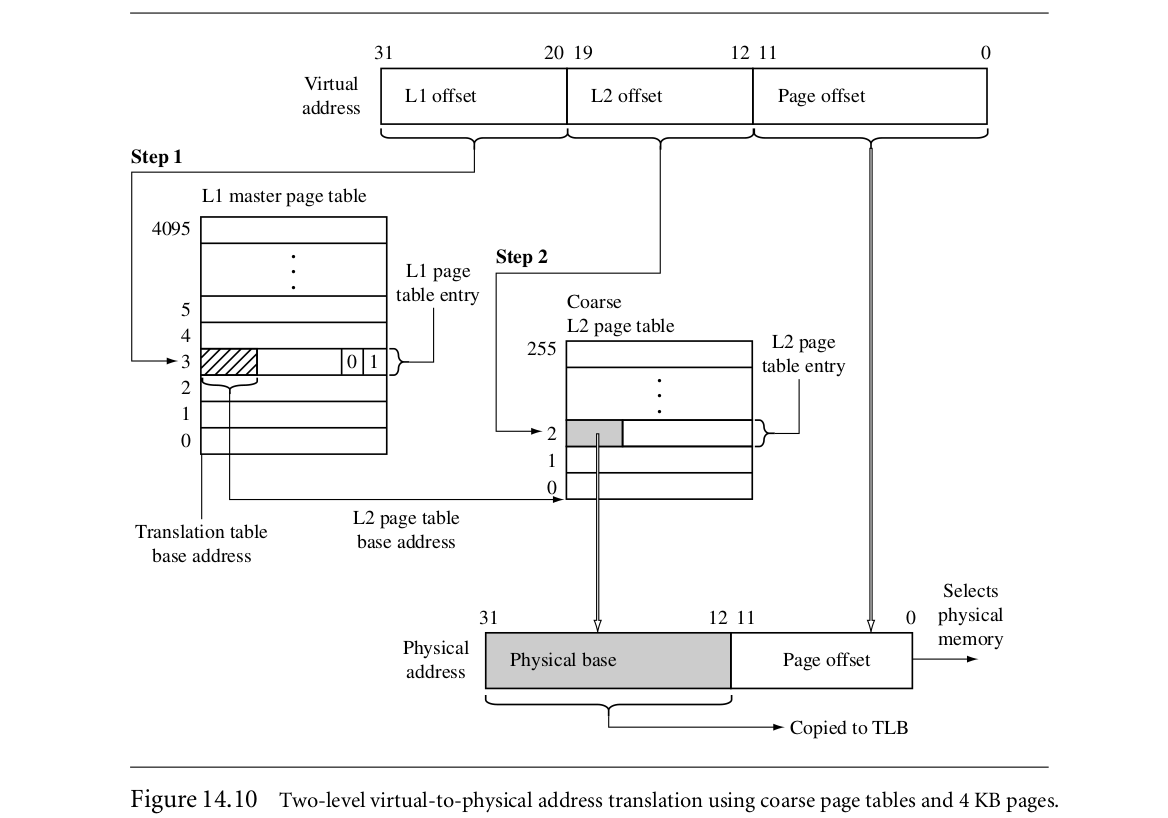

14.5.2 Two-Step Page table walk

If the MMU ends its search for a page that is 1, 4, 16, or 64 KB in size, then the page table walk will have taken two steps to find the address translation.

The figure shows the search for a page in a coarse page table.

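The virtual address is divided into three parts. The first part, the L1 offset, locates a PTE in the L1 page table; bits [1:0] = 01 in that entry show that the search continues in a coarse page table. The second part, the L2 offset, then locates the PTE in the L2 page table, which supplies the physical base address. The third part, the page offset, is combined with that base to form the physical address.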
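Both kinds of walk can be sketched in C against page tables held in ordinary arrays. To keep the model portable, it makes one hypothetical simplification: a coarse L1 entry stores a table number in bits [31:10] instead of a real physical address. Only section entries and coarse tables with small (4 KB) pages are modeled; everything else is reported as a fault. `PageTables` and `tableWalk` are illustrative names.

```c
#include <stdint.h>
#include <stdbool.h>

#define COARSE_ENTRIES 256

typedef struct {
    const uint32_t *l1;                        /* 4096 L1 entries */
    uint32_t (*coarseTables)[COARSE_ENTRIES];  /* coarse L2 tables */
} PageTables;

/* Returns true and sets *pa on success; false means an abort would occur. */
bool tableWalk(const PageTables *pt, uint32_t va, uint32_t *pa)
{
    uint32_t l1e = pt->l1[va >> 20];                    /* L1 offset: VA[31:20] */
    switch (l1e & 0x3u) {
    case 0x2u:                                          /* single-stage: 1 MB section */
        *pa = (l1e & 0xFFF00000u) | (va & 0x000FFFFFu);
        return true;
    case 0x1u: {                                        /* two-stage: coarse L2 table */
        const uint32_t *l2 = pt->coarseTables[l1e >> 10];   /* model: table number */
        uint32_t l2e = l2[(va >> 12) & 0xFFu];          /* L2 offset: VA[19:12] */
        if ((l2e & 0x3u) != 0x2u)
            return false;                               /* fault, or type not modeled */
        *pa = (l2e & 0xFFFFF000u) | (va & 0xFFFu);      /* page offset: VA[11:0] */
        return true;
    }
    default:
        return false;                                   /* fault (fine tables not modeled) */
    }
}
```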

14.5.3 TLB Operations

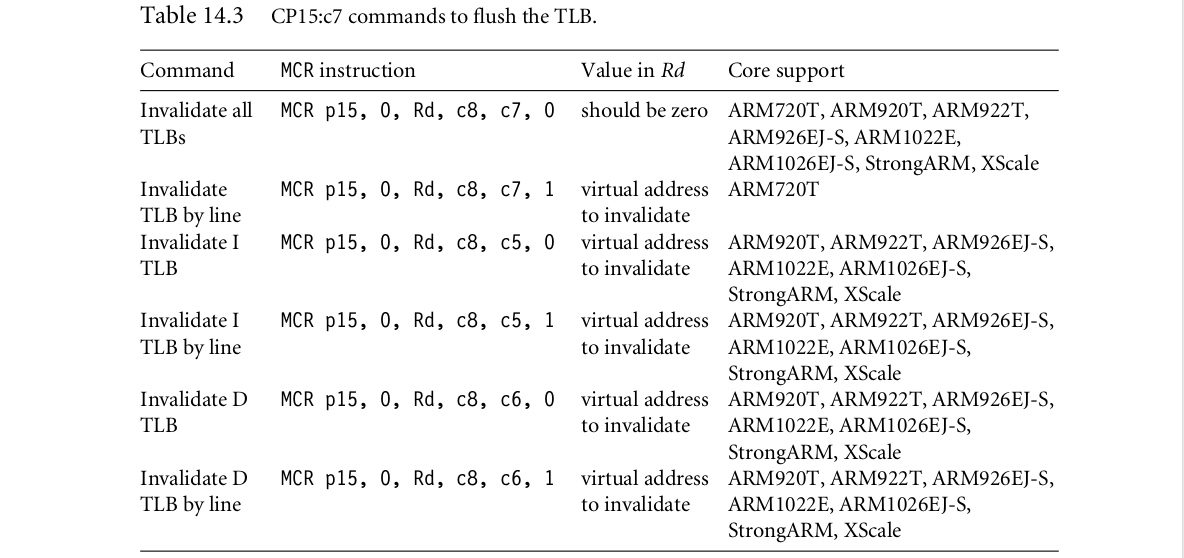

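If the operating system changes the data in the page tables, translation data cached in the TLB may no longer be valid. To invalidate stale data in the TLB, the core provides CP15 commands to flush the TLB, listed in the table.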

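Example: a simple routine that flushes the TLB

```c
void flushTLB(void)
{
    unsigned int c8format = 0;  /* source register value should be zero */

    __asm{ MCR p15, 0, c8format, c8, c7, 0 }  /* invalidate all TLB entries */
}
```

14.5.4 TLB lockdown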

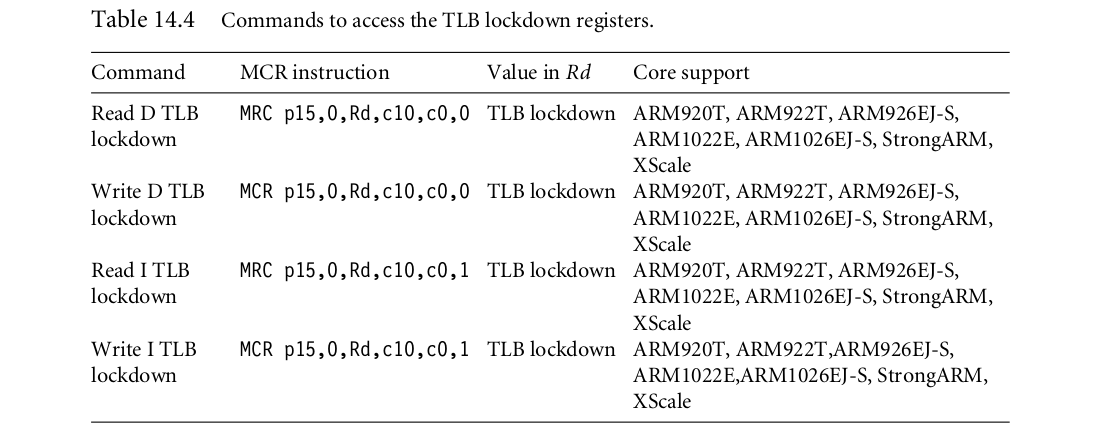

A TLB line that is locked down keeps its value when the TLB is flushed.

The instructions for each processor type are listed in the table.

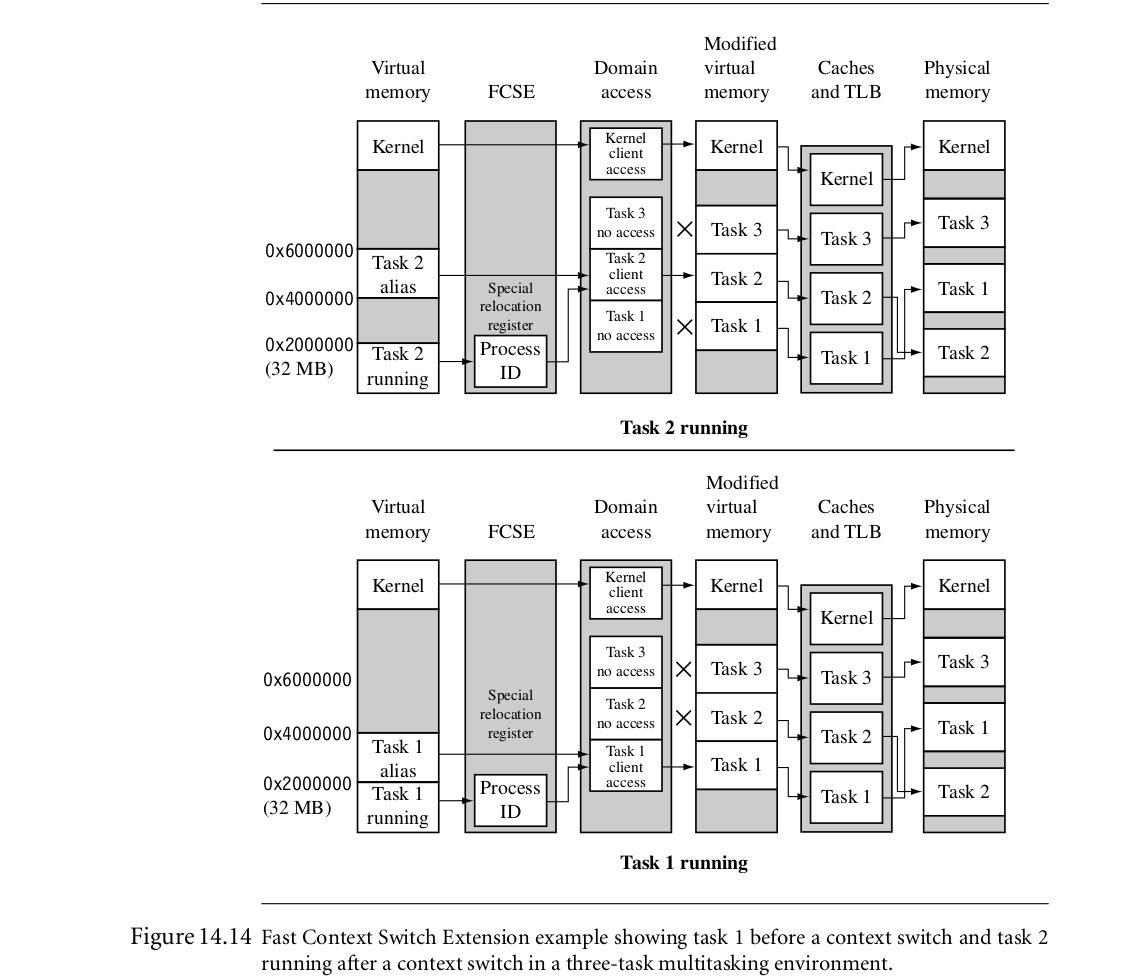

14.6 Domains and Memory access permission

Two controls manage a task's access permission to memory: domains, and the access permission settings in the page tables.

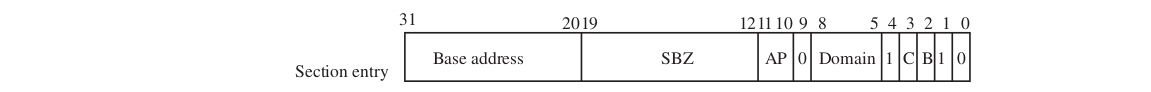

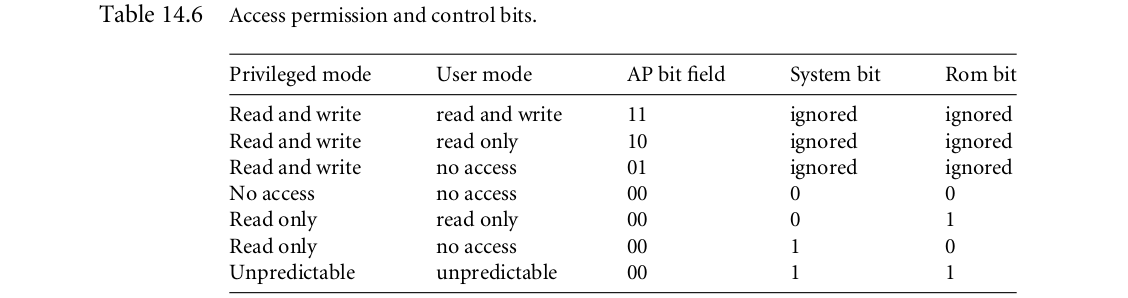

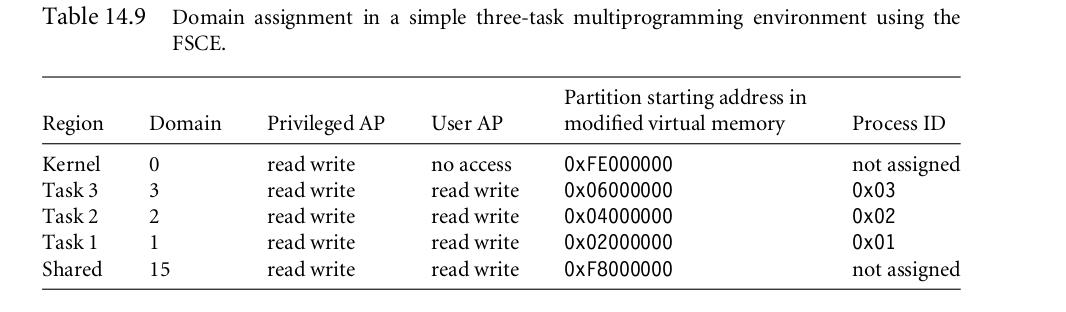

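Domains control basic access to virtual memory by isolating one area of memory from another when they share a common virtual memory map. As the figure shows, a 1 MB section can be assigned to any one of 16 domains, selected by bits [8:5] of its entry.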

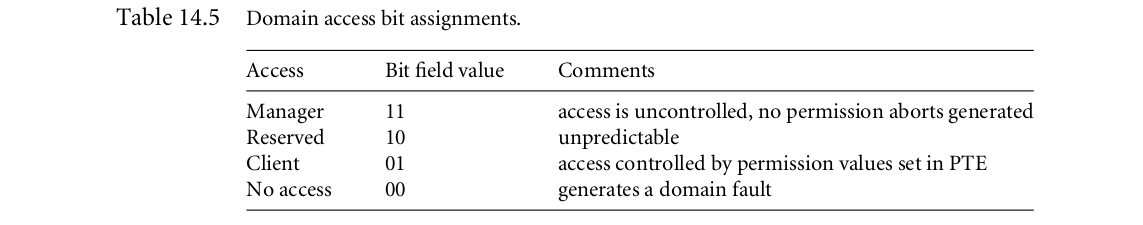

When a domain is assigned to a section, the section must obey the domain access rights assigned to that domain. Domain access rights are assigned in the CP15:c3 register and control the processor core's ability to access sections of virtual memory. CP15:c3 uses two bits for each domain to define the access permitted for each of the 16 available domains, as shown in the figure.

Even if you do not use the virtual memory capabilities of the MMU, you can still use these processors as simple memory protection units:

1. First, map virtual memory directly to physical memory.

2. Then assign a different domain to each task, and protect dormant tasks by setting their domain access to “no access”.
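A sketch of building the CP15:c3 value follows, assuming the usual two-bit codes (00 no access, 01 client, 11 manager, 10 reserved); client accesses are checked against the page AP bits, manager accesses are not. The helper names are hypothetical, and on the core the result would be written with `MCR p15, 0, Rd, c3, c0, 0`, which is not shown. Giving a dormant task's domain the code 00 is what blocks it.

```c
#include <stdint.h>

/* Two-bit access codes for each of the 16 domains (10 is reserved). */
enum { DOMAIN_NO_ACCESS = 0u, DOMAIN_CLIENT = 1u, DOMAIN_MANAGER = 3u };

/* Return a new CP15:c3 value with 'domain' (0-15) set to 'access'. */
uint32_t dacrWithDomain(uint32_t dacr, unsigned domain, uint32_t access)
{
    uint32_t shift = 2u * (domain & 0xFu);
    dacr &= ~(0x3u << shift);           /* clear the domain's two bits */
    dacr |= (access & 0x3u) << shift;   /* insert the new access code */
    return dacr;
}

/* Read back the access code of one domain. */
uint32_t domainAccessGet(uint32_t dacr, unsigned domain)
{
    return (dacr >> (2u * (domain & 0xFu))) & 0x3u;
}
```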

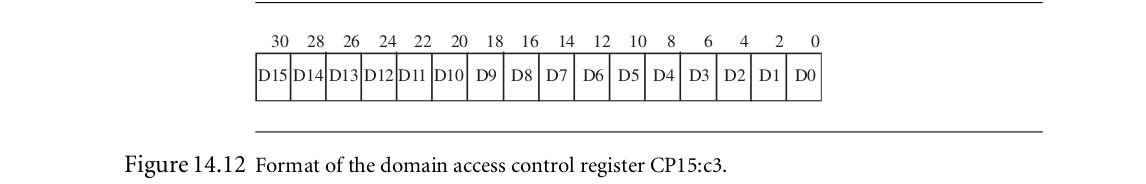

14.6.1 Page-table-based access permissions

The AP bits in a PTE determine the access permission for a page, as shown in Figure 14.6.

In addition to the AP bits located in the PTE, there are two bits in the CP15:c1 control register that act globally to modify access permission to memory: the system (S) bit and the rom (R) bit. These bits can be used to reveal large blocks of memory from the system at different times during operation.

Setting the S bit changes all pages with “no access” permission to allow read access for privileged mode tasks.

Thus, by changing a single bit in CP15:c1, all areas marked as no access are instantly available without the cost of changing every AP bit field in every PTE.

Changing the R bit changes all pages with “no access” permission to allow read access for both privileged and user mode tasks.

Again, this bit can speed access to large blocks of memory without needing to change lots of PTEs.
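The interaction between the AP field and the S and R bits can be written as a small decoder. This sketch assumes the ARMv4/v5 encoding for pages in a client domain (manager domains bypass the AP check). `Access` and `apDecode` are hypothetical names, and the S = 1, R = 1 combination, which the architecture leaves unpredictable, is treated here as no access.

```c
#include <stdbool.h>
#include <stdint.h>

typedef struct { bool privRead, privWrite, userRead, userWrite; } Access;

/* Decode AP bits for a client domain, given the S and R bits of CP15:c1. */
Access apDecode(uint32_t ap, bool s, bool r)
{
    Access a = { false, false, false, false };
    switch (ap & 0x3u) {
    case 0u:                                   /* "no access" unless S or R reveals it */
        if (s && !r)
            a.privRead = true;                 /* S: privileged read-only */
        else if (!s && r)
            a.privRead = a.userRead = true;    /* R: read-only for both modes */
        break;
    case 1u:                                   /* privileged read/write, user none */
        a.privRead = a.privWrite = true;
        break;
    case 2u:                                   /* privileged read/write, user read-only */
        a.privRead = a.privWrite = a.userRead = true;
        break;
    case 3u:                                   /* full access */
        a.privRead = a.privWrite = a.userRead = a.userWrite = true;
        break;
    }
    return a;
}
```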

14.7 The Caches and Write Buffer

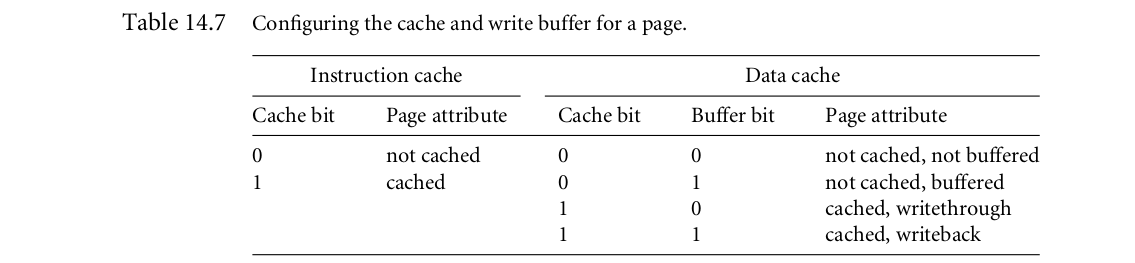

Chapter 12 presented the basic operation of caches and write buffers. You configure the caches and write buffer for each page in memory using two bits in the PTE.

When configuring a page of instructions, the write buffer bit is ignored and the cache bit determines cache operation. When the cache bit is set, the page is cached; when it is clear, the page is not cached.

When configuring data pages, the write buffer bit has two uses:

1. To enable or disable the write buffer for the page.

2. To set the page's cache write policy.

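The page cache bit determines the meaning of the write buffer bit. When the cache bit is 0, the buffer bit enables or disables the write buffer for the page, as shown in the figure. When the cache bit is 1, the buffer bit selects either a writethrough or a writeback policy.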
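The meaning of the two bits for data and instruction pages can be summarized as a lookup table. The sketch below, with hypothetical names, takes the C and B bits as a two-bit value (C in bit 1, B in bit 0, as they sit in PTE bits [3:2]) and assumes the common ARMv4/v5 mapping of C = 1, B = 0 to writethrough and C = 1, B = 1 to writeback.

```c
#include <stdint.h>

typedef enum {
    NCNB,          /* C=0, B=0: not cached, not buffered */
    NCB,           /* C=0, B=1: not cached, write buffer enabled */
    WRITETHROUGH,  /* C=1, B=0: cached, writethrough policy */
    WRITEBACK      /* C=1, B=1: cached, writeback policy */
} DataPagePolicy;

/* Data pages: the C bit decides how the B bit is interpreted. */
DataPagePolicy dataPagePolicy(uint32_t cb)
{
    static const DataPagePolicy table[4] = { NCNB, NCB, WRITETHROUGH, WRITEBACK };
    return table[cb & 0x3u];
}

/* Instruction pages: only the C bit matters; B is ignored. */
int instructionPageCached(uint32_t cb)
{
    return (int)((cb >> 1) & 1u);
}
```

To read the bits from a PTE, pass `(pte >> 2) & 3`.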

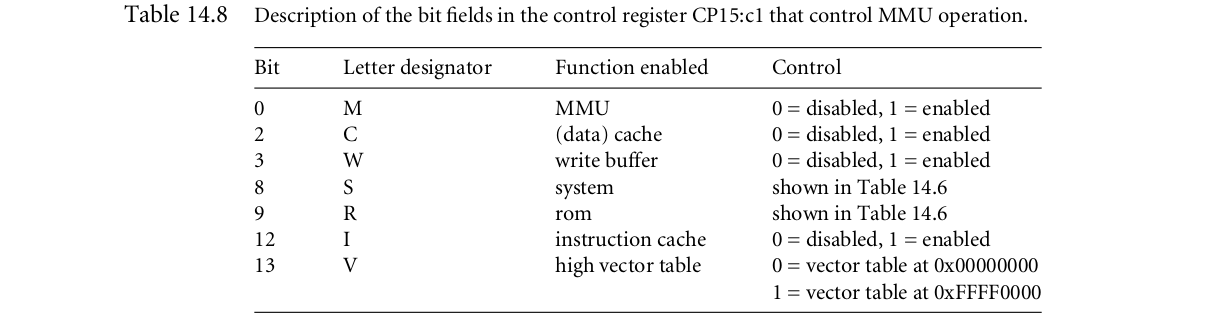

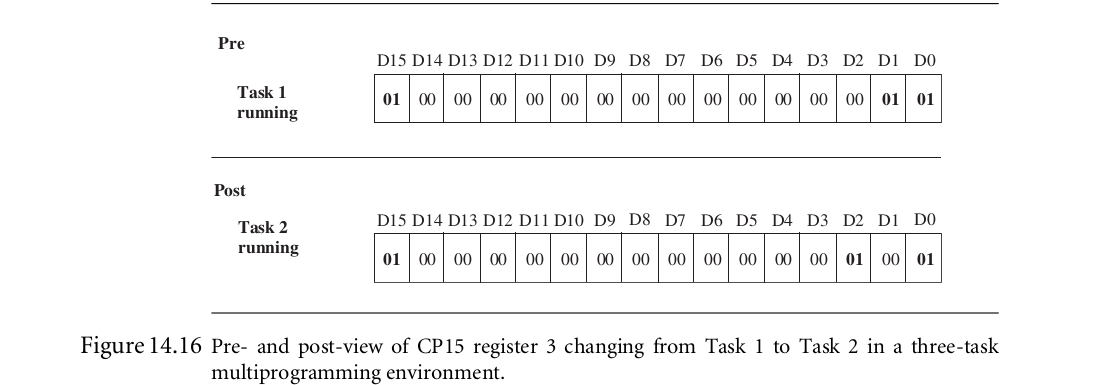

14.8 Coprocessor 15 and MMU configuration

Enabling a configured MMU is very similar for the three cores. To enable the MMU, cache, and write buffer, you need to change bit[12], bit[3], bit[2], and bit[0] in the control register.
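Because the control register holds other settings as well, enabling is usually done as a read-modify-write. The sketch below only computes the new value; the macro names are hypothetical labels for the bit positions given above (plus the S, R, and V bits mentioned elsewhere in this chapter), and the actual read and write of CP15:c1 (an `MRC`/`MCR` to `c1, c0, 0`) is left out. Check your core's documentation, since the cores differ in detail.

```c
#include <stdint.h>

/* CP15:c1 control register bits referred to in the text. */
#define CR_M (1u << 0)    /* MMU enable */
#define CR_C (1u << 2)    /* (data) cache enable */
#define CR_W (1u << 3)    /* write buffer enable */
#define CR_S (1u << 8)    /* system protection bit */
#define CR_R (1u << 9)    /* ROM protection bit */
#define CR_I (1u << 12)   /* instruction cache enable */
#define CR_V (1u << 13)   /* exception vectors at 0xffff0000 */

/* Turn on the MMU, caches, and write buffer, preserving all other bits. */
uint32_t controlEnableMmuCaches(uint32_t c1)
{
    return c1 | CR_M | CR_C | CR_W | CR_I;
}
```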

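The figure describes each bit of the control register.

The differences between the cores are listed below.

The book gives the code that enables the MMU, caches, and write buffer on p. 515.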

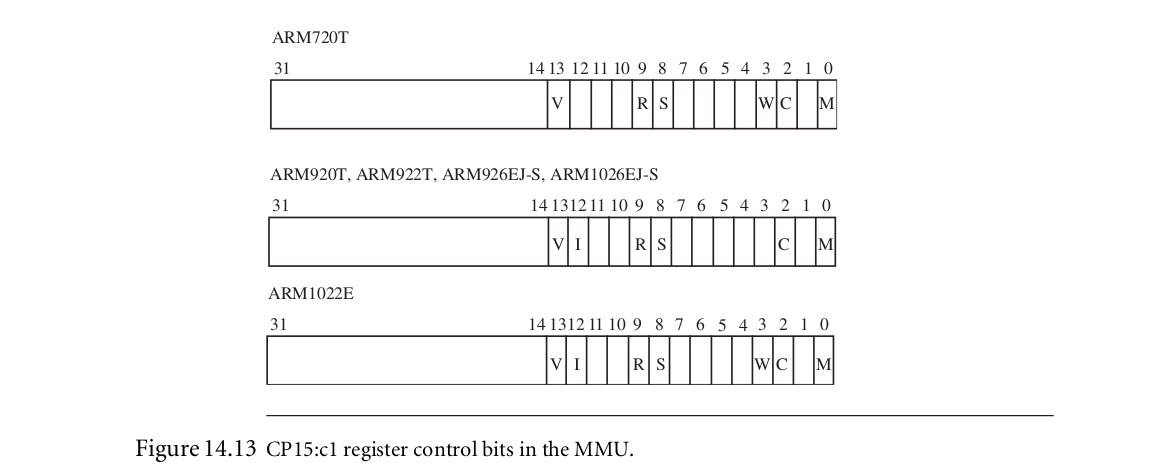

14.9 The Fast Context Switch Extension

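The Fast Context Switch Extension (FCSE) is additional hardware in the MMU that can improve performance in ARM embedded systems. The FCSE allows multiple tasks to run in a fixed, overlapping area of memory without cleaning or flushing the cache, or flushing the TLB, during a context switch. Its key feature is eliminating the need to flush the caches and TLB.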

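Without the FCSE, switching between tasks requires a change of virtual memory map. If the change involves two tasks with overlapping address ranges, the information in the caches and TLB becomes invalid, so the system must flush them. These flushes take a significant amount of extra time, because the core must flush everything, including data that is still valid.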

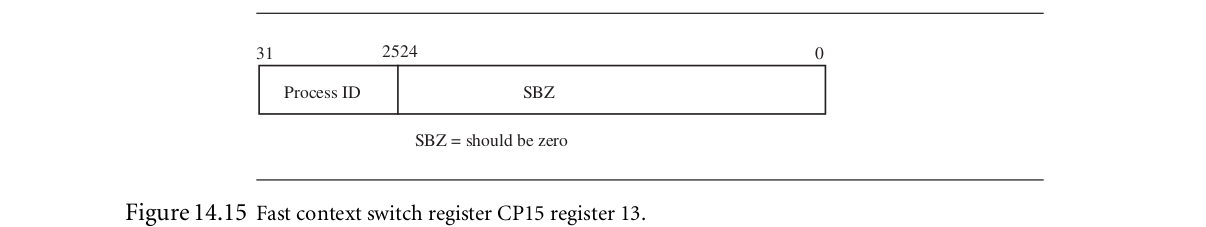

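The FCSE performs an additional address translation when managing virtual memory. Before the cache and TLB are accessed, the FCSE modifies the virtual address using a special relocation register that contains the process ID. ARM calls an address before this first translation a virtual address (VA), and an address after it a modified virtual address (MVA). With the FCSE, all modified virtual addresses are active, and tasks are protected by domains.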

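Switching between tasks does not involve changing page tables; it simply requires writing the new task's process ID into the FCSE process ID register in CP15. Because the page tables do not change, the data in the cache and TLB remains valid and no flush is needed.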

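When the FCSE is used, each task must execute in the fixed virtual address range 0x00000000 to 0x01ffffff and must be located in a different 32 MB area of modified virtual memory. The system shares all memory addresses from 0x02000000 upward and uses domains to protect tasks. The running task is identified by the current process ID.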

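To take advantage of the FCSE, compile and link every task to run in the first 32 MB block of virtual memory and assign each task a unique process ID. Each task is then placed in a different 32 MB partition of the MVA space using the following relocation formula: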

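MVA = VA + (0x2000000 × process ID), for virtual addresses in the first 32 MB; higher addresses are unchanged.

Register CP15:c13:c0 holds the current process ID in a 7-bit field, so 128 process IDs are supported, as shown in the figure.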
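The relocation formula and the register layout can be checked with a short sketch (hypothetical names). It also encodes the rule that only addresses in the first 32 MB are relocated; addresses at or above 0x02000000 pass through unchanged.

```c
#include <stdint.h>

#define FCSE_BLOCK 0x02000000u   /* 32 MB */

/* VA -> MVA as performed by the FCSE. */
uint32_t fcseMva(uint32_t va, uint32_t processId)
{
    if (va < FCSE_BLOCK)                                  /* VA[31:25] == 0 */
        return va + FCSE_BLOCK * (processId & 0x7Fu);     /* 128 process IDs */
    return va;                                            /* shared area: unchanged */
}

/* Value written to CP15:c13:c0: the process ID sits in bits [31:25]. */
uint32_t fcsePidRegister(uint32_t processId)
{
    return (processId & 0x7Fu) << 25;
}
```

For example, with process ID 3, VA 0x00012345 becomes MVA 0x06012345, while VA 0x30000000 is left alone.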

Example

This routine sets the process ID in the FCSE:

```c
void processIDSet(unsigned int value)
{
    unsigned int PID;

    PID = value << 25;  /* process ID goes in bits [31:25]: multiply by 0x2000000 (32 MB) */

    __asm{ MCR p15, 0, PID, c13, c0, 0 }  /* write Process ID register */
}
```

14.9.1 How the FCSE Uses page tables and domains

The figure shows switching between tasks.

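The figure shows how the values in the domain access register (CP15:c3:c0) change when switching from task 1 to task 2: task 1's domain changes from client access to no access, and task 2's domain changes from no access to client access. Domain 0 stays at client access throughout, because it represents the kernel.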

To share memory between tasks, use a “sharing domain”: tasks that share a domain both have client access to that partition of the MVA space.

Shared memory is visible to both tasks, and its access permissions are determined by the page table entries that map that memory space.

A context switch using the FCSE requires the following steps:

1. Save the active task context and place the task in a dormant state.

2. Write the awakening task’s process ID to CP15:c13:c0.

3. Set the current task’s domain to no access, and the awakening task’s domain to client access, by writing to CP15:c3:c0.

4. Restore the context of the awakening task.

5. Resume execution of the restored task.
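Steps 2 and 3 reduce to computing two register values. This hypothetical sketch (illustrative names) assumes each task owns one domain and mirrors the task 1 to task 2 example above: the retiring task's domain becomes no access, the awakening task's domain becomes client, and every other domain, including the kernel's domain 0, is left alone.

```c
#include <stdint.h>

/* Hypothetical per-task bookkeeping for an FCSE-based switch. */
typedef struct {
    uint32_t processId;   /* selects the task's 32 MB MVA slot */
    unsigned domain;      /* domain protecting that slot */
} FcseTask;

/* Register values produced by steps 2 and 3. */
typedef struct {
    uint32_t pidReg;      /* value for CP15:c13:c0 */
    uint32_t dacr;        /* value for CP15:c3:c0 */
} FcseSwitch;

FcseSwitch fcseSwitch(uint32_t dacr, const FcseTask *retiring, const FcseTask *awakening)
{
    FcseSwitch s;
    uint32_t oldShift = 2u * (retiring->domain & 0xFu);
    uint32_t newShift = 2u * (awakening->domain & 0xFu);

    s.pidReg = (awakening->processId & 0x7Fu) << 25;   /* step 2: new process ID */
    dacr &= ~(0x3u << oldShift);                        /* step 3: retiring -> no access (00) */
    dacr &= ~(0x3u << newShift);
    dacr |= 0x1u << newShift;                           /* step 3: awakening -> client (01) */
    s.dacr = dacr;
    return s;
}
```

Switching back simply swaps the two task arguments; because no page tables change, nothing needs to be flushed.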

14.9.2 Hints for Using the FCSE

■ A task has a fixed 32 MB maximum limit on size.

■ The memory manager must use fixed 32 MB partitions with a fixed starting address that is a multiple of 32 MB.

■ Unless you want to manage an exception vector table for each task, place the exception vector table at virtual address 0xffff0000, using the V bit in CP15 register 1.

■ You must define and use an active domain control system.

■ The core fetches the two instructions following a change in process ID from the previous process space, if execution is taking place in the first 32 MB block. Therefore, it is wise to switch tasks from a “fixed” region in memory.

■ If you use domains to control task access, the running task also appears as an alias at VA + (0x2000000 ∗ process ID) in virtual memory.

■ If you use domains to protect tasks from each other, you are limited to a maximum of 16 concurrent tasks, unless you are willing to modify the domain fields in the level 1 page table and flush the TLB on a context switch.

14.12 Summary

The chapter presented the basics of memory management and virtual memory systems. A key service of an MMU is the ability to manage tasks as independent programs running in their own private virtual memory space.

An important feature of a virtual memory system is address relocation. Address relocation is the translation of the address issued by the processor core to a different address in main memory. The translation is done by the MMU hardware.

In a virtual memory system, virtual memory is commonly divided into parts as fixed areas and dynamic areas. In fixed areas the translation data mapped in a page table does not change during normal operation; in dynamic areas the memory mapping between virtual and physical memory frequently changes.

Page tables contain descriptions of virtual page information. A page table entry (PTE) translates a page in virtual memory to a page frame in physical memory. Page table entries are organized by virtual address and contain the translation data to map a page to a page frame.

The functions of an ARM MMU are to:

■ read level 1 and level 2 page tables and load them into the TLB

■ store recent virtual-to-physical memory address translations in the TLB

■ perform virtual-to-physical address translation

■ enforce access permission and configure the cache and write buffer

An additional special feature in an ARM MMU is the Fast Context Switch Extension. The Fast Context Switch Extension improves performance in a multitasking environment because it does not require flushing the caches or TLB during a context switch.

A working example of a small virtual memory system provided in-depth details to set up the MMU to support multitasking. The steps in setting up the demonstration are to define the regions used in the fixed system software of virtual memory, define the virtual memory maps for each task, locate the fixed and task regions into the physical memory map, define and locate the page tables within the page table region, define the data structures needed to create and manage the regions and page tables, initialize the MMU by using the defined region data to create page table entries and write them to the page tables, and establish a context switch procedure to transition from one task to the next.
