For an introduction to KNN (K-Nearest Neighbor), see: http://blog.csdn.net/fengbingchun/article/details/78464169

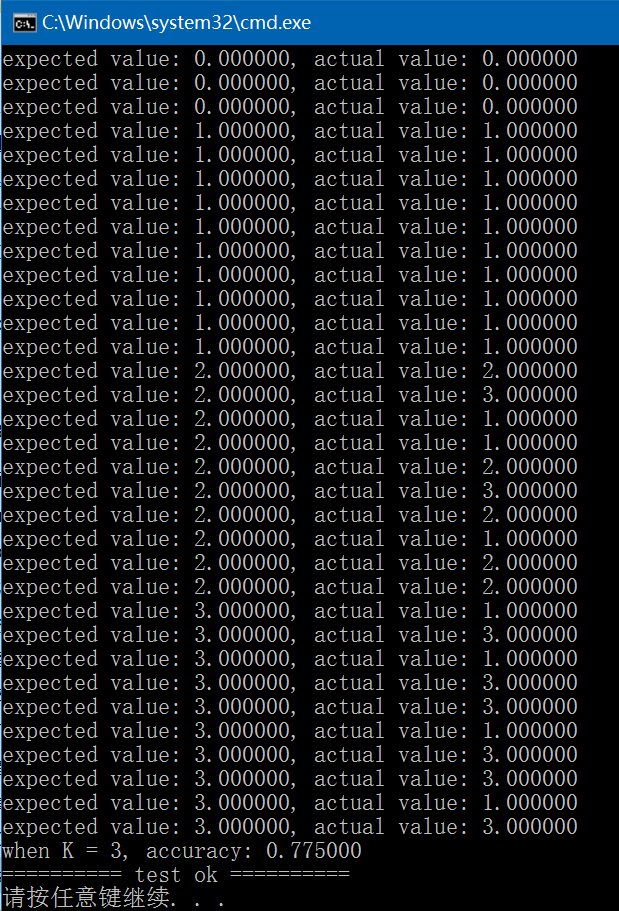

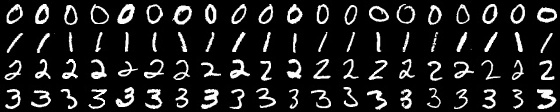

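Below is a C++ implementation of KNN for classification. Both the training data and the test data come from MNIST; for an introduction to MNIST, see: http://blog.csdn.net/fengbingchun/article/details/49611549 . The images extracted from MNIST cover four classes (0, 1, 2 and 3) with 20 images per class, 80 in total. The first 10 images of each class come from the MNIST training set and are used for training (40 images); the last 10 come from the MNIST test set and are used for testing (40 images), as shown in the figure.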
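To make the train/test split concrete, here is a minimal sketch of how the file names and labels line up. It assumes the `<digit>_<index>.jpg` naming pattern that the test code in this post uses; the helper name `list_files` and the `LabeledFile` alias are hypothetical and only serve the illustration.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// One entry per image: file name (relative to the image directory) and class label.
using LabeledFile = std::pair<std::string, int>;

// Lists the files of classes 0..num_classes-1 whose 1-based index lies in [first, last].
// Assumption: files are named "<digit>_<index>.jpg", as in the test code of this post.
std::vector<LabeledFile> list_files(int num_classes, int first, int last)
{
    assert(num_classes > 0 && first >= 1 && first <= last);
    std::vector<LabeledFile> files;
    for (int digit = 0; digit < num_classes; ++digit) {
        for (int index = first; index <= last; ++index) {
            files.emplace_back(std::to_string(digit) + "_" + std::to_string(index) + ".jpg", digit);
        }
    }
    return files;
}
```

Calling `list_files(4, 1, 10)` would enumerate the 40 training images and `list_files(4, 11, 20)` the 40 test images, with all images of one class stored contiguously.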

The implementation is as follows:

knn.hpp:

#ifndef FBC_NN_KNN_HPP_

#define FBC_NN_KNN_HPP_

#include <memory>

#include <vector>

namespace ANN {

template<typename T>

class KNN {

public:

KNN() = default;

void set_k(int k);

int set_train_samples(const std::vector<std::vector<T>>& samples, const std::vector<T>& labels);

int predict(const std::vector<T>& sample, T& result) const;

private:

int k = 3;

int feature_length = 0;

int samples_number = 0;

std::unique_ptr<T[]> samples;

std::unique_ptr<T[]> labels;

};

} // namespace ANN

#endif // FBC_NN_KNN_HPP_

knn.cpp:

#include "knn.hpp"

#include <cstdio>

#include <limits>

#include <algorithm>

#include <functional>

#include "common.hpp"

namespace ANN {

template<typename T>

void KNN<T>::set_k(int k)

{

this->k = k;

}

template<typename T>

int KNN<T>::set_train_samples(const std::vector<std::vector<T>>& samples, const std::vector<T>& labels)

{

// samples[0] is read below, so an empty training set must be rejected as well

CHECK(!samples.empty() && samples.size() == labels.size());

this->samples_number = samples.size();

if (this->k > this->samples_number) this->k = this->samples_number;

this->feature_length = samples[0].size();

this->samples.reset(new T[this->feature_length * this->samples_number]);

this->labels.reset(new T[this->samples_number]);

T* p = this->samples.get();

for (int i = 0; i < this->samples_number; ++i) {

T* q = p + i * this->feature_length;

for (int j = 0; j < this->feature_length; ++j) {

q[j] = samples[i][j];

}

this->labels.get()[i] = labels[i];

}

return 0;

}

template<typename T>

int KNN<T>::predict(const std::vector<T>& sample, T& result) const

{

if (static_cast<int>(sample.size()) != this->feature_length) {

fprintf(stderr, "feature length mismatch: %d, %d\n", static_cast<int>(sample.size()), this->feature_length);

return -1;

}

typedef std::pair<T, T> value;

std::vector<value> info;

for (int i = 0; i < this->k + 1; ++i) {

info.push_back(std::make_pair(std::numeric_limits<T>::max(), (T)-1.));

}

for (int i = 0; i < this->samples_number; ++i) {

T s{ 0. };

const T* p = this->samples.get() + i * this->feature_length;

for (int j = 0; j < this->feature_length; ++j) {

s += (p[j] - sample[j]) * (p[j] - sample[j]);

}

info[this->k] = std::make_pair(s, this->labels.get()[i]);

std::stable_sort(info.begin(), info.end(), [](const std::pair<T, T>& p1, const std::pair<T, T>& p2) {

return p1.first < p2.first; });

}

std::vector<T> vec(this->k);

for (int i = 0; i < this->k; ++i) {

vec[i] = info[i].second;

}

std::sort(vec.begin(), vec.end(), std::greater<T>());

vec.erase(std::unique(vec.begin(), vec.end()), vec.end());

std::vector<std::pair<T, int>> ret;

for (int i = 0; i < vec.size(); ++i) {

ret.push_back(std::make_pair(vec[i], 0));

}

for (int i = 0; i < this->k; ++i) {

for (int j = 0; j < ret.size(); ++j) {

if (info[i].second == ret[j].first) {

++ret[j].second;

break;

}

}

}

int max = -1, index = -1;

for (int i = 0; i < ret.size(); ++i) {

if (ret[i].second > max) {

max = ret[i].second;

index = i;

}

}

result = ret[index].first;

return 0;

}

template class KNN<float>;

template class KNN<double>;

} // namespace ANN

funset.cpp:

#include "funset.hpp"

#include <algorithm>

#include <cstring>

#include <iostream>

#include <string>

#include <vector>

#include "perceptron.hpp"

#include "BP.hpp"

#include "CNN.hpp"

#include "linear_regression.hpp"

#include "naive_bayes_classifier.hpp"

#include "logistic_regression.hpp"

#include "common.hpp"

#include "knn.hpp"

#include <opencv2/opencv.hpp>

// =========================== KNN(K-Nearest Neighbor) ======================

int test_knn_classifier_predict()

{

const std::string image_path{ "E:/GitCode/NN_Test/data/images/digit/handwriting_0_and_1/" };

const int K{ 3 };

cv::Mat tmp = cv::imread(image_path + "0_1.jpg", 0);

CHECK(!tmp.empty()); // the image size below is taken from this first image

const int train_samples_number{ 40 }, predict_samples_number{ 40 };

const int every_class_number{ 10 };

cv::Mat train_data(train_samples_number, tmp.rows * tmp.cols, CV_32FC1);

cv::Mat train_labels(train_samples_number, 1, CV_32FC1);

float* p = (float*)train_labels.data;

for (int i = 0; i < 4; ++i) {

std::for_each(p + i * every_class_number, p + (i + 1)*every_class_number, [i](float& v){v = (float)i; });

}

// train data

for (int i = 0; i < 4; ++i) {

static const std::vector<std::string> digit{ "0_", "1_", "2_", "3_" };

static const std::string suffix{ ".jpg" };

for (int j = 1; j <= every_class_number; ++j) {

std::string image_name = image_path + digit[i] + std::to_string(j) + suffix;

cv::Mat image = cv::imread(image_name, 0);

CHECK(!image.empty() && image.isContinuous());

image.convertTo(image, CV_32FC1);

image = image.reshape(0, 1);

tmp = train_data.rowRange(i * every_class_number + j - 1, i * every_class_number + j);

image.copyTo(tmp);

}

}

ANN::KNN<float> knn;

knn.set_k(K);

std::vector<std::vector<float>> samples(train_samples_number);

std::vector<float> labels(train_samples_number);

const int feature_length{ tmp.rows * tmp.cols };

for (int i = 0; i < train_samples_number; ++i) {

samples[i].resize(feature_length);

const float* p1 = train_data.ptr<float>(i);

float* p2 = samples[i].data();

memcpy(p2, p1, feature_length * sizeof(float));

}

const float* p1 = (const float*)train_labels.data;

float* p2 = labels.data();

memcpy(p2, p1, train_samples_number * sizeof(float));

knn.set_train_samples(samples, labels);

// predict data

cv::Mat predict_data(predict_samples_number, tmp.rows * tmp.cols, CV_32FC1);

for (int i = 0; i < 4; ++i) {

static const std::vector<std::string> digit{ "0_", "1_", "2_", "3_" };

static const std::string suffix{ ".jpg" };

for (int j = 11; j <= every_class_number + 10; ++j) {

std::string image_name = image_path + digit[i] + std::to_string(j) + suffix;

cv::Mat image = cv::imread(image_name, 0);

CHECK(!image.empty() && image.isContinuous());

image.convertTo(image, CV_32FC1);

image = image.reshape(0, 1);

tmp = predict_data.rowRange(i * every_class_number + j - 10 - 1, i * every_class_number + j - 10);

image.copyTo(tmp);

}

}

cv::Mat predict_labels(predict_samples_number, 1, CV_32FC1);

p = (float*)predict_labels.data;

for (int i = 0; i < 4; ++i) {

std::for_each(p + i * every_class_number, p + (i + 1)*every_class_number, [i](float& v){v = (float)i; });

}

std::vector<float> sample(feature_length);

int count{ 0 };

for (int i = 0; i < predict_samples_number; ++i) {

float value1 = ((float*)predict_labels.data)[i];

float value2;

memcpy(sample.data(), predict_data.ptr<float>(i), feature_length * sizeof(float));

CHECK(knn.predict(sample, value2) == 0);

fprintf(stdout, "expected value: %f, actual value: %f\n", value1, value2);

if (int(value1) == int(value2)) ++count;

}

fprintf(stdout, "when K = %d, accuracy: %f\n", K, count * 1.f / predict_samples_number);

return 0;

}
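For readers who want to try the idea without OpenCV or the MNIST images, the following is a minimal, self-contained sketch of the same technique: squared Euclidean distance followed by a majority vote among the k nearest training samples. It is not the class above; the function names `squared_distance` and `knn_predict` are hypothetical, labels are plain `int`, and the k nearest neighbours are selected with `std::partial_sort` instead of re-sorting a buffer of k + 1 entries for every training sample. Ties are resolved toward the smallest label here, which may differ from the behaviour of the class above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

// Squared Euclidean distance; the square root is skipped because it does not change the ranking.
double squared_distance(const std::vector<double>& a, const std::vector<double>& b)
{
    assert(a.size() == b.size());
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) s += (a[i] - b[i]) * (a[i] - b[i]);
    return s;
}

// Returns the majority label among the k training samples closest to query.
// Ties go to the smallest label (an arbitrary choice made for this sketch).
int knn_predict(const std::vector<std::vector<double>>& samples, const std::vector<int>& labels,
                const std::vector<double>& query, std::size_t k)
{
    assert(!samples.empty() && samples.size() == labels.size() && k > 0);
    k = std::min(k, samples.size());  // k cannot exceed the number of training samples

    std::vector<std::pair<double, int>> dist;  // (squared distance, label)
    dist.reserve(samples.size());
    for (std::size_t i = 0; i < samples.size(); ++i)
        dist.emplace_back(squared_distance(samples[i], query), labels[i]);

    // Only the k smallest distances need to be put in order.
    std::partial_sort(dist.begin(), dist.begin() + k, dist.end());

    std::map<int, int> votes;  // label -> number of neighbours with that label
    for (std::size_t i = 0; i < k; ++i) ++votes[dist[i].second];

    int best_label = votes.begin()->first, best_count = 0;
    for (const auto& v : votes) {
        if (v.second > best_count) { best_count = v.second; best_label = v.first; }
    }
    return best_label;
}
```

With two well-separated clusters as training data, a query near the first cluster should be assigned that cluster's label for small k; once k grows to cover the whole training set, the vote only reflects the class frequencies, which is why k is usually kept small relative to the number of samples.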

GitHub: https://github.com/fengbingchun/NN_Test
