Contents

1. Only the versions between 2017 and 2019 (inclusive) are supported!

2. Cannot find cuDNN library. Turning the option off

3. Downloading older versions of libtorch

The accompanying video tutorials:

PyTorch Quick Hands-On Tutorial: 0_PyTorch Hands-On Preface_bilibili

PyTorch Segmentation Hands-On Tutorial: Introducing a handy tool for building image segmentation networks, Segmentation model PyTorch_bilibili

While deploying with C++, once the demo was written, building it produced the following error:

1. Only the versions between 2017 and 2019 (inclusive) are supported!

C:\Qt\Tools\CMake_64\share\cmake-3.21\Modules\CMakeTestCUDACompiler.cmake:56: error: The CUDA compiler "C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.3/bin/nvcc.exe" is not able to compile a simple test program.

It fails with the following output:

Change Dir: D:/AI/Learn/engineer/build-cppdemo-Desktop_Qt_5_15_1_MSVC2019_64bit-Release/CMakeFiles/CMakeTmp

Run Build Command(s):C:/PROGRA~1/MICROS~1/2022/COMMUN~1/Common7/IDE/COMMON~1/MICROS~1/CMake/Ninja/ninja.exe cmTC_b9b7c && [1/2] Building CUDA object CMakeFiles\cmTC_b9b7c.dir\main.cu.obj

FAILED: CMakeFiles/cmTC_b9b7c.dir/main.cu.obj

C:\PROGRA~1\NVIDIA~2\CUDA\v11.3\bin\nvcc.exe -c D:\AI\Learn\engineer\build-cppdemo-Desktop_Qt_5_15_1_MSVC2019_64bit-Release\CMakeFiles\CMakeTmp\main.cu -o CMakeFiles\cmTC_b9b7c.dir\main.cu.obj

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\include\crt/host_config.h(160): fatal error C1189: #error: -- unsupported Microsoft Visual Studio version! Only the versions between 2017 and 2019 (inclusive) are supported! The nvcc flag '-allow-unsupported-compiler' can be used to override this version check; however, using an unsupported host compiler may cause compilation failure or incorrect run time execution. Use at your own risk.

main.cu

ninja: build stopped: subcommand failed.

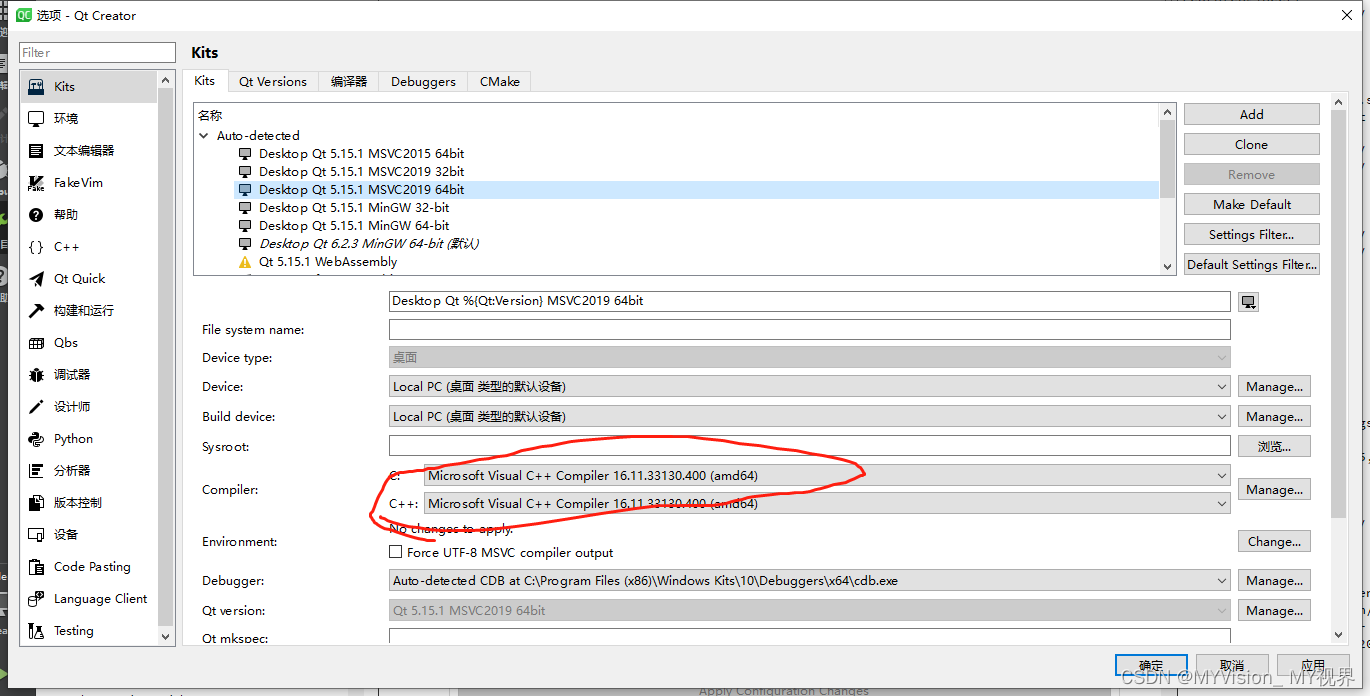

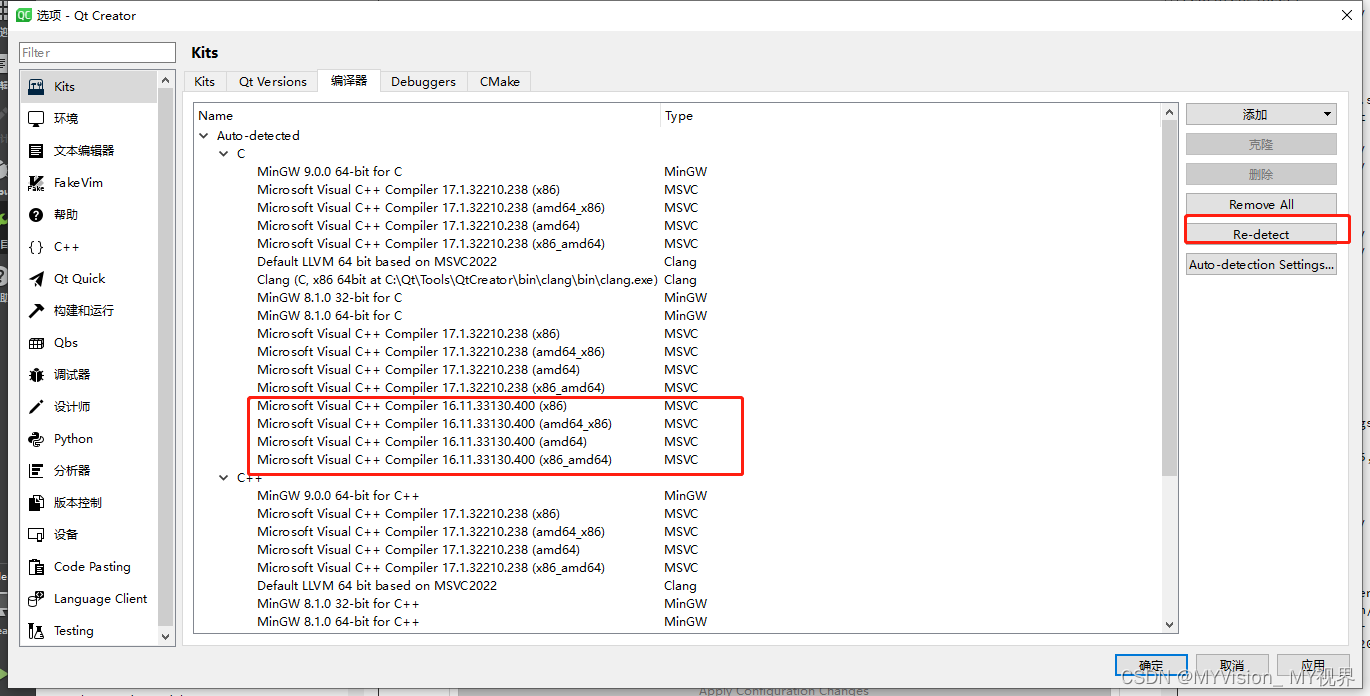

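Roughly, this says that CUDA 11.3 only accepts Visual Studio 2017 through 2019 as the host compiler. I had VS2022 installed locally, so I installed VS2019, reconfigured the Qt kits, and the problem was solved.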

When reconfiguring, click the Re-detect button under the Compilers section so that Qt Creator picks up the newly installed VS2019 compiler.
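The C1189 error comes from a preprocessor check on `_MSC_VER` in `crt/host_config.h` (the log points at line 160). The sketch below mirrors that check so you can see which Visual Studio releases fall inside the accepted window. The bounds used here (1910 inclusive up to 1930 exclusive, i.e. VS2017 and VS2019) are an assumption about CUDA 11.3's header, so compare them with the `host_config.h` in your own toolkit; `nvccAcceptsMsvc` and `visualStudioFor` are hypothetical helper names, not part of CUDA.

```cpp
#include <string_view>

// ASSUMPTION: bounds of the MSVC check in CUDA 11.3's crt/host_config.h.
// _MSC_VER must be >= 1910 (first VS2017 toolset) and < 1930 (first VS2022 toolset).
constexpr int kFirstVs2017MscVer = 1910;
constexpr int kFirstVs2022MscVer = 1930;

// True if nvcc would accept this MSVC version, under the assumed bounds above.
constexpr bool nvccAcceptsMsvc(int mscVer) {
    return mscVer >= kFirstVs2017MscVer && mscVer < kFirstVs2022MscVer;
}

// Maps an _MSC_VER value to the Visual Studio release that ships that toolset.
constexpr std::string_view visualStudioFor(int mscVer) {
    if (mscVer >= 1930) return "VS2022 or newer";
    if (mscVer >= 1920) return "VS2019";
    if (mscVer >= 1910) return "VS2017";
    return "VS2015 or older";
}
```

VS2022 reports `_MSC_VER` values from 1930 upward, which is why nvcc rejected it here. In a source file built by MSVC, something like `static_assert(nvccAcceptsMsvc(_MSC_VER), "use VS2017 or VS2019")` would surface the mismatch as an earlier, more readable compile error.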

Running CMake again, it still failed. The log is as follows:

2. Cannot find cuDNN library. Turning the option off

Running C:\Qt\Tools\CMake_64\bin\cmake.exe -S D:/AI/Learn/engineer/cppdemo -B D:/AI/Learn/engineer/build-cppdemo-Desktop_Qt_5_15_1_MSVC2019_64bit-Release in D:\AI\Learn\engineer\build-cppdemo-Desktop_Qt_5_15_1_MSVC2019_64bit-Release.

-- Caffe2: CUDA detected: 11.3

-- Caffe2: CUDA nvcc is: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.3/bin/nvcc.exe

-- Caffe2: CUDA toolkit directory: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.3

-- Caffe2: Header version is: 11.3

-- Could NOT find CUDNN (missing: CUDNN_LIBRARY_PATH CUDNN_INCLUDE_PATH)

CMake Warning at D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Caffe2/public/cuda.cmake:120 (message):

Caffe2: Cannot find cuDNN library. Turning the option off

Call Stack (most recent call first):

D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Caffe2/Caffe2Config.cmake:92 (include)

D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Torch/TorchConfig.cmake:68 (find_package)

CMakeLists.txt:10 (find_package)

CMake Warning at D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Caffe2/public/cuda.cmake:214 (message):

Failed to compute shorthash for libnvrtc.so

Call Stack (most recent call first):

D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Caffe2/Caffe2Config.cmake:92 (include)

D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Torch/TorchConfig.cmake:68 (find_package)

CMakeLists.txt:10 (find_package)

-- Autodetected CUDA architecture(s): 7.5

-- Added CUDA NVCC flags for: -gencode;arch=compute_75,code=sm_75

CMake Error at D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Caffe2/Caffe2Config.cmake:100 (message):

Your installed Caffe2 version uses cuDNN but I cannot find the cuDNN

libraries. Please set the proper cuDNN prefixes and / or install cuDNN.

Call Stack (most recent call first):

D:/AI/Learn/engineer/libtorch_win1_13_1_gpu/share/cmake/Torch/TorchConfig.cmake:68 (find_package)

CMakeLists.txt:10 (find_package)

-- Configuring incomplete, errors occurred!

See also "D:/AI/Learn/engineer/build-cppdemo-Desktop_Qt_5_15_1_MSVC2019_64bit-Release/CMakeFiles/CMakeOutput.log".

See also "D:/AI/Learn/engineer/build-cppdemo-Desktop_Qt_5_15_1_MSVC2019_64bit-Release/CMakeFiles/CMakeError.log".

CMake process exited with exit code 1.

Elapsed time: 00:04.

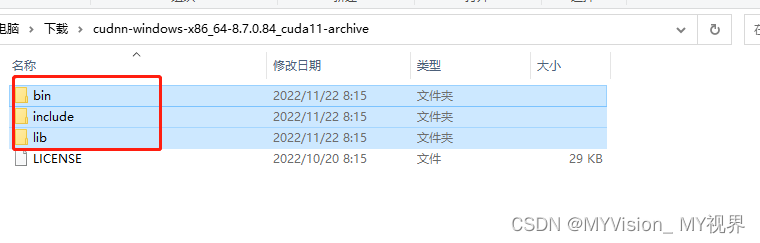

The message says cuDNN is not installed, so let's just download it from here:

cuDNN Download | NVIDIA Developer

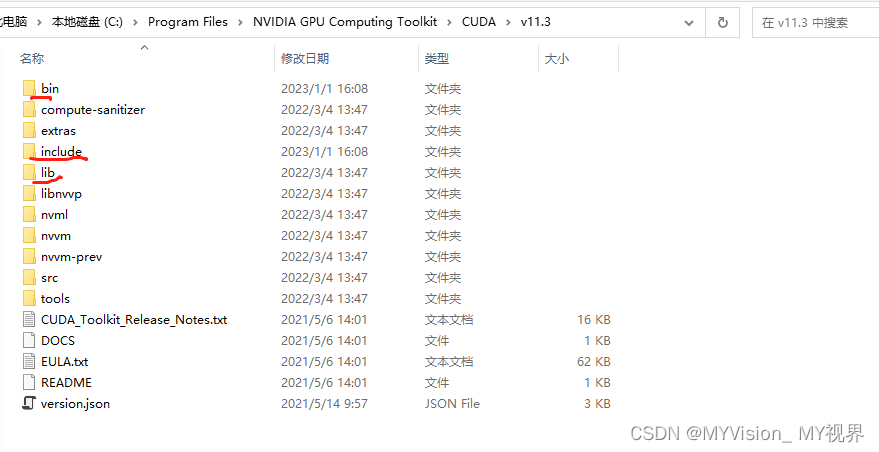

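Pick the cuDNN build that matches your CUDA version, download it, and copy its files into the CUDA Toolkit directory (here C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.3, as shown in the log above).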

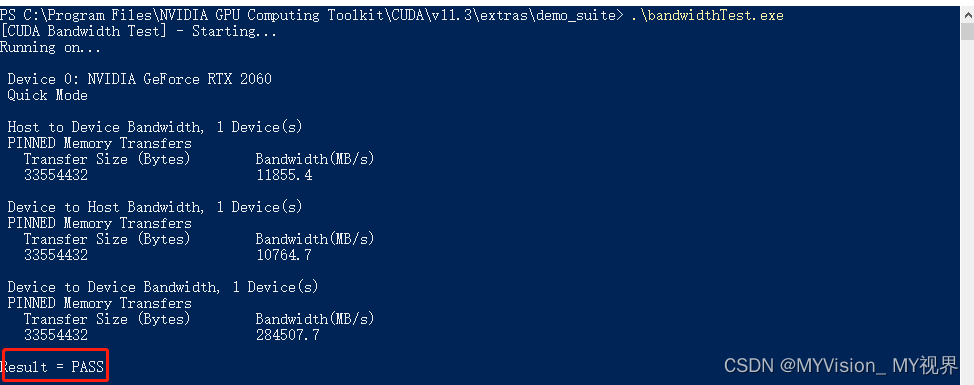

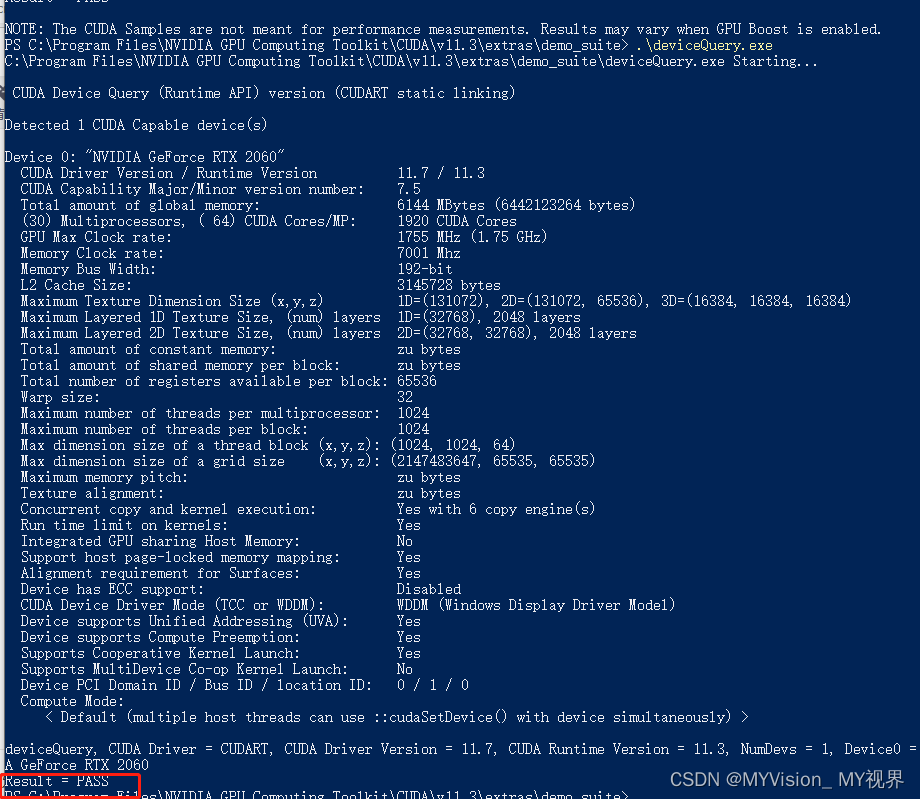
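CMake's complaint was that it could not locate `CUDNN_LIBRARY_PATH` and `CUDNN_INCLUDE_PATH`, so before re-running it you can check that the copied files ended up where a Windows CUDA Toolkit keeps its headers and import libraries. This is a minimal sketch with hypothetical helpers (`missingCudnnFiles` and `cudnnMajorVersion` are not from any library). It assumes the cuDNN 8.x Windows layout, with `cudnn.h` under `include/`, `cudnn.lib` under `lib/x64/`, and the version macros in `include/cudnn_version.h`; other cuDNN releases may arrange files differently.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <optional>
#include <sstream>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Files this sketch expects after copying cuDNN into the CUDA Toolkit root.
// ASSUMPTION: the Windows layout of a cuDNN 8.x archive (include/, lib/x64/).
const std::vector<std::string> kExpectedCudnnFiles = {
    "include/cudnn.h",
    "lib/x64/cudnn.lib",
};

// Returns the expected files that are NOT present under cudaRoot.
// An empty result means the header and import library are in place.
std::vector<std::string> missingCudnnFiles(const fs::path& cudaRoot) {
    assert(!cudaRoot.empty() && "pass the CUDA Toolkit root directory");
    std::vector<std::string> missing;
    for (const auto& rel : kExpectedCudnnFiles) {
        if (!fs::is_regular_file(cudaRoot / rel)) {
            missing.push_back(rel);
        }
    }
    return missing;
}

// Scans a header for "#define CUDNN_MAJOR <n>" and returns <n> if found.
// cuDNN 8 keeps its version macros in include/cudnn_version.h.
std::optional<int> cudnnMajorVersion(const fs::path& versionHeader) {
    std::ifstream in(versionHeader);
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream tokens(line);
        std::string directive, name;
        int value = 0;
        if (tokens >> directive >> name >> value &&
            directive == "#define" && name == "CUDNN_MAJOR") {
            return value;
        }
    }
    return std::nullopt;  // file missing or macro not found
}
```

For the setup in this post you would pass `C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.3` as the root; a non-empty result lists exactly which expected files are still absent.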

After copying, verify that the installation succeeded.

If two PASS results appear, the installation succeeded; then re-run CMake for the demo program.


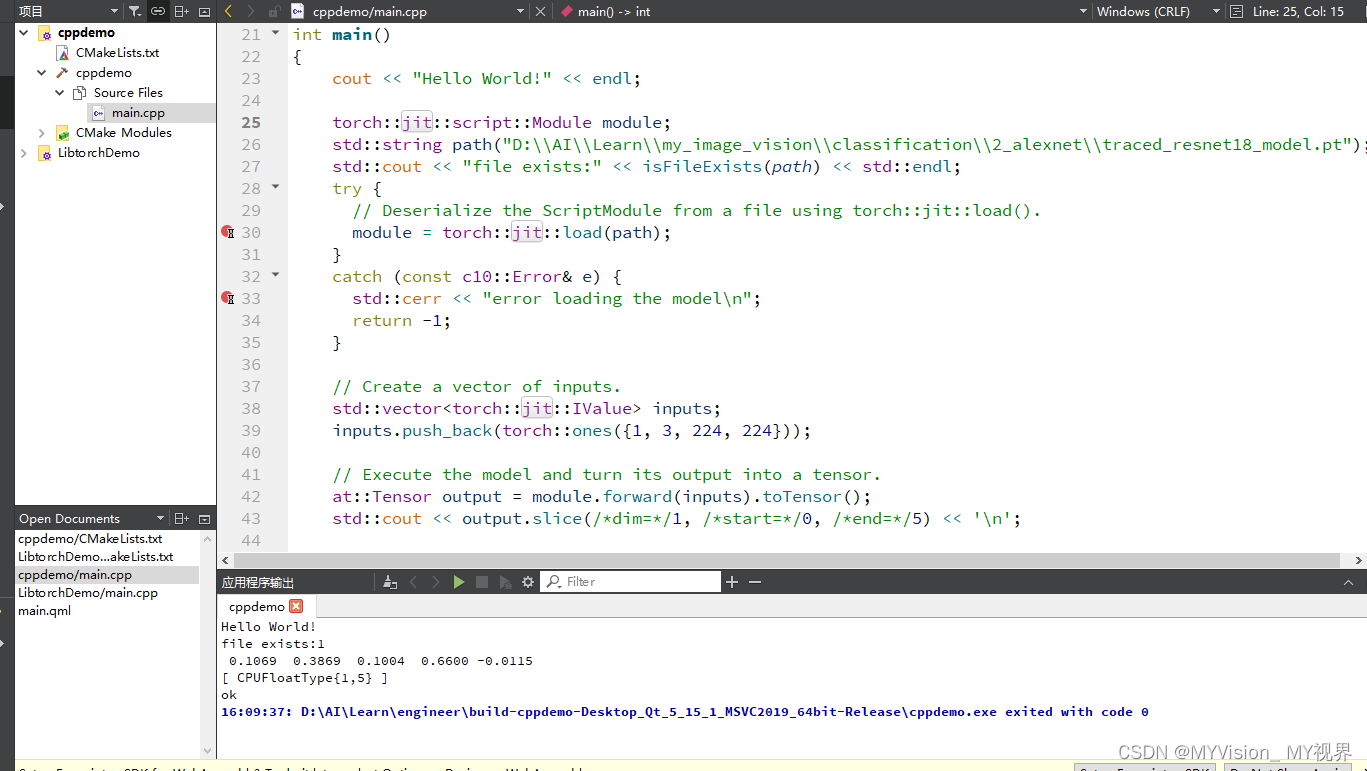

It now runs normally!

3. Downloading older versions of libtorch

how could i get old version of libtorch , thanks · Issue #40961 · pytorch/pytorch · GitHub
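The linked issue is about fetching older libtorch builds. As a hedged sketch, the helper below assembles a download URL for a Windows libtorch archive from a version string and a variant tag. The URL pattern is an assumption based on the pattern PyTorch has used for its Windows libtorch archives; neither this post nor the issue confirms it, so check any generated link against PyTorch's official previous-versions listing. `libtorchWindowsUrl` is a hypothetical name, and the `cu117` tag below is only illustrative: choose the tag that matches your own CUDA installation.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds a download URL for an older Windows libtorch archive.
// ASSUMPTION: the URL pattern below matches what download.pytorch.org serves;
// verify it on the official previous-versions page before relying on it.
//   version  e.g. "1.13.1"
//   variant  "cpu" or a CUDA tag such as "cu117"
//   debug    true for the Debug build, false for Release
std::string libtorchWindowsUrl(const std::string& version,
                               const std::string& variant,
                               bool debug) {
    assert(!version.empty() && !variant.empty());
    std::string url = "https://download.pytorch.org/libtorch/" + variant +
                      "/libtorch-win-shared-with-deps-";
    if (debug) {
        url += "debug-";
    }
    // "%2B" is the URL-encoded '+' of a local version tag such as 1.13.1+cu117.
    url += version + "%2B" + variant + ".zip";
    return url;
}
```

Under that assumed pattern, `libtorchWindowsUrl("1.13.1", "cu117", false)` yields `https://download.pytorch.org/libtorch/cu117/libtorch-win-shared-with-deps-1.13.1%2Bcu117.zip`. Keeping Release and Debug as a flag reflects that MSVC projects generally need the libtorch build matching their own configuration.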

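This article covers two problems hit while deploying PyTorch with C++: a CUDA/Visual Studio version incompatibility (CUDA only supporting VS2017 through VS2019) and a missing cuDNN library. Installing VS2019 and reconfiguring the Qt kits fixed the CUDA version problem; downloading cuDNN and placing its files correctly fixed the missing-library problem, after which the project ran successfully.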
