1. Extracting dataset information

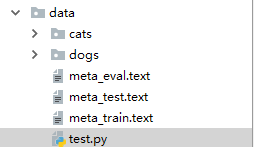

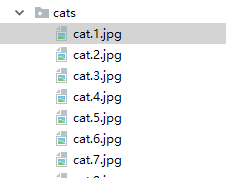

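Directory structure: one subfolder per class under `D:\python\new_test\data` (for example `cats\cat.3219.jpg` and `dogs\dog.832.jpg`).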

```python
import os
from random import shuffle

# 1. Collect every image under the dataset root (all class subfolders)
file_name = r'D:\python\new_test\data'

def get_img_file(root):
    # os.walk already descends into every subfolder, so no manual recursion
    # is needed (recursing here as well would add each image more than once)
    imagelist = []
    for parent, dirnames, filenames in os.walk(root):
        for filename in filenames:
            if filename.lower().endswith(('.bmp', '.png', '.jpg', '.jpeg')):
                imagelist.append(os.path.join(parent, filename))
    return imagelist

image_list = get_img_file(file_name)
# print(len(image_list))
# print(image_list[0])

# 2. Split the dataset
name_map_tag_id = {'cat': 0, 'dog': 1}

def get_tag_id(path):
    # D:\python\new_test\data\cats\cat.1.jpg -> "cat" -> 0
    tag_name = os.path.basename(path).split('.')[0]
    return name_map_tag_id[tag_name]

# Group the image paths by class id
meta_dict = {}
for path in image_list:
    meta_dict.setdefault(get_tag_id(path), []).append(path)

# Split ratios
train_ratio, eval_ratio, test_ratio = 0.8, 0.1, 0.1

train_set, eval_set, test_set = [], [], []
for _, set_list in meta_dict.items():
    # Split each class separately so every subset keeps the class balance
    length = len(set_list)
    train_num, eval_num = int(length * train_ratio), int(length * eval_ratio)
    # The remaining length - train_num - eval_num images go to the test set
    shuffle(set_list)
    train_set.extend(set_list[:train_num])
    eval_set.extend(set_list[train_num:train_num + eval_num])
    test_set.extend(set_list[train_num + eval_num:])

shuffle(train_set)
shuffle(eval_set)
shuffle(test_set)

# 3. Write the dataset meta files, one "tag_id|image_path" line per image
#    (the files are created in the current working directory)
for meta_path, subset in (("meta_train.text", train_set),
                          ("meta_eval.text", eval_set),
                          ("meta_test.text", test_set)):
    with open(meta_path, 'w') as f:
        for path in subset:
            f.write("%d|%s\n" % (get_tag_id(path), path))
```
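The per-class split in step 2 can be packaged as a reusable function. This is a minimal sketch with a hypothetical helper, `split_per_class`, that takes the same `meta_dict` structure (class id to list of paths); the `seed` parameter is an addition not found in the original script, included so the split can be reproduced.

```python
import random

def split_per_class(meta_dict, train_ratio=0.8, eval_ratio=0.1, seed=0):
    """Hypothetical helper: stratified train/eval/test split.

    meta_dict maps class id -> list of image paths. Each class is split
    separately, so all three subsets keep roughly the same class balance.
    """
    rng = random.Random(seed)  # private RNG: same seed -> same split
    train_set, eval_set, test_set = [], [], []
    for tag_id in sorted(meta_dict):
        paths = list(meta_dict[tag_id])  # copy so the caller's list is untouched
        rng.shuffle(paths)
        train_num = int(len(paths) * train_ratio)
        eval_num = int(len(paths) * eval_ratio)
        train_set.extend(paths[:train_num])
        eval_set.extend(paths[train_num:train_num + eval_num])
        test_set.extend(paths[train_num + eval_num:])  # remainder goes to test
    for subset in (train_set, eval_set, test_set):
        rng.shuffle(subset)  # mix the classes inside each subset
    return train_set, eval_set, test_set

if __name__ == "__main__":
    demo = {0: ["cat.%d.jpg" % i for i in range(10)],
            1: ["dog.%d.jpg" % i for i in range(10)]}
    tr, ev, te = split_per_class(demo)
    print(len(tr), len(ev), len(te))
```

With 10 images per class and the 0.8/0.1/0.1 ratios, each class contributes 8/1/1 images, giving subsets of 16, 2 and 2.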

Resulting meta file, `meta_train.text`:

```text
0|D:\python\new_test\data\cats\cat.3219.jpg
0|D:\python\new_test\data\cats\cat.1434.jpg
1|D:\python\new_test\data\dogs\dog.2697.jpg
1|D:\python\new_test\data\dogs\dog.832.jpg
1|D:\python\new_test\data\dogs\dog.1512.jpg
1|D:\python\new_test\data\dogs\dog.3539.jpg
0|D:\python\new_test\data\cats\cat.2974.jpg
0|D:\python\new_test\data\cats\cat.3891.jpg
0|D:\python\new_test\data\cats\cat.3229.jpg
0|D:\python\new_test\data\cats\cat.3360.jpg
1|D:\python\new_test\data\dogs\dog.3962.jpg
0|D:\python\new_test\data\cats\cat.3430.jpg
1|D:\python\new_test\data\dogs\dog.2062.jpg
1|D:\python\new_test\data\dogs\dog.2324.jpg
1|D:\python\new_test\data\dogs\dog.89.jpg
0|D:\python\new_test\data\cats\cat.193.jpg
0|D:\python\new_test\data\cats\cat.1630.jpg
0|D:\python\new_test\data\cats\cat.664.jpg
```

Here 0 means cat and 1 means dog.
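Each meta line has the form `tag_id|image_path`, and the tag is taken from the file-name prefix (`cat.3219.jpg` gives `cat`). The sketch below shows both directions using the hypothetical helpers `make_meta_line` and `parse_meta_line`. It uses `ntpath` so that Windows-style paths like the ones above are split correctly even on Linux; `NAME_MAP_TAG_ID` is assumed to mirror `name_map_tag_id` from the script.

```python
import ntpath

NAME_MAP_TAG_ID = {'cat': 0, 'dog': 1}  # assumed to mirror name_map_tag_id above

def make_meta_line(path):
    """Hypothetical helper: build one 'tag_id|path' meta line from an image path."""
    tag_name = ntpath.basename(path).split('.')[0]  # 'cat.3219.jpg' -> 'cat'
    return "%d|%s" % (NAME_MAP_TAG_ID[tag_name], path)

def parse_meta_line(line):
    """Hypothetical helper: split a meta line back into (tag_id, path)."""
    tag, path = line.strip().split('|', 1)  # split only on the first '|'
    return int(tag), path
```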

2. Data preprocessing and loading

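```python
import torchvision.transforms as T
from torch.utils import data
from PIL import Image

# Data augmentation. Note: the random transforms are only meant for training;
# evaluation/test data normally needs just Resize + ToTensor + Normalize.
# The augmentations run on the PIL image, before ToTensor and Normalize.
trans = T.Compose([
    T.Resize((224, 224)),           # give every input image the same size
    # T.RandomResizedCrop(224),     # random crop
    T.RandomRotation(degrees=45),   # random rotation within +/-45 degrees
    T.RandomAffine(45),             # random affine transform (here only rotation)
    T.RandomHorizontalFlip(),       # random left-right flip
    T.GaussianBlur(kernel_size=3),  # Gaussian blur
    T.ToTensor(),                   # required: PIL image -> float tensor in [0, 1]
    T.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),  # per-channel standardization
])

# Load the metadata: one [tag, image_path] pair per non-empty line
def load_meta(meta_data_path):
    with open(meta_data_path, 'r') as f:
        return [line.strip().split('|', 1) for line in f if line.strip()]

# Read one image; convert('RGB') guarantees 3 channels, since grayscale or
# RGBA files would not match the 3-channel Normalize above
def load_image(image_path):
    return Image.open(image_path).convert('RGB')

# Dataset class
class TestDataset(data.Dataset):  # subclass Dataset and implement the methods below
    def __init__(self, meta_data_path):
        # [['0', image_path], ['1', image_path], ...]
        self.dataset = load_meta(meta_data_path)

    def __getitem__(self, index):
        item = self.dataset[index]
        tag, image_path = int(item[0]), item[1]  # class id, image path
        image = load_image(image_path)
        return trans(image), tag

    def __len__(self):  # number of samples in the dataset
        return len(self.dataset)
```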
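`ToTensor` scales 8-bit pixel values into [0, 1], and `Normalize(mean, std)` then computes `(x - mean) / std` for each channel. The pure-Python sketch below reproduces that arithmetic for a single RGB pixel, together with the inverse, which is handy when displaying augmented images. The helper names are hypothetical, and no torchvision is needed.

```python
MEAN = (0.485, 0.456, 0.406)  # channel means used in trans above
STD = (0.229, 0.224, 0.225)   # channel standard deviations used in trans above

def normalize_pixel(rgb):
    """Hypothetical helper: ToTensor + Normalize applied to one 8-bit RGB pixel."""
    return tuple((v / 255.0 - m) / s for v, m, s in zip(rgb, MEAN, STD))

def denormalize_pixel(values):
    """Hypothetical helper: undo Normalize + ToTensor, back to 0-255 integers."""
    return tuple(round((x * s + m) * 255.0) for x, m, s in zip(values, MEAN, STD))

if __name__ == "__main__":
    white = normalize_pixel((255, 255, 255))
    print([round(x, 4) for x in white])
```

A white pixel therefore maps to values above 2 and a black one to values around -2, not to the [0, 1] range of the raw tensor.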

Test 1:

```python
if __name__ == '__main__':
    trainset = TestDataset(meta_data_path=r"D:\python\new_test\data\meta_train.text")
    train_loader = data.DataLoader(trainset, batch_size=64, shuffle=True, drop_last=True)

    # train_loader yields batch_size (64) samples at a time
    for batch in train_loader:  # each batch is [images, tags] covering 64 samples
        images, tags = batch  # images: tensor [64, 3, 224, 224]; tags: tensor [64]
        print("images:", len(images))  # 64
        print("tags", len(tags))        # 64
        img = images[0]
        print("img:", type(img))
        print("tag:", tags[0])
        break
```

Output:

```text
images: 64
tags 64
img: <class 'torch.Tensor'>
tag: tensor(1)
```
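With `batch_size=64` and `drop_last=True`, every batch holds exactly 64 samples and a final partial batch is discarded, which is why both lengths print 64. The number of batches per epoch follows from integer division. The sketch below uses hypothetical helpers (`num_batches`, `batch_indices`) that mirror `len(train_loader)` for the default samplers; they are not the DataLoader's own code.

```python
def num_batches(num_samples, batch_size, drop_last):
    """Hypothetical helper: batches per epoch for a DataLoader-like batcher."""
    if drop_last:
        return num_samples // batch_size      # the incomplete last batch is dropped
    return -(-num_samples // batch_size)      # ceiling division keeps it

def batch_indices(num_samples, batch_size, drop_last):
    """Hypothetical helper: yield lists of sample indices, one per batch (no shuffling)."""
    for start in range(0, num_samples, batch_size):
        batch = list(range(start, min(start + batch_size, num_samples)))
        if drop_last and len(batch) < batch_size:
            return  # stop before yielding the short final batch
        yield batch
```

For example, 100 samples give one batch of 64 with `drop_last=True`, but batches of 64 and 36 without it.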

Test 2: display a batch with matplotlib

```python
if __name__ == '__main__':
    import matplotlib.pyplot as plt
    import torchvision.utils as vutils

    trainset = TestDataset(meta_data_path=r"D:\python\new_test\data\meta_train.text")
    train_loader = data.DataLoader(trainset, batch_size=64, shuffle=True, drop_last=True)

    real_batch = next(iter(train_loader))  # take a single batch: [images, tags]

    # Tile the 64 images into one grid; normalize=True rescales the values to
    # [0, 1] using their min and max, so the standardized images display correctly
    grid = vutils.make_grid(real_batch[0][:64], padding=2, normalize=True)

    plt.figure(figsize=(8, 8))
    plt.axis("off")
    plt.title("Training Images")
    plt.imshow(grid.permute(1, 2, 0).numpy())  # CHW -> HWC, as imshow expects
    plt.show()
```

Output: a figure titled "Training Images" that shows the 64 images of the batch in an 8×8 grid.

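`make_grid` arranges the batch in rows of `nrow` images (default 8) and puts `padding` pixels around each tile. As a result, 64 images of size 3×224×224 become a single 3×1810×1810 tensor, and `permute(1, 2, 0)` turns that into the height×width×channel layout that `imshow` expects. The sketch below reproduces the size arithmetic in plain Python. `grid_shape` and `chw_to_hwc` are hypothetical helpers, not torchvision functions.

```python
import math

def grid_shape(num_images, channels, height, width, nrow=8, padding=2):
    """Hypothetical helper: (C, H, W) shape of a make_grid-style tiling."""
    cols = min(nrow, num_images)                        # images per row
    rows = math.ceil(num_images / cols)                 # rows needed
    cell_h, cell_w = height + padding, width + padding  # tile plus its padding
    return (channels, rows * cell_h + padding, cols * cell_w + padding)

def chw_to_hwc(shape):
    """Reorder a (C, H, W) shape the way permute(1, 2, 0) reorders the tensor."""
    c, h, w = shape
    return (h, w, c)
```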
