Single-Person Pose Estimation with OpenPose in C++: Code Implementation

flyfish

Runtime environment

Ubuntu 18.04

Qt 5.12

OpenCV 4.2.0

Inference uses the OpenCV DNN module

Downloading the pretrained models

COCO model (18 parts)

http://posefs1.perception.cs.cmu.edu/OpenPose/models/pose/coco/pose_iter_440000.caffemodel

https://raw.githubusercontent.com/opencv/opencv_extra/master/testdata/dnn/openpose_pose_coco.prototxt

MPI model (16 parts, counting the background):

http://posefs1.perception.cs.cmu.edu/OpenPose/models/pose/mpi/pose_iter_160000.caffemodel

https://raw.githubusercontent.com/opencv/opencv_extra/master/testdata/dnn/openpose_pose_mpi_faster_4_stages.prototxt

Hand model

http://posefs1.perception.cs.cmu.edu/OpenPose/models/hand/pose_iter_102000.caffemodel

https://raw.githubusercontent.com/CMU-Perceptual-Computing-Lab/openpose/master/models/hand/pose_deploy.prototxt

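Different models produce different outputs.

Taking COCO as the example, the output has 18 parts.

Only the COCO model is downloaded here.

Configuration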

cv::String modelTxt ="openpose_pose_coco.prototxt";

cv::String modelBin = "pose_iter_440000.caffemodel";

cv::String imageFile = "1.jpg";

cv::String dataset = "COCO";

int W_in = 368;

int H_in = 368;

float thresh = 0.1f;

float scale = 0.003922f;

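Preparing the network input

Convert the image from OpenCV format to Caffe blob format.

Pixel values are normalized to the range [0, 1] (the scale 0.003922 is approximately 1/255).

The blob is resized to the dimensions W_in, H_in.

The mean value subtracted is (0,0,0). Because OpenCV and Caffe both use BGR channel order, the R and B channels do not need to be swapped.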

Mat img = imread(imageFile);

Mat inputBlob = blobFromImage(img, scale, Size(W_in, H_in), Scalar(0, 0, 0), false, false);

Parsing keypoints from the output

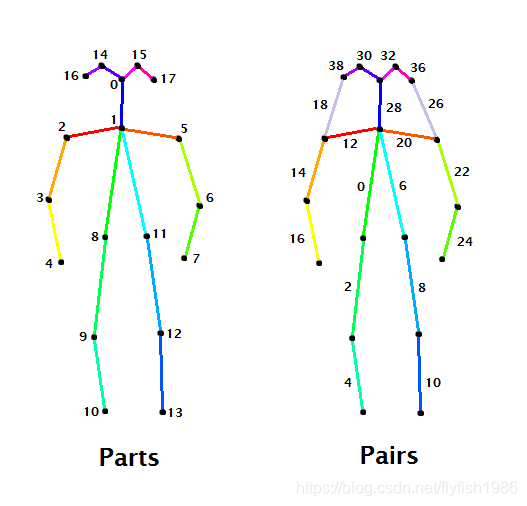

Part and pair indices for the COCO dataset

COCO output format

Nose – 0,

Neck – 1,

Right Shoulder – 2,

Right Elbow – 3,

Right Wrist – 4,

Left Shoulder – 5,

Left Elbow – 6,

Left Wrist – 7,

Right Hip – 8,

Right Knee – 9,

Right Ankle – 10,

Left Hip – 11,

Left Knee – 12,

Left Ankle – 13,

Right Eye – 14,

Left Eye – 15,

Right Ear – 16,

Left Ear – 17,

Background – 18

MPII output format

Head – 0,

Neck – 1,

Right Shoulder – 2,

Right Elbow – 3,

Right Wrist – 4,

Left Shoulder – 5,

Left Elbow – 6,

Left Wrist – 7,

Right Hip – 8,

Right Knee – 9,

Right Ankle – 10,

Left Hip – 11,

Left Knee – 12,

Left Ankle – 13,

Chest – 14,

Background – 15
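When debugging, it can help to print part names instead of bare indices. The following is a small sketch that copies the two index lists above into lookup tables. The names COCO_PART_NAMES, MPI_PART_NAMES and partName are hypothetical and do not appear in the OpenCV sample.

```cpp
#include <string>

// Part names in index order, copied from the COCO and MPII lists above.
const char* const COCO_PART_NAMES[19] = {
    "Nose", "Neck", "Right Shoulder", "Right Elbow", "Right Wrist",
    "Left Shoulder", "Left Elbow", "Left Wrist", "Right Hip", "Right Knee",
    "Right Ankle", "Left Hip", "Left Knee", "Left Ankle", "Right Eye",
    "Left Eye", "Right Ear", "Left Ear", "Background"
};

const char* const MPI_PART_NAMES[16] = {
    "Head", "Neck", "Right Shoulder", "Right Elbow", "Right Wrist",
    "Left Shoulder", "Left Elbow", "Left Wrist", "Right Hip", "Right Knee",
    "Right Ankle", "Left Hip", "Left Knee", "Left Ankle", "Chest",
    "Background"
};

// Hypothetical helper: returns the part name for a dataset ("COCO" or "MPI"),
// or "Unknown" for an unsupported dataset or an out-of-range index.
inline std::string partName(const std::string& dataset, int index)
{
    if (dataset == "COCO" && index >= 0 && index < 19)
        return COCO_PART_NAMES[index];
    if (dataset == "MPI" && index >= 0 && index < 16)
        return MPI_PART_NAMES[index];
    return "Unknown";
}
```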

Mat result = net.forward();

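How to parse result

The output is a 4-D matrix:

The first dimension is the image ID (the index within the batch).

The second dimension is the index of the keypoint.

The model produces confidence maps and Part Affinity maps, which are concatenated along this dimension.

For the COCO model, this dimension has 57 entries:

18 keypoint confidence maps + 1 background map + 19*2 Part Affinity Maps.

18+1+38=57

The third dimension is the height of the output map.

The fourth dimension is the width of the output map.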
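The layout described above can be sketched in plain C++. The snippet assumes the output is stored as one contiguous, row-major N x C x H x W float buffer, and the names below (kCocoChannels, nchwOffset) are hypothetical. The 46 x 46 map size used in the usage note is only an illustrative value.

```cpp
#include <cstddef>

// Channel counts of the COCO output, as listed above.
constexpr int kCocoKeypoints   = 18;
constexpr int kCocoBackground  = 1;
constexpr int kCocoPafChannels = 19 * 2;
constexpr int kCocoChannels    = kCocoKeypoints + kCocoBackground + kCocoPafChannels;
static_assert(kCocoChannels == 57, "COCO output should have 57 channels");

// Hypothetical helper: offset of element (n, c, y, x) in a contiguous
// row-major buffer with C channels, H rows and W columns per image.
inline std::size_t nchwOffset(int n, int c, int y, int x, int C, int H, int W)
{
    return ((static_cast<std::size_t>(n) * C + c) * H + y) * W + x;
}
```

For example, with C = 57 and an assumed 46 x 46 map, channel 1 of image 0 starts at offset 46 * 46 = 2116. This is the same idea as taking a pointer to the start of channel n and treating it as an H x W heatmap.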

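The location of a keypoint is obtained by finding the maximum of its confidence map; a threshold is applied to reduce false detections.

The code below contains the comment "Slice heatmap of corresponding body's part."

The sliced heatmap is easiest to understand as a probability map for that part.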
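The peak search with a threshold can be sketched without OpenCV. This is a simplified stand-in for what the full code does with minMaxLoc, not the OpenCV implementation itself. The Peak struct and findPeak function are hypothetical names, and the heatmap is assumed to be a row-major vector of H * W floats.

```cpp
#include <cstddef>
#include <vector>

// Location and confidence of the strongest response in one heatmap.
// x == -1 and y == -1 mean "no keypoint found".
struct Peak { int x; int y; float conf; };

// Hypothetical helper: scans a row-major heatmap of width W (the vector is
// assumed to hold H * W values) and returns its global maximum. The location
// is reported only if the maximum is strictly greater than thresh.
inline Peak findPeak(const std::vector<float>& heatMap, int W, float thresh)
{
    Peak best = Peak{-1, -1, 0.0f};
    if (heatMap.empty() || W <= 0)
        return best;

    std::size_t maxIdx = 0;
    for (std::size_t i = 1; i < heatMap.size(); ++i)
        if (heatMap[i] > heatMap[maxIdx])
            maxIdx = i;

    best.conf = heatMap[maxIdx];
    if (best.conf > thresh)
    {
        best.x = static_cast<int>(maxIdx % W);  // column
        best.y = static_cast<int>(maxIdx / W);  // row
    }
    return best;
}
```

Because only one maximum is taken per heatmap, this approach yields at most one location per part, which is why it suits images containing a single person.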

Full code (based on the OpenPose sample that ships with OpenCV, with modifications)

.pro file

QT -= gui

CONFIG += c++11 console

CONFIG -= app_bundle

# The following define makes your compiler emit warnings if you use

# any Qt feature that has been marked deprecated (the exact warnings

# depend on your compiler). Please consult the documentation of the

# deprecated API in order to know how to port your code away from it.

DEFINES += QT_DEPRECATED_WARNINGS

# You can also make your code fail to compile if it uses deprecated APIs.

# In order to do so, uncomment the following line.

# You can also select to disable deprecated APIs only up to a certain version of Qt.

#DEFINES += QT_DISABLE_DEPRECATED_BEFORE=0x060000 # disables all the APIs deprecated before Qt 6.0.0

SOURCES += \
        main.cpp

# Default rules for deployment.

qnx: target.path = /tmp/$${TARGET}/bin

else: unix:!android: target.path = /opt/$${TARGET}/bin

!isEmpty(target.path): INSTALLS += target

INCLUDEPATH += /usr/local/include \
        /usr/local/include/opencv4 \
        /usr/local/include/opencv4/opencv2

LIBS += /usr/local/lib/libopencv_calib3d.so \
        /usr/local/lib/libopencv_core.so \
        /usr/local/lib/libopencv_highgui.so \
        /usr/local/lib/libopencv_imgproc.so \
        /usr/local/lib/libopencv_imgcodecs.so \
        /usr/local/lib/libopencv_objdetect.so \
        /usr/local/lib/libopencv_photo.so \
        /usr/local/lib/libopencv_dnn.so \
        /usr/local/lib/libopencv_features2d.so \
        /usr/local/lib/libopencv_stitching.so \
        /usr/local/lib/libopencv_flann.so \
        /usr/local/lib/libopencv_videoio.so \
        /usr/local/lib/libopencv_video.so \
        /usr/local/lib/libopencv_ml.so

main.cpp file

#include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

using namespace cv;

using namespace cv::dnn;

#include <iostream>

using namespace std;

// connection table, in the format [model_id][pair_id][from/to]

// please look at the nice explanation at the bottom of:

// https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/output.md

//

const int POSE_PAIRS[3][20][2] = {

{ // COCO body

{1,2}, {1,5}, {2,3},

{3,4}, {5,6}, {6,7},

{1,8}, {8,9}, {9,10},

{1,11}, {11,12}, {12,13},

{1,0}, {0,14},

{14,16}, {0,15}, {15,17}

},

{ // MPI body

{0,1}, {1,2}, {2,3},

{3,4}, {1,5}, {5,6},

{6,7}, {1,14}, {14,8}, {8,9},

{9,10}, {14,11}, {11,12}, {12,13}

},

{ // hand

{0,1}, {1,2}, {2,3}, {3,4}, // thumb

{0,5}, {5,6}, {6,7}, {7,8}, // index

{0,9}, {9,10}, {10,11}, {11,12}, // middle

{0,13}, {13,14}, {14,15}, {15,16}, // ring

{0,17}, {17,18}, {18,19}, {19,20} // pinky

}};

int main(int argc, char **argv)

{

CommandLineParser parser(argc, argv,

"{ h help | false | print this help message }"

"{ p proto | | (required) model configuration, e.g. hand/pose.prototxt }"

"{ m model | | (required) model weights, e.g. hand/pose_iter_102000.caffemodel }"

"{ i image | | (required) path to image file (containing a single person, or hand) }"

"{ d dataset | | specify what kind of model was trained. It could be (COCO, MPI, HAND) depends on dataset. }"

"{ width | 368 | Preprocess input image by resizing to a specific width. }"

"{ height | 368 | Preprocess input image by resizing to a specific height. }"

"{ t threshold | 0.1 | threshold or confidence value for the heatmap }"

"{ s scale | 0.003922 | scale for blob }"

);

// cv::String modelTxt = samples::findFile(parser.get<string>("proto"));

// cv::String modelBin = samples::findFile(parser.get<string>("model"));

// cv::String imageFile = samples::findFile(parser.get<String>("image"));

// cv::String dataset = parser.get<cv::String>("dataset");

// int W_in = parser.get<int>("width");

// int H_in = parser.get<int>("height");

// float thresh = parser.get<float>("threshold");

// float scale = parser.get<float>("scale");

cv::String modelTxt ="openpose_pose_coco.prototxt";

cv::String modelBin = "pose_iter_440000.caffemodel";

cv::String imageFile = "1.jpg";

cv::String dataset = "COCO";

int W_in = 368;

int H_in = 368;

float thresh = 0.1f;

float scale = 0.003922f;

if (parser.get<bool>("help") || modelTxt.empty() || modelBin.empty() || imageFile.empty())

{

cout << "A sample app to demonstrate human or hand pose detection with a pretrained OpenPose dnn." << endl;

parser.printMessage();

return 0;

}

int midx, npairs, nparts;

if (!dataset.compare("COCO")) { midx = 0; npairs = 17; nparts = 18; }

else if (!dataset.compare("MPI")) { midx = 1; npairs = 14; nparts = 16; }

else if (!dataset.compare("HAND")) { midx = 2; npairs = 20; nparts = 22; }

else

{

std::cerr << "Can't interpret dataset parameter: " << dataset << std::endl;

exit(-1);

}

// read the network model

Net net = readNet(modelBin, modelTxt);

// and the image

Mat img = imread(imageFile);

if (img.empty())

{

std::cerr << "Can't read image from the file: " << imageFile << std::endl;

exit(-1);

}

// send it through the network

Mat inputBlob = blobFromImage(img, scale, Size(W_in, H_in), Scalar(0, 0, 0), false, false);

net.setInput(inputBlob);

Mat result = net.forward();

// the result is an array of "heatmaps", the probability of a body part being in location x,y

int H = result.size[2];

int W = result.size[3];

// find the position of the body parts

vector<Point> points(22);

for (int n=0; n<nparts; n++)

{

// Slice heatmap of corresponding body's part.

Mat heatMap(H, W, CV_32F, result.ptr(0,n));

// 1 maximum per heatmap

Point p(-1,-1),pm;

double conf;

minMaxLoc(heatMap, 0, &conf, 0, &pm);

if (conf > thresh)

p = pm;

points[n] = p;

}

// connect body parts and draw it !

float SX = float(img.cols) / W;

float SY = float(img.rows) / H;

for (int n=0; n<npairs; n++)

{

// lookup 2 connected body/hand parts

Point2f a = points[POSE_PAIRS[midx][n][0]];

Point2f b = points[POSE_PAIRS[midx][n][1]];

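// skip the pair if either part was below the threshold (marked as (-1,-1));

// a coordinate of 0 is a valid position, so only negative values are rejected

if (a.x<0 || a.y<0 || b.x<0 || b.y<0)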

continue;

// scale to image size

a.x*=SX; a.y*=SY;

b.x*=SX; b.y*=SY;

line(img, a, b, Scalar(0,200,0), 2);

circle(img, a, 3, Scalar(0,0,200), -1);

circle(img, b, 3, Scalar(0,0,200), -1);

}

imshow("OpenPose", img);

waitKey();

return 0;

}
