FNN

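First, here is the code for classifying MNIST with a fully connected neural network (FNN). To make it easy to turn into a CNN later, the network takes the input size as a parameter, input_dim=784. The list hid_layers gives the output width of each fully connected layer, so its length sets the number of layers (the last entry is the 10-class output layer).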
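The sketch below shows how `[input_dim] + hid_layers` expands into fully connected layers. It uses the values from the script below (`input_dim=784`, `hid_layers=[64, 10]`). The helper name `build_fc` is hypothetical and simply mirrors the loop in `Net.__init__`.

```python
import torch.nn as nn


def build_fc(input_dim, hid_layers):
    """Hypothetical helper mirroring Net.__init__: one nn.Linear per consecutive pair of sizes."""
    layers = [input_dim] + hid_layers  # e.g. [784, 64, 10]
    return nn.Sequential(*[nn.Linear(layers[i], layers[i + 1]) for i in range(len(layers) - 1)])


fc = build_fc(784, [64, 10])
print(fc)

# weights + biases: 784*64 + 64 + 64*10 + 10
n_params = sum(p.numel() for p in fc.parameters())
print(n_params)  # 50890
```

With `hid_layers=[64, 10]`, this gives one hidden ReLU layer (`len(layers) - 2 == 1`) followed by the 10-way output layer.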

fnn.py

```python
import gzip
import os
import time
from struct import unpack

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

np.random.seed(1234)
torch.manual_seed(1234)  # np.random.seed alone does not seed PyTorch's weight initialization


def __read_image(path):
    # IDX image file: 16-byte big-endian header (magic, count, rows, cols), then uint8 pixels
    with gzip.open(path, 'rb') as f:
        magic, num, rows, cols = unpack('>4I', f.read(16))
        img = np.frombuffer(f.read(), dtype=np.uint8).reshape(num, rows * cols)
    return img


def __read_label(path):
    # IDX label file: 8-byte big-endian header (magic, count), then one uint8 label per image
    with gzip.open(path, 'rb') as f:
        magic, num = unpack('>2I', f.read(8))
        lab = np.frombuffer(f.read(), dtype=np.uint8)
    return lab


def __normalize_image(image):
    # scale pixels from [0, 255] to [0, 1]
    return image.astype(np.float32) / 255.0


def __one_hot_label(label):
    lab = np.zeros((label.shape[0], 10))
    for i, row in enumerate(lab):
        row[label[i]] = 1
    return lab


def load_mnist(x_train_path, y_train_path, x_test_path, y_test_path, normalize=True, one_hot=False):
    image = {'train': __read_image(x_train_path), 'test': __read_image(x_test_path)}
    label = {'train': __read_label(y_train_path), 'test': __read_label(y_test_path)}
    if normalize:
        for key in ('train', 'test'):
            image[key] = __normalize_image(image[key])
    if one_hot:
        for key in ('train', 'test'):
            label[key] = __one_hot_label(label[key])
    return (image['train'], label['train']), (image['test'], label['test'])


class Net(nn.Module):
    def __init__(self, input_dim, hid_layers, k, dtype):  # k is only used by the FM variant
        super().__init__()
        # e.g. [784] + [64, 10] -> Linear(784, 64), Linear(64, 10)
        self.layers = [input_dim] + hid_layers
        self.layers_hid_num = len(self.layers) - 2  # number of hidden (ReLU) layers
        self.fc = nn.Sequential(*[nn.Linear(self.layers[i], self.layers[i + 1])
                                  for i in range(self.layers_hid_num + 1)])
        self.to(dtype)  # cast all weights and biases to dtype

    def Dense(self, x):
        for i in range(self.layers_hid_num):
            x = torch.relu(self.fc[i](x))
            # fc[i] already outputs layers[i+1] features, so temp is the identity
            # matrix and x + x @ temp equals 2 * x
            temp = torch.eye(x.shape[-1], self.layers[i + 1], dtype=x.dtype, device=x.device)
            x = x + x @ temp
        return self.fc[-1](x)  # output layer: raw logits, no activation

    def forward(self, x):
        return self.Dense(x)

    def total_para(self):  # count parameters
        return sum(p.numel() for p in self.parameters())


def pred(net, images):
    return net(images).argmax(dim=1)


def SOmax(X, y):
    # softmax probability of the true class; only used by the hand-written loss below
    y = torch.tensor(__one_hot_label(y), dtype=X.dtype)
    return (torch.exp(X) * y).sum(1, keepdim=True) / torch.exp(X).sum(1, keepdim=True)


# Hand-written alternative to nn.CrossEntropyLoss (kept for reference):
# def Loss(net, images, labels):
#     logits = net(images)
#     return -torch.log(SOmax(logits, labels)).mean()


def Loss(net, images, labels):
    criterion = nn.CrossEntropyLoss()  # takes raw logits and integer class labels
    logits = net(images)
    return criterion(logits, labels)


def pred_acc(net, images, labels):
    # number of correctly classified images; no autograd graph is needed here
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum()


def Train(net, train_images, train_labels, batch, epoch, optim):
    m = 0  # number of finished epochs
    n = len(train_images)
    iter_num = int(epoch * n / batch)
    for i in range(iter_num):
        x = i * batch % n  # start index of the mini-batch
        y = x + batch      # end index of the mini-batch
        loss = Loss(net, train_images[x:y], train_labels[x:y])
        optim.zero_grad()
        loss.backward()
        optim.step()
        if i % 20 == 0:
            print('the iteration:%d,the batch_loss:%.3e,the trained photo:%d' % (i, loss.item(), y))
        if (i + 1) * batch % n == 0:  # last mini-batch of an epoch
            m = m + 1
            acc = pred_acc(net, train_images, train_labels).item() / n
            print('the epoch:%d,the acc:%.2f' % (m, acc))


def test_acc(net, images, labels):
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum().item() / images.shape[0]


# Folder containing the four MNIST .gz files (the author used an absolute Windows path here)
data_dir = 'mnist_dataset'
x_train_path = os.path.join(data_dir, 'train-images-idx3-ubyte.gz')
y_train_path = os.path.join(data_dir, 'train-labels-idx1-ubyte.gz')
x_test_path = os.path.join(data_dir, 't10k-images-idx3-ubyte.gz')
y_test_path = os.path.join(data_dir, 't10k-labels-idx1-ubyte.gz')

(x_train, y_train), (x_test, y_test) = load_mnist(x_train_path, y_train_path, x_test_path, y_test_path)

tic = time.time()
train_images = torch.tensor(x_train).float()
train_labels = torch.tensor(y_train).long()
test_images = torch.tensor(x_test).float()
test_labels = torch.tensor(y_test).long()

input_dim = 784
hid_layers = [64, 10]
k = 10
dtype = torch.float32
net = Net(input_dim, hid_layers, k, dtype)

# optim = torch.optim.SGD(net.parameters(), lr=1e-3, momentum=0.78)
optim = torch.optim.Adam(net.parameters(), lr=1e-3, betas=(0.9, 0.999))
batch = 1000
epoch = 8
Train(net, train_images, train_labels, batch, epoch, optim)

ela = time.time() - tic
print('the time:%.2f,the test_acc:%.2f' % (ela, test_acc(net, test_images, test_labels)))
```

FNN+FM

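This version introduces the factorization machine (FM) technique. It adds an extra linear layer, plus a pairwise interaction term computed from the raw pixels, on top of the FNN output. Accuracy did not improve noticeably.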
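The interaction term in `Net.FM` relies on the standard FM rewrite of the pairwise sum, sum over i<j of <v_i, v_j> x_i x_j, into an O(d·k) form. The sketch below checks that identity on small random inputs (the sizes are arbitrary).

The second part of the sketch illustrates one likely reason the FM version does not help. In this script, `inner_s` is a single scalar per sample, repeated across all 10 class logits. Softmax and cross-entropy are unchanged when the same value is added to every logit, so only the linear part of the FM layer can affect the loss and the predictions.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
n, d, k, classes = 4, 6, 3, 10
x = torch.rand(n, d, dtype=torch.float64)
v = torch.rand(d, k, dtype=torch.float64)

# O(d*k) form used in Net.FM: 0.5 * sum_f [(x @ v)_f^2 - (x^2 @ v^2)_f]
fast = 0.5 * ((x @ v) ** 2 - (x ** 2) @ (v ** 2)).sum(dim=1)

# brute-force pairwise sum: sum_{i<j} <v_i, v_j> * x_i * x_j
slow = torch.zeros(n, dtype=torch.float64)
for i in range(d):
    for j in range(i + 1, d):
        slow += (v[i] @ v[j]) * x[:, i] * x[:, j]

print(torch.allclose(fast, slow))  # True

# Adding the same per-sample value to every class logit (as inner_s.repeat does)
logits = torch.randn(n, classes, dtype=torch.float64)
labels = torch.randint(0, classes, (n,))
shifted = logits + fast.unsqueeze(1).repeat(1, classes)

# cross-entropy and the predicted class are unaffected by the shift
print(torch.allclose(F.cross_entropy(logits, labels), F.cross_entropy(shifted, labels)))  # True
print(torch.equal(logits.argmax(dim=1), shifted.argmax(dim=1)))
```

One possible way to let the interactions matter would be a separate set of latent factors per class, so that each logit receives its own interaction value. This is an untested suggestion, not something the original experiment tried.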

fnnfm.py

```python
import gzip
import os
import time
from struct import unpack

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

np.random.seed(1234)
torch.manual_seed(1234)  # np.random.seed alone does not seed PyTorch's weight initialization


def __read_image(path):
    # IDX image file: 16-byte big-endian header (magic, count, rows, cols), then uint8 pixels
    with gzip.open(path, 'rb') as f:
        magic, num, rows, cols = unpack('>4I', f.read(16))
        img = np.frombuffer(f.read(), dtype=np.uint8).reshape(num, rows * cols)
    return img


def __read_label(path):
    # IDX label file: 8-byte big-endian header (magic, count), then one uint8 label per image
    with gzip.open(path, 'rb') as f:
        magic, num = unpack('>2I', f.read(8))
        lab = np.frombuffer(f.read(), dtype=np.uint8)
    return lab


def __normalize_image(image):
    # scale pixels from [0, 255] to [0, 1]
    return image.astype(np.float32) / 255.0


def __one_hot_label(label):
    lab = np.zeros((label.shape[0], 10))
    for i, row in enumerate(lab):
        row[label[i]] = 1
    return lab


def load_mnist(x_train_path, y_train_path, x_test_path, y_test_path, normalize=True, one_hot=False):
    image = {'train': __read_image(x_train_path), 'test': __read_image(x_test_path)}
    label = {'train': __read_label(y_train_path), 'test': __read_label(y_test_path)}
    if normalize:
        for key in ('train', 'test'):
            image[key] = __normalize_image(image[key])
    if one_hot:
        for key in ('train', 'test'):
            label[key] = __one_hot_label(label[key])
    return (image['train'], label['train']), (image['test'], label['test'])


class Net(nn.Module):
    def __init__(self, input_dim, hid_layers, k, dtype):
        super().__init__()
        # deep (FNN) part, same as in fnn.py
        self.layers = [input_dim] + hid_layers
        self.layers_hid_num = len(self.layers) - 2
        self.fc = nn.Sequential(*[nn.Linear(self.layers[i], self.layers[i + 1])
                                  for i in range(self.layers_hid_num + 1)])
        # FM part: first-order linear term and k-dimensional latent factors v
        self.fm = nn.Sequential(nn.Linear(input_dim, hid_layers[-1]))
        self.v = nn.Parameter(torch.rand(input_dim, k))
        self.to(dtype)  # cast every parameter (including v) to dtype

    def FM(self, x):
        linear_part = self.fm[0](x)  # (N, 10)
        # pairwise interactions: 0.5 * sum_f [(x @ v)^2 - x^2 @ v^2]
        inner_part1 = torch.pow(x, 2) @ torch.pow(self.v, 2)
        inner_part2 = torch.pow(x @ self.v, 2)
        inner_s = 0.5 * (inner_part2 - inner_part1).sum(dim=1, keepdim=True)  # (N, 1)
        # the same value is added to all 10 logits; softmax ignores such a shift,
        # so only linear_part influences the loss and the predictions
        inner = inner_s.repeat(1, self.layers[-1])
        return linear_part + inner

    def Dense(self, x):
        for i in range(self.layers_hid_num):
            x = torch.relu(self.fc[i](x))
            # temp is the identity matrix, so x + x @ temp equals 2 * x
            temp = torch.eye(x.shape[-1], self.layers[i + 1], dtype=x.dtype, device=x.device)
            x = x + x @ temp
        return self.fc[-1](x)

    def forward(self, x):
        return self.Dense(x) + self.FM(x)

    def total_para(self):  # count parameters
        return sum(p.numel() for p in self.parameters())


def pred(net, images):
    return net(images).argmax(dim=1)


def SOmax(X, y):
    # softmax probability of the true class; only used by the hand-written loss below
    y = torch.tensor(__one_hot_label(y), dtype=X.dtype)
    return (torch.exp(X) * y).sum(1, keepdim=True) / torch.exp(X).sum(1, keepdim=True)


# Hand-written alternative to nn.CrossEntropyLoss (kept for reference):
# def Loss(net, images, labels):
#     logits = net(images)
#     return -torch.log(SOmax(logits, labels)).mean()


def Loss(net, images, labels):
    criterion = nn.CrossEntropyLoss()  # takes raw logits and integer class labels
    logits = net(images)
    return criterion(logits, labels)


def pred_acc(net, images, labels):
    # number of correctly classified images; no autograd graph is needed here
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum()


def Train(net, train_images, train_labels, batch, epoch, optim):
    m = 0  # number of finished epochs
    n = len(train_images)
    iter_num = int(epoch * n / batch)
    for i in range(iter_num):
        x = i * batch % n  # start index of the mini-batch
        y = x + batch      # end index of the mini-batch
        loss = Loss(net, train_images[x:y], train_labels[x:y])
        optim.zero_grad()
        loss.backward()
        optim.step()
        if i % 20 == 0:
            print('the iteration:%d,the batch_loss:%.3e,the trained photo:%d' % (i, loss.item(), y))
        if (i + 1) * batch % n == 0:  # last mini-batch of an epoch
            m = m + 1
            acc = pred_acc(net, train_images, train_labels).item() / n
            print('the epoch:%d,the acc:%.2f' % (m, acc))


def test_acc(net, images, labels):
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum().item() / images.shape[0]


# Folder containing the four MNIST .gz files (the author used an absolute Windows path here)
data_dir = 'mnist_dataset'
x_train_path = os.path.join(data_dir, 'train-images-idx3-ubyte.gz')
y_train_path = os.path.join(data_dir, 'train-labels-idx1-ubyte.gz')
x_test_path = os.path.join(data_dir, 't10k-images-idx3-ubyte.gz')
y_test_path = os.path.join(data_dir, 't10k-labels-idx1-ubyte.gz')

(x_train, y_train), (x_test, y_test) = load_mnist(x_train_path, y_train_path, x_test_path, y_test_path)

tic = time.time()
train_images = torch.tensor(x_train).float()
train_labels = torch.tensor(y_train).long()
test_images = torch.tensor(x_test).float()
test_labels = torch.tensor(y_test).long()

input_dim = 784
hid_layers = [64, 10]
k = 10  # latent dimension of the FM factors
dtype = torch.float32
net = Net(input_dim, hid_layers, k, dtype)

# optim = torch.optim.SGD(net.parameters(), lr=1e-3, momentum=0.78)
optim = torch.optim.Adam(net.parameters(), lr=1e-3, betas=(0.9, 0.999))
batch = 1000
epoch = 8
Train(net, train_images, train_labels, batch, epoch, optim)

ela = time.time() - tic
print('the time:%.2f,the test_acc:%.2f' % (ela, test_acc(net, test_images, test_labels)))
```

Introducing 1D convolution

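Introducing 1D convolution reduces the number of parameters. However, training became noticeably slower once the convolution layers were added, which deserves further investigation.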
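The sketch below checks where the parameter saving comes from. It rebuilds the same Conv1d stack as the Net below (kernels [10, 10, 6], strides [1, 1, 2], channels 1 → 3 → 3 → 1), traces the sequence length, and compares the parameter count with fnn.py.

The conv stack shrinks the first fully connected layer's input from 784 to 381 features, which roughly halves the total. This sketch only checks shapes and parameter counts. It does not explain the slowdown the author observed.

```python
import torch
import torch.nn as nn

# Same Conv1d layers as the 1D-convolution Net (activations omitted: only shapes matter here)
convs = nn.Sequential(
    nn.Conv1d(1, 3, kernel_size=10, stride=1),
    nn.Conv1d(3, 3, kernel_size=10, stride=1),
    nn.Conv1d(3, 1, kernel_size=6, stride=2),
)
x = torch.zeros(2, 1, 784)  # two flattened images, each as one 784-long channel
out = convs(x)
print(out.shape)  # torch.Size([2, 1, 381])

# Output length without padding: (L - kernel) // stride + 1, i.e. 784 -> 775 -> 766 -> 381
L = 784
for kernel, stride in [(10, 1), (10, 1), (6, 2)]:
    L = (L - kernel) // stride + 1
print(L)  # 381


def count(m):
    return sum(p.numel() for p in m.parameters())


cnn_params = count(convs) + count(nn.Linear(381, 64)) + count(nn.Linear(64, 10))
fnn_params = count(nn.Linear(784, 64)) + count(nn.Linear(64, 10))
print(cnn_params, fnn_params)  # 25243 50890
```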

```python
import gzip
import os
import time
from struct import unpack

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

np.random.seed(1234)
torch.manual_seed(1234)  # np.random.seed alone does not seed PyTorch's weight initialization


def __read_image(path):
    # IDX image file: 16-byte big-endian header (magic, count, rows, cols), then uint8 pixels
    with gzip.open(path, 'rb') as f:
        magic, num, rows, cols = unpack('>4I', f.read(16))
        img = np.frombuffer(f.read(), dtype=np.uint8).reshape(num, rows * cols)
    return img


def __read_label(path):
    # IDX label file: 8-byte big-endian header (magic, count), then one uint8 label per image
    with gzip.open(path, 'rb') as f:
        magic, num = unpack('>2I', f.read(8))
        lab = np.frombuffer(f.read(), dtype=np.uint8)
    return lab


def __normalize_image(image):
    # scale pixels from [0, 255] to [0, 1]
    return image.astype(np.float32) / 255.0


def __one_hot_label(label):
    lab = np.zeros((label.shape[0], 10))
    for i, row in enumerate(lab):
        row[label[i]] = 1
    return lab


def load_mnist(x_train_path, y_train_path, x_test_path, y_test_path, normalize=True, one_hot=False):
    image = {'train': __read_image(x_train_path), 'test': __read_image(x_test_path)}
    label = {'train': __read_label(y_train_path), 'test': __read_label(y_test_path)}
    if normalize:
        for key in ('train', 'test'):
            image[key] = __normalize_image(image[key])
    if one_hot:
        for key in ('train', 'test'):
            label[key] = __one_hot_label(label[key])
    return (image['train'], label['train']), (image['test'], label['test'])


class Net(nn.Module):
    def __init__(self, input_dim, hid_layers, k, dtype):  # k is unused here
        super().__init__()
        self.kernel_size = [10, 10, 6]
        self.stride = [1, 1, 2]
        out_dim = [3, 3, 1]  # output channels of each Conv1d
        in_dim = 1           # the flattened image is a single 784-long channel
        cov = []
        for i in range(len(self.kernel_size)):
            cov.append(nn.Conv1d(in_channels=in_dim, out_channels=out_dim[i],
                                 kernel_size=self.kernel_size[i], stride=self.stride[i]))
            # output length without padding: (L - kernel) // stride + 1  (784 -> 775 -> 766 -> 381)
            input_dim = (input_dim - self.kernel_size[i]) // self.stride[i] + 1
            in_dim = out_dim[i]
        self.cov = nn.Sequential(*cov)
        self.layers = [input_dim] + hid_layers
        self.layers_hid_num = len(self.layers) - 2
        self.fc = nn.Sequential(*[nn.Linear(self.layers[i], self.layers[i + 1])
                                  for i in range(self.layers_hid_num + 1)])
        self.to(dtype)

    def CNN(self, x):
        h = x.reshape(x.shape[0], 1, x.shape[1])  # (N, 784) -> (N, 1, 784)
        for conv in self.cov:
            h = torch.relu(conv(h))
        return h.squeeze(dim=1)  # last layer has one channel: (N, 1, 381) -> (N, 381)

    def Dense(self, x):
        for i in range(self.layers_hid_num):
            x = torch.relu(self.fc[i](x))
            # temp is the identity matrix, so x + x @ temp equals 2 * x
            temp = torch.eye(x.shape[-1], self.layers[i + 1], dtype=x.dtype, device=x.device)
            x = x + x @ temp
        return self.fc[-1](x)

    def forward(self, x):
        return self.Dense(self.CNN(x))

    def total_para(self):  # count parameters
        return sum(p.numel() for p in self.parameters())


def pred(net, images):
    return net(images).argmax(dim=1)


def SOmax(X, y):
    # softmax probability of the true class; only used by the hand-written loss below
    y = torch.tensor(__one_hot_label(y), dtype=X.dtype)
    return (torch.exp(X) * y).sum(1, keepdim=True) / torch.exp(X).sum(1, keepdim=True)


# Hand-written alternative to nn.CrossEntropyLoss (kept for reference):
# def Loss(net, images, labels):
#     logits = net(images)
#     return -torch.log(SOmax(logits, labels)).mean()


def Loss(net, images, labels):
    criterion = nn.CrossEntropyLoss()  # takes raw logits and integer class labels
    logits = net(images)
    return criterion(logits, labels)


def pred_acc(net, images, labels):
    # number of correctly classified images; no autograd graph is needed here
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum()


def Train(net, train_images, train_labels, batch, epoch, optim):
    m = 0  # number of finished epochs
    n = len(train_images)
    iter_num = int(epoch * n / batch)
    for i in range(iter_num):
        x = i * batch % n  # start index of the mini-batch
        y = x + batch      # end index of the mini-batch
        loss = Loss(net, train_images[x:y], train_labels[x:y])
        optim.zero_grad()
        loss.backward()
        optim.step()
        if i % 20 == 0:
            print('the iteration:%d,the batch_loss:%.3e,the trained photo:%d' % (i, loss.item(), y))
        if (i + 1) * batch % n == 0:  # last mini-batch of an epoch
            m = m + 1
            acc = pred_acc(net, train_images, train_labels).item() / n
            print('the epoch:%d,the acc:%.2f' % (m, acc))


def test_acc(net, images, labels):
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum().item() / images.shape[0]


# Folder containing the four MNIST .gz files (the author used an absolute Windows path here)
data_dir = 'mnist_dataset'
x_train_path = os.path.join(data_dir, 'train-images-idx3-ubyte.gz')
y_train_path = os.path.join(data_dir, 'train-labels-idx1-ubyte.gz')
x_test_path = os.path.join(data_dir, 't10k-images-idx3-ubyte.gz')
y_test_path = os.path.join(data_dir, 't10k-labels-idx1-ubyte.gz')

(x_train, y_train), (x_test, y_test) = load_mnist(x_train_path, y_train_path, x_test_path, y_test_path)

tic = time.time()
train_images = torch.tensor(x_train).float()
train_labels = torch.tensor(y_train).long()
test_images = torch.tensor(x_test).float()
test_labels = torch.tensor(y_test).long()

input_dim = 784
hid_layers = [64, 10]
k = 10
dtype = torch.float32
net = Net(input_dim, hid_layers, k, dtype)

# optim = torch.optim.SGD(net.parameters(), lr=1e-3, momentum=0.78)
optim = torch.optim.Adam(net.parameters(), lr=1e-3, betas=(0.9, 0.999))
batch = 1000
epoch = 8
Train(net, train_images, train_labels, batch, epoch, optim)

ela = time.time() - tic
print('the time:%.2f,the test_acc:%.2f' % (ela, test_acc(net, test_images, test_labels)))
```

Introducing 2D convolution

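MNIST samples are images, so the data can be reshaped into a tensor of shape [N, 1, 28, 28]. Here N is the number of images (the batch dimension), and 1 is the number of input channels (it would be 3 for an RGB image). The last two dimensions, 28×28, are the image height and width. This version does not use batch normalization or pooling yet.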
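The sketch below covers the reshape to [N, C, H, W] and how the asymmetric strides used in the Net below change the feature-map size. It uses zero-valued stand-in images instead of real MNIST data.

PyTorch's Conv2d reads `stride=(sH, sW)` as (height, width), so a stride of (2, 1) halves only the height. The final 1×9×10 map flattens to the 90 features fed into the first fully connected layer.

```python
import numpy as np
import torch
import torch.nn as nn

flat = np.zeros((5, 784), dtype=np.float32)  # stand-in for 5 flattened MNIST images
images = torch.tensor(flat.reshape(flat.shape[0], 1, 28, 28))  # [N, C, H, W]
print(images.shape)  # torch.Size([5, 1, 28, 28])

# Same Conv2d stack as the 2D-convolution Net; stride is (height, width)
convs = nn.Sequential(
    nn.Conv2d(1, 3, kernel_size=3, stride=(2, 1)),
    nn.Conv2d(3, 3, kernel_size=3, stride=(1, 2)),
    nn.Conv2d(3, 1, kernel_size=3, stride=(1, 1)),
)
h = images
for conv in convs:
    h = conv(h)
    print(tuple(h.shape))
# (5, 3, 13, 26)
# (5, 3, 11, 12)
# (5, 1, 9, 10)

features = h.reshape(h.shape[0], -1)
print(features.shape)  # torch.Size([5, 90])
```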

```python
import gzip
import os
import time
from struct import unpack

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

np.random.seed(1234)
torch.manual_seed(1234)  # np.random.seed alone does not seed PyTorch's weight initialization


def __read_image(path):
    # IDX image file: 16-byte big-endian header (magic, count, rows, cols), then uint8 pixels
    with gzip.open(path, 'rb') as f:
        magic, num, rows, cols = unpack('>4I', f.read(16))
        img = np.frombuffer(f.read(), dtype=np.uint8).reshape(num, rows * cols)
    return img


def __read_label(path):
    # IDX label file: 8-byte big-endian header (magic, count), then one uint8 label per image
    with gzip.open(path, 'rb') as f:
        magic, num = unpack('>2I', f.read(8))
        lab = np.frombuffer(f.read(), dtype=np.uint8)
    return lab


def __normalize_image(image):
    # scale pixels from [0, 255] to [0, 1]
    return image.astype(np.float32) / 255.0


def __one_hot_label(label):
    lab = np.zeros((label.shape[0], 10))
    for i, row in enumerate(lab):
        row[label[i]] = 1
    return lab


def load_mnist(x_train_path, y_train_path, x_test_path, y_test_path, normalize=True, one_hot=False):
    image = {'train': __read_image(x_train_path), 'test': __read_image(x_test_path)}
    label = {'train': __read_label(y_train_path), 'test': __read_label(y_test_path)}
    if normalize:
        for key in ('train', 'test'):
            image[key] = __normalize_image(image[key])
    if one_hot:
        for key in ('train', 'test'):
            label[key] = __one_hot_label(label[key])
    return (image['train'], label['train']), (image['test'], label['test'])


class Net(nn.Module):
    def __init__(self, weight_dim, high_dim, hid_layers, dtype):
        super().__init__()
        self.kernel_size = [3, 3, 3]
        self.stride = [[2, 1], [1, 2], [1, 1]]  # Conv2d strides are (height, width)
        out_dim = [3, 3, 1]
        in_dim = 1  # grayscale input; it would be 3 for RGB
        cov = []
        for i in range(len(self.kernel_size)):
            cov.append(nn.Conv2d(in_channels=in_dim, out_channels=out_dim[i],
                                 kernel_size=self.kernel_size[i], stride=self.stride[i]))
            # weight_dim follows stride[i][0] (the height axis), high_dim follows stride[i][1]
            # (the width axis); for the square input only their product matters:
            # 28x28 -> 13x26 -> 11x12 -> 9x10
            weight_dim = (weight_dim - self.kernel_size[i]) // self.stride[i][0] + 1
            high_dim = (high_dim - self.kernel_size[i]) // self.stride[i][1] + 1
            in_dim = out_dim[i]
        self.cov = nn.Sequential(*cov)
        input_dim = weight_dim * high_dim  # 9 * 10 = 90 features after flattening
        self.layers = [input_dim] + hid_layers
        self.layers_hid_num = len(self.layers) - 2
        self.fc = nn.Sequential(*[nn.Linear(self.layers[i], self.layers[i + 1])
                                  for i in range(self.layers_hid_num + 1)])
        self.to(dtype)

    def CNN(self, h):
        # h: (N, 1, 28, 28); no BatchNorm2d or pooling yet
        for conv in self.cov:
            h = torch.relu(conv(h))
        return h.reshape(h.shape[0], -1)  # (N, 1, 9, 10) -> (N, 90)

    def Dense(self, x):
        for i in range(self.layers_hid_num):
            x = torch.relu(self.fc[i](x))
            # temp is the identity matrix, so x + x @ temp equals 2 * x
            temp = torch.eye(x.shape[-1], self.layers[i + 1], dtype=x.dtype, device=x.device)
            x = x + x @ temp
        return self.fc[-1](x)

    def forward(self, x):
        return self.Dense(self.CNN(x))

    def total_para(self):  # count parameters
        return sum(p.numel() for p in self.parameters())


def pred(net, images):
    return net(images).argmax(dim=1)


def SOmax(X, y):
    # softmax probability of the true class; only used by the hand-written loss below
    y = torch.tensor(__one_hot_label(y), dtype=X.dtype)
    return (torch.exp(X) * y).sum(1, keepdim=True) / torch.exp(X).sum(1, keepdim=True)


# Hand-written alternative to nn.CrossEntropyLoss (kept for reference):
# def Loss(net, images, labels):
#     logits = net(images)
#     return -torch.log(SOmax(logits, labels)).mean()


def Loss(net, images, labels):
    criterion = nn.CrossEntropyLoss()  # takes raw logits and integer class labels
    logits = net(images)
    return criterion(logits, labels)


def pred_acc(net, images, labels):
    # number of correctly classified images; no autograd graph is needed here
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum()


def Train(net, train_images, train_labels, batch, epoch, optim):
    m = 0  # number of finished epochs
    n = len(train_images)
    iter_num = int(epoch * n / batch)
    for i in range(iter_num):
        x = i * batch % n  # start index of the mini-batch
        y = x + batch      # end index of the mini-batch
        loss = Loss(net, train_images[x:y], train_labels[x:y])
        optim.zero_grad()
        loss.backward()
        optim.step()
        if i % 20 == 0:
            print('the iteration:%d,the batch_loss:%.3e,the trained photo:%d' % (i, loss.item(), y))
        if (i + 1) * batch % n == 0:  # last mini-batch of an epoch
            m = m + 1
            acc = pred_acc(net, train_images, train_labels).item() / n
            print('the epoch:%d,the acc:%.2f' % (m, acc))


def test_acc(net, images, labels):
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum().item() / images.shape[0]


# Folder containing the four MNIST .gz files (the author used an absolute Windows path here)
data_dir = 'mnist_dataset'
x_train_path = os.path.join(data_dir, 'train-images-idx3-ubyte.gz')
y_train_path = os.path.join(data_dir, 'train-labels-idx1-ubyte.gz')
x_test_path = os.path.join(data_dir, 't10k-images-idx3-ubyte.gz')
y_test_path = os.path.join(data_dir, 't10k-labels-idx1-ubyte.gz')

(x_train, y_train), (x_test, y_test) = load_mnist(x_train_path, y_train_path, x_test_path, y_test_path)

tic = time.time()
x_train = x_train.reshape(x_train.shape[0], 1, 28, 28)  # [N, 784] -> [N, 1, 28, 28]
x_test = x_test.reshape(x_test.shape[0], 1, 28, 28)
train_images = torch.tensor(x_train).float()
train_labels = torch.tensor(y_train).long()
test_images = torch.tensor(x_test).float()
test_labels = torch.tensor(y_test).long()

weight_dim = 28
high_dim = 28
hid_layers = [64, 10]
dtype = torch.float32
net = Net(weight_dim, high_dim, hid_layers, dtype)

# optim = torch.optim.SGD(net.parameters(), lr=1e-3, momentum=0.78)
optim = torch.optim.Adam(net.parameters(), lr=1e-3, betas=(0.9, 0.999))
batch = 1000
epoch = 8
Train(net, train_images, train_labels, batch, epoch, optim)

ela = time.time() - tic
print('the time:%.2f,the test_acc:%.2f' % (ela, test_acc(net, test_images, test_labels)))
```

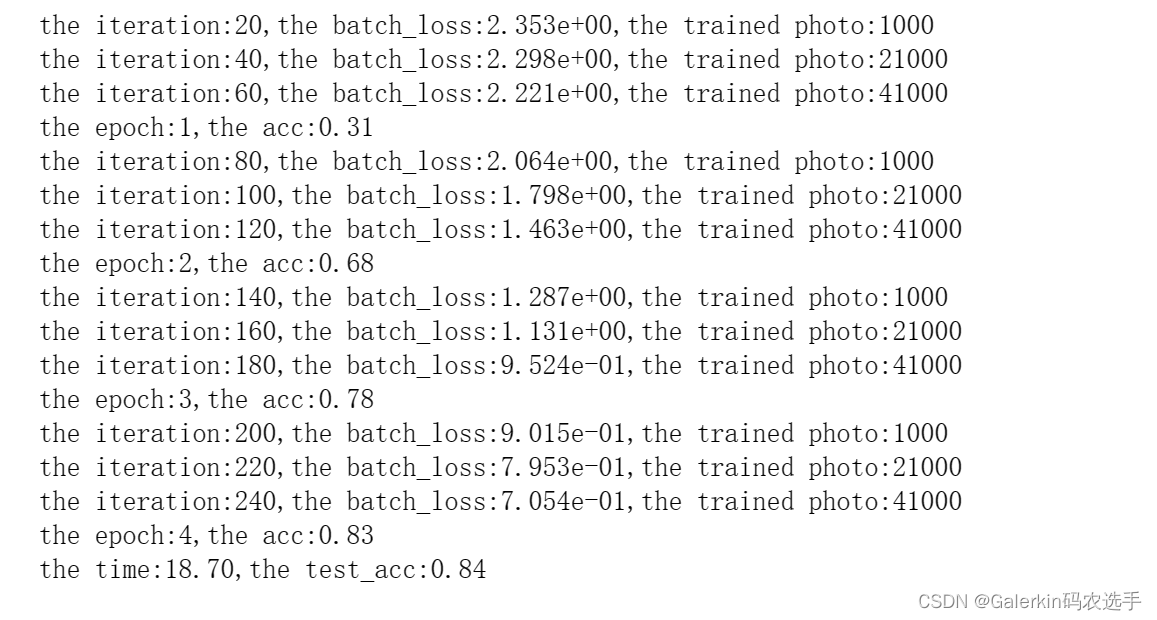

Transposed 1D convolution

```python
import gzip
import os
import time
from struct import unpack

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

np.random.seed(1234)
torch.manual_seed(1234)  # np.random.seed alone does not seed PyTorch's weight initialization


def __read_image(path):
    # IDX image file: 16-byte big-endian header (magic, count, rows, cols), then uint8 pixels
    with gzip.open(path, 'rb') as f:
        magic, num, rows, cols = unpack('>4I', f.read(16))
        img = np.frombuffer(f.read(), dtype=np.uint8).reshape(num, rows * cols)
    return img


def __read_label(path):
    # IDX label file: 8-byte big-endian header (magic, count), then one uint8 label per image
    with gzip.open(path, 'rb') as f:
        magic, num = unpack('>2I', f.read(8))
        lab = np.frombuffer(f.read(), dtype=np.uint8)
    return lab


def __normalize_image(image):
    # scale pixels from [0, 255] to [0, 1]
    return image.astype(np.float32) / 255.0


def __one_hot_label(label):
    lab = np.zeros((label.shape[0], 10))
    for i, row in enumerate(lab):
        row[label[i]] = 1
    return lab


def load_mnist(x_train_path, y_train_path, x_test_path, y_test_path, normalize=True, one_hot=False):
    image = {'train': __read_image(x_train_path), 'test': __read_image(x_test_path)}
    label = {'train': __read_label(y_train_path), 'test': __read_label(y_test_path)}
    if normalize:
        for key in ('train', 'test'):
            image[key] = __normalize_image(image[key])
    if one_hot:
        for key in ('train', 'test'):
            label[key] = __one_hot_label(label[key])
    return (image['train'], label['train']), (image['test'], label['test'])


class Net(nn.Module):
    def __init__(self, layers_q, layers_hid, dtype):
        super().__init__()
        self.dtype = dtype
        # 1) fully connected part: 784 -> ... -> layers_q[-1]
        self.layers_q = layers_q
        self.layers_qhid_num = len(self.layers_q) - 2
        self.fc_q = nn.Sequential(*[nn.Linear(self.layers_q[i], self.layers_q[i + 1])
                                    for i in range(self.layers_qhid_num + 1)])
        # 2) transposed 1D convolutions lengthen the sequence
        input_dim = layers_q[-1]
        self.iker = [10, 6]
        self.istride = [2, 1]
        iout_dim = [3, 1]
        iin_dim = 1
        icov = []
        for i in range(len(self.iker)):
            icov.append(nn.ConvTranspose1d(in_channels=iin_dim, out_channels=iout_dim[i],
                                           kernel_size=self.iker[i], stride=self.istride[i]))
            # output length without padding: (L - 1) * stride + kernel  (10 -> 28 -> 33)
            input_dim = (input_dim - 1) * self.istride[i] + self.iker[i]
            iin_dim = iout_dim[i]
        self.icov = nn.Sequential(*icov)
        # 3) ordinary 1D convolutions shorten it again
        self.kernel_size = [10, 6]
        self.stride = [2, 2]
        out_dim = [3, 1]
        in_dim = 1
        cov = []
        for i in range(len(self.kernel_size)):
            cov.append(nn.Conv1d(in_channels=in_dim, out_channels=out_dim[i],
                                 kernel_size=self.kernel_size[i], stride=self.stride[i]))
            # (L - kernel) // stride + 1  (33 -> 12 -> 4)
            input_dim = (input_dim - self.kernel_size[i]) // self.stride[i] + 1
            in_dim = out_dim[i]
        self.cov = nn.Sequential(*cov)
        # 4) fully connected classifier: input_dim -> ... -> 10
        self.layers_hid = [input_dim] + layers_hid
        self.layers_hid_num = len(self.layers_hid) - 2
        self.fc_hid = nn.Sequential(*[nn.Linear(self.layers_hid[i], self.layers_hid[i + 1])
                                      for i in range(self.layers_hid_num + 1)])
        self.to(dtype)

    def fnn_q(self, x):
        for i in range(self.layers_qhid_num):
            x = torch.sin(self.fc_q[i](x))
            temp = torch.eye(x.shape[-1], self.layers_q[i + 1], dtype=self.dtype, device=x.device)
            x = x + x @ temp  # temp is the identity, so this doubles x
        return self.fc_q[-1](x)

    def icnn(self, x):
        h = x.reshape(x.shape[0], 1, x.shape[1])  # (N, 10) -> (N, 1, 10)
        for layer in self.icov:
            h = torch.sin(layer(h))
        return h

    def cnn(self, h):
        for layer in self.cov:
            h = torch.sin(layer(h))
        return h.squeeze(dim=1)  # (N, 1, 4) -> (N, 4)

    def fnn_h(self, x):
        for i in range(self.layers_hid_num):
            x = torch.relu(self.fc_hid[i](x))
            temp = torch.eye(x.shape[-1], self.layers_hid[i + 1], dtype=self.dtype, device=x.device)
            x = x + x @ temp  # temp is the identity, so this doubles x
        return self.fc_hid[-1](x)

    def forward(self, x):
        x = self.fnn_q(x)     # (N, 10)
        x = self.icnn(x)      # (N, 1, 33)
        x = self.cnn(x)       # (N, 4)
        return self.fnn_h(x)  # (N, 10) logits

    def total_para(self):  # count parameters
        return sum(p.numel() for p in self.parameters())


def pred(net, images):
    return net(images).argmax(dim=1)


def SOmax(X, y):
    # softmax probability of the true class; only used by the hand-written loss below
    y = torch.tensor(__one_hot_label(y), dtype=X.dtype)
    return (torch.exp(X) * y).sum(1, keepdim=True) / torch.exp(X).sum(1, keepdim=True)


# Hand-written alternative to nn.CrossEntropyLoss (kept for reference):
# def Loss(net, images, labels):
#     logits = net(images)
#     return -torch.log(SOmax(logits, labels)).mean()


def Loss(net, images, labels):
    criterion = nn.CrossEntropyLoss()  # takes raw logits and integer class labels
    logits = net(images)
    return criterion(logits, labels)


def pred_acc(net, images, labels):
    # number of correctly classified images; no autograd graph is needed here
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum()


def Train(net, train_images, train_labels, batch, epoch, optim):
    m = 0  # number of finished epochs
    n = len(train_images)
    iter_num = int(epoch * n / batch)
    for i in range(iter_num):
        x = i * batch % n  # start index of the mini-batch
        y = x + batch      # end index of the mini-batch
        loss = Loss(net, train_images[x:y], train_labels[x:y])
        optim.zero_grad()
        loss.backward()
        optim.step()
        if i % 20 == 0:
            print('the iteration:%d,the batch_loss:%.3e,the trained photo:%d' % (i, loss.item(), y))
        if (i + 1) * batch % n == 0:  # last mini-batch of an epoch
            m = m + 1
            acc = pred_acc(net, train_images, train_labels).item() / n
            print('the epoch:%d,the acc:%.2f' % (m, acc))


def test_acc(net, images, labels):
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum().item() / images.shape[0]


# Folder containing the four MNIST .gz files (the author used an absolute Windows path here)
data_dir = 'mnist_dataset'
x_train_path = os.path.join(data_dir, 'train-images-idx3-ubyte.gz')
y_train_path = os.path.join(data_dir, 'train-labels-idx1-ubyte.gz')
x_test_path = os.path.join(data_dir, 't10k-images-idx3-ubyte.gz')
y_test_path = os.path.join(data_dir, 't10k-labels-idx1-ubyte.gz')

(x_train, y_train), (x_test, y_test) = load_mnist(x_train_path, y_train_path, x_test_path, y_test_path)

tic = time.time()
train_images = torch.tensor(x_train).float()
train_labels = torch.tensor(y_train).long()
test_images = torch.tensor(x_test).float()
test_labels = torch.tensor(y_test).long()

input_dim = 784
dtype = torch.float32
layers_q = [input_dim, 10, 10]
layers_hid = [20, 10]
net = Net(layers_q, layers_hid, dtype)

# optim = torch.optim.SGD(net.parameters(), lr=1e-3, momentum=0.78)
optim = torch.optim.Adam(net.parameters(), lr=1e-3, betas=(0.9, 0.999))
batch = 1000
epoch = 4
Train(net, train_images, train_labels, batch, epoch, optim)

ela = time.time() - tic
print('the time:%.2f,the test_acc:%.2f' % (ela, test_acc(net, test_images, test_labels)))
```
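The sketch below traces the sequence length through the conv part of the transposed-1D Net above. It is a standalone copy of those layers, not the author's code. With no padding, ConvTranspose1d produces `(L - 1) * stride + kernel` outputs. The 10-value output of `fnn_q` therefore grows to 33, and the two strided Conv1d layers shrink it to 4, so `fnn_h` starts from only 4 features.

```python
import torch
import torch.nn as nn

# Standalone copy of the conv layers in the transposed-1D Net (activations omitted)
up = nn.Sequential(
    nn.ConvTranspose1d(1, 3, kernel_size=10, stride=2),
    nn.ConvTranspose1d(3, 1, kernel_size=6, stride=1),
)
down = nn.Sequential(
    nn.Conv1d(1, 3, kernel_size=10, stride=2),
    nn.Conv1d(3, 1, kernel_size=6, stride=2),
)
z = torch.zeros(2, 1, 10)  # fnn_q output (layers_q[-1] = 10) reshaped to one channel
u = up(z)
d = down(u)
print(u.shape, d.shape)  # torch.Size([2, 1, 33]) torch.Size([2, 1, 4])

# Transposed-conv length without padding: L_out = (L_in - 1) * stride + kernel
L = 10
for kernel, stride in [(10, 2), (6, 1)]:
    L = (L - 1) * stride + kernel
print(L)  # 33
```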

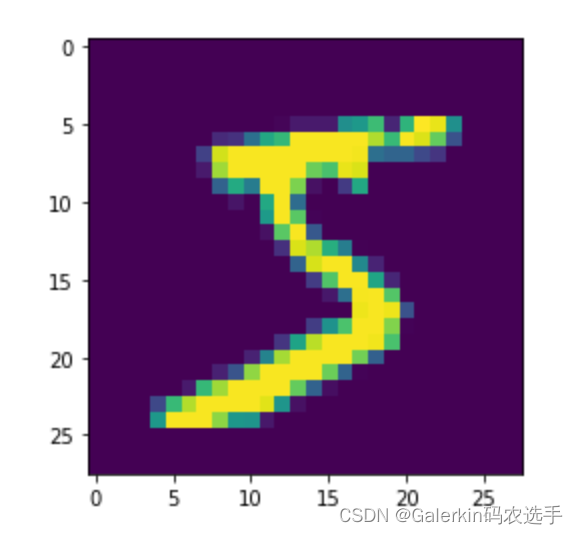

```python
plt.imshow(x_train[0, :].reshape(28, 28))  # show the first training image
plt.show()
```

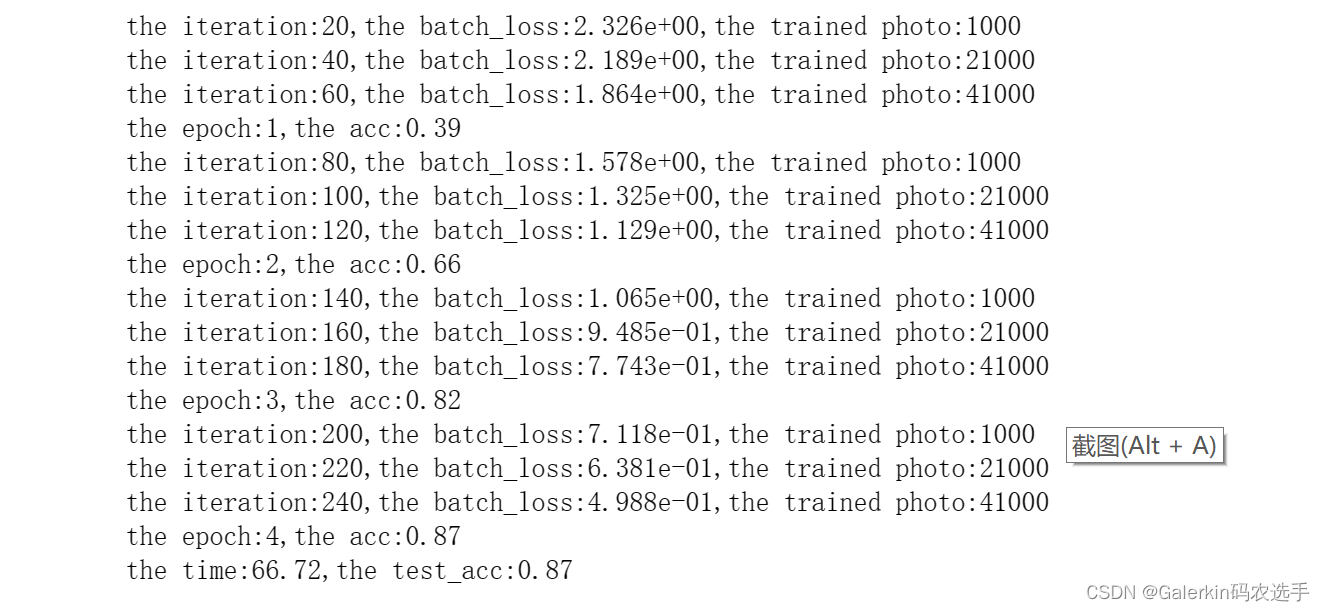

Transposed 2D convolution

```python
import gzip
import os
import time
from struct import unpack

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

np.random.seed(1234)
torch.manual_seed(1234)  # np.random.seed alone does not seed PyTorch's weight initialization


def __read_image(path):
    # IDX image file: 16-byte big-endian header (magic, count, rows, cols), then uint8 pixels
    with gzip.open(path, 'rb') as f:
        magic, num, rows, cols = unpack('>4I', f.read(16))
        img = np.frombuffer(f.read(), dtype=np.uint8).reshape(num, rows * cols)
    return img


def __read_label(path):
    # IDX label file: 8-byte big-endian header (magic, count), then one uint8 label per image
    with gzip.open(path, 'rb') as f:
        magic, num = unpack('>2I', f.read(8))
        lab = np.frombuffer(f.read(), dtype=np.uint8)
    return lab


def __normalize_image(image):
    # scale pixels from [0, 255] to [0, 1]
    return image.astype(np.float32) / 255.0


def __one_hot_label(label):
    lab = np.zeros((label.shape[0], 10))
    for i, row in enumerate(lab):
        row[label[i]] = 1
    return lab


def load_mnist(x_train_path, y_train_path, x_test_path, y_test_path, normalize=True, one_hot=False):
    image = {'train': __read_image(x_train_path), 'test': __read_image(x_test_path)}
    label = {'train': __read_label(y_train_path), 'test': __read_label(y_test_path)}
    if normalize:
        for key in ('train', 'test'):
            image[key] = __normalize_image(image[key])
    if one_hot:
        for key in ('train', 'test'):
            label[key] = __one_hot_label(label[key])
    return (image['train'], label['train']), (image['test'], label['test'])


class Net(nn.Module):
    def __init__(self, layers_q, layers_hid, dtype):
        super().__init__()
        self.dtype = dtype
        # 1) fully connected part: 784 -> ... -> layers_q[-1] (must be 16 = 4 * 4)
        self.layers_q = layers_q
        self.layers_qhid_num = len(self.layers_q) - 2
        self.fc_q = nn.Sequential(*[nn.Linear(self.layers_q[i], self.layers_q[i + 1])
                                    for i in range(self.layers_qhid_num + 1)])
        # 2) transposed 2D convolutions enlarge the 4x4 map; strides are (height, width)
        high_dim = 4
        weight_dim = 4
        self.iker = [10, 6]
        self.istride = [[2, 1], [2, 1]]
        iout_dim = [3, 1]
        iin_dim = 1
        icov = []
        for i in range(len(self.iker)):
            icov.append(nn.ConvTranspose2d(in_channels=iin_dim, out_channels=iout_dim[i],
                                           kernel_size=self.iker[i], stride=self.istride[i]))
            # (L - 1) * stride + kernel per axis: 4x4 -> 16x13 -> 36x18
            high_dim = (high_dim - 1) * self.istride[i][0] + self.iker[i]
            weight_dim = (weight_dim - 1) * self.istride[i][1] + self.iker[i]
            iin_dim = iout_dim[i]
        self.icov = nn.Sequential(*icov)
        # 3) ordinary 2D convolutions shrink it again
        self.ker = [10, 6]
        self.stride = [[2, 1], [1, 2]]
        out_dim = [3, 1]
        in_dim = 1
        cov = []
        for i in range(len(self.ker)):
            cov.append(nn.Conv2d(in_channels=in_dim, out_channels=out_dim[i],
                                 kernel_size=self.ker[i], stride=self.stride[i]))
            # (L - kernel) // stride + 1 per axis: 36x18 -> 14x9 -> 9x2
            high_dim = (high_dim - self.ker[i]) // self.stride[i][0] + 1
            weight_dim = (weight_dim - self.ker[i]) // self.stride[i][1] + 1
            in_dim = out_dim[i]
        self.cov = nn.Sequential(*cov)
        # 4) fully connected classifier: 9 * 2 = 18 -> ... -> 10
        input_dim = high_dim * weight_dim
        self.layers_hid = [input_dim] + layers_hid
        self.layers_hid_num = len(self.layers_hid) - 2
        self.fc_hid = nn.Sequential(*[nn.Linear(self.layers_hid[i], self.layers_hid[i + 1])
                                      for i in range(self.layers_hid_num + 1)])
        self.to(dtype)

    def fnn_q(self, x):
        for i in range(self.layers_qhid_num):
            x = torch.sin(self.fc_q[i](x))
            temp = torch.eye(x.shape[-1], self.layers_q[i + 1], dtype=self.dtype, device=x.device)
            x = x + x @ temp  # temp is the identity, so this doubles x
        return self.fc_q[-1](x)

    def icnn(self, x):
        h = x.reshape(x.shape[0], 1, 4, 4)  # (N, 16) -> (N, 1, 4, 4)
        for layer in self.icov:
            h = torch.sin(layer(h))
        return h

    def cnn(self, h):
        for layer in self.cov:
            h = torch.sin(layer(h))
        return h.reshape(h.shape[0], -1)  # (N, 1, 9, 2) -> (N, 18)

    def fnn_h(self, x):
        for i in range(self.layers_hid_num):
            x = torch.relu(self.fc_hid[i](x))
            temp = torch.eye(x.shape[-1], self.layers_hid[i + 1], dtype=self.dtype, device=x.device)
            x = x + x @ temp  # temp is the identity, so this doubles x
        return self.fc_hid[-1](x)

    def forward(self, x):
        x = self.fnn_q(x)     # (N, 16)
        x = self.icnn(x)      # (N, 1, 36, 18)
        x = self.cnn(x)       # (N, 18)
        return self.fnn_h(x)  # (N, 10) logits

    def total_para(self):  # count parameters
        return sum(p.numel() for p in self.parameters())


def pred(net, images):
    return net(images).argmax(dim=1)


def SOmax(X, y):
    # softmax probability of the true class; only used by the hand-written loss below
    y = torch.tensor(__one_hot_label(y), dtype=X.dtype)
    return (torch.exp(X) * y).sum(1, keepdim=True) / torch.exp(X).sum(1, keepdim=True)


# Hand-written alternative to nn.CrossEntropyLoss (kept for reference):
# def Loss(net, images, labels):
#     logits = net(images)
#     return -torch.log(SOmax(logits, labels)).mean()


def Loss(net, images, labels):
    criterion = nn.CrossEntropyLoss()  # takes raw logits and integer class labels
    logits = net(images)
    return criterion(logits, labels)


def pred_acc(net, images, labels):
    # number of correctly classified images; no autograd graph is needed here
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum()


def Train(net, train_images, train_labels, batch, epoch, optim):
    m = 0  # number of finished epochs
    n = len(train_images)
    iter_num = int(epoch * n / batch)
    for i in range(iter_num):
        x = i * batch % n  # start index of the mini-batch
        y = x + batch      # end index of the mini-batch
        loss = Loss(net, train_images[x:y], train_labels[x:y])
        optim.zero_grad()
        loss.backward()
        optim.step()
        if i % 20 == 0:
            print('the iteration:%d,the batch_loss:%.3e,the trained photo:%d' % (i, loss.item(), y))
        if (i + 1) * batch % n == 0:  # last mini-batch of an epoch
            m = m + 1
            acc = pred_acc(net, train_images, train_labels).item() / n
            print('the epoch:%d,the acc:%.2f' % (m, acc))


def test_acc(net, images, labels):
    with torch.no_grad():
        pred_label = net(images).argmax(dim=1)
    return pred_label.eq(labels).sum().item() / images.shape[0]


# Folder containing the four MNIST .gz files (the author used an absolute Windows path here)
data_dir = 'mnist_dataset'
x_train_path = os.path.join(data_dir, 'train-images-idx3-ubyte.gz')
y_train_path = os.path.join(data_dir, 'train-labels-idx1-ubyte.gz')
x_test_path = os.path.join(data_dir, 't10k-images-idx3-ubyte.gz')
y_test_path = os.path.join(data_dir, 't10k-labels-idx1-ubyte.gz')

(x_train, y_train), (x_test, y_test) = load_mnist(x_train_path, y_train_path, x_test_path, y_test_path)

tic = time.time()
train_images = torch.tensor(x_train).float()
train_labels = torch.tensor(y_train).long()
test_images = torch.tensor(x_test).float()
test_labels = torch.tensor(y_test).long()

input_dim = 784
dtype = torch.float32
layers_q = [input_dim, 10, 16]  # last size must be 16 for the 4x4 reshape in icnn
layers_hid = [20, 10]
net = Net(layers_q, layers_hid, dtype)

# optim = torch.optim.SGD(net.parameters(), lr=1e-3, momentum=0.78)
optim = torch.optim.Adam(net.parameters(), lr=1e-3, betas=(0.9, 0.999))
batch = 1000
epoch = 4
Train(net, train_images, train_labels, batch, epoch, optim)

ela = time.time() - tic
print('the time:%.2f,the test_acc:%.2f' % (ela, test_acc(net, test_images, test_labels)))

plt.imshow(x_train[0, :].reshape(28, 28))  # show the first training image
plt.show()
```
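The sketch below traces the feature-map size through the transposed-2D Net above. It is a standalone copy of its conv layers, with strides given as (height, width). It also shows why `layers_q[-1]` must be 16: `icnn` reshapes that vector into a 1×4×4 map. The two transposed convolutions enlarge the map to 36×18, and the two strided convolutions shrink it to 9×2, leaving 18 features for `fnn_h`.

```python
import torch
import torch.nn as nn

# Standalone copy of the conv layers in the transposed-2D Net (activations omitted)
up = nn.Sequential(
    nn.ConvTranspose2d(1, 3, kernel_size=10, stride=(2, 1)),
    nn.ConvTranspose2d(3, 1, kernel_size=6, stride=(2, 1)),
)
down = nn.Sequential(
    nn.Conv2d(1, 3, kernel_size=10, stride=(2, 1)),
    nn.Conv2d(3, 1, kernel_size=6, stride=(1, 2)),
)
z = torch.zeros(2, 16).reshape(2, 1, 4, 4)  # fnn_q output (layers_q[-1] = 16) as a 4x4 map
h = z
for layer in list(up) + list(down):
    h = layer(h)
    print(tuple(h.shape))
# (2, 3, 16, 13)
# (2, 1, 36, 18)
# (2, 3, 14, 9)
# (2, 1, 9, 2)

print(h.reshape(2, -1).shape)  # torch.Size([2, 18])
```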
