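This time, let's use a complete program to show how TensorBoard is actually applied in a real production setting. Our task is to build a convolutional neural network (CNN) that solves the MNIST handwritten-digit recognition problem.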

First, import the required Python libraries:

```python
# TensorFlow 1.x only: tf.contrib and the tutorials package were removed in TF 2.x
import tensorflow as tf
from tensorflow.contrib import slim
from tensorflow.examples.tutorials.mnist import input_data
```

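slim is a library that makes building, training, and evaluating neural networks simpler. It removes much of the repetitive boilerplate found in raw TensorFlow code, making programs more compact and readable. slim also ships many well-known computer-vision models (VGG, AlexNet, etc.), which we can use directly or extend in various ways.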

```python
with tf.Graph().as_default() as graph:
    # Download MNIST if needed and load it with one-hot labels
    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

    x = tf.placeholder(tf.float32, shape=[None, 784])
    y = tf.placeholder(tf.float32, shape=[None, 10])

    # Reshape flat 784-pixel vectors into 28x28 single-channel images
    x_train = tf.reshape(x, [-1, 28, 28, 1])
    # Show up to 10 input images in TensorBoard's Images tab
    tf.summary.image('input', x_train, 10)

    # Every conv / fully connected layer gets batch norm + ReLU by default
    with slim.arg_scope([slim.conv2d, slim.fully_connected],
                        normalizer_fn=slim.batch_norm,
                        activation_fn=tf.nn.relu):
        # slim.max_pool2d defaults to 'VALID' padding; use 'SAME' here
        with slim.arg_scope([slim.max_pool2d], padding='SAME'):
            # slim.conv2d has no `name` argument; its default scope is 'Conv'
            conv1 = slim.conv2d(x_train, 32, [5, 5])
            # Weights of the first conv layer, for the Histograms tab
            conv_vars = tf.get_collection(tf.GraphKeys.MODEL_VARIABLES, 'Conv')
            tf.summary.histogram('conv_weights', conv_vars[0])

            pool1 = slim.max_pool2d(conv1, [2, 2])
            conv2 = slim.conv2d(pool1, 64, [5, 5])
            pool2 = slim.max_pool2d(conv2, [2, 2])
            flatten = slim.flatten(pool2)
            fc = slim.fully_connected(flatten, 1024)
            # Output layer: raw logits, no activation
            logits = slim.fully_connected(fc, 10, activation_fn=None)
            softmax = tf.nn.softmax(logits, name='output')

    with tf.name_scope('loss'):
        loss = tf.losses.softmax_cross_entropy(onehot_labels=y, logits=logits)
        tf.summary.scalar('loss', loss)

    train_op = slim.optimize_loss(loss, slim.get_or_create_global_step(),
                                  learning_rate=0.01,
                                  optimizer='Adam')

    correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(y, 1))
    with tf.name_scope('accuracy'):
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        tf.summary.scalar('accuracy', accuracy)

    # Merge all summary ops into a single op that we run at each step
    summary = tf.summary.merge_all()
```
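To see what each layer does to the data, here is a small pure-Python trace of the per-image tensor shapes in the graph above. It is a sketch that assumes slim's defaults: stride 1 and `'SAME'` padding for `slim.conv2d`, and stride 2 for `slim.max_pool2d`, whose padding the `arg_scope` sets to `'SAME'`. Under those assumptions each spatial size becomes `ceil(size / stride)`. The helpers `same_out` and `cnn_shapes` are hypothetical.

```python
import math


def same_out(size, stride):
    """Spatial output size of a conv/pool layer with 'SAME' padding."""
    return math.ceil(size / stride)


def cnn_shapes(height=28, width=28):
    """Trace (H, W, C) through conv1 -> pool1 -> conv2 -> pool2 -> flatten -> fc -> logits."""
    shapes = [('input', (height, width, 1))]
    h, w = height, width
    for name, channels, stride in [('conv1', 32, 1), ('pool1', 32, 2),
                                   ('conv2', 64, 1), ('pool2', 64, 2)]:
        h, w = same_out(h, stride), same_out(w, stride)
        shapes.append((name, (h, w, channels)))
    shapes.append(('flatten', (h * w * 64,)))
    shapes.append(('fc', (1024,)))
    shapes.append(('logits', (10,)))
    return shapes


for name, shape in cnn_shapes():
    print(f'{name:8s} {shape}')
```

Under these assumptions, the first fully connected layer receives 7 × 7 × 64 = 3136 features per image. The batch dimension is omitted throughout.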

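Don't forget to actually run the model: start a session, evaluate the merged summaries, and write them to local disk with a FileWriter (here, into the ./cnn directory).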

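```python
# Pass the graph explicitly: the model was built in `graph`, not the global default graph
with tf.Session(graph=graph) as sess:
    # Write the graph definition (Graphs tab) and all later summaries under ./cnn
    writer = tf.summary.FileWriter('cnn', sess.graph)
    sess.run(tf.global_variables_initializer())
    sess.run(tf.local_variables_initializer())

    for i in range(500):
        batch = mnist.train.next_batch(50)
        if i % 100 == 0:
            train_accuracy = accuracy.eval(feed_dict={x: batch[0], y: batch[1]})
            print("step %d, training accuracy %g" % (i, train_accuracy))
        # One training step that also evaluates the merged summaries
        _, w_summary = sess.run([train_op, summary],
                                feed_dict={x: batch[0], y: batch[1]})
        # Record this step's summaries; `i` becomes the x-axis in TensorBoard
        writer.add_summary(w_summary, i)

    # Flush any pending events to disk
    writer.close()
```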
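The training accuracy printed every 100 steps comes from the `accuracy` node. It is the fraction of examples whose highest-scoring logit (`tf.argmax(logits, 1)`) matches the position of the 1 in the one-hot label. The `loss` node is the batch mean of the softmax cross-entropy. The following pure-Python sketch mirrors both computations on plain lists. The function names are our own and are not TensorFlow's, and the small batch is made up for illustration.

```python
import math


def argmax(row):
    """Index of the largest value (first such index on ties)."""
    return max(range(len(row)), key=row.__getitem__)


def accuracy(logits, onehot_labels):
    """Fraction of rows where argmax(logits) == argmax(label)."""
    correct = [argmax(l) == argmax(t) for l, t in zip(logits, onehot_labels)]
    return sum(correct) / len(correct)


def softmax_cross_entropy(logits, onehot_labels):
    """Batch mean of -sum(label * log(softmax(logits)))."""
    total = 0.0
    for l, t in zip(logits, onehot_labels):
        m = max(l)  # subtract the max before exp() for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in l))
        # log(softmax(v)) = v - log_sum_exp
        total += -sum(p * (v - log_sum_exp) for p, v in zip(t, l))
    return total / len(logits)


# Hypothetical 3-class batch of two examples (MNIST itself has 10 classes)
logits = [[2.0, 0.5, 0.1], [0.2, 0.3, 1.5]]
labels = [[1, 0, 0], [0, 1, 0]]
print(accuracy(logits, labels))  # first prediction right, second wrong -> 0.5
print(softmax_cross_entropy(logits, labels))
```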

Finally, we can run the tensorboard command for visual analysis:

```bash
$ tensorboard --logdir=./cnn
```

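Open a browser and visit localhost:6006. Each summary recorded above appears in TensorBoard:

- the loss and accuracy curves in the Scalars tab
- the input images in the Images tab
- the conv1 weight histograms in the Histograms tab
- the model structure in the Graphs tab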

Hyperparameter tuning

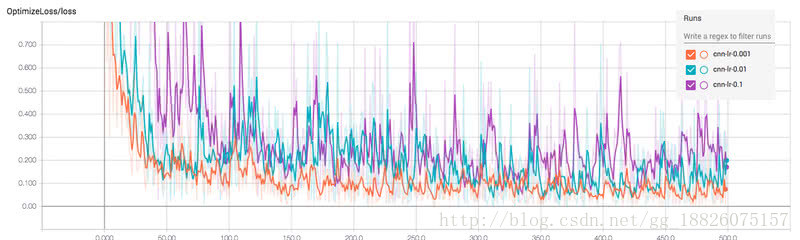

Next, we show how to use TensorBoard to choose model hyperparameters, using the learning rate as the example.

Only small changes to the code are needed. We save the logs for learning rates 0.1, 0.01, and 0.001 into separate subdirectories.

```python
import os

import tensorflow as tf
from tensorflow.contrib import slim
from tensorflow.examples.tutorials.mnist import input_data


def cnn_model(learning_rate):
    """Build the same CNN as above in a fresh graph, with the given learning rate."""
    with tf.Graph().as_default() as graph:
        mnist = input_data.read_data_sets('MNIST_data', one_hot=True)
        x = tf.placeholder(tf.float32, shape=[None, 784])
        y = tf.placeholder(tf.float32, shape=[None, 10])
        x_train = tf.reshape(x, [-1, 28, 28, 1])
        tf.summary.image('input', x_train, 10)

        with slim.arg_scope([slim.conv2d, slim.fully_connected],
                            normalizer_fn=slim.batch_norm,
                            activation_fn=tf.nn.relu):
            with slim.arg_scope([slim.max_pool2d], padding='SAME'):
                conv1 = slim.conv2d(x_train, 32, [5, 5])
                conv_vars = tf.get_collection(tf.GraphKeys.MODEL_VARIABLES, 'Conv')
                tf.summary.histogram('conv_weights', conv_vars[0])
                pool1 = slim.max_pool2d(conv1, [2, 2])
                conv2 = slim.conv2d(pool1, 64, [5, 5])
                pool2 = slim.max_pool2d(conv2, [2, 2])
                flatten = slim.flatten(pool2)
                fc = slim.fully_connected(flatten, 1024)
                logits = slim.fully_connected(fc, 10, activation_fn=None)
                softmax = tf.nn.softmax(logits, name='output')

        with tf.name_scope('loss'):
            loss = tf.losses.softmax_cross_entropy(onehot_labels=y, logits=logits)
            tf.summary.scalar('loss', loss)

        # The only change from the previous version: the learning rate is a parameter
        train_op = slim.optimize_loss(loss, slim.get_or_create_global_step(),
                                      learning_rate=learning_rate,
                                      optimizer='Adam')

        with tf.name_scope('accuracy'):
            correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(y, 1))
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
            tf.summary.scalar('accuracy', accuracy)

        summary = tf.summary.merge_all()

    # Named `model` rather than `vars` to avoid shadowing the built-in vars()
    model = {'x': x, 'y': y, 'accuracy': accuracy,
             'summary': summary, 'mnist': mnist}
    return model, train_op, graph


for learning_rate in [0.1, 0.01, 0.001]:
    model, train_op, graph = cnn_model(learning_rate)
    with tf.Session(graph=graph) as sess:
        # One subdirectory per learning rate, e.g. cnn/cnn-lr-0.01;
        # TensorBoard shows each subdirectory of --logdir as a separate run
        log_dir = os.path.join('cnn', 'cnn-lr-{}'.format(learning_rate))
        writer = tf.summary.FileWriter(log_dir, sess.graph)
        sess.run(tf.global_variables_initializer())
        sess.run(tf.local_variables_initializer())

        for i in range(500):
            batch = model['mnist'].train.next_batch(50)
            feed = {model['x']: batch[0], model['y']: batch[1]}
            if i % 100 == 0:
                train_accuracy = model['accuracy'].eval(feed_dict=feed)
                print("step %d, training accuracy %g" % (i, train_accuracy))
            _, w_summary = sess.run([train_op, model['summary']], feed_dict=feed)
            writer.add_summary(w_summary, i)

        writer.close()
```
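TensorBoard treats each subdirectory under `--logdir` as a separate run. That is why the loop above writes every learning rate to its own `cnn/cnn-lr-<rate>` folder, so the curves can be overlaid and compared. The sketch below reproduces just that naming scheme and runs without TensorFlow. `run_dirs` is a hypothetical helper. One caveat when extending the sweep: `str.format` prints very small floats in scientific notation, so a rate of `0.00001` produces a folder named `cnn-lr-1e-05`.

```python
import os


def run_dirs(learning_rates, root='cnn'):
    """Map each learning rate to its own log subdirectory (one TensorBoard run each)."""
    return {lr: os.path.join(root, 'cnn-lr-{}'.format(lr)) for lr in learning_rates}


for lr, path in run_dirs([0.1, 0.01, 0.001]).items():
    print(lr, path)
```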

Then, as before, run the tensorboard command:

```bash
$ tensorboard --logdir=./cnn
```

and open localhost:6006 in a browser.

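In the Scalars tab, the orange curve clearly has the lowest loss and converges fastest. From this we can conclude that the optimal learning rate is around 0.001.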
