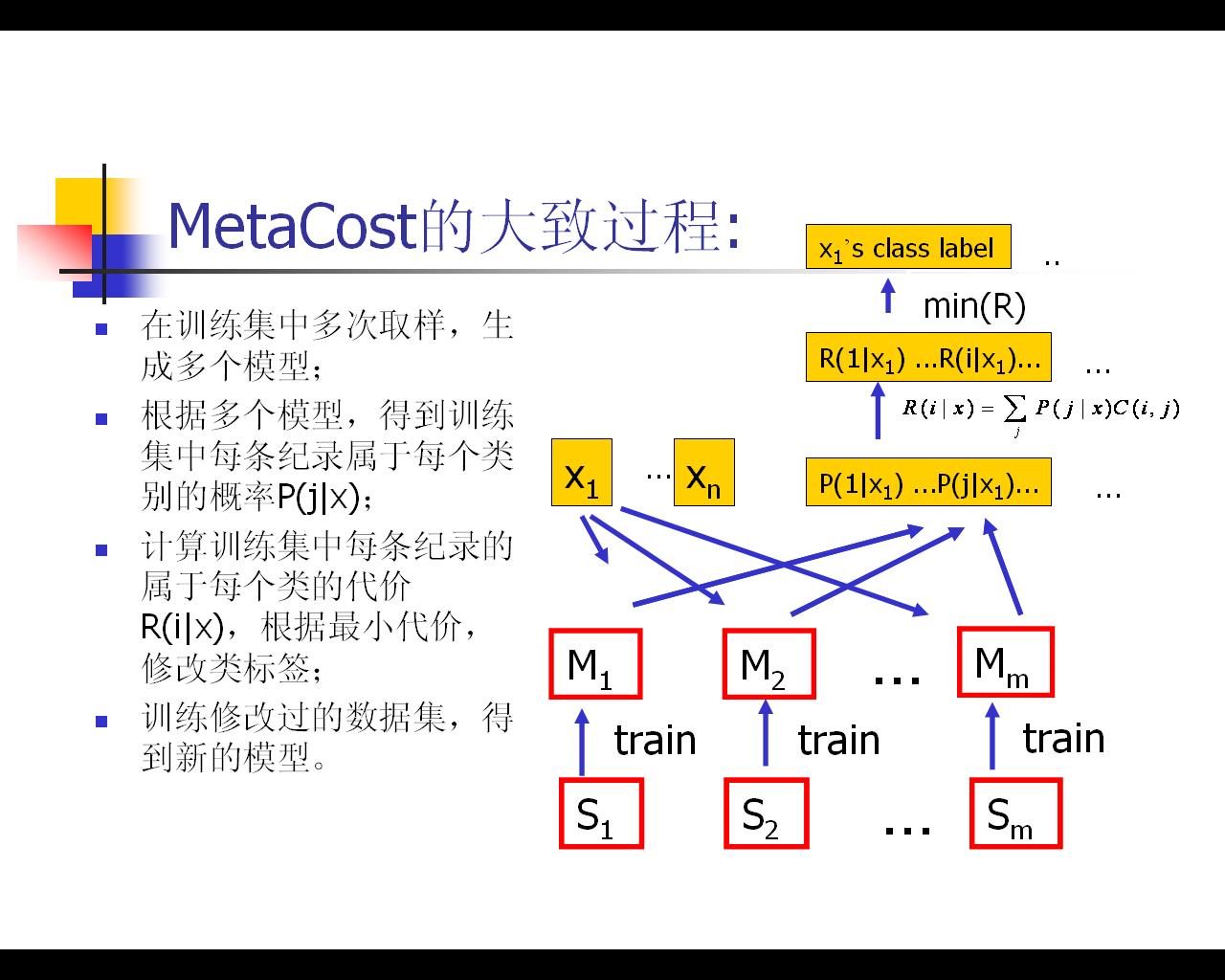

MetaCost should produce results similar to those of a classifier created by passing the base learner to Bagging, which is in turn passed to a CostSensitiveClassifier operating on minimum expected cost. The difference is that MetaCost produces a single cost-sensitive classifier built with the base learner, which gives fast classification and interpretable output (if the base learner itself is interpretable). This implementation uses all bagging iterations when reclassifying the training data. The MetaCost paper reports a marginal improvement when each training instance is reclassified using only those iterations whose bag contains that instance.
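As a reference for the phrase "minimum expected cost", the rule can be written as below. The notation is an assumption made for this note and not taken from the source: $P(i \mid x)$ is the class probability estimated by the bagged ensemble, and $C(i, j)$ is the cost of predicting class $j$ when the true class is $i$.

$$
\hat{y}(x) = \arg\min_{j} \sum_{i} P(i \mid x)\, C(i, j)
$$

With all off-diagonal costs equal, this reduces to picking the most probable class.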

    /**
     * Builds the model of the base learner.
     *
     * @param data the training data
     * @exception Exception if the classifier could not be built successfully
     */
    public void buildClassifier(Instances data) throws Exception {

      if (!data.classAttribute().isNominal()) {
        throw new UnsupportedClassTypeException("Class attribute must be nominal!");
      }
      if (m_MatrixSource == MATRIX_ON_DEMAND) {
        String costName = data.relationName() + CostMatrix.FILE_EXTENSION;
        File costFile = new File(getOnDemandDirectory(), costName);
        if (!costFile.exists()) {
          throw new Exception("On-demand cost file doesn't exist: " + costFile);
        }
        setCostMatrix(new CostMatrix(new BufferedReader(
                                     new FileReader(costFile))));
      }

      // Set up the bagger
      Bagging bagger = new Bagging();
      bagger.setClassifier(getClassifier());
      bagger.setSeed(getSeed());
      bagger.setNumIterations(getNumIterations());
      bagger.setBagSizePercent(getBagSizePercent());
      bagger.buildClassifier(data);

      // Use the bagger to reassign class values according to minimum expected
      // cost
      Instances newData = new Instances(data);
      for (int i = 0; i < newData.numInstances(); i++) {
        Instance current = newData.instance(i);
        double [] pred = bagger.distributionForInstance(current);
        int minCostPred = Utils.minIndex(m_CostMatrix.expectedCosts(pred));
        current.setClassValue(minCostPred);
      }

      // Build a classifier using the reassigned data
      m_Classifier.buildClassifier(newData);
    }
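The relabeling step in the loop above can be illustrated without Weka. The following is a minimal sketch, not the library's implementation: the class `MinExpectedCostSketch` and its methods are hypothetical names, the cost matrix is a plain `double[][]` assumed to have rows for the actual class and columns for the predicted class, and the probability distributions are made-up stand-ins for what the bagged ensemble would return.

```java
import java.util.Arrays;

// Hypothetical helper for illustration only; not part of Weka.
final class MinExpectedCostSketch {

  /**
   * Expected cost of each possible prediction.
   * Assumes costMatrix[actual][predicted] holds the misclassification cost.
   */
  static double[] expectedCosts(double[][] costMatrix, double[] probs) {
    int numClasses = probs.length;
    double[] costs = new double[numClasses];
    for (int predicted = 0; predicted < numClasses; predicted++) {
      for (int actual = 0; actual < numClasses; actual++) {
        costs[predicted] += probs[actual] * costMatrix[actual][predicted];
      }
    }
    return costs;
  }

  /** Index of the smallest value; the first index wins on ties. */
  static int minIndex(double[] values) {
    int best = 0;
    for (int i = 1; i < values.length; i++) {
      if (values[i] < values[best]) {
        best = i;
      }
    }
    return best;
  }

  /** Assigns each instance the class with minimum expected cost. */
  static int[] relabel(double[][] costMatrix, double[][] distributions) {
    int[] labels = new int[distributions.length];
    for (int i = 0; i < distributions.length; i++) {
      labels[i] = minIndex(expectedCosts(costMatrix, distributions[i]));
    }
    return labels;
  }

  public static void main(String[] args) {
    // Illustrative costs: missing class 1 is five times worse than missing class 0.
    double[][] costMatrix = {
      {0.0, 1.0},
      {5.0, 0.0}
    };
    // Illustrative ensemble probability estimates for three instances.
    double[][] distributions = {
      {0.9, 0.1},
      {0.7, 0.3},
      {0.2, 0.8}
    };
    System.out.println(Arrays.toString(relabel(costMatrix, distributions)));
  }
}
```

In this example the second instance is relabeled as class 1 even though class 0 is more probable, because predicting class 0 has expected cost 0.3 × 5 = 1.5 while predicting class 1 has expected cost 0.7 × 1 = 0.7. This is the effect that lets the final base learner, trained on the relabeled data, become cost-sensitive.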
