Hello, and welcome to 风筝's blog.

Feel free to join the discussion.

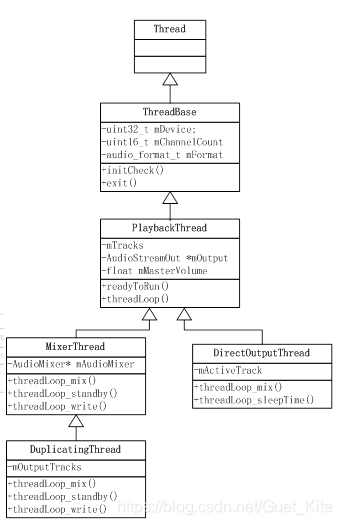

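AudioFlinger is the engine of the Android audio system. One of its main jobs is managing the input and output stream devices and processing and transporting audio stream data. That work is done by the playback threads (PlaybackThread and its subclasses) and the record thread (RecordThread).

The playback threads all ultimately derive from Thread: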

- class Thread : virtual public RefBase (system/core/include/utils/Thread.h)

- class ThreadBase : public Thread(frameworks/av/services/audioflinger/Threads.h)

- class PlaybackThread : public ThreadBase(frameworks/av/services/audioflinger/Threads.h)

- class MixerThread : public PlaybackThread(frameworks/av/services/audioflinger/Threads.h)

In the earlier post on the openOutput flow, Android音频子系统(一)------openOutput打开流程, we saw that openOutput_l creates the MixerThread object:

sp<AudioFlinger::PlaybackThread> AudioFlinger::openOutput_l(audio_module_handle_t module,

audio_io_handle_t *output,

audio_config_t *config,

audio_devices_t devices,

const String8& address,

audio_output_flags_t flags)

{

if (status == NO_ERROR) {

// Create the playback thread

PlaybackThread *thread;

if (flags & AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD) {

thread = new OffloadThread(this, outputStream, *output, devices, mSystemReady);

ALOGV("openOutput_l() created offload output: ID %d thread %p", *output, thread);

} else if ((flags & AUDIO_OUTPUT_FLAG_DIRECT)

|| !isValidPcmSinkFormat(config->format)

|| !isValidPcmSinkChannelMask(config->channel_mask)) {

thread = new DirectOutputThread(this, outputStream, *output, devices, mSystemReady);

ALOGV("openOutput_l() created direct output: ID %d thread %p", *output, thread);

} else {

thread = new MixerThread(this, outputStream, *output, devices, mSystemReady);

ALOGV("openOutput_l() created mixer output: ID %d thread %p", *output, thread);

}

// Add the playback thread to mPlaybackThreads

mPlaybackThreads.add(*output, thread);

return thread;

}

}

Once the MixerThread is created, it is added to mPlaybackThreads.

As we know, AudioFlinger keeps track of its record and playback threads in two member variables:

DefaultKeyedVector< audio_io_handle_t, sp<PlaybackThread>> mPlaybackThreads;

DefaultKeyedVector< audio_io_handle_t, sp<RecordThread>> mRecordThreads;
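The branch order in openOutput_l and the handle-keyed container can be sketched in plain C++. This is only an illustration under stated assumptions: the flag values, ThreadKind, PlaybackThreadStub and ThreadRegistry are hypothetical stand-ins, and std::map replaces DefaultKeyedVector.

```cpp
#include <cstdint>
#include <map>
#include <memory>

// Hypothetical stand-ins for the AUDIO_OUTPUT_FLAG_* bits; values are illustrative only.
enum OutputFlag : uint32_t {
    kFlagDirect = 1u << 0,
    kFlagCompressOffload = 1u << 1,
};

enum class ThreadKind { Mixer, Direct, Offload };

// Same branch order as openOutput_l(): offload first, then direct
// (or a format the mixer cannot handle), otherwise a mixer thread.
ThreadKind chooseThreadKind(uint32_t flags, bool pcmMixable) {
    if (flags & kFlagCompressOffload) return ThreadKind::Offload;
    if ((flags & kFlagDirect) || !pcmMixable) return ThreadKind::Direct;
    return ThreadKind::Mixer;
}

struct PlaybackThreadStub {
    ThreadKind kind;
};

// Toy registry keyed by an integer I/O handle, standing in for mPlaybackThreads.
class ThreadRegistry {
public:
    void add(int handle, ThreadKind kind) {
        threads_[handle] = std::make_shared<PlaybackThreadStub>(PlaybackThreadStub{kind});
    }

    // Returns an empty pointer when no thread is registered for the handle.
    std::shared_ptr<PlaybackThreadStub> find(int handle) const {
        auto it = threads_.find(handle);
        if (it == threads_.end()) return nullptr;
        return it->second;
    }

private:
    std::map<int, std::shared_ptr<PlaybackThreadStub>> threads_;
};
```

Looking a thread up by its handle later is then a single find() call, which is the role the output handle plays throughout AudioFlinger.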

Let's start with the MixerThread constructor:

AudioFlinger::MixerThread::MixerThread(const sp<AudioFlinger>& audioFlinger, AudioStreamOut* output,

audio_io_handle_t id, audio_devices_t device, bool systemReady, type_t type)

: PlaybackThread(audioFlinger, output, id, device, type, systemReady),

// mAudioMixer below

// mFastMixer below

mFastMixerFutex(0),

mMasterMono(false)

// mOutputSink below

// mPipeSink below

// mNormalSink below

{

mAudioMixer = new AudioMixer(mNormalFrameCount, mSampleRate);

mOutputSink = new AudioStreamOutSink(output->stream);

mOutputSink->negotiate(offers, 1, NULL, numCounterOffers);

// initialize fast mixer depending on configuration

bool initFastMixer;

switch (kUseFastMixer) {

case FastMixer_Never:

initFastMixer = false;

break;

case FastMixer_Always:

initFastMixer = true;

break;

case FastMixer_Static:

case FastMixer_Dynamic:

initFastMixer = mFrameCount < mNormalFrameCount;

break;

}

}

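The constructor mainly creates two objects. One is the AudioMixer object mAudioMixer, which is the key to mixing. The other is the AudioStreamOutSink object mOutputSink, which is then set up through negotiate(). Finally, the configuration (kUseFastMixer, recorded in initFastMixer) decides whether the fast mixer is used.

Normally, the job of a playback thread is to loop over the playback requests coming from the upper layers, pass the audio data down to the next layer, and eventually write it to the hardware device. So in principle there should be a thread loop somewhere.

From the inheritance chain above, MixerThread ultimately derives from RefBase, the base class for objects managed by strong pointers (sp<>). RefBase calls onFirstRef() when the first strong reference to an object is taken, so that is the method that runs the first time the thread is referenced.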

//@Threads.cpp

void AudioFlinger::PlaybackThread::onFirstRef()

{

run(mThreadName, ANDROID_PRIORITY_URGENT_AUDIO);

}

It is a very simple function that just calls run(). Here is the implementation:

//@Threads.cpp (system/core/libutils)

status_t Thread::run(const char* name, int32_t priority, size_t stack)

{

// This function creates a thread whose entry point is _threadLoop; _threadLoop in turn calls the subclass's threadLoop() and decides whether the loop should end.

if (mCanCallJava) {

res = createThreadEtc(_threadLoop,

this, name, priority, stack, &mThread);

} else {

res = androidCreateRawThreadEtc(_threadLoop,

this, name, priority, stack, &mThread);

}

}

This starts a new thread that runs Thread::_threadLoop, which in turn calls the subclass's threadLoop(), i.e. PlaybackThread::threadLoop():

int Thread::_threadLoop(void* user)

{

do {

bool result;

if (first) {

first = false;

self->mStatus = self->readyToRun();

result = (self->mStatus == NO_ERROR);

if (result && !self->exitPending()) {

result = self->threadLoop();

}

} else {

result = self->threadLoop();

}

} while(strong != 0);

}

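Here self is the Thread subclass object. If threadLoop() returns false, or the thread has been asked to exit, the while loop ends.

All in all, run() is fairly simple: it starts a new thread that indirectly calls threadLoop(), which then loops to do the mixing work.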
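The run() and threadLoop() relationship can be sketched with std::thread. This is a simplified illustration, not the libutils implementation: LoopThread and CountingThread are hypothetical names, and priorities, readyToRun() and reference counting are left out.

```cpp
#include <atomic>
#include <thread>

// Simplified stand-in for android::Thread, built on std::thread.
class LoopThread {
public:
    virtual ~LoopThread() = default;

    // Start the worker. It keeps calling threadLoop() until that returns
    // false or an exit has been requested.
    void run() {
        worker_ = std::thread([this] {
            while (!exitPending_.load() && threadLoop()) {
            }
        });
    }

    void requestExit() { exitPending_.store(true); }

    // Must be called before the object is destroyed.
    void join() {
        if (worker_.joinable()) worker_.join();
    }

protected:
    virtual bool threadLoop() = 0;  // return false to stop looping

private:
    std::thread worker_;
    std::atomic<bool> exitPending_{false};
};

// Example subclass: performs three iterations, then asks to stop.
class CountingThread : public LoopThread {
public:
    int iterations() const { return iterations_; }

protected:
    bool threadLoop() override { return ++iterations_ < 3; }

private:
    int iterations_ = 0;
};
```

A caller would create a CountingThread, call run(), and then join(); the subclass only has to supply threadLoop(), which is the same division of labor PlaybackThread relies on.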

With that, the audio path is set up and an AudioTrack can write data into it.

Next, let's look at how the loop body, PlaybackThread::threadLoop(), works:

bool AudioFlinger::PlaybackThread::threadLoop()

{

while (!exitPending())

{

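// Handle configuration changes. When a configuration event occurs, sendConfigEvent_l() must be called to notify the PlaybackThread,

// so that the PlaybackThread can handle the event promptly; a common configuration event is an audio route switch.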

processConfigEvents_l();

//......

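// If there are no active tracks and the standby time has expired, or the thread must be suspended, enter standby.

// Right after a MixerThread is created, mActiveTracks is normally empty and the current time is past the standby time, so the thread enters standby.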

if ((!mActiveTracks.size() && systemTime() > mStandbyTimeNs) ||

isSuspended()) {

// put audio hardware into standby after short delay

if (shouldStandby_l()) {

threadLoop_standby();

mStandby = true;

}

// No active track is available and there are no pending config events

if (!mActiveTracks.size() && mConfigEvents.isEmpty()) {

// we're about to wait, flush the binder command buffer

IPCThreadState::self()->flushCommands();

clearOutputTracks();

// MixerThread sleeps here until AudioTrack::start() triggers a broadcast that wakes it up

mWaitWorkCV.wait(mLock);

continue;

}

}

// mMixerStatusIgnoringFastTracks is also updated internally

// Prepare the tracks for mixing

mMixerStatus = prepareTracks_l(&tracksToRemove);

if (mBytesRemaining == 0) {

mCurrentWriteLength = 0;

// Mixing only happens once the tracks are ready

if (mMixerStatus == MIXER_TRACKS_READY) {

// threadLoop_mix() sets mCurrentWriteLength

threadLoop_mix(); // do the mixing

} else if ((mMixerStatus != MIXER_DRAIN_TRACK)

&& (mMixerStatus != MIXER_DRAIN_ALL)) {

// threadLoop_sleepTime sets mSleepTimeUs to 0 if data

// must be written to HAL

threadLoop_sleepTime(); // tracks are not ready: work out how long to sleep

if (mSleepTimeUs == 0) {

mCurrentWriteLength = mSinkBufferSize;

}

}

//......

}

//......

if (!waitingAsyncCallback()) {

// mSleepTimeUs == 0 means we must write to audio hardware

if (mSleepTimeUs == 0) {

mLastWriteTime = systemTime(); // also used for dumpsys

ret = threadLoop_write(); // this is where the data is actually written to the HAL

lastWriteFinished = systemTime();

delta = lastWriteFinished - mLastWriteTime; // how long the write took

} else if ((mMixerStatus == MIXER_DRAIN_TRACK) ||

(mMixerStatus == MIXER_DRAIN_ALL)) {

threadLoop_drain();

}

if (mType == MIXER && !mStandby) {

// write blocked detection

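// The write was too slow! maxPeriod is derived from the frame count and the sample rate and relates to the hardware latency, i.e. the time I2S needs to play out the data in the DMA buffer

if (delta > maxPeriod) {

mNumDelayedWrites++;

// An xrun occurred; log a warning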

if ((lastWriteFinished - lastWarning) > kWarningThrottleNs) {

ATRACE_NAME("underrun");

ALOGW("write blocked for %llu msecs, %d delayed writes, thread %p",

(unsigned long long) ns2ms(delta), mNumDelayedWrites, this);

lastWarning = lastWriteFinished;

}

}

}

}

threadLoop_removeTracks(tracksToRemove); // finally, remove the tracks that are no longer needed

clearOutputTracks();

}

threadLoop_exit();

}

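The function as a whole is fairly complex, but the overall flow breaks down into four steps:

1. prepareTracks_l() checks the state of each track; tracks in the ACTIVE state are kept in mActiveTracks and prepared for mixing.

2. threadLoop_mix() mixes the tracks that are active at the same time.

3. threadLoop_write() writes the data to the HAL for playback.

4. threadLoop_removeTracks() removes the tracks that are no longer needed.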
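To make the four steps concrete, here is a toy single pass over a list of tracks. It is a sketch under heavy simplifying assumptions: ToyTrack and runOnePass are hypothetical, samples are plain integers summed without volume or clamping, and the HAL write is only indicated by a comment.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, heavily simplified track: a queue of samples plus a stop flag.
struct ToyTrack {
    std::vector<int32_t> pending;  // samples not yet consumed
    bool stopped = false;
};

// One pass of the loop. Returns the mixed buffer that would be written to the HAL.
std::vector<int32_t> runOnePass(std::vector<ToyTrack>& active, size_t frames) {
    // 1. prepare: tracks that are stopped or out of data are scheduled for removal.
    std::vector<size_t> toRemove;
    for (size_t i = 0; i < active.size(); ++i) {
        if (active[i].stopped || active[i].pending.empty()) toRemove.push_back(i);
    }

    // 2. mix: sum the remaining tracks sample by sample.
    std::vector<int32_t> sink(frames, 0);
    for (ToyTrack& t : active) {
        if (t.stopped) continue;
        const size_t n = std::min(frames, t.pending.size());
        for (size_t k = 0; k < n; ++k) sink[k] += t.pending[k];
        t.pending.erase(t.pending.begin(), t.pending.begin() + n);
    }

    // 3. write: a real thread would hand `sink` to the HAL here.

    // 4. remove: drop the tracks collected in step 1, highest index first.
    for (auto it = toRemove.rbegin(); it != toRemove.rend(); ++it) {
        active.erase(active.begin() + *it);
    }
    return sink;
}
```

Removal is deferred to the end of the pass, just as tracksToRemove is only acted on after the write in the real loop.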

Let's look at the key steps in detail.

prepareTracks_l prepares the data:

AudioFlinger::PlaybackThread::mixer_state AudioFlinger::MixerThread::prepareTracks_l(

Vector< sp<Track> > *tracksToRemove)

{

// find out which tracks need to be processed

size_t count = mActiveTracks.size();

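// Process each track in turn

for (size_t i=0 ; i<count ; i++) {

const sp<Track> t = mActiveTracks[i].promote();

// The rest of the loop works on the raw pointer

Track* const track = t.get();

// Get the track's control block

audio_track_cblk_t* cblk = track->cblk();

// Work out how many frames are needed for playback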

const uint32_t sampleRate = track->mAudioTrackServerProxy->getSampleRate();

AudioPlaybackRate playbackRate = track->mAudioTrackServerProxy->getPlaybackRate();

desiredFrames = sourceFramesNeededWithTimestretch(

sampleRate, mNormalFrameCount, mSampleRate, playbackRate.mSpeed);

desiredFrames += mAudioMixer->getUnreleasedFrames(track->name());

uint32_t minFrames = 1;

// track->sharedBuffer() == 0 means this AudioTrack is not in static mode, i.e. its data is streamed rather than delivered all at once

if ((track->sharedBuffer() == 0) && !track->isStopped() && !track->isPausing() &&

(mMixerStatusIgnoringFastTracks == MIXER_TRACKS_READY)) {

minFrames = desiredFrames;

}

// The data is ready: set the volume and other parameters

size_t framesReady = track->framesReady();

mAudioMixer->setParameter(name, param, AudioMixer::VOLUME0, &vlf);

}

}

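Phew, that is far too much and far too complicated for me, so let's lean on someone else's analysis instead.

When AudioFlinger::PlaybackThread::threadLoop() learns that something has changed, it calls prepareTracks_l() to prepare the audio streams and the mixer again: tracks in the ACTIVE state are added to mActiveTracks, all other tracks are removed from mActiveTracks, and then the AudioMixer is set up again.

prepareTracks_l() prepares the audio streams and the mixer. The function is very complex, so I won't analyze it in detail here and will only list the key points of the flow: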

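- Iterate over mActiveTracks and handle each Track in turn, checking whether it is in the ACTIVE state;

- If the Track is ACTIVE, check whether its data is ready;

- Configure the mixer parameters from the stream volume, format, channel count, the track's sample rate, and the hardware device's sample rate;

- If the Track is PAUSED or STOPPED, add it to the tracksToRemove vector.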
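The "is the data ready" check depends on how many source frames one mix pass needs. The sketch below approximates that calculation; it is not the AOSP helper sourceFramesNeededWithTimestretch, whose exact rounding and margins are not reproduced here, and all three function names are hypothetical. It also ignores the mMixerStatusIgnoringFastTracks condition from the excerpt.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>

// Approximate number of source frames needed to produce dstFrames output frames
// when converting from srcRate to dstRate at the given playback speed.
size_t sourceFramesNeededApprox(uint32_t srcRate, size_t dstFrames,
                                uint32_t dstRate, double speed) {
    const double frames = static_cast<double>(dstFrames) * srcRate * speed / dstRate;
    return static_cast<size_t>(std::ceil(frames));
}

// Streaming tracks that are still playing must have a full pass worth of data;
// static (shared buffer) tracks and tracks that are stopping only need one frame.
size_t minFramesFor(bool isStatic, bool stoppedOrPausing, size_t desiredFrames) {
    return (!isStatic && !stoppedOrPausing) ? desiredFrames : 1;
}

// A track can be mixed in this pass once it has at least minFrames available.
bool isTrackReady(size_t framesReady, size_t minFrames) {
    return framesReady >= minFrames;
}
```

For example, a 44100 Hz track feeding a 48000 Hz output with a 960-frame mix buffer needs about 882 source frames per pass at normal speed.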

Once prepareTracks_l has finished preparing the data, mixing begins in threadLoop_mix:

void AudioFlinger::MixerThread::threadLoop_mix()

{

// mix buffers...

mAudioMixer->process();

}

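This is where the mixing happens. I'll skip the details for now and come back to take them on once my skills have leveled up!

After that, the mixed data is written to the HAL layer and from there on to the hardware device: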

ssize_t AudioFlinger::MixerThread::threadLoop_write()

{

if (mFastMixer != 0) {

FastMixerStateQueue *sq = mFastMixer->sq();

FastMixerState *state = sq->begin();

if (state->mCommand != FastMixerState::MIX_WRITE &&

(kUseFastMixer != FastMixer_Dynamic || state->mTrackMask > 1)) {

if (state->mCommand == FastMixerState::COLD_IDLE) {

// FIXME workaround for first HAL write being CPU bound on some devices

mOutput->write((char *)mSinkBuffer, 0);

}

}

}

return PlaybackThread::threadLoop_write();

}

ssize_t AudioFlinger::PlaybackThread::threadLoop_write()

{

// If an NBAIO sink is present, use it to write the normal mixer's submix

if (mNormalSink != 0) {

const size_t count = mBytesRemaining / mFrameSize;

ssize_t framesWritten = mNormalSink->write((char *)mSinkBuffer + offset, count);

if (framesWritten > 0) {

bytesWritten = framesWritten * mFrameSize;

} else {

bytesWritten = framesWritten;

}

// otherwise use the HAL / AudioStreamOut directly

} else {

bytesWritten = mOutput->write((char *)mSinkBuffer + offset, mBytesRemaining);

}

}

The key part is the ->write() call, which ends up in the HAL's write and pushes the data down to the lower layer.

For details, see: Android音频子系统(二)------threadLoop_write数据写入流程
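One detail in PlaybackThread::threadLoop_write() that is easy to miss is the unit conversion: the NBAIO sink works in frames, while the direct HAL path works in bytes. A minimal sketch of that bookkeeping, with hypothetical helper names and int64_t standing in for ssize_t:

```cpp
#include <cstddef>
#include <cstdint>

// How many whole frames are left to write, given the remaining byte count.
size_t framesRemaining(size_t bytesRemaining, size_t frameSize) {
    return bytesRemaining / frameSize;
}

// Convert the sink's return value (frames written, or zero / a negative error)
// into a byte count. Non-positive values pass through unchanged.
int64_t framesToBytesWritten(int64_t framesWritten, size_t frameSize) {
    if (framesWritten > 0) {
        return framesWritten * static_cast<int64_t>(frameSize);
    }
    return framesWritten;
}
```

With 16-bit stereo PCM the frame size is 4 bytes, so a sink that reports 240 frames written corresponds to 960 bytes.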

Finally, threadLoop_removeTracks is called to remove the tracks listed in tracksToRemove. For each track in that list, the associated output receives a stop request (i.e. AudioSystem::stopOutput(…)).

void AudioFlinger::MixerThread::threadLoop_removeTracks(const Vector< sp<Track> >& tracksToRemove)

{

PlaybackThread::threadLoop_removeTracks(tracksToRemove);

}

void AudioFlinger::PlaybackThread::threadLoop_removeTracks(

const Vector< sp<Track> >& tracksToRemove)

{

size_t count = tracksToRemove.size();

if (count > 0) {

for (size_t i = 0 ; i < count ; i++) {

const sp<Track>& track = tracksToRemove.itemAt(i);

if (track->isExternalTrack()) {

AudioSystem::stopOutput(mId, track->streamType(),

track->sessionId());

if (track->isTerminated()) {

AudioSystem::releaseOutput(mId, track->streamType(),

track->sessionId());

}

}

}

}

}

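Oh, and one more important thing. The comments in AudioFlinger::PlaybackThread::threadLoop() above say that the thread sleeps when there are no active tracks. So when does it wake up?

The answer is in Track::start: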

status_t AudioFlinger::PlaybackThread::Track::start(AudioSystem::sync_event_t event __unused,

audio_session_t triggerSession __unused)

{

status = playbackThread->addTrack_l(this);

}

status_t AudioFlinger::PlaybackThread::addTrack_l(const sp<Track>& track)

{

onAddNewTrack_l();

}

void AudioFlinger::PlaybackThread::onAddNewTrack_l()

{

ALOGV("signal playback thread");

// Broadcast to wake up the thread

broadcast_l();

}

This is what wakes up threadLoop.
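The sleep and wake-up pair (mWaitWorkCV.wait() in threadLoop and broadcast_l() on the start path) is a classic condition-variable pattern. Here is a minimal sketch using the standard library instead of Android's Mutex and Condition classes; ToyPlaybackThread and its members are hypothetical names.

```cpp
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

class ToyPlaybackThread {
public:
    // Client side, playing the role of Track::start() -> addTrack_l() -> broadcast_l().
    void addTrack(int trackId) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            activeTracks_.push_back(trackId);
        }
        waitWorkCv_.notify_all();  // wake the sleeping playback thread
    }

    // Playback-thread side: block until at least one track is active,
    // then return the id of the first active track.
    int waitForWork() {
        std::unique_lock<std::mutex> lock(mutex_);
        waitWorkCv_.wait(lock, [this] { return !activeTracks_.empty(); });
        return activeTracks_.front();
    }

private:
    std::mutex mutex_;
    std::condition_variable waitWorkCv_;
    std::vector<int> activeTracks_;
};
```

The predicate passed to wait() makes the sketch safe even if addTrack() runs before the worker starts waiting, which is why the real loop also re-checks mActiveTracks after every wake-up.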

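The three most commonly used stream control interfaces are:

- AudioFlinger::PlaybackThread::Track::start: starts playback. It puts the Track in the ACTIVE state, adds it to the mActiveTracks vector, and finally calls AudioFlinger::PlaybackThread::broadcast_l() to tell the PlaybackThread that something has changed

- AudioFlinger::PlaybackThread::Track::stop: stops playback. It puts the Track in the STOPPED state and finally calls AudioFlinger::PlaybackThread::broadcast_l() to tell the PlaybackThread that something has changed

- AudioFlinger::PlaybackThread::Track::pause: pauses playback. It puts the Track in the PAUSING state and finally calls AudioFlinger::PlaybackThread::broadcast_l() to tell the PlaybackThread that something has changed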

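Every MixerThread has exactly one AudioMixer of its own, whose job is to do the actual mixing.

AudioMixer's public interface falls into a few groups: parameters (setParameter), resampling (setResampler), volume (adjustVolumeRamp), buffers (setBufferProvider), and tracks (getTrackName).

At the core of AudioMixer is mState, a variable of type state_t; all the mixing work is reflected in it: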

//@AudioMixer.h

struct state_t {

uint32_t enabledTracks;

uint32_t needsChanged;

size_t frameCount;

process_hook_t hook; // one of process__*, never NULL

int32_t *outputTemp;

int32_t *resampleTemp;

NBLog::Writer* mLog;

int32_t reserved[1];

// FIXME allocate dynamically to save some memory when maxNumTracks < MAX_NUM_TRACKS

track_t tracks[MAX_NUM_TRACKS] __attribute__((aligned(32)));

};

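The tracks array has MAX_NUM_TRACKS = 32 entries, meaning at most 32 tracks can be mixed at once. Its element type, track_t, describes a single track, and what the setParameter interface ultimately changes is the properties of a track.

In AudioFlinger's threadLoop, prepareTracks_l is called over and over to prepare data. Each call is effectively an adjustment pass over all tracks, and any property change is passed to AudioMixer through setParameter.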
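The limit of 32 tracks lines up with enabledTracks and needsChanged being uint32_t: each track name can own one bit. The sketch below shows that bookkeeping under the assumption that names run from 0 to 31; ToyMixerState and the helper functions are hypothetical and not part of AudioMixer.

```cpp
#include <cstdint>

constexpr int kMaxNumTracks = 32;  // mirrors MAX_NUM_TRACKS from the text

struct ToyMixerState {
    uint32_t enabledTracks = 0;  // bit i set: track i takes part in mixing
    uint32_t needsChanged = 0;   // bit i set: track i must be revalidated
};

// name must be in [0, kMaxNumTracks).
inline void enableTrack(ToyMixerState& s, int name) {
    s.enabledTracks |= (1u << name);
    s.needsChanged |= (1u << name);
}

inline void disableTrack(ToyMixerState& s, int name) {
    s.enabledTracks &= ~(1u << name);
    s.needsChanged |= (1u << name);
}

// Count the set bits, i.e. the number of enabled tracks.
inline int countEnabled(const ToyMixerState& s) {
    int n = 0;
    for (uint32_t m = s.enabledTracks; m != 0; m &= m - 1) ++n;
    return n;
}
```

Using an unsigned literal (1u) keeps the shift well defined for name 31, the highest slot.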

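The AudioMixer::process() call seen earlier invokes mState.hook(&mState). hook is a function pointer that points to different implementations depending on the situation:

When AudioMixer is initialized, hook points to process__nop:

mState.hook = process__nop;

When the state or a parameter changes, hook points to process__validate: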

//@AudioMixer.cpp

void AudioMixer::setParameter(int name, int target, int param, void *value)

{

invalidateState(1 << name);

}

void AudioMixer::invalidateState(uint32_t mask)

{

mState.hook = process__validate;

}

process__validate in turn points hook at a different function depending on the situation:

void AudioMixer::process__validate(state_t* state)

{

// Initial value

state->hook = process__nop;

// For tracks in the enabled state

if (countActiveTracks > 0) {

if (resampling)

state->hook = process__genericResampling; // resample

else

state->hook = process__genericNoResampling; // no resampling

}

}
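The hook mechanism is a small function-pointer state machine. The following sketch imitates it with hypothetical names (ToyState, processValidate and so on); each hook only records which path ran, and running the newly chosen hook at the end of validation is an assumption of this sketch.

```cpp
#include <string>

struct ToyState;
using ProcessHook = void (*)(ToyState*);

struct ToyState {
    ProcessHook hook;      // current processing function, never null
    int enabledTracks;     // number of enabled tracks
    bool resampling;       // whether any enabled track needs resampling
    std::string lastPath;  // which hook ran last (for illustration only)
};

void processNop(ToyState* s) { s->lastPath = "nop"; }
void processNoResampling(ToyState* s) { s->lastPath = "noResampling"; }
void processResampling(ToyState* s) { s->lastPath = "resampling"; }

// Re-select the hook from the current state, then run it once.
void processValidate(ToyState* s) {
    s->hook = processNop;
    if (s->enabledTracks > 0) {
        s->hook = s->resampling ? processResampling : processNoResampling;
    }
    s->hook(s);
}

// Any parameter change routes the next process() call through validation.
void invalidate(ToyState* s) { s->hook = processValidate; }

// The mixer entry point simply calls whatever hook is installed.
void process(ToyState* s) { s->hook(s); }
```

The benefit of this design is that the expensive decision is made only after a change; steady-state calls to process() go straight to the already selected function.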
