First, the end result of the project:

This time we scrape Zhaopin (智联招聘), a Chinese recruitment site: https://www.zhaopin.com/

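First, log in. We want the code to keep working, so that anyone can run it and get results. To do that, we take the cookies from our logged-in session and put them in the request headers. Note that the cookies hold your login information, and they differ from machine to machine and change over time.

Find the network request named `user-id` and copy the cookie value from its headers. Do not right-click and choose "Copy value": that copies a decoded version in which the encoded parts turn into Chinese characters. Use the ordinary right-click "Copy" instead.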
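The reason for that warning can be seen with a small standard-library sketch. Parts of the raw cookie are percent-encoded. For example, the `locationInfo_search` value in the cookie used in this post contains `%E4%B8%9C%E8%8E%9E`, while a decoded copy contains Chinese characters instead. As far as I understand, `http.client`, which `requests` builds on, encodes string header values as Latin-1. A decoded cookie would therefore typically fail with a `UnicodeEncodeError` rather than be sent. `looks_raw` is a hypothetical helper and not part of any library.

```python
from urllib.parse import unquote

# Fragment of the locationInfo_search cookie as it appears in the raw request header
raw = "%22name%22:%22%E4%B8%9C%E8%8E%9E%22"

# Roughly what a decoded ("Copy value") copy of the same text looks like
decoded = unquote(raw)


def looks_raw(cookie: str) -> bool:
    """Hypothetical helper: True if the pasted cookie is still plain ASCII (not decoded)."""
    return cookie.isascii()


print(decoded)             # "name":"东莞"
print(looks_raw(raw))      # True
print(looks_raw(decoded))  # False

# Assumption: approximates how http.client encodes str header values (Latin-1)
try:
    decoded.encode("latin-1")
except UnicodeEncodeError:
    print("a decoded cookie cannot be sent as a Latin-1 header value")
```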

Paste it into the request headers:

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)",
        "cookie": 'x-zp-client-id=3c93dfd0-b090-49e6-e254-80e2fd244823; sts_deviceid=177e9137db1b32-00270991791f53-73e356b-1382400-177e9137db2cfe; sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%221062819381%22%2C%22first_id%22%3A%22177e91387de573-0029b455e8c501-73e356b-1382400-177e91387dfc4b%22%2C%22props%22%3A%7B%22%24latest_traffic_source_type%22%3A%22%E7%9B%B4%E6%8E%A5%E6%B5%81%E9%87%8F%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC_%E7%9B%B4%E6%8E%A5%E6%89%93%E5%BC%80%22%2C%22%24latest_referrer%22%3A%22%22%7D%2C%22%24device_id%22%3A%22177e91387de573-0029b455e8c501-73e356b-1382400-177e91387dfc4b%22%7D; ssxmod_itna=QqfxyDgDRGD==0KP0LKYIEP8rDCD0lrYt7nQrOx0vP2eGzDAxn40iDt==yD+/EQfwxawKmg25aYGmn4ba1jnraRNOIDB3DEx06xEGQxiiyDCeDIDWeDiDG+7D=xGYDjjtUlcDm4i7DYqGRDB=U8qDfGqGWFLQDmwNDGdK6D7QDIk=gnfrDEeDSKitdj7=DjubD/+DWV7=YT1cNjTUi51KPQDDHWBwC6B5P9=edbWpReWiDtqD9DC=Db+d3uc=lbepKWrhTjbhtI7D660GslDmXkADCaD4I0zGNzAqBrB3L0BqDG4VQeD; ssxmod_itna2=QqfxyDgDRGD==0KP0LKYIEP8rDCD0lrYt7nQEDnF8nDDsqzDLeEiq=m4Q9vd08DeMwD=; at=042ae3d365b444a4bcbf7a73316bb427; rt=7165b7bf4cc94bc786a37e1b14ef5e2d; acw_tc=2760827216207076735498974ea0a63d31d1cc2de256ee4bf6451ee43953cf; locationInfo_search={%22code%22:%22779%22%2C%22name%22:%22%E4%B8%9C%E8%8E%9E%22%2C%22message%22:%22%E5%8C%B9%E9%85%8D%E5%88%B0%E5%B8%82%E7%BA%A7%E7%BC%96%E7%A0%81%22}; Hm_lvt_38ba284938d5eddca645bb5e02a02006=1620652584,1620694762,1620707675; Hm_lpvt_38ba284938d5eddca645bb5e02a02006=1620707811'
    }
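You may want to check which login tokens made it into the pasted string, for example `at` and `rt`. A small sketch can split the header into a dict for that. `cookie_to_dict` is a hypothetical helper. It splits each pair on the first `=` only, because some values, such as `ssxmod_itna`, contain `=` themselves. As an alternative to the raw `cookie` header, `requests.get` also accepts such a dict through its `cookies=` parameter.

```python
def cookie_to_dict(cookie_header: str) -> dict:
    """Hypothetical helper: split a raw Cookie header value into {name: value}."""
    cookies = {}
    for pair in cookie_header.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        # Split on the first '=' only; values may contain '=' themselves
        name, _, value = pair.partition("=")
        cookies[name] = value
    return cookies


# Shortened sample in the same shape as the cookie above (last value truncated)
sample = (
    "at=042ae3d365b444a4bcbf7a73316bb427; "
    "rt=7165b7bf4cc94bc786a37e1b14ef5e2d; "
    "ssxmod_itna2=QqfxyDgDRGD==0KP0L"
)
parsed = cookie_to_dict(sample)
print(sorted(parsed))          # ['at', 'rt', 'ssxmod_itna2']
print(parsed["ssxmod_itna2"])  # QqfxyDgDRGD==0KP0L
```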

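Search for big-data jobs and narrow the results down by job type.

Open the "大数据开发工程师" (Big Data Development Engineer) listing:

As usual, press F12 to open the developer tools. The fields we need are the job title, salary, location, required experience, education, and required skills.

Inspect the first posting on the page, "大数据开发工程师", and watch the developer tools panel. You will see the following: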

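We locate the job card element `<div class="joblist-box__item clearfix">` and use it as the XPath root path. The job title, salary, location, experience, education, and skills are all parsed in a loop starting from this path. The XPath above matches multiple elements, one per job card, so we simply parse each of them further.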
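A short sketch shows why the root path plus relative `./` paths works. It runs on made-up, heavily simplified HTML, not the real page markup. Only the class name comes from the complete code at the end of this post, and the `name` class is invented for the demo. It also shows a common pitfall: on an element, a path that starts with `//` searches the whole document again, not just that card.

```python
from lxml import etree

# Made-up, simplified stand-in for the results page (the "name" class is hypothetical)
html = """
<div class="joblist-box__item clearfix"><span class="name">大数据开发工程师</span></div>
<div class="joblist-box__item clearfix"><span class="name">数据仓库工程师</span></div>
"""

tree = etree.HTML(html)
# The root path matches every job card, so xpath() returns a list of elements
cards = tree.xpath('//div[@class="joblist-box__item clearfix"]')
print(len(cards))  # 2

# Paths starting with "./" are evaluated inside each card only
titles = [str(card.xpath('./span[@class="name"]/text()')[0]) for card in cards]
print(titles)  # ['大数据开发工程师', '数据仓库工程师']

# Pitfall: "//" ignores the card and searches from the document root
print(len(cards[0].xpath('//span[@class="name"]')))  # 2
```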

XPath for the job title:

    job_name = job.xpath('./a/div[1]/div[1]/span[1]/span/text()')

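XPath for the salary (`normalize-space` strips the surrounding whitespace and returns a string rather than a list):

    job_salary = job.xpath('normalize-space(./a/div[2]/div[1]/p/text())')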

XPath for the location:

    job_area = job.xpath('./a/div[2]/div[1]/ul/li[1]/text()')[0]

XPath for the work experience:

    job_experience = job.xpath('./a/div[2]/div[1]/ul/li[2]/text()')[0]

XPath for the education:

    job_education = job.xpath('./a/div[2]/div[1]/ul/li[3]/text()')[0]

XPath for the skills (a card can list several, so this returns a list):

    job_skill = job.xpath('./a/div[3]/div[1]//div[@class="iteminfo__line3__welfare__item"]/text()')
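The XPaths above can be checked offline against a single made-up job card that mimics the nesting they expect. This is a sketch, not the real Zhaopin markup. The salary, city, and skill values are invented. The `[0]` indexing raises `IndexError` when a card lacks a field, so the sketch uses a hypothetical `first` helper that returns a default instead.

```python
from lxml import etree

# Made-up job card that mimics the nesting the XPaths above expect
CARD_HTML = """
<div class="joblist-box__item clearfix">
  <a href="#">
    <div><div><span><span>大数据开发工程师</span></span></div></div>
    <div><div>
      <p>  1.5万-2.5万  </p>
      <ul><li>东莞</li><li>3-5年</li><li>本科</li></ul>
    </div></div>
    <div><div>
      <div class="iteminfo__line3__welfare__item">Hadoop</div>
      <div class="iteminfo__line3__welfare__item">Spark</div>
    </div></div>
  </a>
</div>
"""


def first(nodes, default=""):
    """Hypothetical helper: first XPath text result, or a default instead of IndexError."""
    return str(nodes[0]).strip() if nodes else default


card = etree.HTML(CARD_HTML).xpath('//div[@class="joblist-box__item clearfix"]')[0]
job = {
    "工作名": first(card.xpath('./a/div[1]/div[1]/span[1]/span/text()')),
    "薪水": card.xpath('normalize-space(./a/div[2]/div[1]/p/text())'),
    "地点": first(card.xpath('./a/div[2]/div[1]/ul/li[1]/text()')),
    "经验": first(card.xpath('./a/div[2]/div[1]/ul/li[2]/text()')),
    "学历": first(card.xpath('./a/div[2]/div[1]/ul/li[3]/text()')),
    "技能": [str(s) for s in card.xpath(
        './a/div[3]/div[1]//div[@class="iteminfo__line3__welfare__item"]/text()')],
}
print(job)

# A field that this card does not have falls back to the default
print(first(card.xpath('./a/div[2]/div[1]/ul/li[4]/text()'), "N/A"))  # N/A
```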

After parsing, store each job's fields in a dict and append the dict to a list.
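Here is a minimal sketch of that step. Made-up values stand in for live XPath results. It also skips cards whose title list is empty, as the complete code at the end of this post does. The dict keys match the CSV column names used when saving.

```python
# Made-up values standing in for what the XPaths return for two cards;
# the second card has no job title
parsed_cards = [
    (["大数据开发工程师"], "1.5万-2.5万", "东莞", "3-5年", "本科", ["Hadoop", "Spark"]),
    ([], "面议", "东莞", "1-3年", "大专", []),
]

job_list = []
for names, salary, area, experience, education, skills in parsed_cards:
    if not names:
        # Cards without a title are skipped
        continue
    job_dic = {
        "工作名": names[0],
        "薪水": salary,
        "地点": area,
        "经验": experience,
        "学历": education,
        "技能": skills,
    }
    job_list.append(job_dic)

print(len(job_list))          # 1
print(job_list[0]["工作名"])  # 大数据开发工程师
```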

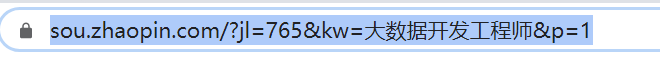

Pagination: the `p` parameter at the end of the URL gives the page number, so we can step through the pages by changing it:
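The page URLs can also be built with `urllib.parse.urlencode` instead of formatting the Chinese keyword straight into the string. `urlencode` percent-encodes the keyword as UTF-8, so the URL stays plain ASCII. This is a sketch. `page_url` is a hypothetical helper, `jl=765` is simply the value from the URL used in this post, and starting at page 1 is an assumption about how the site numbers its pages.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

BASE = "https://sou.zhaopin.com/"


def page_url(page: int, keyword: str = "大数据开发工程师", jl: str = "765") -> str:
    """Hypothetical helper: build the search URL for one results page."""
    # urlencode percent-encodes the Chinese keyword as UTF-8
    return BASE + "?" + urlencode({"jl": jl, "kw": keyword, "p": page})


# Assumption: the first results page is p=1
urls = [page_url(p) for p in range(1, 4)]
for u in urls:
    print(u)

# Decoding the query string gives back the original parameters
query = parse_qs(urlsplit(urls[2]).query)
print(query["kw"], query["p"])  # ['大数据开发工程师'] ['3']
```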

Finally, save the results to a CSV file:

    def save_data(job_list):
        # newline="" stops the csv module from producing blank lines between rows on Windows
        with open('./爬取智联招聘大数据开发.csv', 'w', encoding="utf-8", newline="") as file:
            write = csv.DictWriter(file, fieldnames=['工作名', '薪水', '地点', '经验', '学历', '技能'])
            write.writeheader()
            for i in job_list:
                write.writerow(i)
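One detail is worth checking. If the skills are still the list that `xpath()` returns, `csv.DictWriter` writes that value with `str()`, and the cell reads `['Hadoop', 'Spark']`. The sketch below uses made-up data, joins the list first, and writes to an in-memory `io.StringIO` buffer instead of a file so the result can be inspected. Joining with `/` is an arbitrary choice. If the CSV will be opened in Excel, `encoding="utf-8-sig"` is a common choice, since the leading BOM helps Excel recognise the file as UTF-8.

```python
import csv
import io

FIELDS = ['工作名', '薪水', '地点', '经验', '学历', '技能']
# Made-up row; the skills are still a list, as xpath() returns them
rows = [{'工作名': '大数据开发工程师', '薪水': '1.5万-2.5万', '地点': '东莞',
         '经验': '3-5年', '学历': '本科', '技能': ['Hadoop', 'Spark']}]

buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=FIELDS)
writer.writeheader()
for row in rows:
    # Join the skill list so the cell holds "Hadoop/Spark" instead of a list repr
    writer.writerow({**row, '技能': '/'.join(row['技能'])})

print(buf.getvalue())
```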

The complete code:

    import csv

    import requests
    from lxml import etree

    # Search URL; the trailing p parameter is the page number
    URI = "https://sou.zhaopin.com/?jl=765&kw=大数据开发工程师&p={}"

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)",
        # Cookie copied from a logged-in browser session; replace it with your own
        "cookie": 'x-zp-client-id=3c93dfd0-b090-49e6-e254-80e2fd244823; sts_deviceid=177e9137db1b32-00270991791f53-73e356b-1382400-177e9137db2cfe; sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%221062819381%22%2C%22first_id%22%3A%22177e91387de573-0029b455e8c501-73e356b-1382400-177e91387dfc4b%22%2C%22props%22%3A%7B%22%24latest_traffic_source_type%22%3A%22%E7%9B%B4%E6%8E%A5%E6%B5%81%E9%87%8F%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC_%E7%9B%B4%E6%8E%A5%E6%89%93%E5%BC%80%22%2C%22%24latest_referrer%22%3A%22%22%7D%2C%22%24device_id%22%3A%22177e91387de573-0029b455e8c501-73e356b-1382400-177e91387dfc4b%22%7D; ssxmod_itna=QqfxyDgDRGD==0KP0LKYIEP8rDCD0lrYt7nQrOx0vP2eGzDAxn40iDt==yD+/EQfwxawKmg25aYGmn4ba1jnraRNOIDB3DEx06xEGQxiiyDCeDIDWeDiDG+7D=xGYDjjtUlcDm4i7DYqGRDB=U8qDfGqGWFLQDmwNDGdK6D7QDIk=gnfrDEeDSKitdj7=DjubD/+DWV7=YT1cNjTUi51KPQDDHWBwC6B5P9=edbWpReWiDtqD9DC=Db+d3uc=lbepKWrhTjbhtI7D660GslDmXkADCaD4I0zGNzAqBrB3L0BqDG4VQeD; ssxmod_itna2=QqfxyDgDRGD==0KP0LKYIEP8rDCD0lrYt7nQEDnF8nDDsqzDLeEiq=m4Q9vd08DeMwD=; at=042ae3d365b444a4bcbf7a73316bb427; rt=7165b7bf4cc94bc786a37e1b14ef5e2d; acw_tc=2760827216207076735498974ea0a63d31d1cc2de256ee4bf6451ee43953cf; locationInfo_search={%22code%22:%22779%22%2C%22name%22:%22%E4%B8%9C%E8%8E%9E%22%2C%22message%22:%22%E5%8C%B9%E9%85%8D%E5%88%B0%E5%B8%82%E7%BA%A7%E7%BC%96%E7%A0%81%22}; Hm_lvt_38ba284938d5eddca645bb5e02a02006=1620652584,1620694762,1620707675; Hm_lpvt_38ba284938d5eddca645bb5e02a02006=1620707811'
    }


    def get_url(url):
        """Download one results page and return its HTML."""
        response = requests.get(url=url, headers=headers, timeout=10)
        response.raise_for_status()
        return response.content.decode("utf-8")


    def get_data(html):
        """Parse every job card on a page into a dict."""
        etree_obj = etree.HTML(html)
        all_job = etree_obj.xpath('//div[@class="joblist-box__item clearfix"]')
        job_list = []
        for job in all_job:
            job_name = job.xpath('./a/div[1]/div[1]/span[1]/span/text()')
            # Some job titles are empty; skip those cards
            if len(job_name) == 0:
                continue
            # A card can list several skills; join them into one CSV cell
            job_skill = job.xpath('./a/div[3]/div[1]//div[@class="iteminfo__line3__welfare__item"]/text()')
            job_dic = {
                '工作名': job_name[0],
                '薪水': job.xpath('normalize-space(./a/div[2]/div[1]/p/text())'),
                '地点': job.xpath('./a/div[2]/div[1]/ul/li[1]/text()')[0],
                '经验': job.xpath('./a/div[2]/div[1]/ul/li[2]/text()')[0],
                '学历': job.xpath('./a/div[2]/div[1]/ul/li[3]/text()')[0],
                '技能': "/".join(job_skill),
            }
            job_list.append(job_dic)
        return job_list


    def save_data(job_list):
        """Write all jobs to a CSV file."""
        with open('./爬取智联招聘大数据开发.csv', 'w', encoding="utf-8", newline="") as file:
            write = csv.DictWriter(file, fieldnames=['工作名', '薪水', '地点', '经验', '学历', '技能'])
            write.writeheader()
            for i in job_list:
                write.writerow(i)


    if __name__ == "__main__":
        job_list = []
        # 23 pages; page numbers are assumed to start at 1
        for i in range(1, 24):
            url = URI.format(i)
            print("Parsing page {}".format(i))
            html = get_url(url)
            job_list += get_data(html)
        save_data(job_list)

That's all for this post. Thanks for reading, and questions are welcome!
