Personal notes; corrections welcome.

Import

Source: 刘博

```python
# Standalone Keras 2.x imports, as in the original notes. Some of these module paths
# (for example keras.engine.topology and the separate keras_applications package)
# were moved or removed in later Keras versions.
from __future__ import print_function

import numpy as np
import warnings

from keras.layers import Input
from keras import layers
from keras.layers import Dense
from keras.layers import Activation
from keras.layers import Flatten
from keras.layers import Conv2D
from keras.layers import MaxPooling2D
from keras.layers import GlobalMaxPooling2D
from keras.layers import ZeroPadding2D
from keras.layers import AveragePooling2D
from keras.layers import GlobalAveragePooling2D
from keras.layers import BatchNormalization
from keras.models import Model
from keras.preprocessing import image
import keras.backend as K
from keras.utils import layer_utils
from keras.utils.data_utils import get_file
from keras.applications.imagenet_utils import decode_predictions
from keras.applications.imagenet_utils import preprocess_input
from keras_applications.imagenet_utils import _obtain_input_shape
from keras.engine.topology import get_source_inputs
```

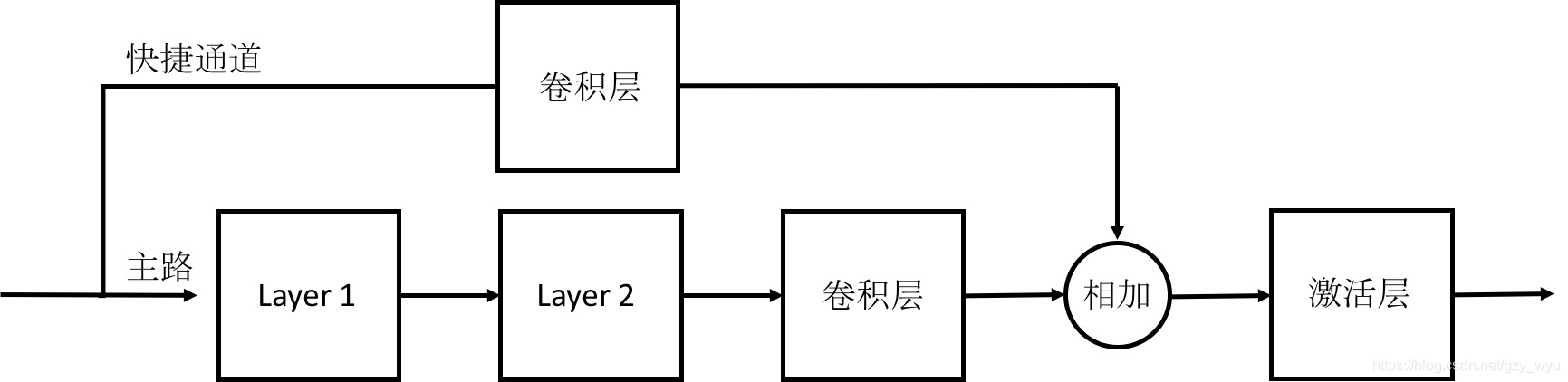

Identity block (the identity_block function)

```python
def identity_block(input_tensor, kernel_size, filters, stage, block):
    """The identity block is the block that has no conv layer at shortcut.

    # Arguments
        input_tensor: input tensor
        kernel_size: the kernel size of the middle conv layer at main path (typically 3)
        filters: list of integers, the filters of the 3 conv layers at main path
        stage: integer, current stage label, used for generating layer names
        block: 'a','b'..., current block label, used for generating layer names

    # Returns
        Output tensor for the block.
    """
    filters1, filters2, filters3 = filters
    # BatchNormalization must normalize along the channel axis.
    if K.image_data_format() == 'channels_last':
        bn_axis = 3
    else:
        bn_axis = 1
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Main path, step 1: 1x1 conv -> BN -> ReLU
    x = Conv2D(filters1, (1, 1), name=conv_name_base + '2a')(input_tensor)
    x = BatchNormalization(axis=bn_axis, name=bn_name_base + '2a')(x)
    x = Activation('relu')(x)

    # Step 2: kernel_size conv; padding='same' keeps height and width unchanged
    x = Conv2D(filters2, kernel_size,
               padding='same', name=conv_name_base + '2b')(x)
    x = BatchNormalization(axis=bn_axis, name=bn_name_base + '2b')(x)
    x = Activation('relu')(x)

    # Step 3: 1x1 conv to filters3 channels, no ReLU before the addition
    x = Conv2D(filters3, (1, 1), name=conv_name_base + '2c')(x)
    x = BatchNormalization(axis=bn_axis, name=bn_name_base + '2c')(x)

    # Residual connection: add the unchanged input, then apply ReLU
    x = layers.add([x, input_tensor])
    x = Activation('relu')(x)
    return x
```

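Parameters:

input_tensor: the tensor fed into this block

kernel_size: kernel size of the middle convolution on the main path (typically 3). It can be a single integer or a tuple of two integers such as (3, 3).

filters: a list of integers giving the number of filters in each of the three convolutions on the main path

stage: an integer label for the current stage, used to generate layer names

block: used together with stage to generate layer names, e.g. 'a', 'b'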
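The sketch below shows the names that stage and block produce. It copies the string concatenation from identity_block into a hypothetical helper, layer_names, that does not exist in Keras. Keras requires every layer name in a model to be unique, so each (stage, block) pair should be used only once.

```python
def layer_names(stage, block):
    """Hypothetical helper reproducing identity_block's naming scheme."""
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'
    # The three main-path layers are suffixed '2a', '2b', '2c'.
    suffixes = ('2a', '2b', '2c')
    return ([conv_name_base + s for s in suffixes],
            [bn_name_base + s for s in suffixes])


convs, bns = layer_names(stage=2, block='b')
print(convs)  # ['res2b_branch2a', 'res2b_branch2b', 'res2b_branch2c']
print(bns)    # ['bn2b_branch2a', 'bn2b_branch2b', 'bn2b_branch2c']
```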

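Overview: an identity block consists of three convolutional layers on the main path plus an identity shortcut, and the shortcut has no parameters at all. We only need to specify the kernel size, the three filter counts, and the layer names.

Because the shortcut adds input_tensor to the main-path output unchanged, the two tensors must have the same shape. The input must therefore already have filters3 channels. The main path must also preserve height and width: the 1x1 convolutions use the default stride of 1, and the middle convolution uses padding='same'.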
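The shape constraint can be checked with a minimal NumPy sketch of the same forward pass. It makes several simplifying assumptions:

- The data format is channels_last and all strides are 1.
- Batch normalization runs in training mode with gamma=1 and beta=0.
- Conv biases are omitted, since a per-channel bias is removed by the following normalization anyway.

conv2d_same, batch_norm and identity_block_np are hypothetical helpers for illustration, not Keras APIs.

```python
import numpy as np

def conv2d_same(x, w):
    """Stride-1 'same' convolution. x: (N, H, W, C_in), w: (kh, kw, C_in, C_out), odd kh/kw."""
    kh, kw, _, c_out = w.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((0, 0), (ph, ph), (pw, pw), (0, 0)))  # zero padding keeps H, W
    n, h, wd, _ = x.shape
    out = np.zeros((n, h, wd, c_out))
    for i in range(kh):
        for j in range(kw):
            # Add the contribution of kernel tap (i, j) at every output position.
            out += np.einsum('nhwc,cd->nhwd', xp[:, i:i + h, j:j + wd, :], w[i, j])
    return out

def batch_norm(x, eps=1e-3):
    # Per-channel normalization using batch statistics (channels on the last axis).
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def relu(x):
    return np.maximum(x, 0)

def identity_block_np(x, weights):
    w1, w2, w3 = weights  # shapes (1,1,C,f1), (k,k,f1,f2), (1,1,f2,f3)
    y = relu(batch_norm(conv2d_same(x, w1)))
    y = relu(batch_norm(conv2d_same(y, w2)))
    y = batch_norm(conv2d_same(y, w3))
    return relu(y + x)  # shortcut: only works if f3 == C and H, W are unchanged

rng = np.random.default_rng(0)
c, f1, f2 = 8, 4, 4
x = rng.standard_normal((2, 5, 5, c))
weights = (rng.standard_normal((1, 1, c, f1)),
           rng.standard_normal((3, 3, f1, f2)),
           rng.standard_normal((1, 1, f2, c)))  # filters3 must equal c
out = identity_block_np(x, weights)
print(out.shape)  # (2, 5, 5, 8)
```

If the last weight had, say, 6 output channels instead of 8, the addition y + x would fail with a broadcasting error. The real ResNet50 uses a separate block with a 1x1 conv on the shortcut for exactly that case.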

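K.image_data_format(): returns the default image data format convention ('channels_first' or 'channels_last'). The parentheses after the function name must not be omitted. Without them, K.image_data_format is the function object itself, so the comparison with 'channels_last' is always False and bn_axis silently becomes 1.

For a 4D batch, the two conventions place the channels on different axes:

- channels_last: (batch, height, width, channels), so the channel axis is 3.
- channels_first: (batch, channels, height, width), so the channel axis is 1.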
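A small NumPy sketch of how the data format maps to the channel axis, mirroring the bn_axis choice in identity_block. channel_axis is a hypothetical helper, and the sketch assumes 4D image batches.

```python
import numpy as np

def channel_axis(data_format):
    """Hypothetical helper: the bn_axis identity_block would pick for a 4D tensor."""
    return 3 if data_format == 'channels_last' else 1

# A batch of 2 RGB images of size 32x32 in each layout.
nhwc = np.zeros((2, 32, 32, 3))  # channels_last:  (batch, height, width, channels)
nchw = np.zeros((2, 3, 32, 32))  # channels_first: (batch, channels, height, width)

print(nhwc.shape[channel_axis('channels_last')])   # 3
print(nchw.shape[channel_axis('channels_first')])  # 3
```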

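BatchNormalization(): normalizes the activations of the previous layer at each batch. It applies a transformation that keeps the mean activation close to 0 and the activation standard deviation close to 1. Passing axis=bn_axis tells it which axis holds the channels, so the statistics are computed separately for each channel.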
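The per-channel transformation can be sketched in NumPy, assuming training mode: statistics come from the current batch, whereas at inference Keras uses moving averages instead. eps=1e-3 is chosen to match Keras's default epsilon. batch_norm_train is a hypothetical helper, not the Keras implementation.

```python
import numpy as np

def batch_norm_train(x, axis, gamma=None, beta=None, eps=1e-3):
    """Training-mode batch normalization along the channel `axis`.
    Mean and variance are taken over every other axis; gamma/beta default to 1/0."""
    reduce_axes = tuple(a for a in range(x.ndim) if a != axis % x.ndim)
    mean = x.mean(axis=reduce_axes, keepdims=True)
    var = x.var(axis=reduce_axes, keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Reshape the per-channel scale and shift so they broadcast along `axis`.
    shape = [1] * x.ndim
    shape[axis] = x.shape[axis]
    g = np.ones(shape) if gamma is None else np.reshape(gamma, shape)
    b = np.zeros(shape) if beta is None else np.reshape(beta, shape)
    return g * x_hat + b

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(16, 8, 8, 4))  # channels_last batch
y = batch_norm_train(x, axis=3)
print(np.round(y.mean(axis=(0, 1, 2)), 6))  # each channel's mean is about 0
print(np.round(y.std(axis=(0, 1, 2)), 3))   # each channel's std is about 1 (eps pulls it slightly below)
```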
