代码转自ShangtongZhang RL

图片截自 reinforcement learning an introduction

Chap 4 Dynamic Programming

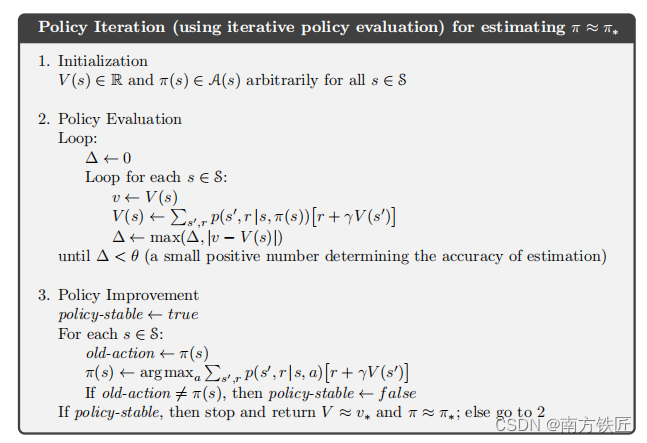

4.3 Policy Iteration

题4.2 租车还车问题

- 对所有状态,本题即为AB两点的车的数量作为xy轴,建立二维矩阵,矩阵的每个值即为value

- 遍历所有的AB可能的组合,在每个组合中,遍历所有可能的AB两点的租车换车数量,每次都计算reward,reward即为租出去的利润减去搬车的成本,并且将reward与对应的概率做乘累加,得到每个组合的value,value一直持续更新,直到稳定,即为policy evaluation

- 根据value数值,遍历所有的AB可能的组合,在每个组合中的都取argmax,直到policy稳定,即为policy iteration

- 持续2和3,直到稳定

截取的部分代码片段

def expected_return(state, action, state_value, constant_returned_cars):

# go through all possible rental requests

for rental_request_first_loc in range(POISSON_UPPER_BOUND):

for rental_request_second_loc in range(POISSON_UPPER_BOUND):

# get credits for renting

reward = (valid_rental_first_loc + valid_rental_second_loc) * RENTAL_CREDIT

num_of_cars_first_loc -= valid_rental_first_loc

num_of_cars_second_loc -= valid_rental_second_loc

if constant_returned_cars:

# get returned cars, those cars can be used for renting tomorrow

returned_cars_first_loc = RETURNS_FIRST_LOC

returned_cars_second_loc = RETURNS_SECOND_LOC

num_of_cars_first_loc = min(num_of_cars_first_loc + returned_cars_first_loc, MAX_CARS)

num_of_cars_second_loc = min(num_of_cars_second_loc + returned_cars_second_loc, MAX_CARS)

returns += prob * (reward + DISCOUNT * state_value[num_of_cars_first_loc, num_of_cars_second_loc])

return returns

def figure_4_2(constant_returned_cars=True):

while True:

# policy evaluation (in-place)

while True:

old_value = value.copy()

for i in range(MAX_CARS + 1):

for j in range(MAX_CARS + 1):

new_state_value = expected_return([i, j], policy[i, j], value, constant_returned_cars)

value[i, j] = new_state_value

max_value_change = abs(old_value - value).max()

print('max value change {}'.format(max_value_change))

if max_value_change < 1e-4:

break

# policy improvement

policy_stable = True

for i in range(MAX_CARS + 1):

for j in range(MAX_CARS + 1):

old_action = policy[i, j]

action_returns = []

for action in actions:

if (0 <= action <= i) or (-j <= action <= 0):

action_returns.append(expected_return([i, j], action, value, constant_returned_cars))

else:

action_returns.append(-np.inf)

new_action = actions[np.argmax(action_returns)]

policy[i, j] = new_action

if policy_stable and old_action != new_action:

policy_stable = False

print('policy stable {}'.format(policy_stable))

完整代码

#######################################################################

# Copyright (C) #

# 2016 Shangtong Zhang(zhangshangtong.cpp@gmail.com) #

# 2016 Kenta Shimada(hyperkentakun@gmail.com) #

# 2017 Aja Rangaswamy (aja004@gmail.com) #

# Permission given to modify the code as long as you keep this #

# declaration at the top #

#######################################################################

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

from scipy.stats import poisson

matplotlib.use('Agg')

# maximum # of cars in each location

MAX_CARS = 20

# maximum # of cars to move during night

MAX_MOVE_OF_CARS = 5

# expectation for rental requests in first location

RENTAL_REQUEST_FIRST_LOC = 3

# expectation for rental requests in second location

RENTAL_REQUEST_SECOND_LOC = 4

# expectation for # of cars returned in first location

RETURNS_FIRST_LOC = 3

# expectation for # of cars returned in second location

RETURNS_SECOND_LOC = 2

DISCOUNT = 0.9

# credit earned by a car

RENTAL_CREDIT = 10

# cost of moving a car

MOVE_CAR_COST = 2

# all possible actions

actions = np.arange(-MAX_MOVE_OF_CARS, MAX_MOVE_OF_CARS + 1)

# An up bound for poisson distribution

# If n is greater than this value, then the probability of getting n is truncated to 0

POISSON_UPPER_BOUND = 11

# Probability for poisson distribution

# @lam: lambda should be less than 10 for this function

poisson_cache = dict()

def poisson_probability(n, lam):

global poisson_cache

key = n * 10 + lam

if key not in poisson_cache:

poisson_cache[key] = poisson.pmf(n, lam)

return poisson_cache[key]

def expected_return(state, action, state_value, constant_returned_cars):

"""

@state: [# of cars in first location, # of cars in second location]

@action: positive if moving cars from first location to second location,

negative if moving cars from second location to first location

@stateValue: state value matrix

@constant_returned_cars: if set True, model is simplified such that

the # of cars returned in daytime becomes constant

rather than a random value from poisson distribution, which will reduce calculation time

and leave the optimal policy/value state matrix almost the same

"""

# initailize total return

returns = 0.0

# cost for moving cars

returns -= MOVE_CAR_COST * abs(action)

# moving cars

NUM_OF_CARS_FIRST_LOC = min(state[0] - action, MAX_CARS)

NUM_OF_CARS_SECOND_LOC = min(state[1] + action, MAX_CARS)

# go through all possible rental requests

for rental_request_first_loc in range(POISSON_UPPER_BOUND):

for rental_request_second_loc in range(POISSON_UPPER_BOUND):

# probability for current combination of rental requests

prob = poisson_probability(rental_request_first_loc, RENTAL_REQUEST_FIRST_LOC) * \

poisson_probability(rental_request_second_loc, RENTAL_REQUEST_SECOND_LOC)

num_of_cars_first_loc = NUM_OF_CARS_FIRST_LOC

num_of_cars_second_loc = NUM_OF_CARS_SECOND_LOC

# valid rental requests should be less than actual # of cars

valid_rental_first_loc = min(num_of_cars_first_loc, rental_request_first_loc)

valid_rental_second_loc = min(num_of_cars_second_loc, rental_request_second_loc)

# get credits for renting

reward = (valid_rental_first_loc + valid_rental_second_loc) * RENTAL_CREDIT

num_of_cars_first_loc -= valid_rental_first_loc

num_of_cars_second_loc -= valid_rental_second_loc

if constant_returned_cars:

# get returned cars, those cars can be used for renting tomorrow

returned_cars_first_loc = RETURNS_FIRST_LOC

returned_cars_second_loc = RETURNS_SECOND_LOC

num_of_cars_first_loc = min(num_of_cars_first_loc + returned_cars_first_loc, MAX_CARS)

num_of_cars_second_loc = min(num_of_cars_second_loc + returned_cars_second_loc, MAX_CARS)

returns += prob * (reward + DISCOUNT * state_value[num_of_cars_first_loc, num_of_cars_second_loc])

else:

for returned_cars_first_loc in range(POISSON_UPPER_BOUND):

for returned_cars_second_loc in range(POISSON_UPPER_BOUND):

prob_return = poisson_probability(

returned_cars_first_loc, RETURNS_FIRST_LOC) * poisson_probability(returned_cars_second_loc, RETURNS_SECOND_LOC)

num_of_cars_first_loc_ = min(num_of_cars_first_loc + returned_cars_first_loc, MAX_CARS)

num_of_cars_second_loc_ = min(num_of_cars_second_loc + returned_cars_second_loc, MAX_CARS)

prob_ = prob_return * prob

returns += prob_ * (reward + DISCOUNT *

state_value[num_of_cars_first_loc_, num_of_cars_second_loc_])

return returns

def figure_4_2(constant_returned_cars=True):

value = np.zeros((MAX_CARS + 1, MAX_CARS + 1))

policy = np.zeros(value.shape, dtype=np.int)

iterations = 0

_, axes = plt.subplots(2, 3, figsize=(40, 20))

plt.subplots_adjust(wspace=0.1, hspace=0.2)

axes = axes.flatten()

while True:

fig = sns.heatmap(np.flipud(policy), cmap="YlGnBu", ax=axes[iterations])

fig.set_ylabel('# cars at first location', fontsize=30)

fig.set_yticks(list(reversed(range(MAX_CARS + 1))))

fig.set_xlabel('# cars at second location', fontsize=30)

fig.set_title('policy {}'.format(iterations), fontsize=30)

# policy evaluation (in-place)

while True:

old_value = value.copy()

for i in range(MAX_CARS + 1):

for j in range(MAX_CARS + 1):

new_state_value = expected_return([i, j], policy[i, j], value, constant_returned_cars)

value[i, j] = new_state_value

max_value_change = abs(old_value - value).max()

print('max value change {}'.format(max_value_change))

if max_value_change < 1e-4:

break

# policy improvement

policy_stable = True

for i in range(MAX_CARS + 1):

for j in range(MAX_CARS + 1):

old_action = policy[i, j]

action_returns = []

for action in actions:

if (0 <= action <= i) or (-j <= action <= 0):

action_returns.append(expected_return([i, j], action, value, constant_returned_cars))

else:

action_returns.append(-np.inf)

new_action = actions[np.argmax(action_returns)]

policy[i, j] = new_action

if policy_stable and old_action != new_action:

policy_stable = False

print('policy stable {}'.format(policy_stable))

if policy_stable:

fig = sns.heatmap(np.flipud(value), cmap="YlGnBu", ax=axes[-1])

fig.set_ylabel('# cars at first location', fontsize=30)

fig.set_yticks(list(reversed(range(MAX_CARS + 1))))

fig.set_xlabel('# cars at second location', fontsize=30)

fig.set_title('optimal value', fontsize=30)

break

iterations += 1

plt.savefig('../images/figure_4_2.png')

plt.close()

if __name__ == '__main__':

figure_4_2()

1626

1626

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?