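1. Asking Bing (Temporal Convolutional Network)

Question: "I have no background in neural networks such as CNNs, RNNs, or TCNs. Could you give me an intuitive explanation of TCN's structure, its inputs and outputs, and how it works?"

Bing explained TCN as follows: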

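TCN is a neural network for sequence data and can be seen as a special kind of CNN. A CNN is a neural network commonly used for image data, and its core operation is convolution. Convolution slides a small window (called a convolution kernel or filter) over a larger piece of data (called the input or feature map) and computes a weighted sum of the values inside each window, producing new data (called the output or activation map). This extracts local features from the input while keeping the number of parameters small, because the same kernel weights are reused at every position.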

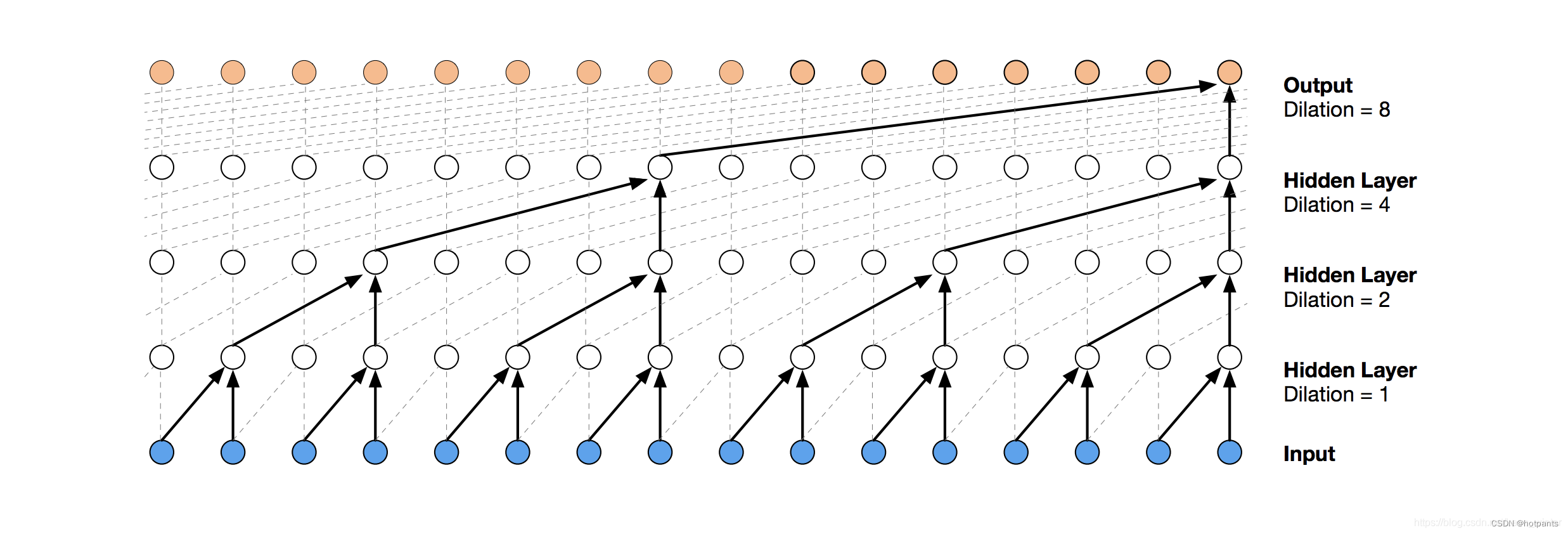
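A minimal plain-Python sketch of the sliding-window weighted sum described above, for a 1-D sequence and without any framework. Like deep learning libraries, it does not flip the kernel (strictly speaking this is cross-correlation), and it uses "valid" mode, so the output is k−1 samples shorter than the input. The function name `conv1d_valid` is my own.

```python
def conv1d_valid(x, w):
    """Slide kernel w over sequence x; each output is the weighted sum of one window."""
    k = len(w)
    return [sum(w[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]


signal = [1, 2, 3, 4, 5, 6]
kernel = [0.5, 0.5]  # a 2-tap moving-average filter: 2 weights reused at every position
print(conv1d_valid(signal, kernel))  # [1.5, 2.5, 3.5, 4.5, 5.5]
```

The same two weights are applied at every position, which is where the parameter saving comes from.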

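TCN differs from an image CNN in that it convolves only along the time dimension of the sequence, not over spatial dimensions. This preserves the temporal order of the data, and the convolution is arranged so that the output at each time step depends only on the current and earlier inputs, never on future inputs. This kind of convolution is called causal convolution.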
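One common way to make a convolution causal is to pad k−1 zeros on the left of the sequence only. A plain-Python sketch under that assumption (the name `causal_conv1d` is my own); the second call shows that changing a future sample leaves the earlier outputs untouched.

```python
def causal_conv1d(x, w):
    """Causal 1-D convolution: y[t] depends only on x[t-k+1], ..., x[t].

    k-1 zeros are padded on the LEFT only, so the output keeps the input length
    and no output position ever reads a future sample.
    """
    k = len(w)
    padded = [0.0] * (k - 1) + list(x)
    return [sum(w[j] * padded[t + j] for j in range(k)) for t in range(len(x))]


kernel = [1.0, 1.0, 1.0]
print(causal_conv1d([1, 2, 3, 4], kernel))    # [1.0, 3.0, 6.0, 9.0]
print(causal_conv1d([1, 2, 3, 100], kernel))  # [1.0, 3.0, 6.0, 105.0]
```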

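TCN also uses dilated convolution, which inserts gaps between the taps of the kernel; the spacing between taps is set by the dilation factor. This lets the kernel cover a longer span of the input without adding parameters, which enlarges the receptive field, i.e., how far back in the input a given output can "see".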

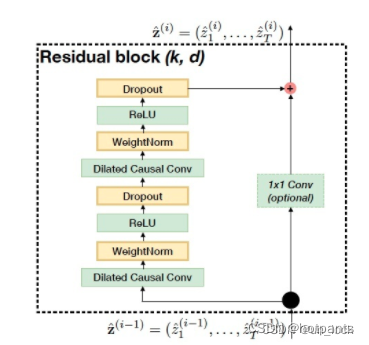
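Extending the causal idea with a dilation factor d: the k taps are spaced d steps apart, so one kernel spans (k−1)·d + 1 inputs while still having only k weights. A plain-Python sketch (the function name is my own); with d=4, a 2-tap kernel already reaches 4 steps into the past.

```python
def dilated_causal_conv1d(x, w, d):
    """Dilated causal convolution: y[t] = sum_j w[j] * x[t - (k-1-j)*d].

    The k taps are spaced d steps apart, so one kernel spans (k-1)*d + 1 inputs
    with only k weights. Positions before the sequence start count as zeros.
    """
    k = len(w)
    pad = (k - 1) * d
    padded = [0.0] * pad + list(x)
    return [sum(w[j] * padded[t + j * d] for j in range(k)) for t in range(len(x))]


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
print(dilated_causal_conv1d(x, [1.0, 1.0], d=1))  # [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0]
print(dilated_causal_conv1d(x, [1.0, 1.0], d=4))  # [1.0, 2.0, 3.0, 4.0, 6.0, 8.0, 10.0, 12.0]
```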

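TCN also uses residual connections, which add each block's input directly to its output (a skip connection). This makes it easier for the network to learn an identity mapping and helps mitigate vanishing gradients, so deeper networks can be built and performance improves.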
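The forward computation of a residual connection is just an element-wise addition followed by the activation. A plain-Python sketch assuming the ReLU(x + F(x)) form (names are my own); the gradient benefit needs autograd and is not shown here. If F outputs zeros, the block passes a non-negative input through unchanged, which is the "identity mapping" mentioned above.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def residual_block(x, transform):
    """Residual connection: output = ReLU(x + F(x)).

    F (here any function on a list) only has to model the change to x;
    if F outputs zeros, the block reduces to ReLU(x), i.e. the identity
    for non-negative inputs such as the output of a previous ReLU block.
    """
    fx = transform(x)
    return relu([a + b for a, b in zip(x, fx)])


x = [0.5, 1.0, 2.0]
print(residual_block(x, lambda v: [0.0] * len(v)))      # [0.5, 1.0, 2.0]
print(residual_block(x, lambda v: [0.5 * a for a in v]))  # [0.75, 1.5, 3.0]
```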

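A TCN is usually built from several repeated modules (residual blocks), each containing two dilated causal convolution layers and one residual connection. Each module has its own dilation factor and number of filters. After the last module, a fully connected layer (or a softmax layer) outputs the prediction.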
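Stacking such modules is what gives a TCN its long memory. A sketch of the receptive-field arithmetic, assuming (my assumption, commonly used in TCNs) that block i uses dilation 2^i and both convolutions in a block share it. Each convolution with dilation d extends the view by (k−1)·d steps, which gives the closed form RF = 1 + 2(k−1)(2^L − 1) for L blocks.

```python
def tcn_receptive_field(kernel_size, num_levels, convs_per_block=2):
    """Time steps (including the current one) that one output of the last block can see.

    Assumptions: block i uses dilation 2**i, and every conv inside a block uses
    that same dilation. Each conv with dilation d extends the view by (k-1)*d steps.
    """
    rf = 1
    for i in range(num_levels):
        rf += convs_per_block * (kernel_size - 1) * 2 ** i
    return rf


# ksize=5, levels=4 are the values printed in the Namespace of the run in 2.3.
print(tcn_receptive_field(kernel_size=5, num_levels=4))  # 121
print(tcn_receptive_field(kernel_size=2, num_levels=8))  # 511
```

Doubling the dilation per level makes the receptive field grow exponentially with depth, while the parameter count grows only linearly.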

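A TCN takes a multi-dimensional array as input: the first dimension is the sequence length (number of time steps) and the second is the number of features (signal channels). For example, in biosignal processing, 1000 time steps and 8 signal channels give a 1000×8 input array.

The TCN outputs a multi-dimensional array as its prediction: the first dimension is again the sequence length (number of time steps) and the second is the number of classes (prediction targets). For example, in biosignal classification with 1000 time steps and 4 classes, the output is a 1000×4 array.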
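A plain-Python sketch of these shapes, using the 1000×8 → 1000×4 example above. Two points are assumptions worth checking against your framework: in practice a batch dimension is added in front, and PyTorch's `Conv1d` expects (batch, channels, length), so a time-major array is usually transposed before the convolutional layers. The output head `W` is hypothetical and, for brevity, applied directly to the input; in a real TCN it sits on the hidden features of the last block.

```python
import random

T, C_IN, N_CLASSES = 1000, 8, 4  # time steps, signal channels, classes (example above)
rng = random.Random(0)

# Time-major input as described above: T rows, one value per channel in each row.
x_time_major = [[rng.random() for _ in range(C_IN)] for _ in range(T)]

# Conv1d-style layers expect channels x time (plus batch), so transpose.
x_channel_major = [list(col) for col in zip(*x_time_major)]


def per_step_linear(seq_time_major, weights):
    """Apply the same linear map (features -> classes) independently at every time step."""
    return [[sum(w * v for w, v in zip(w_row, step)) for w_row in weights]
            for step in seq_time_major]


# Hypothetical output head with N_CLASSES rows of C_IN weights each.
W = [[rng.uniform(-1, 1) for _ in range(C_IN)] for _ in range(N_CLASSES)]
y = per_step_linear(x_time_major, W)

print(len(x_channel_major), len(x_channel_major[0]))  # 8 1000
print(len(y), len(y[0]))                              # 1000 4
```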

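To summarize:

- TCN is a network derived from the CNN. CNNs are mainly used for digital images; their core is a convolution (filtering) operation performed over spatial dimensions. TCN instead uses causal convolution, which is applied to sequence data along the time dimension rather than over spatial dimensions.

- Architectural techniques used in TCN: dilated causal convolution (dilated convolution, also called atrous convolution, inserts gaps between kernel taps according to the dilation factor, so the kernel covers a longer span of input without more parameters and the receptive field grows), and residual connections (which make it possible to build deeper networks and improve performance).

- Structurally, a TCN consists of several repeated modules, each with two dilated causal convolution layers plus one residual connection; each module can have its own dilation factor and number of filters. A fully connected/softmax layer after the last module outputs the prediction.

- Input and output data: the input is a multi-dimensional array. For biosignals, the first dimension is the sequence length / number of time steps (determined by the sampling rate and the window duration), and the second is the number of features / signal channels (determined by how many channels were recorded and how features are extracted). The output is also a multi-dimensional array: the first dimension is again the sequence length / number of time steps, and the second is the number of classes / prediction targets (for example, predicting joint angles over time from multi-channel biosignals).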

2. Learning while running the code

2.1 Get it running first

The README lists the following tasks:

The Adding Problem with various T (we evaluated on T=200, 400, 600)

Copying Memory Task with various T (we evaluated on T=500, 1000, 2000)

Sequential MNIST digit classification

Permuted Sequential MNIST (based on Seq. MNIST, but more challenging)

JSB Chorales polyphonic music

Nottingham polyphonic music

PennTreebank [SMALL] word-level language modeling (LM)

Wikitext-103 [LARGE] word-level LM

LAMBADA [LARGE] word-level LM and textual understanding

PennTreebank [MEDIUM] char-level LM

text8 [LARGE] char-level LM

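I chose JSB Chorales polyphonic music and Nottingham polyphonic music, which live in the poly_music folder, because predicting music sequences looked closest to my own application, processing biosignals. (Note that these two datasets are symbolic piano rolls, where each time step is an 88-dimensional binary vector of sounding piano keys, rather than raw audio waveforms.) You can of course pick whichever task fits your own needs.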
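To see what "predicting" means on these datasets: the model outputs a probability for each of the 88 keys at each time step. My understanding, which is an assumption to check against the loss code in music_test.py, is that the logged loss is a per-frame negative log-likelihood that treats the keys as independent Bernoulli variables, averaged over frames. A minimal sketch for a single frame, with a hypothetical three-key chord:

```python
import math

NUM_KEYS = 88  # one entry per piano key


def frame_nll(probs, target):
    """Negative log-likelihood of one piano-roll frame.

    probs[k]  : predicted probability that key k sounds (0 < p < 1)
    target[k] : 1 if key k sounds in the true frame, else 0
    Keys are treated as independent Bernoulli variables.
    """
    return -sum(math.log(p) if y else math.log(1.0 - p) for p, y in zip(probs, target))


target = [0] * NUM_KEYS
for key in (39, 43, 46):  # hypothetical chord: three keys pressed
    target[key] = 1

uniform = [0.5] * NUM_KEYS                       # "no idea" prediction
confident = [0.9 if y else 0.1 for y in target]  # mostly right prediction

print(round(frame_nll(uniform, target), 2))    # 61.0  (= 88 * ln 2)
print(round(frame_nll(confident, target), 2))  # 9.27  (= 88 * -ln 0.9)
```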

The README stresses that for each task you only need to run the corresponding [TASK_NAME]_test.py; for example, open music_test.py and just let it run first.
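The run in 2.3 prints its hyperparameters as a Namespace line. Collecting them in a small config object makes them easier to read. The values below are copied from that line; the class name is hypothetical, and the comments marked "assumed meaning" are my reading of the names, so check the argparse help strings in music_test.py. This is not the script's actual parser.

```python
from dataclasses import dataclass


@dataclass
class MusicTCNConfig:
    # Values copied from the Namespace printed at the start of the run in 2.3.
    data: str = "Nott"       # dataset: Nottingham
    epochs: int = 100        # training epochs
    lr: float = 1e-3         # initial learning rate
    optim: str = "Adam"      # optimizer name
    clip: float = 0.2        # gradient-clipping threshold (assumed meaning)
    dropout: float = 0.25    # dropout probability inside the blocks
    ksize: int = 5           # convolution kernel size
    levels: int = 4          # number of stacked residual blocks (assumed meaning)
    nhid: int = 150          # channels per level (assumed meaning)
    log_interval: int = 100  # print the training loss every N steps (assumed meaning)
    seed: int = 1111         # random seed
    cuda: bool = True        # train on the GPU

    @property
    def channel_sizes(self):
        # Assumption: every level uses the same width nhid.
        return [self.nhid] * self.levels


cfg = MusicTCNConfig()
print(cfg.channel_sizes)  # [150, 150, 150, 150]
```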

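2.2 Learning the principles

2.2.1 An intuitive look at the TCN architecture (reference: "Advanced Machine Learning: Temporal Convolutional Network (TCN), Concept, Origin, Principles, and Code Implementation")

This part corresponds to class Chomp1d and class TemporalBlock in tcn.py.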
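Why is Chomp1d needed? As I read tcn.py, each convolution is an `nn.Conv1d` with `padding=(kernel_size-1)*dilation`, which pads zeros on both ends, so the output is (k−1)·d steps too long; Chomp1d then cuts that many outputs off the right end. A plain-Python sketch showing that "pad both sides, then chomp" gives the same result as a causal convolution (all function names are my own):

```python
def conv1d_both_sides(x, w, d):
    """What a Conv1d with padding=(k-1)*d and dilation=d computes: zeros on BOTH ends.

    The output is (k-1)*d steps longer than the input.
    """
    k = len(w)
    pad = (k - 1) * d
    padded = [0.0] * pad + list(x) + [0.0] * pad
    n_out = len(padded) - pad
    return [sum(w[j] * padded[t + j * d] for j in range(k)) for t in range(n_out)]


def chomp(y, chomp_size):
    """Drop the last chomp_size outputs (the role of Chomp1d)."""
    return y[:len(y) - chomp_size]


def causal_reference(x, w, d):
    """Direct definition: y[t] = sum_j w[j] * x[t - (k-1-j)*d], zeros before t=0."""
    k = len(w)
    return [sum(w[j] * x[t - (k - 1 - j) * d] for j in range(k) if t - (k - 1 - j) * d >= 0)
            for t in range(len(x))]


x, w, d = [1.0, 2.0, 3.0, 4.0, 5.0], [1.0, 2.0], 2
full = conv1d_both_sides(x, w, d)
print(full)                           # [2.0, 4.0, 7.0, 10.0, 13.0, 4.0, 5.0]
print(chomp(full, (len(w) - 1) * d))  # [2.0, 4.0, 7.0, 10.0, 13.0]
print(causal_reference(x, w, d))      # [2.0, 4.0, 7.0, 10.0, 13.0]
```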

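The main (left) branch of the TCN block diagram consists of four parts:

Dilated causal convolution (Dilated Causal Conv): "dilated" means the convolution samples its input at intervals; "causal" means the value of each layer at a given time step depends only on the previous layer's values at that time step and earlier, not on any future time step; "convolution" is the ordinary CNN convolution (a kernel sliding over the data).

Weight normalization (WeightNorm): speeds up training by reparameterizing the network's weights, separating each weight vector's length from its direction. In tcn.py it is imported from torch.nn.utils as weight_norm.

Activation function (ReLU): the widely used rectified linear unit, max(0, x), taken from torch.nn.

Dropout: during training, each unit of the network is temporarily dropped with a given probability. Its purpose is to reduce overfitting; it is switched off at inference time.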
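WeightNorm and Dropout each fit in a few lines. A plain-Python sketch assuming the standard definitions: WeightNorm writes w = g·v/‖v‖ (PyTorch's `torch.nn.utils.weight_norm` applies this per output channel, `dim=0` by default), and the dropout shown is "inverted" dropout, which is how `torch.nn.Dropout` scales kept units during training. The function names are my own.

```python
import math
import random


def weight_norm(v, g):
    """WeightNorm reparameterization w = g * v / ||v||.

    g (a scalar per output channel) sets the length of w; v only sets its direction.
    """
    norm = math.sqrt(sum(a * a for a in v))
    return [g * a / norm for a in v]


def dropout(values, p, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p during training and
    scale the survivors by 1/(1-p), so no rescaling is needed at inference."""
    if not training or p == 0.0:
        return list(values)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]


print(weight_norm([3.0, 4.0], g=2.0))                # [1.2, 1.6]

out = dropout([1.0] * 10_000, p=0.25, rng=random.Random(0))
print(sum(out) / len(out))                           # close to 1.0 on average
print(dropout([1.0, 2.0], p=0.25, training=False))   # [1.0, 2.0]
```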

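The right branch of the diagram is the residual connection: a 1×1 convolution block. According to the author, it not only lets information pass across layers but also keeps the input and output dimensions consistent. (In tcn.py this 1×1 convolution is only created when the block's input and output channel counts differ; otherwise the input is added unchanged.)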
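A 1×1 convolution applies the same channel-mixing matrix at every time step, so it changes the number of channels without mixing neighboring time steps; that is how it makes the residual branch match the block output. A plain-Python sketch on (channels, time) lists; all names and the example values are hypothetical.

```python
def conv1x1(x, weight, bias):
    """1x1 convolution: (C_in, T) -> (C_out, T).

    weight is C_out x C_in; output[o][t] = sum_c weight[o][c] * x[c][t] + bias[o].
    """
    n_steps = len(x[0])
    return [[sum(w_row[c] * x[c][t] for c in range(len(x))) + b for t in range(n_steps)]
            for w_row, b in zip(weight, bias)]


def residual_output(block_out, block_in, weight=None, bias=None):
    """ReLU(F(x) + res): res is x itself, or conv1x1(x) when channel counts differ."""
    res = block_in if weight is None else conv1x1(block_in, weight, bias)
    return [[max(0.0, a + r) for a, r in zip(row_f, row_r)]
            for row_f, row_r in zip(block_out, res)]


x = [[1.0, 2.0, 3.0],                     # 2 input channels x 3 time steps
     [4.0, 5.0, 6.0]]
f_x = [[0.0] * 3 for _ in range(3)]       # hypothetical block output with 3 channels
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 x 2 projection
b = [0.0, 0.0, 0.0]
print(residual_output(f_x, x, W, b))  # [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [5.0, 7.0, 9.0]]
```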

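2.2.2 TCN structure in detail (reference: "Temporal Convolutional Network (TCN): Structure + PyTorch Code", which annotates the code in great detail)

TCN vs. ConvLSTM: ConvLSTM introduces convolution into the LSTM so that it can handle image inputs, but its convolution operates only on the input image at a single time step; TCN instead uses convolution across time steps to extract features.

Implementing TCN: a 1-D fully convolutional network (FCN) structure, in which every hidden layer has the same length as the input sequence;

Implementing TCN: causal convolution, dilated causal convolution (compared with dilated non-causal convolution), and the residual block structure (modeled on ResNet; the reference credits it with making the TCN architecture generalize better).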
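The contrast between dilated causal and dilated non-causal convolution comes down to where the zero padding goes. A plain-Python sketch (names my own): both variants keep the sequence length, as in a 1-D FCN, but only the causal one keeps the output at t=2 unchanged when inputs after t=2 change. The last line stacks two same-length layers to show the length is preserved.

```python
def dilated_conv1d(x, w, d, causal=True):
    """Same-length dilated 1-D convolution.

    causal=True : all (k-1)*d zeros go on the left -> y[t] sees x[<= t] only
    causal=False: zeros split between both sides   -> y[t] also sees future x
    """
    k = len(w)
    pad = (k - 1) * d
    left = pad if causal else pad // 2
    padded = [0.0] * left + list(x) + [0.0] * (pad - left)
    return [sum(w[j] * padded[t + j * d] for j in range(k)) for t in range(len(x))]


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x_future_changed = x[:3] + [100.0, 100.0, 100.0]  # only t >= 3 differs
w3 = [1.0, 1.0, 1.0]

for causal in (True, False):
    a = dilated_conv1d(x, w3, d=1, causal=causal)
    b = dilated_conv1d(x_future_changed, w3, d=1, causal=causal)
    # Is the output at t = 2 unaffected by inputs after t = 2?
    print("causal" if causal else "non-causal", a[2] == b[2])
# causal True
# non-causal False

stacked = dilated_conv1d(dilated_conv1d(x, w3, d=1), w3, d=2)
print(len(stacked))  # 6
```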

2.3 Test results

Output of music_test.py on the Nottingham dataset (data='Nott'):

Namespace(clip=0.2, cuda=True, data='Nott', dropout=0.25, epochs=100, ksize=5, levels=4, log_interval=100, lr=0.001, nhid=150, optim='Adam', seed=1111)

loading Nott data...

Epoch 1 | lr 0.00100 | loss 24.20483

Epoch 1 | lr 0.00100 | loss 12.54757

Epoch 1 | lr 0.00100 | loss 10.34167

Epoch 1 | lr 0.00100 | loss 7.46519

Epoch 1 | lr 0.00100 | loss 6.22717

Epoch 1 | lr 0.00100 | loss 5.83443

Validation loss: 5.29395

Test loss: 5.40475

Saved model!

Epoch 2 | lr 0.00100 | loss 5.49445

Epoch 2 | lr 0.00100 | loss 5.08582

Epoch 2 | lr 0.00100 | loss 5.28078

Epoch 2 | lr 0.00100 | loss 5.21557

Epoch 2 | lr 0.00100 | loss 5.09972

Epoch 2 | lr 0.00100 | loss 4.87487

Validation loss: 4.88671

Test loss: 4.91229

Saved model!

Epoch 3 | lr 0.00100 | loss 4.55071

Epoch 3 | lr 0.00100 | loss 4.64663

Epoch 3 | lr 0.00100 | loss 4.62720

Epoch 3 | lr 0.00100 | loss 4.40581

Epoch 3 | lr 0.00100 | loss 4.54712

Epoch 3 | lr 0.00100 | loss 4.48989

Validation loss: 4.23855

Test loss: 4.27290

Saved model!

Epoch 4 | lr 0.00100 | loss 4.15868

Epoch 4 | lr 0.00100 | loss 4.19424

Epoch 4 | lr 0.00100 | loss 3.93361

Epoch 4 | lr 0.00100 | loss 3.87698

Epoch 4 | lr 0.00100 | loss 4.26776

Epoch 4 | lr 0.00100 | loss 4.32880

Validation loss: 4.00616

Test loss: 4.04496

Saved model!

Epoch 5 | lr 0.00100 | loss 4.02574

Epoch 5 | lr 0.00100 | loss 4.59837

Epoch 5 | lr 0.00100 | loss 4.17430

Epoch 5 | lr 0.00100 | loss 3.96050

Epoch 5 | lr 0.00100 | loss 4.04181

Epoch 5 | lr 0.00100 | loss 3.97466

Validation loss: 3.78924

Test loss: 3.84233

Saved model!

Epoch 6 | lr 0.00100 | loss 3.77337

Epoch 6 | lr 0.00100 | loss 3.78759

Epoch 6 | lr 0.00100 | loss 4.05782

Epoch 6 | lr 0.00100 | loss 3.61807

Epoch 6 | lr 0.00100 | loss 3.66880

Epoch 6 | lr 0.00100 | loss 3.68237

Validation loss: 3.69415

Test loss: 3.71941

Saved model!

Epoch 7 | lr 0.00100 | loss 3.71184

Epoch 7 | lr 0.00100 | loss 3.65575

Epoch 7 | lr 0.00100 | loss 3.50422

Epoch 7 | lr 0.00100 | loss 3.69709

Epoch 7 | lr 0.00100 | loss 3.39189

Epoch 7 | lr 0.00100 | loss 3.60912

Validation loss: 3.50421

Test loss: 3.52113

Saved model!

Epoch 8 | lr 0.00100 | loss 3.39342

Epoch 8 | lr 0.00100 | loss 3.45223

Epoch 8 | lr 0.00100 | loss 3.47272

Epoch 8 | lr 0.00100 | loss 3.47585

Epoch 8 | lr 0.00100 | loss 3.88333

Epoch 8 | lr 0.00100 | loss 3.51368

Validation loss: 3.45557

Test loss: 3.46655

Saved model!

Epoch 9 | lr 0.00100 | loss 3.38787

Epoch 9 | lr 0.00100 | loss 3.49427

Epoch 9 | lr 0.00100 | loss 3.42003

Epoch 9 | lr 0.00100 | loss 3.45465

Epoch 9 | lr 0.00100 | loss 3.44894

Epoch 9 | lr 0.00100 | loss 3.35138

Validation loss: 3.39177

Test loss: 3.39885

Saved model!

Epoch 10 | lr 0.00100 | loss 3.33982

Epoch 10 | lr 0.00100 | loss 3.33333

Epoch 10 | lr 0.00100 | loss 3.27813

Epoch 10 | lr 0.00100 | loss 3.39872

Epoch 10 | lr 0.00100 | loss 3.31045

Epoch 10 | lr 0.00100 | loss 3.47179

Validation loss: 3.35350

Test loss: 3.35821

Saved model!

Epoch 11 | lr 0.00100 | loss 3.24939

Epoch 11 | lr 0.00100 | loss 3.28225

Epoch 11 | lr 0.00100 | loss 3.31755

Epoch 11 | lr 0.00100 | loss 3.31538

Epoch 11 | lr 0.00100 | loss 3.34717

Epoch 11 | lr 0.00100 | loss 3.47794

Validation loss: 3.27830

Test loss: 3.28621

Saved model!

Epoch 12 | lr 0.00100 | loss 3.24459

Epoch 12 | lr 0.00100 | loss 3.26871

Epoch 12 | lr 0.00100 | loss 2.83995

Epoch 12 | lr 0.00100 | loss 3.24781

Epoch 12 | lr 0.00100 | loss 3.25777

Epoch 12 | lr 0.00100 | loss 3.09675

Validation loss: 3.25199

Test loss: 3.25987

Saved model!

Epoch 13 | lr 0.00100 | loss 3.18712

Epoch 13 | lr 0.00100 | loss 3.15744

Epoch 13 | lr 0.00100 | loss 3.08412

Epoch 13 | lr 0.00100 | loss 2.98677

Epoch 13 | lr 0.00100 | loss 3.23000

Epoch 13 | lr 0.00100 | loss 3.12484

Validation loss: 3.22609

Test loss: 3.22669

Saved model!

Epoch 14 | lr 0.00100 | loss 2.86843

Epoch 14 | lr 0.00100 | loss 3.05798

Epoch 14 | lr 0.00100 | loss 3.11845

Epoch 14 | lr 0.00100 | loss 3.14372

Epoch 14 | lr 0.00100 | loss 3.19728

Epoch 14 | lr 0.00100 | loss 3.12642

Validation loss: 3.20776

Test loss: 3.20785

Saved model!

Epoch 15 | lr 0.00100 | loss 3.09529

Epoch 15 | lr 0.00100 | loss 3.05085

Epoch 15 | lr 0.00100 | loss 3.12605

Epoch 15 | lr 0.00100 | loss 3.14538

Epoch 15 | lr 0.00100 | loss 3.09047

Epoch 15 | lr 0.00100 | loss 3.14403

Validation loss: 3.19157

Test loss: 3.19761

Saved model!

Epoch 16 | lr 0.00100 | loss 3.08435

Epoch 16 | lr 0.00100 | loss 3.06446

Epoch 16 | lr 0.00100 | loss 3.07964

Epoch 16 | lr 0.00100 | loss 2.92217

Epoch 16 | lr 0.00100 | loss 3.02095

Epoch 16 | lr 0.00100 | loss 3.04373

Validation loss: 3.18486

Test loss: 3.18550

Saved model!

Epoch 17 | lr 0.00100 | loss 3.02295

Epoch 17 | lr 0.00100 | loss 2.94601

Epoch 17 | lr 0.00100 | loss 2.90517

Epoch 17 | lr 0.00100 | loss 3.02466

Epoch 17 | lr 0.00100 | loss 2.96160

Epoch 17 | lr 0.00100 | loss 3.63558

Validation loss: 3.14967

Test loss: 3.15910

Saved model!

Epoch 18 | lr 0.00100 | loss 3.01251

Epoch 18 | lr 0.00100 | loss 2.82075

Epoch 18 | lr 0.00100 | loss 2.78892

Epoch 18 | lr 0.00100 | loss 2.99531

Epoch 18 | lr 0.00100 | loss 2.96843

Epoch 18 | lr 0.00100 | loss 2.98169

Validation loss: 3.14602

Test loss: 3.15058

Saved model!

Epoch 19 | lr 0.00100 | loss 2.90797

Epoch 19 | lr 0.00100 | loss 3.09173

Epoch 19 | lr 0.00100 | loss 2.91924

Epoch 19 | lr 0.00100 | loss 2.99306

Epoch 19 | lr 0.00100 | loss 2.91742

Epoch 19 | lr 0.00100 | loss 2.93122

Validation loss: 3.13545

Test loss: 3.13629

Saved model!

Epoch 20 | lr 0.00100 | loss 2.81639

Epoch 20 | lr 0.00100 | loss 2.90578

Epoch 20 | lr 0.00100 | loss 2.88055

Epoch 20 | lr 0.00100 | loss 2.93285

Epoch 20 | lr 0.00100 | loss 3.00227

Epoch 20 | lr 0.00100 | loss 1.93661

Validation loss: 3.12148

Test loss: 3.11480

Saved model!

Epoch 21 | lr 0.00100 | loss 2.72758

Epoch 21 | lr 0.00100 | loss 2.84461

Epoch 21 | lr 0.00100 | loss 2.90508

Epoch 21 | lr 0.00100 | loss 2.96725

Epoch 21 | lr 0.00100 | loss 2.87752

Epoch 21 | lr 0.00100 | loss 2.14672

Validation loss: 3.08553

Test loss: 3.09944

Saved model!

Epoch 22 | lr 0.00100 | loss 2.81496

Epoch 22 | lr 0.00100 | loss 2.86394

Epoch 22 | lr 0.00100 | loss 2.81450

Epoch 22 | lr 0.00100 | loss 2.87718

Epoch 22 | lr 0.00100 | loss 2.79423

Epoch 22 | lr 0.00100 | loss 2.84248

Validation loss: 3.07362

Test loss: 3.07531

Saved model!

Epoch 23 | lr 0.00100 | loss 2.80338

Epoch 23 | lr 0.00100 | loss 2.77892

Epoch 23 | lr 0.00100 | loss 2.73683

Epoch 23 | lr 0.00100 | loss 2.80235

Epoch 23 | lr 0.00100 | loss 2.89891

Epoch 23 | lr 0.00100 | loss 2.83766

Validation loss: 3.07538

Test loss: 3.07808

Epoch 24 | lr 0.00100 | loss 2.69057

Epoch 24 | lr 0.00100 | loss 2.53861

Epoch 24 | lr 0.00100 | loss 2.96076

Epoch 24 | lr 0.00100 | loss 2.82431

Epoch 24 | lr 0.00100 | loss 2.76815

Epoch 24 | lr 0.00100 | loss 2.70349

Validation loss: 3.05619

Test loss: 3.05848

Saved model!

Epoch 25 | lr 0.00100 | loss 2.58305

Epoch 25 | lr 0.00100 | loss 2.75510

Epoch 25 | lr 0.00100 | loss 2.73684

Epoch 25 | lr 0.00100 | loss 2.79742

Epoch 25 | lr 0.00100 | loss 2.75214

Epoch 25 | lr 0.00100 | loss 2.74800

Validation loss: 3.04583

Test loss: 3.05974

Saved model!

Epoch 26 | lr 0.00100 | loss 2.67008

Epoch 26 | lr 0.00100 | loss 2.52432

Epoch 26 | lr 0.00100 | loss 2.77907

Epoch 26 | lr 0.00100 | loss 2.66775

Epoch 26 | lr 0.00100 | loss 2.76033

Epoch 26 | lr 0.00100 | loss 2.77000

Validation loss: 3.02619

Test loss: 3.03652

Saved model!

Epoch 27 | lr 0.00100 | loss 2.59768

Epoch 27 | lr 0.00100 | loss 2.66384

Epoch 27 | lr 0.00100 | loss 2.68714

Epoch 27 | lr 0.00100 | loss 2.65115

Epoch 27 | lr 0.00100 | loss 2.66188

Epoch 27 | lr 0.00100 | loss 2.73795

Validation loss: 3.00045

Test loss: 3.01429

Saved model!

Epoch 28 | lr 0.00100 | loss 2.62839

Epoch 28 | lr 0.00100 | loss 2.54048

Epoch 28 | lr 0.00100 | loss 2.47716

Epoch 28 | lr 0.00100 | loss 2.64177

Epoch 28 | lr 0.00100 | loss 2.59507

Epoch 28 | lr 0.00100 | loss 2.63312

Validation loss: 3.00037

Test loss: 3.00544

Saved model!

Epoch 29 | lr 0.00100 | loss 2.64653

Epoch 29 | lr 0.00100 | loss 2.58176

Epoch 29 | lr 0.00100 | loss 2.57617

Epoch 29 | lr 0.00100 | loss 2.69560

Epoch 29 | lr 0.00100 | loss 2.80495

Epoch 29 | lr 0.00100 | loss 2.61169

Validation loss: 3.00765

Test loss: 3.01136

Epoch 30 | lr 0.00100 | loss 2.69717

Epoch 30 | lr 0.00100 | loss 2.54373

Epoch 30 | lr 0.00100 | loss 2.63413

Epoch 30 | lr 0.00100 | loss 2.58480

Epoch 30 | lr 0.00100 | loss 2.59596

Epoch 30 | lr 0.00100 | loss 2.69300

Validation loss: 3.01752

Test loss: 3.01258

Epoch 31 | lr 0.00010 | loss 2.45645

Epoch 31 | lr 0.00010 | loss 2.45380

Epoch 31 | lr 0.00010 | loss 2.48504

Epoch 31 | lr 0.00010 | loss 2.51676

Epoch 31 | lr 0.00010 | loss 2.56140

Epoch 31 | lr 0.00010 | loss 2.45933

Validation loss: 2.95486

Test loss: 2.96315

Saved model!

Epoch 32 | lr 0.00010 | loss 2.43954

Epoch 32 | lr 0.00010 | loss 2.43467

Epoch 32 | lr 0.00010 | loss 2.42177

Epoch 32 | lr 0.00010 | loss 2.46623

Epoch 32 | lr 0.00010 | loss 2.61942

Epoch 32 | lr 0.00010 | loss 2.46129

Validation loss: 2.95515

Test loss: 2.96563

Epoch 33 | lr 0.00010 | loss 2.54713

Epoch 33 | lr 0.00010 | loss 2.41953

Epoch 33 | lr 0.00010 | loss 2.34418

Epoch 33 | lr 0.00010 | loss 2.44249

Epoch 33 | lr 0.00010 | loss 2.53604

Epoch 33 | lr 0.00010 | loss 2.43318

Validation loss: 2.95291

Test loss: 2.96509

Saved model!

Epoch 34 | lr 0.00010 | loss 2.43941

Epoch 34 | lr 0.00010 | loss 2.41067

Epoch 34 | lr 0.00010 | loss 2.43031

Epoch 34 | lr 0.00010 | loss 2.62946

Epoch 34 | lr 0.00010 | loss 2.46241

Epoch 34 | lr 0.00010 | loss 2.36850

Validation loss: 2.95889

Test loss: 2.96941

Epoch 35 | lr 0.00001 | loss 2.43545

Epoch 35 | lr 0.00001 | loss 2.44627

Epoch 35 | lr 0.00001 | loss 3.08806

Epoch 35 | lr 0.00001 | loss 2.42842

Epoch 35 | lr 0.00001 | loss 2.24893

Epoch 35 | lr 0.00001 | loss 2.36822

Validation loss: 2.95521

Test loss: 2.96670

Epoch 36 | lr 0.00001 | loss 2.34449

Epoch 36 | lr 0.00001 | loss 2.52862

Epoch 36 | lr 0.00001 | loss 2.42900

Epoch 36 | lr 0.00001 | loss 2.04666

Epoch 36 | lr 0.00001 | loss 2.41113

Epoch 36 | lr 0.00001 | loss 2.45220

Validation loss: 2.95483

Test loss: 2.96588

Epoch 37 | lr 0.00001 | loss 2.43688

Epoch 37 | lr 0.00001 | loss 2.81418

Epoch 37 | lr 0.00001 | loss 2.39969

Epoch 37 | lr 0.00001 | loss 2.36796

Epoch 37 | lr 0.00001 | loss 2.41917

Epoch 37 | lr 0.00001 | loss 2.21677

Validation loss: 2.95496

Test loss: 2.96567

Epoch 38 | lr 0.00001 | loss 2.34746

Epoch 38 | lr 0.00001 | loss 2.45062

Epoch 38 | lr 0.00001 | loss 2.43343

Epoch 38 | lr 0.00001 | loss 2.46355

Epoch 38 | lr 0.00001 | loss 2.41640

Epoch 38 | lr 0.00001 | loss 2.15776

Validation loss: 2.95320

Test loss: 2.96515

Epoch 39 | lr 0.00001 | loss 2.40601

Epoch 39 | lr 0.00001 | loss 2.49198

Epoch 39 | lr 0.00001 | loss 2.53223

Epoch 39 | lr 0.00001 | loss 2.00882

Epoch 39 | lr 0.00001 | loss 2.34943

Epoch 39 | lr 0.00001 | loss 2.43459

Validation loss: 2.95460

Test loss: 2.96632

Epoch 40 | lr 0.00001 | loss 2.48286

Epoch 40 | lr 0.00001 | loss 2.33617

Epoch 40 | lr 0.00001 | loss 2.42163

Epoch 40 | lr 0.00001 | loss 2.35010

Epoch 40 | lr 0.00001 | loss 2.40796

Epoch 40 | lr 0.00001 | loss 2.45041

Validation loss: 2.95403

Test loss: 2.96556

Epoch 41 | lr 0.00001 | loss 2.40313

Epoch 41 | lr 0.00001 | loss 2.32656

Epoch 41 | lr 0.00001 | loss 2.47946

Epoch 41 | lr 0.00001 | loss 2.15760

Epoch 41 | lr 0.00001 | loss 2.37480

Epoch 41 | lr 0.00001 | loss 2.46791

Validation loss: 2.95404

Test loss: 2.96532

Epoch 42 | lr 0.00001 | loss 2.45571

Epoch 42 | lr 0.00001 | loss 2.39349

Epoch 42 | lr 0.00001 | loss 2.40195

Epoch 42 | lr 0.00001 | loss 2.40755

Epoch 42 | lr 0.00001 | loss 2.20085

Epoch 42 | lr 0.00001 | loss 2.55087

Validation loss: 2.95563

Test loss: 2.96605

Epoch 43 | lr 0.00000 | loss 2.41390

Epoch 43 | lr 0.00000 | loss 2.38766

Epoch 43 | lr 0.00000 | loss 2.40005

Epoch 43 | lr 0.00000 | loss 2.40574

Epoch 43 | lr 0.00000 | loss 2.45363

Epoch 43 | lr 0.00000 | loss 2.45474

Validation loss: 2.95526

Test loss: 2.96588

Epoch 44 | lr 0.00000 | loss 2.44101

Epoch 44 | lr 0.00000 | loss 2.38717

Epoch 44 | lr 0.00000 | loss 2.42643

Epoch 44 | lr 0.00000 | loss 2.37804

Epoch 44 | lr 0.00000 | loss 2.40502

Epoch 44 | lr 0.00000 | loss 2.44630

Validation loss: 2.95480

Test loss: 2.96562

Epoch 45 | lr 0.00000 | loss 2.35229

Epoch 45 | lr 0.00000 | loss 2.39950

Epoch 45 | lr 0.00000 | loss 2.47582

Epoch 45 | lr 0.00000 | loss 2.46909

Epoch 45 | lr 0.00000 | loss 2.40886

Epoch 45 | lr 0.00000 | loss 2.46704

Validation loss: 2.95471

Test loss: 2.96563

Epoch 46 | lr 0.00000 | loss 2.46100

Epoch 46 | lr 0.00000 | loss 1.39584

Epoch 46 | lr 0.00000 | loss 2.35312

Epoch 46 | lr 0.00000 | loss 2.70966

Epoch 46 | lr 0.00000 | loss 2.71677

Epoch 46 | lr 0.00000 | loss 2.42208

Validation loss: 2.95453

Test loss: 2.96552

Epoch 47 | lr 0.00000 | loss 2.43515

Epoch 47 | lr 0.00000 | loss 2.50489

Epoch 47 | lr 0.00000 | loss 2.41215

Epoch 47 | lr 0.00000 | loss 2.34724

Epoch 47 | lr 0.00000 | loss 2.49304

Epoch 47 | lr 0.00000 | loss 2.32401

Validation loss: 2.95436

Test loss: 2.96538

Epoch 48 | lr 0.00000 | loss 2.41615

Epoch 48 | lr 0.00000 | loss 2.39621

Epoch 48 | lr 0.00000 | loss 2.38097

Epoch 48 | lr 0.00000 | loss 2.44820

Epoch 48 | lr 0.00000 | loss 2.02717

Epoch 48 | lr 0.00000 | loss 2.44434

Validation loss: 2.95430

Test loss: 2.96533

Epoch 49 | lr 0.00000 | loss 2.39831

Epoch 49 | lr 0.00000 | loss 2.53042

Epoch 49 | lr 0.00000 | loss 2.48773

Epoch 49 | lr 0.00000 | loss 2.45923

Epoch 49 | lr 0.00000 | loss 2.39248

Epoch 49 | lr 0.00000 | loss 2.41314

Validation loss: 2.95415

Test loss: 2.96527

Epoch 50 | lr 0.00000 | loss 2.34241

Epoch 50 | lr 0.00000 | loss 2.43070

Epoch 50 | lr 0.00000 | loss 2.05006

Epoch 50 | lr 0.00000 | loss 2.49058

Epoch 50 | lr 0.00000 | loss 2.40379

Epoch 50 | lr 0.00000 | loss 2.46354

Validation loss: 2.95412

Test loss: 2.96525

Epoch 51 | lr 0.00000 | loss 2.50044

Epoch 51 | lr 0.00000 | loss 2.31235

Epoch 51 | lr 0.00000 | loss 2.35816

Epoch 51 | lr 0.00000 | loss 2.48627

Epoch 51 | lr 0.00000 | loss 2.42042

Epoch 51 | lr 0.00000 | loss 2.40909

Validation loss: 2.95393

Test loss: 2.96513

Epoch 52 | lr 0.00000 | loss 2.41315

Epoch 52 | lr 0.00000 | loss 2.44901

Epoch 52 | lr 0.00000 | loss 1.71025

Epoch 52 | lr 0.00000 | loss 2.42413

Epoch 52 | lr 0.00000 | loss 2.42102

Epoch 52 | lr 0.00000 | loss 2.39134

Validation loss: 2.95397

Test loss: 2.96515

Epoch 53 | lr 0.00000 | loss 2.40649

Epoch 53 | lr 0.00000 | loss 2.54095

Epoch 53 | lr 0.00000 | loss 2.19728

Epoch 53 | lr 0.00000 | loss 2.51835

Epoch 53 | lr 0.00000 | loss 2.40190

Epoch 53 | lr 0.00000 | loss 2.39805

Validation loss: 2.95385

Test loss: 2.96504

Epoch 54 | lr 0.00000 | loss 2.39350

Epoch 54 | lr 0.00000 | loss 2.49204

Epoch 54 | lr 0.00000 | loss 2.31756

Epoch 54 | lr 0.00000 | loss 2.43664

Epoch 54 | lr 0.00000 | loss 2.39233

Epoch 54 | lr 0.00000 | loss 2.46368

Validation loss: 2.95395

Test loss: 2.96514

Epoch 55 | lr 0.00000 | loss 2.39194

Epoch 55 | lr 0.00000 | loss 2.46516

Epoch 55 | lr 0.00000 | loss 2.45878

Epoch 55 | lr 0.00000 | loss 2.35791

Epoch 55 | lr 0.00000 | loss 2.23562

Epoch 55 | lr 0.00000 | loss 2.40309

Validation loss: 2.95402

Test loss: 2.96521

Epoch 56 | lr 0.00000 | loss 2.42875

Epoch 56 | lr 0.00000 | loss 2.39907

Epoch 56 | lr 0.00000 | loss 2.35058

Epoch 56 | lr 0.00000 | loss 2.46811

Epoch 56 | lr 0.00000 | loss 2.37041

Epoch 56 | lr 0.00000 | loss 2.40081

Validation loss: 2.95403

Test loss: 2.96521

Epoch 57 | lr 0.00000 | loss 2.42965

Epoch 57 | lr 0.00000 | loss 2.36886

Epoch 57 | lr 0.00000 | loss 2.52495

Epoch 57 | lr 0.00000 | loss 2.40957

Epoch 57 | lr 0.00000 | loss 2.50273

Epoch 57 | lr 0.00000 | loss 2.31355

Validation loss: 2.95403

Test loss: 2.96521

Epoch 58 | lr 0.00000 | loss 2.44439

Epoch 58 | lr 0.00000 | loss 2.44985

Epoch 58 | lr 0.00000 | loss 2.35233

Epoch 58 | lr 0.00000 | loss 2.40324

Epoch 58 | lr 0.00000 | loss 2.44942

Epoch 58 | lr 0.00000 | loss 2.45389

Validation loss: 2.95402

Test loss: 2.96521

Epoch 59 | lr 0.00000 | loss 2.38148

Epoch 59 | lr 0.00000 | loss 2.36841

Epoch 59 | lr 0.00000 | loss 2.41448

Epoch 59 | lr 0.00000 | loss 2.44373

Epoch 59 | lr 0.00000 | loss 2.44111

Epoch 59 | lr 0.00000 | loss 2.45866

Validation loss: 2.95402

Test loss: 2.96520

Epoch 60 | lr 0.00000 | loss 2.41296

Epoch 60 | lr 0.00000 | loss 2.53527

Epoch 60 | lr 0.00000 | loss 2.39205

Epoch 60 | lr 0.00000 | loss 2.31394

Epoch 60 | lr 0.00000 | loss 2.38146

Epoch 60 | lr 0.00000 | loss 2.43245

Validation loss: 2.95402

Test loss: 2.96520

Epoch 61 | lr 0.00000 | loss 2.48459

Epoch 61 | lr 0.00000 | loss 2.36444

Epoch 61 | lr 0.00000 | loss 2.42401

Epoch 61 | lr 0.00000 | loss 2.38782

Epoch 61 | lr 0.00000 | loss 2.39042

Epoch 61 | lr 0.00000 | loss 2.40236

Validation loss: 2.95402

Test loss: 2.96520

Epoch 62 | lr 0.00000 | loss 2.43595

Epoch 62 | lr 0.00000 | loss 2.44351

Epoch 62 | lr 0.00000 | loss 2.38162

Epoch 62 | lr 0.00000 | loss 2.41288

Epoch 62 | lr 0.00000 | loss 2.44867

Epoch 62 | lr 0.00000 | loss 2.20912

Validation loss: 2.95402

Test loss: 2.96520

Epoch 63 | lr 0.00000 | loss 2.18615

Epoch 63 | lr 0.00000 | loss 2.54030

Epoch 63 | lr 0.00000 | loss 2.47183

Epoch 63 | lr 0.00000 | loss 2.39264

Epoch 63 | lr 0.00000 | loss 2.38341

Epoch 63 | lr 0.00000 | loss 2.43780

Validation loss: 2.95402

Test loss: 2.96520

Epoch 64 | lr 0.00000 | loss 2.44530

Epoch 64 | lr 0.00000 | loss 2.28641

Epoch 64 | lr 0.00000 | loss 2.26978

Epoch 64 | lr 0.00000 | loss 2.47008

Epoch 64 | lr 0.00000 | loss 2.46534

Epoch 64 | lr 0.00000 | loss 2.40426

Validation loss: 2.95401

Test loss: 2.96520

Epoch 65 | lr 0.00000 | loss 2.45915

Epoch 65 | lr 0.00000 | loss 2.50910

Epoch 65 | lr 0.00000 | loss 2.48938

Epoch 65 | lr 0.00000 | loss 2.32067

Epoch 65 | lr 0.00000 | loss 2.37479

Epoch 65 | lr 0.00000 | loss 2.51838

Validation loss: 2.95401

Test loss: 2.96520

Epoch 66 | lr 0.00000 | loss 2.43692

Epoch 66 | lr 0.00000 | loss 2.34911

Epoch 66 | lr 0.00000 | loss 2.30305

Epoch 66 | lr 0.00000 | loss 2.37067

Epoch 66 | lr 0.00000 | loss 2.40642

Epoch 66 | lr 0.00000 | loss 2.46048

Validation loss: 2.95401

Test loss: 2.96520

Epoch 67 | lr 0.00000 | loss 2.59787

Epoch 67 | lr 0.00000 | loss 2.47146

Epoch 67 | lr 0.00000 | loss 2.43156

Epoch 67 | lr 0.00000 | loss 2.44966

Epoch 67 | lr 0.00000 | loss 2.40391

Epoch 67 | lr 0.00000 | loss 2.37677

Validation loss: 2.95401

Test loss: 2.96520

Epoch 68 | lr 0.00000 | loss 2.41856

Epoch 68 | lr 0.00000 | loss 2.47008

Epoch 68 | lr 0.00000 | loss 2.47840

Epoch 68 | lr 0.00000 | loss 2.36878

Epoch 68 | lr 0.00000 | loss 2.43735

Epoch 68 | lr 0.00000 | loss 2.34884

Validation loss: 2.95401

Test loss: 2.96519

Epoch 69 | lr 0.00000 | loss 1.76942

Epoch 69 | lr 0.00000 | loss 2.38943

Epoch 69 | lr 0.00000 | loss 2.41059

Epoch 69 | lr 0.00000 | loss 2.41351

Epoch 69 | lr 0.00000 | loss 2.48355

Epoch 69 | lr 0.00000 | loss 2.46324

Validation loss: 2.95401

Test loss: 2.96520

Epoch 70 | lr 0.00000 | loss 2.08691

Epoch 70 | lr 0.00000 | loss 2.44040

Epoch 70 | lr 0.00000 | loss 2.36904

Epoch 70 | lr 0.00000 | loss 2.42060

Epoch 70 | lr 0.00000 | loss 2.43333

Epoch 70 | lr 0.00000 | loss 2.41498

Validation loss: 2.95401

Test loss: 2.96520

Epoch 71 | lr 0.00000 | loss 2.26960

Epoch 71 | lr 0.00000 | loss 2.28289

Epoch 71 | lr 0.00000 | loss 2.36861

Epoch 71 | lr 0.00000 | loss 2.38697

Epoch 71 | lr 0.00000 | loss 2.47841

Epoch 71 | lr 0.00000 | loss 2.48060

Validation loss: 2.95401

Test loss: 2.96520

Epoch 72 | lr 0.00000 | loss 2.13208

Epoch 72 | lr 0.00000 | loss 2.41577

Epoch 72 | lr 0.00000 | loss 2.42331

Epoch 72 | lr 0.00000 | loss 2.48011

Epoch 72 | lr 0.00000 | loss 1.39558

Epoch 72 | lr 0.00000 | loss 2.46180

Validation loss: 2.95401

Test loss: 2.96520

Epoch 73 | lr 0.00000 | loss 2.39402

Epoch 73 | lr 0.00000 | loss 2.48592

Epoch 73 | lr 0.00000 | loss 2.36022

Epoch 73 | lr 0.00000 | loss 2.47524

Epoch 73 | lr 0.00000 | loss 2.13461

Epoch 73 | lr 0.00000 | loss 2.36569

Validation loss: 2.95401

Test loss: 2.96520

Epoch 74 | lr 0.00000 | loss 2.38321

Epoch 74 | lr 0.00000 | loss 2.44158

Epoch 74 | lr 0.00000 | loss 2.26542

Epoch 74 | lr 0.00000 | loss 2.35360

Epoch 74 | lr 0.00000 | loss 2.44588

Epoch 74 | lr 0.00000 | loss 2.41055

Validation loss: 2.95401

Test loss: 2.96520

Epoch 75 | lr 0.00000 | loss 2.44660

Epoch 75 | lr 0.00000 | loss 2.47491

Epoch 75 | lr 0.00000 | loss 2.42964

Epoch 75 | lr 0.00000 | loss 2.41180

Epoch 75 | lr 0.00000 | loss 2.44037

Epoch 75 | lr 0.00000 | loss 2.39054

Validation loss: 2.95401

Test loss: 2.96520

Epoch 76 | lr 0.00000 | loss 2.42163

Epoch 76 | lr 0.00000 | loss 2.47723

Epoch 76 | lr 0.00000 | loss 2.46514

Epoch 76 | lr 0.00000 | loss 2.34455

Epoch 76 | lr 0.00000 | loss 2.40418

Epoch 76 | lr 0.00000 | loss 2.40259

Validation loss: 2.95401

Test loss: 2.96519

Epoch 77 | lr 0.00000 | loss 2.41411

Epoch 77 | lr 0.00000 | loss 2.45589

Epoch 77 | lr 0.00000 | loss 2.44414

Epoch 77 | lr 0.00000 | loss 2.37484

Epoch 77 | lr 0.00000 | loss 2.37498

Epoch 77 | lr 0.00000 | loss 2.31428

Validation loss: 2.95401

Test loss: 2.96519

Epoch 78 | lr 0.00000 | loss 2.55251

Epoch 78 | lr 0.00000 | loss 2.31894

Epoch 78 | lr 0.00000 | loss 2.44500

Epoch 78 | lr 0.00000 | loss 2.42809

Epoch 78 | lr 0.00000 | loss 2.46257

Epoch 78 | lr 0.00000 | loss 2.40419

Validation loss: 2.95401

Test loss: 2.96519

Epoch 79 | lr 0.00000 | loss 2.32429

Epoch 79 | lr 0.00000 | loss 2.53482

Epoch 79 | lr 0.00000 | loss 2.42113

Epoch 79 | lr 0.00000 | loss 2.17599

Epoch 79 | lr 0.00000 | loss 2.41099

Epoch 79 | lr 0.00000 | loss 2.59278

Validation loss: 2.95400

Test loss: 2.96519

Epoch 80 | lr 0.00000 | loss 2.39924

Epoch 80 | lr 0.00000 | loss 2.65279

Epoch 80 | lr 0.00000 | loss 2.44098

Epoch 80 | lr 0.00000 | loss 2.36354

Epoch 80 | lr 0.00000 | loss 2.25048

Epoch 80 | lr 0.00000 | loss 2.35756

Validation loss: 2.95400

Test loss: 2.96519

Epoch 81 | lr 0.00000 | loss 2.41862

Epoch 81 | lr 0.00000 | loss 2.48602

Epoch 81 | lr 0.00000 | loss 2.48530

Epoch 81 | lr 0.00000 | loss 2.66520

Epoch 81 | lr 0.00000 | loss 2.43285

Epoch 81 | lr 0.00000 | loss 2.35819

Validation loss: 2.95400

Test loss: 2.96519

Epoch 82 | lr 0.00000 | loss 2.42112

Epoch 82 | lr 0.00000 | loss 2.32117

Epoch 82 | lr 0.00000 | loss 2.36124

Epoch 82 | lr 0.00000 | loss 2.36869

Epoch 82 | lr 0.00000 | loss 2.56043

Epoch 82 | lr 0.00000 | loss 2.40713

Validation loss: 2.95400

Test loss: 2.96520

Epoch 83 | lr 0.00000 | loss 2.77187

Epoch 83 | lr 0.00000 | loss 2.41533

Epoch 83 | lr 0.00000 | loss 2.36156

Epoch 83 | lr 0.00000 | loss 2.52006

Epoch 83 | lr 0.00000 | loss 2.44264

Epoch 83 | lr 0.00000 | loss 2.48203

Validation loss: 2.95400

Test loss: 2.96519

Epoch 84 | lr 0.00000 | loss 2.39321

Epoch 84 | lr 0.00000 | loss 1.88318

Epoch 84 | lr 0.00000 | loss 2.40187

Epoch 84 | lr 0.00000 | loss 2.43431

Epoch 84 | lr 0.00000 | loss 2.57168

Epoch 84 | lr 0.00000 | loss 2.33964

Validation loss: 2.95400

Test loss: 2.96519

Epoch 85 | lr 0.00000 | loss 2.40599

Epoch 85 | lr 0.00000 | loss 2.42410

Epoch 85 | lr 0.00000 | loss 2.39999

Epoch 85 | lr 0.00000 | loss 2.47565

Epoch 85 | lr 0.00000 | loss 2.37174

Epoch 85 | lr 0.00000 | loss 2.45941

Validation loss: 2.95400

Test loss: 2.96519

Epoch 86 | lr 0.00000 | loss 2.15863

Epoch 86 | lr 0.00000 | loss 2.37759

Epoch 86 | lr 0.00000 | loss 2.56286

Epoch 86 | lr 0.00000 | loss 2.42264

Epoch 86 | lr 0.00000 | loss 2.47878

Epoch 86 | lr 0.00000 | loss 2.46373

Validation loss: 2.95400

Test loss: 2.96519

Epoch 87 | lr 0.00000 | loss 2.76105

Epoch 87 | lr 0.00000 | loss 2.35281

Epoch 87 | lr 0.00000 | loss 2.45527

Epoch 87 | lr 0.00000 | loss 2.45856

Epoch 87 | lr 0.00000 | loss 2.62649

Epoch 87 | lr 0.00000 | loss 2.52481

Validation loss: 2.95400

Test loss: 2.96519

Epoch 88 | lr 0.00000 | loss 2.47774

Epoch 88 | lr 0.00000 | loss 2.34679

Epoch 88 | lr 0.00000 | loss 2.44432

Epoch 88 | lr 0.00000 | loss 2.12840

Epoch 88 | lr 0.00000 | loss 2.51886

Epoch 88 | lr 0.00000 | loss 2.06461

Validation loss: 2.95400

Test loss: 2.96519

Epoch 89 | lr 0.00000 | loss 2.37020

Epoch 89 | lr 0.00000 | loss 2.47868

Epoch 89 | lr 0.00000 | loss 2.39565

Epoch 89 | lr 0.00000 | loss 2.40516

Epoch 89 | lr 0.00000 | loss 2.41972

Epoch 89 | lr 0.00000 | loss 2.38832

Validation loss: 2.95400

Test loss: 2.96519

Epoch 90 | lr 0.00000 | loss 2.23446

Epoch 90 | lr 0.00000 | loss 2.45653

Epoch 90 | lr 0.00000 | loss 2.40566

Epoch 90 | lr 0.00000 | loss 2.49196

Epoch 90 | lr 0.00000 | loss 2.36378

Epoch 90 | lr 0.00000 | loss 2.41977

Validation loss: 2.95400

Test loss: 2.96519

Epoch 91 | lr 0.00000 | loss 2.34804

Epoch 91 | lr 0.00000 | loss 2.42081

Epoch 91 | lr 0.00000 | loss 2.42765

Epoch 91 | lr 0.00000 | loss 2.51739

Epoch 91 | lr 0.00000 | loss 2.50900

Epoch 91 | lr 0.00000 | loss 2.50998

Validation loss: 2.95400

Test loss: 2.96519

Epoch 92 | lr 0.00000 | loss 2.44960

Epoch 92 | lr 0.00000 | loss 2.38403

Epoch 92 | lr 0.00000 | loss 2.49420

Epoch 92 | lr 0.00000 | loss 2.32383

Epoch 92 | lr 0.00000 | loss 2.22930

Epoch 92 | lr 0.00000 | loss 2.41387

Validation loss: 2.95400

Test loss: 2.96519

Epoch 93 | lr 0.00000 | loss 2.50621

Epoch 93 | lr 0.00000 | loss 2.40276

Epoch 93 | lr 0.00000 | loss 2.35815

Epoch 93 | lr 0.00000 | loss 2.42412

Epoch 93 | lr 0.00000 | loss 2.36929

Epoch 93 | lr 0.00000 | loss 2.40508

Validation loss: 2.95400

Test loss: 2.96519

Epoch 94 | lr 0.00000 | loss 2.32516

Epoch 94 | lr 0.00000 | loss 2.63810

Epoch 94 | lr 0.00000 | loss 2.53540

Epoch 94 | lr 0.00000 | loss 2.49643

Epoch 94 | lr 0.00000 | loss 2.43261

Epoch 94 | lr 0.00000 | loss 2.39358

Validation loss: 2.95400

Test loss: 2.96519

Epoch 95 | lr 0.00000 | loss 2.37413

Epoch 95 | lr 0.00000 | loss 2.41371

Epoch 95 | lr 0.00000 | loss 1.82993

Epoch 95 | lr 0.00000 | loss 2.46905

Epoch 95 | lr 0.00000 | loss 2.41483

Epoch 95 | lr 0.00000 | loss 2.42171

Validation loss: 2.95400

Test loss: 2.96519

Epoch 96 | lr 0.00000 | loss 2.40594

Epoch 96 | lr 0.00000 | loss 2.46985

Epoch 96 | lr 0.00000 | loss 2.41713

Epoch 96 | lr 0.00000 | loss 2.42794

Epoch 96 | lr 0.00000 | loss 2.34145

Epoch 96 | lr 0.00000 | loss 2.39331

Validation loss: 2.95400

Test loss: 2.96519

Epoch 97 | lr 0.00000 | loss 2.46766

Epoch 97 | lr 0.00000 | loss 2.50765

Epoch 97 | lr 0.00000 | loss 2.39896

Epoch 97 | lr 0.00000 | loss 2.46505

Epoch 97 | lr 0.00000 | loss 2.52749

Epoch 97 | lr 0.00000 | loss 2.40895

Validation loss: 2.95400

Test loss: 2.96519

Epoch 98 | lr 0.00000 | loss 2.43740

Epoch 98 | lr 0.00000 | loss 2.42547

Epoch 98 | lr 0.00000 | loss 2.65314

Epoch 98 | lr 0.00000 | loss 2.36240

Epoch 98 | lr 0.00000 | loss 2.21236

Epoch 98 | lr 0.00000 | loss 2.42001

Validation loss: 2.95400

Test loss: 2.96519

Epoch 99 | lr 0.00000 | loss 2.44869

Epoch 99 | lr 0.00000 | loss 2.41306

Epoch 99 | lr 0.00000 | loss 2.46927

Epoch 99 | lr 0.00000 | loss 2.29154

Epoch 99 | lr 0.00000 | loss 2.40120

Epoch 99 | lr 0.00000 | loss 2.36292

Validation loss: 2.95400

Test loss: 2.96519

Epoch 100 | lr 0.00000 | loss 2.51047

Epoch 100 | lr 0.00000 | loss 2.29219

Epoch 100 | lr 0.00000 | loss 2.29253

Epoch 100 | lr 0.00000 | loss 2.42894

Epoch 100 | lr 0.00000 | loss 2.38489

Epoch 100 | lr 0.00000 | loss 2.40344

Validation loss: 2.95400

Test loss: 2.96519

-----------------------------------------------------------------------------------------

Eval loss: 2.96509

Process finished with exit code 0
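A few patterns in this log can be checked directly. The lr column drops to 0.00010 at epoch 31 and to 0.00001 at epoch 35, and it prints 0.00000 from epoch 43 on (the rate is below what five decimals can show, which is consistent with the nearly flat losses in the rest of the log). The sketch below reproduces those switches with an assumed rule that I checked only against this log, not against music_test.py itself: after epoch 10, divide lr by 10 whenever an epoch's validation loss exceeds the maximum of the three epochs before it. It also finds the epoch with the lowest validation loss, epoch 33 (2.95291). That epoch's test loss, 2.96509, equals the final "Eval loss", which is consistent with the script evaluating the best saved model at the end.

```python
# Validation losses of epochs 1-43, copied from the log above.
VAL_LOSS = [
    5.29395, 4.88671, 4.23855, 4.00616, 3.78924, 3.69415, 3.50421, 3.45557,
    3.39177, 3.35350, 3.27830, 3.25199, 3.22609, 3.20776, 3.19157, 3.18486,
    3.14967, 3.14602, 3.13545, 3.12148, 3.08553, 3.07362, 3.07538, 3.05619,
    3.04583, 3.02619, 3.00045, 3.00037, 3.00765, 3.01752, 2.95486, 2.95515,
    2.95291, 2.95889, 2.95521, 2.95483, 2.95496, 2.95320, 2.95460, 2.95403,
    2.95404, 2.95563, 2.95526,
]


def lr_schedule(val_losses, lr0=1e-3, start_after=10, factor=10.0):
    """Learning rate used in each epoch under an ASSUMED rule:
    after epoch start_after, if an epoch's validation loss is higher than the
    maximum of the three epochs before it, divide lr by `factor` from then on.
    """
    lrs, history, lr = [], [], lr0
    for epoch, vloss in enumerate(val_losses, start=1):
        lrs.append(lr)  # lr used while training this epoch
        if epoch > start_after and vloss > max(history[-3:]):
            lr /= factor
        history.append(vloss)
    return lrs


lrs = lr_schedule(VAL_LOSS)
# Format like the log ("lr 0.00100") and list the epochs where the printed value changes.
printed = [f"{lr:.5f}" for lr in lrs]
changes = [(ep, printed[ep - 1]) for ep in range(2, len(printed) + 1)
           if printed[ep - 1] != printed[ep - 2]]
print(changes)  # [(31, '0.00010'), (35, '0.00001'), (43, '0.00000')]

best_epoch = min(range(len(VAL_LOSS)), key=VAL_LOSS.__getitem__) + 1
print(best_epoch)  # 33
```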
