I. Preparing the Lab Environment

Image: CentOS-7-x86_64-DVD-2009.iso

Spec per VM: 4 CPU cores, 6 GB RAM, 80 GB disk

Compatibility: ESXi 7.0 and later

Server information:

| Cluster role | IP | Hostname | Installed components |
|---|---|---|---|
| Control-plane node | 10.104.26.192 | hqs-master | apiserver, controller-manager, scheduler, etcd, kubelet, kube-proxy, docker, calico |
| Worker node | 10.104.26.193 | hqs-node1 | kubelet, kube-proxy, docker, calico, coredns |
| Worker node | 10.104.26.194 | hqs-node2 | kubelet, kube-proxy, docker, calico, coredns |

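1. When to use kubeadm and when to use a binary installation

kubeadm is the official open-source tool for quickly bootstrapping a Kubernetes cluster.

kubeadm init and kubeadm join are its two most important commands: the former initializes the cluster and the latter joins a node to it.

While initializing the cluster, kubeadm creates kube-apiserver, kube-controller-manager, kube-scheduler, etcd, and kube-proxy. These components all run as Pods (the control-plane components as static Pods managed by the kubelet), so they are restarted automatically after a failure.

With a binary installation you create and manage each of these components by hand. A cluster installed this way has no such self-healing, and it cannot be upgraded with kubeadm.

For these reasons, this guide treats kubeadm as the better fit for production environments and binary installation as the better fit for test environments.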

2. Initializing the deployment environment

(1) Set a static IP on each machine

# Control-plane node

[root@localhost ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens192

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=no

NAME=ens192

UUID=1070fb40-0984-46df-9559-6d193e974c6c

DEVICE=ens192

ONBOOT=yes

IPADDR=10.104.26.192

NETMASK=255.255.255.0

GATEWAY=10.104.26.252

ZONE=public

PREFIX=24

# Worker node 1

[root@localhost ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens192

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=no

NAME=ens192

UUID=1070fb40-0984-46df-9559-6d193e974c6c

DEVICE=ens192

ONBOOT=yes

IPADDR=10.104.26.193

NETMASK=255.255.255.0

GATEWAY=10.104.26.252

ZONE=public

PREFIX=24

# Worker node 2

[root@localhost ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens192

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=no

NAME=ens192

UUID=1070fb40-0984-46df-9559-6d193e974c6c

DEVICE=ens192

ONBOOT=yes

IPADDR=10.104.26.194

NETMASK=255.255.255.0

GATEWAY=10.104.26.252

ZONE=public

PREFIX=24

(2) Set the hostname

# Control-plane node

[root@localhost ~]# hostnamectl set-hostname hqs-master && bash

[root@hqs-master ~]#

# Worker node 1

[root@localhost ~]# hostnamectl set-hostname hqs-node1 && bash

[root@hqs-node1 ~]#

# Worker node 2

[root@localhost ~]# hostnamectl set-hostname hqs-node2 && bash

[root@hqs-node2 ~]#

(3) Edit the hosts file

Every node should be able to reach the others by hostname. Append the following entries to /etc/hosts on each machine:

echo '10.104.26.192 hqs-master

10.104.26.193 hqs-node1

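10.104.26.194 hqs-node2' >> /etc/hosts

(4) Set up passwordless SSH between the hosts

Kubernetes itself does not need SSH between nodes, but the later steps copy files from node to node with scp, so set up passwordless login now.

Run the following on the control-plane node:

# Generate a key pair (press Enter at every prompt)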

[root@hqs-master ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:/GSg0F2vu/qUv1TqjaHDrsm4Fgj08wwmzjw2HbqBLB8 root@hqs-master

The key's randomart image is:

+---[RSA 2048]----+

| . |

| . . . . . |

| . .. . o . |

| o *. o . . |

|.= * B. S + . |

|o.E o + + o o |

|.o * . .= + |

| o .o oo* + |

| .o.===.=.. |

+----[SHA256]-----+

# Copy the locally generated public key to each node (the private key never leaves this machine)

[root@hqs-master ~]# ssh-copy-id hqs-master

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hqs-master (10.104.26.192)' can't be established.

ECDSA key fingerprint is SHA256:dPx3U0PFkordJ6nnl7V//yfM4LBJdqvn0ElacmkkHmk.

ECDSA key fingerprint is MD5:aa:87:49:8f:d8:30:5f:0c:1e:40:a5:03:16:56:53:27.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hqs-master's password: <<== enter the password here

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hqs-master'"

and check to make sure that only the key(s) you wanted were added.

[root@hqs-master ~]# ssh-copy-id hqs-node1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hqs-node1 (10.104.26.193)' can't be established.

ECDSA key fingerprint is SHA256:dPx3U0PFkordJ6nnl7V//yfM4LBJdqvn0ElacmkkHmk.

ECDSA key fingerprint is MD5:aa:87:49:8f:d8:30:5f:0c:1e:40:a5:03:16:56:53:27.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hqs-node1's password: <<== enter the password here

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hqs-node1'"

and check to make sure that only the key(s) you wanted were added.

[root@hqs-master ~]# ssh-copy-id hqs-node2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hqs-node2 (10.104.26.194)' can't be established.

ECDSA key fingerprint is SHA256:dPx3U0PFkordJ6nnl7V//yfM4LBJdqvn0ElacmkkHmk.

ECDSA key fingerprint is MD5:aa:87:49:8f:d8:30:5f:0c:1e:40:a5:03:16:56:53:27.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hqs-node2's password: <<== enter the password here

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hqs-node2'"

and check to make sure that only the key(s) you wanted were added.

Run the same commands on node1 and node2 so that their public keys are copied to the other nodes as well.

# node1

[root@hqs-node1 ~]# ssh-keygen

[root@hqs-node1 ~]# ssh-copy-id hqs-master

[root@hqs-node1 ~]# ssh-copy-id hqs-node1

[root@hqs-node1 ~]# ssh-copy-id hqs-node2

# node2

[root@hqs-node2 ~]# ssh-keygen

[root@hqs-node2 ~]# ssh-copy-id hqs-master

[root@hqs-node2 ~]# ssh-copy-id hqs-node1

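[root@hqs-node2 ~]# ssh-copy-id hqs-node2

(5) Disable the swap partition

Swap is used when the machine runs short of memory, but it is far slower than RAM. To keep performance predictable, Kubernetes by default does not allow swap to be used.

kubeadm checks for swap during initialization and reports an error if it is enabled, so turn it off. If you would rather keep swap, you can skip the check with --ignore-preflight-errors=Swap, although the kubelet must then also be configured to tolerate swap (failSwapOn: false).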
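The permanent step below edits /etc/fstab by hand on each node. As a rough sketch of how the same edit could be scripted, the function here comments out every active swap entry in an fstab-style file. The function name disable_swap_in_fstab is hypothetical, and the sketch assumes GNU sed as shipped with CentOS 7.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Usage on a real node: disable_swap_in_fstab /etc/fstab
disable_swap_in_fstab() {
    local fstab="$1"
    # Match lines that are not already commented and whose third field
    # (the filesystem type) is "swap", then prefix them with '#'.
    # sed keeps a copy of the original file next to it with a .bak suffix.
    sed -i.bak -E \
        's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
        "$fstab"
}
```

Running the function twice is harmless, because a line that already starts with # no longer matches. swapoff -a is still needed to turn swap off for the current boot.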

# Disable temporarily

[root@hqs-master ~]# swapoff -a

[root@hqs-node1 ~]# swapoff -a

[root@hqs-node2 ~]# swapoff -a

# Disable permanently: comment out the swap mount

[root@hqs-master ~]# vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

# These two machines are clones, so the UUID entry is commented out as well

[root@hqs-node1 ~]# vim /etc/fstab

#UUID=b64332af-5acd-4202-8dbe-8dc83c50bfae /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@hqs-node2 ~]# vim /etc/fstab

#UUID=b64332af-5acd-4202-8dbe-8dc83c50bfae /boot xfs defaults 0 0

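#/dev/mapper/centos-swap swap swap defaults 0 0

(6) Adjust kernel parameters

Kubernetes needs kernel parameters that enable IP forwarding and let bridged traffic pass through iptables; without them, later steps report errors.

# 1. On the master node

# Temporary: load the br_netfilter (bridge netfilter) module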

[root@hqs-master ~]# modprobe br_netfilter

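# Reload the module automatically (this appends the command to /etc/profile, so it runs whenever a login shell starts)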

[root@hqs-master ~]# echo "modprobe br_netfilter" >> /etc/profile

# k8s.conf sets the kernel parameters that enable IP forwarding and bridge filtering

[root@hqs-master ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the settings

# sysctl changes kernel parameters at runtime, including network-related ones

[root@hqs-master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

# Check a kernel parameter

[root@hqs-master ~]# sysctl net.bridge.bridge-nf-call-ip6tables

# 2. On node1

[root@hqs-node1 ~]# modprobe br_netfilter

[root@hqs-node1 ~]# echo "modprobe br_netfilter" >> /etc/profile

[root@hqs-node1 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@hqs-node1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

# 3. On node2

[root@hqs-node2 ~]# modprobe br_netfilter

[root@hqs-node2 ~]# echo "modprobe br_netfilter" >> /etc/profile

[root@hqs-node2 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@hqs-node2 ~]# sysctl -p /etc/sysctl.d/k8s.conf

These kernel parameters address the following problems:

- Problem 1: sysctl -p /etc/sysctl.d/k8s.conf fails with: sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory. The br_netfilter module is not loaded; run modprobe br_netfilter to load it.

- Problem 2: after Docker is installed, docker info prints: WARNING: bridge-nf-call-iptables is disabled. WARNING: bridge-nf-call-ip6tables is disabled. Bridged traffic is not being passed to iptables; run sysctl net.bridge.bridge-nf-call-ip6tables=1 and sysctl net.bridge.bridge-nf-call-iptables=1 to enable it.

- Problem 3: kubeadm init fails with: ERROR FileContent--proc-sys-net-ipv4-ip_forward contents are not set to 1. IP forwarding is disabled; run sysctl net.ipv4.ip_forward=1 to enable it.
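Problem 1 comes back after a reboot if br_netfilter is not loaded early enough, and a command in /etc/profile only runs when someone logs in. A possible alternative, sketched here on the assumption of a systemd-based system such as CentOS 7, is to list the module under /etc/modules-load.d so that it is loaded at boot. The function name and the file name k8s.conf under modules-load.d are my own choices, not part of the original steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the module-load and sysctl drop-in files under
# a given root directory. Pass "/" on a real node, or a scratch directory
# to preview the files without touching the system.
write_k8s_kernel_config() {
    local root="${1%/}"
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
    # systemd-modules-load reads this directory at boot and loads each listed module.
    echo "br_netfilter" > "$root/etc/modules-load.d/k8s.conf"
    # Same three parameters as in the steps above.
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real node this would be followed by modprobe br_netfilter and sysctl --system to apply the settings to the running kernel.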

(7) Disable the firewall

Either disable the firewall or open the ports that Kubernetes needs.
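For readers who prefer to keep firewalld running, the sketch below prints (but does not run) the firewall-cmd commands for each node role. The port list is an assumption based on the upstream Kubernetes "ports and protocols" documentation and should be checked against your version; Calico needs further ports (for example TCP 179 for BGP) that are not covered here. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the firewall-cmd commands for a node role.
# Role is "master" or "worker". Nothing is executed; review the output first.
k8s_firewall_commands() {
    local role="$1" ports port
    case "$role" in
        # API server, etcd client/peer, kubelet API (assumed port list)
        master) ports="6443/tcp 2379-2380/tcp 10250/tcp" ;;
        # kubelet API, NodePort Service range (assumed port list)
        worker) ports="10250/tcp 30000-32767/tcp" ;;
        *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    for port in $ports; do
        echo "firewall-cmd --permanent --add-port=$port"
    done
    echo "firewall-cmd --reload"
}
```

For example, k8s_firewall_commands worker prints two --add-port lines followed by the reload command; pipe the output to sh once it looks right.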

[root@hqs-master ~]# systemctl stop firewalld && systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@hqs-node1 ~]# systemctl stop firewalld && systemctl disable firewalld

[root@hqs-node2 ~]# systemctl stop firewalld && systemctl disable firewalld

(8) Disable SELinux

Either disable SELinux or set it to permissive mode.
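The sed command used below only matches a file that currently says SELINUX=enforcing. A slightly more general sketch, which sets any of the three modes regardless of the current value, could look like this. The function name set_selinux_mode is hypothetical and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the SELINUX= line in an SELinux config file.
# Usage: set_selinux_mode permissive            (edits /etc/selinux/config)
#        set_selinux_mode disabled /path/to/config
set_selinux_mode() {
    local mode="$1" config="${2:-/etc/selinux/config}"
    case "$mode" in
        enforcing|permissive|disabled) ;;
        *) echo "invalid mode: $mode" >&2; return 1 ;;
    esac
    # Only the SELINUX= line is rewritten; SELINUXTYPE= is left untouched
    # because the pattern requires '=' directly after "SELINUX".
    sed -i -E "s/^SELINUX=.*/SELINUX=$mode/" "$config"
}
```

Switching to permissive can also be applied to the running system with setenforce 0, whereas disabled only takes effect after a reboot.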

# Edit the configuration file

[root@hqs-master ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@hqs-node1 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@hqs-node2 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# After changing the SELinux configuration, reboot so that the change takes effect permanently

[root@hqs-master ~]# reboot

[root@hqs-node1 ~]# reboot

[root@hqs-node2 ~]# reboot

# Check the SELinux status

[root@hqs-master ~]# getenforce

Disabled

(9) Configure the Aliyun yum mirror

Configure the Aliyun yum mirror, or use another mirror.

# Remove the existing repo files

[root@hqs-master ~]# rm -rf /etc/yum.repos.d/*.repo

[root@hqs-node1 ~]# rm -rf /etc/yum.repos.d/*.repo

[root@hqs-node2 ~]# rm -rf /etc/yum.repos.d/*.repo

# Download the Aliyun repo file

[root@hqs-master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# If wget is not installed, upload CentOS-Base.repo to /etc/yum.repos.d/ instead

[root@hqs-master yum.repos.d]# scp CentOS-Base.repo root@hqs-node1:/etc/yum.repos.d/

CentOS-Base.repo 100% 2523 253.5KB/s 00:00

[root@hqs-master yum.repos.d]# scp CentOS-Base.repo root@hqs-node2:/etc/yum.repos.d/

CentOS-Base.repo 100% 2523 253.5KB/s 00:00

# Clear and rebuild the cache

[root@hqs-master ~]# yum clean all && yum makecache

[root@hqs-node1 ~]# yum clean all && yum makecache

[root@hqs-node2 ~]# yum clean all && yum makecache

# Install lrzsz, scp (provided by the openssh-clients package), vim, wget, net-tools, and yum-utils

[root@hqs-master ~]# yum install -y lrzsz openssh-clients vim wget net-tools yum-utils

[root@hqs-node1 ~]# yum install -y lrzsz openssh-clients vim wget net-tools yum-utils

[root@hqs-node2 ~]# yum install -y lrzsz openssh-clients vim wget net-tools yum-utils

# Add a domestic (Aliyun) docker-ce repo

[root@hqs-master ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Loaded plugins: fastestmirror

adding repo from: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

grabbing file http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo

repo saved to /etc/yum.repos.d/docker-ce.repo

[root@hqs-master ~]# ls /etc/yum.repos.d/

CentOS-Base.repo docker-ce.repo

[root@hqs-node1 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@hqs-node2 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Configure the EPEL repo

# Download the EPEL repo file

[root@hqs-master ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

--2023-06-21 13:47:03-- http://mirrors.aliyun.com/repo/epel-7.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 119.96.33.219, 182.40.59.176, 182.40.41.199, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|119.96.33.219|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 664 [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/epel.repo’

100%[=======================================================================================================>] 664 --.-K/s in 0s

2023-06-21 13:47:03 (147 MB/s) - ‘/etc/yum.repos.d/epel.repo’ saved [664/664]

[root@hqs-master ~]# scp /etc/yum.repos.d/epel.repo hqs-node1:/etc/yum.repos.d/

epel.repo 100% 664 1.0MB/s 00:00

[root@hqs-master ~]# scp /etc/yum.repos.d/epel.repo hqs-node2:/etc/yum.repos.d/

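epel.repo 100% 664 1.2MB/s 00:00

(10) Configure the yum repo for the Kubernetes packages

Configure the yum repo that provides the Kubernetes packages, or use another mirror.

# Write the kubernetes.repo file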

[root@hqs-master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

EOF

[root@hqs-master ~]# ls /etc/yum.repos.d/

CentOS-Base.repo docker-ce.repo epel.repo kubernetes.repo

# Distribute kubernetes.repo to the other nodes

[root@hqs-master ~]# scp /etc/yum.repos.d/kubernetes.repo hqs-node1:/etc/yum.repos.d/

kubernetes.repo 100% 129 145.5KB/s 00:00

[root@hqs-master ~]# scp /etc/yum.repos.d/kubernetes.repo hqs-node2:/etc/yum.repos.d/

kubernetes.repo 100% 129 237.0KB/s 00:00

(11) Configure time synchronization

Use ntpdate to synchronize the clocks.

# Install ntpdate

[root@hqs-master ~]# yum install -y ntpdate

[root@hqs-node1 ~]# yum install -y ntpdate

[root@hqs-node2 ~]# yum install -y ntpdate

# Synchronize against an NTP server

[root@hqs-master ~]# ntpdate cn.pool.ntp.org

[root@hqs-node1 ~]# ntpdate cn.pool.ntp.org

[root@hqs-node2 ~]# ntpdate cn.pool.ntp.org

# Add the synchronization command to crontab (runs daily at 02:00)

[root@hqs-master ~]# crontab -e

0 2 * * * /usr/sbin/ntpdate cn.pool.ntp.org

[root@hqs-node1 ~]# crontab -e

0 2 * * * /usr/sbin/ntpdate cn.pool.ntp.org

[root@hqs-node2 ~]# crontab -e

0 2 * * * /usr/sbin/ntpdate cn.pool.ntp.org

# Restart the cron service

[root@hqs-master ~]# systemctl restart crond

[root@hqs-node1 ~]# systemctl restart crond

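[root@hqs-node2 ~]# systemctl restart crond

(12) Enable IPVS

IPVS must be enabled on every node.

IPVS (IP Virtual Server) implements transport-layer (layer 4) load balancing and is a high-performance, scalable load-balancing solution.

- It is part of the Linux kernel and performs the load balancing itself.

- It is configured with the ipvsadm tool.

- It forwards TCP- and UDP-based service requests to the real servers.

Differences between IPVS and iptables as kube-proxy backends:

- Both are built on netfilter in the kernel. IPVS is purpose-built for load balancing, while iptables is a general packet-filtering and NAT tool.

- IPVS stores its rules in hash tables, so lookups stay fast as the number of Services grows; iptables evaluates rules sequentially, which becomes slow in large clusters. IPVS therefore suits large clusters, while iptables is adequate for small ones.

- IPVS supports more load-balancing algorithms (round robin, least connections, source hashing, and others); in iptables mode kube-proxy selects a backend at random.

- According to the Kubernetes IPVS documentation, IPVS also supports server health checking and connection retries, which iptables does not.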
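After the modules are loaded in the steps below, it can be handy to confirm that nothing is missing. This sketch reads lsmod output on standard input and prints the expected modules that are absent. The function name and the list of required modules are assumptions; on older kernels such as the stock CentOS 7 kernel, the connection-tracking module may be named nf_conntrack_ipv4 rather than nf_conntrack.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod` output on stdin and print every module
# from the (assumed) required list that is not loaded.
# Usage: lsmod | missing_ipvs_modules
missing_ipvs_modules() {
    local required="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"
    local loaded module
    # Skip the header line of lsmod and keep only the module-name column.
    loaded="$(awk 'NR > 1 { print $1 }')"
    for module in $required; do
        # -x matches whole lines, so "ip_vs" does not match "ip_vs_rr".
        if ! printf '%s\n' "$loaded" | grep -qx "$module"; then
            echo "$module"
        fi
    done
}
```

An empty result means every module in the list is loaded. Note that the input must be the full lsmod output including its header line, not the output of lsmod | grep ip_vs.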

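# Prepare the ipvs.modules file
# The heredoc delimiter is quoted ('EOF') so that ${...} and $? are written to the file literally instead of being expanded by the current shell
[root@hqs-master ~]# cat <<'EOF' > /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
    # Load the module only if modinfo can find it for the running kernel
    /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
    if [ $? -eq 0 ]; then
        /sbin/modprobe ${kernel_module}
    fi
done
EOF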

# Make ipvs.modules executable, run it, and check the loaded modules

[root@hqs-master ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

# Distribute ipvs.modules to the other nodes

[root@hqs-master ~]# scp /etc/sysconfig/modules/ipvs.modules hqs-node1:/etc/sysconfig/modules/

ipvs.modules 100% 164 188.5KB/s 00:00

[root@hqs-master ~]# scp /etc/sysconfig/modules/ipvs.modules hqs-node2:/etc/sysconfig/modules/

ipvs.modules 100% 164 188.5KB/s 00:00

# On the worker nodes, make ipvs.modules executable and run it

[root@hqs-node1 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

[root@hqs-node2 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

(13) Install base packages

[root@hqs-master ~]# yum install -y device-mapper-persistent-data lvm2 net-tools conntrack-tools wget nfs-utils telnet gcc gcc-c++ make cmake libxml2-devel openssl-devel curl-devel unzip sudo ntp libaio-devel ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

[root@hqs-node1 ~]# yum install -y device-mapper-persistent-data lvm2 net-tools conntrack-tools wget nfs-utils telnet gcc gcc-c++ make cmake libxml2-devel openssl-devel curl-devel unzip sudo ntp libaio-devel ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

[root@hqs-node2 ~]# yum install -y device-mapper-persistent-data lvm2 net-tools conntrack-tools wget nfs-utils telnet gcc gcc-c++ make cmake libxml2-devel openssl-devel curl-devel unzip sudo ntp libaio-devel ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

(14) Install iptables

iptables is the firewall tool commonly used on Linux for setting up, maintaining, and inspecting packet-filter rules and NAT tables.

Run the following on all nodes:

# Install iptables

yum install -y iptables-services

# Stop and disable the iptables service

systemctl stop iptables && systemctl disable iptables

# Flush the firewall rules

iptables -F

3. Install Docker

(1) Install docker-ce

# Install docker-ce and containerd

[root@hqs-master ~]# yum install -y docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io

[root@hqs-node1 ~]# yum install -y docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io

[root@hqs-node2 ~]# yum install -y docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io

# Enable Docker at boot and start it

[root@hqs-master ~]# systemctl enable docker && systemctl start docker

[root@hqs-node1 ~]# systemctl enable docker && systemctl start docker

[root@hqs-node2 ~]# systemctl enable docker && systemctl start docker

(2) Configure Docker registry mirrors

# Create the configuration directory

[root@hqs-master ~]# mkdir -p /etc/docker

[root@hqs-node1 ~]# mkdir -p /etc/docker

[root@hqs-node2 ~]# mkdir -p /etc/docker

# Create daemon.json

[root@hqs-master ~]# cat <<EOF > /etc/docker/daemon.json

{

"registry-mirrors": ["https://rsbud4vc.mirror.aliyuncs.com", "https://docker.mirrors.ustc.edu.cn", "https://registry.docker-cn.com", "http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

# Distribute daemon.json to the other nodes

[root@hqs-master ~]# scp /etc/docker/daemon.json hqs-node1:/etc/docker/

daemon.json 100% 100 101.6KB/s 00:00

[root@hqs-master ~]# scp /etc/docker/daemon.json hqs-node2:/etc/docker/

daemon.json 100% 100 101.6KB/s 00:00

# Reload the configuration and restart Docker

[root@hqs-master ~]# systemctl daemon-reload && systemctl restart docker

[root@hqs-node1 ~]# systemctl daemon-reload && systemctl restart docker

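[root@hqs-node2 ~]# systemctl daemon-reload && systemctl restart docker

II. Deploying the Kubernetes Cluster

1. Install the packages needed to initialize Kubernetes

kubeadm: the command that bootstraps the cluster

kubelet: runs on every node in the cluster and is responsible for starting Pods and containers

kubectl: the command-line tool for communicating with the cluster

# Install kubeadm, kubelet, and kubectl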

[root@hqs-master ~]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

[root@hqs-node1 ~]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

[root@hqs-node2 ~]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

# Enable kubelet at boot

[root@hqs-master ~]# systemctl enable kubelet

[root@hqs-node1 ~]# systemctl enable kubelet

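[root@hqs-node2 ~]# systemctl enable kubelet

2. Initialize the cluster with kubeadm

(1) Upload and load the offline image bundle

Upload the offline image bundle needed to initialize the cluster to every machine.

# Upload the offline image bundle k8simage-1-20-6.tar.gz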

[root@hqs-master ~]# ls

anaconda-ks.cfg k8simage-1-20-6.tar.gz

# Distribute the offline image bundle to the other nodes

[root@hqs-master ~]# scp k8simage-1-20-6.tar.gz hqs-node1:/root/

k8simage-1-20-6.tar.gz 100% 1033MB 103.9MB/s 00:09

[root@hqs-master ~]# scp k8simage-1-20-6.tar.gz hqs-node2:/root/

k8simage-1-20-6.tar.gz 100% 1033MB 103.3MB/s 00:10

# Load the offline images

[root@hqs-master ~]# docker load -i k8simage-1-20-6.tar.gz

[root@hqs-node1 ~]# docker load -i k8simage-1-20-6.tar.gz

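[root@hqs-node2 ~]# docker load -i k8simage-1-20-6.tar.gz

(2) Initialize the cluster with kubeadm

# --kubernetes-version sets the Kubernetes version

# --apiserver-advertise-address sets the address the API server advertises

# --image-repository sets the registry to pull control-plane images from

# --pod-network-cidr sets the Pod network range

# --service-cidr sets the Service (ClusterIP) network range

# --ignore-preflight-errors skips the named preflight checks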

[root@hqs-master ~]# kubeadm init --kubernetes-version=v1.20.6 \

--apiserver-advertise-address=10.104.26.192 \

--image-repository registry.aliyuncs.com/google_containers \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.96.0.0/16 \

--ignore-preflight-errors=SystemVerification

The output looks like this:

... (omitted)

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.104.26.192:6443 --token h9i86i.nr1w2agt9siqxrmb \

--discovery-token-ca-cert-hash sha256:6be0821689ac5a20d875f3d71d88a0785f1b91f4e97964717cd1fcc60201fb02

(3) Set up the kubectl config file

This step initializes the kubectl command-line tool so that it can communicate with the Kubernetes cluster.

[root@hqs-master ~]# mkdir -p $HOME/.kube && sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Check the cluster status

[root@hqs-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hqs-master NotReady control-plane,master 5m41s v1.20.6

# The status is NotReady because no network plugin has been installed yet

# Generate a configuration file and edit it

[root@hqs-master ~]# kubeadm config print init-defaults > a.yaml

[root@hqs-master ~]# ls

anaconda-ks.cfg a.yaml k8simage-1-20-6.tar.gz

[root@hqs-master ~]# vi a.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.104.26.192
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: hqs-master
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.20.6
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

# Edit the containerd configuration file (set the two values shown below)

[root@hqs-master ~]# containerd config default > /etc/containerd/config.toml

[root@hqs-master ~]# vi /etc/containerd/config.toml

SystemdCgroup = true

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7"

# Copy the configuration file to the worker nodes

[root@hqs-master ~]# scp /etc/containerd/config.toml hqs-node1:/etc/containerd/

[root@hqs-master ~]# scp /etc/containerd/config.toml hqs-node2:/etc/containerd/

# Restart the container runtime

[root@hqs-master ~]# systemctl restart containerd

[root@hqs-node1 ~]# systemctl restart containerd

[root@hqs-node2 ~]# systemctl restart containerd

# Re-initialize the control-plane node (if it was already initialized above, run kubeadm reset first)

[root@hqs-master ~]# kubeadm init --config a.yaml

[root@hqs-master ~]# mkdir -p $HOME/.kube && sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && sudo chown $(id -u):$(id -g) $HOME/.kube/config

3. Scale out the cluster: add the worker nodes

[root@hqs-node1 ~]# systemctl restart containerd

[root@hqs-node2 ~]# systemctl restart containerd

[root@hqs-node1 ~]# kubeadm join 10.104.26.192:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:83f812e02f4bd7688a90bdb79a5873689c6e9b573610c51ba12f63ac45a4e90c --ignore-preflight-errors=SystemVerification,"ERROR SystemVerification"

[root@hqs-node2 ~]# kubeadm join 10.104.26.192:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:83f812e02f4bd7688a90bdb79a5873689c6e9b573610c51ba12f63ac45a4e90c --ignore-preflight-errors=SystemVerification,"ERROR SystemVerification"

# Check the cluster status

[root@hqs-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hqs-master NotReady control-plane,master 15m v1.20.6

hqs-node1 NotReady <none> 84s v1.20.6

hqs-node2 NotReady <none> 63s v1.20.6

# ROLES is <none>, which means the node is a worker node

# STATUS is NotReady because the network plugin has not been installed yet

# Label both worker nodes so that ROLES shows worker

[root@hqs-master ~]# kubectl label node hqs-node1 node-role.kubernetes.io/worker=worker

node/hqs-node1 labeled

[root@hqs-master ~]# kubectl label node hqs-node2 node-role.kubernetes.io/worker=worker

node/hqs-node2 labeled

4. Install the network plugin: Calico

Upload calico.yaml (https://github.com/hqs2212586/docker-study/blob/main/calico.yaml) to the master node and install the Calico network plugin from that YAML file.

[root@hqs-master ~]# ls

anaconda-ks.cfg a.yaml calico.yaml

# Install Calico from calico.yaml

[root@hqs-master ~]# kubectl apply -f calico.yaml

# Check the pod status

[root@hqs-master ~]# kubectl get pod -n kube-system

# Check the node status

[root@hqs-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hqs-master Ready control-plane,master 21d v1.20.6

hqs-node1 Ready worker 21d v1.20.6

hqs-node2 Ready worker 21d v1.20.6
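Nodes usually take a short while to turn Ready after Calico is applied. As a small sketch, the filter below reads the kubectl get nodes table shown above on standard input and prints the names of nodes whose STATUS column is not exactly Ready. The function name not_ready_nodes is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# name of every node whose STATUS is not exactly "Ready".
# A status such as "Ready,SchedulingDisabled" is also reported.
# Usage: kubectl get nodes | not_ready_nodes
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

An empty result means every listed node reports Ready.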

# Check the pod status

[root@hqs-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6949477b58-hv6gn 1/1 Running 0 50s

calico-node-29wjw 1/1 Running 0 50s

calico-node-j72mc 1/1 Running 0 50s

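calico-node-klmjw 1/1 Running 0 50s

(1) Test that a Pod created in the cluster can reach the network

Upload busybox-1-28.tar.gz to hqs-node1 and hqs-node2, load the image, then create a Pod and test whether it can reach the network.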

[root@hqs-master ~]# ls

anaconda-ks.cfg a.yaml busybox-1-28.tar.gz

# Copy busybox-1-28.tar.gz to node1 and node2

[root@hqs-master ~]# scp busybox-1-28.tar.gz hqs-node1:/root/

[root@hqs-master ~]# scp busybox-1-28.tar.gz hqs-node2:/root/

# Load the busybox image from busybox-1-28.tar.gz

[root@hqs-node1 ~]# docker load -i busybox-1-28.tar.gz

432b65032b94: Loading layer [==================================================>] 1.36MB/1.36MB

Loaded image: busybox:1.28

[root@hqs-node2 ~]# docker load -i busybox-1-28.tar.gz

432b65032b94: Loading layer [==================================================>] 1.36MB/1.36MB

Loaded image: busybox:1.28

# Create the pod

[root@hqs-master ~]# kubectl run busybox --image=busybox:1.28 --restart=Never --rm -it busybox -- sh

If you don't see a command prompt, try pressing enter.

/ # ping www.baidu.com

PING www.baidu.com (14.119.104.254): 56 data bytes

64 bytes from 14.119.104.254: seq=0 ttl=52 time=24.328 ms

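64 bytes from 14.119.104.254: seq=1 ttl=52 time=23.159 ms

The test above shows that a newly created Pod can reach the network.

(2) Test deploying a Tomcat service on the cluster

Upload tomcat.tar.gz to hqs-node1 and hqs-node2, load the image, then create a Pod and test whether the Tomcat home page is reachable.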

[root@hqs-master ~]# scp tomcat.tar.gz hqs-node1:/root/

tomcat.tar.gz 100% 105MB 105.1MB/s 00:01

[root@hqs-master ~]# scp tomcat.tar.gz hqs-node2:/root/

tomcat.tar.gz 100% 105MB 86.1MB/s 00:01

# Load the tomcat image from tomcat.tar.gz

[root@hqs-node1 ~]# docker load -i tomcat.tar.gz

Loaded image: tomcat:8.5.61

[root@hqs-node2 ~]# docker load -i tomcat.tar.gz

Loaded image: tomcat:8.5.61

# Write tomcat.yaml
[root@hqs-master ~]# cat tomcat.yaml
apiVersion: v1  # Pod belongs to the core API group, version v1
kind: Pod  # the resource being created is a Pod
metadata:  # metadata
  name: demo-pod  # Pod name
  namespace: default  # namespace the Pod belongs to
  labels:
    app: myapp  # label on the Pod
    env: dev  # label on the Pod
spec:
  containers:  # list of containers; it may hold several entries, each with its own name
  - name: tomcat-pod-java  # container name
    ports:
    - containerPort: 8080
    image: tomcat:8.5-jre8-alpine  # image used by the container
    imagePullPolicy: IfNotPresent

# Create the pod

[root@hqs-master ~]# kubectl apply -f tomcat.yaml

pod/demo-pod created

# Write tomcat-service.yaml
[root@hqs-master ~]# cat tomcat-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: tomcat
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30080
  selector:
    app: myapp
    env: dev

# Create the service

[root@hqs-master ~]# kubectl apply -f tomcat-service.yaml

service/tomcat created

# Check the service status

[root@hqs-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21d

tomcat NodePort 10.106.166.173 <none> 8080:30080/TCP 18s

# Open http://10.104.26.192:30080/ in a browser; the Tomcat home page should load

(3) Test that CoreDNS resolves names correctly

# Create the pod

[root@hqs-master ~]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh

If you don't see a command prompt, try pressing enter.

# Run nslookup inside the pod

/ # nslookup kubernetes.default.svc.cluster.local

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default.svc.cluster.local

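Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

10.96.0.10 is the ClusterIP of CoreDNS, which shows that CoreDNS is resolving names correctly.

Note: use busybox 1.28 specifically rather than the latest image; the nslookup command in newer busybox images has trouble resolving names inside the cluster.

5. Install the Kubernetes Dashboard web UI

(1) Prepare the YAML file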

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta8
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.1
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume

          emptyDir: {}

(2) Install Dashboard

Upload the images required by kubernetes-dashboard to the worker nodes, then install kubernetes-dashboard from the YAML file.

# Upload dashboard_2_0_0.tar.gz and metrics-scrapter-1-0-1.tar.gz to the hqs-node1 and hqs-node2 nodes

[root@hqs-master ~]# scp dashboard_2_0_0.tar.gz hqs-node1:/root/

dashboard_2_0_0.tar.gz 100% 87MB 87.0MB/s 00:01

[root@hqs-master ~]# scp dashboard_2_0_0.tar.gz hqs-node2:/root/

dashboard_2_0_0.tar.gz 100% 87MB 77.5MB/s 00:01

[root@hqs-master ~]# scp metrics-scrapter-1-0-1.tar.gz hqs-node1:/root/

metrics-scrapter-1-0-1.tar.gz 100% 38MB 96.2MB/s 00:00

[root@hqs-master ~]# scp metrics-scrapter-1-0-1.tar.gz hqs-node2:/root/

metrics-scrapter-1-0-1.tar.gz 100% 38MB 71.5MB/s 00:00

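# Load the images from dashboard_2_0_0.tar.gz and metrics-scrapter-1-0-1.tar.gz on each worker node (docker load imports the archives directly; no separate extraction step is needed)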

[root@hqs-node1 ~]# docker load -i dashboard_2_0_0.tar.gz

954115f32d73: Loading layer [==================================================>] 91.22MB/91.22MB

Loaded image: kubernetesui/dashboard:v2.0.0-beta8

[root@hqs-node1 ~]# docker load -i metrics-scrapter-1-0-1.tar.gz

89ac18ee460b: Loading layer [==================================================>] 238.6kB/238.6kB

878c5d3194b0: Loading layer [==================================================>] 39.87MB/39.87MB

1dc71700363a: Loading layer [==================================================>] 2.048kB/2.048kB

Loaded image: kubernetesui/metrics-scraper:v1.0.1

[root@hqs-node2 ~]# docker load -i dashboard_2_0_0.tar.gz

954115f32d73: Loading layer [==================================================>] 91.22MB/91.22MB

Loaded image: kubernetesui/dashboard:v2.0.0-beta8


[root@hqs-node2 ~]# docker load -i metrics-scrapter-1-0-1.tar.gz

89ac18ee460b: Loading layer [==================================================>] 238.6kB/238.6kB

878c5d3194b0: Loading layer [==================================================>] 39.87MB/39.87MB

1dc71700363a: Loading layer [==================================================>] 2.048kB/2.048kB

Loaded image: kubernetesui/metrics-scraper:v1.0.1
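The copy-and-load steps above repeat the same two commands for every node and archive, so they can be sketched as a loop. This is a minimal sketch rather than the author's procedure: it assumes passwordless SSH as root from hqs-master to both workers, and `DRY_RUN`, `run` and `distribute_images` are hypothetical names introduced here. By default the script only prints the commands; setting `DRY_RUN=0` would execute them.

```shell
#!/usr/bin/env bash
# Sketch: copy both image archives to each worker node and load them there.
# Assumption: root can SSH from hqs-master to the workers without a password.
NODES="hqs-node1 hqs-node2"
ARCHIVES="dashboard_2_0_0.tar.gz metrics-scrapter-1-0-1.tar.gz"

run() {
  # DRY_RUN is a hypothetical switch: 1 (default) prints the command,
  # any other value executes it.
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_images() {
  for node in $NODES; do
    for archive in $ARCHIVES; do
      run scp "$archive" "$node:/root/"
      run ssh "$node" docker load -i "/root/$archive"
    done
  done
}

distribute_images
```

Reviewing the printed commands first makes it easy to confirm the node names and archive file names before anything is copied.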

# Install kubernetes-dashboard from the YAML file

[root@hqs-master ~]# kubectl apply -f kubernetes-dashboard.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

# Check the status of the dashboard pods

[root@hqs-master ~]# kubectl get pods -n kubernetes-dashboard

NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-7445d59dfd-scvhp   1/1     Running   0          20s
kubernetes-dashboard-54f5b6dc4b-7t2pt        1/1     Running   0          20s
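When this check is scripted instead of read by eye, the STATUS column can be tested automatically. The sketch below is an illustration only: `all_running` is a hypothetical helper, and it inspects just the third column of `kubectl get pods` output (it does not look at READY). The sample rows are copied from the output above.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if every pod row read from stdin has STATUS "Running".
all_running() {
  # Skip the header line; fail on any non-Running row or when no rows exist.
  awk 'NR > 1 { rows++; if ($3 != "Running") bad = 1 }
       END { if (bad || rows == 0) exit 1 }'
}

# Sample input taken from the transcript; in practice pipe in
#   kubectl get pods -n kubernetes-dashboard
all_running <<'EOF' && echo "all pods Running"
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-7445d59dfd-scvhp   1/1     Running   0          20s
kubernetes-dashboard-54f5b6dc4b-7t2pt        1/1     Running   0          20s
EOF
```

On a live cluster, `kubectl wait --for=condition=Ready pod --all -n kubernetes-dashboard --timeout=120s` is another option that blocks until the pods are ready.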

# Check the status of the dashboard services

[root@hqs-master ~]# kubectl get svc -n kubernetes-dashboard

NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
dashboard-metrics-scraper   ClusterIP   10.109.232.116   <none>        8000/TCP   93s
kubernetes-dashboard        ClusterIP   10.111.213.40    <none>        443/TCP    93s

# Change the service type to NodePort

[root@hqs-master ~]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kubernetes-dashboard"},"spec":{"ports":[{"port":443,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}
  creationTimestamp: "2023-07-22T03:28:25Z"
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  resourceVersion: "1689092"
  uid: c3d8ef62-3f73-435d-8f63-8b0cff037b6f
spec:
  clusterIP: 10.111.213.40
  clusterIPs:
  - 10.111.213.40
  ports:
  - port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort    # <-- change this value from ClusterIP to NodePort
status:
  loadBalancer: {}
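The same change can also be made without opening an editor, using `kubectl patch`. The sketch below only builds and prints the command so it can be reviewed first; `patch_cmd` and the variable names are hypothetical helpers introduced here, not part of the original walkthrough.

```shell
#!/usr/bin/env bash
# Sketch: switch the Service type to NodePort non-interactively.
NAMESPACE="kubernetes-dashboard"
SERVICE="kubernetes-dashboard"
PATCH='{"spec":{"type":"NodePort"}}'

patch_cmd() {
  # Emit the kubectl command on one line so it can be inspected before use.
  printf "kubectl patch svc %s -n %s -p '%s'\n" "$SERVICE" "$NAMESPACE" "$PATCH"
}

patch_cmd
# To apply it on the control-plane node: eval "$(patch_cmd)"
```

A patch like this is easier to repeat across environments than an interactive `kubectl edit` session, at the cost of not showing the full object while changing it.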

# 查看 dashboard service 状态

# 确认 service type 类型变成 NodePort

[root@hqs-master ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.109.232.116 <none> 8000/TCP 6m6s

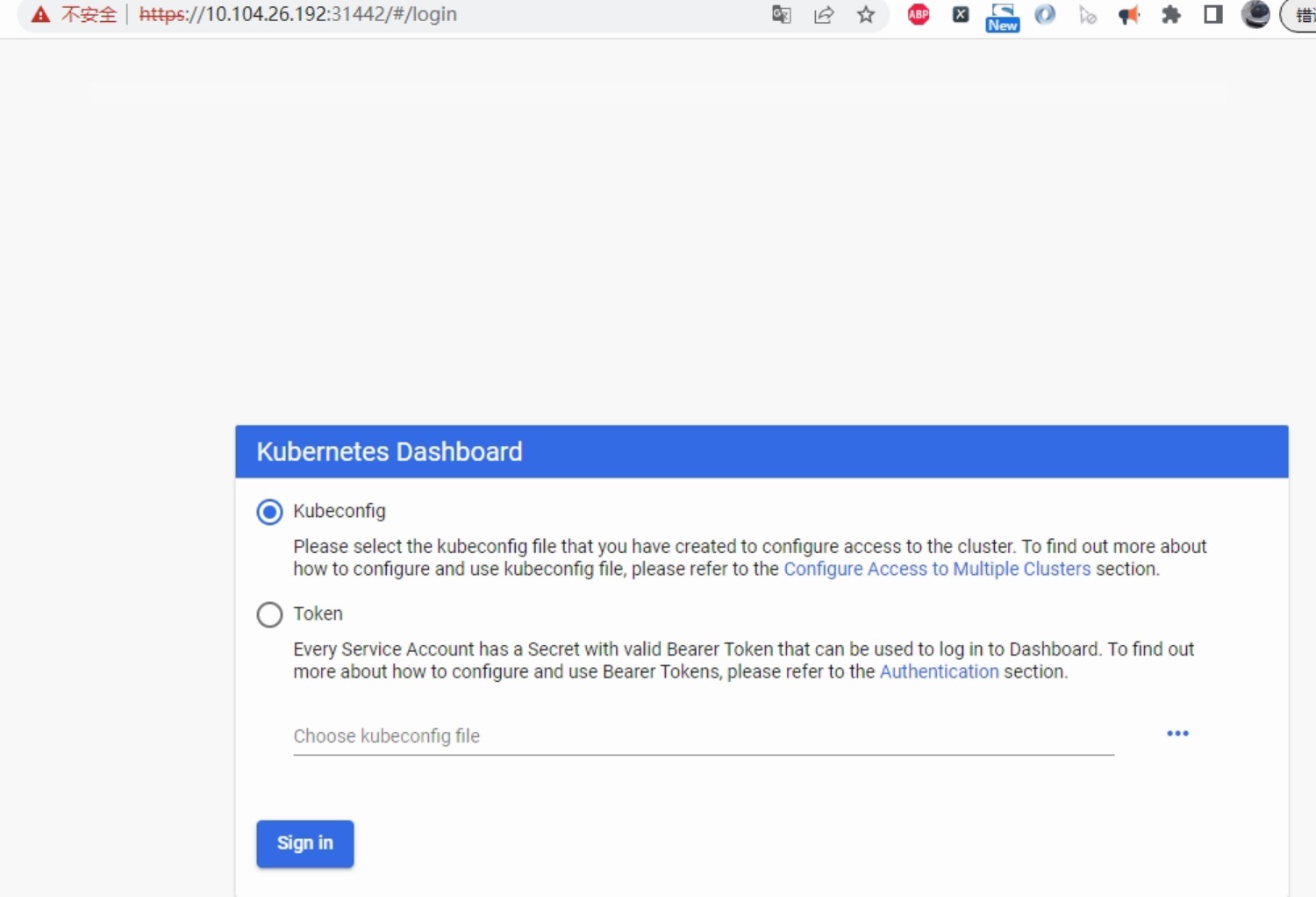

kubernetes-dashboard NodePort 10.111.213.40 <none> 443:32077/TCP 6m6s上面结果可知 service 类型是 NodePort,访问任何一个工作节点IP:32077,即可访问到 kubernetes-dashboard 界面。
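The node port is assigned by the cluster, so it will usually differ from 32077 on another installation. As a minimal sketch, the port can be extracted from the PORT(S) value and turned into a URL; `node_port_from_ports` and `NODE_IP` are hypothetical names used for illustration, and the IP is the control-plane address from this walkthrough.

```shell
#!/usr/bin/env bash
# Sketch: derive the Dashboard URL from a PORT(S) value such as "443:32077/TCP".
node_port_from_ports() {
  local ports="$1"
  ports="${ports#*:}"    # drop the service port and colon ("443:")
  echo "${ports%%/*}"    # drop the protocol suffix ("/TCP")
}

NODE_IP="10.104.26.192"
PORT="$(node_port_from_ports "443:32077/TCP")"
echo "https://${NODE_IP}:${PORT}/"
```

On a live cluster the port can be read directly with `kubectl get svc kubernetes-dashboard -n kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'` instead of parsing the table.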

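Opening https://10.104.26.192:32077/ in the browser first shows an error page, most likely a certificate warning, because the Dashboard is started with --auto-generate-certificates and serves a self-signed certificate. Click any blank area of that page and type thisisunsafe on the keyboard to bypass the warning and reach the kubernetes-dashboard UI.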

