假设 X∈Rn×mX \in \mathbb{R}^{n\times m}X∈Rn×m 为瘦高矩阵,n>mn > mn>m

rSVD 算法流程如下:

# Define randomized SVD function

def rSVD(X,r,q=0,p=0):

# Step 1: Sample column space of X with P matrix

ny = X.shape[1]

P = np.random.randn(ny,r+p)

Z = X @ P

for k in range(q):

Z = X @ (X.T @ Z)

Q, R = np.linalg.qr(Z,mode='reduced')

# Step 2: Compute SVD on projected Y = Q.T @ X

Y = Q.T @ X

UY, S, VT = np.linalg.svd(Y,full_matrices=0)

U = Q @ UY

return U, S, VT

代码详解

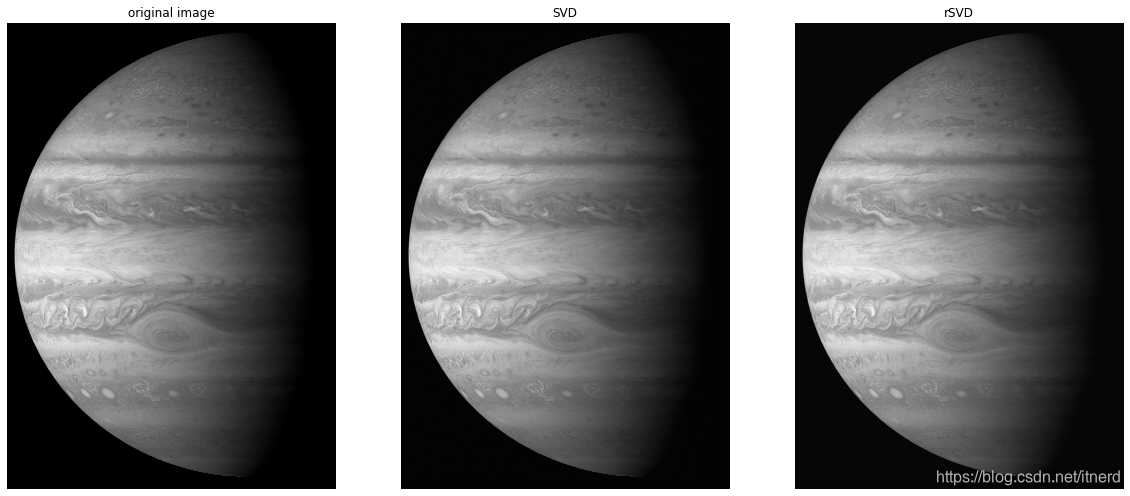

案例

原始图片,大小: (3207, 2260)

A = imread(os.path.join('..','DATA','jupiter.jpg'))

X = np.mean(A,axis=2) # Convert RGB -> grayscale

U, S, VT = np.linalg.svd(X,full_matrices=0) # Deterministic SVD, [time spent: 8.07s]

r = 400 # Target rank

q = 1 # Power iterations

p = 5 # Oversampling parameter

rU, rS, rVT = rSVD(X,r,q,p) # [time spent: 0.46s]

SVD 耗时 8.07 s

RSVD 耗时 0.46s,很快啊

## Reconstruction

XSVD = U[:,:(r+1)] @ np.diag(S[:(r+1)]) @ VT[:(r+1),:] # SVD approximation

errSVD = np.linalg.norm(X-XSVD,ord=2) / np.linalg.norm(X,ord=2)

XrSVD = rU[:,:(r+1)] @ np.diag(rS[:(r+1)]) @ rVT[:(r+1),:] # SVD approximation

errSVD = np.linalg.norm(X-XrSVD,ord=2) / np.linalg.norm(X,ord=2)

## Plot

fig, axs = plt.subplots(1,3,figsize=(20,10))

plt.set_cmap('gray')

axs[0].imshow(X)

axs[0].axis('off')

axs[0].set_title('original image')

axs[1].imshow(XSVD)

axs[1].axis('off')

axs[1].set_title('SVD')

axs[2].imshow(XrSVD)

axs[2].axis('off')

axs[2].set_title('rSVD')

plt.show()

参考文献

- http://databookuw.com/

- 《Data-Driven Science and Engineering —— Machine Learning, Dynamical Systems, and Control》

博客围绕RSVD和SVD算法展开,给出rSVD算法流程,进行代码详解和案例分析。以原始图片为例,对比了SVD和RSVD的耗时,SVD耗时8.07s,而RSVD仅耗时0.46s,体现出RSVD速度优势。还列出了参考文献。

博客围绕RSVD和SVD算法展开,给出rSVD算法流程,进行代码详解和案例分析。以原始图片为例,对比了SVD和RSVD的耗时,SVD耗时8.07s,而RSVD仅耗时0.46s,体现出RSVD速度优势。还列出了参考文献。

567

567

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?