Kaggle competition, Dogs vs. Cats classification: https://www.kaggle.com/c/dogs-vs-cats/

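- This post shows how CNN tricks such as Dropout, data augmentation, pretraining, ensembles and multi-task learning can improve a convolutional neural network's ability to generalize.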

CNN

0. Download and extract the cat/dog image dataset

- Dataset download: https://www.kaggle.com/c/dogs-vs-cats/data

- After downloading, extract it into the corresponding folder.

1. Load and preprocess the cat/dog image dataset

1.1 Load the dataset (split into a cat folder and a dog folder)

```python
from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255
train_datagen = ImageDataGenerator(rescale=1./255)
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    # This is the target directory (here I use Google Colab)
    '/content/drive/MyDrive/dogs-vs-cats/train',
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=20,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')
```

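The generator finds all 25,000 cat and dog images but reports only 1 class, which is wrong. flow_from_directory treats each subdirectory of the target directory as one class, so the images first have to be sorted into one subfolder per class.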

Found 25000 images belonging to 1 classes.

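```shell
cd /content/drive/MyDrive/dogs-vs-cats/train
mkdir -p dog cat     # cp with many source files needs an existing target directory
cp dog.*.jpg dog/
cp cat.*.jpg cat/
```

These commands copy each image whose filename starts with dog or cat into the dog or cat folder, respectively. Running the generator again finds all 25,000 images, now in 2 classes: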

Found 25000 images belonging to 2 classes.

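```python
# Inspect one batch from the generator
for data_batch, labels_batch in train_generator:
    print('data batch shape:', data_batch.shape)
    print('labels batch shape:', labels_batch.shape)
    break  # the generator yields batches forever, so stop after the first one
```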

Each batch has shape (batch_size, height, width, channels): a batch size of 20, 150x150 images, and 3 color channels.

data batch shape: (20, 150, 150, 3)

labels batch shape: (20,)

1.2 Split into a training set and a validation set

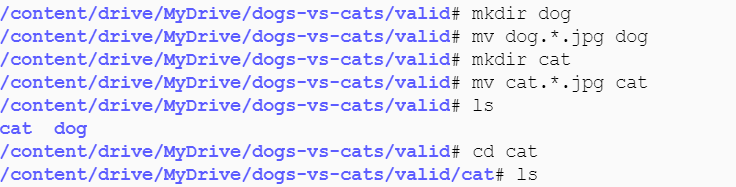

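Next, move the first 2,500 files from each of the dog and cat folders into a valid folder. This leaves 5,000 images for validation and 20,000 for training.

The 5,000 validation images are likewise kept in separate cat and dog subfolders inside valid.

The resulting layout is train/cat and train/dog with 10,000 images each, plus valid/cat and valid/dog with 2,500 images each.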
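The same split can also be done in Python rather than the shell. Below is a minimal sketch. It assumes the root/train/cat and root/train/dog layout from 1.1 and takes the "first" 2,500 files in sorted (lexicographic) filename order. The function name split_validation is hypothetical.

```python
import os
import shutil

def split_validation(root, classes=("cat", "dog"), n_valid=2500):
    """Move the first n_valid .jpg files (sorted by name) of each class
    from root/train/<class> to root/valid/<class>."""
    for cls in classes:
        src = os.path.join(root, "train", cls)
        dst = os.path.join(root, "valid", cls)
        os.makedirs(dst, exist_ok=True)
        files = sorted(f for f in os.listdir(src) if f.lower().endswith(".jpg"))
        for name in files[:n_valid]:
            shutil.move(os.path.join(src, name), os.path.join(dst, name))

# Hypothetical usage on Colab:
# split_validation("/content/drive/MyDrive/dogs-vs-cats")
```

Moving the files, rather than copying them, guarantees that no image ends up in both the training and the validation set.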

2. Build the CNN

```python
from keras import models
from keras import layers

model = models.Sequential()
# Four Conv2D + MaxPooling2D stages
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
# Classifier: a single sigmoid unit outputs the probability of class 1
model.add(layers.Flatten())
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

# Print the summary of the model
model.summary()
```

Output:

Model: "sequential_3"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_9 (Conv2D) | (None, 148, 148, 32) | 896 |
| max_pooling2d_9 (MaxPooling2D) | (None, 74, 74, 32) | 0 |
| conv2d_10 (Conv2D) | (None, 72, 72, 64) | 18496 |
| max_pooling2d_10 (MaxPooling2D) | (None, 36, 36, 64) | 0 |
| conv2d_11 (Conv2D) | (None, 34, 34, 128) | 73856 |
| max_pooling2d_11 (MaxPooling2D) | (None, 17, 17, 128) | 0 |
| conv2d_12 (Conv2D) | (None, 15, 15, 128) | 147584 |
| max_pooling2d_12 (MaxPooling2D) | (None, 7, 7, 128) | 0 |
| flatten_3 (Flatten) | (None, 6272) | 0 |
| dense_6 (Dense) | (None, 512) | 3211776 |
| dense_7 (Dense) | (None, 1) | 513 |

Total params: 3,453,121

Trainable params: 3,453,121

Non-trainable params: 0
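The shapes and parameter counts in this summary can be checked by hand:

- Each unpadded 3x3 convolution trims 2 pixels from each spatial dimension.
- Each 2x2 pooling halves the spatial size, rounding down.
- A layer has one weight per input connection plus one bias per output unit.

Here is a small sketch of that arithmetic. The helper names are illustrative and are not Keras functions.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # One weight per kernel element per input channel, plus one bias per filter
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(in_features, units):
    # Full weight matrix plus one bias per unit
    return (in_features + 1) * units

def conv_valid(size, kernel=3):
    # 'valid' padding (the Conv2D default) shrinks the output by kernel - 1
    return size - kernel + 1

def pool(size, pool_size=2):
    # MaxPooling2D with stride = pool size drops any leftover row/column
    return size // pool_size

size, channels, total = 150, 3, 0
for filters in (32, 64, 128, 128):
    total += conv2d_params(3, 3, channels, filters)
    size, channels = pool(conv_valid(size)), filters

flat = size * size * channels                         # 7 * 7 * 128 = 6272
total += dense_params(flat, 512) + dense_params(512, 1)
print(size, flat, total)                              # 7 6272 3453121
```

The Dense(512) layer alone holds 3,211,776 of the 3,453,121 parameters, because it connects to every one of the 6,272 flattened features.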

3. Train the CNN

3.1 Specify the optimizer, learning rate, loss function, and metrics

```python
from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-4),  # `lr` is deprecated in newer Keras
              loss='binary_crossentropy',
              metrics=['accuracy'])
```

3.2 Specify the steps per epoch (training samples / batch size) and the number of epochs

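```python
# Re-create train_generator (1.1) after the split so it only lists the 20,000 remaining images.
# Validation generator for the valid/ folder created in 1.2 (5,000 images):
validation_generator = ImageDataGenerator(rescale=1./255).flow_from_directory(
    '/content/drive/MyDrive/dogs-vs-cats/valid',
    target_size=(150, 150), batch_size=20, class_mode='binary')

history = model.fit(
    train_generator,
    steps_per_epoch=1000,  # n = 20,000 training samples, b = 20, n / b = 1000
    epochs=30,
    validation_data=validation_generator,
    validation_steps=250)  # 5,000 validation samples / 20 = 250
```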

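For each epoch, the output reports loss, accuracy, val_loss, and val_accuracy.

However, training is far too slow. After 11 minutes, only 80 of the 1,000 steps in the first of 30 epochs had finished.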

Epoch 1/30

80/1000 [=>............................] - ETA: 2:03:47 - loss: 0.6957 - accuracy: 0.4949

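We don't need that many images; a subset is enough. For example, pick 2,000 images for training, 1,000 for validation, and 1,000 for testing. Besides moving files with Linux shell commands as above, you can also use a Python script that randomly selects a given number of images from one folder and moves them to another (see the post "Randomly selecting a given number of images from a folder and moving them to another folder with Python").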
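Here is a minimal sketch of such a script. The function name sample_images, the fixed seed, and the minitrain/cat target folder in the usage comment are assumptions for illustration. Sampling is done per class folder so that both classes stay balanced.

```python
import os
import random
import shutil

def sample_images(src_dir, dst_dir, n, seed=0, move=True):
    """Randomly pick n .jpg files from src_dir and move (or copy) them to dst_dir.
    Returns the chosen filenames."""
    os.makedirs(dst_dir, exist_ok=True)
    files = sorted(f for f in os.listdir(src_dir) if f.lower().endswith(".jpg"))
    chosen = random.Random(seed).sample(files, n)  # ValueError if n > len(files)
    transfer = shutil.move if move else shutil.copy2
    for name in chosen:
        transfer(os.path.join(src_dir, name), os.path.join(dst_dir, name))
    return chosen

# Hypothetical usage: 1,000 cats for the mini training set
# sample_images('/content/drive/MyDrive/dogs-vs-cats/train/cat',
#               '/content/drive/MyDrive/dogs-vs-cats/minitrain/cat', 1000)
```

With move=True, images already picked for one subset cannot be picked again for another. With move=False (copying), you would have to exclude them yourself.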

After creating minitrain and minivalid, run essentially the same code again (consolidated below):

```python
from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255
train_datagen = ImageDataGenerator(rescale=1./255)
valid_datagen = ImageDataGenerator(rescale=1./255)
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    # This is the target directory
    '/content/drive/MyDrive/dogs-vs-cats/minitrain',
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=20,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

validation_generator = valid_datagen.flow_from_directory(
    '/content/drive/MyDrive/dogs-vs-cats/minivalid',
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')

# Found 2000 images belonging to 2 classes.
# Found 2000 images belonging to 2 classes.
```

```python
for data_batch, labels_batch in train_generator:
    print('data batch shape:', data_batch.shape)
    print('labels batch shape:', labels_batch.shape)
    break  # stop after the first batch
```

```python
from keras import models
from keras import layers

model = models.Sequential()
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Flatten())
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

# Print the summary of the model
model.summary()
```

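```python
import os
import tensorflow as tf

# Use the first GPU card (I couldn't tell whether the GPU was actually used; probably not).
# CUDA_VISIBLE_DEVICES only takes effect if it is set before TensorFlow initializes
# its GPU devices, e.g. at the very top of the notebook.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
print(tf.config.list_physical_devices('GPU'))  # an empty list means no GPU is visible

from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-4),
              loss='binary_crossentropy',
              metrics=['accuracy'])
```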

```python
history = model.fit(
    train_generator,
    steps_per_epoch=100,  # n = 2,000 training samples, b = 20, n / b = 100
    epochs=30,
    validation_data=validation_generator,
    validation_steps=50)  # 50 x 20 = 1,000 of the 2,000 validation images per evaluation
```

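On the first run, the first epoch took a long time, about 1,000 s. Later epochs gradually sped up to a steady 14 s or so each.

When I ran it a second time, it was fast right from the start (I'm not sure why).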

Epoch 1/30

100/100 [==============================] - 16s 147ms/step - loss: 0.6956 - accuracy: 0.5321 - val_loss: 0.6621 - val_accuracy: 0.6265

Epoch 2/30

100/100 [==============================] - 14s 138ms/step - loss: 0.6630 - accuracy: 0.6126 - val_loss: 0.6275 - val_accuracy: 0.6710

Epoch 3/30

100/100 [==============================] - 14s 139ms/step - loss: 0.6297 - accuracy: 0.6519 - val_loss: 0.6043 - val_accuracy: 0.6895

Epoch 4/30

100/100 [==============================] - 14s 139ms/step - loss: 0.5989 - accuracy: 0.6666 - val_loss: 0.5912 - val_accuracy: 0.6745

······

Epoch 28/30

100/100 [==============================] - 14s 138ms/step - loss: 0.0651 - accuracy: 0.9827 - val_loss: 0.9011 - val_accuracy: 0.7370

Epoch 29/30

100/100 [==============================] - 14s 138ms/step - loss: 0.0488 - accuracy: 0.9856 - val_loss: 0.8706 - val_accuracy: 0.7475

Epoch 30/30

100/100 [==============================] - 14s 139ms/step - loss: 0.0337 - accuracy: 0.9938 - val_loss: 0.9049 - val_accuracy: 0.7455
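A likely explanation for the slow first epoch is an assumption and has not been verified here. The images are read from the mounted Google Drive, which is slow on first access, and later reads are served from a local cache. The VGG16 runs below show the same pattern, with first epochs of 1,141 s and 9,373 s. A common workaround is to copy the dataset once to the Colab VM's local disk and point flow_from_directory at the copy. Here is a minimal sketch. The helper name copy_to_local and the local path /content/minitrain are hypothetical.

```python
import os
import shutil

def copy_to_local(src, dst):
    """Copy a dataset folder (e.g. one on a mounted Google Drive) to local disk.
    Returns the number of files now under dst."""
    shutil.copytree(src, dst, dirs_exist_ok=True)  # dirs_exist_ok requires Python 3.8+
    return sum(len(files) for _, _, files in os.walk(dst))

# Hypothetical usage on Colab; afterwards pass '/content/minitrain' to flow_from_directory:
# copy_to_local('/content/drive/MyDrive/dogs-vs-cats/minitrain', '/content/minitrain')
```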

4. Results (74%)

4.1 Plot the accuracy and loss for each epoch

Plot how accuracy changes over the 30 epochs.

```python
import matplotlib.pyplot as plt
# Jupyter/Colab magic: render plots inside the notebook
%matplotlib inline

train_acc = history.history['accuracy']
valid_acc = history.history['val_accuracy']
epochs = range(1, len(train_acc) + 1)  # one point per epoch (30 here)

plt.plot(epochs, train_acc, 'bo', label='Training Accuracy')
plt.plot(epochs, valid_acc, 'r', label='Validation Accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()
plt.show()
```

The model reaches 99.38% accuracy on the training set but only 74.55% on the validation set, so it is overfitting.

Next, plot how the loss changes over the 30 epochs.

```python
import matplotlib.pyplot as plt
# Jupyter/Colab magic: render plots inside the notebook
%matplotlib inline

train_loss = history.history['loss']
valid_loss = history.history['val_loss']
epochs = range(1, len(train_loss) + 1)  # one point per epoch (30 here)

plt.plot(epochs, train_loss, 'bo', label='Training Loss')
plt.plot(epochs, valid_loss, 'r', label='Validation Loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()
```

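The training loss keeps decreasing. The validation loss first decreases and then rises again, from 0.59 at epoch 4 to 0.90 at epoch 30. This divergence indicates overfitting.

Why does overfitting happen? With only 2,000 training images, a model with about 3.45 million parameters has enough capacity to memorize the training set instead of learning features that generalize to new images.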
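The validation loss curve suggests stopping near the epoch where val_loss is lowest. Keras provides this as a callback, keras.callbacks.EarlyStopping(monitor='val_loss', patience=..., restore_best_weights=True), passed to model.fit through callbacks=[...]. The sketch below only illustrates the patience rule such a callback applies. The loss values are made up, not taken from the log above, and the function name is hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, stop_epoch), both 1-based.
    Training stops once val_loss has not improved for `patience` consecutive
    epochs; best_epoch is the epoch whose weights you would keep."""
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses)

# Illustrative curve: falls until epoch 4, then rises
print(early_stopping_epoch([0.66, 0.63, 0.60, 0.59, 0.61, 0.62, 0.65, 0.70]))  # (4, 7)
```

Early stopping only limits how long the model trains. It does not make the model generalize better, which is what the techniques below aim for.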

5. How can overfitting be reduced?

5.1 Dropout (78%)

Only one line is added to the model code.
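The added line itself is not shown. A common placement, assumed here for illustration, is `model.add(layers.Dropout(0.5))` right after `layers.Flatten()`.

The NumPy sketch below shows what such a layer does. Keras's Dropout uses inverted dropout. During training it zeroes each input with probability `rate` and scales the remaining ones by 1 / (1 - rate). At inference it does nothing.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: in training, zero each unit with probability `rate` and
    scale the survivors by 1 / (1 - rate) so the expected activation is unchanged.
    At inference time the input passes through untouched."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate   # True with probability 1 - rate
    return x * keep / (1.0 - rate)

rng = np.random.default_rng(0)
x = np.ones((1000, 512))                 # e.g. a batch of flattened features
y = dropout(x, 0.5, rng)
print((y == 0).mean(), y.mean())         # close to 0.5 and 1.0, respectively
```

Because a different random subset of features is dropped at every step, the Dense layer cannot rely on any single feature. This makes memorizing the training set harder.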

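Adding dropout reduces the overfitting. At epoch 30 the training accuracy was only about 90% (0.92 in the log), not yet near 99%, so in principle validation accuracy still had room to improve. After 20 more epochs (50 in total), validation accuracy rose from 74% to about 78%.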

Epoch 1/50

100/100 [==============================] - 16s 143ms/step - loss: 0.6922 - accuracy: 0.5213 - val_loss: 0.6858 - val_accuracy: 0.5360

Epoch 2/50

100/100 [==============================] - 14s 139ms/step - loss: 0.6762 - accuracy: 0.5559 - val_loss: 0.6682 -

······

Epoch 29/50

100/100 [==============================] - 14s 138ms/step - loss: 0.2169 - accuracy: 0.9073 - val_loss: 0.5943 - val_accuracy: 0.7550

Epoch 30/50

100/100 [==============================] - 14s 139ms/step - loss: 0.2040 - accuracy: 0.9200 - val_loss: 0.5421 - val_accuracy: 0.7660

······

Epoch 50/50

100/100 [==============================] - 14s 136ms/step - loss: 0.0693 - accuracy: 0.9705 - val_loss: 0.6988 - val_accuracy: 0.7815

5.2 Data augmentation (82%)

Change the data-loading code from 1.1 as follows to add data augmentation:

```python
from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255; random transforms apply to training images only
train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,       # random rotations of up to 40 degrees
    width_shift_range=0.2,   # random horizontal shifts of up to 20% of the width
    height_shift_range=0.2,  # random vertical shifts of up to 20% of the height
    shear_range=0.2,         # random shear transformations
    zoom_range=0.2,          # random zoom in [0.8, 1.2]
    horizontal_flip=True)    # randomly flip images horizontally
valid_datagen = ImageDataGenerator(rescale=1./255)
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    # This is the target directory
    '/content/drive/MyDrive/dogs-vs-cats/minitrain',
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=20,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

validation_generator = valid_datagen.flow_from_directory(
    '/content/drive/MyDrive/dogs-vs-cats/minivalid',
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')
```
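To make two of these options concrete, here is an illustrative NumPy sketch of a random horizontal flip plus a random horizontal shift. It is not how ImageDataGenerator is implemented: Keras applies continuous affine transforms with interpolation. The function name augment and the integer-pixel shift are assumptions for illustration.

```python
import numpy as np

def augment(img, rng, width_shift_range=0.2, flip_prob=0.5):
    """Illustrative only: random horizontal flip plus a random horizontal shift.
    Columns uncovered by the shift are filled by repeating the nearest edge
    column, loosely like fill_mode='nearest'."""
    _, w, _ = img.shape
    if rng.random() < flip_prob:
        img = img[:, ::-1, :]
    max_shift = int(round(width_shift_range * w))   # 0.2 * 150 = 30 pixels
    shift = int(rng.integers(-max_shift, max_shift + 1))
    if shift > 0:    # move content right, pad on the left
        img = np.concatenate([np.repeat(img[:, :1, :], shift, axis=1), img[:, :-shift, :]], axis=1)
    elif shift < 0:  # move content left, pad on the right
        img = np.concatenate([img[:, -shift:, :], np.repeat(img[:, -1:, :], -shift, axis=1)], axis=1)
    return img

rng = np.random.default_rng(0)
image = rng.random((150, 150, 3)).astype(np.float32)  # stand-in for one rescaled image
print(augment(image, rng).shape)  # (150, 150, 3)
```

Each epoch therefore sees a slightly different version of every training image, while the validation images are left untouched.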

Increase the number of epochs to 100; everything else stays the same.

```python
history = model.fit(
    train_generator,
    steps_per_epoch=100,   # n = 2,000 training samples, b = 20, n / b = 100
    epochs=100,
    validation_data=validation_generator,
    validation_steps=100)  # 100 x 20 = all 2,000 validation images
```

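With augmentation of the training images on top of dropout, validation accuracy exceeded 82% (82.65% at epoch 90). The training loss also stays lower than or close to the validation loss almost throughout, which shows the model generalizes better. The cost is that it needs more epochs to converge, and performance might keep improving with more epochs.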

Epoch 1/100

100/100 [==============================] - 22s 225ms/step - loss: 0.6482 - accuracy: 0.6130 - val_loss: 0.6180 - val_accuracy: 0.6565

······

Epoch 29/100

100/100 [==============================] - 23s 227ms/step - loss: 0.5276 - accuracy: 0.7345 - val_loss: 0.5570 - val_accuracy: 0.7135

Epoch 30/100

100/100 [==============================] - 22s 225ms/step - loss: 0.5179 - accuracy: 0.7530 - val_loss: 0.4734 - val_accuracy: 0.7720

·······

Epoch 50/100

100/100 [==============================] - 23s 227ms/step - loss: 0.4771 - accuracy: 0.7620 - val_loss: 0.5499 - val_accuracy: 0.7460

·······

Epoch 90/100

100/100 [==============================] - 23s 231ms/step - loss: 0.4008 - accuracy: 0.8210 - val_loss: 0.4128 - val_accuracy: 0.8265

·······

Epoch 100/100

100/100 [==============================] - 23s 227ms/step - loss: 0.3914 - accuracy: 0.8200 - val_loss: 0.4188 - val_accuracy: 0.8150

Accuracy over the course of training.

Loss over the course of training.

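5.3 Pretraining, retraining all parameters (92%+)

So far we have trained a network with 4 convolutional layers and 2 fully connected layers. Relatively speaking, this network is still not very deep, and it overfits easily.

However, training a deep neural network is very hard. It has a huge number of parameters and therefore a huge capacity, but here we have only 2,000 training samples.

The solution is to pretrain on a huge dataset, for example ImageNet, which has about 14 million labeled images. We then remove the top layers and replace them with a classifier specific to the new task, because the output shape and activation function differ. Here we need a single sigmoid unit instead of ImageNet's 1,000-way softmax.

Use VGG16 pretrained on ImageNet: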

```python
from keras.applications.vgg16 import VGG16

conv_base = VGG16(weights='imagenet',        # load weights pretrained on ImageNet
                  include_top=False,         # drop VGG16's fully connected classifier
                  input_shape=(150, 150, 3))
conv_base.summary()
```

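The convolutional base has 14,714,688 parameters, all trainable by default (the same summary appears in 6.2.1).

Put a new top on the pretrained conv_base: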

```python
from keras import models
from keras import layers

model = models.Sequential()
model.add(conv_base)                              # pretrained VGG16 convolutional base
model.add(layers.Flatten())
model.add(layers.Dense(256, activation='relu'))   # new task-specific classifier
model.add(layers.Dense(1, activation='sigmoid'))

# Print the summary of the model
model.summary()
```

The full model has 16,812,353 parameters, all trainable (the same summary appears in 6.2.2).
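These numbers can be reproduced by hand. VGG16's convolutions use 'same' padding, so only the 2x2, stride-2 max-pooling at the end of each of its 5 blocks shrinks the feature map. A 150x150 input therefore leaves the base as 4x4x512. Below is a sketch of the arithmetic. The helper name is illustrative, and the base's 14,714,688 parameters are taken from its summary rather than recomputed.

```python
def vgg16_base_output(size, n_blocks=5, last_filters=512):
    # Only the stride-2 pooling after each block shrinks the map (rounding down)
    for _ in range(n_blocks):
        size //= 2
    return size, size, last_filters

h, w, c = vgg16_base_output(150)      # 150 -> 75 -> 37 -> 18 -> 9 -> 4
flat = h * w * c                      # Flatten: 4 * 4 * 512 = 8192
dense_256 = (flat + 1) * 256          # Dense(256): weights + biases
dense_1 = (256 + 1) * 1               # Dense(1)
conv_base_params = 14_714_688         # from conv_base.summary()
total = conv_base_params + dense_256 + dense_1
print((h, w, c), flat, dense_256, dense_1, total)
# (4, 4, 512) 8192 2097408 257 16812353
```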

The model easily exceeds 92% validation accuracy in under 30 epochs:

Epoch 1/30

100/100 [==============================] - 1141s 11s/step - loss: 0.7487 - accuracy: 0.4896 - val_loss: 0.6350 - val_accuracy: 0.6115

Epoch 2/30

100/100 [==============================] - 21s 214ms/step - loss: 0.6652 - accuracy: 0.6614 - val_loss: 0.4175 - val_accuracy: 0.8280

Epoch 3/30

100/100 [==============================] - 21s 214ms/step - loss: 0.5738 - accuracy: 0.7084 - val_loss: 0.3250 - val_accuracy: 0.8570

······

Epoch 28/30

100/100 [==============================] - 21s 213ms/step - loss: 0.2983 - accuracy: 0.9061 - val_loss: 0.2126 - val_accuracy: 0.9215

Epoch 29/30

100/100 [==============================] - 21s 214ms/step - loss: 0.2366 - accuracy: 0.9196 - val_loss: 0.1817 - val_accuracy: 0.9245

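5.4 Pretraining with frozen parameters (89%)

Freeze the base before calling model.compile, because changes to `trainable` only take effect when the model is compiled. This uses VGG16's ImageNet parameters as they are, without retraining them. Training may take less time, but the accuracy will probably be lower than when all parameters are retrained as in 5.3.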

```python
# Freeze the conv_base parameters
for l in conv_base.layers:
    l.trainable = False
model.summary()

from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-4),
              loss='binary_crossentropy',
              metrics=['accuracy'])
```

Epoch 1/30

100/100 [==============================] - 613s 6s/step - loss: 0.4792 - accuracy: 0.7849 - val_loss: 0.3476 - val_accuracy: 0.8495

Epoch 2/30

100/100 [==============================] - 25s 250ms/step - loss: 0.3865 - accuracy: 0.8243 - val_loss: 0.3134 - val_accuracy: 0.8670

Epoch 3/30

100/100 [==============================] - 25s 249ms/step - loss: 0.3716 - accuracy: 0.8389 - val_loss: 0.2964 - val_accuracy: 0.8730

······

Epoch 15/30

100/100 [==============================] - 25s 249ms/step - loss: 0.2734 - accuracy: 0.8785 - val_loss: 0.2795 - val_accuracy: 0.8965

······

Epoch 30/30

100/100 [==============================] - 25s 249ms/step - loss: 0.2407 - accuracy: 0.8971 - val_loss: 0.2788 - val_accuracy: 0.8970

5.5 Pretraining + fine-tuning (97%+?)

First freeze the base parameters and train only the top layers, to roughly 89%.

```python
# Step 1: freeze the whole conv_base and train only the new top
for l in conv_base.layers:
    l.trainable = False
model.summary()

from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-4),
              loss='binary_crossentropy',
              metrics=['accuracy'])

history = model.fit(
    train_generator,
    steps_per_epoch=100,  # n / b = 2,000 / 20
    epochs=30,
    validation_data=validation_generator,
    validation_steps=100)
```

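Then, starting from the newly trained top, unfreeze and fine-tune the convolutional layers of the last VGG16 block, block5. The Dense top layers also remain trainable.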

```python
# Step 2: unfreeze only block5 of conv_base for fine-tuning
trainable_layer_names = ['block5_conv1', 'block5_conv2',
                         'block5_conv3', 'block5_pool']

conv_base.trainable = True
for layer in conv_base.layers:  # fine-tune the top conv layers, keep the rest frozen
    if layer.name in trainable_layer_names:
        layer.trainable = True
    else:
        layer.trainable = False
model.summary()

# Lower the learning rate to 0.00001 and recompile so the new trainable flags take effect
from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-5),
              loss='binary_crossentropy',
              metrics=['accuracy'])

history = model.fit(
    train_generator,
    steps_per_epoch=100,  # n = 2,000 training samples, b = 20, n / b = 100
    epochs=100,
    validation_data=validation_generator,
    validation_steps=100)
```

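In the end, validation accuracy reached only about 93%, well short of the expected 97%. That is roughly the same as using the VGG16 architecture and retraining all of its parameters. Perhaps the 2,000 sampled images were not random enough, and the results may be better on the full dataset.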

```python
import matplotlib.pyplot as plt
# Jupyter/Colab magic: render plots inside the notebook
%matplotlib inline

train_acc = history.history['accuracy']
valid_acc = history.history['val_accuracy']
epochs = range(1, len(train_acc) + 1)  # one point per epoch (100 here)

plt.plot(epochs, train_acc, 'bo', label='Training Accuracy')
plt.plot(epochs, valid_acc, 'r', label='Validation Accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()
plt.show()
```

```python
import matplotlib.pyplot as plt
# Jupyter/Colab magic: render plots inside the notebook
%matplotlib inline

train_loss = history.history['loss']
valid_loss = history.history['val_loss']
epochs = range(1, len(train_loss) + 1)  # one point per epoch (100 here)

plt.plot(epochs, train_loss, 'bo', label='Training Loss')
plt.plot(epochs, valid_loss, 'r', label='Validation Loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()
```

5.6 Ensemble methods
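One common ensembling approach, sketched here as an assumption rather than the author's method, works in three steps:

1. Train several models independently.
2. Predict on the same images in the same order, e.g. with model.predict on a generator created with flow_from_directory(..., shuffle=False).
3. Average the predicted probabilities.

flow_from_directory assigns class indices in alphanumeric order of the folder names, so cat is 0 and dog is 1, and each sigmoid output is the probability of dog. The probabilities below are made up, and the function name is hypothetical.

```python
import numpy as np

def ensemble_predict(prob_list, threshold=0.5):
    """Average the sigmoid outputs of several binary classifiers, then threshold.
    prob_list: list of arrays of shape (n_images,), one per model."""
    mean_prob = np.mean(np.stack(prob_list, axis=0), axis=0)
    return mean_prob, (mean_prob >= threshold).astype(int)

# Made-up probabilities from three models for four images
p1 = np.array([0.9, 0.4, 0.2, 0.6])
p2 = np.array([0.8, 0.6, 0.1, 0.4])
p3 = np.array([0.7, 0.7, 0.3, 0.3])
mean_prob, labels = ensemble_predict([p1, p2, p3])
print(labels)  # [1 1 0 0]
```

Averaging helps most when the models make different mistakes, for example models with different architectures or trained on different random subsets.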

5.7 Multi-task learning

6. Full code: VGG16 pretraining + fine-tuning on the complete Kaggle dataset (95%+)

6.1 Load and preprocess the dataset, with data augmentation on the training set

```python
from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255
# Data augmentation is applied to the training set only
train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True)
valid_datagen = ImageDataGenerator(rescale=1./255)
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    # This is the target directory
    '/content/drive/MyDrive/dogs-vs-cats/train',
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=20,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

validation_generator = valid_datagen.flow_from_directory(
    '/content/drive/MyDrive/dogs-vs-cats/valid',
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')
```

Found 20000 images belonging to 2 classes.

Found 5000 images belonging to 2 classes.

6.1.2 Check the batch shape (batch size 20)

```python
for data_batch, labels_batch in train_generator:
    print('data batch shape:', data_batch.shape)
    print('labels batch shape:', labels_batch.shape)
    break  # stop after the first batch
```

data batch shape: (20, 150, 150, 3)

labels batch shape: (20,)

6.2.1 Load the VGG16 conv_base

```python
from keras.applications.vgg16 import VGG16

conv_base = VGG16(weights='imagenet',
                  include_top=False,
                  input_shape=(150, 150, 3))
conv_base.summary()
```

Total params: 14,714,688

Trainable params: 14,714,688

Non-trainable params: 0

6.2.2 Add a new top on the VGG16 conv_base

```python
from keras import models
from keras import layers

model = models.Sequential()
model.add(conv_base)
model.add(layers.Flatten())
model.add(layers.Dense(256, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

# Print the summary of the model
model.summary()
```

Total params: 16,812,353

Trainable params: 16,812,353

Non-trainable params: 0

6.2.3 First freeze the base parameters, then train the top layers.

```python
# Freeze every layer of the convolutional base
for l in conv_base.layers:
    l.trainable = False
model.summary()

from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-4),
              loss='binary_crossentropy',
              metrics=['accuracy'])
```

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| vgg16 (Functional) | (None, 4, 4, 512) | 14714688 |
| flatten (Flatten) | (None, 8192) | 0 |
| dense (Dense) | (None, 256) | 2097408 |
| dense_1 (Dense) | (None, 1) | 257 |

Total params: 16,812,353

Trainable params: 2,097,665

Non-trainable params: 14,714,688

```python
history = model.fit(
    train_generator,
    steps_per_epoch=1000,  # n = 20,000 training samples, b = 20, n / b = 1000
    epochs=100,
    validation_data=validation_generator,
    validation_steps=250)  # 5,000 / 20 = 250
```

Epoch 1/100

1000/1000 [==============================] - 9373s 9s/step - loss: 0.3615 - accuracy: 0.8378 - val_loss: 0.2519 - val_accuracy: 0.8960

Epoch 2/100

1000/1000 [==============================] - 197s 197ms/step - loss: 0.3289 - accuracy: 0.8540 - val_loss: 0.2357 - val_accuracy: 0.9030

······

Epoch 20/100

1000/1000 [==============================] - 196s 196ms/step - loss: 0.2738 - accuracy: 0.8864 - val_loss: 0.2152 - val_accuracy: 0.9212

·······

Epoch 99/100

1000/1000 [==============================] - 204s 204ms/step - loss: 0.2324 - accuracy: 0.9115 - val_loss: 0.2342 - val_accuracy: 0.9230

Epoch 100/100

1000/1000 [==============================] - 214s 214ms/step - loss: 0.2283 - accuracy: 0.9126 - val_loss: 0.2329 - val_accuracy: 0.9252

6.2.4 Then, starting from the newly trained top, fine-tune the convolutional layers of block5 (the Dense top layers stay trainable).

```python
trainable_layer_names = ['block5_conv1', 'block5_conv2',
                         'block5_conv3', 'block5_pool']

conv_base.trainable = True
for layer in conv_base.layers:  # fine-tune the top conv layers, keep the rest frozen
    if layer.name in trainable_layer_names:
        layer.trainable = True
    else:
        layer.trainable = False
model.summary()
```

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| vgg16 (Functional) | (None, 4, 4, 512) | 14714688 |
| flatten (Flatten) | (None, 8192) | 0 |
| dense (Dense) | (None, 256) | 2097408 |
| dense_1 (Dense) | (None, 1) | 257 |

Total params: 16,812,353

Trainable params: 9,177,089

Non-trainable params: 7,635,264
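The trainable count confirms which layers are being updated. It equals block5's three 3x3, 512-to-512 convolutions plus the still-trainable Dense top. The non-trainable count is the rest of the base. Here is a short arithmetic check. The base total 14,714,688 is taken from the summary in 6.2.1, and the helper name is illustrative.

```python
def conv3x3_params(in_channels, filters):
    # 3x3 kernel weights per input channel, plus one bias per filter
    return (3 * 3 * in_channels + 1) * filters

block5 = 3 * conv3x3_params(512, 512)      # block5_conv1..3; block5_pool has no weights
top = (8192 + 1) * 256 + (256 + 1) * 1     # Dense(256) + Dense(1)
conv_base_total = 14_714_688               # from conv_base.summary() in 6.2.1
trainable = block5 + top
non_trainable = conv_base_total - block5
print(block5, trainable, non_trainable)    # 7079424 9177089 7635264
```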

```python
from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=5e-5),
              loss='binary_crossentropy',
              metrics=['accuracy'])

history = model.fit(
    train_generator,
    steps_per_epoch=100,   # 100 x 20 = 2,000 of the 20,000 training images per epoch
    epochs=100,
    validation_data=validation_generator,
    validation_steps=100)  # 100 x 20 = 2,000 of the 5,000 validation images per evaluation
```

Epoch 1/100

100/100 [==============================] - 30s 283ms/step - loss: 0.5235 - accuracy: 0.7673 - val_loss: 0.2659 - val_accuracy: 0.9085

Epoch 2/100

100/100 [==============================] - 27s 272ms/step - loss: 0.3685 - accuracy: 0.8475 - val_loss: 0.2492 - val_accuracy: 0.8970

······

Epoch 11/100

100/100 [==============================] - 27s 272ms/step - loss: 0.2344 - accuracy: 0.9032 - val_loss: 0.2721 - val_accuracy: 0.9370

Epoch 12/100

100/100 [==============================] - 27s 271ms/step - loss: 0.2787 - accuracy: 0.8853 - val_loss: 0.2154 - val_accuracy: 0.9330

·····

Epoch 40/100

100/100 [==============================] - 27s 273ms/step - loss: 0.2407 - accuracy: 0.9224 - val_loss: 0.2025 - val_accuracy: 0.9415

Epoch 41/100

100/100 [==============================] - 27s 270ms/step - loss: 0.2163 - accuracy: 0.9180 - val_loss: 0.3448 - val_accuracy: 0.9470

·······

Epoch 99/100

100/100 [==============================] - 25s 251ms/step - loss: 0.1660 - accuracy: 0.9400 - val_loss: 0.2487 - val_accuracy: 0.9515

Epoch 100/100

100/100 [==============================] - 25s 250ms/step - loss: 0.1599 - accuracy: 0.9456 - val_loss: 0.3910 - val_accuracy: 0.9480

6.2.5 Lower the learning rate to 0.00001 and continue fine-tuning (ideally reaching 96% or more).

```python
from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-5),
              loss='binary_crossentropy',
              metrics=['accuracy'])

history = model.fit(
    train_generator,
    steps_per_epoch=100,   # 100 x 20 = 2,000 of the 20,000 training images per epoch
    epochs=100,
    validation_data=validation_generator,
    validation_steps=100)  # 100 x 20 = 2,000 of the 5,000 validation images per evaluation
```

Epoch 1/100

100/100 [==============================] - 30s 280ms/step - loss: 0.1317 - accuracy: 0.9516 - val_loss: 0.3573 - val_accuracy: 0.9505

Epoch 2/100

100/100 [==============================] - 26s 262ms/step - loss: 0.1487 - accuracy: 0.9576 - val_loss: 0.3422 - val_accuracy: 0.9515

······

Epoch 75/100

100/100 [==============================] - 26s 259ms/step - loss: 0.1133 - accuracy: 0.9543 - val_loss: 0.2948 - val_accuracy: 0.9635

······

Epoch 99/100

100/100 [==============================] - 26s 257ms/step - loss: 0.1676 - accuracy: 0.9514 - val_loss: 0.3990 - val_accuracy: 0.9545

Epoch 100/100

100/100 [==============================] - 26s 261ms/step - loss: 0.1298 - accuracy: 0.9505 - val_loss: 0.2371 - val_accuracy: 0.9515

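Summary

| Setup | Validation accuracy (from the logs above) |
|---|---|
| Baseline CNN, 2,000 training images | 74.55% (epoch 30) |
| + Dropout | 78.15% (epoch 50) |
| + Dropout + data augmentation | 81.50% (epoch 100); best shown 82.65% (epoch 90) |
| VGG16 base, all parameters retrained | 92.45% (epoch 29) |
| VGG16 base frozen | 89.70% (epoch 30) |
| VGG16 frozen, then block5 fine-tuned | about 93% |
| Full dataset, VGG16 frozen | 92.52% (epoch 100) |
| Full dataset, block5 fine-tuned, learning rate 5e-5 | 94.80% (epoch 100); best shown 95.15% (epoch 99) |
| Full dataset, block5 fine-tuned, learning rate 1e-5 | 95.15% (epoch 100); best shown 96.35% (epoch 75) |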
