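Prerequisites

Install Docker.

Install Docker Compose.

Note: the host should have at least 8 GB of RAM, because Elasticsearch uses a lot of memory at runtime (about 2-3 GB).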
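A quick way to compare a host against the 8 GB recommendation is sketched below. It assumes a Linux host that exposes /proc/meminfo; the function names kib_to_gib and check_host_memory are made up for this example.

```shell
#!/usr/bin/env bash
# Convert a size in KiB (the unit used by /proc/meminfo) to GiB,
# rounded to the nearest whole number.
# kib_to_gib is a hypothetical helper name, not a Docker or Elasticsearch tool.
kib_to_gib() {
  echo $(( ($1 + 524288) / 1048576 ))
}

# Report whether the host meets the recommended 8 GiB of RAM.
check_host_memory() {
  local total_kib total_gib
  total_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  total_gib=$(kib_to_gib "$total_kib")
  if [ "$total_gib" -lt 8 ]; then
    echo "About ${total_gib} GiB RAM: Elasticsearch may run short of memory"
  else
    echo "About ${total_gib} GiB RAM: OK"
  fi
}
```

Calling check_host_memory prints a single line. The value is rounded because a machine sold as 8 GB usually reports slightly less in MemTotal.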

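Method 1:

Multiple nodes on a single host.

This follows the approach in the official documentation (setting up an Elasticsearch cluster with docker-compose).

Steps

Create a working directory (referred to below as "this directory"):

mkdir -p /usr/local/src/es/docker && cd /usr/local/src/es/docker

Create a docker-compose.yml file in this directory with the following content: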

version: "2.2"

services:
  setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
    user: "0"
    command: >
      bash -c '
        if [ x${ELASTIC_PASSWORD} == x ]; then
          echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
          exit 1;
        elif [ x${KIBANA_PASSWORD} == x ]; then
          echo "Set the KIBANA_PASSWORD environment variable in the .env file";
          exit 1;
        fi;
        if [ ! -f config/certs/ca.zip ]; then
          echo "Creating CA";
          bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
          unzip config/certs/ca.zip -d config/certs;
        fi;
        if [ ! -f config/certs/certs.zip ]; then
          echo "Creating certs";
          echo -ne \
          "instances:\n"\
          "  - name: es01\n"\
          "    dns:\n"\
          "      - es01\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es02\n"\
          "    dns:\n"\
          "      - es02\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es03\n"\
          "    dns:\n"\
          "      - es03\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          > config/certs/instances.yml;
          bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
          unzip config/certs/certs.zip -d config/certs;
        fi;
        echo "Setting file permissions"
        chown -R root:root config/certs;
        find . -type d -exec chmod 750 \{\} \;;
        find . -type f -exec chmod 640 \{\} \;;
        echo "Waiting for Elasticsearch availability";
        until curl -s --cacert config/certs/ca/ca.crt https://es01:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
        echo "Setting kibana_system password";
        until curl -s -X POST --cacert config/certs/ca/ca.crt -u elastic:${ELASTIC_PASSWORD} -H "Content-Type: application/json" https://es01:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
        echo "All done!";
      '
    healthcheck:
      test: ["CMD-SHELL", "[ -f config/certs/es01/es01.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120

  es01:
    depends_on:
      setup:
        condition: service_healthy
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - ${ES_PORT}:9200
    environment:
      - node.name=es01
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es02,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es01/es01.key
      - xpack.security.http.ssl.certificate=certs/es01/es01.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.http.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es01/es01.key
      - xpack.security.transport.ssl.certificate=certs/es01/es01.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es02:
    depends_on:
      - es01
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata02:/usr/share/elasticsearch/data
    environment:
      - node.name=es02
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es03
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es02/es02.key
      - xpack.security.http.ssl.certificate=certs/es02/es02.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.http.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es02/es02.key
      - xpack.security.transport.ssl.certificate=certs/es02/es02.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es03:
    depends_on:
      - es02
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata03:/usr/share/elasticsearch/data
    environment:
      - node.name=es03
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es02
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es03/es03.key
      - xpack.security.http.ssl.certificate=certs/es03/es03.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.http.ssl.verification_mode=certificate
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es03/es03.key
      - xpack.security.transport.ssl.certificate=certs/es03/es03.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  kibana:
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    volumes:
      - certs:/usr/share/kibana/config/certs
      - kibanadata:/usr/share/kibana/data
    ports:
      - ${KIBANA_PORT}:5601
    environment:
      - SERVERNAME=kibana
      - ELASTICSEARCH_HOSTS=https://es01:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
    mem_limit: ${MEM_LIMIT}
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

volumes:
  certs:
    driver: local
  esdata01:
    driver: local
  esdata02:
    driver: local
  esdata03:
    driver: local
  kibanadata:
    driver: local

Important!

The first line of the file above, version: "2.2", may cause the following error when you start the cluster later: Non-string key at top level: true

[root@ycj docker]# docker-compose up -d

Non-string key at top level: true

[root@ycj docker]#

If that happens, remove the version: "2.2" line at the top of the file.

Create a .env file in this directory with the following content:

# Password for the 'elastic' user (at least 6 characters)

ELASTIC_PASSWORD=123456789

# Password for the 'kibana_system' user (at least 6 characters)

KIBANA_PASSWORD=123456789

# Version of Elastic products

STACK_VERSION=8.2.0

# Set the cluster name

CLUSTER_NAME=docker-cluster

# Set to 'basic' or 'trial' to automatically start the 30-day trial

LICENSE=basic

#LICENSE=trial

# Port to expose Elasticsearch HTTP API to the host

ES_PORT=9200

#ES_PORT=127.0.0.1:9200

# Port to expose Kibana to the host

KIBANA_PORT=5601

#KIBANA_PORT=80

# Increase or decrease based on the available host memory (in bytes)

MEM_LIMIT=1073741824

# Project namespace (defaults to the current folder name if not set)

#COMPOSE_PROJECT_NAME=myproject

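Notes:

- The .env file is optional. Without it, every variable in docker-compose.yml, such as ${CLUSTER_NAME}, must be replaced with a hard-coded value.

- Variables in docker-compose.yml are resolved from the .env file.

- The .env file and docker-compose.yml must be in the same directory.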
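Because docker-compose.yml depends on these variables, it can help to check the .env file before starting anything. The sketch below lists the variables that the compose file references but the env file does not define. The function name missing_env_vars is made up for this example, and the variable list is copied by hand from the files above.

```shell
#!/usr/bin/env bash
# Print every variable referenced by docker-compose.yml that the given
# env file leaves undefined. missing_env_vars is a hypothetical helper name.
missing_env_vars() {
  local env_file=$1
  local var
  for var in ELASTIC_PASSWORD KIBANA_PASSWORD STACK_VERSION CLUSTER_NAME \
             LICENSE ES_PORT KIBANA_PORT MEM_LIMIT; do
    # A variable counts as defined only on an uncommented NAME=value line.
    if ! grep -Eq "^${var}=.+" "$env_file"; then
      echo "$var"
    fi
  done
}
```

Running missing_env_vars .env in the project directory prints nothing when the file is complete. The docker-compose config command is another way to check: it prints the compose file with all variables substituted.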

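Start the cluster from this directory:

docker-compose up -d

This single command pulls the images, then creates and starts the containers.

Other commands

# Stop the cluster and remove its containers and network
docker-compose down

# Stop the cluster and also delete its data volumes
docker-compose down -v

Copy the cluster's CA certificate from the container into the current directory on the host

The path /usr/share/elasticsearch/config/certs/ca/ca.crt is the one configured in docker-compose.yml. The container name docker-es01-1 is derived from the project name (by default the folder name, docker here), so it may differ on your machine; docker ps shows the actual name.

docker cp docker-es01-1:/usr/share/elasticsearch/config/certs/ca/ca.crt .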
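With the CA certificate on the host, the cluster can be queried over HTTPS. The following is a minimal sketch, assuming ca.crt is in the current directory, ES_PORT is 9200 as in the .env file above, and ELASTIC_PASSWORD is exported in the calling shell. The function names are made up for this example.

```shell
#!/usr/bin/env bash
# Extract the "status" field (green, yellow or red) from the compact JSON
# returned by the _cluster/health API. Reads the JSON from standard input.
health_status() {
  sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}

# Query cluster health through es01, trusting the copied CA certificate.
# Assumption: the elastic password is available as $ELASTIC_PASSWORD.
cluster_status() {
  curl -s --cacert ca.crt -u "elastic:${ELASTIC_PASSWORD}" \
    "https://localhost:9200/_cluster/health" | health_status
}
```

Calling cluster_status should print green once all three nodes have joined and every shard is allocated.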

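The following requirements and recommendations apply when running Elasticsearch in Docker in production.

Set vm.max_map_count to at least 262144

The vm.max_map_count kernel setting must be at least 262144 for production use.

How you set vm.max_map_count depends on your platform.

Linux

To view the value currently configured for vm.max_map_count, run:

grep vm.max_map_count /etc/sysctl.conf

vm.max_map_count=262144

To apply the setting on a live system, run:

sysctl -w vm.max_map_count=262144

To change the value permanently, update vm.max_map_count in /etc/sysctl.conf.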
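The grep above shows what is stored in /etc/sysctl.conf, while sysctl -n vm.max_map_count reads the value the kernel is using right now. The sketch below combines the check and the fix; it assumes a Linux host and root privileges, and the function names are made up for this example.

```shell
#!/usr/bin/env bash
# Minimum vm.max_map_count that Elasticsearch expects.
REQUIRED_MAP_COUNT=262144

# Succeeds when the given value meets the minimum.
# map_count_ok is a hypothetical helper name used only in this sketch.
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Read the live kernel value and raise it only when it is too low (needs root).
ensure_map_count() {
  local current
  current=$(sysctl -n vm.max_map_count)
  if map_count_ok "$current"; then
    echo "vm.max_map_count=$current is sufficient"
  else
    sysctl -w vm.max_map_count="$REQUIRED_MAP_COUNT"
  fi
}
```

A value set with sysctl -w is lost on reboot, so the entry in /etc/sysctl.conf is still needed to make it permanent.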

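Method 2

Multiple nodes across multiple hosts.

Steps

1. Prepare three machines, 192.168.110.141 to 192.168.110.143.

2. On each machine, create the directories with mkdir -p /home/es/docker/data, then grant permissions on the data directory with chmod 777 /home/es/docker/data.

3. On each machine, write a docker-compose.yml file in /home/es/docker.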

192.168.110.141

version: '2.2'
services:
  es01: # Service name
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.3 # Image to use
    container_name: es01 # Container name
    #restart: always # Restart automatically on failure
    environment:
      - node.name=es01 # Node name; must be unique within the cluster
      - cluster.name=es-docker-cluster # Cluster name; must be identical on all three nodes
      - discovery.seed_hosts=es02,es03 # Added in ES 7.0: addresses of the other master-eligible nodes, used for discovery
      - cluster.initial_master_nodes=es01,es02,es03 # Added in ES 7.0: nodes that elect the master when a new cluster is bootstrapped
      - bootstrap.memory_lock=true # Lock the process memory to prevent swapping; the official docs recommend true
      #- network.bind_host=0.0.0.0 # Address to bind to (IPv4 or IPv6); 0.0.0.0 binds all interfaces
      #- network.host=127.0.0.1
      - network.publish_host=es01 # Address other nodes use to reach this node; usually the IP of the host machine
      - cluster.join.timeout=180s
      - cluster.publish.timeout=180s
      #- network.publish_host=192.168.4.170
      #- network.host=127.0.0.1
      - "ES_JAVA_OPTS=-Xms2048m -Xmx2048m" # JVM heap size; lower it if the host is short on memory
    ulimits: # Resource limits for the container
      memlock: # Maximum amount of memory the process may lock
        soft: -1 # Unlimited
        hard: -1 # Unlimited
    volumes:
      - ./data:/usr/share/elasticsearch/data # Data directory, bind-mounted from ./data on the host
    ports:
      - 9200:9200 # HTTP port; can be opened directly in a browser
      - 9300:9300 # Transport port; the nodes talk to each other over TCP on this port
    extra_hosts:
      - "es01:192.168.110.141"
      - "es02:192.168.110.142"
      - "es03:192.168.110.143"
    networks:
      - elastic

  kib01:
    image: docker.elastic.co/kibana/kibana:7.13.3
    container_name: kib01
    ports:
      - 5601:5601
    extra_hosts:
      - "es01:192.168.110.141"
      - "es02:192.168.110.142"
      - "es03:192.168.110.143"
    environment:
      ELASTICSEARCH_URL: http://es01:9200
      ELASTICSEARCH_HOSTS: '["http://es01:9200","http://es02:9200","http://es03:9200"]'
    networks:
      - elastic

networks:
  elastic:
    driver: bridge

192.168.110.142

version: '2.2'
services:
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.3
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      #- network.bind_host=192.168.4.171
      #- network.host=127.0.0.1
      - network.publish_host=es02
      - cluster.join.timeout=180s
      - cluster.publish.timeout=180s
      #- network.publish_host=192.168.4.170
      #- network.host=127.0.0.1
      - "ES_JAVA_OPTS=-Xms2048m -Xmx2048m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./data:/usr/share/elasticsearch/data # Bind-mount the data directory created in step 2
    ports:
      - 9200:9200
      - 9300:9300
    extra_hosts:
      - "es01:192.168.110.141"
      - "es02:192.168.110.142"
      - "es03:192.168.110.143"
    networks:
      - elastic

networks:
  elastic:
    driver: bridge

192.168.110.143

version: '2.2'
services:
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.3
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      #- network.bind_host=192.168.4.171
      #- network.host=127.0.0.1
      - network.publish_host=es03
      - cluster.join.timeout=180s
      - cluster.publish.timeout=180s
      #- network.publish_host=192.168.4.170
      #- network.host=127.0.0.1
      - "ES_JAVA_OPTS=-Xms2048m -Xmx2048m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./data:/usr/share/elasticsearch/data # Bind-mount the data directory created in step 2
    ports:
      - 9200:9200
      - 9300:9300
    extra_hosts:
      - "es01:192.168.110.141"
      - "es02:192.168.110.142"
      - "es03:192.168.110.143"
    networks:
      - elastic

networks:
  elastic:
    driver: bridge
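The three files differ mainly in the node name and in discovery.seed_hosts, which lists every node except the one being configured. That rule can be sketched as a small helper; NODES and seed_hosts_for are names made up for this example.

```shell
#!/usr/bin/env bash
# All node names in the cluster, matching the extra_hosts entries above.
NODES="es01 es02 es03"

# Print the discovery.seed_hosts value for one node: every node except itself.
# seed_hosts_for is a hypothetical helper name used only in this sketch.
seed_hosts_for() {
  local self=$1 node result=""
  for node in $NODES; do
    [ "$node" = "$self" ] && continue
    # Append with a comma separator, except before the first entry.
    result="${result:+$result,}$node"
  done
  echo "$result"
}
```

For example, seed_hosts_for es02 prints es01,es03, which is the value used in the file for 192.168.110.142.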

4. Run docker-compose on each machine:

docker-compose -f docker-compose.yml up -d

# If the command above is not available, use this one instead (pick one of the two)
docker compose -f docker-compose.yml up -d
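Once the containers are up on all three machines, it is worth confirming that the nodes actually found each other. The sketch below polls the _cat/nodes API on the first host until three nodes are listed. It assumes the IP address used in this method and no authentication (security is not enabled in these 7.13.3 files); the function names are made up for this example.

```shell
#!/usr/bin/env bash
# Succeeds when standard input lists exactly the expected number of nodes,
# one node name per line (the output format of GET _cat/nodes?h=name).
# all_nodes_joined is a hypothetical helper name used only in this sketch.
all_nodes_joined() {
  local expected=$1 actual
  # grep -c counts non-empty lines; "|| true" keeps a count of 0 from aborting.
  actual=$(grep -c . || true)
  [ "$actual" -eq "$expected" ]
}

# Poll es01 every 5 seconds until all three nodes have joined the cluster.
wait_for_cluster() {
  until curl -s "http://192.168.110.141:9200/_cat/nodes?h=name" | all_nodes_joined 3; do
    sleep 5
  done
  echo "All 3 nodes have joined"
}
```

wait_for_cluster loops until the cluster is complete, so interrupt it with Ctrl+C if a node never appears and check that container's logs with docker logs.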

If startup fails, vm.max_map_count may not have been configured.

Linux

To view the value currently configured for vm.max_map_count, run:

grep vm.max_map_count /etc/sysctl.conf

vm.max_map_count=262144

To apply the setting on a live system, run:

sysctl -w vm.max_map_count=262144

To change the value permanently, update vm.max_map_count in /etc/sysctl.conf.

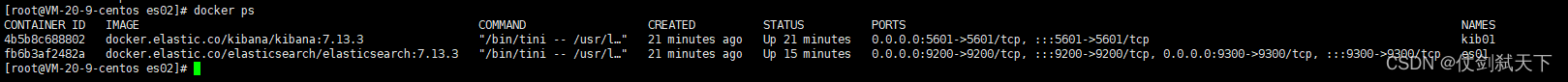

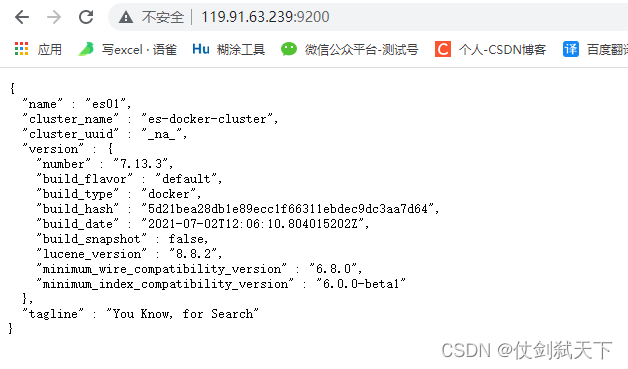

After the cluster has started successfully:

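Installing the ik analyzer

Download the ik analyzer package from its project repository. The version must match your Elasticsearch version:

https://github.com/medcl/elasticsearch-analysis-ik

Alternatively, use the gitee mirror:

https://gitee.com/mirrors/elasticsearch-analysis-ik

Download elasticsearch-analysis-ik-7.13.3.zip and copy it to the /root/ directory.

Install the ik analyzer on each of the three nodes. The commands below are for es01; repeat them on the other two hosts with es02 and es03.

# Copy the ik package into the es container
docker cp elasticsearch-analysis-ik-7.13.3.zip es01:/root/

# Install the ik analyzer in es01
docker exec -it es01 elasticsearch-plugin install file:///root/elasticsearch-analysis-ik-7.13.3.zip

# Restart the container so that Elasticsearch loads the plugin
docker restart es01

Check the result

Open http://192.168.110.141:9200/_cat/plugins in a browser to see the installed plugins.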
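Beyond the plugin list, the _analyze API shows whether the analyzer actually tokenizes text. The sketch below sends a sample sentence to es01 using ik_max_word, one of the analyzers the ik plugin provides. The function names and the sample text are made up for this example, and the helper does not escape quotes inside the text.

```shell
#!/usr/bin/env bash
# Build the JSON request body for the _analyze API.
# analyze_body is a hypothetical helper; arguments are analyzer name and text.
analyze_body() {
  printf '{"analyzer":"%s","text":"%s"}' "$1" "$2"
}

# Tokenize a sample sentence with ik_max_word on the first node.
# Assumes the node restarted after the plugin was installed.
ik_smoke_test() {
  curl -s -X POST "http://192.168.110.141:9200/_analyze" \
    -H "Content-Type: application/json" \
    -d "$(analyze_body ik_max_word "中华人民共和国")"
}
```

If the plugin is loaded, ik_smoke_test returns a JSON list of tokens; an error saying the analyzer cannot be found usually means the plugin is missing on that node or the node has not been restarted.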

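Configuring Spring Boot for the Elasticsearch cluster

Add the dependency

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
</dependency>

Option 1 (these properties are deprecated in newer Spring Boot versions):

spring:
  data:
    elasticsearch:
      cluster-name: es-docker-cluster # Must match cluster.name in docker-compose.yml
      cluster-nodes: 192.168.110.141:9300,192.168.110.142:9300,192.168.110.143:9300

Option 2 (use this if option 1 does not work):

spring:
  elasticsearch:
    rest:
      uris: http://192.168.110.141:9200,http://192.168.110.142:9200,http://192.168.110.143:9200 # Addresses of the ES cluster nodes, separated by commas

Port 9300 is the default transport port, which Java transport clients use to talk to Elasticsearch; port 9200 is the default HTTP port.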
