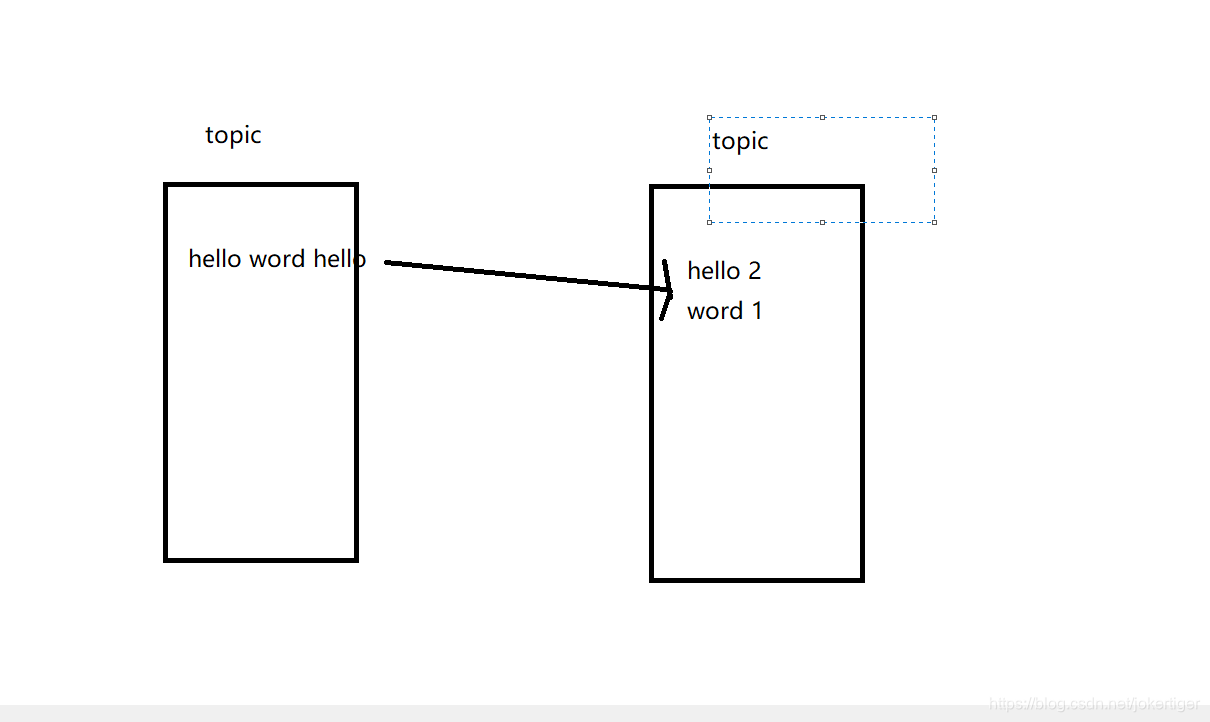

Writing messages from one topic into another topic

Summing numbers

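Create the topics (these commands use --zookeeper, which was removed from kafka-topics.sh in Kafka 3.0; on newer versions pass --bootstrap-server 192.168.119.125:9092 instead):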

```shell
kafka-topics.sh --zookeeper 192.168.119.125:2181 --create --topic suminput --partitions 1 --replication-factor 1
kafka-topics.sh --zookeeper 192.168.119.125:2181 --create --topic sumoutput --partitions 1 --replication-factor 1
```

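Requirement: every number written to suminput (for example 4, 5, 3) is added to a running total, and each new total (4, 9, 12) is written to sumoutput.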

Code:

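```java
package kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.KafkaStreams;
import org.apache.kafka.streams.KeyValue;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.Topology;
import org.apache.kafka.streams.kstream.KStream;
import org.apache.kafka.streams.kstream.KTable;

import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class SumStreamDemo {

    public static void main(String[] args) {
        Properties prop = new Properties();
        // Use an application.id different from the word-count app so the two
        // programs do not share a consumer group or internal topics
        prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "sum");
        prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.119.125:9092");
        prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
        // earliest | latest | none
        prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "latest");
        prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        StreamsBuilder builder = new StreamsBuilder();

        // Read from the topic created above: source = [null:4, null:5, null:3]
        KStream<String, String> source = builder.stream("suminput");

        KTable<String, String> sum1 = source
                // Give every record the same key: sum:4, sum:5, sum:3
                .map((key, value) -> new KeyValue<String, String>("sum", value.trim()))
                // Group all records under that key: sum:(4, 5, 3)
                .groupByKey()
                // x is the running total so far, y is the newly arrived value
                .reduce((x, y) -> {
                    int sum = Integer.parseInt(x) + Integer.parseInt(y);
                    System.out.println("x:" + x + " y:" + y + " = " + sum);
                    return String.valueOf(sum);
                });

        // Write each updated total to the output topic created above
        sum1.toStream().to("sumoutput");

        Topology topo = builder.build();
        KafkaStreams streams = new KafkaStreams(topo, prop);

        // Standard boilerplate: close the streams instance cleanly on JVM shutdown (e.g. Ctrl+C)
        CountDownLatch latch = new CountDownLatch(1);
        Runtime.getRuntime().addShutdownHook(new Thread("stream3") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        try {
            streams.start();
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
```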
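The map, groupByKey and reduce chain keeps a single running total under the key "sum". The plain-Java sketch below models, without any Kafka dependency, the value that total takes after each input record. RunningSumModel is a hypothetical helper for illustration, and it assumes every record holds an integer string. It mirrors how Kafka Streams' reduce() behaves: the first value for a key is stored as-is, and the reducer only runs from the second value on, with x as the stored total and y as the new value.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java model of the sum topology (hypothetical helper, no Kafka involved). */
final class RunningSumModel {

    // Returns the value held under key "sum" after each input record,
    // i.e. the updates the KTable would produce before any record caching.
    static List<String> runningTotals(List<String> inputs) {
        List<String> totals = new ArrayList<>();
        String aggregate = null; // nothing stored for key "sum" yet
        for (String value : inputs) {
            if (aggregate == null) {
                // First value for the key: stored as-is, reducer not called
                aggregate = value;
            } else {
                // Later values: reducer(x = current total, y = new value)
                int sum = Integer.parseInt(aggregate) + Integer.parseInt(value);
                aggregate = String.valueOf(sum);
            }
            totals.add(aggregate);
        }
        return totals;
    }

    public static void main(String[] args) {
        System.out.println(runningTotals(Arrays.asList("4", "5", "3"))); // prints [4, 9, 12]
    }
}
```

In the running application, record caching (flushed at each commit, every 3000 ms here) may merge several of these updates, so sumoutput can receive fewer records than this list, but the last one is always the current total.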

wordcount

Create the topics

```shell
kafka-topics.sh --zookeeper 192.168.119.125:2181 --create --topic wordcountin --partitions 1 --replication-factor 1
kafka-topics.sh --zookeeper 192.168.119.125:2181 --create --topic wordcountout --partitions 1 --replication-factor 1
```

Code:

```java
package kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;
import org.apache.kafka.streams.kstream.KStream;
import org.apache.kafka.streams.kstream.KTable;

import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Properties;
import java.util.concurrent.CountDownLatch;

/**
 * Kafka Streams word count: reads lines from wordcountin and writes per-word counts to wordcountout.
 *
 * @author zhouhu
 */
public class WordCountStreamDemo {

    public static void main(String[] args) {
        Properties prop = new Properties();
        prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "wordcount");
        prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.119.125:9092");
        prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
        // earliest | latest | none
        prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        StreamsBuilder builder = new StreamsBuilder();
        KStream<String, String> source = builder.stream("wordcountin");

        KTable<String, Long> count = source
                // null:"hello world" -> [null:hello, null:world]
                .flatMapValues(value -> {
                    String line = value.trim();
                    // Skip blank lines instead of counting an empty "word"
                    if (line.isEmpty()) {
                        return Collections.<String>emptyList();
                    }
                    List<String> words = Arrays.asList(line.split("\\s+"));
                    return words;
                })
                // Make the word the key: hello:1, world:1
                .map((k, v) -> new KeyValue<String, String>(v, "1"))
                // hello:(1, 1), world:(1, 1, 1)
                .groupByKey()
                // hello:2, world:3
                .count();

        // Print every count update to the console
        count.toStream().foreach((key, value) -> System.out.println("key:" + key + " value:" + value));

        // Write "word:count" strings to the output topic
        count.toStream()
                .map((key, value) -> new KeyValue<String, String>(key, key + ":" + value))
                .to("wordcountout");

        Topology topo = builder.build();
        KafkaStreams streams = new KafkaStreams(topo, prop);

        // Close the streams instance cleanly on JVM shutdown
        CountDownLatch latch = new CountDownLatch(1);
        Runtime.getRuntime().addShutdownHook(new Thread("stream3") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        try {
            streams.start();
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
```
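To check the expected counts without a broker, the word-count topology can be modeled in plain Java. The sketch below uses a hypothetical WordCountModel class with no Kafka dependency. It reproduces the split on \\s+, the (word, "1") mapping and the per-key count, but returns only the final count per word; the real KTable also emits intermediate updates such as hello:1 before hello:2, subject to record caching. It trims each line first, because String.split("\\s+") on a line that starts with whitespace returns a leading empty string that would otherwise be counted as a word.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java model of the word-count topology (hypothetical helper, no Kafka involved). */
final class WordCountModel {

    // flatMapValues step: split a line on runs of whitespace,
    // dropping the empty token a leading space or a blank line would produce
    static List<String> words(String line) {
        String trimmed = line.trim();
        if (trimmed.isEmpty()) {
            return new ArrayList<>();
        }
        return Arrays.asList(trimmed.split("\\s+"));
    }

    // map + groupByKey + count steps: final count per word, in first-seen order
    static Map<String, Long> count(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            for (String word : words(line)) {
                counts.merge(word, 1L, Long::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Two records, as if produced to wordcountin
        List<String> lines = Arrays.asList("hello world", "hello kafka  world world");
        System.out.println(count(lines)); // prints {hello=2, world=3, kafka=1}
    }
}
```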
