TITLE: Progressive Growing of GANs for Improved Quality, Stability, and Variation

AUTHOR: Tero Karras, Timo Aila, Samuli Laine, Jaakko Lehtinen

ASSOCIATION: NVIDIA

FROM: ICLR2018

CONTRIBUTION

A training methodology is proposed for GANs that starts with low-resolution images and then progressively increases the resolution by adding layers to the networks. This incremental approach allows training to first discover the large-scale structure of the image distribution and then shift attention to increasingly finer-scale detail, instead of having to learn all scales simultaneously.

METHOD

PROGRESSIVE GROWING OF GANS

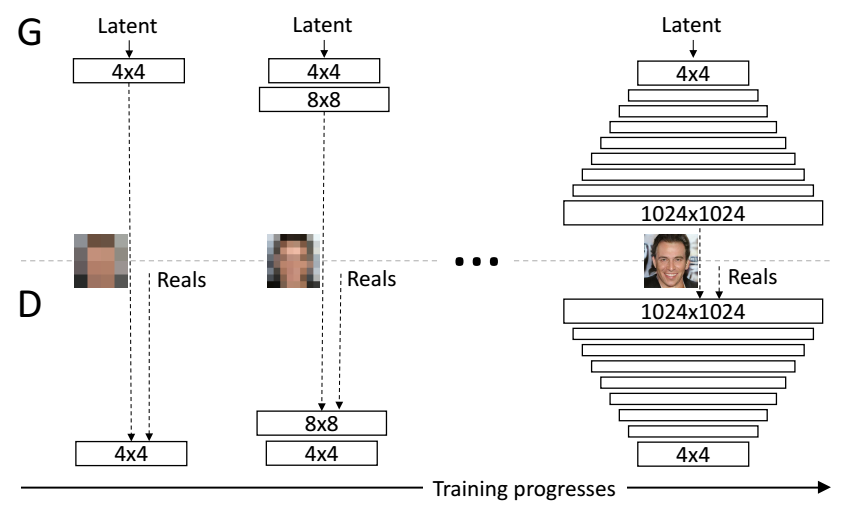

The following figure illustrates the training procedure of this work.

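The training starts with both the generator G and the discriminator D at a low spatial resolution. As training advances, layers are added to G and D incrementally, increasing the spatial resolution of the generated images.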
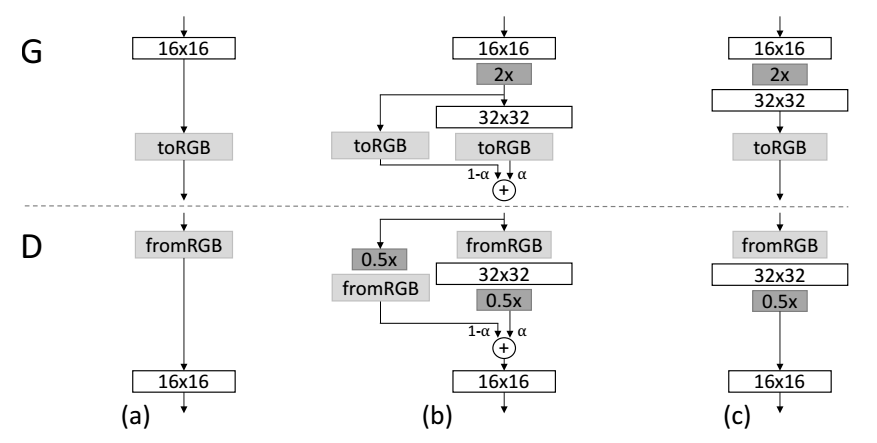

A fade-in is adopted when new layers are added to double the resolution of the generator G and the discriminator D, so that the new layers are introduced smoothly.
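A minimal NumPy sketch of how such a fade-in could be blended on the generator side. It assumes the transition is a linear interpolation controlled by a weight `alpha` that ramps from 0 to 1 during the transition; the names `upsample_nearest_2x`, `fade_in`, and `alpha`, as well as the (batch, channels, height, width) layout, are illustrative choices rather than details stated in these notes.

```python
import numpy as np


def upsample_nearest_2x(x):
    """Double height and width of an (N, C, H, W) array by repeating pixels."""
    return x.repeat(2, axis=2).repeat(2, axis=3)


def fade_in(low_res_rgb, high_res_rgb, alpha):
    """Blend the upsampled output of the old layers with the new layers' output.

    alpha = 0 uses only the old (upsampled) path,
    alpha = 1 uses only the newly added higher-resolution path.
    """
    alpha = float(np.clip(alpha, 0.0, 1.0))
    return (1.0 - alpha) * upsample_nearest_2x(low_res_rgb) + alpha * high_res_rgb


# Toy example: a 4x4 output being faded into an 8x8 output.
low = np.ones((2, 3, 4, 4))    # stand-in for the old, lower-resolution image
high = np.zeros((2, 3, 8, 8))  # stand-in for the new, higher-resolution image
blended = fade_in(low, high, alpha=0.25)
```

With `alpha` near 0 the networks behave almost as they did before the new layers were added, which is what keeps the transition from disturbing the already trained lower-resolution layers.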

INCREASING VARIATION USING MINIBATCH STANDARD DEVIATION

- Compute the standard deviation for each feature in each spatial location over the minibatch.

- Average these estimates over all features and spatial locations to arrive at a single value.

- Construct one additional (constant) feature map by replicating this value, and concatenate it to all spatial locations and over the minibatch.
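The three steps above can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' code: the function name `minibatch_stddev`, the (batch, channels, height, width) layout, and the use of NumPy's default population standard deviation are choices made here.

```python
import numpy as np


def minibatch_stddev(x):
    """Append one constant feature map holding the averaged minibatch std.

    x has shape (N, C, H, W); the result has shape (N, C + 1, H, W).
    """
    # Step 1: std of each feature at each spatial location, over the minibatch.
    std = x.std(axis=0)  # shape (C, H, W)
    # Step 2: average over all features and spatial locations -> single value.
    mean_std = std.mean()
    # Step 3: replicate the value into one extra feature map and concatenate.
    n, _, h, w = x.shape
    extra = np.full((n, 1, h, w), mean_std, dtype=x.dtype)
    return np.concatenate([x, extra], axis=1)


rng = np.random.default_rng(0)
features = rng.normal(size=(4, 8, 4, 4))
out = minibatch_stddev(features)
```

If every sample in the minibatch were identical, the extra feature map would be all zeros, which gives the discriminator a direct signal about how much variation a batch contains.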

NORMALIZATION IN GENERATOR AND DISCRIMINATOR

EQUALIZED LEARNING RATE. A trivial $\mathcal{N}(0, 1)$ initialization is used, and the weights are then explicitly scaled at runtime. To be precise, $\hat{w}_i = w_i / c$, where $w_i$ are the weights and $c$ is the per-layer normalization constant from He’s initializer. The benefit of doing this dynamically instead of during initialization is somewhat subtle, and relates to the scale-invariance in commonly used adaptive stochastic gradient descent methods.
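A small NumPy sketch of runtime weight scaling for a fully connected layer. It assumes the scaling amounts to multiplying the stored N(0, 1) weights by the He standard deviation sqrt(2 / fan_in), which corresponds to dividing by c = sqrt(fan_in / 2); this reading of c, and the class name `EqualizedLinear`, are assumptions made for illustration.

```python
import numpy as np


class EqualizedLinear:
    """Fully connected layer whose weights are rescaled on every forward pass."""

    def __init__(self, in_features, out_features, rng):
        # Stored parameters use a plain N(0, 1) initialization.
        self.weight = rng.standard_normal((out_features, in_features))
        self.bias = np.zeros(out_features)
        # Assumed per-layer constant: the He standard deviation sqrt(2 / fan_in).
        self.scale = np.sqrt(2.0 / in_features)

    def __call__(self, x):
        # The scaling is applied at runtime, not baked into the stored weights.
        return x @ (self.weight * self.scale).T + self.bias


rng = np.random.default_rng(0)
layer = EqualizedLinear(512, 256, rng)
y = layer(rng.standard_normal((16, 512)))
```

Because the stored weights of every layer share the same unit scale, an adaptive optimizer that normalizes updates per parameter moves all of them at a comparable relative speed, while the effective weights still have the He-style magnitude.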

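PIXELWISE FEATURE VECTOR NORMALIZATION IN GENERATOR. To disallow the scenario where the magnitudes in the generator and discriminator spiral out of control as a result of competition, the feature vector in each pixel is normalized to unit length in the generator after each convolutional layer, using a variant of “local response normalization”, configured as

$$b_{x,y} = \frac{a_{x,y}}{\sqrt{\frac{1}{N} \sum_{j=0}^{N-1} \left(a_{x,y}^{j}\right)^{2} + \epsilon}}$$

where $\epsilon = 10^{-8}$, $N$ is the number of feature maps, and $a_{x,y}$ and $b_{x,y}$ are the original and normalized feature vectors in pixel $(x, y)$, respectively.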
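A minimal NumPy sketch of this pixelwise normalization, assuming it divides the feature vector at each pixel by the square root of the mean of its N squared features plus ϵ. The function name `pixel_norm` and the (batch, channels, height, width) layout are illustrative assumptions.

```python
import numpy as np


def pixel_norm(a, eps=1e-8):
    """Normalize the feature vector at every pixel of an (N, C, H, W) array."""
    # Mean of squared features over the channel axis, separately per pixel.
    mean_sq = np.mean(a ** 2, axis=1, keepdims=True)
    return a / np.sqrt(mean_sq + eps)


rng = np.random.default_rng(0)
a = rng.normal(size=(2, 16, 4, 4))
b = pixel_norm(a)
```

After the call, the mean squared feature value at every pixel of `b` is approximately 1, regardless of how large the activations in `a` were.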
