IV. Linear Regression with Multiple Variables (Week 2) Programming Exercise 1

1) Warm up exercise [ warmUpExercise.m ]

2) Computing Cost (for one variable) [ computeCost.m ]

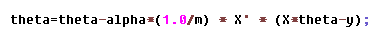

3) Gradient Descent (for one variable) [ gradientDescent.m ]

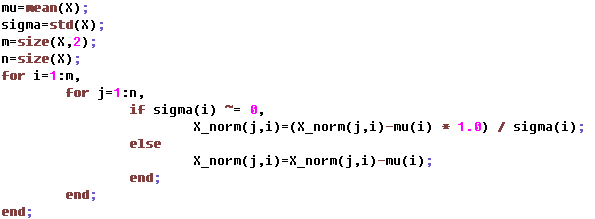

4) Feature Normalization [ featureNormalize.m ]

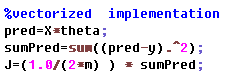
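A minimal sketch of feature normalization, assuming made-up data and using `bsxfun` so that it also runs on older Octave versions without automatic broadcasting. Each column is shifted to zero mean and scaled to unit standard deviation; this is an illustration, not necessarily the code shown in the image above.

```octave
% Made-up rows: [house size, number of bedrooms]
X = [2104 3; 1600 3; 2400 4; 1416 2];

mu = mean(X);      % 1 x n row vector of column means
sigma = std(X);    % 1 x n row vector of column standard deviations

% Subtract the mean and divide by the standard deviation, column by column
X_norm = bsxfun(@rdivide, bsxfun(@minus, X, mu), sigma);
```

`mu` and `sigma` have to be kept, because any new example must be normalized with the same values before prediction.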

5) Computing Cost (for multiple variables) [ computeCostMulti.m ]

6) Gradient Descent (for multiple variables) [ gradientDescentMulti.m ]

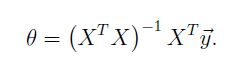
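Items 5 and 6 can be sketched together in vectorized form. The data, learning rate `alpha`, and iteration count `num_iters` below are assumed values chosen only for illustration.

```octave
% Made-up data: y = 1 + 2*x, with the intercept column already added
X = [ones(4, 1), [1; 2; 3; 4]];
y = [3; 5; 7; 9];
m = length(y);

alpha = 0.1;        % learning rate (assumed)
num_iters = 2000;   % number of iterations (assumed)
theta = zeros(size(X, 2), 1);
J_history = zeros(num_iters, 1);

for iter = 1:num_iters
  err = X * theta - y;                          % m x 1 residuals
  theta = theta - (alpha / m) * (X' * err);     % simultaneous update of every theta_j
  err = X * theta - y;
  J_history(iter) = (err' * err) / (2 * m);     % J(theta) = 1/(2m) * sum(err.^2)
end
```

The same expressions work for one feature or many, since nothing in them depends on the number of columns in `X`.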

7) Normal Equations [ normalEqn.m ]
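The normal equation gives the parameters in closed form, theta = (X'X)^(-1) X'y. A minimal sketch on made-up data; `pinv` is used here so that a singular `X' * X` still yields a result.

```octave
% Made-up data: y = 1 + 2*x exactly
x = [1; 2; 3; 4];
y = 1 + 2 * x;
X = [ones(size(x, 1), 1), x];     % prepend the intercept column

theta = pinv(X' * X) * X' * y;    % closed-form least-squares solution
```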

VII. Regularization (Week 3) Programming Exercise 2

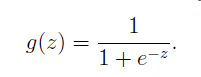

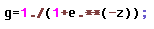

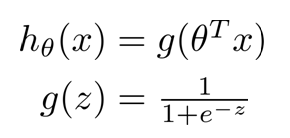

1) Sigmoid Function [ sigmoid.m ]

Octave expression:

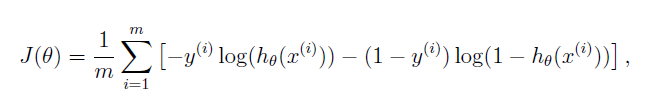

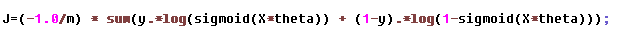

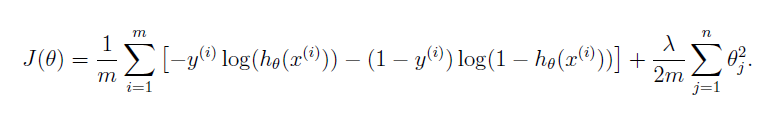
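As a sketch, the sigmoid g(z) = 1 / (1 + e^(-z)) can be written as an element-wise anonymous function, so it accepts scalars, vectors, and matrices alike. The input values are made up.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));   % ./ keeps the operation element-wise

z = [-10 0 10; 1 2 3];
g = sigmoid(z);                       % same size as z
```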

2) Logistic Regression Cost [ costFunction.m ]

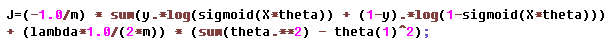

Vectorized implementation:

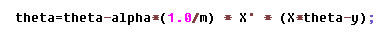

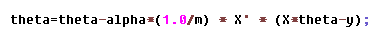

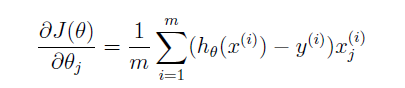

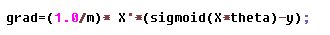

3) Logistic Regression Gradient [ costFunction.m ]

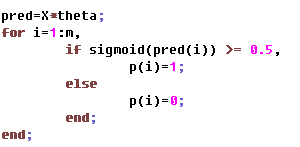

4) Predict [ predict.m ]
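A minimal sketch of prediction, assuming made-up parameters: an example is labeled 1 when the hypothesis is at least 0.5, and 0 otherwise.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

theta = [-3; 1; 1];              % made-up parameters
X = [1 1 1; 1 2 2; 1 0 3];       % intercept column already added

p = double(sigmoid(X * theta) >= 0.5);   % threshold the probabilities at 0.5
```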

5) Regularized Logistic Regression Cost [ costFunctionReg.m ]

The regularization term does not include theta_0, which is theta(1) in Octave because Octave indexes from 1.

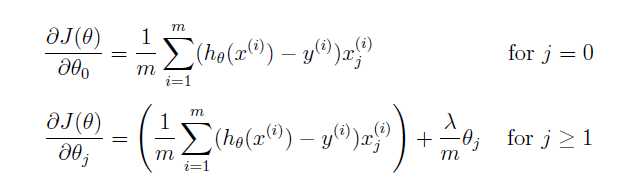

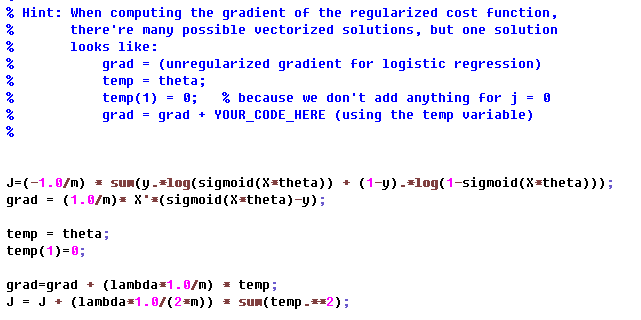

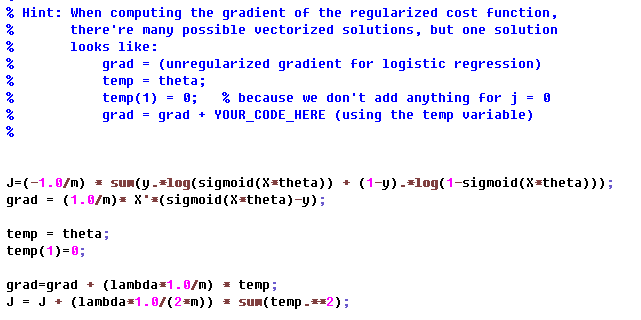

6) Regularized Logistic Regression Gradient [ costFunctionReg.m ]

The second line of the code resets grad(1), because theta_0 is not regularized.

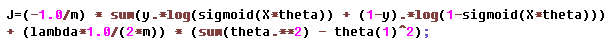

Items 5 and 6 can also be expressed as follows:
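One possible combined form, sketched on made-up data: copy `theta`, zero its first entry, and use that copy in both regularization terms. This is an illustration under those assumptions, not necessarily the author's original code.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Made-up data; the first column of X is the intercept term
X = [1 0.5 1.2; 1 -1.0 0.3; 1 2.0 -0.7; 1 0.1 0.1];
y = [1; 0; 1; 0];
theta = [0.1; -0.2; 0.3];
lambda = 1;
m = length(y);

h = sigmoid(X * theta);
reg_theta = [0; theta(2:end)];    % theta(1) is theta_0 and is not penalized

J = (-y' * log(h) - (1 - y)' * log(1 - h)) / m ...
    + (lambda / (2 * m)) * (reg_theta' * reg_theta);
grad = (X' * (h - y)) / m + (lambda / m) * reg_theta;
```

Because `reg_theta(1)` is zero, `grad(1)` needs no separate reset.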

VIII. Neural Networks: Representation (Week 4) Programming Exercise 3

1) Vectorized Logistic Regression [ lrCostFunction.m ]

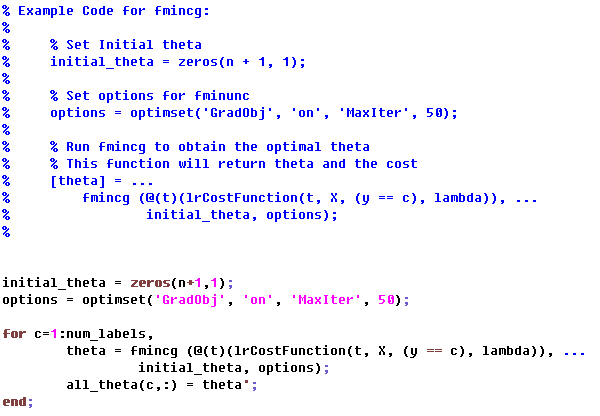

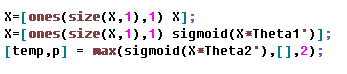

2) One-vs-all classifier training [ oneVsAll.m ]

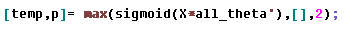

3) One-vs-all classifier prediction [ predictOneVsAll.m ]
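A sketch of one-vs-all prediction, assuming a made-up `all_theta` whose row k holds the parameters of the classifier for class k. Each example gets the label of the classifier with the highest output.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Made-up parameters for 3 classes (one row per classifier)
all_theta = [ 1 -2  0;
             -1  2  0;
              0  0  3];
X = [1 1 0; 1 -1 0; 1 0 2];     % intercept column already added

% The column index of the row-wise maximum is the predicted class
[~, p] = max(sigmoid(X * all_theta'), [], 2);
```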

4) Neural network prediction function [ predict.m ]

IX. Neural Networks: Learning (Week 5) Programming Exercise 4

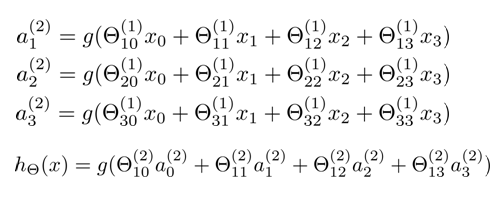

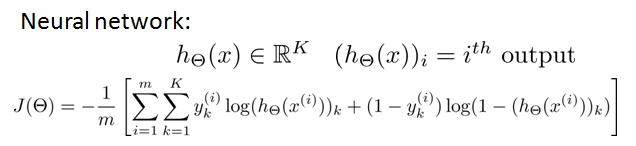

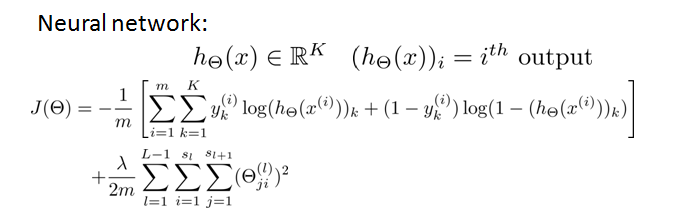

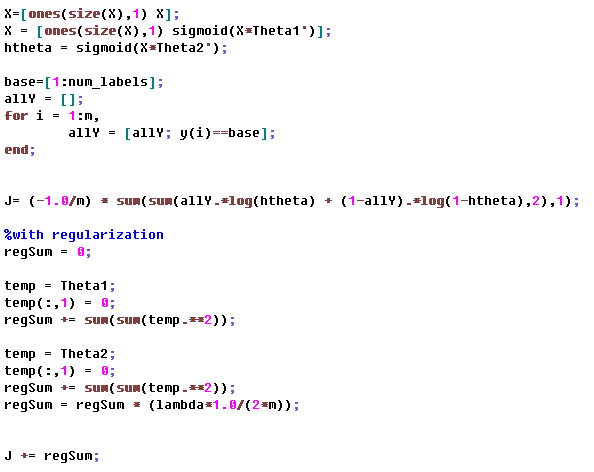

1) Feedforward and Cost Function [ nnCostFunction.m ]

h_Theta(x) is a K-dimensional vector, with one entry per output class.

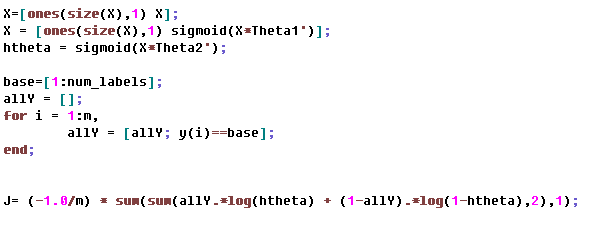
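Since h_Theta(x) has K entries, each label has to be recoded as a K-dimensional one-hot vector before the cost is summed. A minimal sketch with a made-up three-layer network (2 inputs, 2 hidden units, K = 3); all weights and data are assumed values.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

Theta1 = [0.1 0.2 -0.1; -0.2 0.1 0.3];                  % 2 x (2 + 1)
Theta2 = [0.1 -0.1 0.2; 0.3 0.2 -0.2; -0.1 0.1 0.1];    % 3 x (2 + 1)
X = [1 2; 0 1; -1 0; 2 -1];
y = [1; 2; 3; 2];
m = size(X, 1);
K = size(Theta2, 1);

% Feedforward
a1 = [ones(m, 1), X];
a2 = [ones(m, 1), sigmoid(a1 * Theta1')];
h  = sigmoid(a2 * Theta2');       % m x K, row i is h_Theta(x^(i))

% Recode labels: row i of Y is the one-hot vector for y(i)
eyeK = eye(K);
Y = eyeK(y, :);

% Unregularized cost, summed over examples and classes
J = sum(sum(-Y .* log(h) - (1 - Y) .* log(1 - h))) / m;
```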

2) Regularized Cost Function [ nnCostFunction.m ]

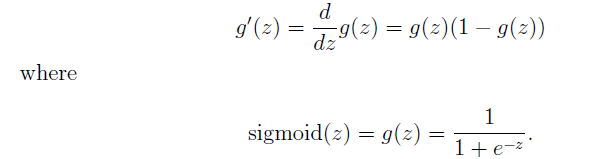

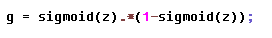

3) Sigmoid Gradient [ sigmoidGradient.m ]
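The derivative of the sigmoid is g'(z) = g(z)(1 - g(z)). A short element-wise sketch:

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));
sigmoidGradient = @(z) sigmoid(z) .* (1 - sigmoid(z));

g = sigmoidGradient([-1 0 1]);   % largest at z = 0, symmetric around it
```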

4) Neural Network Gradient (Backpropagation) [ nnCostFunction.m ]

This part took a lot of time.

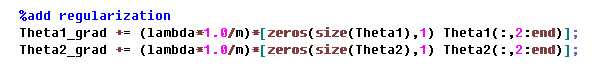

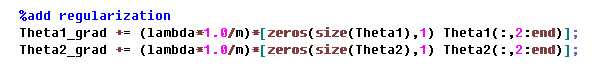
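A vectorized sketch of backpropagation for a three-layer network, without regularization. The network size, weights, and data are made up; processing all examples at once replaces the per-example loop, which is one way to cut the running time.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));
sigmoidGradient = @(z) sigmoid(z) .* (1 - sigmoid(z));

% Made-up network: 2 inputs, 2 hidden units, K = 3 classes
Theta1 = [0.1 0.2 -0.1; -0.2 0.1 0.3];
Theta2 = [0.1 -0.1 0.2; 0.3 0.2 -0.2; -0.1 0.1 0.1];
X = [1 2; 0 1; -1 0; 2 -1];
y = [1; 2; 3; 2];
m = size(X, 1);
eyeK = eye(size(Theta2, 1));
Y = eyeK(y, :);                   % one-hot labels, m x K

% Forward pass
a1 = [ones(m, 1), X];
z2 = a1 * Theta1';
a2 = [ones(m, 1), sigmoid(z2)];
a3 = sigmoid(a2 * Theta2');

% Backward pass
d3 = a3 - Y;                                            % output-layer error, m x K
d2 = (d3 * Theta2(:, 2:end)) .* sigmoidGradient(z2);    % hidden-layer error, bias column dropped

% Accumulated gradients, averaged over the m examples
Theta1_grad = (d2' * a1) / m;
Theta2_grad = (d3' * a2) / m;
```

Comparing a few entries against a finite-difference estimate of the cost is a cheap way to confirm the result.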

5) Regularized Gradient [ nnCostFunction.m ]

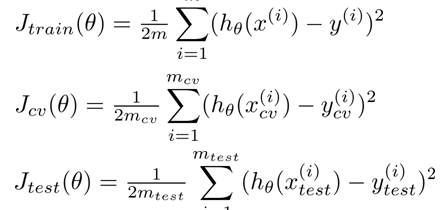

X. Advice for Applying Machine Learning (Week 6) Programming Exercise 5

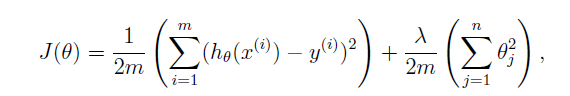

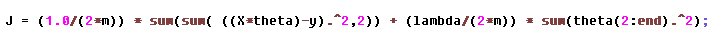

1) Regularized Linear Regression Cost Function [ linearRegCostFunction.m ]

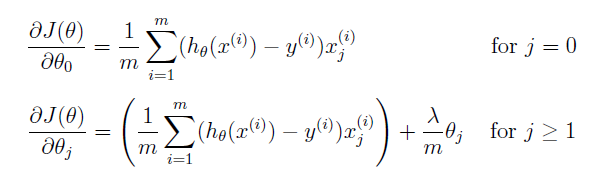

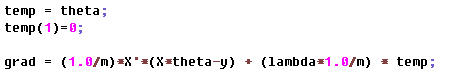

2) Regularized Linear Regression Gradient [ linearRegCostFunction.m ]

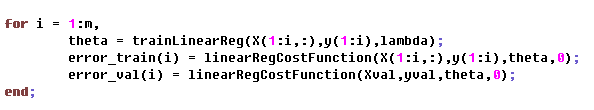

3) Learning Curve [ learningCurve.m ]

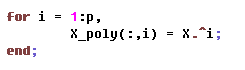

4) Polynomial Feature Mapping [ polyFeatures.m ]

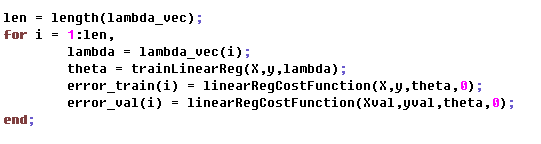

5) Validation Curve [ validationCurve.m ]

The training and cross-validation errors are computed without the regularization term (lambda = 0).
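A sketch of the validation-curve loop on made-up data. As an assumption, a closed-form regularized least-squares fit stands in for the course's training routine, so the block is self-contained; only the fit uses `lambda`, while both errors are measured without it.

```octave
% Made-up data; intercept column already added
X    = [ones(5, 1), [1; 2; 3; 4; 5]];
y    = [1.1; 1.9; 3.2; 3.9; 5.1];
Xval = [ones(3, 1), [1.5; 2.5; 3.5]];
yval = [1.4; 2.6; 3.4];

lambda_vec = [0 0.01 0.1 1 10];
error_train = zeros(length(lambda_vec), 1);
error_val   = zeros(length(lambda_vec), 1);

L = eye(size(X, 2));
L(1, 1) = 0;                      % the intercept is not penalized

for i = 1:length(lambda_vec)
  lambda = lambda_vec(i);
  % Stand-in trainer (assumption): closed-form regularized least squares
  theta = (X' * X + lambda * L) \ (X' * y);
  % Errors are plain squared errors, with no regularization term
  error_train(i) = sum((X * theta - y) .^ 2) / (2 * size(X, 1));
  error_val(i)   = sum((Xval * theta - yval) .^ 2) / (2 * size(Xval, 1));
end
```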

XII. Support Vector Machines (Week 7) Programming Exercise 6

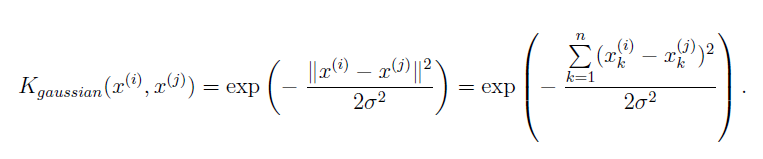

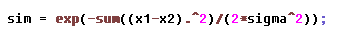

1) Gaussian Kernel [ gaussianKernel.m ]

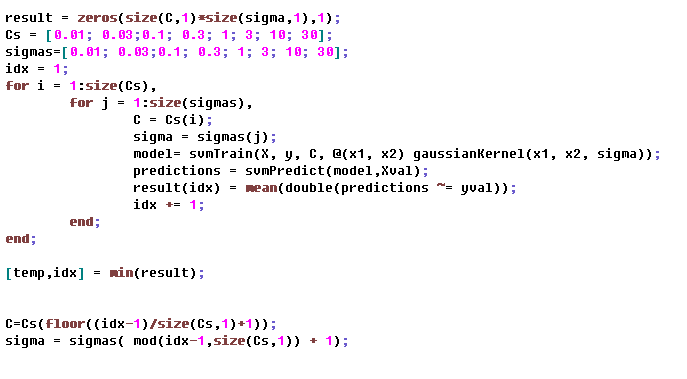
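A minimal sketch of the Gaussian kernel, K(x1, x2) = exp(-||x1 - x2||^2 / (2 sigma^2)). The `(:)` forces both inputs to column vectors, so row and column inputs behave the same; the sample values are made up.

```octave
gaussianKernel = @(x1, x2, sigma) exp(-sum((x1(:) - x2(:)) .^ 2) / (2 * sigma ^ 2));

sim = gaussianKernel([1 2 1], [0 4 -1], 2);   % squared distance 9, so exp(-9/8)
```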

2) Parameters (C, sigma) for Dataset 3 [ dataset3Params.m ]

Train the model on the training set for every (C, sigma) pair, then compute the cross-validation error of each trained model on the cross-validation set. Choose the pair with the lowest cross-validation error.

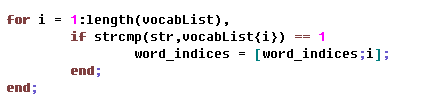

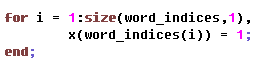
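The selection loop described above can be sketched as a grid search. `cvError` is a hypothetical stand-in: in the exercise it would train an SVM on the training set with the given pair and return the misclassification rate on the cross-validation set. Here it is a made-up smooth function so the block runs by itself. The candidate values are also an assumption.

```octave
% Hypothetical stand-in for "train on the training set, evaluate on the CV set"
cvError = @(C, sigma) log10(C) ^ 2 + (log10(sigma) + 1) ^ 2;

candidates = [0.01 0.03 0.1 0.3 1 3 10 30];   % assumed search grid

best_err = Inf;
for C = candidates
  for sigma = candidates
    err = cvError(C, sigma);
    if err < best_err          % keep the pair with the lowest CV error so far
      best_err = err;
      best_C = C;
      best_sigma = sigma;
    end
  end
end
```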

3) Email Preprocessing [ processEmail.m ]

4) Email Feature Extraction [ emailFeatures.m ]

XIV. Dimensionality Reduction (Week 8) Programming Exercise 7

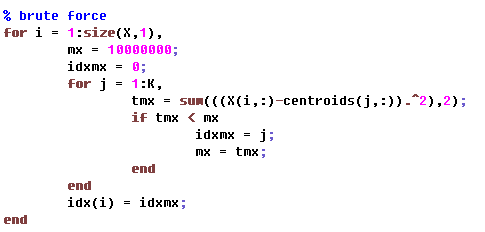

1) Find Closest Centroids (k-Means) [ findClosestCentroids.m ]

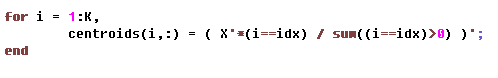
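A sketch of the assignment step of k-means on made-up points: compute the squared distance from every point to every centroid, then take the index of the smallest one per row.

```octave
X = [1 1; 1.2 0.8; 5 5; 5.1 4.9; 9 1];   % made-up points
centroids = [1 1; 5 5; 9 0];             % made-up centroids, K = 3
K = size(centroids, 1);
m = size(X, 1);

dist2 = zeros(m, K);
for k = 1:K
  delta = bsxfun(@minus, X, centroids(k, :));   % offset of every point from centroid k
  dist2(:, k) = sum(delta .^ 2, 2);             % squared Euclidean distance
end

[~, idx] = min(dist2, [], 2);    % idx(i) is the closest centroid to point i
```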

2) Compute Centroid Means (k-Means) [ computeCentroids.m ]

Vectorized implementation:

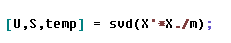

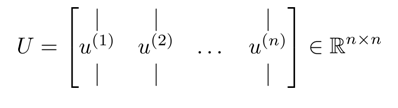
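One way to vectorize the centroid update, sketched on made-up data: build a K x m indicator matrix of memberships and multiply it by `X`. It assumes every centroid has at least one assigned point, otherwise the division yields NaN.

```octave
X = [1 1; 1.2 0.8; 5 5; 5.1 4.9; 9 1];   % made-up points
idx = [1; 1; 2; 2; 3];                   % made-up assignments
K = 3;

% member(k, i) is 1 when point i is assigned to centroid k
member = double(bsxfun(@eq, (1:K)', idx'));    % K x m

% Row k of member * X is the sum of the points in cluster k
centroids = bsxfun(@rdivide, member * X, sum(member, 2));
```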

3) PCA [ pca.m ]

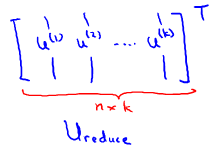
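A sketch of PCA on made-up data: center the data, form the covariance matrix Sigma = (1/m) X'X, and take its singular value decomposition. The columns of `U` are the principal directions and the diagonal of `S` holds the variances along them.

```octave
X = [2 1; 3 2; 4 3; 5 4.5; 6 5];             % made-up data
X_norm = bsxfun(@minus, X, mean(X));         % center every column
m = size(X_norm, 1);

Sigma = (X_norm' * X_norm) / m;              % n x n covariance matrix
[U, S, V] = svd(Sigma);
```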

4) Project Data (PCA) [ projectData.m ]

Vectorized implementation:

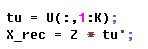

5) Recover Data (PCA) [ recoverData.m ]
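Projection and recovery can both be written as a single matrix product. A sketch on made-up data, with `K = 1` as an assumed target dimension: project with Z = X * U(:, 1:K), then recover an approximation with Z * U(:, 1:K)'.

```octave
X = [2 1; 3 2; 4 3; 5 4.5; 6 5];             % made-up data
X_norm = bsxfun(@minus, X, mean(X));         % center every column
m = size(X_norm, 1);
[U, S, V] = svd((X_norm' * X_norm) / m);

K = 1;                                       % assumed number of components to keep
U_reduce = U(:, 1:K);

Z = X_norm * U_reduce;                       % m x K projected data
X_rec = Z * U_reduce';                       % m x n approximate reconstruction
```

With `K` equal to the number of features, the recovery is exact up to rounding.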

XVI. Recommender Systems (Week 9) Programming Exercise 8

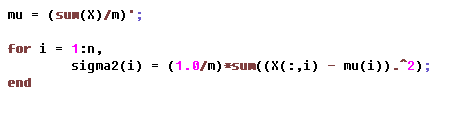

1) Estimate Gaussian Parameters [ estimateGaussian.m ]

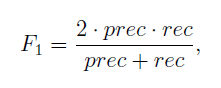

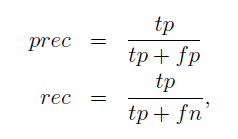

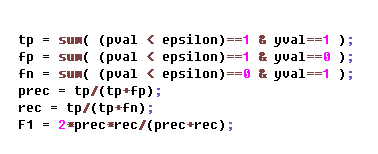
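A sketch of the Gaussian parameter estimates on made-up data. Note that the variance divides by m rather than m - 1, so it matches `var(X, 1)` and not the default `var(X)`.

```octave
X = [1 10; 2 12; 3 14; 4 16];    % made-up data, one example per row
m = size(X, 1);

mu = mean(X)';                                        % n x 1 feature means
sigma2 = (sum(bsxfun(@minus, X, mu') .^ 2) / m)';     % n x 1 variances, divided by m
```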

2) Select Threshold [ selectThreshold.m ]

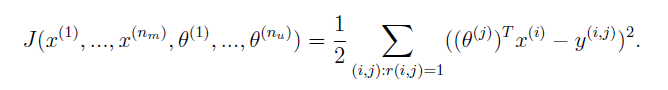

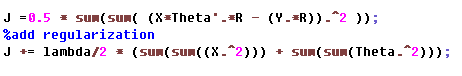
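A sketch of threshold selection by F1 score on made-up validation data. An example is flagged as an anomaly when its density `pval` is below `epsilon`. Skipping thresholds with no true positives is a choice made here to avoid dividing by zero.

```octave
pval = [0.9; 0.8; 0.05; 0.7; 0.01; 0.6];    % made-up densities p(x)
yval = [0; 0; 1; 0; 1; 0];                  % 1 marks a true anomaly

bestEpsilon = 0;
bestF1 = 0;
stepsize = (max(pval) - min(pval)) / 1000;

for epsilon = min(pval):stepsize:max(pval)
  pred = pval < epsilon;                     % predicted anomalies
  tp = sum((pred == 1) & (yval == 1));
  fp = sum((pred == 1) & (yval == 0));
  fn = sum((pred == 0) & (yval == 1));
  if tp == 0
    continue;                                % F1 is zero or undefined here
  end
  prec = tp / (tp + fp);
  rec  = tp / (tp + fn);
  F1 = 2 * prec * rec / (prec + rec);
  if F1 > bestF1
    bestF1 = F1;
    bestEpsilon = epsilon;
  end
end
```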

3) Collaborative Filtering Cost [ cofiCostFunc.m ]

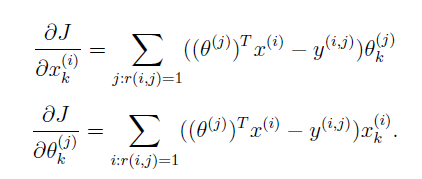

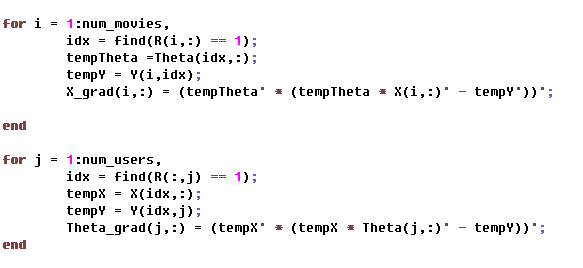

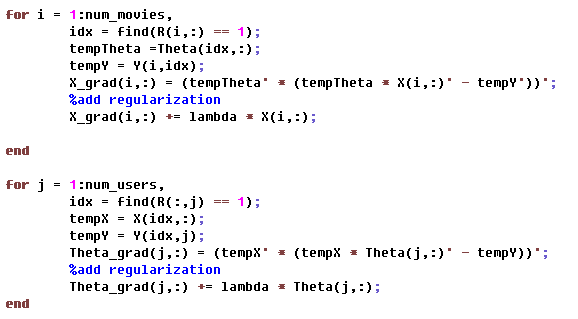

4) Collaborative Filtering Gradient [ cofiCostFunc.m ]

5) Regularized Cost [ cofiCostFunc.m ]

6) Regularized Gradient [ cofiCostFunc.m ]
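Items 3 to 6 can be sketched in one vectorized block. The ratings, features, and `lambda` are made up; `R(i, j)` is 1 when user j rated movie i, and multiplying the error by `R` removes unrated entries from both the cost and the gradients.

```octave
% Made-up data: 3 movies, 2 users, 2 features
Y = [5 0; 4 1; 0 5];                   % ratings
R = [1 0; 1 1; 0 1];                   % rated-entry indicator
X = [1.0 0.5; 0.8 0.1; 0.2 1.0];       % movie features
Theta = [4.0 0.5; 0.5 4.5];            % user parameters
lambda = 1.5;

E = (X * Theta' - Y) .* R;             % prediction error on rated entries only

% Regularized cost; set lambda = 0 for the unregularized version
J = sum(sum(E .^ 2)) / 2 ...
    + (lambda / 2) * (sum(sum(Theta .^ 2)) + sum(sum(X .^ 2)));

% Regularized gradients
X_grad = E * Theta + lambda * X;
Theta_grad = E' * X + lambda * Theta;
```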
