http://qnx.com/developers/docs/7.1/index.html#com.qnx.doc.hypervisor.nav/topic/bookset.html

Architecture

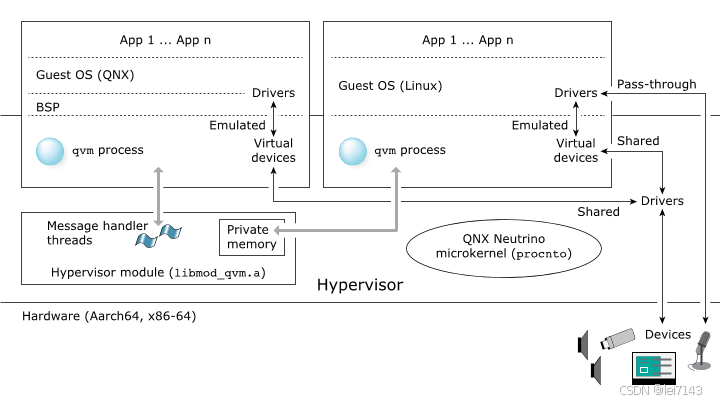

A QNX hypervisor comprises the hypervisor microkernel, virtualization extensions, and one or more instances of the qvm process.

In short: a QNX hypervisor consists of the microkernel, the virtualization extensions, and one or more qvm instances.

In fact, a guest doesn't actually run in a VM. The hypervisor isn't an intermediary that translates the guest's instructions for the CPU. The VM defines virtual hardware (see “Virtual devices”) and presents it and pass-through hardware (see “Pass-through devices”) to the guest, which doesn't need to know it is running “in” a VM rather than in an environment defined directly by the hardware.

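The guest does not actually run in the VM, and the hypervisor is not an intermediary that translates the guest's CPU instructions. The VM defines virtual devices and presents them, together with pass-through devices, to the guest, so the guest does not need to care whether it is running in a virtual environment or on real hardware.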

That is, when a guest is running, its instructions execute on a physical CPU, just as if the guest were running without a hypervisor. Only when the guest attempts to execute an instruction that it is not permitted to execute or when it accesses guest memory that the hypervisor is monitoring does the virtualization hardware trap the attempt and force the guest to exit.

On the trap, the hardware notifies the hypervisor, which saves the guest's context (guest exit) and completes the task the guest had begun but was unable to complete for itself. On completion of the task, the hypervisor restores the guest's context and hands execution back to the guest (guest entrance).

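While the guest is running, its instructions execute directly on the physical CPU, as if there were no hypervisor. When the guest tries to execute an instruction it is not permitted to execute, or accesses guest memory that the hypervisor is monitoring, the virtualization hardware traps the attempt and forces the guest to exit. On the trap, the hardware notifies the hypervisor, which saves the guest's context and completes the task that the guest could not finish itself. Once that is done, the hypervisor restores the guest's context and lets the guest resume.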
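The trap-and-emulate cycle described above can be sketched as a toy model. This is only an illustration in Python, not QNX code: `GuestContext`, `run_guest`, and the instruction names are hypothetical, and a real hypervisor relies on the CPU virtualization extensions to detect the trap rather than on a software loop.

```python
from dataclasses import dataclass, field

# Hypothetical toy model: instructions are plain strings, and only the
# "privileged" ones below cause a guest exit.
PRIVILEGED = {"write_ctrl_reg", "access_monitored_mem"}


@dataclass
class GuestContext:
    pc: int = 0                               # index of the next guest instruction
    log: list = field(default_factory=list)   # trace of what happened


def run_guest(program, ctx):
    """Run `program` and return the number of guest exits that occurred."""
    exits = 0
    while ctx.pc < len(program):
        insn = program[ctx.pc]
        if insn in PRIVILEGED:
            # Guest exit: the hardware traps and the hypervisor saves the context,
            saved_pc = ctx.pc
            exits += 1
            # completes the task on the guest's behalf,
            ctx.log.append(f"hypervisor emulated {insn}")
            # then restores the context (guest entrance).
            ctx.pc = saved_pc
        else:
            # Ordinary instructions execute directly on the physical CPU.
            ctx.log.append(f"guest executed {insn}")
        ctx.pc += 1
    return exits
```

Running `run_guest(["add", "write_ctrl_reg", "load"], GuestContext())` reports a single guest exit: only the privileged instruction involves the hypervisor.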

For example, the figure below shows the single-core case.

Virtual machines

A running hypervisor comprises the hypervisor microkernel and its virtualization module, and one or more instances of the virtual machine process (qvm).

In a QNX hypervisor environment, a VM is implemented in a qvm process instance. The qvm process is an OS process that runs in the hypervisor host, outside the kernel. Each instance has an identifier marking it so that the microkernel knows that it is a qvm process.

In a hypervisor environment, a VM is implemented in a qvm process instance. The qvm process is an OS process that runs in the hypervisor host, outside the kernel.

If you remember anything about VMs, remember that from the point of view of a guest OS, the VM hosting that guest is hardware. This means that, just as an OS running on a physical board expects certain hardware characteristics (architecture, board-specifics, memory and CPUs, devices, etc.), an OS running in a VM expects those characteristics: the VM in which a guest will run must match the guest's expectations.

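From the point of view of the guest OS, the VM hosting it is hardware. Just as an OS running on a physical board expects certain hardware characteristics (architecture, board specifics, memory and CPUs, devices, etc.), an OS running in a VM expects those characteristics too: the VM in which the guest runs must match the guest's expectations.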

When you configure a VM, you are assembling a hardware platform. The difference is that instead of assembling physical memory cards, CPUs, etc., you specify the virtual components of your machine, which a qvm process will create and configure according to your specifications.

The rules about where things appear are the same as for a real board:

- Don't install two things that try to respond to the same physical address.

- The environment your VM configuration assembles must be one that the software you will run (the guest OS) is prepared to deal with.
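The first rule can be checked mechanically before a VM is started. The following is a minimal sketch, assuming the VM layout is available as a dictionary of guest-physical `(base, size)` ranges; `find_overlaps` and the region names are hypothetical and not part of any QNX tool.

```python
def find_overlaps(regions):
    """Return pairs of region names whose address ranges overlap.

    `regions` maps a name to (base, size) in guest-physical address space.
    """
    items = sorted(regions.items(), key=lambda kv: kv[1][0])
    overlaps = []
    for i, (name_a, (base_a, size_a)) in enumerate(items):
        for name_b, (base_b, _size_b) in items[i + 1:]:
            if base_b < base_a + size_a:
                overlaps.append((name_a, name_b))
            else:
                # Sorted by base, so no later region can overlap name_a.
                break
    return overlaps
```

For instance, a vdev placed at `0x8FFFF000` would collide with 256 MB of RAM that starts at `0x80000000`, and the function reports that pair.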

Once the VM is configured, the qvm process creates the corresponding components according to that configuration.

qvm services

Each qvm process instance provides key hypervisor services.

Every qvm instance provides the key hypervisor services.

VM assembly and configuration

In order to create the virtual environment in which a guest OS can run, a qvm process instance does the following when it starts:

- Reads, parses and validates VM configuration files (*.qvmconf) and configuration information input with the process command line at startup, exiting if the configuration is invalid and printing a meaningful error message to a log.

- Sets up intermediate stage tables (ARM: Stage 2 page tables, x86: Extended Page Tables (EPT)).

- Creates (assembles) and configures its VM, including:

  - allocates RAM (r/w) and ROM (r only) to guests

  - provisions a thread for every virtual CPU (vCPU) it presents to the guest

  - provisions pass-through devices to make them available to the guest

  - defines and configures virtual devices (vdevs) for the hosted guest

The VM assembly and configuration process

qnx/hlos_dev_qnx/apps/qnx_ap/target/hypervisor/host/fdt_config/dtb/sdm-host_la.dts

qvm configuration:

    memory-regions =
        /* This order needs to be maintained */
        "gvm_sysram2",
        "gvm_sysram1",
        "gvm_sysram3",
        "gvm_ion_audio_mem",
        "gvm_secmem",
        "gvm_pmem";
    }

Memory definitions:

qnx/hlos_dev_qnx/apps/qnx_ap/target/hypervisor/host/fdt_config/public/amss

pidin syspage=asinfo

pidin info

showmem -s

    Mem                 Total(KB)    Used(KB)    Free(KB)
    -------------------------------------------------------------------
    sysram                2954284     1611200     1343084
    dma_ecc_above_4g      1048576      102712      945864
    mdf_mem                 10240        8192        2048
    qcpe                    16384       16384           0
    gvm_secmem             327680           0      327680
    gvm_pmem                40960         200       40760
    qseecom                 20480        8036       12444
    mm_dma                1310720      142852     1167868
    smmu_s1_pt              32768        5636       27132
    dma                     65536       12940       52596

VM operation

During VM operation, a qvm process instance does the following:

- Traps outbound and inbound guest access attempts and determines what to do with them (i.e., if the address is to a vdev, invoke the vdev code (guest exit, then guest entrance when the vdev code completes); if the address is indeed out of bounds, treat it as such).

- Saves its guest's context before relinquishing a physical CPU.

- Restores its guest's context before putting the guest back into execution.

- Looks after any fault handling.

- Performs any maintenance activities required to ensure the integrity of the VM.

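1. Traps the guest's outbound and inbound access attempts; an access to a vdev address causes a guest exit.

2. Saves and restores the guest's context.

3. Handles any faults.

4. Performs the maintenance needed to keep the VM intact.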

Guest startup and shutdown

A guest in a VM can start just like it does on hardware. From the perspective of the guest, it begins execution on a physical CPU. However, this CPU is in fact a qvm vCPU thread. The guest can enable its interrupts, just as it would if it were running in a non-virtualized system.

When the first vCPU thread in a guest's VM begins executing, the VM can know that that guest has booted.

Initiating guest shutdown is the responsibility of the guest. The qvm process detects shutdowns initiated through commonly used methods such as PSCI or ACPI. If you want to use a method that the qvm doesn't automatically recognize, you can write a vdev that detects the shutdown action and responds appropriately.

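During Linux boot, bringing up multiple CPUs relies on specific methods to wake and start the secondary CPUs. There are three main methods: spin-table, PSCI (Power State Coordination Interface), and the ACPI parking protocol.

The first method is spin-table, which resembles a spin-lock mechanism and is used to wake a CPU.

The second method is PSCI, a standard power-management interface that lets an operating system on ARM devices coordinate with software running at different exception levels. It is more complex than spin-table, but it can control CPU behavior such as suspend and power-off.

The third method uses ACPI (Advanced Configuration and Power Interface) to start CPUs. Unlike PSCI, the ACPI method wakes a CPU with an IPI (inter-processor interrupt). The details of ACPI CPU startup are not covered here.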

Manage guest contexts

In a virtualized environment, it is the responsibility of the CPU virtualization extensions to recognize from a guest's actions when the guest needs to exit. When a CPU triggers a guest exit, however, it is the qvm process instance (i.e., the VM) hosting the guest that saves the guest's context. The qvm process instance completes the action initiated by the guest, then restores the guest's context before allowing it to re-enter (see “Guest exits” in the “Performance Tuning” chapter).

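In a virtualized environment, the CPU virtualization extensions are responsible for recognizing when a guest needs to exit. When the CPU triggers a guest exit, the qvm process instance (the VM) saves the guest's context.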

Manage privilege levels

The qvm process instances manage privilege levels at guest entrances and exits, to ensure that guests can run, and that the system is protected from errant code.

On a guest entrance, the qvm process instance asks the CPU to give the guest the privilege levels it needs to run, but no more. On a guest exit, the qvm process instance asks the CPU to return to the privilege levels it requires to run in the hypervisor host.

Only the CPU hardware can change privilege levels. The qvm process performs the operations required to have the CPU change privilege levels. This mechanism (hence the operations) is architecture-specific.

Guest access to virtual and physical resources

Each qvm process instance manages its hosted guest's access to both virtual and physical resources.

When a guest attempts to access an address in its guest-physical memory, this access can be one of the following, and the qvm process hosting the guest checks the access attempt and responds as described:

Permitted

The guest is attempting to access a memory region that it owns.

The qvm process instance doesn't do anything.

Pass-through device

The guest is attempting to access memory assigned to a physical device, and the guest's VM is configured to know that the guest has direct access to this device.

The qvm process instance doesn't do anything. The guest communicates directly with the device.

Virtual device

The guest is attempting to access an address that is assigned to a virtual device (either virtual or para-virtual).

The qvm process instance requests the appropriate privilege level changes, and passes execution on to its code for the requested device. For example, a guest CPUID request triggers the qvm process instance to emulate the hardware and respond to the guest exactly as the hardware would respond in a non-virtualized system.

Fault

The guest is attempting to access memory for which it does not have permission.

The qvm process instance returns an appropriate error to the guest.

The above are for access attempts that go through the CPU. DMA access control is managed by the SMMU manager (see “DMA device containment (smmuman)”).
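The four outcomes above amount to a lookup of the guest-physical address against the VM's configured regions. The sketch below models that decision in Python; the `Access` enum, `classify`, and the range lists are hypothetical, and in a real system the permitted and pass-through cases are resolved by the stage 2 / EPT tables in hardware without qvm running any code.

```python
from enum import Enum


class Access(Enum):
    PERMITTED = "permitted"        # guest-owned memory: qvm does nothing
    PASS_THROUGH = "pass-through"  # guest talks to the device directly
    VDEV = "virtual device"        # guest exit, qvm runs the vdev code
    FAULT = "fault"                # qvm returns an error to the guest


def classify(addr, guest_ram, pass_through, vdevs):
    """Classify a guest-physical address against lists of (base, size) ranges."""
    def hit(ranges):
        return any(base <= addr < base + size for base, size in ranges)

    if hit(guest_ram):
        return Access.PERMITTED
    if hit(pass_through):
        return Access.PASS_THROUGH
    if hit(vdevs):
        return Access.VDEV
    return Access.FAULT
```

Only the `VDEV` and `FAULT` outcomes require qvm to act, which is why accesses to guest RAM and pass-through devices carry no emulation cost.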

Memory

In a QNX virtualized environment, the guest-physical memory that a guest sees as contiguous physical memory may in fact be discontiguous host-physical memory assembled by the virtualization.

A guest in a QNX virtualized environment uses memory for:

- normal operation (see “Memory in a virtualized environment”)

- accessing pass-through devices (see “Pass-through memory”)

- sharing information with other guests (“Shared memory”)

Note the following about memory in a QNX hypervisor:

- With the exception of shared memory, memory allocated to a VM is for the exclusive use of the guest hosted by the VM; that is, the address space for each guest is exclusive and independent of the address space of any other guest in the hypervisor system.

- If there isn't enough free memory on the system to complete the configured memory allocation for a VM, the hypervisor won't complete the configuration and won't start the VM.

- If the memory allocated to its hosting VM is insufficient for a guest, the guest can't start, no matter how much memory is available on the board.

- To prevent information leakage, the hypervisor zeroes memory before it allocates it to a VM, with the exception of memory used for pass-through devices. Depending on the amount of memory assigned to the guest, this may take several seconds.

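Except for shared memory, the memory the hypervisor allocates to a VM is for the exclusive use of the guest hosted by that VM and is independent of other guests. Each guest has its own address space.

If there isn't enough free memory to configure a VM, the hypervisor can't complete the VM's configuration.

If the memory configured for the hosting VM is not enough for the guest, the guest can't start.

Except for pass-through device memory, the hypervisor zeroes memory before allocating it to a VM. This may take several seconds.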

In a QNX virtualized environment, a guest configured with 1 GB of RAM will see 1 GB available to it, just as it would see the RAM available to it if it were running in a non-virtualized environment. This memory allocation appears to the guest as physical memory. It is in fact memory assembled by the virtualization configuration from discontiguous physical memory. ARM calls this assembled memory intermediate physical memory; Intel calls it guest physical memory. For simplicity we will use guest-physical memory, regardless of the platform (see “Guest memory” in Terminology).

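The memory allocated to a guest in a virtualized environment is assembled according to the configuration and appears contiguous to the guest, but it may in fact be discontiguous in host-physical memory. This is analogous to a virtual address space.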
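How a contiguous guest-physical range can be backed by discontiguous host memory is easiest to see with a small translation function. This is a sketch under assumed values: `translate` and the addresses are hypothetical, and the real translation is performed by the stage 2 page tables or EPT in hardware.

```python
def translate(gpa, mapping):
    """Translate a guest-physical address to a host-physical address.

    `mapping` is a list of (guest_base, host_base, size) chunks.
    """
    for guest_base, host_base, size in mapping:
        if guest_base <= gpa < guest_base + size:
            return host_base + (gpa - guest_base)
    raise LookupError(f"guest-physical address {gpa:#x} is not mapped")


# 1 GB that the guest sees as contiguous from 0x80000000,
# assembled from two separate 512 MB host-physical chunks.
EXAMPLE_MAPPING = [
    (0x80000000, 0x100000000, 0x20000000),
    (0xA0000000, 0x180000000, 0x20000000),
]
```

With `EXAMPLE_MAPPING`, the guest addresses `0x9FFFFFFF` and `0xA0000000` are adjacent from the guest's point of view but land in different host chunks.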

- Memory allocations must be in multiples of the QNX OS system page size (4 KB).
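A requested size can be validated against this rule with simple arithmetic. The helpers below are a hypothetical sketch that uses only the 4 KB page size stated above.

```python
PAGE_SIZE = 4 * 1024  # QNX OS system page size, as stated above


def is_page_multiple(size_bytes):
    """Return True if the size is a whole number of pages."""
    return size_bytes % PAGE_SIZE == 0


def round_up_to_page(size_bytes):
    """Round a size up to the next multiple of the page size."""
    return (size_bytes + PAGE_SIZE - 1) // PAGE_SIZE * PAGE_SIZE
```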

qnx/hlos_dev_qnx/apps/qnx_ap/target/hypervisor/host/build_files/mifs.build.tmpl

qnx/hlos_dev_qnx/apps/qnx_ap/target/hypervisor/host/create_images.sh

Devices

A QNX hypervisor provides guests with access to physical devices, including pass-through and shared devices, and virtual devices, including emulation and para-virtualized devices. The type of device determines:

- if the guest or the hypervisor must include a device driver

- if the qvm hosting a guest must include the relevant vdev

- if the guest needs to know that it is running in a virtualized environment

In a non-virtualized system, the device driver in an OS must match the hardware device on the physical board. In a virtualized system, the device driver in the guest must match the vdev.

........

Scheduling

It is important to understand how scheduling affects system behavior in a QNX virtualized environment.

Hypervisor thread priorities and guest thread priorities

To begin with, you should keep in mind the following:

- The hypervisor host has no knowledge of what is running in its VMs, or how guests schedule their own internal software. When you set priorities in a guest OS, these priorities are known only to that guest.

- Virtual CPUs (vCPUs) are scheduled by qvm vCPU scheduling threads; these threads exist in the hypervisor host domain.

- Thread priorities in guests have no relation to thread priorities in the hypervisor host. The relative priorities of the qvm process vCPU scheduling threads determine which vCPU gets access to the physical CPU.
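The points above mean that the host scheduler chooses among vCPU threads using host priorities only. A minimal sketch of that decision, with a hypothetical `pick_next` function and made-up thread names (it ignores time slicing, runmasks, and partitions):

```python
def pick_next(ready_threads):
    """Pick the ready host thread with the highest host priority.

    `ready_threads` is a list of (name, host_priority) tuples. Guest-internal
    priorities are deliberately absent: the host never sees them.
    """
    if not ready_threads:
        return None
    return max(ready_threads, key=lambda t: t[1])[0]
```

A guest thread running at the guest's top priority still waits if its vCPU thread has a lower host priority than another ready thread.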

cpu

Create a new vCPU in the VM

Synopsis:

cpu [options]*

Options:

partition name

If adaptive partitioning (APS) is implemented in the hypervisor host domain, the vCPU will run in the host domain APS partition specified by name. If the partition option isn't specified, the vCPU thread will run in the partition where the qvm process was started.

runmask cpu_number{,cpu_number}

cpu sched 45r runmask 7,6,5,4

cpu sched 45r runmask 6,5,4,7

runmask configures which physical CPUs the vCPU is allowed to run on.
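The two example lines list the same four CPUs in a different order. The sketch below converts such a list into a set and a bitmask; `runmask_to_bits` is a hypothetical helper, and treating the list as unordered is an assumption, since these notes do not say whether qvm gives the order any meaning.

```python
def runmask_to_bits(runmask):
    """Convert a runmask string such as "7,6,5,4" to (cpu set, bitmask)."""
    cpus = {int(tok) for tok in runmask.split(",")}
    bits = 0
    for cpu in cpus:
        bits |= 1 << cpu   # bit n set means physical CPU n is allowed
    return cpus, bits
```

Both `"7,6,5,4"` and `"6,5,4,7"` yield the CPU set {4, 5, 6, 7} and the mask `0xF0` under this interpretation.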

sched priority[r | f | o ]

sched high_priority,low_priority,max_replacements,replacement_period,initial_budget s

Set the vCPU's scheduling priority and scheduling algorithm. The algorithm can be round-robin (r), FIFO (f), or sporadic (s). The other (o) algorithm is reserved for future use; currently it is equivalent to r.

The default vCPU configuration uses round-robin scheduling for vCPUs. Our testing has indicated that most guests respond most favorably to this algorithm. It allows a guest that has its own internal scheduling policies to operate efficiently.

See “Configuring sporadic scheduling” below for more information about using sporadic scheduling.

sched configures the vCPU's priority and scheduling algorithm.
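To make the simple `priority[r | f | o]` form concrete, here is a small parser sketch. `parse_sched` is hypothetical and is not how qvm parses its configuration; it does not handle the five-field sporadic form, and falling back to round-robin when the letter is omitted is an assumption based on the stated default.

```python
import re

ALGORITHMS = {
    "r": "round-robin",
    "f": "FIFO",
    "o": "other (currently equivalent to round-robin)",
}


def parse_sched(value, default_algorithm="r"):
    """Parse the simple form, e.g. "45r" -> (45, "round-robin")."""
    match = re.fullmatch(r"(\d+)([rfo]?)", value)
    if match is None:
        raise ValueError(f"unsupported sched value: {value!r}")
    priority = int(match.group(1))
    algorithm = match.group(2) or default_algorithm
    return priority, ALGORITHMS[algorithm]
```

Applied to the example above, `sched 45r` means priority 45 with round-robin scheduling.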

vCPU threads run in the hypervisor host domain.

https://zhuanlan.zhihu.com/p/300692946

https://zhuanlan.zhihu.com/p/680190158

vCPUs and hypervisor performance

Perhaps counter-intuitively for someone accustomed to working in a non-virtualized environment, more vCPUs doesn't mean more power.

vCPUs and hypervisor overload

Multiple vCPUs are useful for managing guest activities, but they don't add processor cycles. In fact, the opposite may be true: too many vCPUs may degrade system performance.

A vCPU is a VM thread (see cpu in the “VM Configuration Reference” chapter). These vCPUs appear to a guest just like physical CPUs. A guest's scheduling algorithm can't know that when it is migrating execution between vCPUs it is switching threads, not physical CPUs.

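A vCPU is configured in the VM and corresponds to a thread of the qvm process in the hypervisor. To the guest it looks like a physical CPU in a non-virtualized environment; the guest doesn't know that it is a thread. When the guest's scheduler migrates tasks, it doesn't know that it is actually switching between the threads that back the vCPUs rather than moving to a different physical CPU.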

This switching between threads can degrade performance of all guests and the overall system. This is especially common when VMs are configured with more vCPUs than there are physical CPUs on the hardware.

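Switching between vCPU threads can degrade the performance of the guests and of the whole system. This becomes more noticeable when more vCPUs are configured than there are physical CPUs.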

Specifically, if in the hypervisor host there are more threads (including vCPU threads) ready to run than there are physical CPUs available to run them, the hypervisor host scheduler must apply its priority and scheduling policies (round-robin, FIFO, etc.) to decide which threads to run. These scheduling policies may employ preemption and time slicing to manage threads competing for physical CPUs.

Every preemption requires a guest exit, context switch and restore, and a guest entrance. Thus, inversely to what usually occurs with physical CPUs, reducing the number of vCPUs in a VM can improve overall performance: fewer threads will compete for time on the physical CPUs, so the hypervisor will not be obliged to preempt threads (with the attendant guest exits) as often. In brief, fewer vCPUs in a VM may sometimes yield the best performance.

Every preemption of a vCPU in the hypervisor causes a guest exit, a context switch and restore, and a guest entrance, which is relatively expensive.
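A quick way to spot a configuration that is likely to suffer from this is to compare the total vCPU count with the number of physical CPUs. The helper below is a hypothetical sketch; the VM names and counts are made up, and a ratio above 1 only indicates that preemption is possible, not how often it happens.

```python
def overcommit_ratio(vcpus_per_vm, physical_cpus):
    """Return total vCPUs divided by physical CPUs.

    `vcpus_per_vm` maps a VM name to its configured number of vCPUs.
    """
    if physical_cpus <= 0:
        raise ValueError("physical_cpus must be positive")
    return sum(vcpus_per_vm.values()) / physical_cpus
```

For example, two VMs with 4 and 6 vCPUs on an 8-core board give a ratio of 1.25, so at least two vCPU threads will have to wait whenever all vCPUs are ready to run.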

Multiple vCPUs sharing a physical CPU

When configuring your VM, it is prudent to not assume that the guest will always do the right thing. For example, multiple vCPUs pinned to a single physical CPU may cause unexpected behavior in the guest: timeouts or delays might not behave as expected, or spin loops might never return, etc. Assigning different priorities to the vCPUs might exacerbate the problem; for example, the vCPU might never be allowed to run.

In short, when assembling a VM, consider carefully how the guest will run on it.
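The pinning hazard described above can be detected from the runmasks alone. This sketch assumes each vCPU's runmask is available as a set of physical CPU numbers; `shared_pins` and the vCPU names are hypothetical.

```python
from collections import defaultdict


def shared_pins(vcpu_runmasks):
    """Return {physical_cpu: [vcpu names]} for CPUs with 2+ vCPUs pinned to them.

    A vCPU counts as pinned when its runmask contains exactly one CPU.
    """
    pinned = defaultdict(list)
    for name, cpus in vcpu_runmasks.items():
        if len(cpus) == 1:
            pinned[next(iter(cpus))].append(name)
    return {cpu: names for cpu, names in pinned.items() if len(names) > 1}
```

An empty result means no physical CPU has more than one vCPU pinned exclusively to it; vCPUs with wider runmasks can still compete for the same CPU, which this check does not cover.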

For more information about scheduling in a hypervisor system, see “Scheduling” in the “Understanding Virtual Environments” chapter.

on -C 1 foo: run foo on the specified CPU

on -X sched: specify the partition to run in
