This post is a learning-record blog from the 🔗365-Day Deep Learning Training Camp.

Original author: K同学啊

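This week's tasks:

● 1. Use the same dataset as in "Week J1: ResNet-50 in Practice and Explained" (bird classification) and run it through the model to check that the network is built correctly.

● 2. Understand the differences between ResNetV2 and ResNet (V1).

● 3. Consider whether the idea behind the improvement can be transferred elsewhere (open exploration).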

My environment:

● Language: Python 3.9.13

● IDE: Jupyter Lab

● Deep learning framework: PyTorch

○ torch 2.3.0+cpu

○ torchvision 0.18.0+cpu

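I. ResNet50V2 structure compared with the ResNet structure

Figure caption: solid lines show the test error (right y-axis), dashed lines show the training loss (left y-axis), and Iterations is the number of training iterations.

1. What changed: (a) original is the residual unit of the original ResNet, and (b) proposed is the new residual unit. The main difference is the ordering. In (a), each convolution comes first and is followed by BN and the activation, and a final ReLU is applied after the addition. In (b), BN and the activation come first and the convolution follows, so the ReLU that used to sit after the addition moves inside the residual branch.

2. Result: the authors tested both structures on CIFAR-10 with a 1001-layer ResNet. The figure shows that (b) proposed reaches a clearly lower test error, 4.92%, versus 7.61% for (a) original.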

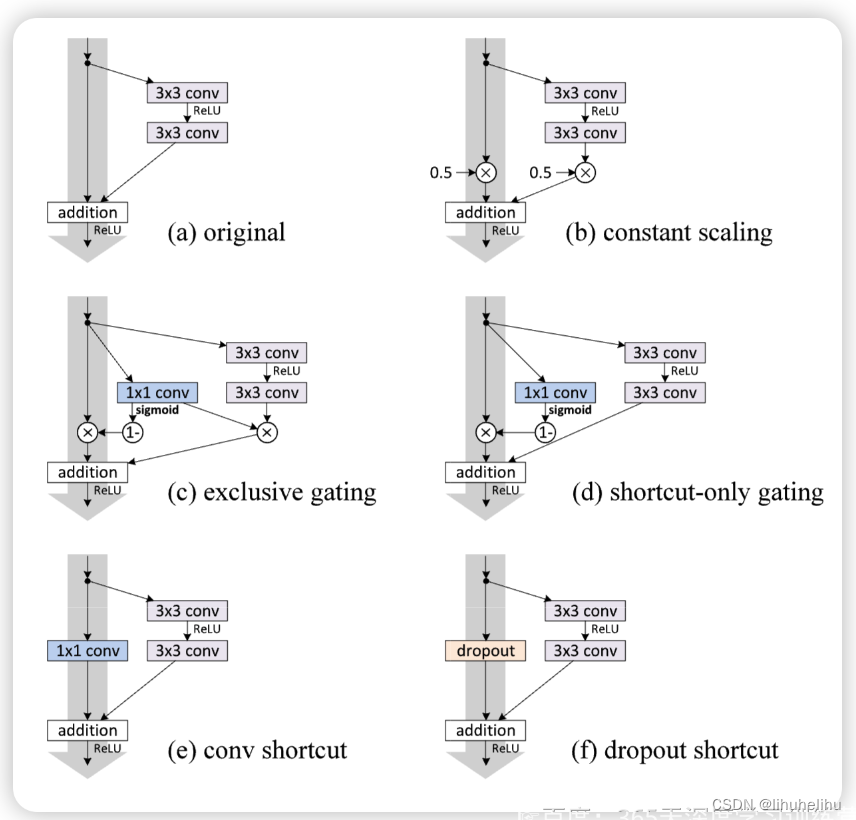
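The ordering difference between (a) and (b) can be sketched in PyTorch as follows. This is a minimal illustration, not the paper's code: the class names `OriginalUnit` and `PreActUnit`, the two-convolution layout, and the channel count are assumptions chosen to keep the example short.

```python
import torch
import torch.nn as nn


class OriginalUnit(nn.Module):
    """(a) original: conv -> BN -> ReLU -> conv -> BN, add, then ReLU."""

    def __init__(self, channels):
        super().__init__()
        self.conv1 = nn.Conv2d(channels, channels, 3, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(channels)
        self.conv2 = nn.Conv2d(channels, channels, 3, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channels)

    def forward(self, x):
        out = torch.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        return torch.relu(out + x)  # the ReLU sits after the addition


class PreActUnit(nn.Module):
    """(b) proposed: BN -> ReLU -> conv -> BN -> ReLU -> conv, then add."""

    def __init__(self, channels):
        super().__init__()
        self.bn1 = nn.BatchNorm2d(channels)
        self.conv1 = nn.Conv2d(channels, channels, 3, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channels)
        self.conv2 = nn.Conv2d(channels, channels, 3, padding=1, bias=False)

    def forward(self, x):
        out = self.conv1(torch.relu(self.bn1(x)))
        out = self.conv2(torch.relu(self.bn2(out)))
        return out + x  # nothing follows the addition: the shortcut stays an identity


x = torch.randn(2, 16, 8, 8)
y_a = OriginalUnit(16)(x)
y_b = PreActUnit(16)(x)
print(y_a.shape, y_b.shape)
```

Both units keep the input shape. The output of (a) is always non-negative because of the trailing ReLU, while (b) passes the sum through unchanged.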

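II. Experiments with different residual structures

In (b)-(f) the shortcut connection is obstructed by different components. To keep the illustration simple, the BN layers are not drawn; every unit here places BN after its weight layers. Panels (a)-(f) are different variations on the shortcut branch of the residual unit, and the results for each variation are listed in the table below.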

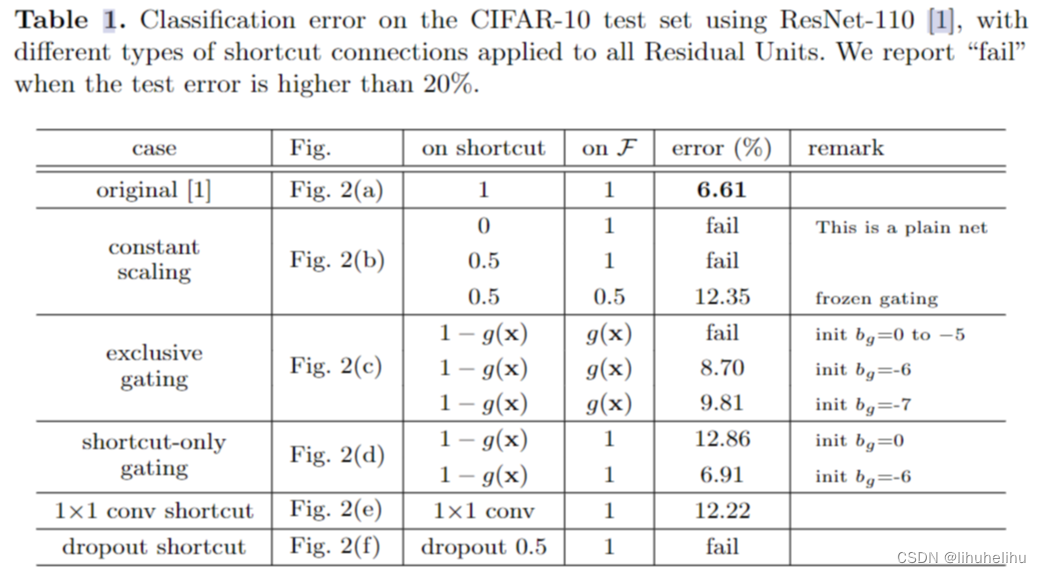

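Table caption: classification error on the CIFAR-10 test set using ResNet-110, with different types of shortcut connections applied to all residual units. An entry is marked "fail" when the test error is higher than 20%.

Testing ResNet-110 with different shortcut structures on CIFAR-10 shows that the (a) original structure performs best; in other words, the identity mapping is the best shortcut.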

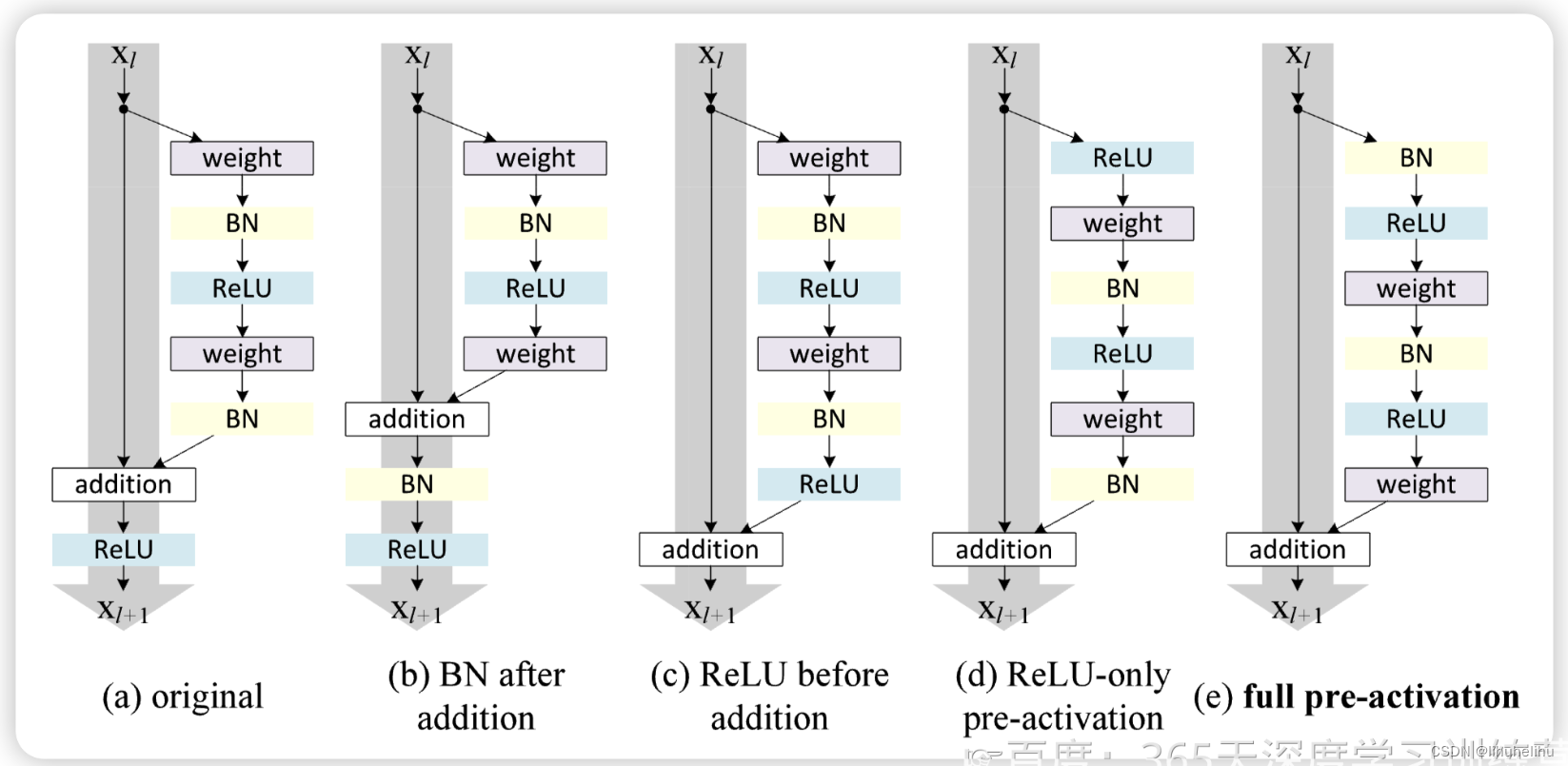
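A short derivation, following the usual argument for identity shortcuts, may help explain why (a) comes out ahead. The symbols $x_l$ (input to unit $l$), $\mathcal{F}$ (residual branch), $W_i$ (its weights), $\lambda_i$ (a scaling on the shortcut) and $\mathcal{E}$ (the loss) are notation introduced here for illustration. With an identity shortcut and no operation after the addition:

$$
x_{l+1} = x_l + \mathcal{F}(x_l, W_l)
\quad\Longrightarrow\quad
x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i, W_i)
$$

$$
\frac{\partial \mathcal{E}}{\partial x_l}
= \frac{\partial \mathcal{E}}{\partial x_L}
\left( 1 + \frac{\partial}{\partial x_l} \sum_{i=l}^{L-1} \mathcal{F}(x_i, W_i) \right)
$$

The constant term $1$ carries the gradient from any deeper unit $L$ directly back to unit $l$. If the shortcut is instead scaled, $x_{l+1} = \lambda_l x_l + \mathcal{F}(x_l, W_l)$, that term becomes $\prod_{i=l}^{L-1} \lambda_i$, which shrinks or grows exponentially with depth. This is consistent with the obstructed shortcuts in (b)-(f) training worse than the plain identity.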

III. Experiments with activation

Table caption: classification error (%) on the CIFAR-10 test set using different activation functions.

The best result comes from (e) full pre-activation, followed by (a) original.

IV. Reproducing the model

import os, PIL, pathlib, warnings

import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision
from torchvision import transforms, datasets

warnings.filterwarnings("ignore")  # suppress warning messages

# Select CPU or GPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device

Output

device(type='cpu')

train_transforms = transforms.Compose([
    transforms.Resize([224,224]),
    transforms.ToTensor(),
    transforms.Normalize(
        mean = [0.485,0.456,0.406],
        std = [0.229,0.224,0.225]
    )
])

test_transforms = transforms.Compose([
    transforms.Resize([224,224]),
    transforms.ToTensor(),
    transforms.Normalize(
        mean = [0.485,0.456,0.406],
        std = [0.229,0.224,0.225]
    )
])

# Load the data

data_dir='./J2/bird_photos/'

data_dir=pathlib.Path(data_dir)

data_paths=list(data_dir.glob('*'))

classNames=[str(path).split('\\')[2] for path in data_paths]

classNames

Output

['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']
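The class names above are taken by splitting the path string on a backslash, which depends on Windows path separators. A portable alternative is the `name` attribute of a path object. The sketch below uses `PureWindowsPath` and `PurePosixPath` only so that both behaviours can be shown on any machine; the example paths are modelled on the folder layout above.

```python
from pathlib import PurePosixPath, PureWindowsPath

win = PureWindowsPath(r"J2\bird_photos\Cockatoo")
posix = PurePosixPath("J2/bird_photos/Cockatoo")

# Splitting on a backslash works for the Windows-style string ...
class_from_split = str(win).split("\\")[2]
print(class_from_split)

# ... but a POSIX-style string contains no backslash, so the split returns
# a single element and indexing it with [2] would raise IndexError.
print(str(posix).split("\\"))

# .name returns the last path component on either platform.
print(win.name, posix.name)
```

With a real `pathlib.Path`, the same idea reads `[path.name for path in data_paths]`.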

num_classes=len(classNames)

num_classes

Output

4

total_data = datasets.ImageFolder(data_dir,transform = train_transforms)

total_data

Output

Dataset ImageFolder

Number of datapoints: 565

Root location: J2\bird_photos

StandardTransform

Transform: Compose(

Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=True)

ToTensor()

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

)

# Split the dataset
train_size = int(0.8 * len(total_data))
test_size = len(total_data) - train_size
train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
print(train_dataset)
print(test_dataset)

batch_size = 8

train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       #num_workers=1
                                       )
test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=True,
                                      #num_workers=1
                                      )

for X, y in test_dl:
    print("Shape of X [N, C, H, W]: ", X.shape)
    print("Shape of y: ", y.shape, y.dtype)
    break

Output

<torch.utils.data.dataset.Subset object at 0x000002538E571730>

<torch.utils.data.dataset.Subset object at 0x000002538E571880>

Shape of X [N, C, H, W]: torch.Size([8, 3, 224, 224])

Shape of y: torch.Size([8]) torch.int64
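The sizes implied by the 80/20 split and the batch size of 8 can be worked out by hand. The short calculation below uses only numbers that appear above (565 images, `batch_size = 8`); the variable names are chosen for this illustration.

```python
import math

total, batch_size = 565, 8

train_size = int(0.8 * total)   # same expression as in the split code
test_size = total - train_size

train_batches = math.ceil(train_size / batch_size)
test_batches = math.ceil(test_size / batch_size)

# Size of the final, partially filled batch in each loader
last_train_batch = train_size - (train_batches - 1) * batch_size
last_test_batch = test_size - (test_batches - 1) * batch_size

print(train_size, test_size)                # 452 113
print(train_batches, test_batches)          # 57 15
print(last_train_batch, last_test_batch)    # 4 1
```

With 113 test images, one image corresponds to roughly 0.9 percentage points of test accuracy, so small swings between epochs amount to only a few images.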

# Residual block (pre-activation bottleneck)
class Block2(nn.Module):
    def __init__(self,in_channel,filters,kernel_size=3,stride=1,conv_shortcut=False):
        super(Block2,self).__init__()
        # Pre-activation: BN and ReLU are applied before the first convolution
        self.preact=nn.Sequential(
            nn.BatchNorm2d(in_channel),
            nn.ReLU(True)
        )

        self.shortcut=conv_shortcut
        if self.shortcut:
            # Projection shortcut: a 1x1 conv that matches the 4*filters output channels
            self.short=nn.Conv2d(in_channel,4*filters,1,stride=stride,padding=0,bias=False)
        elif stride>1:
            # Downsampling shortcut: max pooling with a 1x1 window only subsamples
            self.short=nn.MaxPool2d(kernel_size=1,stride=stride,padding=0)
        else:
            self.short=nn.Identity()

        self.conv1=nn.Sequential(
            nn.Conv2d(in_channel,filters,1,stride=1,bias=False),
            nn.BatchNorm2d(filters),
            nn.ReLU(True)
        )
        self.conv2=nn.Sequential(
            nn.Conv2d(filters,filters,kernel_size,stride=stride,padding=1,bias=False),
            nn.BatchNorm2d(filters),
            nn.ReLU(True)
        )
        self.conv3=nn.Conv2d(filters,4*filters,1,stride=1,bias=False)

    def forward(self,x):
        x1=self.preact(x)
        # The projection shortcut takes the pre-activated tensor;
        # the other shortcuts take the raw input
        if self.shortcut:
            x2=self.short(x1)
        else:
            x2=self.short(x)
        x1=self.conv1(x1)
        x1=self.conv2(x1)
        x1=self.conv3(x1)
        x=x1+x2  # no activation after the addition
        return x

class Stack2(nn.Module):
    def __init__(self, in_channel, filters, blocks, stride=2):
        super(Stack2, self).__init__()
        self.conv = nn.Sequential()
        self.conv.add_module(str(0), Block2(in_channel, filters, conv_shortcut=True))
        for i in range(1, blocks-1):
            self.conv.add_module(str(i), Block2(4*filters, filters))
        self.conv.add_module(str(blocks-1), Block2(4*filters, filters, stride=stride))

    def forward(self, x):
        x = self.conv(x)
        return x

# Build ResNet50V2
class ResNet50V2(nn.Module):
    def __init__(self,
                 include_top=True,  # whether to include the fully connected layer at the top of the network
                 preact=True,       # whether to use pre-activation
                 use_bias=True,     # whether the convolution layer uses a bias (only the stem conv reads it)
                 input_shape=[224, 224, 3],
                 classes=1000,      # number of classes to classify images into
                 pooling=None):     # optional pooling ('avg' or 'max') used when include_top is False
        super(ResNet50V2, self).__init__()

        self.conv1 = nn.Sequential()
        self.conv1.add_module('conv', nn.Conv2d(3, 64, 7, stride=2, padding=3, bias=use_bias, padding_mode='zeros'))
        if not preact:
            self.conv1.add_module('bn', nn.BatchNorm2d(64))
            self.conv1.add_module('relu', nn.ReLU())
        self.conv1.add_module('max_pool', nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

        self.conv2 = Stack2(64, 64, 3)
        self.conv3 = Stack2(256, 128, 4)
        self.conv4 = Stack2(512, 256, 6)
        self.conv5 = Stack2(1024, 512, 3, stride=1)

        self.post = nn.Sequential()
        if preact:
            # With pre-activation, the last block's output still needs a final BN + ReLU
            self.post.add_module('bn', nn.BatchNorm2d(2048))
            self.post.add_module('relu', nn.ReLU())
        if include_top:
            self.post.add_module('avg_pool', nn.AdaptiveAvgPool2d((1, 1)))
            self.post.add_module('flatten', nn.Flatten())
            self.post.add_module('fc', nn.Linear(2048, classes))
        else:
            if pooling=='avg':
                self.post.add_module('avg_pool', nn.AdaptiveAvgPool2d((1, 1)))
            elif pooling=='max':
                self.post.add_module('max_pool', nn.AdaptiveMaxPool2d((1, 1)))

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.post(x)
        return x
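The downsampling shortcut in `Block2` uses `nn.MaxPool2d(kernel_size=1, stride=stride)`. With a 1x1 window there is nothing to take a maximum over, so the layer simply keeps every `stride`-th pixel. The small check below illustrates this on a toy tensor; the tensor shape is arbitrary.

```python
import torch
import torch.nn as nn

# Toy input: batch of 2, 3 channels, 6x6 spatial grid with distinct values
x = torch.arange(2 * 3 * 6 * 6, dtype=torch.float32).reshape(2, 3, 6, 6)

pool = nn.MaxPool2d(kernel_size=1, stride=2, padding=0)
pooled = pool(x)

# Plain strided slicing: keep rows and columns 0, 2, 4
strided = x[:, :, ::2, ::2]

print(pooled.shape)
print(torch.equal(pooled, strided))
```

This keeps the shortcut free of learned parameters while matching the spatial size produced by the stride-2 3x3 convolution in the residual branch.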

model = ResNet50V2().to(device)

# Display the network structure

model

Output

ResNet50V2(

(conv1): Sequential(

(conv): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))

(max_pool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

)

(conv2): Stack2(

(conv): Sequential(

(0): Block2(

(preact): Sequential(

(0): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(conv1): Sequential(

(0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(1): Block2(

(preact): Sequential(

(0): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(2): Block2(

(preact): Sequential(

(0): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): MaxPool2d(kernel_size=1, stride=2, padding=0, dilation=1, ceil_mode=False)

(conv1): Sequential(

(0): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

)

(conv3): Stack2(

(conv): Sequential(

(0): Block2(

(preact): Sequential(

(0): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(conv1): Sequential(

(0): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(1): Block2(

(preact): Sequential(

(0): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(2): Block2(

(preact): Sequential(

(0): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(3): Block2(

(preact): Sequential(

(0): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): MaxPool2d(kernel_size=1, stride=2, padding=0, dilation=1, ceil_mode=False)

(conv1): Sequential(

(0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

)

(conv4): Stack2(

(conv): Sequential(

(0): Block2(

(preact): Sequential(

(0): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Conv2d(512, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(conv1): Sequential(

(0): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(1): Block2(

(preact): Sequential(

(0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(2): Block2(

(preact): Sequential(

(0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(3): Block2(

(preact): Sequential(

(0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(4): Block2(

(preact): Sequential(

(0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(5): Block2(

(preact): Sequential(

(0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): MaxPool2d(kernel_size=1, stride=2, padding=0, dilation=1, ceil_mode=False)

(conv1): Sequential(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

)

(conv5): Stack2(

(conv): Sequential(

(0): Block2(

(preact): Sequential(

(0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(conv1): Sequential(

(0): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(1): Block2(

(preact): Sequential(

(0): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(2): Block2(

(preact): Sequential(

(0): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

)

(short): Identity()

(conv1): Sequential(

(0): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv2): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

)

(post): Sequential(

(bn): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU()

(avg_pool): AdaptiveAvgPool2d(output_size=(1, 1))

(flatten): Flatten(start_dim=1, end_dim=-1)

(fc): Linear(in_features=2048, out_features=1000, bias=True)

)

)

# Report the model's parameter count and other statistics

import torchsummary as summary

summary.summary(model,(3,224,224))

Output

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 112, 112] 9,472

MaxPool2d-2 [-1, 64, 56, 56] 0

BatchNorm2d-3 [-1, 64, 56, 56] 128

ReLU-4 [-1, 64, 56, 56] 0

Conv2d-5 [-1, 256, 56, 56] 16,384

Conv2d-6 [-1, 64, 56, 56] 4,096

BatchNorm2d-7 [-1, 64, 56, 56] 128

ReLU-8 [-1, 64, 56, 56] 0

Conv2d-9 [-1, 64, 56, 56] 36,864

BatchNorm2d-10 [-1, 64, 56, 56] 128

ReLU-11 [-1, 64, 56, 56] 0

Conv2d-12 [-1, 256, 56, 56] 16,384

Block2-13 [-1, 256, 56, 56] 0

BatchNorm2d-14 [-1, 256, 56, 56] 512

ReLU-15 [-1, 256, 56, 56] 0

Identity-16 [-1, 256, 56, 56] 0

Conv2d-17 [-1, 64, 56, 56] 16,384

BatchNorm2d-18 [-1, 64, 56, 56] 128

ReLU-19 [-1, 64, 56, 56] 0

Conv2d-20 [-1, 64, 56, 56] 36,864

BatchNorm2d-21 [-1, 64, 56, 56] 128

ReLU-22 [-1, 64, 56, 56] 0

Conv2d-23 [-1, 256, 56, 56] 16,384

Block2-24 [-1, 256, 56, 56] 0

BatchNorm2d-25 [-1, 256, 56, 56] 512

ReLU-26 [-1, 256, 56, 56] 0

MaxPool2d-27 [-1, 256, 28, 28] 0

Conv2d-28 [-1, 64, 56, 56] 16,384

BatchNorm2d-29 [-1, 64, 56, 56] 128

ReLU-30 [-1, 64, 56, 56] 0

Conv2d-31 [-1, 64, 28, 28] 36,864

BatchNorm2d-32 [-1, 64, 28, 28] 128

ReLU-33 [-1, 64, 28, 28] 0

Conv2d-34 [-1, 256, 28, 28] 16,384

Block2-35 [-1, 256, 28, 28] 0

Stack2-36 [-1, 256, 28, 28] 0

BatchNorm2d-37 [-1, 256, 28, 28] 512

ReLU-38 [-1, 256, 28, 28] 0

Conv2d-39 [-1, 512, 28, 28] 131,072

Conv2d-40 [-1, 128, 28, 28] 32,768

BatchNorm2d-41 [-1, 128, 28, 28] 256

ReLU-42 [-1, 128, 28, 28] 0

Conv2d-43 [-1, 128, 28, 28] 147,456

BatchNorm2d-44 [-1, 128, 28, 28] 256

ReLU-45 [-1, 128, 28, 28] 0

Conv2d-46 [-1, 512, 28, 28] 65,536

Block2-47 [-1, 512, 28, 28] 0

BatchNorm2d-48 [-1, 512, 28, 28] 1,024

ReLU-49 [-1, 512, 28, 28] 0

Identity-50 [-1, 512, 28, 28] 0

Conv2d-51 [-1, 128, 28, 28] 65,536

BatchNorm2d-52 [-1, 128, 28, 28] 256

ReLU-53 [-1, 128, 28, 28] 0

Conv2d-54 [-1, 128, 28, 28] 147,456

BatchNorm2d-55 [-1, 128, 28, 28] 256

ReLU-56 [-1, 128, 28, 28] 0

Conv2d-57 [-1, 512, 28, 28] 65,536

Block2-58 [-1, 512, 28, 28] 0

BatchNorm2d-59 [-1, 512, 28, 28] 1,024

ReLU-60 [-1, 512, 28, 28] 0

Identity-61 [-1, 512, 28, 28] 0

Conv2d-62 [-1, 128, 28, 28] 65,536

BatchNorm2d-63 [-1, 128, 28, 28] 256

ReLU-64 [-1, 128, 28, 28] 0

Conv2d-65 [-1, 128, 28, 28] 147,456

BatchNorm2d-66 [-1, 128, 28, 28] 256

ReLU-67 [-1, 128, 28, 28] 0

Conv2d-68 [-1, 512, 28, 28] 65,536

Block2-69 [-1, 512, 28, 28] 0

BatchNorm2d-70 [-1, 512, 28, 28] 1,024

ReLU-71 [-1, 512, 28, 28] 0

MaxPool2d-72 [-1, 512, 14, 14] 0

Conv2d-73 [-1, 128, 28, 28] 65,536

BatchNorm2d-74 [-1, 128, 28, 28] 256

ReLU-75 [-1, 128, 28, 28] 0

Conv2d-76 [-1, 128, 14, 14] 147,456

BatchNorm2d-77 [-1, 128, 14, 14] 256

ReLU-78 [-1, 128, 14, 14] 0

Conv2d-79 [-1, 512, 14, 14] 65,536

Block2-80 [-1, 512, 14, 14] 0

Stack2-81 [-1, 512, 14, 14] 0

BatchNorm2d-82 [-1, 512, 14, 14] 1,024

ReLU-83 [-1, 512, 14, 14] 0

Conv2d-84 [-1, 1024, 14, 14] 524,288

Conv2d-85 [-1, 256, 14, 14] 131,072

BatchNorm2d-86 [-1, 256, 14, 14] 512

ReLU-87 [-1, 256, 14, 14] 0

Conv2d-88 [-1, 256, 14, 14] 589,824

BatchNorm2d-89 [-1, 256, 14, 14] 512

ReLU-90 [-1, 256, 14, 14] 0

Conv2d-91 [-1, 1024, 14, 14] 262,144

Block2-92 [-1, 1024, 14, 14] 0

BatchNorm2d-93 [-1, 1024, 14, 14] 2,048

ReLU-94 [-1, 1024, 14, 14] 0

Identity-95 [-1, 1024, 14, 14] 0

Conv2d-96 [-1, 256, 14, 14] 262,144

BatchNorm2d-97 [-1, 256, 14, 14] 512

ReLU-98 [-1, 256, 14, 14] 0

Conv2d-99 [-1, 256, 14, 14] 589,824

BatchNorm2d-100 [-1, 256, 14, 14] 512

ReLU-101 [-1, 256, 14, 14] 0

Conv2d-102 [-1, 1024, 14, 14] 262,144

Block2-103 [-1, 1024, 14, 14] 0

BatchNorm2d-104 [-1, 1024, 14, 14] 2,048

ReLU-105 [-1, 1024, 14, 14] 0

Identity-106 [-1, 1024, 14, 14] 0

Conv2d-107 [-1, 256, 14, 14] 262,144

BatchNorm2d-108 [-1, 256, 14, 14] 512

ReLU-109 [-1, 256, 14, 14] 0

Conv2d-110 [-1, 256, 14, 14] 589,824

BatchNorm2d-111 [-1, 256, 14, 14] 512

ReLU-112 [-1, 256, 14, 14] 0

Conv2d-113 [-1, 1024, 14, 14] 262,144

Block2-114 [-1, 1024, 14, 14] 0

BatchNorm2d-115 [-1, 1024, 14, 14] 2,048

ReLU-116 [-1, 1024, 14, 14] 0

Identity-117 [-1, 1024, 14, 14] 0

Conv2d-118 [-1, 256, 14, 14] 262,144

BatchNorm2d-119 [-1, 256, 14, 14] 512

ReLU-120 [-1, 256, 14, 14] 0

Conv2d-121 [-1, 256, 14, 14] 589,824

BatchNorm2d-122 [-1, 256, 14, 14] 512

ReLU-123 [-1, 256, 14, 14] 0

Conv2d-124 [-1, 1024, 14, 14] 262,144

Block2-125 [-1, 1024, 14, 14] 0

BatchNorm2d-126 [-1, 1024, 14, 14] 2,048

ReLU-127 [-1, 1024, 14, 14] 0

Identity-128 [-1, 1024, 14, 14] 0

Conv2d-129 [-1, 256, 14, 14] 262,144

BatchNorm2d-130 [-1, 256, 14, 14] 512

ReLU-131 [-1, 256, 14, 14] 0

Conv2d-132 [-1, 256, 14, 14] 589,824

BatchNorm2d-133 [-1, 256, 14, 14] 512

ReLU-134 [-1, 256, 14, 14] 0

Conv2d-135 [-1, 1024, 14, 14] 262,144

Block2-136 [-1, 1024, 14, 14] 0

BatchNorm2d-137 [-1, 1024, 14, 14] 2,048

ReLU-138 [-1, 1024, 14, 14] 0

MaxPool2d-139 [-1, 1024, 7, 7] 0

Conv2d-140 [-1, 256, 14, 14] 262,144

BatchNorm2d-141 [-1, 256, 14, 14] 512

ReLU-142 [-1, 256, 14, 14] 0

Conv2d-143 [-1, 256, 7, 7] 589,824

BatchNorm2d-144 [-1, 256, 7, 7] 512

ReLU-145 [-1, 256, 7, 7] 0

Conv2d-146 [-1, 1024, 7, 7] 262,144

Block2-147 [-1, 1024, 7, 7] 0

Stack2-148 [-1, 1024, 7, 7] 0

BatchNorm2d-149 [-1, 1024, 7, 7] 2,048

ReLU-150 [-1, 1024, 7, 7] 0

Conv2d-151 [-1, 2048, 7, 7] 2,097,152

Conv2d-152 [-1, 512, 7, 7] 524,288

BatchNorm2d-153 [-1, 512, 7, 7] 1,024

ReLU-154 [-1, 512, 7, 7] 0

Conv2d-155 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-156 [-1, 512, 7, 7] 1,024

ReLU-157 [-1, 512, 7, 7] 0

Conv2d-158 [-1, 2048, 7, 7] 1,048,576

Block2-159 [-1, 2048, 7, 7] 0

BatchNorm2d-160 [-1, 2048, 7, 7] 4,096

ReLU-161 [-1, 2048, 7, 7] 0

Identity-162 [-1, 2048, 7, 7] 0

Conv2d-163 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-164 [-1, 512, 7, 7] 1,024

ReLU-165 [-1, 512, 7, 7] 0

Conv2d-166 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-167 [-1, 512, 7, 7] 1,024

ReLU-168 [-1, 512, 7, 7] 0

Conv2d-169 [-1, 2048, 7, 7] 1,048,576

Block2-170 [-1, 2048, 7, 7] 0

BatchNorm2d-171 [-1, 2048, 7, 7] 4,096

ReLU-172 [-1, 2048, 7, 7] 0

Identity-173 [-1, 2048, 7, 7] 0

Conv2d-174 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-175 [-1, 512, 7, 7] 1,024

ReLU-176 [-1, 512, 7, 7] 0

Conv2d-177 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-178 [-1, 512, 7, 7] 1,024

ReLU-179 [-1, 512, 7, 7] 0

Conv2d-180 [-1, 2048, 7, 7] 1,048,576

Block2-181 [-1, 2048, 7, 7] 0

Stack2-182 [-1, 2048, 7, 7] 0

BatchNorm2d-183 [-1, 2048, 7, 7] 4,096

ReLU-184 [-1, 2048, 7, 7] 0

AdaptiveAvgPool2d-185 [-1, 2048, 1, 1] 0

Flatten-186 [-1, 2048] 0

Linear-187 [-1, 1000] 2,049,000

================================================================

Total params: 25,549,416

Trainable params: 25,549,416

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 241.69

Params size (MB): 97.46

Estimated Total Size (MB): 339.73

----------------------------------------------------------------
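Several entries in the summary can be reproduced with simple arithmetic, which is a quick way to confirm the network is wired as intended. The helper functions `conv_out` and `conv_params` below are written for this illustration; they apply the standard output-size and parameter-count formulas for a convolution.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_params(c_in, c_out, kernel, bias):
    """Number of weights (plus optional biases) in a 2D convolution."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


# Spatial sizes: stem conv (7x7, stride 2), stem max pool (3x3, stride 2),
# then the strided 3x3 conv that ends each of the four stacks.
size = conv_out(224, 7, 2, 3)
stem_conv_size = size
size = conv_out(size, 3, 2, 1)
sizes = [size]
for stride in (2, 2, 2, 1):
    size = conv_out(size, 3, stride, 1)
    sizes.append(size)
print(stem_conv_size, sizes)  # 112 [56, 28, 14, 7, 7]

# Parameter counts for a few rows of the summary
stem = conv_params(3, 64, 7, bias=True)         # Conv2d-1
shortcut = conv_params(64, 256, 1, bias=False)  # Conv2d-5
conv3x3 = conv_params(64, 64, 3, bias=False)    # Conv2d-9
bn64 = 2 * 64                                   # BatchNorm2d-3: scale and shift per channel
fc = 2048 * 1000 + 1000                         # Linear-187
print(stem, shortcut, conv3x3, bn64, fc)  # 9472 16384 36864 128 2049000
```

The values match the corresponding rows of the summary table.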

# Training function
def train(dataloader,model,loss_fn,optimizer):
    size = len(dataloader.dataset)  # size of the training set
    num_batches = len(dataloader)   # number of batches

    train_acc,train_loss = 0,0

    for X,y in dataloader:
        X,y = X.to(device),y.to(device)

        # Forward pass and loss
        pred = model(X)
        loss = loss_fn(pred,y)

        # Backward pass and parameter update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        train_loss += loss.item()
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()

    train_loss /= num_batches
    train_acc /= size

    return train_acc,train_loss

# Test function
def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # size of the test set
    num_batches = len(dataloader)   # number of batches (size/batch_size, rounded up)
    test_loss, test_acc = 0, 0

    # No training happens here, so disable gradient tracking to save memory
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # Compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches

    return test_acc, test_loss

import copy

loss_fn = nn.CrossEntropyLoss()
learn_rate = 1e-4

# SGD or Adam optimizer; pick one
# opt = torch.optim.SGD(model.parameters(),lr=learn_rate)
opt = torch.optim.Adam(model.parameters(),lr=learn_rate)

scheduler=torch.optim.lr_scheduler.StepLR(opt,step_size=1,gamma=0.9)  # learning-rate scheduler

epochs = 100   # maximum number of epochs; early stopping may end training sooner
patience=10    # early-stopping patience: stop once test accuracy has not improved for 10 consecutive epochs

train_loss=[]
train_acc=[]
test_loss=[]
test_acc=[]

best_acc = 0          # best test accuracy so far; used to select the best model
no_improve_epoch=0    # counts consecutive epochs without an accuracy improvement
epoch=0               # last epoch index actually run; kept for plotting, since training may stop before epochs = 100

# Start training
for epoch in range(epochs):
    model.train()
    epoch_train_acc,epoch_train_loss = train(train_dl,model,loss_fn,opt)

    model.eval()
    epoch_test_acc,epoch_test_loss = test(test_dl,model,loss_fn)

    if epoch_test_acc > best_acc:
        best_acc = epoch_test_acc
        best_model = copy.deepcopy(model)
        no_improve_epoch=0  # reset the counter

        # Save a checkpoint of the best model
        PATH='./J2_best_model.pth'
        torch.save({
            'epoch':epoch,
            'model_state_dict':best_model.state_dict(),
            'optimizer_state_dict':opt.state_dict(),
            'loss':epoch_test_loss,
        },PATH)
    else:
        no_improve_epoch += 1

    if no_improve_epoch >= patience:
        print(f"Early stoping triggered at epoch {epoch+1}")
        break  # early stop

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    scheduler.step()  # update the learning rate
    lr = opt.state_dict()['param_groups'][0]['lr']

    template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')
    print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss,epoch_test_acc*100, epoch_test_loss, lr))

# Save the best model's weights to a file
PATH='./j2_best_model_j2.pth'  # file name for the saved parameters
torch.save(best_model.state_dict(),PATH)

print('Done')
print(epoch)
print('no_improve_epoch:',no_improve_epoch)

Output

Epoch: 1, Train_acc:54.9%, Train_loss:2.851, Test_acc:77.0%, Test_loss:0.715, Lr:9.00E-05

Epoch: 2, Train_acc:72.6%, Train_loss:0.770, Test_acc:52.2%, Test_loss:1.315, Lr:8.10E-05

Epoch: 3, Train_acc:82.1%, Train_loss:0.560, Test_acc:75.2%, Test_loss:0.796, Lr:7.29E-05

Epoch: 4, Train_acc:85.0%, Train_loss:0.436, Test_acc:83.2%, Test_loss:0.467, Lr:6.56E-05

Epoch: 5, Train_acc:89.6%, Train_loss:0.311, Test_acc:88.5%, Test_loss:0.347, Lr:5.90E-05

Epoch: 6, Train_acc:90.5%, Train_loss:0.261, Test_acc:78.8%, Test_loss:0.918, Lr:5.31E-05

Epoch: 7, Train_acc:92.3%, Train_loss:0.236, Test_acc:88.5%, Test_loss:0.364, Lr:4.78E-05

Epoch: 8, Train_acc:97.1%, Train_loss:0.115, Test_acc:85.0%, Test_loss:0.395, Lr:4.30E-05

Epoch: 9, Train_acc:94.9%, Train_loss:0.155, Test_acc:85.0%, Test_loss:0.405, Lr:3.87E-05

Epoch:10, Train_acc:96.7%, Train_loss:0.126, Test_acc:92.0%, Test_loss:0.261, Lr:3.49E-05

Epoch:11, Train_acc:98.0%, Train_loss:0.076, Test_acc:89.4%, Test_loss:0.285, Lr:3.14E-05

Epoch:12, Train_acc:96.9%, Train_loss:0.109, Test_acc:87.6%, Test_loss:0.378, Lr:2.82E-05

Epoch:13, Train_acc:98.0%, Train_loss:0.078, Test_acc:90.3%, Test_loss:0.353, Lr:2.54E-05

Epoch:14, Train_acc:99.3%, Train_loss:0.049, Test_acc:88.5%, Test_loss:0.327, Lr:2.29E-05

Epoch:15, Train_acc:98.9%, Train_loss:0.045, Test_acc:91.2%, Test_loss:0.276, Lr:2.06E-05

Epoch:16, Train_acc:98.5%, Train_loss:0.075, Test_acc:88.5%, Test_loss:0.359, Lr:1.85E-05

Epoch:17, Train_acc:99.3%, Train_loss:0.040, Test_acc:86.7%, Test_loss:0.342, Lr:1.67E-05

Epoch:18, Train_acc:98.2%, Train_loss:0.075, Test_acc:88.5%, Test_loss:0.368, Lr:1.50E-05

Epoch:19, Train_acc:99.1%, Train_loss:0.040, Test_acc:88.5%, Test_loss:0.447, Lr:1.35E-05

Early stoping triggered at epoch 20

Done

19

no_improve_epoch: 10
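The log can be cross-checked against the two mechanisms that shape it: the `StepLR` decay (`gamma=0.9` every epoch, starting from `1e-4`) and the early-stopping counter with `patience=10`. The replay below uses only the test accuracies printed above; the variable names are chosen for this sketch, and the 20th epoch is absent because the loop breaks before printing it.

```python
base_lr, gamma, patience = 1e-4, 0.9, 10

# With step_size=1, the rate printed after epoch n is base_lr * gamma**n.
lrs = [base_lr * gamma ** n for n in range(1, 20)]
lr_text = [f"{lr:.2E}" for lr in lrs]
print(lr_text[0], lr_text[9], lr_text[18])  # 9.00E-05 3.49E-05 1.35E-05

# Test accuracies (%) copied from the 19 logged epochs.
logged_test_acc = [77.0, 52.2, 75.2, 83.2, 88.5, 78.8, 88.5, 85.0, 85.0, 92.0,
                   89.4, 87.6, 90.3, 88.5, 91.2, 88.5, 86.7, 88.5, 88.5]

best_acc, best_epoch, no_improve = 0.0, 0, 0
for epoch, acc in enumerate(logged_test_acc, start=1):
    if acc > best_acc:   # strict comparison: a tie counts as no improvement
        best_acc, best_epoch, no_improve = acc, epoch, 0
    else:
        no_improve += 1

print(best_epoch, best_acc, no_improve)  # 10 92.0 9
```

After epoch 19 the counter stands at 9, so one more epoch without improvement brings it to the patience of 10, which matches the stop reported at epoch 20. The best checkpoint therefore comes from epoch 10 (92.0% test accuracy).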

# Visualize the results
# Loss and accuracy curves
import matplotlib.pyplot as plt

# Hide warnings
import warnings
warnings.filterwarnings("ignore")  # suppress warning messages

plt.rcParams['font.sans-serif'] = ['SimHei']  # display Chinese labels correctly
plt.rcParams['axes.unicode_minus'] = False    # display the minus sign correctly
plt.rcParams['figure.dpi'] = 100              # resolution

# One point per recorded epoch; this also stays correct when training runs
# all the way to `epochs` without an early stop
epochs_range = range(len(train_acc))

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)
plt.plot(epochs_range, train_acc, label='Training Accuracy')
plt.plot(epochs_range, test_acc, label='Test Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='Training Loss')
plt.plot(epochs_range, test_loss, label='Test Loss')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')

plt.show()

Output (figure: training and test accuracy and loss curves)

from PIL import Image

classes = list(total_data.class_to_idx)

def predict_one_image(image_path, model, transform, classes):
    test_img = Image.open(image_path).convert('RGB')
    plt.imshow(test_img)  # show the image being predicted

    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add the batch dimension

    model.eval()
    output = model(img)

    _,pred = torch.max(output,1)
    pred_class = classes[pred]
    print(f'预测结果是:{pred_class}')  # prints "Prediction: <class>" in Chinese

import os
from pathlib import Path
import random

# Pick one image at random from all the images
image=[]
def image_path(data_dir):
    file_list=os.listdir(data_dir)  # list the four class folders
    data_file_dir=file_list         # iterate over every class folder
    data_dir=Path(data_dir)
    for i in data_file_dir:
        i=Path(i)
        image_file_path=data_dir.joinpath(i)       # join the path
        data_file_paths=image_file_path.iterdir()  # list the folder's contents
        data_file_paths=list(data_file_paths)      # convert to a list
        image.append(data_file_paths)
    file=random.choice(image)  # pick one class at random
    file=random.choice(file)   # pick one image at random from that class
    return file

data_dir='J2/bird_photos'
image_path=image_path(data_dir)
image_path

Output

WindowsPath('J2/bird_photos/Cockatoo/071.jpg')

# Predict one photo from the dataset
predict_one_image(image_path=image_path,
                  model=model,
                  transform=train_transforms,
                  classes=classes)

Output

预测结果是:Cockatoo
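The prediction call above passes `model`, which holds the weights from the last epoch that ran. The training loop also saved a checkpoint dictionary with the keys `epoch`, `model_state_dict`, `optimizer_state_dict` and `loss`. The round trip below sketches how such a checkpoint could be restored before predicting. It is an illustration only: the `nn.Linear` stand-in network, the temporary file path and the stored numbers are placeholders, not the post's model or files.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Stand-in network and optimizer (placeholders for the real model)
net = nn.Linear(4, 2)
opt = torch.optim.Adam(net.parameters(), lr=1e-4)

# Save a checkpoint with the same keys as the training loop uses
path = os.path.join(tempfile.mkdtemp(), "checkpoint.pth")
torch.save({
    "epoch": 9,
    "model_state_dict": net.state_dict(),
    "optimizer_state_dict": opt.state_dict(),
    "loss": 0.261,
}, path)

# Restore into a freshly constructed network of the same architecture
restored = nn.Linear(4, 2)
ckpt = torch.load(path, map_location="cpu")
restored.load_state_dict(ckpt["model_state_dict"])
restored.eval()  # switch BN/dropout layers to inference behaviour

print(ckpt["epoch"], torch.equal(restored.weight, net.weight))
```

For the real model the same pattern would apply with a `ResNet50V2` instance in place of the stand-in, after which the restored network could be passed to `predict_one_image`.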

V. Summary

The key point is how to assemble the layers of ResNet50V2.

VI. Full diagram of the ResNet50V2 model structure
