之前做过数据平台,对于实时数据采集,使用了Flink。现在想想,在数据开发平台中,Flink的身影几乎无处不在,由于之前是边用边学,总体有点混乱,借此空隙,整理一下Flink的内容,算是一个知识积累,同时也分享给大家。

注意:由于框架不同版本改造会有些使用的不同,因此本次系列中使用基本框架是 Flink-1.19.x,Flink支持多种语言,这里的所有代码都是使用java,JDK版本使用的是19。

代码参考:https://github.com/forever1986/flink-study.git

在《系列之一 - 开篇》中说过,Flink是一个支持有状态的流处理。这一章就来讲一下Flink的状态管理

1 状态的分类

当用户在计算过程中可能需要存储一些中间状态时,比如在《系列之十 - Data Stream API的中间算子:合流和分流》中的1.2.3 模拟关联表的示例中使用过自己定义中间map存储流的数据,当时就说这种存储其实存在没有落盘以及没有清理的风险。后来在讲到窗口时补充了join和intervalJoin使用Flink自带的把用户保存中间状态数据。join和intervalJoin是内部Flink帮用户保存状态,如果用户想自定义状态,那么Flink也提供了对外存储数据的接口。

Flink的状态有两种: 托管状态(Managed State) 和 原始状态(Raw State) 。

- 托管状态(Managed State) :就是由Flink统一管理的,状态的存储访问、故障恢复和重组等一系列问题都由Flink实现,用户只要调接口就可以;

- 原始状态(Raw State):则是自定义的,相当于就是开辟了一块内存,需要用户自己管理,实现状态的序列化和故障恢复(在演示模拟关联表的示例中就是一个原始状态,只不过那时候还没有处理序列化等问题)。

其中 托管状态(Managed State) 又分为 算子状态(Operator State) 和 按键分区状态(keyed State) :

- 算子状态(Operator State) :适合所有算子,算子状态在整个子任务中共享。

- 按键分区状态(keyed State):需要经过keyBy之后使用,并且在子任务中会按照key进行分开存储,不同key之间不会共享。

Flink中提供的 托管状态(Managed State) 基本上能涵盖用户的99.9%需求,因此这里就不对 原始状态(Raw State) 做介绍,下面将从 托管状态(Managed State) 的两个分类 算子状态(Operator State) 和 按键分区状态(keyed State) 分别做讲解。

2 按键分区状态(keyed State)

2.1 常用的按键分区状态

前面提到 按键分区状态(keyed State) 本身需要经过keyBy之后使用,但无需用户自己实现落盘操作,由Flink自动管理。那么有哪些常用的按键分区状态,如下图:

- ValueState:存储一个普通变量值

- ListState:存储一个List的变量值

- MapState:存储一个Map的变量值

- ReducingState:存储一个规约后的数据,也就是会存储一个数据,但是自动规约

- AggregatingState:存储一个聚合后的数据,也就是会存储一个数据,但是自动聚合

2.2 使用按键分区状态的步骤

不同的流和不同的函数,其使用按键分区状态的步骤都差不多,只不过初始化和获取的Context定义有点差异,下面列举常用的两种类型,示例代码中也会分别演示这两种。

2.2.1 继承RichFunction函数使用按键分区状态的步骤

如果某个函数是继承RichFunction函数的,RichFunction本身已经封装了一遍,所以其使用步骤如下:

1)需要定义一个 按键分区状态(keyed State) 变量

2)在RichFunction中的open方法做初始化

3)使用不同 按键分区状态(keyed State) 进行操作

其中关于 按键分区状态(keyed State) 的初始化,需要使用一个StateDescriptor类进行初始化,每个 按键分区状态(keyed State) 都实现了一个StateDescriptor类。StateDescriptor类的初始化一般由2个参数:

- 第一个参数是自定义一个变量名称(变量名称不要重复即可)

- 第二个参数则是数据类型(参考《系列之十四 - Data Stream API的自定义数据类型》)

2.2.2 非RichFunction函数使用按键分区状态的步骤

前面之所以有open方法是因为实现了RichFunction接口封装的,但是对于那些没有继承RichFunction的函数,比如MapFunction等,该如何使用 按键分区状态(keyed State) 呢?步骤如下:

1)在定义Function时,实现CheckpointedFunction接口

2)需要定义一个 按键分区状态(keyed State) 变量

3)在initializeState方法中初始化按键分区状态(keyed State) 的值

4)使用不同 按键分区状态(keyed State) 进行操作

注意:CheckpointedFunction是检查点保存的内容,这一块还没有讲,这里先简单理解initializeState是从检查点获得数据,snapshotState是往检查点存入数据。

讲完了分类和使用步骤,下面就开始对常见的几种 按键分区状态(keyed State) 进行代码演示

2.3 代码示例

2.3.1 ValueState

| 方法 | 描述 |

|---|---|

| value() | 返回ValueState中的值 |

| update() | 更新ValueState中的值 |

示例说明:假设来自服务器的cpu值,使用一个ValueState存储累加cpu值

ValueStateDemo 类:

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

public class ValueStateDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,Double,Long>> map = text.map(new Tuple3MapFunction());

// 4. 定义单调递增watermark以及TimestampAssigner

WatermarkStrategy<Tuple3<String,Double,Long>> watermarkStrategy = WatermarkStrategy

// 设置单调递增

.<Tuple3<String,Double,Long>>forMonotonousTimestamps()

// 设置事件时间处理器

.withTimestampAssigner((element, recordTimestamp) ->{

return element.f2 * 1000L;

} );

SingleOutputStreamOperator<Tuple3<String,Double,Long>> mapWithWatermark = map.assignTimestampsAndWatermarks(watermarkStrategy);

// 5. 做keyBy

KeyedStream<Tuple3<String,Double,Long>, String> kyStream = mapWithWatermark.keyBy(new KeySelectorFunction());

SingleOutputStreamOperator<String> process = kyStream.process(new KeyedProcessFunction<>() {

// 1)定义ValueState值

ValueState<Tuple3<String, Double, Long>> currentValue;

@Override

public void open(OpenContext openContext) throws Exception {

super.open(openContext);

// 2)初始化ValueState值

ValueStateDescriptor<Tuple3<String, Double, Long>> descriptor = new ValueStateDescriptor<>("currentValue", Types.TUPLE(Types.STRING, Types.DOUBLE, Types.LONG));

currentValue = getRuntimeContext().getState(descriptor);

}

@Override

public void processElement(Tuple3<String, Double, Long> value, KeyedProcessFunction<String, Tuple3<String, Double, Long>, String>.Context ctx, Collector<String> out) throws Exception {

// 3)获取ValueState值

Tuple3<String, Double, Long> curValue = currentValue.value();

if(curValue==null){

curValue = value;

}else{

curValue.f1 = curValue.f1 + value.f1;

}

// 4)更新ValueState值

currentValue.update(curValue);

out.collect(curValue.toString());

}

});

// 6. 打印

process.print();

// 执行

env.execute();

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,Double,Long>> {

@Override

public Tuple3<String, Double, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

double value2 = Double.parseDouble("0");

long value3 = 0;

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

public static class KeySelectorFunction implements KeySelector<Tuple3<String,Double,Long>, String> {

@Override

public String getKey(Tuple3<String,Double,Long> value) throws Exception {

// 返回第一个值,作为keyBy的分类

return value.f0;

}

}

}

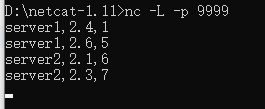

输入:

输出:

知识点:总共输入3条数据

1)可以看到server1的cpu是累加的结果

2)可以看到server1和server2自动按照key分开存储,所以server2的cpu值是2.1

2.3.2 ListState

| 方法 | 描述 |

|---|---|

| get() | 返回ListState中的值,返回的是一个Iterable迭代接口 |

| update() | 更新ListState中的值,需要传入一个List数据 |

| add() | 添加一个数据到ListState |

| addAll() | 添加一个List列表数据到ListState |

示例说明:假设来自服务器的cpu值,使用一个ListState存储,并计算cpu的平均值

ListStateDemo 类:

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.ListState;

import org.apache.flink.api.common.state.ListStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.util.Iterator;

public class ListStateDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,Double,Long>> map = text.map(new Tuple3MapFunction());

// 4. 定义单调递增watermark以及TimestampAssigner

WatermarkStrategy<Tuple3<String,Double,Long>> watermarkStrategy = WatermarkStrategy

// 设置单调递增

.<Tuple3<String,Double,Long>>forMonotonousTimestamps()

// 设置事件时间处理器

.withTimestampAssigner((element, recordTimestamp) ->{

return element.f2 * 1000L;

} );

SingleOutputStreamOperator<Tuple3<String,Double,Long>> mapWithWatermark = map.assignTimestampsAndWatermarks(watermarkStrategy);

// 5. 做keyBy

KeyedStream<Tuple3<String,Double,Long>, String> kyStream = mapWithWatermark.keyBy(new KeySelectorFunction());

SingleOutputStreamOperator<String> process = kyStream.process(new KeyedProcessFunction<>() {

// 1)定义ListState值

ListState<Tuple3<String, Double, Long>> currentValue;

@Override

public void open(OpenContext openContext) throws Exception {

super.open(openContext);

// 2)初始化ListState值

ListStateDescriptor<Tuple3<String, Double, Long>> descriptor = new ListStateDescriptor<>("currentValue", Types.TUPLE(Types.STRING, Types.DOUBLE, Types.LONG));

currentValue = getRuntimeContext().getListState(descriptor);

}

@Override

public void processElement(Tuple3<String, Double, Long> value, KeyedProcessFunction<String, Tuple3<String, Double, Long>, String>.Context ctx, Collector<String> out) throws Exception {

// 3)添加ListState数据

currentValue.add(value);

// 4)获取ListState值

Iterator<Tuple3<String, Double, Long>> iterator = currentValue.get().iterator();

double sum = 0;

int num = 0;

while (iterator.hasNext()){

Tuple3<String, Double, Long> tmpValue = iterator.next();

sum = sum + tmpValue.f1;

num++;

}

out.collect(value.f0 + "的平均cpu值=" + (sum/num));

}

});

// 6. 打印

process.print();

// 执行

env.execute();

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,Double,Long>> {

@Override

public Tuple3<String, Double, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

double value2 = Double.parseDouble("0");

long value3 = 0;

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

public static class KeySelectorFunction implements KeySelector<Tuple3<String,Double,Long>, String> {

@Override

public String getKey(Tuple3<String,Double,Long> value) throws Exception {

// 返回第一个值,作为keyBy的分类

return value.f0;

}

}

}

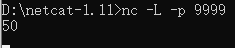

输入:

输出:

知识点:

1)输入第一条数据,现在key为server1的数据只有1条,因此平均值为2.4

2)输入第二条数据,现在key为server1的数据有2条,因此平均值为2.5

3)输入第一条数据,现在key为server2的数据只有1条,因此平均值为2.1

4)输入第二条数据,现在key为server2的数据有2条,因此平均值为2.2

2.3.3 MapState

| 方法 | 描述 |

|---|---|

| get() | 返回MapState中某个key的值 |

| put() | 更新MapState中某个key的值 |

| iterator() | 返回MapState中map的迭代器 |

| values() | 返回MapState中map的所有value的迭代器 |

| keys() | 返回MapState中map的所有value的迭代器 |

| contains() | 判断MapState中map的是否包含某个key值 |

| putAll() | 更新MapState的值 |

| entries() | 返回MapState中map的迭代器 |

| isEmpty() | 判断MapState是否为空 |

| remove() | 移除MapState中某个key的值 |

示例说明:假设来自不同超市的商品卖出情况,统计不同超市不同商品卖出的数量

MapStateDemo类:

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.MapState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.util.Iterator;

import java.util.Map;

public class MapStateDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,String,Long>> map = text.map(new Tuple3MapFunction());

// 4. 定义单调递增watermark以及TimestampAssigner

WatermarkStrategy<Tuple3<String,String,Long>> watermarkStrategy = WatermarkStrategy

// 设置单调递增

.<Tuple3<String,String,Long>>forMonotonousTimestamps()

// 设置事件时间处理器

.withTimestampAssigner((element, recordTimestamp) ->{

return element.f2 * 1000L;

} );

SingleOutputStreamOperator<Tuple3<String,String,Long>> mapWithWatermark = map.assignTimestampsAndWatermarks(watermarkStrategy);

// 5. 做keyBy

KeyedStream<Tuple3<String,String,Long>, String> kyStream = mapWithWatermark.keyBy(new KeySelectorFunction());

SingleOutputStreamOperator<String> process = kyStream.process(new KeyedProcessFunction<>() {

// 1)定义MapState值

MapState<String,Long> currentValue;

@Override

public void open(OpenContext openContext) throws Exception {

super.open(openContext);

// 2)初始化MapState值

MapStateDescriptor<String,Long> descriptor = new MapStateDescriptor<>("currentValue",Types.STRING, Types.LONG);

currentValue = getRuntimeContext().getMapState(descriptor);

}

@Override

public void processElement(Tuple3<String, String, Long> value, KeyedProcessFunction<String, Tuple3<String, String, Long>, String>.Context ctx, Collector<String> out) throws Exception {

// 3)获取某个key值的LMapState数据

Long num = currentValue.get(value.f1);

if(num==null){

num = value.f2;

}else {

num = num + value.f2;

}

// 4)更新LMapState数据

currentValue.put(value.f1, num);

// 4)获取所有LMapState数据

Iterator<Map.Entry<String, Long>> iterator = currentValue.iterator();

StringBuilder sb = new StringBuilder();

sb.append("==== key=").append(value.f0).append(" start ======\n");

while (iterator.hasNext()){

Map.Entry<String, Long> next = iterator.next();

sb.append("key=").append(next.getKey()).append(", value=").append(next.getValue()).append("\n");

}

sb.append("==== key=").append(value.f0).append(" end ======\n");

out.collect(sb.toString());

}

});

// 6. 打印

process.print();

// 执行

env.execute();

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,String,Long>> {

@Override

public Tuple3<String, String, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

String value2 = "";

long value3 = 0;

if(values.length >= 2){

value2 = values[1];

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

public static class KeySelectorFunction implements KeySelector<Tuple3<String,String,Long>, String> {

@Override

public String getKey(Tuple3<String,String,Long> value) throws Exception {

// 返回第一个值,作为keyBy的分类

return value.f0;

}

}

}

输入:

输出:

知识点:

1)输入第1条和第2条数据值,分别是不同supermarket的,因此goo1是分开的

2)输入第3条和第4条数据值,也是分别属于不同supermarket的,但是会与前面第1条和第2条数据值合并

2.3.4 ReducingState

reduce规约在前面已经讲过,就是不断累积成为一条数据。ReducingState只是Flink提供一个reduce操作的方便状态。

| 方法 | 描述 |

|---|---|

| add() | 将数据加入到ReducingState中,会触发ReducingStateDescriptor定义时的ReduceFunction方法 |

| get() | 获取ReducingState中累积到当前的结果 |

示例说明:假设来自服务器的cpu值,使用一个ReducingState存储累加cpu值,与ValueState示例一样

ReducingStateDemo类:

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.common.state.ReducingState;

import org.apache.flink.api.common.state.ReducingStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

public class ReducingStateDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,Double,Long>> map = text.map(new Tuple3MapFunction());

// 4. 定义单调递增watermark以及TimestampAssigner

WatermarkStrategy<Tuple3<String,Double,Long>> watermarkStrategy = WatermarkStrategy

// 设置单调递增

.<Tuple3<String,Double,Long>>forMonotonousTimestamps()

// 设置事件时间处理器

.withTimestampAssigner((element, recordTimestamp) ->{

return element.f2 * 1000L;

} );

SingleOutputStreamOperator<Tuple3<String,Double,Long>> mapWithWatermark = map.assignTimestampsAndWatermarks(watermarkStrategy);

// 5. 做keyBy

KeyedStream<Tuple3<String,Double,Long>, String> kyStream = mapWithWatermark.keyBy(new KeySelectorFunction());

SingleOutputStreamOperator<String> process = kyStream.process(new KeyedProcessFunction<>() {

// 1)定义ReducingState值

ReducingState<Tuple3<String, Double, Long>> currentValue;

@Override

public void open(OpenContext openContext) throws Exception {

super.open(openContext);

// 2)初始化ReducingState值

ReducingStateDescriptor<Tuple3<String, Double, Long>> descriptor = new ReducingStateDescriptor<>(

"currentValue",

(ReduceFunction<Tuple3<String, Double, Long>>) (value1, value2) -> {

// 将cpu值累加到第一条数据,返回第一条数据

value1.f1 = value1.f1 + value2.f1;

return value1;

},

Types.TUPLE(Types.STRING, Types.DOUBLE, Types.LONG));

currentValue = getRuntimeContext().getReducingState(descriptor);

}

@Override

public void processElement(Tuple3<String, Double, Long> value, KeyedProcessFunction<String, Tuple3<String, Double, Long>, String>.Context ctx, Collector<String> out) throws Exception {

// 3)更新ReducingState值

currentValue.add(value);

// 4)获取ReducingState值

out.collect(currentValue.get().toString());

}

});

// 6. 打印

process.print();

// 执行

env.execute();

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,Double,Long>> {

@Override

public Tuple3<String, Double, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

double value2 = Double.parseDouble("0");

long value3 = 0;

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

public static class KeySelectorFunction implements KeySelector<Tuple3<String,Double,Long>, String> {

@Override

public String getKey(Tuple3<String,Double,Long> value) throws Exception {

// 返回第一个值,作为keyBy的分类

return value.f0;

}

}

}

输入:

输出:

知识点:

这里使用和ValueState一样的示例和输入,可以看出输出结果是一样的。因此ReducingState功能就是前面提到的reduce方法规约功能一样

2.3.5 AggregatingState

Aggregate聚合与Reduce规约唯一不同之处就是Aggregate支持输入、累积器和输出的数据类型不一致,其它功能都是一样的。

| 方法 | 描述 |

|---|---|

| add() | 将数据加入到AggregatingState中,会触发AggregatingStateDescriptor定义时的AggregateFunction方法 |

| get() | 获取AggregatingState中累积到当前的结果 |

示例说明:假设来自服务器的cpu值,使用一个AggregatingState存储累加cpu值,与ReducingState示例一样

AggregatingStateDemo类

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.AggregatingState;

import org.apache.flink.api.common.state.AggregatingStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

public class AggregatingStateDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,Double,Long>> map = text.map(new Tuple3MapFunction());

// 4. 定义单调递增watermark以及TimestampAssigner

WatermarkStrategy<Tuple3<String,Double,Long>> watermarkStrategy = WatermarkStrategy

// 设置单调递增

.<Tuple3<String,Double,Long>>forMonotonousTimestamps()

// 设置事件时间处理器

.withTimestampAssigner((element, recordTimestamp) ->{

return element.f2 * 1000L;

} );

SingleOutputStreamOperator<Tuple3<String,Double,Long>> mapWithWatermark = map.assignTimestampsAndWatermarks(watermarkStrategy);

// 5. 做keyBy

KeyedStream<Tuple3<String,Double,Long>, String> kyStream = mapWithWatermark.keyBy(new KeySelectorFunction());

SingleOutputStreamOperator<String> process = kyStream.process(new KeyedProcessFunction<>() {

// 1)定义AggregatingState值

AggregatingState<Tuple3<String, Double, Long>, Double> currentValue;

@Override

public void open(OpenContext openContext) throws Exception {

super.open(openContext);

// 2)初始化AggregatingState值

AggregatingStateDescriptor<Tuple3<String, Double, Long>, Double, Double> descriptor = new AggregatingStateDescriptor<>(

"currentValue",

new AggregateFunction<>() {

@Override

public Double createAccumulator() {

return 0.0;

}

@Override

public Double add(Tuple3<String, Double, Long> value, Double accumulator) {

accumulator = accumulator + value.f1;

return accumulator;

}

@Override

public Double getResult(Double accumulator) {

return accumulator;

}

@Override

public Double merge(Double a, Double b) {

return 0.0;

}

},

Types.DOUBLE);

currentValue = getRuntimeContext().getAggregatingState(descriptor);

}

@Override

public void processElement(Tuple3<String, Double, Long> value, KeyedProcessFunction<String, Tuple3<String, Double, Long>, String>.Context ctx, Collector<String> out) throws Exception {

// 3)更新AggregatingState值

currentValue.add(value);

// 4)获取AggregatingState值

out.collect(currentValue.get().toString());

}

});

// 6. 打印

process.print();

// 执行

env.execute();

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,Double,Long>> {

@Override

public Tuple3<String, Double, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

double value2 = Double.parseDouble("0");

long value3 = 0;

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

public static class KeySelectorFunction implements KeySelector<Tuple3<String,Double,Long>, String> {

@Override

public String getKey(Tuple3<String,Double,Long> value) throws Exception {

// 返回第一个值,作为keyBy的分类

return value.f0;

}

}

}

输入:

输出:

知识点:可以看出效果和ReducingState示例一样,唯一不同的是这里定义输出是一个Double,只输出了cpu汇总值

2.3.6 非RichFunction使用演示

示例说明:这里使用和前面ValueState一样的示例,累加cpu值

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.runtime.state.FunctionInitializationContext;

import org.apache.flink.runtime.state.FunctionSnapshotContext;

import org.apache.flink.streaming.api.checkpoint.CheckpointedFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class ValueState2Demo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,Double,Long>> map = text.map(new Tuple3MapFunction());

// 4. 定义单调递增watermark以及TimestampAssigner

WatermarkStrategy<Tuple3<String,Double,Long>> watermarkStrategy = WatermarkStrategy

// 设置单调递增

.<Tuple3<String,Double,Long>>forMonotonousTimestamps()

// 设置事件时间处理器

.withTimestampAssigner((element, recordTimestamp) ->{

return element.f2 * 1000L;

} );

SingleOutputStreamOperator<Tuple3<String,Double,Long>> mapWithWatermark = map.assignTimestampsAndWatermarks(watermarkStrategy);

// 5. 做keyBy

KeyedStream<Tuple3<String,Double,Long>, String> kyStream = mapWithWatermark.keyBy(new KeySelectorFunction());

// 6. 使用map做数据累加

SingleOutputStreamOperator<String> process = kyStream.map(new CustomMapFunction());

// 7. 打印

process.print();

// 执行

env.execute();

}

public static class CustomMapFunction implements MapFunction<Tuple3<String,Double,Long>, String>, CheckpointedFunction {

// 1)定义ValueState值

ValueState<Double> currentValue;

/**

* 初始化算子状态

*/

@Override

public void initializeState(FunctionInitializationContext context) throws Exception {

// 2)初始化ValueState值

System.out.println("initializeState...");

ValueStateDescriptor<Double> descriptor = new ValueStateDescriptor<>("currentValue", Types.DOUBLE);

currentValue = context.getKeyedStateStore().getState(descriptor);

}

@Override

public String map(Tuple3<String,Double,Long> value) throws Exception {

double curValue = 0l;

// 3)获取ValueState值

if(currentValue.value()==null){

curValue = value.f1;

}else{

curValue = currentValue.value() + value.f1;

}

// 4)更新ValueState值

currentValue.update(curValue);

value.f1 = curValue;

return value.toString();

}

/**

* 在检查点触发时的操作

*/

@Override

public void snapshotState(FunctionSnapshotContext context) throws Exception {

System.out.println("snapshotState...");

}

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,Double,Long>> {

@Override

public Tuple3<String, Double, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

double value2 = Double.parseDouble("0");

long value3 = 0;

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

public static class KeySelectorFunction implements KeySelector<Tuple3<String,Double,Long>, String> {

@Override

public String getKey(Tuple3<String,Double,Long> value) throws Exception {

// 返回第一个值,作为keyBy的分类

return value.f0;

}

}

}

输入:

输出:

知识点:这个效果和ValueState一样,唯一不同的就是初始化时会调用initializeState方法进行初始化。其它的按键分区状态也是一样的,这里就不一一演示。

3 算子状态(Operator State)

3.1 常见的算子状态

算子状态(Operator State)与按键分区状态(keyed State)的区别在于是否经过keyBy,如果没有经过KeyBy则只能使用算子状态(Operator State),也就是意味着算子状态在整个子任务中共享。下图为2个在算子状态下可以使用的状态。

下面就ListState和BroadcastState进行演示,同时你会发现还有一种UnionListState,其实也是ListState,下面也会讲一下UnionListState这种状态

3.2 代码演示

3.2.1 ListState

示例说明:假设来自服务器的cpu值,使用一个ListState存储累加cpu值

OperatorListStateDemo类:

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.state.ListState;

import org.apache.flink.api.common.state.ListStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.runtime.state.FunctionInitializationContext;

import org.apache.flink.runtime.state.FunctionSnapshotContext;

import org.apache.flink.streaming.api.checkpoint.CheckpointedFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class OperatorListStateDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Double> map = text.map(new DoubleMapFunction());

// 5. 打印

map.print();

// 执行

env.execute();

}

public static class DoubleMapFunction implements MapFunction<String, Double>, CheckpointedFunction {

private Double cpu;

private ListState<Double> cpuState;

@Override

public Double map(String value) throws Exception {

String[] values = value.split(",");

double value2 = Double.parseDouble("0");

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

cpu = cpu + value2;

return cpu;

}

/**

* 初始化算子状态

*/

@Override

public void initializeState(FunctionInitializationContext context) throws Exception {

System.out.println("initializeState...");

ListStateDescriptor<Double> descriptor = new ListStateDescriptor<>("cpu", Types.DOUBLE);

cpuState = context.getOperatorStateStore().getListState(descriptor);

if(context.isRestored()){

cpu = cpuState.get().iterator().next();

}else{

cpu = 0.0;

}

}

/**

* 在检查点触发时,存储算子状态

*/

@Override

public void snapshotState(FunctionSnapshotContext context) throws Exception {

System.out.println("snapshotState...");

cpuState.clear();

cpuState.add(cpu);

}

}

}

输入:

输出:

知识点:输入4条数据,2条来自server1,2条来自server2。并行度为2.

1)可以看到initializeState方法被调用2次,说每个子任务都会初始化一次

2)输入第1条数据时,在并行度子任务1下,所以值为2.4

3)输入第2条数据时,在并行度子任务2下,所以值为2.6

4)输入第3条数据时,在并行度子任务1下,子任务1原先的值为2.4,累加本次2.1,结果为4.5

5)输入第4条数据时,在并行度子任务2下,子任务2原先的值为2.6,累加本次2.3,结果为4.9

6)由此可见,每个子任务都是共享一个状态值

3.2.2 UnionListState

UnionListState实际上也是使用ListState,其获取代码如下,使用的是getUnionListState方法,但是返回的还是ListState

cpuState = context.getOperatorStateStore().getUnionListState(descriptor);

UnionListState 与 ListState 的不同主要体现在重启任务时,如果修改了并行度,状态分配策略不同。

ListState 修改并行度之后分配是按照轮询分配,比如下面示例,一开始有2个并行度,6个状态;重启之后修改为3个并行度,那么每个子任务都会得到2个状态。

UnionListState 修改并行度之后分配每个并行度都会得到全量状态,比如下面示例,一开始有2个并行度,6个状态;重启之后修改为3个并行度,那么每个子任务都会得到6个状态。

3.2.3 BroadcastState

BroadcastState主要是在BroadcastStream流中使用。BroadcastStream是一个具有广播状态的流。这可以由使用数据流的任何流创建。broadcast(MapStateDescriptor[])方法并隐式地创建状态,用户可以在其中存储已创建的BroadcastStream的元素。请注意,BroadcastStream不能对流进一步的操作。唯一可用的选项是使用connect方法将它与键控流或非键控流连接起来。简单来讲,就是BroadcastStream只能通过connect连接与第三方的流连接,这样BroadcastStream中的数据或者状态才能实现广播到其它流的算子中。

示例说明:来自两条数据流,一条是服务器cpu信息(服务器id,cpu值,时间),一条是报警值设置流,通过报警值设置流动态设置cpu的报警值。

OperatorBroadcastDemo类:

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.state.BroadcastState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.api.common.state.ReadOnlyBroadcastState;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.BroadcastStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.BroadcastProcessFunction;

import org.apache.flink.util.Collector;

public class OperatorBroadcastDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(2);

// 2. 读取数据

DataStreamSource<String> dataSource = env.socketTextStream("127.0.0.1", 8888);// 服务器cpu数据流

DataStreamSource<String> configSource = env.socketTextStream("127.0.0.1", 9999);// cpu报警值数据流

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,Double,Long>> dataMap = dataSource.map(new Tuple3MapFunction());

MapStateDescriptor<String, Double> descriptor = new MapStateDescriptor<>("broadcast", Types.STRING, Types.DOUBLE);

BroadcastStream<String> broadcast = configSource.broadcast(descriptor);

SingleOutputStreamOperator<String> process = dataMap.connect(broadcast).process(new BroadcastProcessFunction<Tuple3<String, Double, Long>, String, String>() {

private final String WARN_VALUE_KEY = "warnValue";

private final double DEFAULT_WARN_VALUE = 80;

@Override

public void processElement(Tuple3<String, Double, Long> value, BroadcastProcessFunction<Tuple3<String, Double, Long>, String, String>.ReadOnlyContext ctx, Collector<String> out) throws Exception {

ReadOnlyBroadcastState<String, Double> broadcastState = ctx.getBroadcastState(descriptor);

Double warnValue = broadcastState.get(WARN_VALUE_KEY);

if (warnValue==null){

warnValue = DEFAULT_WARN_VALUE;

}

if(warnValue.compareTo(value.f1)<=0){

out.collect("服务器id=" + value.f0 + ", 时间="+ value.f2+", cpu="+ value.f1 + " 超过了警戒值" + warnValue + ", 发生报警!!!!");

}

}

@Override

public void processBroadcastElement(String value, BroadcastProcessFunction<Tuple3<String, Double, Long>, String, String>.Context ctx, Collector<String> out) throws Exception {

BroadcastState<String, Double> broadcastState = ctx.getBroadcastState(descriptor);

double warnValue = DEFAULT_WARN_VALUE;

try {

warnValue = Double.parseDouble(value);

}catch (Exception ignored){

// 异常不做处理,默认80

}

// 将值放入到广播中

broadcastState.put(WARN_VALUE_KEY, warnValue);

}

});

// 5. 打印

process.print();

// 执行

env.execute();

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,Double,Long>> {

@Override

public Tuple3<String, Double, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

double value2 = Double.parseDouble("0");

long value3 = 0;

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

}

输入1:先输入前2条数据,等待9999端口输入第1条数据后,再输入第3条数据

输入2:等待8888端口输入前2条数据后,才输入第1条数据

输出:

知识点:

1)8888端口输入前2条数据,只显示一条报警值,因为默认报警值是80,前2条数据只有一条大于80

2)9999端口输入第1条数据,这时候报警值被设置为50

3)8888端口输入第3条数据,显示报警,并且报警值已经被设置为50。

4)这里并行度设置为2,就是不同算子并行度子任务,广播都是共用的。

4 TTL

Flink提供这种 托管状态(Managed State) 的存储很方便,但是也会带来一个问题,就是如果用户想隔一段时间将数据如何清除,重新计算?比如前面ValueState案例,其ValueState是一直都会存在的,如果用户要清除是否需要自定义定时器的方式,定时清除数据。其实Flink提供了TTL(Time To Live 生存时间)的机制,只需要在初始化 托管状态(Managed State) 时设置TTL,即可自动定时清除。

示例说明:这里使用和前面ValueState一样的示例,累加cpu值,不过增加了TTL,定时10秒钟清除一次数据。

TTLDemo类:

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.StateTtlConfig;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class TTLDemo {

public static void main(String[] args) throws Exception {

// 1. 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. 读取数据

DataStreamSource<String> text = env.socketTextStream("127.0.0.1", 9999);

// 3. map做类型转换

SingleOutputStreamOperator<Tuple3<String,Double,Long>> map = text.map(new Tuple3MapFunction());

// 4. 定义单调递增watermark以及TimestampAssigner

WatermarkStrategy<Tuple3<String,Double,Long>> watermarkStrategy = WatermarkStrategy

// 设置单调递增

.<Tuple3<String,Double,Long>>forMonotonousTimestamps()

// 设置事件时间处理器

.withTimestampAssigner((element, recordTimestamp) ->{

return element.f2 * 1000L;

} );

SingleOutputStreamOperator<Tuple3<String,Double,Long>> mapWithWatermark = map.assignTimestampsAndWatermarks(watermarkStrategy);

// 5. 做keyBy

KeyedStream<Tuple3<String,Double,Long>, String> kyStream = mapWithWatermark.keyBy(new KeySelectorFunction());

SingleOutputStreamOperator<String> process = kyStream.process(new KeyedProcessFunction<>() {

// 1)定义ValueState值

ValueState<Tuple3<String, Double, Long>> currentValue;

@Override

public void open(OpenContext openContext) throws Exception {

super.open(openContext);

// 2) 设置TTL策略

StateTtlConfig stateTtlConfig = StateTtlConfig

// 设置10秒钟过期

.newBuilder(Duration.ofSeconds(10))

// 设置什么条件下更新过期时间,支持3种方式:创建和写入(OnCreateAndWrite)、读取和写入(OnReadAndWrite)、都不更新(Disabled)

.setUpdateType(StateTtlConfig.UpdateType.OnCreateAndWrite)

// 设置是否返回过期未清除数据(因为Flink清除数据机制是通过标识某个数据过期,另外有一个线程定时清除数据,这样就会导致有些数据过期,但是还未清除)

// 支持2种方式:不返回过期未清除数据(NeverReturnExpired)、返回过期未清除数据(ReturnExpiredIfNotCleanedUp)

.setStateVisibility(StateTtlConfig.StateVisibility.NeverReturnExpired)

.build();

// 3)初始化ValueState值

ValueStateDescriptor<Tuple3<String, Double, Long>> descriptor = new ValueStateDescriptor<>("currentValue", Types.TUPLE(Types.STRING, Types.DOUBLE, Types.LONG));

descriptor.enableTimeToLive(stateTtlConfig);

currentValue = getRuntimeContext().getState(descriptor);

}

@Override

public void processElement(Tuple3<String, Double, Long> value, KeyedProcessFunction<String, Tuple3<String, Double, Long>, String>.Context ctx, Collector<String> out) throws Exception {

// 4)获取ValueState值

Tuple3<String, Double, Long> curValue = currentValue.value();

if(curValue==null){

curValue = value;

}else{

curValue.f1 = curValue.f1 + value.f1;

}

// 5)更新ValueState值

currentValue.update(curValue);

out.collect(curValue.toString());

}

});

// 6. 打印

process.print();

// 执行

env.execute();

}

public static class Tuple3MapFunction implements MapFunction<String, Tuple3<String,Double,Long>> {

@Override

public Tuple3<String, Double, Long> map(String value) throws Exception {

String[] values = value.split(",");

String value1 = values[0];

double value2 = Double.parseDouble("0");

long value3 = 0;

if(values.length >= 2){

try {

value2 = Double.parseDouble(values[1]);

}catch (Exception e){

value2 = Double.parseDouble("0");

}

}

if(values.length >= 3){

try {

value3 = Long.parseLong(values[2]);

}catch (Exception ignored){

}

}

return new Tuple3<>(value1,value2,value3);

}

}

public static class KeySelectorFunction implements KeySelector<Tuple3<String,Double,Long>, String> {

@Override

public String getKey(Tuple3<String,Double,Long> value) throws Exception {

// 返回第一个值,作为keyBy的分类

return value.f0;

}

}

}

输入:输入第1条和第2条数据,隔10秒钟之后,再输入第3条数据

输出:

知识点:总共输入3条数据,第3条数据是隔10秒钟之后再输入的

1)控制台可以看到第2次输出时,是累加第1次是数据,所以cpu值=5

2)第3次输出时,其cpu值=2.8,可以看出没有累加之前的数据,因此设置TTL=10秒,超过10秒之后就自动清除数据。

5 状态后端(State Backends)

5.1 分类

Flink的状态定义获取只是Flink有状态特性的一方面,状态实际上还需要进行存储,这样任务下次重启时,可以从某个状态开始运行,不用从头开始,这样才体现Flink有状态特性的完整性。如果使用 托管状态(Managed State) 的,Flink内部会进行自动落盘存储。那么它存在在什么地方?

根据《官方文档》,目前Flink支持2种方式的的状态后端(State Backends)存储方式:

- HashMapStateBackend:使用hashMap存储,主要是存储子任务的JVM内存,这是默认的就是这种方式。这种方式因为会放在内存存储中,因此存储速度快,但同时需要占用JVM的内存,因此不适合数量过大的状态存储。(注意:使用内存存储并不是说它不会落盘,落盘是checkpoint的功能,这一块下一章再讲)

- EmbeddedRocksDBStateBackend:使用RocksDB数据库进行存储,有一个本地落盘的数据库。它需要将状态进行序列化落盘。这种方式会序列化落到本地硬盘,因此存储速度会受影响,但是由于落地到硬盘,因此适合数据量大的状态存储。

5.2 使用方式

Flink提供3种方式设置 状态后端(State Backends) ,其设置优先级(从高到低):

- Job 代码配置

Configuration config = new Configuration();

config.set(StateBackendOptions.STATE_BACKEND, "hashmap"); // HashMapStateBackend

config.set(StateBackendOptions.STATE_BACKEND, "rocksdb"); // EmbeddedRocksDBStateBackend,如果在本地运行,需要引入flink-statebackend-rocksdb依赖

- Job 提交时指定

flink run-application -t yarn-applicaiton -p 3 -Dstate.backend.type=rocksdb -c 全类名 jar包

- flink-conf.yaml 中的全局配置

state.backend.type: hashmap # 类型配置,可以是hashmap或者rocksdb

state.backend.incremental: false # 是否增量配置

结语:本章讲解了Flink的状态管理,状态管理只是Flink有状态特性的一方面,状态实际上还需要进行落盘,这样任务下次重启时,可以从某个状态开始运行,不用从头开始,这样才体现Flink有状态特性的完整性。下一章就开始讲解Flink的落盘处理:检查点。

4238

4238

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?