
Spark SQL Physical Execution

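Physical execution is the last step of Spark SQL query processing: the logical plan is converted into a physical plan (SparkPlan), which then runs directly on Spark Core and produces an RDD.

The whole Spark SQL execution flow is laid out clearly in QueryExecution, as the following code shows: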

//QueryExecution

// Run the optimizer

lazy val optimizedPlan: LogicalPlan = sparkSession.sessionState.optimizer.execute(withCachedData)

// Select a physical plan

lazy val sparkPlan: SparkPlan = {

SparkSession.setActiveSession(sparkSession)

// For now, simply take the first of the candidate physical plans

planner.plan(ReturnAnswer(optimizedPlan)).next()

}

// Prepare the plan for execution

lazy val executedPlan: SparkPlan = prepareForExecution(sparkPlan)

// Execute the physical plan

lazy val toRdd: RDD[InternalRow] = executedPlan.execute()
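Because every stage is a lazy val, nothing is computed until a later stage is first accessed, and each result is then cached. The following is a minimal sketch of that pattern only. ToyQueryExecution, its string "plans" and the trace buffer are hypothetical stand-ins and are not part of Spark.

```scala
import scala.collection.mutable.ArrayBuffer

// Toy stand-in for QueryExecution: each stage is a lazy val that depends on
// the previous one. Plans are plain strings, purely for illustration.
class ToyQueryExecution(logical: String) {
  // Records the order in which the stages are actually evaluated.
  val trace: ArrayBuffer[String] = ArrayBuffer.empty[String]

  lazy val optimizedPlan: String = { trace += "optimize"; s"Optimized($logical)" }
  lazy val sparkPlan: String = { val in = optimizedPlan; trace += "plan"; s"Physical($in)" }
  lazy val executedPlan: String = { val in = sparkPlan; trace += "prepare"; s"Prepared($in)" }
  lazy val toRdd: String = { val in = executedPlan; trace += "execute"; s"RDD($in)" }
}
```

Accessing toRdd on a fresh instance forces the earlier stages in dependency order, so trace ends up as optimize, plan, prepare, execute.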

Partitioning and Distribution

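In Spark, partitioning has always been a major factor in performance, especially for joins and aggregations. For example, in a hash join between plan1 and plan2, both plans must be hash-distributed on the same join keys. A broadcast join requires at least one side to be distributed by broadcast.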

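A Distribution describes how data is distributed, while a Partitioning describes the partitioning operation that produces a given layout.

Distribution is tied to Partitioning: it defines how the data is spread across the nodes of the cluster.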

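There are 6 kinds of Distribution:

| Distribution type | Description |
|---|---|
| UnspecifiedDistribution | No particular distribution is required |
| AllTuples | All rows in a single partition, e.g. for the GlobalLimit operator |
| BroadcastDistribution | Broadcast distribution: the data is broadcast to every node. The constructor parameter mode is the broadcast mode (BroadcastMode). For example, the requiredChildDistribution of a broadcast join contains BroadcastDistribution(mode) for the broadcast side |
| ClusteredDistribution | The constructor parameter clustering is a Seq[Expression] that plays the role of a hash key: after clustering, rows with the same values are placed in the same partition. For example, the requiredChildDistribution of the aggregation operator SortAggregateExec is ClusteredDistribution(exprs) |
| HashClusteredDistribution | The constructor parameter expressions is a Seq[Expression] that plays the role of a hash key: rows with the same values are placed in the same partition. For example, the requiredChildDistribution of a sort-merge join is [HashClusteredDistribution(leftKeys), HashClusteredDistribution(rightKeys)] |
| OrderedDistribution | The constructor parameter ordering is a Seq[SortOrder]; rows are sorted by the result of evaluating ordering. For a global Sort operator, requiredChildDistribution is [OrderedDistribution(sortOrder)] |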

Partitioning describes how data is partitioned, as shown in the figure above. Its key members and methods are:

trait Partitioning {

// Number of partitions of the RDD output by this SparkPlan

val numPartitions: Int

// Whether this partitioning satisfies the required distribution. When it returns false,

// a repartition is generally needed to reorganize the data.

final def satisfies(required: Distribution): Boolean = {

required.requiredNumPartitions.forall(_ == numPartitions) && satisfies0(required)

}

protected def satisfies0(required: Distribution): Boolean = required match {

case UnspecifiedDistribution => true

case AllTuples => numPartitions == 1

case _ => false

}

}
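To make the satisfies contract concrete, here is a self-contained sketch under simplifying assumptions. The types reuse Spark's names but are not Spark's classes: keys are plain strings rather than Catalyst expressions, and only a few distributions and partitionings are modelled.

```scala
// Simplified model of the Distribution / Partitioning contract.
// NOT Spark's classes: keys are strings instead of Catalyst expressions.
sealed trait Distribution { def requiredNumPartitions: Option[Int] = None }
case object UnspecifiedDistribution extends Distribution
case object AllTuples extends Distribution {
  override def requiredNumPartitions: Option[Int] = Some(1)
}
final case class ClusteredDistribution(keys: Seq[String]) extends Distribution

sealed trait Partitioning {
  def numPartitions: Int

  // The partition count must match (if one is required) and the layout must fit.
  final def satisfies(required: Distribution): Boolean =
    required.requiredNumPartitions.forall(_ == numPartitions) && satisfies0(required)

  protected def satisfies0(required: Distribution): Boolean = required match {
    case UnspecifiedDistribution => true
    case AllTuples               => numPartitions == 1
    case _                       => false
  }
}

final case class UnknownPartitioning(numPartitions: Int) extends Partitioning

final case class HashPartitioning(keys: Seq[String], numPartitions: Int) extends Partitioning {
  // Hashing on a subset of the clustering keys still co-locates equal key tuples.
  override protected def satisfies0(required: Distribution): Boolean =
    super.satisfies0(required) || (required match {
      case ClusteredDistribution(ks) => keys.nonEmpty && keys.forall(ks.contains)
      case _                         => false
    })
}
```

In this model, HashPartitioning(Seq("a"), 200) satisfies ClusteredDistribution(Seq("a", "b")), while UnknownPartitioning(200) does not satisfy AllTuples because the partition count differs from 1.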

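There are 7 kinds of Partitioning:

| Partitioning type | Description |
|---|---|
| UnknownPartitioning | The partitioning is unknown; no partitioning scheme is applied |
| RoundRobinPartitioning | Rows are distributed round-robin across the numPartitions partitions |
| HashPartitioning | Hash-based partitioning |
| RangePartitioning | Range-based partitioning |
| PartitioningCollection | A collection of partitionings, used to describe the output of a physical operator |
| BroadcastPartitioning | Broadcast partitioning |
| DataSourcePartitioning | Partitioning reported by a V2 DataSource |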

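Physical Plan (SparkPlan)

SparkPlan nodes correspond roughly one-to-one to LogicalPlan nodes. Like LogicalPlan, SparkPlan extends QueryPlan[PlanType <: QueryPlan[PlanType]], and physical operators follow the naming convention XXXExec. The important methods of SparkPlan are: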

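| Method | Description |
|---|---|
| outputPartitioning | How the output data of this SparkPlan is partitioned |
| requiredChildDistribution | The data distribution this SparkPlan requires of its children |
| outputOrdering | How the output data of this SparkPlan is sorted |
| requiredChildOrdering | The ordering this SparkPlan requires of its children |
| doExecute | Executes the plan and produces an RDD |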

abstract class SparkPlan extends QueryPlan[SparkPlan] with Logging with Serializable {

...

// How the output data of this SparkPlan is partitioned

def outputPartitioning: Partitioning = UnknownPartitioning(0)

// The data distribution this SparkPlan requires of its children

def requiredChildDistribution: Seq[Distribution] =

Seq.fill(children.size)(UnspecifiedDistribution)

// How the output data of this SparkPlan is sorted

def outputOrdering: Seq[SortOrder] = Nil

// The ordering this SparkPlan requires of its children

def requiredChildOrdering: Seq[Seq[SortOrder]] = Seq.fill(children.size)(Nil)

// Executes the plan and produces an RDD

protected def doExecute(): RDD[InternalRow]

...

}

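The following are a few common SparkPlan operators:

Projection (ProjectExec)

The doExecute method is simple: it calls execute on the child and, for each partition, builds a projection from projectList and the child's output attributes and applies it to every row, returning an RDD[InternalRow]. The output ordering and partitioning are taken from the child's ordering and partitioning.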

case class ProjectExec(projectList: Seq[NamedExpression], child: SparkPlan)

extends UnaryExecNode with CodegenSupport {

override def output: Seq[Attribute] = projectList.map(_.toAttribute)

override def inputRDDs(): Seq[RDD[InternalRow]] = {

child.asInstanceOf[CodegenSupport].inputRDDs()

}

protected override def doExecute(): RDD[InternalRow] = {

child.execute().mapPartitionsWithIndexInternal { (index, iter) =>

val project = UnsafeProjection.create(projectList, child.output,

subexpressionEliminationEnabled)

project.initialize(index)

iter.map(project)

}

}

override def outputOrdering: Seq[SortOrder] = child.outputOrdering

override def outputPartitioning: Partitioning = child.outputPartitioning

}

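Filter (FilterExec)

The doExecute method of the filter is also simple: it calls execute on the child, builds a predicate from the child's output attributes and condition, evaluates it on each row, and returns an RDD[InternalRow] with the rows that pass.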

case class FilterExec(condition: Expression, child: SparkPlan)

extends UnaryExecNode with CodegenSupport with PredicateHelper {

protected override def doExecute(): RDD[InternalRow] = {

val numOutputRows = longMetric("numOutputRows")

child.execute().mapPartitionsWithIndexInternal { (index, iter) =>

val predicate = newPredicate(condition, child.output)

predicate.initialize(0)

iter.filter { row =>

val r = predicate.eval(row)

if (r) numOutputRows += 1

r

}

}

}

override def outputOrdering: Seq[SortOrder] = child.outputOrdering

override def outputPartitioning: Partitioning = child.outputPartitioning

}
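Both ProjectExec and FilterExec work partition by partition and never move rows between partitions, which is why they can simply reuse the child's outputPartitioning and outputOrdering. The sketch below illustrates that idea with plain Scala collections. Row, Partitions and the two functions are hypothetical stand-ins for InternalRow, RDD[InternalRow] and the operators, not Spark APIs.

```scala
object PerPartitionOperators {
  type Row = Map[String, Int]
  type Partitions = Vector[Vector[Row]] // stand-in for RDD[InternalRow]

  // Like ProjectExec: keep only the requested columns, one partition at a time.
  def project(columns: Seq[String], child: Partitions): Partitions =
    child.map(part => part.map(row => columns.map(c => c -> row(c)).toMap))

  // Like FilterExec: drop rows that fail the predicate and count the survivors
  // (the count plays the role of the numOutputRows metric).
  def filter(pred: Row => Boolean, child: Partitions): (Partitions, Long) = {
    val out = child.map(_.filter(pred))
    (out, out.map(_.size.toLong).sum)
  }
}
```

The number of partitions and the relative order of rows inside each partition are unchanged by both functions.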

File Scan (FileSourceScanExec)

1. When FileSourceStrategy matches LogicalRelation(fsRelation: HadoopFsRelation, _, table, _), it generates a FileSourceScanExec.

//FileSourceStrategy

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

case PhysicalOperation(projects, filters,

l @ LogicalRelation(fsRelation: HadoopFsRelation, _, table, _)) =>

...

val scan =

FileSourceScanExec(

fsRelation,

outputAttributes,

outputSchema,

partitionKeyFilters.toSeq,

bucketSet,

dataFilters,

table.map(_.identifier))

...

}

2. Next, look at its doExecute method, which reads from inputRDD; how inputRDD is built is covered in the next step.

//FileSourceScanExec

protected override def doExecute(): RDD[InternalRow] = {

if (supportsBatch) {

WholeStageCodegenExec(this)(codegenStageId = 0).execute()

} else {

val numOutputRows = longMetric("numOutputRows")

if (needsUnsafeRowConversion) {

inputRDD.mapPartitionsWithIndexInternal { (index, iter) =>

val proj = UnsafeProjection.create(schema)

proj.initialize(index)

iter.map( r => {

numOutputRows += 1

proj(r)

})

}

} else {

inputRDD.map { r =>

numOutputRows += 1

r

}

}

}

}

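3. If the relation has a bucket spec (the data is bucketed by the configured columns) and bucketing is enabled, inputRDD is built with createBucketedReadRDD; otherwise createNonBucketedReadRDD is used.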

//FileSourceScanExec

private lazy val inputRDD: RDD[InternalRow] = {

val readFile: (PartitionedFile) => Iterator[InternalRow] =

relation.fileFormat.buildReaderWithPartitionValues(

sparkSession = relation.sparkSession,

dataSchema = relation.dataSchema,

partitionSchema = relation.partitionSchema,

requiredSchema = requiredSchema,

filters = pushedDownFilters,

options = relation.options,

hadoopConf = relation.sparkSession.sessionState.newHadoopConfWithOptions(relation.options))

relation.bucketSpec match {

case Some(bucketing) if relation.sparkSession.sessionState.conf.bucketingEnabled =>

createBucketedReadRDD(bucketing, readFile, selectedPartitions, relation)

case _ =>

createNonBucketedReadRDD(readFile, selectedPartitions, relation)

}

}

4. Taking createBucketedReadRDD as an example, it ultimately builds and returns a FileScanRDD.

//FileSourceScanExec

private def createBucketedReadRDD(

bucketSpec: BucketSpec,

readFile: (PartitionedFile) => Iterator[InternalRow],

selectedPartitions: Seq[PartitionDirectory],

fsRelation: HadoopFsRelation): RDD[InternalRow] = {

...

val filePartitions = Seq.tabulate(bucketSpec.numBuckets) { bucketId =>

FilePartition(bucketId, prunedFilesGroupedToBuckets.getOrElse(bucketId, Nil))

}

new FileScanRDD(fsRelation.sparkSession, readFile, filePartitions)

}
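The key point of the bucketed path is that there is exactly one partition per bucket id, including buckets that have no files. A minimal sketch of that grouping, assuming the bucket id of each file is already known: ToyFile and ToyFilePartition are hypothetical stand-ins for PartitionedFile and FilePartition.

```scala
object BucketedPartitionsSketch {
  // Hypothetical stand-ins for PartitionedFile and FilePartition.
  final case class ToyFile(path: String, bucketId: Int)
  final case class ToyFilePartition(index: Int, files: Seq[ToyFile])

  // One partition per bucket id, even for buckets without files,
  // so partition i always holds exactly the data of bucket i.
  def bucketedPartitions(numBuckets: Int, files: Seq[ToyFile]): Seq[ToyFilePartition] = {
    val byBucket: Map[Int, Seq[ToyFile]] = files.groupBy(_.bucketId)
    Seq.tabulate(numBuckets) { id =>
      ToyFilePartition(id, byBucket.getOrElse(id, Nil))
    }
  }
}
```

Keeping empty buckets as empty partitions means two tables bucketed the same way line up partition by partition.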

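5. Next, look at the compute method of FileScanRDD. The current file is read through readFunction, which is the readFile defined when inputRDD is built, that is, the function returned by FileFormat's buildReaderWithPartitionValues method. In the Parquet case, buildReaderWithPartitionValues constructs a ParquetFileReader, which is read iteratively through RecordReaderIterator. The result is an Iterator[InternalRow].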

override def compute(split: RDDPartition, context: TaskContext): Iterator[InternalRow] = {

val iterator = new Iterator[Object] with AutoCloseable {

...

private[this] val files = split.asInstanceOf[FilePartition].files.toIterator

private[this] var currentFile: PartitionedFile = null

private[this] var currentIterator: Iterator[Object] = null

def hasNext: Boolean = {

context.killTaskIfInterrupted()

(currentIterator != null && currentIterator.hasNext) || nextIterator()

}

def next(): Object = {

val nextElement = currentIterator.next()

nextElement

}

private def readCurrentFile(): Iterator[InternalRow] = {

try {

readFunction(currentFile)

} catch {

}

}

private def nextIterator(): Boolean = {

if (files.hasNext) {

currentFile = files.next()

InputFileBlockHolder.set(currentFile.filePath, currentFile.start, currentFile.length)

if (ignoreMissingFiles || ignoreCorruptFiles) {

currentIterator = new NextIterator[Object] {

private lazy val internalIter = readCurrentFile()

override def getNext(): AnyRef = {

try {

if (internalIter.hasNext) {

internalIter.next()

} else {

finished = true

null

}

} catch {

}

}

override def close(): Unit = {}

}

} else {

currentIterator = readCurrentFile()

}

try {

hasNext

} catch {

}

} else {

currentFile = null

InputFileBlockHolder.unset()

false

}

}

override def close(): Unit = {

incTaskInputMetricsBytesRead()

InputFileBlockHolder.unset()

}

}

context.addTaskCompletionListener[Unit](_ => iterator.close())

iterator.asInstanceOf[Iterator[InternalRow]] // This is an erasure hack.

}
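Stripped of metrics and error handling, compute is an iterator that opens the files of one partition one after another, only when the previous file is exhausted. The sketch below shows that chaining in isolation. The scan function and its readFunction parameter are illustrative assumptions and not the FileScanRDD API.

```scala
object ChainedFileIterator {
  // Reads "files" one after another, opening each one lazily, the way
  // FileScanRDD.compute advances from the current file to the next.
  // readFunction is a hypothetical stand-in for the format's reader.
  def scan[A](files: Seq[String], readFunction: String => Iterator[A]): Iterator[A] =
    new Iterator[A] {
      private val remaining = files.iterator
      private var current: Iterator[A] = Iterator.empty

      // Move to the next non-empty file; false once every file is consumed.
      private def nextIterator(): Boolean = {
        while (remaining.hasNext) {
          current = readFunction(remaining.next())
          if (current.hasNext) return true
        }
        false
      }

      def hasNext: Boolean = current.hasNext || nextIterator()

      def next(): A =
        if (hasNext) current.next()
        else throw new NoSuchElementException("no more rows")
    }
}
```

A file is opened only when hasNext or next is called and the previous file has no rows left, so an unread file costs nothing.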

Execution Strategies (SparkStrategy)

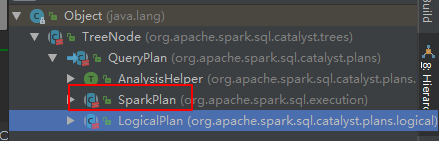

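SparkStrategy is the bridge from the logical plan to the physical plan. Its class hierarchy is shown in the figure above.

QueryPlanner applies every SparkStrategy to a LogicalPlan and outputs one or more physical plans (PhysicalPlan). Its subclass SparkPlanner defines the concrete strategies, which can be seen in the figure above; what each one does is summarized in the table further below.

The key method is plan:

1. Apply the strategies to the LogicalPlan to produce a collection of candidate physical plans.

2. If a candidate contains SparkPlan nodes of type PlanLater, take the LogicalPlan held by each placeholder, call plan() on it recursively, and replace the PlanLater with the physical plan of that child. (PlanLater does no work of its own here; it only serves as a placeholder.)

3. Prune the physical plans (currently the plans passed in are returned as they are, with no pruning).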

//QueryPlanner

def plan(plan: LogicalPlan): Iterator[PhysicalPlan] = {

val candidates = strategies.iterator.flatMap(_(plan))

val plans = candidates.flatMap { candidate =>

val placeholders = collectPlaceholders(candidate)

if (placeholders.isEmpty) {

Iterator(candidate)

} else {

placeholders.iterator.foldLeft(Iterator(candidate)) {

case (candidatesWithPlaceholders, (placeholder, logicalPlan)) =>

val childPlans = this.plan(logicalPlan)

candidatesWithPlaceholders.flatMap { candidateWithPlaceholders =>

childPlans.map { childPlan =>

candidateWithPlaceholders.transformUp {

case p if p.eq(placeholder) => childPlan

}

}

}

}

}

}

val pruned = prunePlans(plans)

assert(pruned.hasNext, s"No plan for $plan")

pruned

}
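The three steps can be sketched with a toy planner. This is a simplified model, not QueryPlanner itself: the plan types and the two strategies are hypothetical, and each placeholder is replaced by the first plan of its child instead of enumerating every combination of child plans.

```scala
object ToyPlanner {
  sealed trait Logical
  final case class Scan(table: String) extends Logical
  final case class Filter(cond: String, child: Logical) extends Logical

  sealed trait Physical
  final case class ScanExec(table: String) extends Physical
  final case class FilterExec(cond: String, child: Physical) extends Physical
  // Placeholder for a child that has not been planned yet.
  final case class PlanLater(logical: Logical) extends Physical

  type Strategy = Logical => Seq[Physical]

  // A strategy returns Nil for plans it does not handle.
  val filterStrategy: Strategy = {
    case Filter(c, child) => Seq(FilterExec(c, PlanLater(child)))
    case _                => Nil
  }
  val scanStrategy: Strategy = {
    case Scan(t) => Seq(ScanExec(t))
    case _       => Nil
  }
  val strategies: Seq[Strategy] = Seq(filterStrategy, scanStrategy)

  // Step 2: replace each placeholder by the first plan of its logical child.
  def resolve(p: Physical): Physical = p match {
    case PlanLater(l)     => plan(l).next()
    case FilterExec(c, k) => FilterExec(c, resolve(k))
    case leaf             => leaf
  }

  // Step 1 (apply all strategies) followed by step 2; no pruning is done.
  def plan(l: Logical): Iterator[Physical] = {
    val candidates = strategies.iterator.flatMap(s => s(l)).map(resolve)
    require(candidates.hasNext, s"No plan for $l")
    candidates
  }
}
```

Planning Filter("a > 1", Scan("t")) first yields FilterExec("a > 1", PlanLater(Scan("t"))), and resolving the placeholder gives FilterExec("a > 1", ScanExec("t")).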

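As the figure above shows, SparkStrategy has several commonly used strategies. Their roles are:

| Physical planning strategy | Description |
|---|---|
| DataSourceV2Strategy | Strategy for V2 data sources |
| DataSourceStrategy | Strategy for V1 data sources |
| FileSourceStrategy | File data source scan strategy |
| Aggregation | Aggregation strategy |
| Window | Window strategy |
| JoinSelection | Join selection strategy |
| InMemoryScans | In-memory data source scan strategy |
| BasicOperators | Strategy that generates basic operators |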

Basic Operator Strategy (BasicOperators)

It defines the mapping from logical plans to physical plans for basic operators such as Filter, Project and Union.

object BasicOperators extends Strategy {

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

...

case r: RunnableCommand => ExecutedCommandExec(r) :: Nil

case plan @ ApplicationLeafNode(output) =>

plan.buildLeafExecNode() :: Nil

case plan @ ApplicationUnaryNode(child, output) =>

plan.buildUnaryExecNode() :: Nil

case plan @ ApplicationBinaryNode(left, right, output) =>

plan.buildBinaryExecNode() :: Nil

case logical.Sort(sortExprs, global, child) =>

execution.SortExec(sortExprs, global, planLater(child)) :: Nil

case logical.Project(projectList, child) =>

execution.ProjectExec(projectList, planLater(child)) :: Nil

case logical.Filter(condition, child) =>

execution.FilterExec(condition, planLater(child)) :: Nil

case logical.LocalLimit(IntegerLiteral(limit), child) =>

execution.LocalLimitExec(limit, planLater(child)) :: Nil

case logical.GlobalLimit(IntegerLiteral(limit), child) =>

execution.GlobalLimitExec(limit, planLater(child)) :: Nil

case logical.Union(unionChildren) =>

execution.UnionExec(unionChildren.map(planLater)) :: Nil

...

}

}

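File Source Scan Strategy (FileSourceStrategy)

When the plan matches PhysicalOperation over a LogicalRelation node, the strategy builds a FileSourceScanExec and then, where needed, adds a filter (FilterExec) and a projection (ProjectExec) on top of it.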

//FileSourceStrategy

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

case PhysicalOperation(projects, filters,

l @ LogicalRelation(fsRelation: HadoopFsRelation, _, table, _)) =>

...

val scan =

FileSourceScanExec(

fsRelation,

outputAttributes,

outputSchema,

partitionKeyFilters.toSeq,

bucketSet,

dataFilters,

table.map(_.identifier))

val afterScanFilter = afterScanFilters.toSeq.reduceOption(expressions.And)

val withFilter = afterScanFilter.map(execution.FilterExec(_, scan)).getOrElse(scan)

val withProjections = if (projects == withFilter.output) {

withFilter

} else {

execution.ProjectExec(projects, withFilter)

}

withProjections :: Nil

case _ => Nil

}
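The tail of the strategy is a small idiom: combine the remaining filters with reduceOption, wrap the scan only if a filter exists, and add a projection only if the output differs. A self-contained sketch of that idiom follows, with hypothetical plan types and with conditions written as plain strings instead of Catalyst expressions.

```scala
object ScanAssemblySketch {
  sealed trait Plan { def output: Seq[String] }
  final case class ScanExec(output: Seq[String]) extends Plan
  final case class FilterExec(condition: String, child: Plan) extends Plan {
    def output: Seq[String] = child.output
  }
  final case class ProjectExec(projects: Seq[String], child: Plan) extends Plan {
    def output: Seq[String] = projects
  }

  // Conditions are strings here; Spark combines Catalyst expressions with And.
  def assemble(projects: Seq[String], afterScanFilters: Seq[String], scan: ScanExec): Plan = {
    // None when there is nothing left to filter after the scan.
    val combined: Option[String] = afterScanFilters.reduceOption((l, r) => s"($l AND $r)")
    val withFilter: Plan = combined.map(FilterExec(_, scan)).getOrElse(scan)
    // Skip the projection when the columns already match.
    if (projects == withFilter.output) withFilter else ProjectExec(projects, withFilter)
  }
}
```

With no remaining filters and an unchanged column list, assemble returns the bare scan, so no extra operators are added to the plan.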

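Preparing for Execution

After the planning strategies have produced a physical plan, it still cannot be executed directly. It must first go through prepareForExecution, which produces the physical plan that is finally executed. preparations defines 5 rules, with the following roles: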

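| Rule | Description |
|---|---|
| PlanSubqueries | Handles the physical plans of subqueries |
| EnsureRequirements | Ensures partitioning and ordering are correct |
| CollapseCodegenStages | Code generation related |
| ReuseExchange | Reuses Exchange nodes |
| ReuseSubquery | Reuses subqueries |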

//QueryExecution

protected def prepareForExecution(plan: SparkPlan): SparkPlan = {

preparations.foldLeft(plan) { case (sp, rule) => rule.apply(sp) }

}

protected def preparations: Seq[Rule[SparkPlan]] = Seq(

PlanSubqueries(sparkSession), // handles the physical plans of subqueries

EnsureRequirements(sparkSession.sessionState.conf), // ensures partitioning and ordering are correct

CollapseCodegenStages(sparkSession.sessionState.conf), // code generation related

ReuseExchange(sparkSession.sessionState.conf), // reuses Exchange nodes

ReuseSubquery(sparkSession.sessionState.conf)) // reuses subqueries
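prepareForExecution is a left fold: each rule receives the plan produced by the previous rule, in the order listed in preparations. A minimal sketch of the same shape, in which a plan is only a list of operator names and both rules are hypothetical:

```scala
object PreparationRulesSketch {
  type Plan = List[String] // a plan is just a list of operator names here
  type Rule = Plan => Plan

  // Hypothetical rule: insert a shuffle below every join.
  val ensureRequirements: Rule = plan => plan.flatMap {
    case "Join" => List("Join", "ShuffleExchange")
    case op     => List(op)
  }

  // Hypothetical rule: mark the plan as wrapped for code generation.
  val collapseCodegen: Rule = plan => "WholeStageCodegen" :: plan

  // Each rule receives the output of the previous one, in list order.
  def prepareForExecution(plan: Plan, rules: Seq[Rule]): Plan =
    rules.foldLeft(plan) { case (p, rule) => rule(p) }
}
```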

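Next, we focus on the EnsureRequirements rule.

The EnsureRequirements Rule

The main job of EnsureRequirements is to make sure partitioning and ordering are correct: if the partitioning or ordering of the input data does not meet what the current node's processing logic needs, EnsureRequirements adds shuffle or sort operations to the physical plan to satisfy the requirement. Start with its apply method:

1. If the node is a ShuffleExchangeExec whose target partitioning is a HashPartitioning, and the output partitioning of its child is a semantically equal HashPartitioning, the exchange is redundant and is replaced by its child; otherwise the exchange is kept. Every other operator goes through the ensureDistributionAndOrdering logic.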

//EnsureRequirements

def apply(plan: SparkPlan): SparkPlan = plan.transformUp {

// TODO: remove this after we create a physical operator for `RepartitionByExpression`.

case operator @ ShuffleExchangeExec(upper: HashPartitioning, child, _) =>

child.outputPartitioning match {

case lower: HashPartitioning if upper.semanticEquals(lower) => child

case _ => operator

}

case operator: SparkPlan =>

ensureDistributionAndOrdering(reorderJoinPredicates(operator))

}

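2. Get the distributions and orderings that the current node requires of its children. If a child's output partitioning already satisfies the required distribution, the child is kept unchanged. Otherwise, if BroadcastDistribution is required, a BroadcastExchangeExec(mode, child) node is added; in all other cases a ShuffleExchangeExec(distribution.createPartitioning(numPartitions), child) node is added (when the distribution does not require a specific number, numPartitions is taken from spark.sql.shuffle.partitions, default 200).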

//EnsureRequirements.ensureDistributionAndOrdering

val requiredChildDistributions: Seq[Distribution] = operator.requiredChildDistribution

val requiredChildOrderings: Seq[Seq[SortOrder]] = operator.requiredChildOrdering

var children: Seq[SparkPlan] = operator.children

children = children.zip(requiredChildDistributions).map {

case (child, distribution) if child.outputPartitioning.satisfies(distribution) =>

child

case (child, BroadcastDistribution(mode)) =>

BroadcastExchangeExec(mode, child)

case (child, distribution) =>

val numPartitions = distribution.requiredNumPartitions

.getOrElse(defaultNumPreShufflePartitions)

ShuffleExchangeExec(distribution.createPartitioning(numPartitions), child)

}
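The three-way decision in this step (keep the child, add a broadcast exchange, or add a shuffle exchange) can be sketched with a toy model. All types below are hypothetical simplifications: a plan only records the keys it is hash-partitioned on, and 200 is used as the default partition count to mirror the default of spark.sql.shuffle.partitions.

```scala
object EnsureDistributionSketch {
  sealed trait Distribution
  case object Unspecified extends Distribution
  case object Broadcast extends Distribution
  final case class Clustered(keys: Seq[String]) extends Distribution

  // A plan only exposes the keys its output is hash-partitioned on, if any.
  sealed trait Plan { def hashKeys: Option[Seq[String]] }
  final case class Leaf(name: String, hashKeys: Option[Seq[String]]) extends Plan
  final case class ShuffleExchange(keys: Seq[String], numPartitions: Int, child: Plan) extends Plan {
    def hashKeys: Option[Seq[String]] = Some(keys)
  }
  final case class BroadcastExchange(child: Plan) extends Plan {
    def hashKeys: Option[Seq[String]] = None
  }

  def satisfies(child: Plan, required: Distribution): Boolean = (child, required) match {
    case (_, Unspecified)                  => true
    case (_: BroadcastExchange, Broadcast) => true
    case (c, Clustered(keys))              => c.hashKeys.exists(ks => ks.nonEmpty && ks.forall(keys.contains))
    case _                                 => false
  }

  // Keep children that already satisfy the requirement; wrap the others.
  def ensure(children: Seq[Plan], required: Seq[Distribution], defaultPartitions: Int = 200): Seq[Plan] =
    children.zip(required).map {
      case (child, dist) if satisfies(child, dist) => child
      case (child, Broadcast)                      => BroadcastExchange(child)
      case (child, Clustered(keys))                => ShuffleExchange(keys, defaultPartitions, child)
      case (child, Unspecified)                    => child
    }
}
```

For a join on id where only the left side is already hash-partitioned on id, ensure leaves the left child untouched and wraps the right child in a ShuffleExchange.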

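3. For operators with two or more children that have specific distribution requirements, the children must also end up with the same number of partitions. If they do not, ShuffleExchangeExec nodes are created (or existing ones are replaced) to bring them to a common partition count.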

//EnsureRequirements.ensureDistributionAndOrdering

val childrenIndexes = requiredChildDistributions.zipWithIndex.filter {

case (UnspecifiedDistribution, _) => false

case (_: BroadcastDistribution, _) => false

case _ => true

}.map(_._2)

val childrenNumPartitions =

childrenIndexes.map(children(_).outputPartitioning.numPartitions).toSet

if (childrenNumPartitions.size > 1) {

val requiredNumPartitions = {

val numPartitionsSet = childrenIndexes.flatMap {

index => requiredChildDistributions(index).requiredNumPartitions

}.toSet

numPartitionsSet.headOption

}

val targetNumPartitions = requiredNumPartitions.getOrElse(childrenNumPartitions.max)

children = children.zip(requiredChildDistributions).zipWithIndex.map {

case ((child, distribution), index) if childrenIndexes.contains(index) =>

if (child.outputPartitioning.numPartitions == targetNumPartitions) {

child

} else {

val defaultPartitioning = distribution.createPartitioning(targetNumPartitions)

child match {

case ShuffleExchangeExec(_, c, _) => ShuffleExchangeExec(defaultPartitioning, c)

case _ => ShuffleExchangeExec(defaultPartitioning, child)

}

}

case ((child, _), _) => child

}

}
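The choice of the common partition count reduces to a small rule: an explicitly required count wins, otherwise the largest count among the children is used, and only children with a different count are reshuffled. A sketch of that rule alone, with hypothetical function names:

```scala
object AlignPartitionsSketch {
  // An explicitly required count wins; otherwise use the largest child count.
  def targetNumPartitions(childCounts: Seq[Int], required: Option[Int]): Int =
    required.getOrElse(childCounts.max)

  // Indices of the children that must be reshuffled to reach the target.
  def needsShuffle(childCounts: Seq[Int], required: Option[Int]): Seq[Int] = {
    val target = targetNumPartitions(childCounts, required)
    childCounts.zipWithIndex.collect { case (n, i) if n != target => i }
  }
}
```

Choosing the maximum when nothing is required means the child that already has the most partitions is never reshuffled.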

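4. An ExchangeCoordinator is used to coordinate the partitions of the shuffles. The withCoordinator path is taken only when spark.sql.adaptive.enabled is set to true, and it is currently not supported for Structured Streaming (SS).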

if (sparkSession.sessionState.conf.adaptiveExecutionEnabled) {

logWarning(s"${SQLConf.ADAPTIVE_EXECUTION_ENABLED.key} " +

"is not supported in streaming DataFrames/Datasets and will be disabled.")

}

val withCoordinator =

if (adaptiveExecutionEnabled && supportsCoordinator) {

val coordinator =

new ExchangeCoordinator(

targetPostShuffleInputSize,

minNumPostShufflePartitions)

children.zip(requiredChildDistributions).map {

case (e: ShuffleExchangeExec, _) =>

e.copy(coordinator = Some(coordinator))

case (child, distribution) =>

val targetPartitioning = distribution.createPartitioning(defaultNumPreShufflePartitions)

ShuffleExchangeExec(targetPartitioning, child, Some(coordinator))

}

} else {

children

}

