| 时间 | 版本 | 修改人 | 描述 |

|---|---|---|---|

| V0.1 | 宋全恒 | 新建文档 | |

作者

Lizhuohan是单位是UC Berkeley(加州大学伯克利分校)。这可以从文献的作者信息中得到确认,其中提到了 “1UC Berkeley” 作为其隶属单位。

论文主要内容为:

这篇论文《Train Large, Then Compress: Rethinking Model Size for Efficient Training and Inference of Transformers》由Zhuohan Li等人撰写,探讨了在资源受限的情况下,如何通过改变Transformer模型的大小来提高训练和推理的效率。以下是该论文的核心内容:

-

研究背景:在深度学习模型训练中,通常目标是在时间和内存限制内最大化准确率。由于计算资源有限,这要求在给定的硬件和训练时间内实现最高的模型准确率。

-

主要发现:尽管较小的Transformer模型在每次迭代中执行速度更快,但更宽和更深的模型可以在更少的步骤中收敛。这种加速收敛通常超过了使用更大模型的额外计算开销。因此,最具计算效率的训练策略是反直觉地训练非常大的模型,但在少量迭代后停止。

-

模型压缩:大型模型对压缩技术(如量化和剪枝)的鲁棒性比小型模型更强。这意味着经过重度压缩的大型模型比轻度压缩的小型模型能够实现更高的准确率。

-

实验设置:研究者训练了用于自然语言处理任务的Transformer模型,特别是自监督预训练和高资源机器翻译任务。他们改变了Transformer模型的宽度和深度,并评估了它们在自监督预训练和机器翻译任务上的训练时间和准确率。

-

实验结果:实验表明,更大的模型在更少的梯度更新中收敛到更低的验证错误。此外,大型模型在训练效率上的优势超过了它们在推理效率上的劣势。

-

模型压缩效果:研究者展示了大型模型在量化和剪枝方面的压缩效果。他们发现,即使是在相同的推理预算下,经过重度压缩的大型模型也比轻度压缩的小型模型表现更好。

-

为什么大型模型更好:大型模型之所以训练更快和压缩更好,是因为它们具有更好的样本效率,能够更快地最小化训练误差,并且在测试误差方面收敛得更快。此外,大型模型的权重更容易用低精度或稀疏矩阵来近似。

-

相关工作:论文还讨论了与提高训练速度和效率、模型缩放训练、超参数调整和AutoML相关的工作。

-

结论和未来工作:研究表明,增加模型的宽度和深度可以加速收敛,并且大型模型在压缩后可以提供更高的准确率。未来的工作将检验这些结论在更多领域(如计算机视觉)的适用性,并探索为什么大型Transformer模型训练快速和压缩良好,以及模型大小如何影响过拟合和超参数调整等问题。

这篇论文提供了对Transformer模型大小与训练效率之间关系的新见解,并提出了一种新的训练策略,即首先训练大型模型,然后对它们进行压缩,以实现在资源受限环境中的高效训练和推理。

摘要

由于硬件资源有限,训练深度学习模型的目标通常是在训练和推理的时间和内存约束下最大限度地提高准确性。我们研究了在这种情况下模型大小的影响,重点研究了受计算限制的NLP任务的Transformer模型:自监督预训练和高资源机器翻译。我们首先表明,尽管较小的Transformer模型每次迭代执行得更快,但更宽、更深的模型收敛的步骤要少得多。此外,这种收敛的加速通常超过了使用更大模型的额外计算开销。因此,计算效率最高的训练策略是违反直觉地训练非常大的模型,但在少量迭代后停止。这导致了大型Transformer模型的训练效率和小型Transformer模型推理效率之间的明显权衡。然而,我们表明,与小模型相比,大模型对量化和修剪等压缩技术更具鲁棒性。因此,我们可以两全其美:高度压缩的大型模型比轻度压缩的小型模型实现更高的精度。

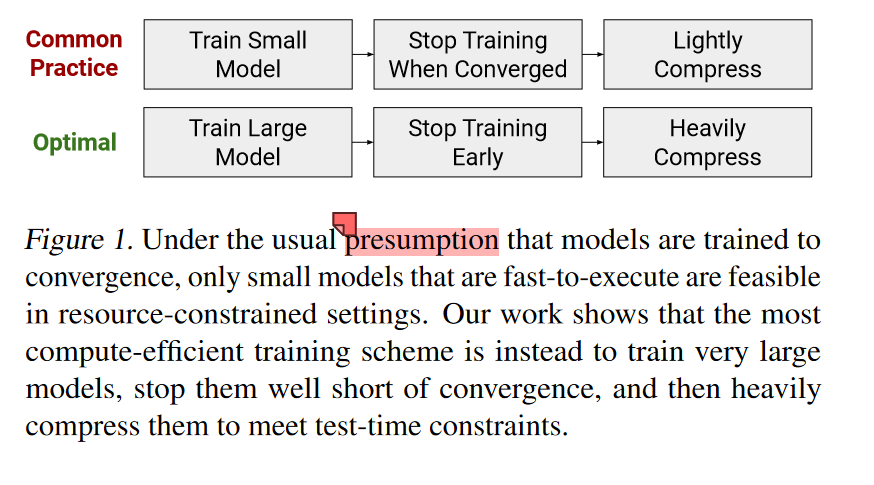

为了达到收敛的目标,在资源首先的环境中,只有快速执行的小模型是可行的。我们的工作表明最计算高效的训练方案相反是训练非常大的模型,在收敛之前停止运行,然后强烈的压缩这些大模型来满足测试-时间的约束。

简介

“Therefore, the most compute-efficient training strategy is to counterintuitively train extremely large models but stop after a small number of iterations.” (Li 等, 2020, p. 1) 因此,计算效率最高的训练策略是违反直觉地训练非常大的模型,但在少量迭代后停止。

“heavily compressed, large models achieve higher accuracy than lightly compressed, small models.” (Li 等, 2020, p. 1) 重度压缩的大型模型比轻度压缩的小型模型实现更高的精度。

“In the current deep learning paradigm, using more compute (e.g., increasing model size, dataset size, or training steps) typically leads to higher model accuracy” (Li 等, 2020, p. 1) 在当前的深度学习范式中,使用更多的计算(例如,增加模型大小、数据集大小或训练步骤)通常会导致更高的模型精度

“自监督模型训练 to scale to massive amounts of unlabeled data and very large neural models.” (Li 等, 2020, p. 1) 以扩展到大量未标记的数据和非常大的神经模型。

“This constraint(计算资源) causes the (often implicit) goal of model training to be maximizing compute efficiency: how to achieve the highest model accuracy given a fixed amount of hardware and training time.” (Li 等, 2020, p. 1) 这种约束导致模型训练的(通常是隐含的)目标是最大化计算效率:在给定固定数量的硬件和训练时间的情况下,如何实现最高的模型精度。

“In particular, there is typically an implicit assumption that models must be trained until convergence, which makes larger models appear less viable for limited compute budgets” (Li 等, 2020, p. 1) 特别是,通常有一个隐含的假设,即模型必须训练到收敛,这使得较大的模型在有限的计算预算下显得不太可行

“Concretely, we show that the fastest way to train Transformer models (Vaswani et al., 2017) is to substantially increase model size but stop training very early.” (Li 等, 2020, p. 1) 具体来说,我们表明训练Transformer模型的最快方法(Vaswani et al.,2017)是大幅增加模型大小,但很早就停止训练。

图2:对于给定的推理预算,增加Transformer模型的大小可以降低作为wall-clock函数的验证误差,并提高测试时间的准确性。(a) 演示了不同大小的ROBERTA模型在MLM预训练任务中的训练加速。在(b)中,我们采用已针对相同数量的wall-clock预训练的ROBERTA检查点,并在下游数据集(MNLI)上对其进行微调。然后,我们迭代地将模型权重修剪为零,并发现对于给定的测试时间内存预算,最好的模型是那些经过大规模训练然后被严重压缩的模型。

“we vary the width and depth of Transformer models and evaluate their training time and accuracy on self-supervised pretraining (ROBERTA (Liu et al., 2019b) trained on Wikipedia and BookCorpus) and machine translation (WMT14 English→French).” (Li 等, 2020, p. 1) 我们改变了Transformer模型的宽度和深度,并评估了它们在自监督预训练(ROBERTA(Liu et al.,2019b)和机器翻译(WMT14 English)上的训练时间和准确性→法语

“Moreover, this increase in convergence outpaces the additional computational overhead of using larger modelsthe most compute-efficient models are extremely large and stopped well short of convergence” (Li 等, 2020, p. 1) 此外,这种收敛性的增加超过了使用更大模型的额外计算开销——计算效率最高的模型非常大,而且远远没有收敛

“Although larger models train faster, they also increase the computational and memory requirements of inference. This increased cost is especially problematic in real-world applications, where the cost of inference dominates the cost of training” (Li 等, 2020, p. 2) 尽管较大的模型训练得更快,但它们也增加了推理的计算和内存需求。这种增加的成本在现实世界的应用中尤其成问题,因为推理成本在训练成本中占主导地位

“large models are considerably more robust to compression as compared to small models (Section 4)” (Li 等, 2020, p. 2) 与小型模型相比,大型模型对压缩的鲁棒性要高得多(第4节)

“large, heavily compressed models outperform small, lightly compressed models using comparable inference costs” (Li 等, 2020, p. 2) 使用可比较的推理成本,大的、高度压缩的模型胜过小的、轻度压缩的模型

“We show that the optimal model size is closely linked to the dataset size.” (Li 等, 2020, p. 2) 我们表明,最优模型大小与数据集大小密切相关。

“In particular, large models perform favorably in big data settings where overfitting is a limited concern.” (Li 等, 2020, p. 2) 特别是,大型模型在过拟合问题有限的大数据环境中表现良好。

“This error decreases as model size increases, i.e., greater overparameterization leads to easy-to-compress weights.” (Li 等, 2020, p. 2) 该误差随着模型大小的增加而减小,即,更大的过度参数化导致易于压缩权重。

实验设置

任务,模型,和数据集

自监督的预训练

“We also use an input sequence length of 128 and a batch size of 8192, unless otherwise noted.” (Li 等, 2020, p. 2) 我们还使用128的输入序列长度和8192的批量大小,除非另有说明。

“For ROBERTA, we vary the depth in {3, 6, 12, 18, 24}, and the hidden size in {256, 512, 768, 1024, 1536}.” (Li 等, 2020, p. 2) 对于ROBERTA,我们在{3,6,12,18,24}中改变深度,在{256,512,768,1024,1536}中改变隐藏大小。

“We hold out a random 0.5% of the data for validation and report the masked language modeling (MLM) perplexity on this data” (Li 等, 2020, p. 2) 我们随机拿出0.5%的数据进行验证,并报告了对这些数据的掩蔽语言建模(MLM)困惑

机器翻译

“For machine translation (MT) we train the standard Transformer architecture and hyperparameters on the WMT14 English→French dataset. We use the standard dataset splits: 36M sentences for training, newstest2013 for validation, and newstest2014 for testing.” (Li 等, 2020, p. 3) 对于机器翻译(MT),我们在WMT14 English上训练标准的Transformer架构和超参数→法国数据集。我们使用标准的数据集分割:36M个句子用于训练,newstest2013用于验证,newstest2014用于测试。

“We vary the model depth in {2, 6, 8} and hidden size in {128, 256, 512, 1024, 2048}.” (Li 等, 2020, p. 3) 我们在{2,6,8}中改变模型深度,在{128,256,512,1024,2048}中改变隐藏大小。

评价指标和Wall-Clock Time

FLOPs指标的问题

这些指标不能充分反映真实的训练时间。特别是,报告梯度步骤(gradient steps)不考虑使用较大批次或模型的成本。此外,尽管报告FLOP有助于进行比较,因为它与硬件无关,但它忽略了一个事实,即并行操作比现代硬件上的顺序操作便宜得多。

Wall-Clock time

我们使用单个机器来对每个模型大小的每个梯度步长的时间进行基准测试。特别是,我们训练模型,等待每个梯度步的时间稳定下来,然后我们使用100步的平均时间来计算训练持续时间。

在理解上,应该是等梯度计算的时候,比较稳定了,统计出100步的时间长度,然后统计出单个gradient step的耗时,用这个指标来衡量训练的进度。

论文中wall-clock time如何理解和计算?

在论文《Train Large, Then Compress: Rethinking Model Size for Efficient Training and Inference of Transformers》中,“wall-clock time”(实际时间)是指从开始执行一个任务到任务完成所经过的真实时间,不考虑任何并行计算或加速的可能性。这个概念与理论计算时间或处理器时间(CPU时间)相对,后者可能考虑的是模型执行的总操作数或指令数。

在实验中,研究者使用wall-clock time作为评估模型训练效率的主要指标。这是因为相比于梯度步骤数或训练FLOPs(浮点运算次数),**wall-clock time更能真实地反映在实际硬件上进行模型训练所需的时间。**例如,即使两个模型的梯度步骤数相同,较大的模型可能需要更多的时间来完成每个步骤,因为它可能有更大的参数集和更复杂的计算。

为了计算wall-clock time,研究者会在单个机器上(例如NVIDIA 16GB V100 GPU)训练模型,并等待每个梯度步骤的时间稳定下来。然后,他们会使用100个步骤的平均时间来计算整个训练过程的持续时间。这种方法确保了不同模型大小的训练时间可以在相同的硬件条件下进行比较,从而公平地评估不同模型的训练效率。

在论文中,研究者特别强调了wall-clock time的重要性,因为它能够揭示实际训练过程中的时间消耗,包括数据加载、模型前向传播、反向传播和参数更新等所有步骤。这种评估方式对于理解和优化实际训练过程中的效率至关重要。

相反,我们直接报告wall-clock作为我们的主要评估指标。由于不同机器的运行时间不同(硬件设置不同,作业没有隔离,等等)

“it neglects the fact that parallel operations are significantly cheaper than sequential operations on modern hardware.” (Li 等, 2020, p. 3) 它忽略了一个事实,即在现代硬件上并行操作比顺序操作便宜得多。

“we use a single machine to benchmark the time per gradient step for each model size.” (Li 等, 2020, p. 3) 我们使用单个机器来对每个模型大小的每个梯度步长的时间进行基准测试。

“In particular, we train models and wait for the time per gradient step to stabilize, and then we use the average time over 100 steps to calculate the training duration.” (Li 等, 2020, p. 3) 特别是,我们训练模型,等待每个梯度步的时间稳定下来,然后我们使用100步的平均时间来计算训练持续时间。

“We use Tensor2Tensor (Vaswani et al., 2018) for MT and fairseq (Ott et al., 2019) for RoBERTa. We train using a mix of v3-8 TPUs and 8xV100 GPUs for both tasks.” (Li 等, 2020, p. 3) 我们对MT使用Tensor2Tensor(Vaswani et al.,2018),对RoBERTa使用fairseq(Ott et al.,2019)。我们混合使用v3-8 TPU和8xV100 GPU进行两项任务的训练。

在论文《Train Large, Then Compress: Rethinking Model Size for Efficient Training and Inference of Transformers》中,“wall-clock time”(墙钟时间)是指从计算开始到计算结束所经过的实际时间。这个时间包括了程序执行的所有时间,不仅仅是CPU处理时间,还包括了等待资源、I/O操作、网络延迟等与CPU时间无关的时间。

在论文中,wall-clock time(实际时间)的计算方法涉及以下几个步骤:

-

选择基准硬件:研究者选择了特定的硬件设备(例如,NVIDIA 16GB V100 GPU)作为基准,用于测量时间。

-

训练模型:在该硬件上,研究者训练不同大小的Transformer模型。这包括设置模型参数、加载数据、执行前向和反向传播、更新权重等完整的训练过程。

-

梯度累积:为了适应不同大小的模型,研究者可能使用梯度累积技术。这意味着他们可能会在一个较大的批次上累积多个小批次的梯度,然后再执行一次参数更新。这样可以在有限的内存下训练更大的模型。

-

稳定时间:训练开始后,每个梯度步骤的时间可能会有所波动。研究者会等待这个过程稳定下来,确保每个步骤的时间测量是一致的。

-

测量时间:一旦梯度步骤的时间稳定,研究者会测量并记录每个梯度步骤的平均时间。这通常是通过计算一定数量的连续步骤(例如100步)的平均时间来完成的。

-

计算总时间:最后,研究者将每个梯度步骤的平均时间乘以训练过程中的总梯度步骤数,得到整个训练过程的wall-clock time。

例如,如果一个模型需要100,000个梯度步骤才能完成训练,而每个梯度步骤平均耗时0.5秒,那么整个训练过程的wall-clock time就是:

[ \text{Total Wall-Clock Time} = \text{Average Time per Gradient Step} \times \text{Total Gradient Steps} ]

[ \text{Total Wall-Clock Time} = 0.5 \text{ seconds/step} \times 100,000 \text{ steps} ]

[ \text{Total Wall-Clock Time} = 50,000 \text{ seconds} ]

这个总时间就是模型训练的实际时间,不考虑任何加速或并行计算的可能性。这种方法提供了一个实际的、可比较的指标,用于评估不同模型在实际训练中的效率。

Wall-clock time是衡量算法或程序执行效率的一个重要指标,因为它反映了用户实际等待的时间。在深度学习模型的训练和推理过程中,wall-clock time尤为重要,因为它直接关联到模型训练和部署的实时性,以及用户可能需要等待的时间。

计算wall-clock time通常很简单,只需要记录程序或实验开始和结束的时间点,然后计算两者之间的时间差。在编程实践中,可以使用各种编程语言提供的计时函数来测量wall-clock time。例如,在Python中,可以使用time模块的time()函数来获取当前时间的时间戳,然后通过计算开始和结束时间点的差值来得到wall-clock time。

例如,以下是一个简单的Python代码片段,用于计算某段代码执行的wall-clock time:

import time

# 记录开始时间

start_time = time.time()

# 执行某些操作或代码

# ...

# 记录结束时间

end_time = time.time()

# 计算wall-clock time

wall_clock_time = end_time - start_time

print(f"Wall-clock time: {wall_clock_time} seconds")

在这个例子中,wall_clock_time就是执行代码块所花费的实际时间,以秒为单位。这种方法可以用于测量任何程序或算法的执行时间,包括深度学习模型的训练和推理过程。

更大的模型训练更快

这是作者提出的第一个结论

“Wider and deeper Transformer models are more sampleefficient than small models: they reach the same level of performance using fewer gradient steps (Figures 3–5).” (Li 等, 2020, p. 3) 较宽和较深的Transformer模型比小型模型更具采样效率:它们使用较少的梯度步长达到相同的性能水平(图3-5)。

“this increase in convergence outpaces the additional computational overhead from increasing model size, even though we need to use more steps of gradient accumulation.” (Li 等, 2020, p. 3) 这种收敛性的增加超过了增加模型大小带来的额外计算开销,尽管我们需要使用更多的梯度累积步骤。

图4。作为梯度步长(左图)和wall-clock time(右图)的函数,较宽的模型比较窄的模型收敛得更快。

“For the masked language modeling task, the validation perplexity weakly depends on the shape of the model. Instead, the total number of model parameters is the key determiner of the convergence rate.” (Li 等, 2020, p. 3) 对于掩蔽语言建模任务,验证困惑弱地取决于模型的形状。相反,模型参数的总数是收敛速度的关键决定因素。

“We vary the batch size for the 12 layer, 768H model and increase the learning rate as is common practice” (Li 等, 2020, p. 3) 我们改变了12层768H模型的批量大小,并按照惯例提高了学习率

“Bigger batch sizes cause the model to converge in fewer steps. However, when adjusting for wall-clock time, increasing the batch size beyond a certain point only provides marginal improvements.2” (Li 等, 2020, p. 3) 批量大小越大,模型收敛的步骤就越少。然而,当根据墙上的时钟时间进行调整时,将批量大小增加到某一点之外只能提供边际改进。2

“training efficiency is maximized when models are trained near some critical batch size” (Li 等, 2020, p. 4) 当模型在某个临界批量附近训练时,训练效率最大化

“Overall, our results show that one should increase the batch size (and learning rate) until the critical batch size region is reached and then to focus on increasing model size.” (Li 等, 2020, p. 4) 总体而言,我们的结果表明,应该增加批量大小(和学习率),直到达到临界批量大小区域,然后集中精力增加模型大小。

“We investigate this by training ROBERTA models of different sizes and stopping them when they reach the same MLM perplexity (the larger models have been trained for less wall-clock time).” (Li 等, 2020, p. 4) 我们通过训练不同大小的ROBERTA模型并在它们达到相同的传销困惑时停止它们来研究这一点(较大的模型已经训练了较少的挂钟时间)。

“All models reach comparable accuracies (in fact, the larger models typically outperform the smaller ones), which shows that larger models are not more difficult to finetune.” (Li 等, 2020, p. 4) 所有模型都达到了可比的精度(事实上,较大的模型通常优于较小的模型),这表明较大的模型并不更难微调。

更大的模型更好压缩

压缩方法和评价

压缩方法

“These diminishing returns occur because (1) the per-step convergence improvements from using larger models decreases as the model gets larger and (2) the computational overhead increases as our hardware becomes increasingly compute-bound.” (Li 等, 2020, p. 4) 出现这些递减的回报是因为(1)使用更大模型的每步收敛改进随着模型变得更大而减少,(2)计算开销随着硬件变得越来越受计算限制而增加。

“Although the most compute-efficient training scheme is to use larger models, this results in models which are less inference efficient.” (Li 等, 2020, p. 4) 尽管计算效率最高的训练方案是使用较大的模型,但这会导致模型的推理效率较低。

“Model compression methods reduce the inference costs of trained models.” (Li 等, 2020, p. 4) 模型压缩方法降低了训练模型的推理成本。

“We focus on compression methods which are fast to perform—methods which require significant amounts of compute will negate the speedup from using larger models.3” (Li 等, 2020, p. 4) 我们专注于执行速度快的压缩方法——需要大量计算的方法会抵消使用较大模型带来的加速。3

“Quantization stores model weights in low precision formats to (1) accelerate operations when using hardware with reduced precision support and (2) reduce overall memory footprint” (Li 等, 2020, p. 4) 量化以低精度格式存储模型权重,以(1)在使用精度支持降低的硬件时加速操作,(2)减少总体内存占用

“Pruning sets neural network weights to zero to (1) remove operations and (2) reduce the memory footprint when models are stored in sparse matrix formats (LeCun et al., 1990; Han et al., 2015).” (Li 等, 2020, p. 4) 当模型以稀疏矩阵格式存储时,修剪将神经网络权重设置为零,以(1)去除运算,(2)减少内存占用(LeCun等人,1990;Han等人,2015)。

“Larger models typically converge faster as a function of both iterations (left plot) and wall-clock time (right plot).” (Li 等, 2020, p. 5) 作为迭代(左图)和Wall-Clock时间(右图)的函数,较大的模型通常收敛得更快。

“We train models of different sizes for 1,000,000 seconds,5 finetune them on MNLI/SST-2, and then apply quantization/pruning.” (Li 等, 2020, p. 5) 我们对不同大小的模型进行1000000秒的训练,5在MNLI/SST-2上对它们进行微调,然后应用量化/修剪。

“For evaluation, even though pruning and quantization will improve inference latency/throughput, quantifying these improvements is challenging because they are highly hardware-dependent.” (Li 等, 2020, p. 5) 对于评估而言,尽管修剪和量化将提高推理延迟/吞吐量,但量化这些改进是具有挑战性的,因为它们高度依赖于硬件。

更大的模型对于量化更加鲁棒

“We quantize every parameter, including the embedding matrix, but keep the model activations at full precision. We use floating point precisions in {4, 6, 8, 32} bits (using lower than 4-bits resulted in severe accuracy loss).” (Li 等, 2020, p. 5) 我们量化每个参数,包括嵌入矩阵,但保持模型激活的全精度。我们在{4,6,8,32}位中使用浮点精度(使用低于4位会导致严重的精度损失)。

“We quantize uniformly: the range of floats is equally split and represented by unsigned integers in {0, . . . , 2k − 1}, where k is the precision.” (Li 等, 2020, p. 5) 我们一致量化:浮点的范围被等分,并用{0,…,2k−1}中的无符号整数表示,其中k是精度。

“where Clamp() clamps all elements to the min/max range, W I is a set of integer indices, b·e is the round operator, ∆ is the distance between two adjacent quantized points, and [q0, q2k−1] indicates the quantization range.” (Li 等, 2020, p. 5) 其中Clamp()将所有元素箝位到最小/最大范围,W I是一组整数索引,b·e是舍入运算符,∆是两个相邻量化点之间的距离,[q0,q2k−1]表示量化范围。

“The larger models are more robust to quantization than the smaller models (the accuracy drop is smaller when the precision is reduced)” (Li 等, 2020, p. 5) 较大的模型比较小的模型对量化更具鲁棒性(当精度降低时,精度下降较小)

更大的模型对于剪枝更加鲁棒

“We use iterative magnitude pruning (Str ̈ om, 1997; Han et al., 2016): we iteratively zero out the smallest magnitude parameters and continue finetuning the model on the downstream task to recover lost accuracy.” (Li 等, 2020, p. 5) 我们使用迭代幅度修剪(Ströom,1997;Han等人,2016):我们迭代地将最小幅度参数归零,并在下游任务上继续微调模型,以恢复丢失的精度。

“We first find the 15% of weights with the smallest magnitude and set them to zero.6 We then finetune the model on the downstream task until it reaches within 99.5% of its original validation accuracy or until we reach one training epoch.” (Li 等, 2020, p. 5) 我们首先找到幅度最小的15%的权重,并将其设置为零。6然后,我们在下游任务中微调模型,直到它达到其原始验证精度的99.5%以内,或者直到我们达到一个训练时期。

“We then repeat this process—we prune another 15% of the smallest magnitude weights and finetune—stopping when we reach the desired sparsity level.” (Li 等, 2020, p. 5) 然后,我们重复这个过程——我们修剪另外15%的最小幅度权重并微调——当我们达到所需的稀疏度水平时停止。

“The additional training overhead from this iterative process is small because the model typically recovers its accuracy in significantly less than one epoch (sometimes it does not require any retraining to maintain 99.5%).” (Li 等, 2020, p. 5) 该迭代过程的额外训练开销很小,因为模型通常在明显少于一个历元的时间内恢复其准确性(有时不需要任何再训练来保持99.5%)。

“For most budgets (x-axis), the highest accuracy models are the ones which are trained large and then heavily compressed.” (Li 等, 2020, p. 6) 对于大多数预算(x轴),最高精度的模型是经过大规模训练然后进行大量压缩的模型。

“We report the model’s accuracy as a function of the total number of nonzero parameters.7” (Li 等, 2020, p. 6) 我们报告了模型的准确性作为非零参数总数的函数。7

“The larger models can be pruned more than the smaller models without significantly hurting accuracy. Consequently, the large, heavily pruned models provide the best accuracyefficiency trade-off.” (Li 等, 2020, p. 6) 较大的模型可以比较小的模型修剪得更多,而不会显著损害准确性。因此,大的、经过大量修剪的模型提供了最佳的精度-效率权衡。

“deep networks are more robust to pruning than wider networks, e.g., the 24 Layer, 768H model outperforms the 12 Layer, 1536H model at most test budgets.” (Li 等, 2020, p. 6) 深度网络比更宽的网络更易于修剪,例如,在大多数测试预算下,24层768H模型优于12层1536H模型。

“Pruning and quantization are complementary techniques for compressing Transformer models.” (Li 等, 2020, p. 6) 修剪和量化是压缩Transformer模型的互补技术。

“We first prune models to various sparsity levels (e.g., 15%, 30%, etc.) and then apply varying amounts of quantization (e.g., 8-bit, 4-bit, etc.) to each model.” (Li 等, 2020, p. 6) 我们首先将模型修剪到各种稀疏性水平(例如,15%、30%等),然后将不同量的量化(例如,8位、4位等)应用于每个模型。

“Large models that are heavily compressed still provide the best trade-off between accuracy and efficiency when leveraging both pruning and quantization.” (Li 等, 2020, p. 6) 当利用修剪和量化时,经过大量压缩的大型模型仍然在准确性和效率之间提供了最佳的折衷。

“A particularly strong compression method is to prune 30-40% of the weights and then quantize the model to 6-8 bits.” (Li 等, 2020, p. 6) 一种特别强的压缩方法是修剪30-40%的权重,然后将模型量化为6-8比特。

“Is it the larger model size or the lack of convergence that causes the enhanced compressibility?” (Li 等, 2020, p. 6) 是更大的模型大小还是缺乏收敛性导致了压缩性的增强?

“Quantization is hardly affected by pretraining convergence—the drop in accuracy between the full precision and the 4-bit precision MNLI models is comparable as the pretrained model becomes more converged.” (Li 等, 2020, p. 6) 量化几乎不受预训练收敛性的影响——随着预训练模型变得更加收敛,全精度和4位精度MNLI模型之间的精度下降是相当的。

更大的模型更好,何时?为什么?

用更大的模型,有更好的采样效率

“The models which are trained large and then compressed are the best performing for each test-time budget.” (Li 等, 2020, p. 7) 经过大规模训练然后压缩的模型对于每个测试时间预算来说都是性能最好的。

“One empirical reason for the acceleration in convergence is that larger Transformer models minimize the training error faster” (Li 等, 2020, p. 7) 收敛加速的一个经验原因是,较大的Transformer模型更快地将训练误差最小化

“And, since the generalization gap is small for our tasks due to very large training sets, the larger models also converge faster w.r.t test error.” (Li 等, 2020, p. 7) 而且,由于训练集非常大,我们的任务的泛化差距很小,因此较大的模型也比测试误差收敛得更快。

“Thus, although our main conclusion that increasing model size accelerates convergence still holds for the smaller models (e.g., the 12 layer model outperforms the 3 layer one), overfitting causes it to break down for the largest models.” (Li 等, 2020, p. 7) 因此,尽管我们的主要结论是,增加模型大小会加速收敛,但对于较小的模型(例如,12层模型的性能优于3层模型)仍然成立,但过拟合会导致其在最大的模型中崩溃。

对于大模型来说可管理的计算开销

“For larger models to train faster with respect to wall-clock time, their convergence improvements must not be negated by their slowdown in per-iteration time.” (Li 等, 2020, p. 7) 对于相对于墙时钟时间训练得更快的较大模型,它们的收敛性改进不能被每次迭代时间的减慢所抵消。

“Fortunately, parallel hardware (e.g., GPUs, TPUs) is usually not compute bound when training deep learning models. Instead, memory storage/movement is the limiting factor in image classification (Gomez et al., 2017),” (Li 等, 2020, p. 7) 幸运的是,在训练深度学习模型时,并行硬件(例如GPU、TPU)通常不受计算限制。相反,记忆存储/移动是图像分类的限制因素(Gomez等人,2017),

“Figure 8. We disentangle whether model size or pretraining convergence causes the enhanced compressibility of larger models.” (Li 等, 2020, p. 7) 图8。我们弄清楚模型大小或预训练收敛是否会导致较大模型的压缩性增强。

“Quantization is hardly affected by convergence—the drop in MNLI accuracy due to quantization is comparable as the pretrained model becomes more converged.” (Li 等, 2020, p. 7) 量化几乎不受收敛的影响——随着预训练的模型变得更加收敛,量化导致的MNLI精度下降是相当的。

“Instead, the factor that determines compressibility is model size—the drop in accuracy is very large when compressing smaller models and vice versa.” (Li 等, 2020, p. 7) 相反,决定可压缩性的因素是模型大小——当压缩较小的模型时,精度下降非常大,反之亦然。

“Moreover, when larger models cause hardware to run out of memory, gradient accumulation can trade-off memory for compute while still preserving the gains of large models, as shown in our experiments.” (Li 等, 2020, p. 7) 此外,如我们的实验所示,当较大的模型导致硬件内存不足时,梯度积累可以在保持较大模型增益的同时,权衡内存和计算。

对于大模型来说更小的压缩误差

“We first measure the quantization error—the difference between the full-precision and low-precision weights—for the 4-bit ROBERTA models.” (Li 等, 2020, p. 7) 我们首先测量4位ROBERTA模型的量化误差,即全精度权重和低精度权重之间的差异。

“The mean and variance of the quantization error are smaller for deeper models.” (Li 等, 2020, p. 7) 对于较深的模型,量化误差的均值和方差较小。

“The mean and variance of the weight difference after quantization (left) is consistently lower for the deeper models compared to the shallower models. The same holds for the difference after pruning (right).” (Li 等, 2020, p. 8) 与较浅的模型相比,较深的模型的量化后的权重差的平均值和方差(左)始终较低。修剪后的差异也是如此(右)。

“This shows that the larger model’s weights are naturally easier to approximate with low-precision / sparse matrices than smaller models” (Li 等, 2020, p. 8) 这表明,与较小的模型相比,较大模型的权重自然更容易用低精度/稀疏矩阵进行近似

“The mean and variance of the pruning error are smaller for deeper models (Figure 9, right).” (Li 等, 2020, p. 8) 对于较深的模型,修剪误差的平均值和方差较小(图9,右)。

“These two results show that the larger model’s weights are more easily approximated by low-precision or sparse matrices.” (Li 等, 2020, p. 8) 这两个结果表明,较大模型的权重更容易用低精度或稀疏矩阵来近似。

“The lottery ticket hypothesis argues that larger models are preferable as they have a higher chance of finding a lucky initialization in one of their subnetworks.” (Li 等, 2020, p. 8) 彩票假说认为,更大的模型更可取,因为它们有更高的机会在其子网络中找到幸运的初始化。

相关的工作

“Our work instead looks to choose the optimal model size for a fixed (small) hardware budget.” (Li 等, 2020, p. 8) 相反,我们的工作是为固定(小)硬件预算选择最佳型号尺寸。

“However, good initial models and hyperparameters are unknown when approaching new problems.” (Li 等, 2020, p. 8) 然而,在处理新问题时,良好的初始模型和超参数是未知的。

“In particular, the computeefficiency of these methods may improve by following our train large, then compress methodology.” (Li 等, 2020, p. 8) 特别是,通过遵循我们的大训练,然后压缩方法,可以提高这些方法的计算效率。

“They make similar conclusions that large, undertrained models are superior to small, well-trained models.” (Li 等, 2020, p. 8) 他们得出了类似的结论,即训练不足的大型模型优于训练良好的小型模型。

结论和进一步的工作

“We show that increasing model width and depth accelerates convergence in terms of both gradient steps and wall-clock time” (Li 等, 2020, p. 9) 我们表明,增加模型的宽度和深度会加速梯度步长和壁时钟时间的收敛

“Moreover, even though large models appear less efficient during inference, we demonstrate that they are more robust to compression. Therefore, we conclude that the best strategy for resourceconstrained training is to train large models and then heavily compress them.” (Li 等, 2020, p. 9) 此外,即使大型模型在推理过程中显得效率较低,我们也证明了它们对压缩更具鲁棒性。因此,我们得出结论,资源受限训练的最佳策略是训练大型模型,然后对其进行大量压缩。

“why do larger transformer models train fast and compress well, how does model size impact overfitting and hyperparameter tuning, and more generally, what other common design decisions should be rethought in the compute-efficient setting?” (Li 等, 2020, p. 9) 为什么更大的变压器模型训练得很快,压缩得很好,模型大小如何影响过拟合和超参数调整,更普遍地说,在计算高效的设置中,还应该重新考虑哪些常见的设计决策?

总结

[xmind 下载](https://download.csdn.net/download/lk142500/89030687)

[xmind 下载](https://download.csdn.net/download/lk142500/89030687)

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?