1. Architecture Diagram

2. Deployment Environment

| Hostname | OS version | Kernel version | IP address | Role |
|---|---|---|---|---|
| k8s-nginx-211 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.211 | Nginx node |
| k8s-master-212 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.212 | Master node |
| k8s-master-213 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.213 | Master node |
| k8s-master-214 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.214 | Master node |
| k8s-worker-215 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.215 | Worker node |
| k8s-worker-216 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.216 | Worker node |
| k8s-worker-217 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.217 | Worker node |

Note: CentOS 7.5 or 7.6 is recommended. On CentOS 7.2, 7.3 and 7.4, kubelet occasionally fails to start.

3. Environment Initialization

Note: the following steps must be performed on every master and worker node.

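3.1 Kernel Upgrade

Note: CentOS 7.6 ships with kernel 3.10.0 by default; this guide upgrades it to kernel 5.11.16.

Kernel packages:

kernel-lt (lt = long-term): long-term support branch

kernel-ml (ml = mainline): mainline branch (5.11.16 is installed from kernel-ml)

The upgrade steps are as follows: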

# 1. Download the kernel

Alternative download (CSDN mirror): https://download.csdn.net/download/m0_37814112/17047556

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-ml-5.11.16-1.el7.elrepo.x86_64.rpm

# 2. Install the kernel

yum localinstall kernel-ml-5.11.16-1.el7.elrepo.x86_64.rpm -y

# 3. Show the current default boot entry

grub2-editenv list

# 4. List all kernel boot entries in GRUB2

awk -F \' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

# 5. Make the new kernel the default boot entry

grub2-set-default 'CentOS Linux (5.11.16-1.el7.elrepo.x86_64) 7 (Core)'

# 6. Check the default boot entry again

grub2-editenv list

# 7. Reboot the server (required)
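`grub2-editenv list` only shows the saved default entry, so after the reboot it is worth confirming that the running kernel really is the new one. A minimal sketch, assuming GNU coreutils (`sort -V`); `kernel_at_least` is a hypothetical helper name, not part of any package:

```shell
# Hypothetical helper (not part of any package).
# kernel_at_least REQUIRED [CURRENT]
# Succeeds if CURRENT (default: the running kernel from `uname -r`) is at
# least REQUIRED, using GNU sort's version ordering (-V).
kernel_at_least() {
  local required="$1" current lowest
  current="${2:-$(uname -r)}"
  # Drop the release/distro suffix: 5.11.16-1.el7.elrepo.x86_64 -> 5.11.16
  current="${current%%-*}"
  # Whichever version sorts first is the smaller one; if that is REQUIRED,
  # the current kernel is new enough.
  lowest="$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n 1)"
  [ "$lowest" = "$required" ]
}
```

For example, `kernel_at_least 5.11.16 || echo "still running $(uname -r)"` prints a warning only when the old kernel is still running.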

3.2 Basic System Configuration

# 1. Set the hostname on each of the three master nodes and three worker nodes

master1 node: hostnamectl set-hostname k8s-master-212

master2 node: hostnamectl set-hostname k8s-master-213

master3 node: hostnamectl set-hostname k8s-master-214

worker1 node: hostnamectl set-hostname k8s-worker-215

worker2 node: hostnamectl set-hostname k8s-worker-216

worker3 node: hostnamectl set-hostname k8s-worker-217

# 2. Add the cluster hosts to /etc/hosts

vim /etc/hosts

192.168.1.212 k8s-master-212

192.168.1.213 k8s-master-213

192.168.1.214 k8s-master-214

192.168.1.215 k8s-worker-215

192.168.1.216 k8s-worker-216

192.168.1.217 k8s-worker-217
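Editing `/etc/hosts` by hand on six nodes is easy to get wrong. The sketch below appends the entries only when they are missing, so it can be re-run safely; `add_host_entries` is a hypothetical helper and assumes a POSIX shell with `awk`:

```shell
# Hypothetical helper (not part of any package).
# add_host_entries FILE "IP NAME"...
# Appends each "IP NAME" pair to FILE unless a line with that IP already
# lists that hostname.
add_host_entries() {
  local file="$1" entry ip name
  shift
  for entry in "$@"; do
    ip="${entry%% *}"
    name="${entry#* }"
    # awk exits 0 only if some line has field 1 == ip and another field == name
    if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done
}
```

For example: `add_host_entries /etc/hosts "192.168.1.212 k8s-master-212" "192.168.1.213 k8s-master-213"`, and so on for the remaining nodes.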

# 3. Set kernel parameters

cat > /etc/sysctl.d/k8s.conf <<EOF

vm.swappiness = 0

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-arptables = 1

EOF

sysctl --system
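Every sysctl key corresponds to a file under `/proc/sys` (dots become slashes), so the settings can be checked directly after `sysctl --system`. A sketch with hypothetical helper names; a missing `net/bridge/...` file typically means `br_netfilter` is not loaded yet:

```shell
# Hypothetical helpers (not part of any package).
# sysctl_path KEY: map a dotted sysctl key to its file under /proc/sys
sysctl_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

# check_sysctl KEY EXPECTED: succeed if the live value equals EXPECTED
check_sysctl() {
  local path
  path="$(sysctl_path "$1")"
  # A missing file usually means the owning module (e.g. br_netfilter) is not loaded
  if [ ! -r "$path" ]; then
    echo "missing: $path" >&2
    return 2
  fi
  [ "$(cat "$path")" = "$2" ]
}
```

For example: `check_sysctl net.bridge.bridge-nf-call-iptables 1 || echo 'not applied'`.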

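# 4. Load the br_netfilter module permanently

# The cluster network uses flannel, which requires the kernel parameter bridge-nf-call-iptables=1; that parameter only exists once the br_netfilter module is loaded.

# Quote the heredoc delimiter ('EOF') so that $file is written literally instead of being expanded by the current shell.
cat > /etc/rc.sysinit << 'EOF'
#!/bin/bash
for file in /etc/sysconfig/modules/*.modules ; do
  [ -x "$file" ] && "$file"
done
EOF
cat > /etc/sysconfig/modules/br_netfilter.modules << EOF
modprobe br_netfilter
EOF
chmod 755 /etc/sysconfig/modules/br_netfilter.modules
# Load the module now and re-apply the sysctl settings from step 3 (the net.bridge.* keys fail until it is loaded)
bash /etc/sysconfig/modules/br_netfilter.modules && sysctl --system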
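CentOS 7 boots with systemd, whose `systemd-modules-load.service` loads the modules listed, one per line, in `/etc/modules-load.d/*.conf`. As an alternative to the script-based approach above, a sketch that writes such a file; the directory is a parameter so it can be tried in a scratch directory first, and `write_modules_load_conf` is a hypothetical name:

```shell
# Hypothetical helper (not part of any package).
# write_modules_load_conf DIR NAME MODULE...
# Writes DIR/NAME.conf listing one kernel module per line, the format
# systemd-modules-load reads from /etc/modules-load.d at boot.
write_modules_load_conf() {
  local dir="$1" name="$2"
  shift 2
  mkdir -p "$dir"
  printf '%s\n' "$@" > "$dir/$name.conf"
}
```

For example: `write_modules_load_conf /etc/modules-load.d k8s br_netfilter`, then `modprobe br_netfilter` for the current boot.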

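# 5. Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

# 6. Disable SELinux permanently
# /etc/sysconfig/selinux is a symlink to /etc/selinux/config on CentOS 7; running sed -i on the symlink would replace it with a regular file, so edit the real file only.
sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
# Switch to permissive mode for the current boot as well
setenforce 0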

# 7. Disable swap

swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab
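The `sed 's/.*swap.*/#&/'` above comments out every line that contains the word swap anywhere, and adds another `#` to lines that are already comments each time it runs. A narrower sketch that only comments active entries whose filesystem type (third field) is `swap`; `comment_swap_entries` is a hypothetical helper:

```shell
# Hypothetical helper (not part of any package).
# comment_swap_entries FILE
# Prefix '#' to active fstab entries whose filesystem type (field 3) is
# "swap"; comment lines and other lines that merely mention "swap" are kept.
comment_swap_entries() {
  local file="$1" tmp rc=0
  tmp="$(mktemp)" || return 1
  # Rewrite via cat so the original file keeps its owner and mode
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$file" > "$tmp" &&
    cat "$tmp" > "$file" || rc=1
  rm -f "$tmp"
  return "$rc"
}
```

For example: `swapoff -a && comment_swap_entries /etc/fstab`.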

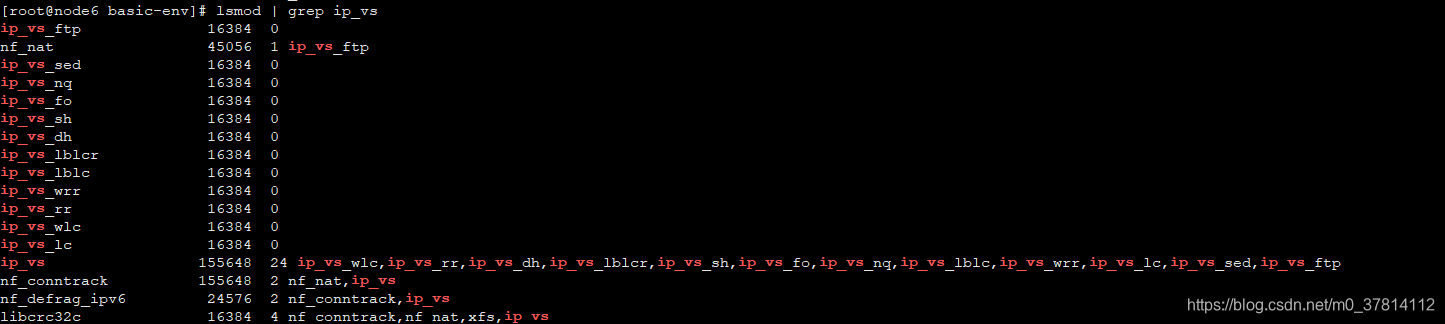

# 8. Install ipvsadm and load the IPVS kernel modules

yum install ipvsadm -y

# Quote the heredoc delimiter so ${...} and $? are written literally instead of being expanded now
cat > /etc/sysconfig/modules/ipvs.modules << 'EOF'
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
  # Load each module only if it exists for the running kernel
  /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
  if [ $? -eq 0 ]; then
    /sbin/modprobe ${kernel_module}
  fi
done
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules
lsmod | grep ip_vs
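To check in one go which of the required IPVS modules failed to load, the `lsmod` output can be compared against a list. A sketch with a hypothetical helper name; on kernels 4.19 and later the connection-tracking module is `nf_conntrack` (the older `nf_conntrack_ipv4` no longer exists), which is why the list above uses that name:

```shell
# Hypothetical helper (not part of any package).
# missing_modules MODULE... < lsmod output
# Print every requested module that is absent from the first column of
# `lsmod` output on stdin; return 1 if at least one is missing.
missing_modules() {
  local loaded m rc=0
  loaded="$(awk 'NR > 1 { print $1 }')"   # skip the "Module Size Used by" header
  for m in "$@"; do
    if ! printf '%s\n' "$loaded" | grep -qxF -- "$m"; then
      echo "$m"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack` prints nothing when everything is loaded.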

# 9. Raise the open-file and process limits (adjust to your environment)

vim /etc/security/limits.conf

root soft nofile 65535

root hard nofile 65535

root soft nproc 65535

root hard nproc 65535

root soft memlock unlimited

root hard memlock unlimited

* soft nofile 65535

* hard nofile 65535

* soft nproc 65535

* hard nproc 65535

* soft memlock unlimited

* hard memlock unlimited

# 10. Reboot the server (required)

As shown below:

四、Docker部署

说明:以下操作无论是master节点和worker节点均需要执行。

# 1、安装依赖包

yum install yum-utils device-mapper-persistent-data lvm2 -y

# 2、设置Docker源

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

或

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 3、docker安装版本查看

yum list docker-ce --showduplicates | sort -r

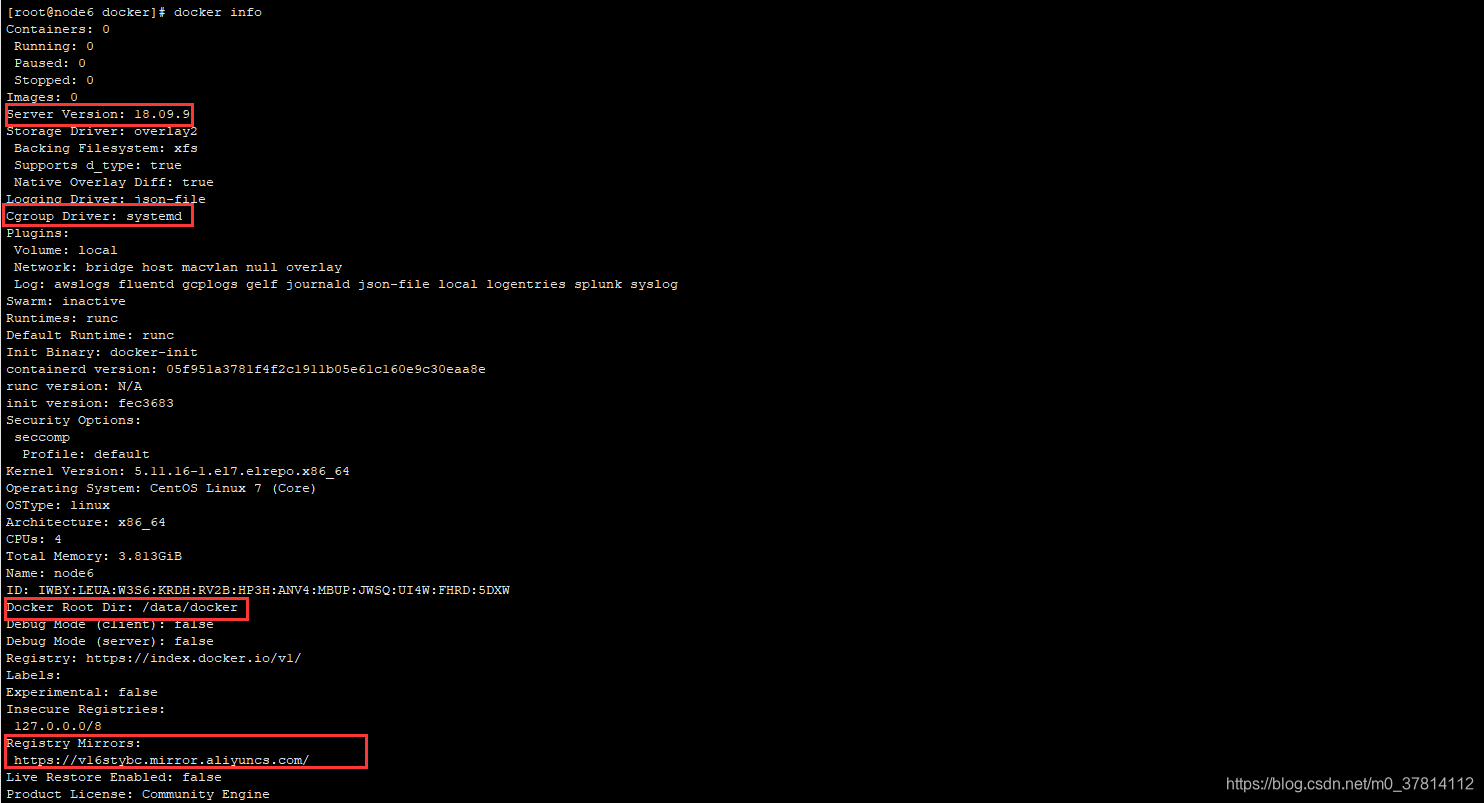

# 4、指定安装的docker版本为18.09.9

yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y

# 5、启动Docker

systemctl start docker && systemctl enable docker

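# 6. Change the cgroup driver to systemd

# Move the Docker data directory (default /var/lib/docker) to the largest disk on the host, or a subdirectory of it; "data-root" replaces the deprecated "graph" option

# Configure the Aliyun registry mirror

vim /etc/docker/daemon.json
{
  "registry-mirrors": ["https://v16stybc.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "data-root": "/data/docker"
}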

systemctl daemon-reload && systemctl restart docker
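Because daemon.json is identical on every node, it can also be generated by a script. A sketch, assuming the same mirror URL and data directory as above; `write_daemon_json` is a hypothetical helper, and it uses `data-root`, the replacement for the deprecated `graph` key:

```shell
# Hypothetical helper (not part of any package).
# write_daemon_json FILE DATA_ROOT [MIRROR]
# Renders a Docker daemon.json with the systemd cgroup driver and data-root.
write_daemon_json() {
  local file="$1" data_root="$2"
  local mirror="${3:-https://v16stybc.mirror.aliyuncs.com}"
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "data-root": "$data_root"
}
EOF
}
```

After `systemctl daemon-reload && systemctl restart docker`, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.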

As shown below:

5. Nginx Deployment

Note: the following steps are only performed on the Nginx node.

# 1. Pull the image

docker pull nginx:1.17.2

# 2. Edit the configuration file

mkdir -p /data/nginx && cd /data/nginx

vim nginx-lb.conf

user nginx;
worker_processes 2; # adjust to the number of CPU cores

error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 8192;
}

stream {
    upstream apiserver {
        server 192.168.1.212:6443 weight=5 max_fails=3 fail_timeout=30s; # master-01 apiserver IP and port
        server 192.168.1.213:6443 weight=5 max_fails=3 fail_timeout=30s; # master-02 apiserver IP and port
        server 192.168.1.214:6443 weight=5 max_fails=3 fail_timeout=30s; # master-03 apiserver IP and port
    }

    server {
        listen 8443; # listening port
        proxy_pass apiserver;
    }
}
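The upstream block must list the three master IPs (212, 213 and 214), not the load balancer itself. A small sketch that renders it from a list of IPs so the addresses cannot drift; `render_upstream` is a hypothetical helper and reuses the weight, max_fails and fail_timeout values from the file above:

```shell
# Hypothetical helper (not part of any package).
# render_upstream PORT IP...
# Prints an nginx stream upstream block with one server line per master IP.
render_upstream() {
  local port="$1" ip
  shift
  echo "upstream apiserver {"
  for ip in "$@"; do
    printf '    server %s:%s weight=5 max_fails=3 fail_timeout=30s;\n' "$ip" "$port"
  done
  echo "}"
}
```

Before starting the container, the finished file can be syntax-checked with `docker run --rm -v /data/nginx/nginx-lb.conf:/etc/nginx/nginx.conf:ro nginx:1.17.2 nginx -t`.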

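# 3. Start the container (publish port 8443 on the Nginx node's IP; -p takes ip:hostPort:containerPort)

docker run -d --restart=unless-stopped \
  -p 192.168.1.211:8443:8443 \
  -v /data/nginx/nginx-lb.conf:/etc/nginx/nginx.conf \
  --name nginx-lb \
  --hostname nginx-lb \
  nginx:1.17.2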
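To confirm from another node that the load balancer is listening on 8443 without installing `nc` or `telnet`, bash's `/dev/tcp` redirection can be used. A sketch, assuming bash and coreutils `timeout`; `port_open` is hypothetical. It only proves nginx accepts connections, not that the apiservers behind it are up (they are not until section 6.4):

```shell
# Hypothetical helper (not part of any package).
# port_open HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT can be opened within the timeout.
port_open() {
  local host="$1" port="$2" t="${3:-2}"
  # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection
  timeout "$t" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example: `port_open 192.168.1.211 8443 && echo 'nginx-lb is listening'`.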

6. Kubernetes Deployment

6.1 Configure the Kubernetes yum repository

Note: the following steps must be performed on every master and worker node.

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all && yum -y makecache

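6.2 Install kubelet, kubeadm and kubectl

Note: the following steps must be performed on every master and worker node.

# 1. List the available versions

yum list kubelet --showduplicates | sort -r

# 2. Install version 1.17.4

yum install kubelet-1.17.4 kubeadm-1.17.4 kubectl-1.17.4 -y

6.3 Pull the Kubernetes images

Note: the following steps must be performed on every master and worker node.

# Pull the Kubernetes images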

vim get_image.sh

#!/bin/bash

url=registry.cn-hangzhou.aliyuncs.com/google_containers

version=v1.17.4

images=(`kubeadm config images list --kubernetes-version=$version|awk -F '/' '{print $2}'`)

for imagename in ${images[@]} ; do

docker pull $url/$imagename

docker tag $url/$imagename k8s.gcr.io/$imagename

docker rmi -f $url/$imagename

done
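Before pulling, it can help to preview exactly which pull/tag/rmi commands `get_image.sh` will run. A dry-run sketch that mirrors the script's logic; `mirror_name` and `plan_image_sync` are hypothetical helpers, and only the last path component of each image is kept (the same result as the script's `awk -F '/' '{print $2}'` for `k8s.gcr.io/...` names):

```shell
# Hypothetical helpers (not part of any package).
# mirror_name IMAGE [MIRROR]: k8s.gcr.io/NAME:TAG -> MIRROR/NAME:TAG
mirror_name() {
  local mirror="${2:-registry.cn-hangzhou.aliyuncs.com/google_containers}"
  printf '%s/%s\n' "$mirror" "${1##*/}"
}

# plan_image_sync < image list: print (not run) the docker commands per image
plan_image_sync() {
  local image src
  while read -r image; do
    [ -n "$image" ] || continue
    src="$(mirror_name "$image")"
    printf 'docker pull %s\ndocker tag %s %s\ndocker rmi %s\n' "$src" "$src" "$image" "$src"
  done
}
```

For example: `kubeadm config images list --kubernetes-version=v1.17.4 | plan_image_sync`.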

# Pull the flannel network image

docker pull quay.io/coreos/flannel:v0.14.0-rc1

As shown below:

Notes:

1. The Kubernetes images above must be pulled on every node, master and worker alike.

2. CSDN download: flannel:v0.14.0-rc1

6.4 Deploying the First Master Node

Note: the following steps are only performed on the first master node.

6.4.1 Create the initialization configuration file

# Adjust the values below to match your environment:

vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
networking:
  serviceSubnet: "10.96.0.0/16"   # Service CIDR
  podSubnet: "10.48.0.0/16"       # Pod CIDR
kubernetesVersion: "v1.17.4"      # Kubernetes version
controlPlaneEndpoint: "192.168.1.211:8443"   # apiserver endpoint: the nginx-lb IP and listening port
apiServer:
  extraArgs:
    authorization-mode: "Node,RBAC"
    service-node-port-range: 30000-36000   # NodePort service port range
imageRepository: ""
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
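serviceSubnet and podSubnet must not overlap each other or the 192.168.1.0/24 host network, and podSubnet must later match flannel's `Network`. A sketch of an overlap check in POSIX shell arithmetic; `ip_to_int` and `cidrs_overlap` are hypothetical helpers:

```shell
# Hypothetical helpers (not part of any package).
# ip_to_int A.B.C.D: print the address as a 32-bit integer
ip_to_int() {
  (
    IFS=.
    set -- $1   # split into four octets; the subshell keeps the IFS change local
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  )
}

# cidrs_overlap CIDR1 CIDR2: succeed if the two IPv4 ranges share any address.
# Two CIDR blocks overlap exactly when their network parts agree under the
# shorter (wider) of the two prefixes.
cidrs_overlap() {
  local n1="${1%/*}" p1="${1#*/}" n2="${2%/*}" p2="${2#*/}" p mask
  p="$p1"
  if [ "$p2" -lt "$p" ]; then p="$p2"; fi
  if [ "$p" -eq 0 ]; then
    mask=0
  else
    mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  fi
  [ $(( $(ip_to_int "$n1") & mask )) -eq $(( $(ip_to_int "$n2") & mask )) ]
}
```

With the values above, `cidrs_overlap 10.96.0.0/16 10.48.0.0/16 && echo overlap` prints nothing.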

6.4.2 Initialize the first master with kubeadm

kubeadm init --config=kubeadm-config.yaml --upload-certs --ignore-preflight-errors=all

# Output like the following means the first master node was initialized successfully

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.1.211:8443 --token n94e8q.fk3y6h681hsnh3fz \

--discovery-token-ca-cert-hash sha256:9be1cf8e898285d186a3f6af300e40d0aa23f88f5822e1e333fd38ec896eb97e \

--control-plane --certificate-key bfc13b8fdb02e8dc7f2a92a1a59e7a905f7bc58c60a4945deebf70e9c6855029

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.211:8443 --token n94e8q.fk3y6h681hsnh3fz \

--discovery-token-ca-cert-hash sha256:9be1cf8e898285d186a3f6af300e40d0aa23f88f5822e1e333fd38ec896eb97e

6.4.3 Configure kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

6.4.4、配置flannel网络

# 1、下载官方模板文件

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# 2、部署flannel网络

kubectl create -f kube-flannel.yml

# 3、根据实际情况修改kube-flannel.yml

vim kube-flannel.yml

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.48.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.14.0-rc1

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.14.0-rc1

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

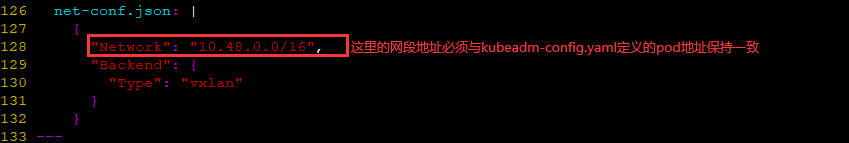

说明:官方模板文件只需要修改"Network": "10.48.0.0/16"这一字段,且这里的网段地址需要与kubeadm-config.yaml配置文件定义的必须保持一致。

As shown below:

6.4.5 Enable kubelet at boot

systemctl enable kubelet

6.5 Joining the Cluster as a Master

Note: run the following on each of the other two master nodes, 192.168.1.213 and 192.168.1.214 in this environment.

# 1. Join the Kubernetes cluster as a control-plane node
kubeadm join 192.168.1.211:8443 --token n94e8q.fk3y6h681hsnh3fz \
  --discovery-token-ca-cert-hash sha256:9be1cf8e898285d186a3f6af300e40d0aa23f88f5822e1e333fd38ec896eb97e \
  --control-plane --certificate-key bfc13b8fdb02e8dc7f2a92a1a59e7a905f7bc58c60a4945deebf70e9c6855029

# 2. Configure kubectl
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# 3. Enable kubelet at boot
systemctl enable kubelet

6.6 Joining the Cluster as a Worker

Note: the following steps are only performed on the worker nodes.

# 1. Join the Kubernetes cluster as a worker node
kubeadm join 192.168.1.211:8443 --token n94e8q.fk3y6h681hsnh3fz \
  --discovery-token-ca-cert-hash sha256:9be1cf8e898285d186a3f6af300e40d0aa23f88f5822e1e333fd38ec896eb97e

# 2. Enable kubelet at boot
systemctl enable kubelet

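If you have lost the token or the CA public-key hash, you can recover them as follows.

List the existing bootstrap tokens:

kubeadm token list

If none is still valid (bootstrap tokens expire after 24 hours by default), create a new one:

kubeadm token create

Compute the SHA-256 hash of the CA certificate's public key:

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

kubeadm join 192.168.1.211:8443 --token <token from above> --discovery-token-ca-cert-hash sha256:<hash from above>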
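The recovered values can be assembled into a join command with a small helper. A sketch; `ca_hash_from_dgst` and `build_join_cmd` are hypothetical names, and the endpoint must be the load balancer address `192.168.1.211:8443`. For worker nodes, `kubeadm token create --print-join-command` prints a ready-made command as well:

```shell
# Hypothetical helpers (not part of any package).
# ca_hash_from_dgst: keep only the hex digest from `openssl dgst -sha256 -hex`
# output, e.g. "(stdin)= 9be1..." -> "9be1..."
ca_hash_from_dgst() {
  sed 's/^.* //'
}

# build_join_cmd ENDPOINT TOKEN HASH [CERT_KEY]
# With CERT_KEY the command becomes a control-plane join.
build_join_cmd() {
  local endpoint="$1" token="$2" hash="$3" cert_key="${4:-}"
  local cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$hash"
  if [ -n "$cert_key" ]; then
    cmd="$cmd --control-plane --certificate-key $cert_key"
  fi
  printf '%s\n' "$cmd"
}
```

For a control-plane join, pass the certificate key printed by `kubeadm init phase upload-certs --upload-certs` as the fourth argument.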

Run kubectl get nodes on a master node. Once every node reports Ready, as below, the Kubernetes cluster with three masters and three workers is deployed.

[root@k8s-master-211 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s-master-212 Ready master 126m v1.17.4
k8s-master-213 Ready master 126m v1.17.4
k8s-master-214 Ready master 126m v1.17.4
k8s-worker-215 Ready <none> 105m v1.17.4
k8s-worker-216 Ready <none> 105m v1.17.4
k8s-worker-217 Ready <none> 105m v1.17.4
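When checking many nodes, it is easier to print only the ones that are not Ready. A sketch that reads `kubectl get nodes --no-headers` output; `not_ready_nodes` is a hypothetical helper:

```shell
# Hypothetical helper (not part of any package).
# not_ready_nodes < `kubectl get nodes --no-headers` output
# Prints the name of every node whose STATUS column is not exactly "Ready"
# (e.g. NotReady or Ready,SchedulingDisabled); returns 1 if any were found.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

For example: `kubectl get nodes --no-headers | not_ready_nodes || echo 'some nodes are not Ready yet'`.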

Next chapter: "Kubernetes Deployment: Deploying a Highly Available Kubernetes 1.17.4 Cluster on CentOS 7.6 (Option 2)"
