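The paper Fully Convolutional Networks for Semantic Segmentation introduces bilinear upsampling as one way to upsample. The final model uses deconvolution instead, though, and the reason given is simply that the authors did not want the upsampling filter to be fixed.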

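Earlier upsampling methods were very simple. To enlarge an image by 2x, for example, each pixel is just copied so that it becomes a block of four. This makes the enlarged image look blocky and blurry.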

Linear interpolation

Before getting to bilinear interpolation, let's first look at linear interpolation.

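Put plainly, it is very simple: two points determine a line, so once x is known on that line, y follows. Likewise, if y is known, x can be derived (as long as the line is not horizontal).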
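The idea can be sketched in a few lines of Python. This is only an illustration, assuming two known points (x0, y0) and (x1, y1) with x0 != x1; the function name `linear_interpolate` is made up for this example.

```python
def linear_interpolate(x, x0, y0, x1, y1):
    """Return y at position x on the line through (x0, y0) and (x1, y1)."""
    if x1 == x0:
        raise ValueError("x0 and x1 must differ to define a non-vertical line")
    # t is the fraction of the way from x0 to x1 (0 at x0, 1 at x1).
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


# Halfway between (2, 10) and (3, 20):
print(linear_interpolate(2.5, 2, 10, 3, 20))  # 15.0
```

Solving for x from a known y is the same computation with the roles of x and y swapped.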

Bilinear interpolation

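With images we are usually dealing with two-dimensional data, or three-dimensional data once the channel is counted, so upsampling relies on bilinear interpolation (over the two spatial dimensions) and, when there is a third dimension to interpolate along, trilinear interpolation.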

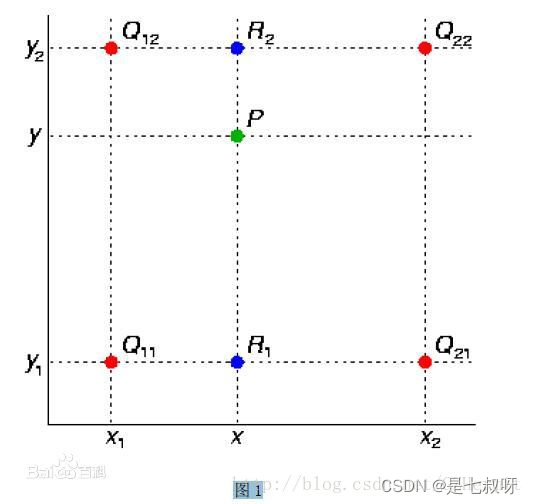

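Bilinear interpolation works on the same principle as linear interpolation, and it is implemented with three linear interpolations: two along one axis and one along the other. See the figure above.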
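A minimal sketch of the "three linear interpolations" idea follows. The names `q11`, `q21`, `q12`, `q22` for the four known corner values are an assumption borrowed from common textbook notation and may not match the labels in the figure; `lerp` and `bilinear_interpolate` are hypothetical helper names.

```python
def lerp(a, b, t):
    """Linear interpolation between values a and b, with t in [0, 1]."""
    return a + t * (b - a)


def bilinear_interpolate(x, y, x1, y1, x2, y2, q11, q21, q12, q22):
    """Interpolate at (x, y) inside the rectangle [x1, x2] x [y1, y2].

    Assumed corner values: q11 = f(x1, y1), q21 = f(x2, y1),
    q12 = f(x1, y2), q22 = f(x2, y2).
    """
    tx = (x - x1) / (x2 - x1)
    ty = (y - y1) / (y2 - y1)
    r1 = lerp(q11, q21, tx)  # 1st interpolation: along x on the edge y = y1
    r2 = lerp(q12, q22, tx)  # 2nd interpolation: along x on the edge y = y2
    return lerp(r1, r2, ty)  # 3rd interpolation: along y between r1 and r2


# Centre of a unit square with corner values 10, 20, 30, 40:
print(bilinear_interpolate(0.5, 0.5, 0, 0, 1, 1, 10, 20, 30, 40))  # 25.0
```

Interpolating along y first and then along x gives the same result, so the order of the axes is a matter of convenience.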

The effect of bilinear interpolation

Reference blog: Bilinear Interpolation Principle + Detailed Code Walkthrough [python]

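Bilinear interpolation is a fairly good image-scaling algorithm. It makes full use of the four real pixels that surround the virtual point in the source image and uses them together to determine one pixel in the target image, so the scaling result is much better than with simple nearest-neighbor interpolation. Because bilinear interpolation on an image only uses the 4 adjacent pixels, which are exactly one pixel apart in each direction, the denominators in the interpolation formulas are all 1.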
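The following pure-Python sketch shows how the formula simplifies when the four neighbors are one pixel apart: the weights are just products of the fractional offsets, with no division. It is an illustration rather than the referenced blog's code. The image is assumed to be a grayscale list of rows, the function names are hypothetical, and the "corners map to corners" coordinate mapping is one possible convention among several.

```python
def bilinear_sample(img, x, y):
    """Sample a grayscale image (list of rows) at fractional position (x, y)."""
    h, w = len(img), len(img[0])
    # Clamp the virtual point so it stays inside the source image.
    x = min(max(x, 0.0), w - 1)
    y = min(max(y, 0.0), h - 1)
    x0, y0 = int(x), int(y)                          # top-left neighbor
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)  # bottom-right neighbor
    dx, dy = x - x0, y - y0                          # fractional offsets in [0, 1)
    # Neighbors are 1 pixel apart, so every denominator equals 1.
    return (img[y0][x0] * (1 - dx) * (1 - dy)
            + img[y0][x1] * dx * (1 - dy)
            + img[y1][x0] * (1 - dx) * dy
            + img[y1][x1] * dx * dy)


def resize_bilinear(img, out_h, out_w):
    """Resize with a mapping that sends output corners to source corners."""
    h, w = len(img), len(img[0])
    sy = (h - 1) / (out_h - 1) if out_h > 1 else 0.0
    sx = (w - 1) / (out_w - 1) if out_w > 1 else 0.0
    return [[bilinear_sample(img, j * sx, i * sy) for j in range(out_w)]
            for i in range(out_h)]


small = [[0, 10],
         [20, 30]]
for row in resize_bilinear(small, 3, 3):
    print(row)
```

Each output pixel is a weighted average of at most four source pixels, which is why edges come out smoother than with pixel replication.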

F.upsample_bilinear

If a PyTorch project uses the F.upsample_bilinear function, the following warning appears (it says the function is deprecated and recommends nn.functional.interpolate instead):

UserWarning: nn.functional.upsample_bilinear is deprecated. Use nn.functional.interpolate instead.

warnings.warn("nn.functional.upsample_bilinear is deprecated. Use nn.functional.interpolate instead.")
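A possible replacement is sketched below. It assumes the behavior described in the PyTorch documentation, namely that the deprecated function corresponds to `F.interpolate` with `mode="bilinear"` and `align_corners=True`; the tiny 2x2 input tensor is made up purely for illustration.

```python
import torch
import torch.nn.functional as F

# A made-up 1x1x2x2 input in (N, C, H, W) layout with values 0, 1, 2, 3.
x = torch.arange(4, dtype=torch.float32).reshape(1, 1, 2, 2)

# Instead of: y = F.upsample_bilinear(x, scale_factor=2)
y = F.interpolate(x, scale_factor=2, mode="bilinear", align_corners=True)

print(y.shape)  # torch.Size([1, 1, 4, 4])
```

Note that `F.interpolate` defaults to `align_corners=False` for bilinear mode, so leaving the argument out can give slightly different values from the old function.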
