【论文翻译】Temporal Fusion Transformers for Interpretable Multi-horizon Time Series Forecasting

文章目录

- 【论文翻译】Temporal Fusion Transformers for Interpretable Multi-horizon Time Series Forecasting

- Abstract

- 1. Introduction

- 2. Related work

- 3. Multi-horizon forecasting

- 4. Model architecture

- 5. Loss functions

- 6. Performance evaluation

- 7. Interpretability

- 8. Conclusions

Abstract

Multi-horizon forecasting often contains a complex mix of inputs – including static (i.e. time-invariant) covariates, known future inputs, and other exogenous time series that are only observed in the past – without any prior information on how they interact with the target. Several deep learning methods have been proposed, but they are typically ‘black-box’ models that do not shed light on how they use the full range of inputs present in practical scenarios. In this paper, we introduce the Temporal Fusion Transformer (TFT) – a novel attention-based architecture that combines high-performance multi-horizon forecasting with interpretable insights into temporal dynamics. To learn temporal relationships at different scales, TFT uses recurrent layers for local processing and interpretable self-attention layers for long-term dependencies.

TFT utilizes specialized components to select relevant features and a series of gating layers to suppress unnecessary components, enabling high performance in a wide range of scenarios. On a variety of real-world datasets, we demonstrate significant performance improvements over existing benchmarks, and highlight three practical interpretability use cases of TFT.

多水平预测(多步预测,未来可能不止预测一个点,可能预测多个点)通常包含复杂的输入组合——包括静态(即时不变)协变量、已知的未来输入和其他仅在过去观察到的外生时间序列(数据源比较复杂)——没有任何关于它们如何与目标相互作用的先验信息。已经提出了几种深度学习方法,但它们都是典型的“黑盒”模型,无法说明它们如何在实际场景中使用各种输入。没法看到不同数据源具体怎么作用到我这个预测的在本文中,我们介绍了时间融合转换器(TFT)——一种新颖的基于注意力的架构,它将高性能的多水平预测与对时间动态的可解释见解相结合(在多步预测上表现好且支持可解释性)。为了学习不同尺度上的时间关系,TFT使用循环层进行局部处理,使用可解释的自我注意层进行长期依赖。

TFT利用专门的组件来选择相关的特性,并使用一系列门控层来抑制不必要的组件,从而在广泛的场景中实现高性能。在各种真实世界的数据集上,我们展示了相对于现有基准的显著性能改进,并强调了TFT的三个实际可解释性用例。

1. Introduction

Multi-horizon forecasting, i.e. the prediction of variables-of-interest at multiple future time steps, is a crucial problem within time series machine learning. In contrast to one-step-ahead predictions, multi-horizon forecasts provide users with access to estimates across the entire path, allowing them to optimize their actions at multiple steps in the future (e.g. retailers optimizing the inventory for the entire upcoming season, or clinicians optimizing a treatment plan for a patient). Multi-horizon forecasting has many impactful real-world applications in retail (Böse et al, 2017; Courty & Li, 1999), healthcare (Lim, Alaa, & van der Schaar, 2018; Zhang & Nawata, 2018) and economics (Capistran, Constandse, & RamosFrancia, 2010) – performance improvements to existing methods in such applications are highly valuable.

多步预测,即在未来多个时间步对感兴趣的变量进行预测,是时间序列机器学习中的一个关键问题。与一步前预测相比,多阶段预测为用户提供了整个路径的估计,允许他们在未来的多个步骤中优化他们的行动(例如,零售商为即将到来的整个季节优化库存,或临床医生为患者优化治疗计划)。多步预测在零售业中有许多有影响力的现实应用(Böse等人,2017;Courty & Li, 1999),医疗保健(Lim, Alaa, & van der Schaar, 2018;Zhang & Nawata, 2018)和经济学(Capistran, Constandse, & RamosFrancia, 2010) -在此类应用中对现有方法的性能改进非常有价值。

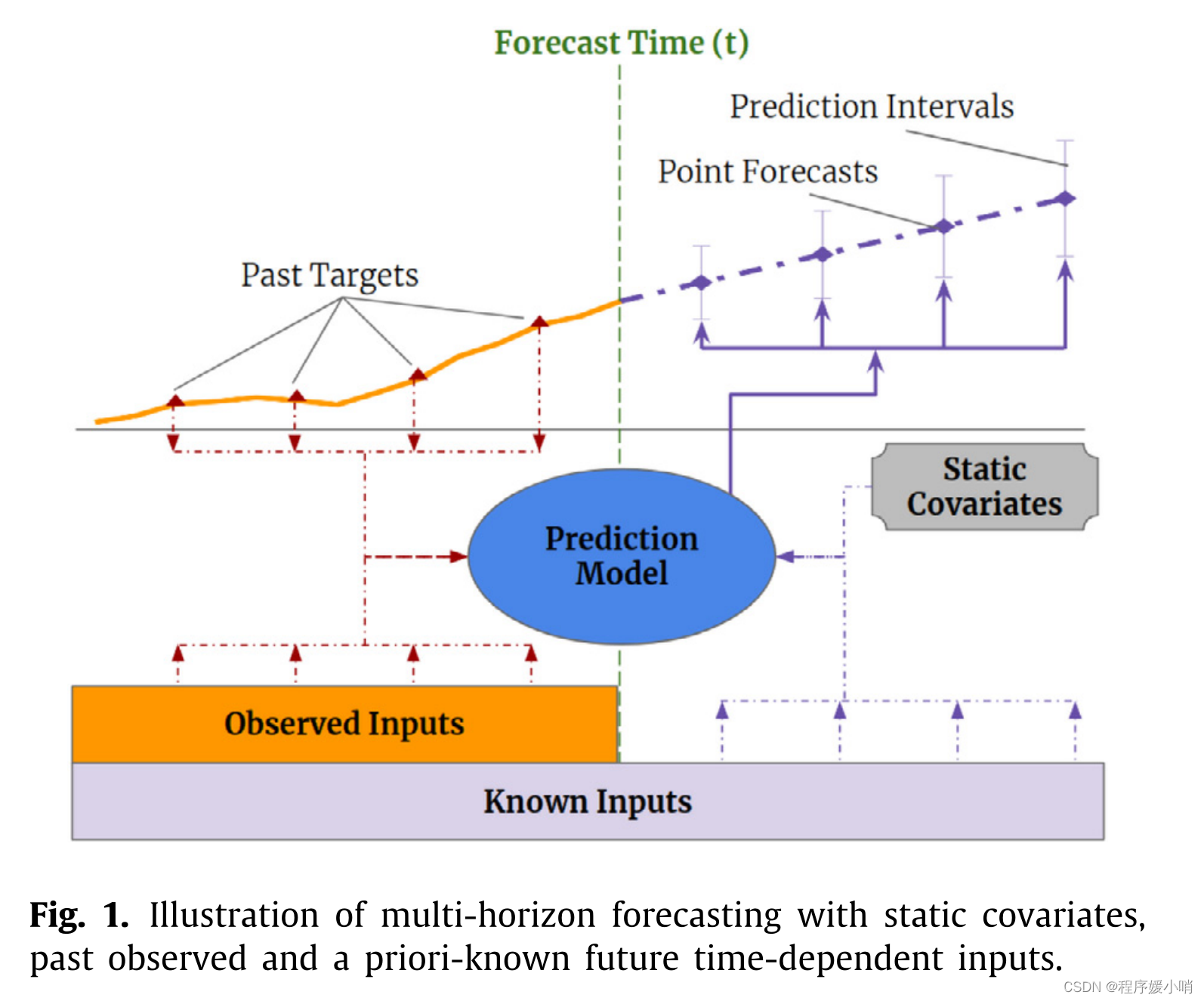

Practical multi-horizon forecasting applications commonly have access to a variety of data sources, as shown in Fig. 1, including known information about the future (e.g. upcoming holiday dates), other exogenous time series (e.g. historical customer foot traffic), and static metadata (e.g. location of the store) – without any prior knowledge on how they interact. This heterogeneity of data sources together with little information about their interactions makes multi-horizon time series forecasting particularly challenging.

实际的多视界预测应用程序通常可以访问各种数据源,如图1所示,包括关于未来的已知信息(例如即将到来的假期日期)、其他外生时间序列(例如历史客户客流量)和静态元数据(例如商店的位置),而不需要任何关于它们如何交互的先验知识。数据源的异质性以及关于它们相互作用的很少信息使得多视域时间序列预测特别具有挑战性。

Deep neural networks (DNNs) have increasingly been used in multi-horizon forecasting, demonstrating strong performance improvements over traditional time series models (Alaa & van der Schaar, 2019; Makridakis, Spiliotis, & Assimakopoulos, 2020; Rangapuram et al, 2018). While many architectures have focused on variants of recurrent neural network (RNN) architectures (Rangapuram et al, 2018; Salinas, Flunkert, Gasthaus, & Januschowski, 2019; Wen et al, 2017), recent improvements have also used attention-based methods to enhance the selection of relevant time steps in the past (Fan et al, 2019) – including transformer-based models (Li et al, 2019). However, these often fail to consider the different types of inputs commonly present in multi-horizon forecasting, and either assume that all exogenous inputs are known into the future (Li et al, 2019; Rangapuram et al, 2018; Salinas et al, 2019) – a common problem with autoregressive models – or neglect important static covariates (Wen et al, 2017) – which are simply concatenated with other time-dependent features at each step. Many recent improvements in time series models have resulted from the alignment of architectures with unique data characteristics (Koutník, Greff, Gomez, & Schmidhuber, 2014; Neil et al, 2016). We argue and demonstrate that similar performance gains can also be reaped by designing networks with suitable inductive biases for multi-horizon forecasting.

In addition to not considering the heterogeneity of common multi-horizon forecasting inputs, most current architectures are ‘black-box’ models where forecasts are controlled by complex nonlinear interactions between many parameters. This makes it difficult to explain how models arrive at their predictions, and in turn, makes it challenging for users to trust a model’s outputs and model builders to debug it. Unfortunately, commonly used explainability methods for DNNs are not well suited for applying to time series. In their conventional form, post hoc methods (e.g. LIME (Ribeiro et al, 2016) and SHAP (Lundberg & Lee, 2017)) do not consider the time ordering of input features. For example, for LIME, surrogate models are independently constructed for each data point, and for SHAP, features are considered independently for neighboring time steps. Such post hoc approaches would lead to poor explanation quality as dependencies between timesteps are typically significant in time series. On the other hand, some attention-based architectures are proposed with inherent interpretability for sequential data, primarily language or speech – such as the Transformer architecture (Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, & Polosukhin, 2017). The fundamental caveat to apply them is that multi-horizon forecasting includes many different types of input features, as opposed to language or speech. In their conventional form, these architectures can provide insights into relevant time steps for multi-horizon forecasting, but they cannot distinguish the importance of different features at a given timestep.

深度神经网络(DNNs)已越来越多地用于多水平预测,与传统时间序列模型相比,表现出强大的性能改进(Alaa & van der Schaar, 2019;Makridakis, Spiliotis, & Assimakopoulos, 2020;Rangapuram等人,2018)。虽然许多架构都专注于循环神经网络(RNN)架构的变体(Rangapuram等人,2018;Salinas, Flunkert, Gasthaus, & Januschowski, 2019;Wen et al, 2017),最近的改进也使用了基于注意力的方法来增强过去相关时间步长的选择(Fan et al, 2019) -包括基于Transformer的模型(Li et al, 2019)。

历史研究的瓶颈

①没有考虑常见的多水平预测输入的异质性

然而,这些通常没有考虑多水平预测中常见的不同类型的输入,或者假设未来所有外生输入都是已知的(Li等人,2019;Rangapuram等人,2018;Salinas等人,2019)——自回归模型的一个常见问题——或忽略了重要的静态协变量(Wen等人,2017)——这些协变量只是在每一步中与其他随时间变化的特征连接起来。

时间序列模型的许多最新改进都来自于具有独特数据特征的架构的对齐(Koutník, Greff, Gomez, & Schmidhuber, 2014;尼尔等人,2016)。我们认为并证明,通过设计具有适合多水平预测的归纳偏差的网络,也可以获得类似的性能收益。

②缺乏可解释性

除了没有考虑常见的多水平预测输入的异质性外,目前大多数架构都是“黑盒”模型,其中预测由许多参数之间复杂的非线性相互作用控制。这使得解释模型如何得到预测变得困难,反过来,也使得用户难以信任模型的输出和模型构建者对其进行调试。不幸的是,常用的dnn解释性方法并不适合应用于时间序列。在传统形式中,事后方法(例如LIME(Ribeiro et al, 2016)和SHAP (Lundberg & Lee, 2017))不考虑输入特征的时间顺序。

例如,对于LIME,代理模型是为每个数据点独立构建的,而对于SHAP,特征是独立考虑相邻时间步长的。

这种事后的方法将导致较差的解释质量,因为时间步骤之间的依赖性在时间序列中通常是显著的。

另一方面,一些基于注意力的架构被提出,对顺序数据具有固有的可解释性,主要是语言或语音——例如Transformer架构(Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, & Polosukhin, 2017)。应用它们的基本警告是,多水平预测包括许多不同类型的输入特征,而不是语言或语音。在它们的传统形式中,这些架构可以为多水平预测提供相关时间步骤的见解,但它们不能在给定的时间步骤中区分不同特征的重要性。只回答了那些时间点是重要的,很难回答这个时间点上哪个特征重要。

Overall, in addition to the need for new methods to tackle the heterogeneity of data in multi-horizon forecasting for high performance, new methods are also needed to render these forecasts interpretable, given the needs of the use cases.

总的来说,除了需要新的方法来处理高性能多层面预测中的数据异质性外,还需要新的方法来使这些预测具有可解释性,以满足用例的需求。

DNN没有做的特别好的地方在于:

- 没有处理好多数据源的利用

在实际业务场景中有很多数据源:不同的时序任务可以按照变量来分(单变量、多变量)

单变量(uni-var):自己预测目标本身的一个历史数据

多变量预测(muti-var):数据源就比较丰富(预测数据本身类别[商品类别],过去观测变量,未来已知的变量[节假日])

在之前的模型没有很好的针对这三种不同数据做一些模型架构设计,大部分的模型只是单纯的将数据做embedding,然后合并在一起直接输入模型进行学习 - 解释模型的预测效果

对于时序,需要告诉业务方,需要告知用的特征数据里面对产生决策映像比较大的数据,所以可解释性对于业务驱动来说很重要

In this paper, we propose the Temporal Fusion Transformer (TFT) – an attention-based DNN architecture for multi-horizon forecasting that achieves high performance while enabling new forms of interpretability. To obtain significant performance improvements over state-of-theart benchmarks, we introduce multiple novel ideas to align the architecture with the full range of potential inputs and temporal relationships common to multi-horizon forecasting – specifically incorporating (1) static covariate encoders which encode context vectors for use in other parts of the network, (2) gating mechanisms throughout and sample-dependent variable selection to minimize the contributions of irrelevant inputs, (3) a sequence-tosequence layer to locally process known and observed inputs, and (4) a temporal self-attention decoder to learn any long-term dependencies present within the dataset.

The use of these specialized components also facilitates interpretability; in particular, we show that TFT enables three valuable interpretability use cases: helping users identify (i) globally-important variables for the prediction problem, (ii) persistent temporal patterns, and (iii) significant events. On a variety of real-world datasets, we demonstrate how TFT can be practically applied, as well as the insights and benefits it provides.

论文的主要贡献

在本文中,我们提出了时间融合转换器(TFT)——一种基于注意力的DNN架构,用于多水平预测,在实现新形式的可解释性的同时实现高性能。为了在最先进的基准测试中获得显著的性能改进,我们引入了多种新颖的想法,以使架构与多水平预测常见的所有潜在输入和时间关系保持一致——特别是结合

(1)静态协变量编码器,对上下文向量进行编码,以用于网络的其他部分,相当于把静态信息引入模型引导模型去学习

(2)整个门控机制和样本因变量选择,以最大限度地减少不相关输入的贡献,特征选择部分创新

(3)序列对序列层 ,用于局部处理已知和观察到的输入;seq2seq编码,可以局部处理实变的数据

(4)时间自注意解码器,用于学习数据集中存在的任何长期依赖关系。可以学习的时间更长

这些专用组件的使用也促进了可解释性;特别地,我们展示了TFT支持三个有价值的可解释性用例:帮助用户识别

(i)预测问题的全局重要变量,

(ii)持久的时间模式,

(iii)重要事件。反馈重要时间点

在各种现实世界的数据集上,我们演示了TFT如何实际应用,以及它提供的见解和好处。

2. Related work

DNNs for Multi-horizon Forecasting: Similarly to traditional multi-horizon forecasting methods (Marcellino, Stock, & Watson, 2006; Taieb, Sorjamaa, & Bontempi, 2010), recent deep learning methods can be categorized into iterated approaches using autoregressive models (Li et al, 2019; Rangapuram et al, 2018; Salinas et al, 2019) or direct methods based on sequence-to-sequence models (Fan et al, 2019; Wen et al, 2017).

Iterated approaches utilize one-step-ahead prediction models, with multi-step predictions obtained by recursively feeding predictions into future inputs. Approaches with Long Short-term Memory (LSTM) (Hochreiter & Schmidhuber, 1997) networks have been considered, such as Deep AR (Salinas et al, 2019) which uses stacked LSTM layers to generate parameters of one-step-ahead Gaussian predictive distributions.Deep State-Space Models (DSSM) (Rangapuram et al, 2018) adopt a similarapproach, utilizing LSTMs to generate parameters of a predefined linear state-space model with predictive distributions produced via Kalman filtering – with extensions for multivariate time series data in Wang et al(2019). More recently, Transformer-based architectures have been explored in Li et al (2019), which proposes the use of convolutional layers for local processing and a sparse attention mechanism to increase the size of the receptive field during forecasting. Despite their simplicity, iterative methods rely on the assumption that the values of all variables excluding the target are known at forecast time – such that only the target needs to be recursively fed into future inputs. However, in many practical scenarios, numerous useful time-varying inputs exist, with many unknown in advance. Their straightforward use is hence limited for iterative approaches. TFT, on the other hand, explicitly accounts for the diversity of inputs – naturally handling static covariates and (past-observed and future-known) time-varying inputs.

In contrast, direct methods are trained to explicitly generate forecasts for multiple predefined horizons at each time step. Their architectures typically rely on sequence-to-sequence models, e.g. LSTM encoders to summarize past inputs, and a variety of methods to generate future predictions. The Multi-horizon Quantile Recurrent Forecaster (MQRNN) (Wen et al, 2017) uses LSTM or convolutional encoders to generate context vectors which are fed into multi-layer perceptrons (MLPs) for each horizon.

In Fan et al (2019) a multi-modal attention mechanism is used with LSTM encoders to construct context vectors for a bi-directional LSTM decoder. Despite performing better than LSTM-based iterative methods, interpretability remains challenging for such standard direct methods. In contrast, we show that by interpreting attention patterns, TFT can provide insightful explanations about temporal dynamics, and do so while maintaining state-of-the-art performance on a variety of datasets.

用于多步预测的DNNs: 类似于传统的多层面预测方法(Marcellino, Stock, & Watson, 2006;Taieb, Sorjamaa, & Bontempi, 2010),最近的深度学习方法可以被归类为使用自回归模型的迭代方法(Li等人,2019;Rangapuram等人,2018;Salinas等人,2019)或基于序列到序列模型的直接方法(Fan等人,2019;Wen等人,2017)。

迭代方法利用一步前的预测模型,通过递归地将预测输入到未来的输入中获得多步预测。已经考虑了长短期记忆(LSTM) (Hochreiter & Schmidhuber, 1997)网络的方法,例如Deep AR (Salinas等人,2019),它使用堆叠的LSTM层来生成领先一步的高斯预测分布的参数。深状态空间模型(DSSM) (Rangapuram等人,2018)采用类似的方法,利用lstm生成预定义线性状态空间模型的参数,该模型具有通过卡尔曼滤波产生的预测分布- Wang等人(2019)中对多元时间序列数据的扩展。最近,Li等人(2019)探索了基于transformer的架构,提出了使用卷积层进行局部处理和稀疏注意机制来增加预测期间接受域的大小。尽管迭代方法很简单,但它依赖于这样一个假设:在预测时间,除目标外的所有变量的值都是已知的——这样,只有目标需要递归地输入到未来的输入中。然而,在许多实际场景中,存在大量有用的时变输入,其中许多是预先未知的。因此,它们的直接使用对于迭代方法是有限的。另一方面,TFT明确地解释了输入的多样性——自然地处理静态协变量和(过去观察到的和未来已知的)时变输入。

相比之下,直接方法被训练为在每个时间步骤显式地生成多个预定义范围的预测。它们的体系结构通常依赖于序列到序列的模型,例如LSTM编码器来总结过去的输入,以及各种方法来生成未来的预测。多视界分位数循环预测器(MQRNN) (Wen等人,2017)使用LSTM或卷积编码器来生成上下文向量,这些向量被馈送到每个视界的多层感知器(mlp)。

Fan等人(2019)在LSTM编码器中使用了多模态注意机制来构造双向LSTM解码器的上下文向量。尽管表现比基于lstm的迭代方法更好,但这种标准直接方法的可解释性仍然具有挑战性。相比之下,我们表明,通过解释注意力模式,TFT可以提供关于时间动态的深刻解释,并在保持各种数据集上的最先进性能的同时做到这一点。

Time Series Interpretability with Attention: Attention mechanisms are used in translation (Vaswani et al, 2017), image classification (Wang, Jiang, Qian, Yang, Li, Zhang, Wang, & Tang, 2017) or tabular learning (Arik & Pfister, 2019) to identify salient portions of input for each instance using the magnitude of attention weights.

Recently, they have been adapted for time series with interpretability motivations (Alaa & van der Schaar, 2019; Choi et al, 2016; Li et al, 2019), using LSTM-based (Song et al, 2018) and transformer-based (Li et al, 2019) architectures. However, this was done without considering the importance of static covariates (as the above methods blend variables at each input). TFT alleviates this by using separate encoder–decoder attention for static features at each time step on top of the self-attention to determine the contribution time-varying inputs.

时间序列注意可解释性: 注意机制用于翻译(Vaswani等人,2017)、图像分类(Wang, Jiang, Qian, Yang, Li, Zhang, Wang, & Tang, 2017)或表格学习(Arik & Pfister, 2019),以使用注意权重的量级识别每个实例的输入显著部分。

最近,它们被改编为具有可解释性动机的时间序列(Alaa & van der Schaar, 2019;Choi等人,2016;Li等人,2019),使用基于lstm (Song等人,2018)和基于变压器(Li等人,2019)的架构。然而,这样做没有考虑静态协变量的重要性(因为上述方法在每个输入中混合变量)。TFT通过在自注意的基础上在每个时间步对静态特征使用单独的编码器-解码器注意来确定贡献时变输入来缓解这一问题。

Instance-wise Variable Importance with DNNs: Instance (i.e. sample)-wise variable importance can be obtained with post-hoc explanation methods (Lundberg & Lee, 2017; Ribeiro et al, 2016; Yoon, Arik, & Pfister, 2019) and inherently interpretable models (Choi et al, 2016; Guo, Lin, & Antulov-Fantulin, 2019). Post-hoc explanation methods, e.g. LIME (Ribeiro et al, 2016), SHAP (Lundberg & Lee, 2017) and RL-LIM (Yoon et al, 2019), are applied on pre-trained black-box models and often based on distilling into a surrogate interpretable model, or decomposing into feature attributions. They are not designed to take into account the time ordering of inputs, limiting their use for complex time series data. Inherently interpretable modeling approaches build components for feature selection directly into the architecture. For time series forecasting specifically, they are based on explicitly quantifying time-dependent variable contributions. For example, Interpretable Multi-Variable LSTMs (Guo et al, 2019) partitions the hidden state such that each variable contributes uniquely to its own memory segment, and weights memory segments to determine variable contributions. Methods combining temporal importance and variable selection have also been considered in Choi et al (2016), which computes a single contribution coefficient based on attention weights from each. However, in addition to the shortcoming of modeling only one-stepahead forecasts, existing methods also focus on instancespecific (i.e. sample-specific) interpretations of attention weights – without providing insights into global temporal dynamics. In contrast, the use cases in Section 7 demonstrate that TFT is able to analyze global temporal relationships and allows users to interpret global behaviors of the model on the whole dataset – specifically in the identification of any persistent patterns (e.g. seasonality or lag effects) and regimes present.

DNNs的实例变量重要性: 实例(即样本)变量重要性可以通过事后解释方法获得(Lundberg & Lee, 2017;Ribeiro等人,2016;Yoon, Arik, & Pfister, 2019)和固有可解释模型(Choi等人,2016;Guo, Lin, & Antulov-Fantulin, 2019)。事后解释方法,如LIME (Ribeiro等人,2016),SHAP (Lundberg & Lee, 2017)和RL-LIM (Yoon等人,2019),应用于预训练的黑盒模型,通常基于提取到替代可解释模型,或分解为特征属性。它们的设计没有考虑到输入的时间顺序,限制了它们对复杂时间序列数据的使用。固有的可解释的建模方法将组件直接构建到体系结构中进行特性选择。特别是对于时间序列预测,它们是基于显式量化的时间相关变量贡献。例如,可解释的多变量LSTMs (Guo等人,2019)划分隐藏状态,使每个变量对其自己的内存段有唯一的贡献,并对内存段进行加权以确定变量贡献。Choi等人(2016)也考虑了结合时间重要性和变量选择的方法,该方法基于每个变量的注意力权重计算单个贡献系数。然而,除了建模只能进行一步预测的缺点之外,现有的方法还专注于对注意力权重的实例特定(即样本特定)解释,而没有提供对全球时间动态的洞察。相比之下,第7节中的用例表明TFT能够分析全局时间关系,并允许用户在整个数据集上解释模型的全局行为——特别是在识别任何持久模式(例如季节性或滞后效应)和存在的制度方面。

3. Multi-horizon forecasting

Let there be I unique entities in a given time series dataset – such as different stores in retail or patients in healthcare. Each entity i is associated with a set of static covariates si ∈ Rms, as well as inputs χi,t ∈ Rmχ and scalar targets yi,t ∈ R at each time-step t ∈ [0, Ti].

Time-dependent input features are subdivided into two categories χi,t = [zT i,t, xT i,t ]T – observed inputs zi,t ∈ R(mz ) which can only be measured at each step and are unknown beforehand, and known inputs xi,t ∈ Rmx which can be predetermined (e.g. the day-of-week at time t).

In many scenarios, the provision for prediction intervals can be useful for optimizing decisions and risk management by yielding an indication of likely best and worst-case values that the target can take. As such, we adopt quantile regression to our multi-horizon forecasting setting (e.g. outputting the 10th, 50th and 90th percentiles at each time step). Each quantile forecast takes the form: ˆyi(q, t, τ ) = fq (τ , yi,t−k:t, zi,t−k:t, xi,t−k:t+τ , si ) , (1) where ˆyi,t+τ (q, t, τ ) is the predicted qth sample quantile of the τ -step-ahead forecast at time t, and fq(.) is a prediction model. In line with other direct methods, we simultaneously output forecasts for τmax time steps – i.e. τ ∈ {1, . . . , τmax}. We incorporate all past information within a finite look-back window k, using target and known inputs only up till and including the forecast start time t (i.e. yi,t−k:t = {yi,t−k, . . . , yi,t }) and known inputs across the entire range (i.e. xi,t−k:t+τ = {xi,t−k, . . ., xi,t, . . . , xi,t+τ }).2

假设在给定的时间序列数据集中有 I I I个唯一的实体——例如零售中的不同商店或医疗保健中的患者。每个实体 i i i在每个时间步 t ∈ [ 0 , T i ] t∈[0,T_i] t∈[0,Ti]与一组静态协变量 s i ∈ R m s s_i\in \mathbb{R}^{m_s} si∈Rms,以及输入 χ i , t ∈ R m χ \chi _{i,t}\in \mathbb{R}^{m_{\chi}} χi,t∈Rmχ和标量目标 y i , t ∈ R y_{i,t}\in \mathbb{R} yi,t∈R相关联。

与时间相关的输入特征被细分为两类: χ i , t ∈ [ z i , t ⊤ , x i , t ⊤ ] ⊤ \chi _{i,t}\in \left[ z_{i,t}^{\top},x_{i,t}^{\top} \right]^{\top} χi,t∈[zi,t⊤,xi,t⊤]⊤——观测到的输入 z i , t ∈ R ( m z ) z_{i,t}\in \mathbb{R}^{(m_{z})} zi,t∈R(mz),它只能在每一步测量,并且是事先未知的;已知的输入 x i , t ∈ R m x x_{i,t}\in \mathbb{R}^{m_{x}} xi,t∈Rmx,它可以预先确定(例如时间 t t t的星期几)。

在许多情况下,提供预测间隔对于优化决策和风险管理是有用的,因为它提供了目标可能获得的最佳值和最差值的指示。因此,我们在多水平预测设置中采用分位数回归(例如,在每个时间步骤中输出第10、50和90个百分位数)。每个分位数预测采用如下形式:

y

^

i

(

q

,

t

,

τ

)

=

f

q

(

τ

,

y

i

,

t

−

k

:

t

,

z

i

,

t

−

k

:

t

,

x

i

,

t

−

k

:

t

+

τ

,

s

i

)

,

(1)

\hat{y}_i\left( q,t,\tau \right) =f_q\left( \tau ,y_{i,t-k:t},z_{i,t-k:t},x_{i,t-k:t+\tau},s_i \right), \tag{1}

y^i(q,t,τ)=fq(τ,yi,t−k:t,zi,t−k:t,xi,t−k:t+τ,si),(1)

y

^

i

(

q

,

t

,

τ

)

\hat{y}_i\left( q,t,\tau \right)

y^i(q,t,τ) target

q

q

q quantile分位数

t现在所在的预测时间点

f

q

(

.

)

f_q\left(.\right)

fq(.)model

τ

τ

τ当前时间点预测未来时间点需要的步数

y

i

,

t

−

k

:

t

y_{i,t-k:t}

yi,t−k:thistory target

z

i

,

t

−

k

:

t

z_{i,t-k:t}

zi,t−k:tpast inputs历史的可观测数据

x

i

,

t

−

k

:

t

+

τ

x_{i,t-k:t+\tau}

xi,t−k:t+τfuture inputs未来已知的

k

k

k过去参考的窗口大小t之前的k个样本

s

i

s_i

si静态协变量

其中

y

^

i

(

q

,

t

,

τ

)

\hat{y}_i\left( q,t,\tau \right)

y^i(q,t,τ) target是在

t

t

t时刻

τ

τ

τ步进预测的第

q

q

qth quantile个样本分位数,

f

q

(

.

)

f_q\left(.\right)

fq(.)model是一个预测模型。与其他直接方法一致,我们同时输出对

τ

m

a

x

τ_{max}

τmax时间步长的预测,即

τ

∈

{

1

,

…

,

τ

m

a

x

}

τ∈\{1,…,τ_{max}\}

τ∈{1,…,τmax}。我们将所有过去的信息合并到一个有限的回溯窗口

k

k

k中,只使用目标和已知输入直到并包括预测开始时间

t

t

t(即

y

i

,

t

−

k

:

t

=

{

y

i

,

t

−

k

,

…

,

y

i

,

t

}

)

y_{i,t-k:t} = \{y_{i,t-k},…,y_{i,t} \})

yi,t−k:t={yi,t−k,…,yi,t})和整个范围内的已知输入(即

x

i

,

t

−

k

:

t

=

{

x

i

,

t

−

k

,

…

,

x

i

,

t

,

…

,

x

i

,

t

+

τ

}

)

x_{i,t-k:t} = \{x_{i,t-k},…,x_{i,t},…,x_{i,t+τ} \})

xi,t−k:t={xi,t−k,…,xi,t,…,xi,t+τ})

问题:怎么预测分位数

真实的标签是一条时间序列,在这个时间点下,我其实不知道这个分位数,在这个分位数没有label的情况下怎么预测这个分位数呢?联想DeepAR(待精读),这篇里面做这个事情预设(

μ

μ

μ,

σ

σ

σ)真实的情况下

σ

=

0

σ=0

σ=0,

μ

=

自己的真实值

μ=自己的真实值

μ=自己的真实值,这就是DeepAR的label,DeepAR的predict就是预测

μ

μ

μ,

σ

σ

σ,然后通过交叉熵的方式预测出来,得到

t

0

t_0

t0下的

μ

μ

μ,

σ

σ

σ在做个高斯采样,统计采样的分位数,就能得到时间点上分位数预测值是多少。TFT不是这样做的。

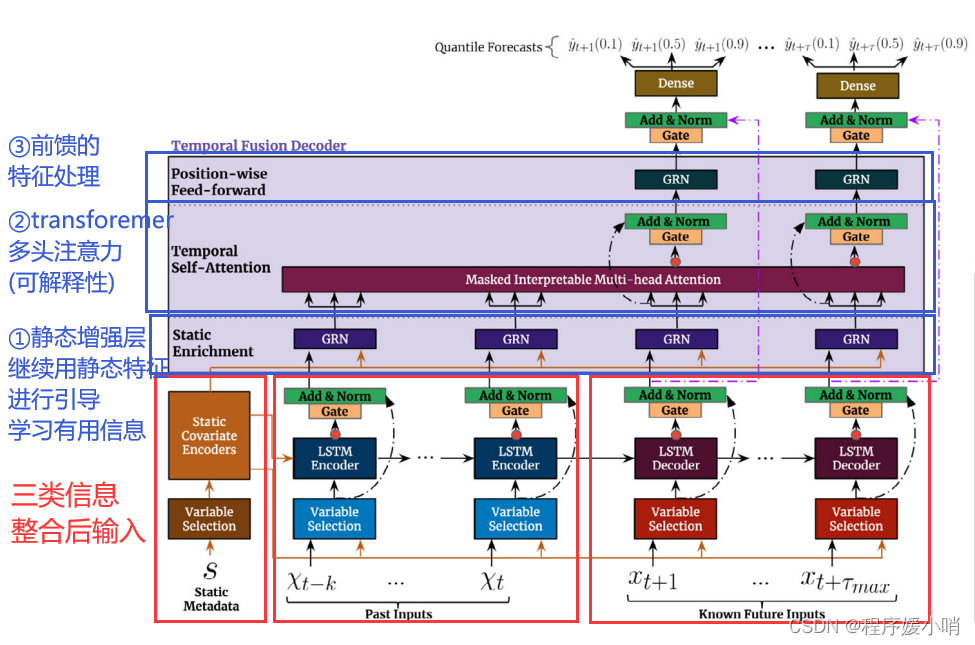

4. Model architecture

We design TFT to use canonical components to efficiently build feature representations for each input type (i.e. static, known, observed inputs) for high forecasting performance on a wide range of problems. The major constituents of TFT are:

- Gating mechanisms to skip over any unused components of the architecture, providing adaptive depth and network complexity to accommodate a wide range of datasets and scenarios.

- Variable selection networks to select relevant input variables at each time step.

- Static covariate encoders to integrate static features into the network, through the encoding of context vectors to condition temporal dynamics.

- Temporal processing to learn both long- and shortterm temporal relationships from both observed and known time-varying inputs. A sequence-to-sequence layer is employed for local processing, whereas longterm dependencies are captured using a novel interpretable multi-head attention block.

- Prediction intervals via quantile forecasts to determine the range of likely target values at each prediction horizon.

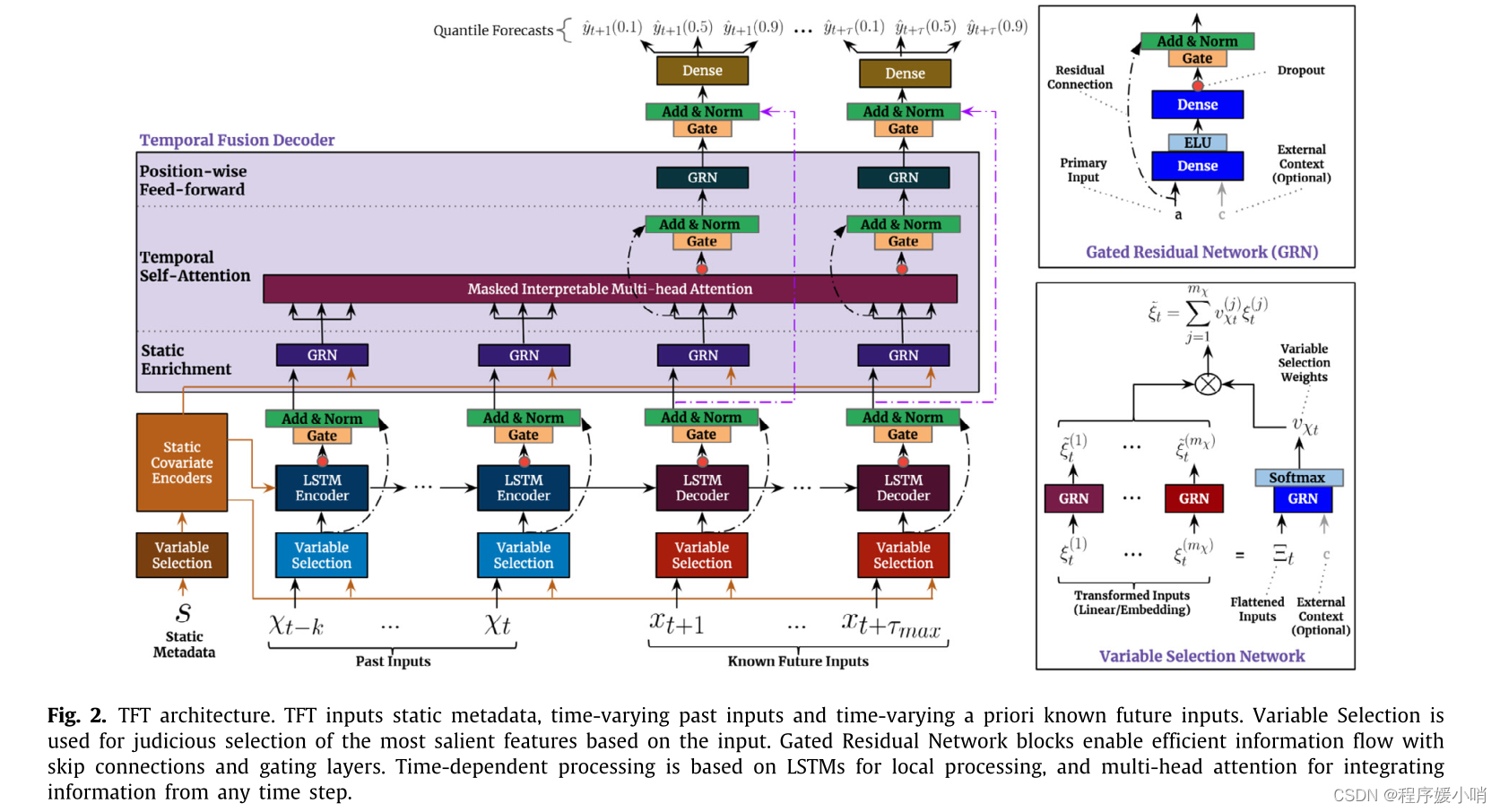

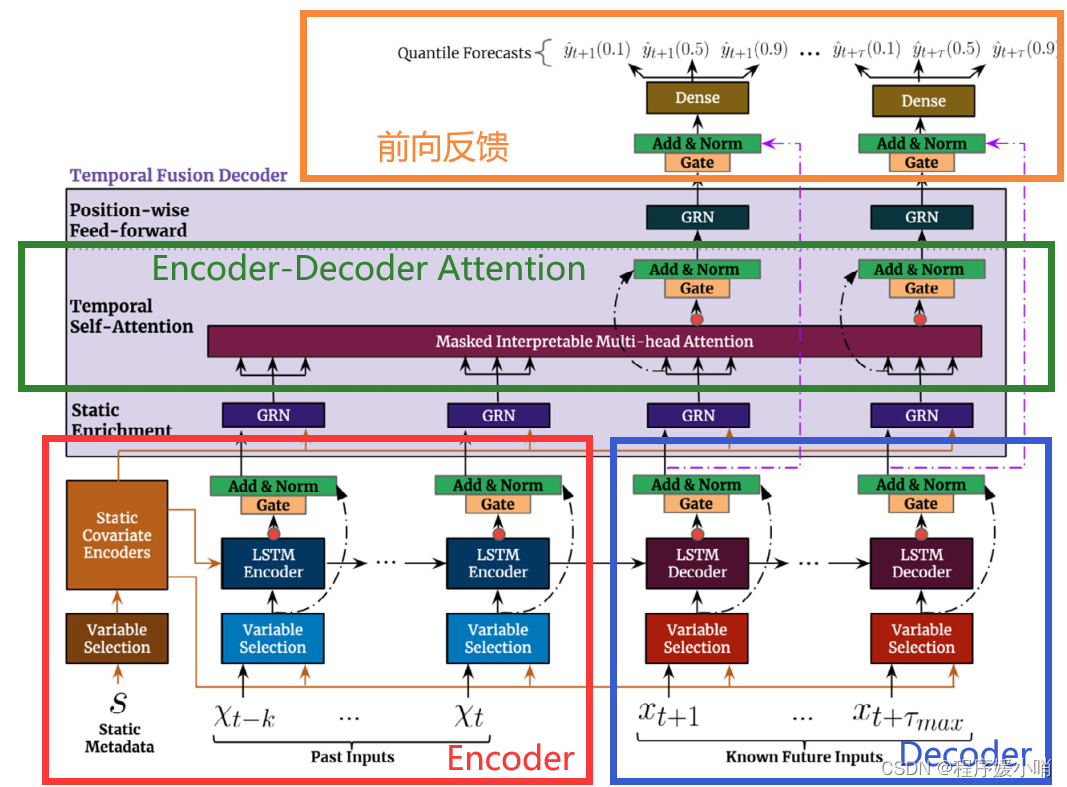

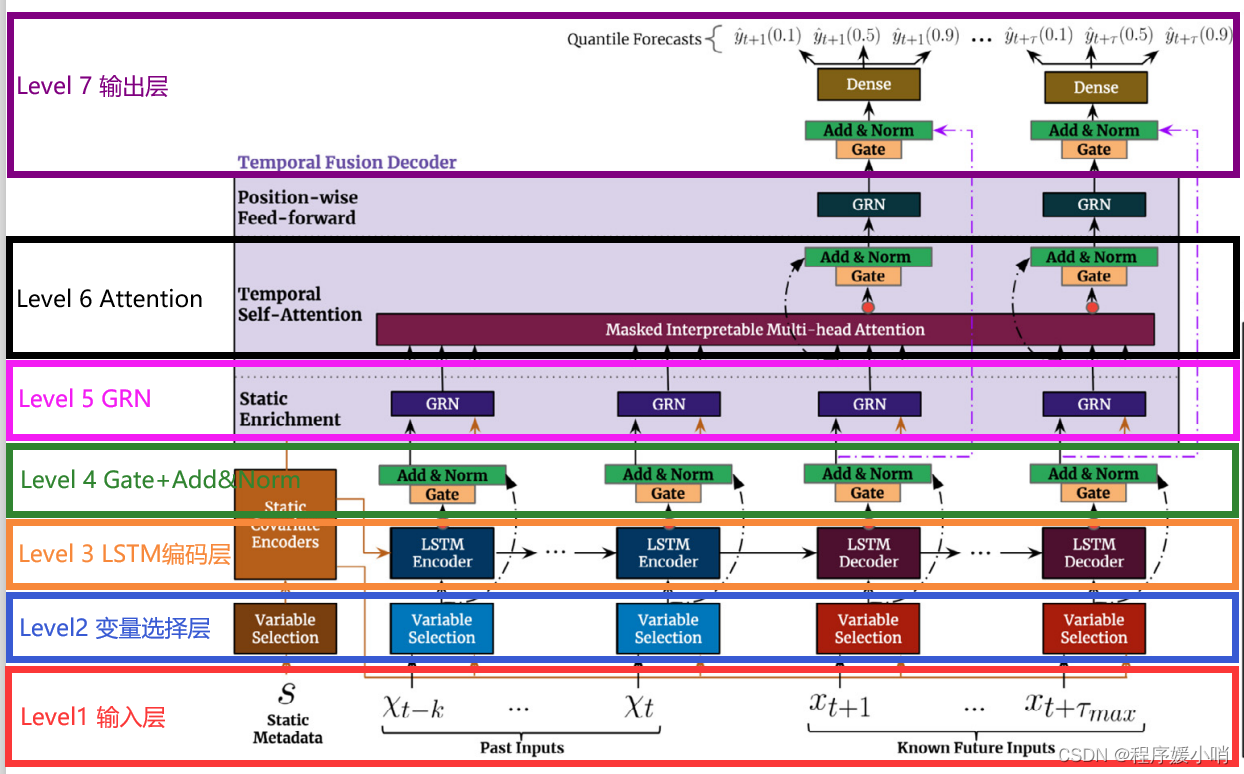

Fig. 2 shows the high-level architecture of Temporal Fusion Transformer (TFT), with individual components described in detail in the subsequent sections.

我们设计TFT使用规范组件来有效地为每种输入类型(即静态、已知、观察到的输入)构建特征表示,以在广泛的问题上实现高预测性能。TFT的主要组成部分是:

- 门控机制可以跳过架构中任何未使用的组件,提供自适应深度和网络复杂性,以适应广泛的数据集和场景。

- 变量选择网络在每个时间步中选择相关的输入变量。

- 静态协变量编码器将静态特征集成到网络中,通过对上下文向量的编码来调节时间动态。

- 时间处理从观察到的和已知的时变输入中学习长期和短期的时间关系。使用序列到序列层进行局部处理,而使用一种新的可解释的多头注意块捕获长期依赖关系。

- 预测区间通过分位数预测来确定每个预测水平可能目标值的范围。

图2显示了时序融合变压器(TFT)的高级架构,各个组件将在后续章节中详细描述。

Fig. 2. TFT architecture. TFT inputs static metadata, time-varying past inputs and time-varying a priori known future inputs. Variable Selection is used for judicious selection of the most salient features based on the input. Gated Residual Network blocks enable efficient information flow with skip connections and gating layers. Time-dependent processing is based on LSTMs for local processing, and multi-head attention for integrating information from any time step.

图2所示。TFT架构。TFT输入静态元数据、时变的过去输入和时变的先验已知未来输入。变量选择用于根据输入对最显著的特征进行明智的选择。门控剩余网络块通过跳过连接和门控层实现有效的信息流。时间依赖处理基于LSTMs进行局部处理,基于多头注意对任意时间步的信息进行整合。

GRN(门控残差网络):作用是控制信息流,主要是为了保持信息通过门控做一个初步特征选择工作

gate: pipeline里面一个GLU,门控线性单元,主要是控制信息的一个流动情况

Variable selection networks(变量选择网络):包含了上述GRN,针对不同输入做了一个设计,右下角External Context运用了外部的信息去引导学习到了一个权重,这个权重再乘以左边特征的一个feature map就可以得到特征选择的一个结果。

然后把该架构与Transformer作类比,毕竟是基于Transformer改的

按照自底而上的角度先来粗看一下这个模型做的是什么

Level 1 输入层

三部分:静态信息、历史(待预测变量)信息、未来(其他变量)信息

Level 2 变量选择层

说白了就是要做特征筛选

Level 3 LSTM编码层

既然是时间序列,LSTM来捕捉点长短期信息合情合理

Level 4 Gate + Add&Norm

门控可以理解是在进一步考虑不同特征的重要性,残差和normalization常规操作了

Level 5 GRN

跟Level4基本一样,可以理解就是在加深网络

Level 6 Attention

对不同时刻的信息进行加权

Level 7 输出层

做的是分位数回归,可以预测区间了

输入层面:

大体上是一个双输入的结构:

一个输入接static metadata也就是前面所说的静态变量,其中连续特征直接输入,离散特征接embedding之后和连续特征concat 再输入;

另一个接动态特征,动态特征分为两部分,动态时变和动态时不变特征,图中的past inputs是动态时变特征的输入部分,而known future inputs是动态时不变特征的输入部分,也是一样,动态离散特征通过embedding输入nn,常规操作;

可以看到,所有的输入都是先进入一个Variable Selection的模块,即特征选择模块。其中GRN,GLU是variable selection networks的核心组件,先介绍一下GLU和GRN。

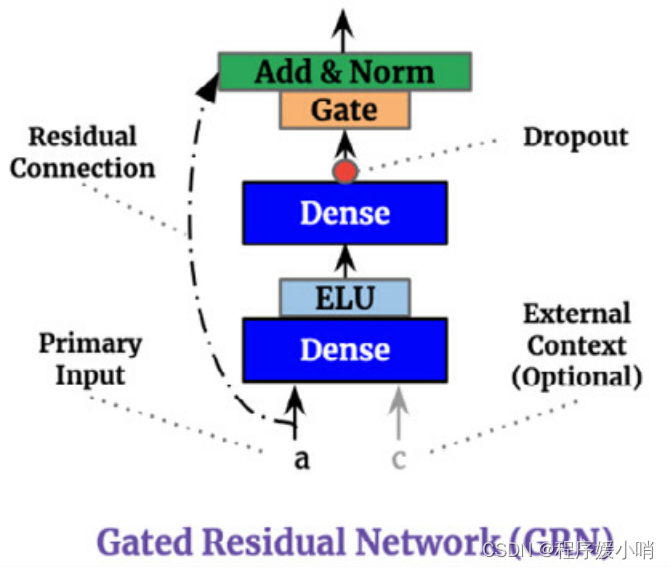

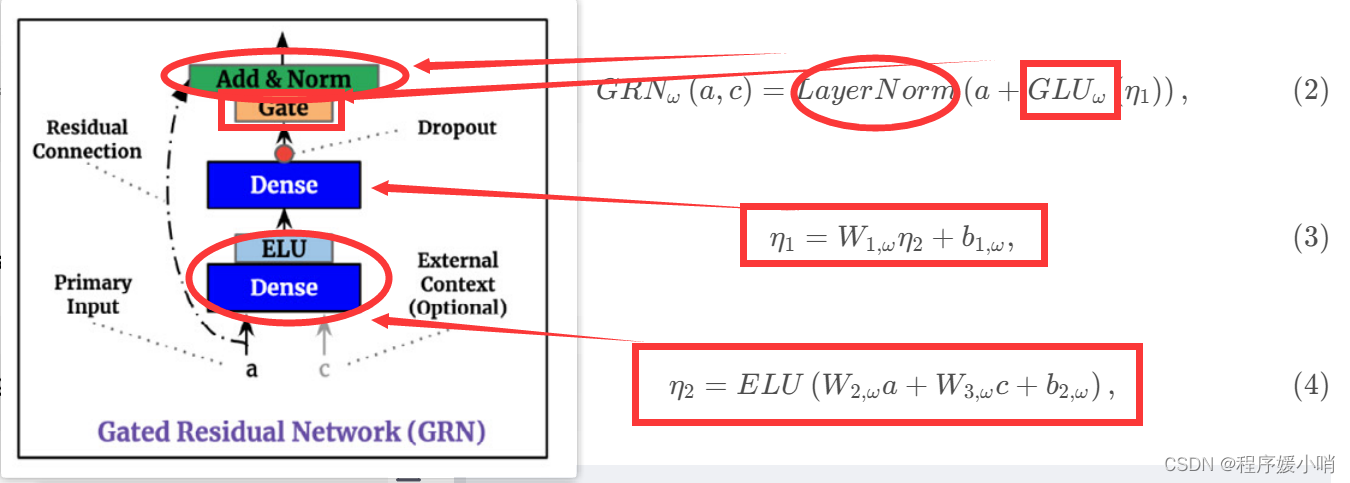

4.1. Gating mechanisms

The precise relationship between exogenous inputs and targets is often unknown in advance, making it difficult to anticipate which variables are relevant. Moreover, it is difficult to determine the extent of required non-linear processing, and there may be instances where simpler models can be beneficial – e.g. when datasets are small or noisy. With the motivation of giving the model the flexibility to apply non-linear processing only where needed, we propose Gated Residual Network (GRN) as shown in Fig. 2 as a building block of TFT. The GRN takes in a primary input a and an optional context vector c and yields:

G R N ω ( a , c ) = L a y e r N o r m ( a + G L U ω ( η 1 ) ) , (2) GRN_{\omega}\left( a,c \right) =LayerNorm\left( a+GLU_{\omega}\left( \eta _1 \right) \right), \tag{2} GRNω(a,c)=LayerNorm(a+GLUω(η1)),(2)

η 1 = W 1 , ω η 2 + b 1 , ω , (3) \eta _1=W_{1,\omega}\eta _2+b_{1,\omega},\tag{3} η1=W1,ωη2+b1,ω,(3)

η 2 = E L U ( W 2 , ω a + W 3 , ω c + b 2 , ω ) , (4) \eta _2=ELU\left( W_{2,\omega}a+W_{3,\omega}c+b_{2,\omega} \right) ,\tag{4} η2=ELU(W2,ωa+W3,ωc+b2,ω),(4)

where ELU is the Exponential Linear Unit activation function (Clevert, Unterthiner, & Hochreiter, 2016), η 1 ∈ R d m o d e l \eta _1\in \mathbb{R}^{d_{model}} η1∈Rdmodel, η 2 ∈ R d m o d e l \eta _2\in \mathbb{R}^{d_{model}} η2∈Rdmodel are intermediate layers, LayerNorm is standard layer normalization of Lei Ba, Kiros, and Hinton (2016), and ω is an index to denote weight sharing.

When W 2 , ω a + W 3 , ω c + b 2 , ω ≫ 0 W_{2,\omega}a+W_{3,\omega}c+b_{2,\omega}\gg 0 W2,ωa+W3,ωc+b2,ω≫0, the ELU activation would act as an identity function and when W 2 , ω a + W 3 , ω c + b 2 , ω ≪ 0 W_{2,\omega}a+W_{3,\omega}c+b_{2,\omega}\ll 0 W2,ωa+W3,ωc+b2,ω≪0, the ELU activation would generate a constant output, resulting in linear layer behavior. We use component gating layers based on Gated Linear Units (GLUs) (Dauphin, Fan, Auli, & Grangier, 2017) to provide the flexibility to suppress any parts of the architecture that are not required for a given dataset. Letting γ ∈ R d m o d e l γ\in \mathbb{R}^{d_{model}} γ∈Rdmodelbe the input, the GLU then takes the form:

G L U ω ( γ ) = σ ( W 4 , ω γ + b 4 , ω ) ⊙ ( W 5 , ω γ + b 5 , ω ) , (5) GLU_{\omega}\left( \gamma \right) =\sigma \left( W_{4,\omega}\gamma +b_{4,\omega} \right) \odot \left( W_{5,\omega}\gamma +b_{5,\omega} \right) ,\tag{5} GLUω(γ)=σ(W4,ωγ+b4,ω)⊙(W5,ωγ+b5,ω),(5)

where σ ( . ) σ (.) σ(.) is the sigmoid activation function, W ( . ) ∈ R d m o d e l × d m o d e l W_{(.)}\in \mathbb{R}^{d_{model}×d_{model}} W(.)∈Rdmodel×dmodel, b ( . ) ∈ R d m o d e l b_{(.)}\in \mathbb{R}^{d_{model}} b(.)∈Rdmodelare the weights and biases, ⊙ is the element-wise Hadamard product, and dmodel is the hidden state size (common across TFT). GLU allows TFT to control the extent to which the GRN contributes to

外部输入和目标之间的精确关系通常是预先未知的,因此很难预测哪些变量是相关的。此外,很难确定所需的非线性处理的程度,可能在某些情况下,更简单的模型是有益的——例如,当数据集很小或有噪声时。为了使模型具有仅在需要时应用非线性处理的灵活性,我们提出了如图2所示的门控剩余网络(GRN)作为TFT的构建块。入库单需要在一个主要输入

a

a

a和一个可选的上下文向量

c

c

c和收益率:

G

R

N

ω

(

a

,

c

)

=

L

a

y

e

r

N

o

r

m

(

a

+

G

L

U

ω

(

η

1

)

)

,

(2)

GRN_{\omega}\left( a,c \right) =LayerNorm\left( a+GLU_{\omega}\left( \eta _1 \right) \right), \tag{2}

GRNω(a,c)=LayerNorm(a+GLUω(η1)),(2)

η

1

=

W

1

,

ω

η

2

+

b

1

,

ω

,

(3)

\eta _1=W_{1,\omega}\eta _2+b_{1,\omega},\tag{3}

η1=W1,ωη2+b1,ω,(3)

η

2

=

E

L

U

(

W

2

,

ω

a

+

W

3

,

ω

c

+

b

2

,

ω

)

,

(4)

\eta _2=ELU\left( W_{2,\omega}a+W_{3,\omega}c+b_{2,\omega} \right) ,\tag{4}

η2=ELU(W2,ωa+W3,ωc+b2,ω),(4)

补充点:

这里面可能让有些人不熟悉的应该只有ELU (Exponential Linear Unit) 和 GLU (Gated Linear Units)

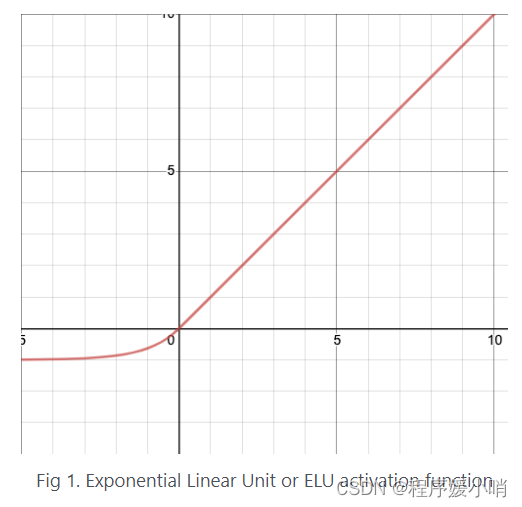

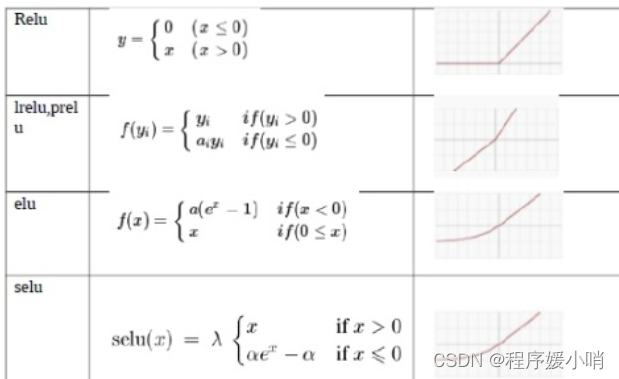

ELU: also know as Exponential Linear Unit is an activation function which is somewhat similar to the ReLU with some differences.ELU不会有梯度消失的困扰:

与 Leaky-ReLU 和 PReLU 类似,与 ReLU 不同的是,ELU 没有神经元死亡的问题(ReLU Dying 问题是指当出现异常输入时,在反向传播中会产生大的梯度,这种大的梯度会导致神经元死亡和梯度消失)。 它已被证明优于 ReLU 及其变体,如 Leaky-ReLU(LReLU) 和 Parameterized-ReLU(PReLU)。 与 ReLU 及其变体相比,使用 ELU 可在神经网络中缩短训练时间并提高准确度。

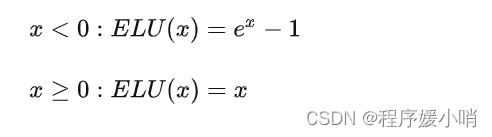

其公式如下所示:

y = ELU(x) = exp(x) − 1 ; if x<0

y = ELU(x) = x ; if x≥0

其函数图像如下图所示

优点:

- 它在所有点上都是连续的和可微的。

- 与其他线性非饱和激活函数(如 ReLU 及其变体)相比,它有着更快的训练时间。

- 与 ReLU 不同,它没有神经元死亡的问题。 这是因为 ELU 的梯度对于所有负值都是非零的。

- 作为非饱和激活函数,它不会遇到梯度爆炸或消失的问题。

- 与其他激活函数(如 ReLU 和变体、Sigmoid 和双曲正切)相比,它实现了更高的准确性。

缺点:

与 ReLU 及其变体相比,由于负输入涉及非线性,因此计算速度较慢。 然而,在训练期间,ELU 的更快收敛足以弥补这一点。 但是在测试期间,ELU 的性能会比 ReLU 及其变体慢。

- ELU这个激活函数可以取到负值,相比于Relu这让单元激活均值可以更接近0,类似于Batch Normalization的效果但是只需要更低的计算复杂度。同时在输入取较小值时具有软饱和的特性,提升了对噪声的鲁棒性。

- GLU做的时其实就是一个加权求和操作

首先看下elu的激活函数,当输入x大于0的时候,做恒等变换,小于0则做指数变换如上图公式

然后得到的结果经过了一个无激活函数,仅仅进行线性变换的dense层,经过dropout,然后通过投影层project,这里的投影层简单使用了dense,主要是因为:x = inputs + self.gated_linear_unit(x)

这里做了skip connection,为了保证inputs和gated_linear_unit(x)维度一致,所以简单做了线性变换(skip connection的常规操作)。最后经过一个layer normalization层,整个GRN的传播过程结束。

该模块这么设计的目的在于控制非线性变换的程度:x = inputs + self.gated_linear_unit(x),这里的inputs是原始的输入,即使做了inputs = self.project(inputs),project层也仅仅是不带激活函数的线性变换层,而gated_linear_unit(x)中的x,是经过了带elu的dense层和lineardense层之后得到的非线性变换的结果。x进入GLU之后会进行软性特征选择,谷歌称这样做使得模型的适应性很强,比如小数据可能不太需要复杂的非线性变换,那么通过GLU这个activation之后得到的结果x可能就是一个接近0的向量,那么inputs加入x相当于没有变换,等同于inputs直接接了一个layer norm之后输出了。

ELU是指数线性单位激活函数(Clevert、Unterthiner & Hochreiter, 2016),

η

1

∈

R

d

m

o

d

e

l

\eta _1\in \mathbb{R}^{d_{model}}

η1∈Rdmodel,

η

2

∈

R

d

m

o

d

e

l

\eta _2\in \mathbb{R}^{d_{model}}

η2∈Rdmodel中间层次,LayerNorm是标准层Lei英航正常化,Kiros,辛顿(2016),

ω

ω

ω是一个指数来表示重量共享。

当

W

2

,

ω

a

+

W

3

,

ω

c

+

b

2

,

ω

≫

0

W_{2,\omega}a+W_{3,\omega}c+b_{2,\omega}\gg 0

W2,ωa+W3,ωc+b2,ω≫0时,ELU的活化就会起到恒等函数的作用;

当

W

2

,

ω

a

+

W

3

,

ω

c

+

b

2

,

ω

≪

0

W_{2,\omega}a+W_{3,\omega}c+b_{2,\omega}\ll 0

W2,ωa+W3,ωc+b2,ω≪0时,ELU的活化就会产生恒定的输出,从而形成线性层状行为。

我们使用基于门控线性单元(GLUs)的组件门控层(Dauphin, Fan, Auli, & Grangier, 2017)来提供灵活性,以抑制给定数据集不需要的架构的任何部分。设

γ

∈

R

d

m

o

d

e

l

γ\in \mathbb{R}^{d_{model}}

γ∈Rdmodel为输入,则GLU的形式为:

G

L

U

ω

(

γ

)

=

σ

(

W

4

,

ω

γ

+

b

4

,

ω

)

⊙

(

W

5

,

ω

γ

+

b

5

,

ω

)

,

(5)

GLU_{\omega}\left( \gamma \right) =\sigma \left( W_{4,\omega}\gamma +b_{4,\omega} \right) \odot \left( W_{5,\omega}\gamma +b_{5,\omega} \right) ,\tag{5}

GLUω(γ)=σ(W4,ωγ+b4,ω)⊙(W5,ωγ+b5,ω),(5)

其中

σ

(

.

)

σ(.)

σ(.)是sigmoid激活函数,

W

(

.

)

∈

R

d

m

o

d

e

l

×

d

m

o

d

e

l

W_{(.)}\in \mathbb{R}^{d_{model}×d_{model}}

W(.)∈Rdmodel×dmodel,

b

(

.

)

∈

R

d

m

o

d

e

l

b_{(.)}\in \mathbb{R}^{d_{model}}

b(.)∈Rdmodel是权值和偏差,⊙是元素的Hadamard积,

d

m

o

d

e

l

d_{model}

dmodel是隐态大小(TFT中常见)。GLU允许TFT控制GRN的贡献程度

可以将GLU看成一个简易版的特征选择单元(软性的特征选择,可以做feature selection或者去除冗余信息,灵活控制数据中不需要的部分),然后GRN的工作主要来看就是控制数据的流动,主要是控制一些非线性,线性的贡献大概是怎么样的,然后也做一些特征选择工作

但为什么需要GRN呢?作者举了个例子当数据集很小或有噪声时。为了使模型具有仅在需要时应用非线性处理的灵活性,提出了GRN,假设没有这个Gate,一般如果数据很小非常noisy(噪声很多)的话,再走非线性这条路就会很危险(再考虑很多的特征)大概率过拟合,但加了Gate就相当于特征选择,或者可以理解为有一种降噪的作用

代码实战:

门控线性单元(GLU)

class GatedLinearUnit(layers.Layer):

def __init__(self, units):

super(GatedLinearUnit, self).__init__()

self.linear_w = layers.Dense(units)

self.linera_v = layers.Dense(units)

self.sigmoid = layers.Dense(units, activation="sigmoid")

def call(self, inputs):

return self.linear_w(inputs) * self.sigmoid(self.linear_v(inputs))

tabnet里面也用到了。就是GLU的激活函数,不是gelu是glu,其灵感来源于LSTM的门控机制,LSTM中会使用遗忘门对过去的信息进行选择性的遗忘,GLU的公式如下:

G

L

U

ω

(

γ

)

=

σ

(

W

4

,

ω

γ

+

b

4

,

ω

)

⊙

(

W

5

,

ω

γ

+

b

5

,

ω

)

(5)

GLU_{\omega}\left( \gamma \right) =\sigma \left( W_{4,\omega}\gamma +b_{4,\omega} \right) \odot \left( W_{5,\omega}\gamma +b_{5,\omega} \right) \tag{5}

GLUω(γ)=σ(W4,ωγ+b4,ω)⊙(W5,ωγ+b5,ω)(5)

G

L

U

(

X

)

=

σ

(

X

V

+

c

)

⊙

(

X

W

+

b

)

GLU(X) =\sigma \left( XV+c \right) \odot \left( XW+b \right)

GLU(X)=σ(XV+c)⊙(XW+b)

输入X,W, b, V, c是需要学的参数,可以理解就是对输入做完仿射变换后,再进行加权,这个权重也是输入的仿射变换进行归一化(过一下sigmoid)。torch的实现是:

class GLU(nn.Module):

def __init__(self, in_size):

super().__init__()

self.linear1 = nn.Linear(in_size, in_size)

self.linear2 = nn.Linear(in_size, in_size)

def forward(self, X):

return self.linear1(X)*self.linear2(X).sigmoid()

sigmoid之后的结果介于0~1之间,因此,可以起到软性的特征选择功能功能,当某些特征再训练的过程中几乎没有帮助的时候,对应的sigmoid之后的值会逐渐变得接近于0。

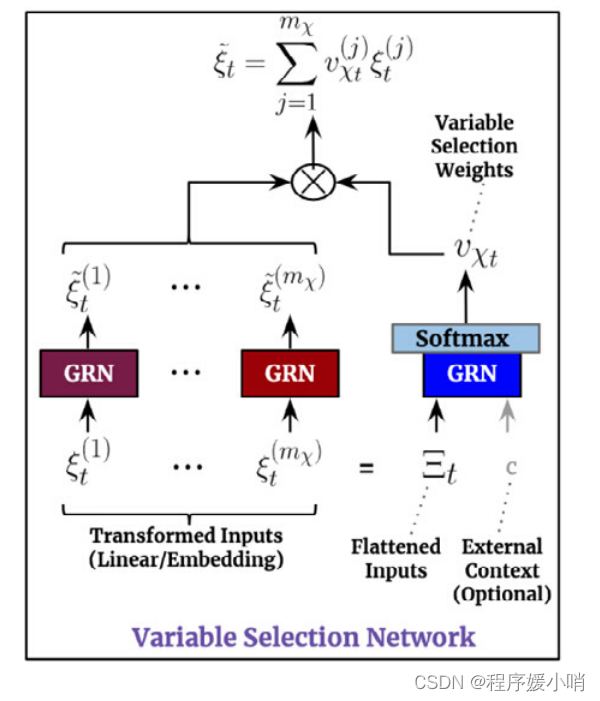

4.2. Variable selection networks

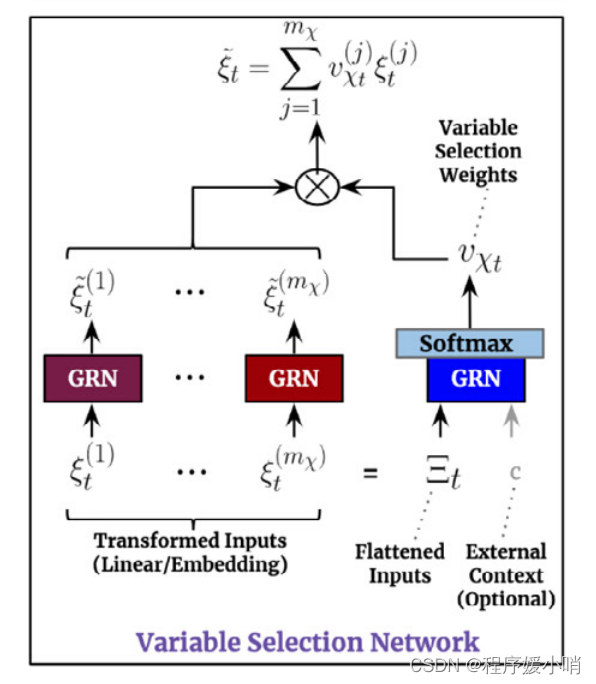

While multiple variables may be available, their relevance and specific contribution to the output are typically unknown. TFT is designed to provide instancewise variable selection through the use of variable selection networks applied to both static covariates and time-dependent covariates. Beyond providing insights into which variables are most significant for the prediction problem, variable selection also allows TFT to remove any unnecessary noisy inputs which could negatively impact performance. Most real-world time series datasets contain features with less predictive content, thus variable selection can greatly help model performance via utilization of learning capacity only on the most salient ones.

We use entity embeddings (Gal & Ghahramani, 2016) for categorical variables as feature representations, and linear transformations for continuous variables – transforming each input variable into a (dmodel)-dimensional vector which matches the dimensions in subsequent layers for skip connections. All static, past and future inputs make use of separate variable selection networks with distinct weights (as denoted by different colors in the main architecture diagram of Fig. 2). Without loss of generality, we present the variable selection network for past inputs below – noting that those for other inputs take the same form.

Let ξ t ( j ) ∈ R d m o d e l \xi _{t}^{\left( j \right)}\in \mathbb{R}^{d_{model}} ξt(j)∈Rdmodel denote the transformed input of the jth variable at time t, with Ξ t = [ ξ t ( 1 ) T , . . . , ξ t ( m χ ) T ] T \varXi _t=\left[ \xi _{t}^{\left( 1 \right) ^T},...,\xi _{t}^{\left( m_{\chi} \right) ^T} \right] ^T Ξt=[ξt(1)T,...,ξt(mχ)T]Tbeing the flattened vector of all past inputs at time t. Variable selection weights are generated by feeding both Ξt and an external context vector c s through a GRN, followed by a Softmax layer: υ χ t = S o f t max ( G R N v χ ( Ξ t , c s ) ) , (6) \upsilon _{\chi t}=Soft\max \left( GRN_{v_{\chi}}\left( \varXi _t,c_s \right) \right) ,\tag{6} υχt=Softmax(GRNvχ(Ξt,cs)),(6)

where vχt ∈ Rmχ is a vector of variable selection weights, and cs is obtained from a static covariate encoder (see Section 4.3). For static variables, we note that the context vector c s is omitted – given that it already has access to static information.

At each time step, an additional layer of non-linear processing is employed by feeding each ξ(j) t through its own GRN:

ξ ~ t ( j ) = G R N ξ ~ ( j ) ( ξ t ( j ) ) (7) \tilde{\xi}_{t}^{\left( j \right)}=GRN_{\tilde{\xi}\left( j \right)}\left( \xi _{t}^{\left( j \right)} \right) \tag{7} ξ~t(j)=GRNξ~(j)(ξt(j))(7)

where ˜ξ (j) t is the processed feature vector for variable j.

We note that each variable has its own G R N ξ ~ ( j ) GRN_{\tilde{\xi}\left( j \right)} GRNξ~(j), with weights shared across all time steps t. Processed features are then weighted by their variable selection weights and combined: ξ ~ t = ∑ j = 1 m χ υ χ t ( j ) ξ ~ t ( j ) (8) \tilde{\xi}_t=\sum_{j=1}^{m_{\chi}}{\upsilon _{\chi _t}^{\left( j \right)}\tilde{\xi}_{t}^{\left( j \right)}}\tag{8} ξ~t=j=1∑mχυχt(j)ξ~t(j)(8)

where υ χ t ( j ) \upsilon _{\chi _t}^{\left( j \right)} υχt(j)is the jth element of vector υ χ t \upsilon _{\chi _t} υχt.

虽然可以有多个变量,但它们的相关性和对输出的具体贡献通常是未知的。TFT的设计目的是通过使用应用于静态协变量和时间相关协变量的变量选择网络来提供实例变量选择。除了提供对预测问题最重要的变量的见解外,变量选择还允许TFT删除任何可能对性能产生负面影响的不必要的噪声输入。大多数真实世界的时间序列数据集包含较少预测内容的特征,因此变量选择可以通过仅在最显著的特征上使用学习能力来极大地帮助模型性能。

我们将实体嵌入(Gal & Ghahramani, 2016)用于分类变量作为特征表示,并将线性转换用于连续变量——将每个输入变量转换为(dmodel)维向量,该向量与后续层中的维度匹配,用于跳过连接。所有静态、过去和未来输入都使用具有不同权重的单独变量选择网络(如图2的主架构图中不同颜色所示)。在不丧失一般性的情况下,我们在下面展示了过去输入的变量选择网络——注意其他输入的变量选择网络采用相同的形式。

设

ξ

t

(

j

)

∈

R

d

m

o

d

e

l

\xi _{t}^{\left( j \right)}\in \mathbb{R}^{d_{model}}

ξt(j)∈Rdmodel表示第j个变量在t时刻的变换输入,

Ξ

t

=

[

ξ

t

(

1

)

T

,

.

.

.

,

ξ

t

(

m

χ

)

T

]

T

\varXi _t=\left[ \xi _{t}^{\left( 1 \right) ^T},...,\xi _{t}^{\left( m_{\chi} \right) ^T} \right] ^T

Ξt=[ξt(1)T,...,ξt(mχ)T]T是T时刻所有过去输入的平化向量。变量选择权重是通过GRN输入

Ξ

t

\varXi _t

Ξt和外部上下文向量

c

s

c_s

cs来生成的,然后是Softmax层:

υ

χ

t

=

S

o

f

t

max

(

G

R

N

v

χ

(

Ξ

t

,

c

s

)

)

,

(6)

\upsilon _{\chi t}=Soft\max \left( GRN_{v_{\chi}}\left( \varXi _t,c_s \right) \right) ,\tag{6}

υχt=Softmax(GRNvχ(Ξt,cs)),(6)

其中

υ

χ

t

∈

R

m

χ

\upsilon _{\chi t}∈\mathbb{R}^{m_{\chi}}

υχt∈Rmχ是变量选择权重的向量,cs是从静态共变量编码器获得的(见章节4.3)。对于静态变量,我们注意到上下文向量c s被省略了——因为它已经可以访问静态信息。

在每一个时间步骤中,通过向每个

ξ

~

t

(

j

)

\tilde{\xi}_{t}^{\left( j \right)}

ξ~t(j)输入它自己的GRN来使用额外的非线性处理层:

ξ

~

t

(

j

)

=

G

R

N

ξ

~

(

j

)

(

ξ

t

(

j

)

)

(7)

\tilde{\xi}_{t}^{\left( j \right)}=GRN_{\tilde{\xi}\left( j \right)}\left( \xi _{t}^{\left( j \right)} \right) \tag{7}

ξ~t(j)=GRNξ~(j)(ξt(j))(7)

其中 ξ ~ t ( j ) \tilde{\xi}_{t}^{\left( j \right)} ξ~t(j)是变量 j j j的处理特征向量。

我们注意到每个变量都有自己的

G

R

N

ξ

~

(

j

)

GRN_{\tilde{\xi}\left( j \right)}

GRNξ~(j),在所有时间步长t中共享权重。处理后的特征通过它们的变量选择权重进行加权并组合:

ξ

~

t

=

∑

j

=

1

m

χ

υ

χ

t

(

j

)

ξ

~

t

(

j

)

(8)

\tilde{\xi}_t=\sum_{j=1}^{m_{\chi}}{\upsilon _{\chi _t}^{\left( j \right)}\tilde{\xi}_{t}^{\left( j \right)}}\tag{8}

ξ~t=j=1∑mχυχt(j)ξ~t(j)(8)

其中 υ χ t ( j ) \upsilon _{\chi _t}^{\left( j \right)} υχt(j)是向量 υ χ t \upsilon _{\chi _t} υχt的第 j j j个元素。

总结,Variable selection networks 一共有3个公式

ξ

t

(

j

)

{\xi}_{t}^{\left( j \right)}

ξt(j)input(selected feature)左边那些输入都是原始特征独立开的,

假设在时间t下面有m个变量(输入),对变量做embedding,

如果是连续型变量(continuous variables)直接做一个线性转化(linear transformations)

如果是特征变量(categorical variables)用实体嵌入(entity embeddings )方式去做

拿到每个变量表征之后,就将他们放平(flattened),得到放平的结果

ξ

~

t

(

j

)

=

G

R

N

ξ

~

(

j

)

(

ξ

t

(

j

)

)

\tilde{\xi}_{t}^{\left( j \right)}=GRN_{\tilde{\xi}\left( j \right)}\left( \xi _{t}^{\left( j \right)} \right)

ξ~t(j)=GRNξ~(j)(ξt(j))

Ξ

t

=

[

ξ

t

(

1

)

T

,

.

.

.

,

ξ

t

(

m

χ

)

T

]

T

\varXi _t=\left[ \xi _{t}^{\left( 1 \right) ^T},...,\xi _{t}^{\left( m_{\chi} \right) ^T} \right] ^T

Ξt=[ξt(1)T,...,ξt(mχ)T]T图左边输入放平的结果将原始特征全部汇聚在一起了

c

c

c静态变量(由一个静态变量编码器编码出来的静态变量特征)为什么要加这个变量呢,其实是为了引导GRN的学习

υ

χ

t

(

j

)

\upsilon _{\chi _t}^{\left( j \right)}

υχt(j)特征选择权重:

υ

χ

t

=

S

o

f

t

max

(

G

R

N

v

χ

(

Ξ

t

,

c

s

)

)

\upsilon _{\chi t}=Soft\max \left( GRN_{v_{\chi}}\left( \varXi _t,c_s \right) \right)

υχt=Softmax(GRNvχ(Ξt,cs))由上述方平结果和静态变量共同输入GRN再softmax得到的权重值

最后

ξ

~

t

=

∑

j

=

1

m

χ

υ

χ

t

(

j

)

ξ

~

t

(

j

)

\tilde{\xi}_t=\sum_{j=1}^{m_{\chi}}{\upsilon _{\chi _t}^{\left( j \right)}\tilde{\xi}_{t}^{\left( j \right)}}

ξ~t=∑j=1mχυχt(j)ξ~t(j)

变量选择网络 (VSN) 的工作原理如下:

- 将 GRN 单独应用于每个特征。

- 在所有特征的串联上应用 GRN,然后是 softmax 以产生特征权重。

- 生成单个 GRN 输出的加权总和。

这里的变量选择其实是一种soft的选择方式,并不是剔除不重要变量,而是对变量进行加权,权重越大的代表重要性越高。(可以理解跟注意力机制的意思差不多,只是换种计算注意力系数的方式)

ξ

~

t

=

∑

j

=

1

m

χ

υ

χ

t

(

j

)

ξ

~

t

(

j

)

\tilde{\xi}_t=\sum_{j=1}^{m_{\chi}}{\upsilon _{\chi _t}^{\left( j \right)}\tilde{\xi}_{t}^{\left( j \right)}}

ξ~t=∑j=1mχυχt(j)ξ~t(j)

这里考虑多变量情况,不单独考虑单变量情况。

代码实战``

class VariableSelectionNetwork(nn.Module):

def __init__(

self,

input_sizes: Dict[str, int],

hidden_size: int,

input_embedding_flags: Dict[str, bool] = {},

context_size: int = None,

single_variable_grns: Dict[str, GatedResidualNetwork] = {},

prescalers: Dict[str, nn.Linear] = {},

):

"""

Calcualte weights for ``num_inputs`` variables which are each of size ``input_size``

"""

super().__init__()

self.hidden_size = hidden_size

self.input_sizes = input_sizes

self.input_embedding_flags = input_embedding_flags

self.context_size = context_size

if self.context_size is not None:

self.flattened_grn = GatedResidualNetwork(

self.input_size_total,

min(self.hidden_size, self.num_inputs),

self.num_inputs,

self.context_size)

else:

self.flattened_grn = GatedResidualNetwork(

self.input_size_total,

min(self.hidden_size, self.num_inputs),

self.num_inputs,)

self.single_variable_grns = nn.ModuleDict()

self.prescalers = nn.ModuleDict()

for name, input_size in self.input_sizes.items():

if name in single_variable_grns:

self.single_variable_grns[name] = single_variable_grns[name]

elif self.input_embedding_flags.get(name, False):

self.single_variable_grns[name] = ResampleNorm(input_size, self.hidden_size)

else:

self.single_variable_grns[name] = GatedResidualNetwork(

input_size,

min(input_size, self.hidden_size),

output_size=self.hidden_size,)

if name in prescalers: # reals need to be first scaled up

self.prescalers[name] = prescalers[name]

elif not self.input_embedding_flags.get(name, False):

self.prescalers[name] = nn.Linear(1, input_size)

self.softmax = nn.Softmax(dim=-1)

def forward(self, x: Dict[str, torch.Tensor], context: torch.Tensor = None):

# transform single variables

var_outputs = []

weight_inputs = []

for name in self.input_sizes.keys():

# select embedding belonging to a single input

variable_embedding = x[name]

if name in self.prescalers:

variable_embedding = self.prescalers[name](variable_embedding)

weight_inputs.append(variable_embedding)

var_outputs.append(self.single_variable_grns[name](variable_embedding))

var_outputs = torch.stack(var_outputs, dim=-1)

# 计算权重

flat_embedding = torch.cat(weight_inputs, dim=-1)

sparse_weights = self.flattened_grn(flat_embedding, context)

sparse_weights = self.softmax(sparse_weights).unsqueeze(-2)

outputs = var_outputs * sparse_weights # 加权和

outputs = outputs.sum(dim=-1)

return outputs, sparse_weights

4.3. Static covariate encoders

In contrast with other time series forecasting architectures, the TFT is carefully designed to integrate information from static metadata, using separate GRN encoders to produce four different context vectors, cs, c e, cc, and c h. These contect vectors are wired into various locations in the temporal fusion decoder (Section 4.5) where static variables play an important role in processing. Specifically, this includes contexts for

(1) temporal variable selection (cs),

(2) local processing of temporal features (c c, c h), and

(3) enriching of temporal features with static information (c e). As an example, taking ζ to be the output of the static variable selection network, contexts for temporal variable selection would be encoded according to c s = GRNcs(ζ).

与其他时间序列预测架构相比,TFT经过精心设计,用于集成来自静态元数据的信息,使用单独的GRN编码器产生四个不同的上下文向量,

c

s

,

c

e

,

c

c

和

c

h

c_s, c_e, c_c和c_h

cs,ce,cc和ch。这些连接向量连接到时间融合解码器的不同位置(第4.5节),其中静态变量在处理中发挥重要作用。具体来说,这包括

(1)时间变量选择

(

c

s

)

(c_s)

(cs)的上下文,(VSN的)

(2)时间特征的局部处理

(

c

c

,

c

h

)

(c_c, c_h)

(cc,ch),(LSTM的)

(3)用静态信息丰富时间特征的上下文

(

c

e

)

(c_e)

(ce)。例如,将ζ作为静态变量选择网络的输出,时间变量选择的上下文将按照

c

s

=

G

R

N

c

s

(

ζ

)

c_s = GRN_{c_s}(ζ)

cs=GRNcs(ζ)编码。

编码器就是一个简单的GRN,整个静态变量引入模型的方法就是作为一个引导特征选择这些网络的一个外生变量,可以把它理解为元学习mata learning

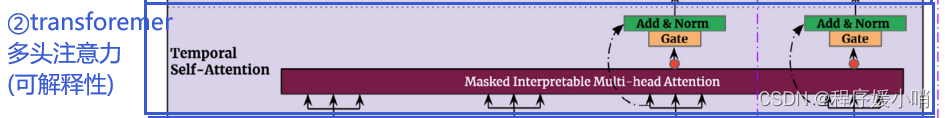

4.4. Interpretable multi-head attention

The TFT employs a self-attention mechanism to learn long-term relationships across different time steps, which we modify from multi-head attention in transformerbased architectures (Li et al, 2019; Vaswani et al, 2017) to enhance explainability. In general, attention mechanisms scale values V ∈ R N × d V V\in \mathbb{R}^{N\times d_V} V∈RN×dVbased on relationships between keys K ∈ R N × d a t t n K\in \mathbb{R}^{N\times d_{attn}} K∈RN×dattnand queries Q ∈ R N × d a t t n Q\in \mathbb{R}^{N\times d_{attn}} Q∈RN×dattn as below:

A t t e n t i o n ( Q , K , V ) = A ( Q , K ) V , (9) Attention(Q , K, V ) = A(Q , K )V , \tag{9} Attention(Q,K,V)=A(Q,K)V,(9)

where A ( ) A() A()is a normalization function, and N is the number of time steps feeding into the attention layer (i.e. k + τ m a x k + τ_{max} k+τmax). A common choice is scaled dot-product attention (Vaswani et al, 2017):

A ( Q , K ) = S o f t max ( Q K T / d a t t n ) (10) A\left( Q,K \right) =Soft\max \left( QK^T/\sqrt{d_{attn}} \right) \tag{10} A(Q,K)=Softmax(QKT/dattn)(10)

To improve the learning capacity of the standard attention mechanism, multi-head attention is proposed in Vaswani et al (2017), employing different heads for different representation subspaces: M u l t i H e a d ( Q , K , V ) = [ H 1 , . . . H m H ] W H , ( 11 ) MultiHead(Q , K, V ) = [H_1, . . . H_{m_H} ] W_H, (11) MultiHead(Q,K,V)=[H1,...HmH]WH,(11)

H h = A t t e n t i o n ( Q W Q ( h ) , K W K ( h ) , V W V ( h ) ) , ( 12 ) H_h = Attention(Q W^{(h)}_Q , K W^{(h)}_K , V W^{(h)}_V ), (12) Hh=Attention(QWQ(h),KWK(h),VWV(h)),(12)where W K ( h ) ∈ R d m o d e l × d a t t n W^{(h)}_K ∈ \mathbb{R}^{d_{model}\times d_{attn}} WK(h)∈Rdmodel×dattn, W Q ( h ) ∈ R d m o d e l × d a t t n W^{(h)}_Q ∈ \mathbb{R}^{d_{model}\times d_{attn}} WQ(h)∈Rdmodel×dattn, W V ( h ) ∈ R d m o d e l × d V W^{(h)}_V ∈ \mathbb{R}^{d_{model}\times d_{V}} WV(h)∈Rdmodel×dV are head-specific weights for keys, queries and values, and W H ∈ R ( m H ⋅ d V ) × d m o d e l W_H ∈ \mathbb{R}^{\left( m_H\cdot d_V \right) \times d_{model}} WH∈R(mH⋅dV)×dmodellinearly combines outputs concatenated from all heads H h H_h Hh.

TFT采用一种自注意机制来学习跨不同时间步长的长期关系,我们修改了基于Transformer架构中的多头注意(Li等人,2019;Vaswani等人,2017),以增强解释性。一般来说,基于键

K

∈

R

N

×

d

a

t

t

n

K\in \mathbb{R}^{N\times d_{attn}}

K∈RN×dattn和查询

Q

∈

R

N

×

d

a

t

t

n

Q\in \mathbb{R}^{N\times d_{attn}}

Q∈RN×dattn之间的关系,注意机制的尺度值

V

∈

R

N

×

d

V

V\in \mathbb{R}^{N\times d_V}

V∈RN×dV如下所示:注意

A

t

t

e

n

t

i

o

n

(

Q

,

K

,

V

)

=

A

(

Q

,

K

)

V

,

(9)

Attention(Q, K, V ) = A(Q , K )V , \tag{9}

Attention(Q,K,V)=A(Q,K)V,(9)

其中

A

(

)

A()

A()是归一化函数,N是进入注意层的时间步数(即

k

+

τ

m

a

x

k + τ_{max}

k+τmax)。一个常见的选择是缩放点积注意力(Vaswani等人,2017):

A

(

Q

,

K

)

=

S

o

f

t

max

(

Q

K

⊤

/

d

a

t

t

n

)

,

(10)

A\left( Q,K \right) =Soft\max \left( QK^{\top}/\sqrt{d_{attn}} \right), \tag{10}

A(Q,K)=Softmax(QK⊤/dattn),(10)

Q

Q

Q query

K

K

K key

V

V

V value

A

(

)

A()

A() Attention score

/

d

a

t

t

n

/\sqrt{d_{attn}}

/dattn除以K向量的维度,帮助模型拥有更稳定的梯度,如果不除,K的向量维度如果很大,会导致最后点乘结果变得很大,这样整个softmax函数会推向一个极小梯度的方向

A

t

t

e

n

t

i

o

n

(

)

Attention()

Attention()Attention feature

为了提高标准注意机制的学习能力,Vaswani等人(2017)提出了多头注意,对不同的表示子空间使用不同的头部:

M

u

l

t

i

H

e

a

d

(

Q

,

K

,

V

)

=

[

H

1

,

.

.

.

H

m

H

]

W

H

,

(11)

MultiHead(Q , K, V ) = [H_1, . . . H_{m_H} ] W_H,\tag{11}

MultiHead(Q,K,V)=[H1,...HmH]WH,(11)

H

h

=

A

t

t

e

n

t

i

o

n

(

Q

W

Q

(

h

)

,

K

W

K

(

h

)

,

V

W

V

(

h

)

)

,

(12)

H_h = Attention(Q W^{(h)}_Q , K W^{(h)}_K , V W^{(h)}_V ),\tag{12}

Hh=Attention(QWQ(h),KWK(h),VWV(h)),(12)

这里对传统的Transformer多头注意力机制进行了一些小改进,传统的针对 Q K V QKV QKV针对每一个头都会有不同权重,但是TFT在这里 V V V是多头共享的参数, Q K QK QK本身是组成Attention的一个重要部分,所以这两个就不用想参数了,每一个头都是每一个头的权重。

其中 W K ( h ) ∈ R d m o d e l × d a t t n W^{(h)}_K ∈ \mathbb{R}^{d_{model}\times d_{attn}} WK(h)∈Rdmodel×dattn, W Q ( h ) ∈ R d m o d e l × d a t t n W^{(h)}_Q ∈ \mathbb{R}^{d_{model}\times d_{attn}} WQ(h)∈Rdmodel×dattn, W V ( h ) ∈ R d m o d e l × d V W^{(h)}_V ∈ \mathbb{R}^{d_{model}\times d_{V}} WV(h)∈Rdmodel×dV 是键、查询和值的头部特定权重, W H ∈ R ( m H ⋅ d V ) × d m o d e l W_H ∈ \mathbb{R}^{\left( m_H\cdot d_V \right) \times d_{model}} WH∈R(mH⋅dV)×dmodel线性组合所有头部 H h H_h Hh连接的输出。

Given that different values are used in each head, attention weights alone would not be indicative of a particular feature’s importance. As such, we modify multihead attention to share values in each head, and employ additive aggregation of all heads: I n t e r p r e t a b l e M u l t i H e a d ( Q , K , V ) = H ~ W H , (13) InterpretableMultiHead(Q , K, V ) = \tilde{H} W_H,\tag{13} InterpretableMultiHead(Q,K,V)=H~WH,(13)

H ~ = A ~ ( Q , K ) V W V , (14) \tilde{H}=\tilde{A}\left( Q,K \right) V\ W_V,\tag{14} H~=A~(Q,K)V WV,(14)

= { 1 m H ∑ h = 1 m H A ( Q W Q ( h ) , K W K ( h ) ) } V W V , (15) =\left\{ \frac{1}{m_H}\sum_{h=1}^{m_H}{A\left( Q\ W_{Q}^{\left( h \right)},KW_{K}^{\left( h \right)} \right)} \right\} VW_V,\tag{15} ={mH1h=1∑mHA(Q WQ(h),KWK(h))}VWV,(15)

= 1 m H ∑ h = 1 m H A t t e n t i o n ( Q W Q ( h ) , K W K ( h ) , V W V ) , (16) =\frac{1}{m_H}\sum_{h=1}^{m_H}{Attention\left( QW_{Q}^{\left( h \right)},KW_{K}^{\left( h \right)},VW_V \right) ,}\tag{16} =mH1h=1∑mHAttention(QWQ(h),KWK(h),VWV),(16)

where W V ( h ) ∈ R d m o d e l × d V W^{(h)}_V ∈ \mathbb{R}^{d_{model}\times d_{V}} WV(h)∈Rdmodel×dVare value weights shared across all heads, and W H ∈ R d a t t n × d m o d e l W_H ∈ \mathbb{R}^{d_{attn} \times d_{model}} WH∈Rdattn×dmodelis used for final linear mapping.

Comparing Eqs. (9) and (14), we can see that the final output of interpretable multi-head attention bears a strong resemblance to a single attention layer – the key difference lying in the methodology to generate attention weights A ~ ( Q , K ) \tilde{A}\left( Q,K \right) A~(Q,K). From Eq. (15), each head can learn different temporal patterns A ~ ( Q W Q ( h ) , K W K ( h ) ) \tilde{A}\left( QW^{(h)}_Q,K W^{(h)}_K\right) A~(QWQ(h),KWK(h)) while attending to a common set of input features V V V – which can be interpreted as a simple ensemble over attention weights into combined matrix A ~ ( Q , K ) \tilde{A}\left( Q,K \right) A~(Q,K)in Eq. (14). Compared to A ( Q , K ) {A}\left( Q,K \right) A(Q,K)in Eq. (10), A ~ ( Q , K ) \tilde{A}\left( Q,K \right) A~(Q,K)yields an increased representation capacity in an efficient way, while still allowing simple interpretability studies to be performed by analyzing a single set of attention weights.

考虑到每个头部使用不同的值,仅靠注意力权重并不能表明特定特征的重要性。因此,我们修改多头注意力以共享每个头部的值,并使用所有头部的加性聚合:

I n t e r p r e t a b l e M u l t i H e a d ( Q , K , V ) = H ~ W H , (13) InterpretableMultiHead(Q , K, V ) = \tilde{H} W_H,\tag{13} InterpretableMultiHead(Q,K,V)=H~WH,(13)

H

~

=

A

~

(

Q

,

K

)

V

W

V

,

(14)

\tilde{H}=\tilde{A}\left( Q,K \right) V\ W_V,\tag{14}

H~=A~(Q,K)V WV,(14)

=

{

1

m

H

∑

h

=

1

m

H

A

(

Q

W

Q

(

h

)

,

K

W

K

(

h

)

)

}

V

W

V

,

(15)

=\left\{ \frac{1}{m_H}\sum_{h=1}^{m_H}{A\left( Q\ W_{Q}^{\left( h \right)},KW_{K}^{\left( h \right)} \right)} \right\} VW_V,\tag{15}

={mH1h=1∑mHA(Q WQ(h),KWK(h))}VWV,(15)

=

1

m

H

∑

h

=

1

m

H

A

t

t

e

n

t

i

o

n

(

Q

W

Q

(

h

)

,

K

W

K

(

h

)

,

V

W

V

)

,

(16)

=\frac{1}{m_H}\sum_{h=1}^{m_H}{Attention\left( QW_{Q}^{\left( h \right)},KW_{K}^{\left( h \right)},VW_V \right) ,}\tag{16}

=mH1h=1∑mHAttention(QWQ(h),KWK(h),VWV),(16)

其中 W V ( h ) ∈ R d m o d e l × d V W^{(h)}_V ∈ \mathbb{R}^{d_{model}\times d_{V}} WV(h)∈Rdmodel×dVa为所有磁头共享的值权值, W H ∈ R d a t t n × d m o d e l W_H ∈ \mathbb{R}^{d_{attn} \times d_{model}} WH∈Rdattn×dmodel用于最终的线性映射。

比较方程式。(9)和(14),我们可以看到可解释的多头注意力的最终输出与单一注意层非常相似——关键的区别在于生成注意力权重 A ~ ( Q , K ) \tilde{A}\left( Q,K \right) A~(Q,K)的方法。从Eq.(15)中,每个头部都可以学习不同的时间模式 A ~ ( Q W Q ( h ) , K W K ( h ) ) \tilde{A}\left( QW^{(h)}_Q,K W^{(h)}_K\right) A~(QWQ(h),KWK(h)) ,同时关注一组共同的输入特征 V V V-这可以被解释为在Eq.(14)中对注意力权重进行组合的矩阵 A ~ ( Q , K ) \tilde{A}\left( Q,K \right) A~(Q,K)的简单集成。与公式(10)中的 A ( Q , K ) {A}\left( Q,K \right) A(Q,K)相比, A ~ ( Q , K ) \tilde{A}\left( Q,K \right) A~(Q,K)以一种有效的方式产生了更高的表示能力,同时仍然允许通过分析一组注意力权重来进行简单的可解释性研究。

注意力机制的设计

文章对多头注意力机制的改进在于共享部分参数,即对于每一个head,Q和K都有分别的线性变换矩阵,但是V变换矩阵是共享的

H

~

=

A

~

(

Q

,

K

)

V

W

V

,

(14)

\tilde{H}=\tilde{A}\left( Q,K \right) V\ W_V,\tag{14}

H~=A~(Q,K)V WV,(14)

=

{

1

m

H

∑

h

=

1

m

H

A

(

Q

W

Q

(

h

)

,

K

W

K

(

h

)

)

}

V

W

V

,

(15)

=\left\{ \frac{1}{m_H}\sum_{h=1}^{m_H}{A\left( Q\ W_{Q}^{\left( h \right)},KW_{K}^{\left( h \right)} \right)} \right\} VW_V,\tag{15}

={mH1h=1∑mHA(Q WQ(h),KWK(h))}VWV,(15)

class InterpretableMultiHeadAttention(nn.Module):

def __init__(self, n_head: int, d_model: int, dropout: float = 0.0):

super(InterpretableMultiHeadAttention, self).__init__()

self.n_head = n_head

self.d_model = d_model

self.d_k = self.d_q = self.d_v = d_model // n_head

self.dropout = nn.Dropout(p=dropout)

self.v_layer = nn.Linear(self.d_model, self.d_v)

self.q_layers = nn.ModuleList([nn.Linear(self.d_model, self.d_q) for _ in range(self.n_head)])

self.k_layers = nn.ModuleList([nn.Linear(self.d_model, self.d_k) for _ in range(self.n_head)])

self.attention = ScaledDotProductAttention()

self.w_h = nn.Linear(self.d_v, self.d_model, bias=False)

def forward(self, q, k, v, mask=None) -> Tuple[torch.Tensor, torch.Tensor]:

heads = []

attns = []

vs = self.v_layer(v) # 共享的

for i in range(self.n_head):

qs = self.q_layers[i](q)

ks = self.k_layers[i](k)

head, attn = self.attention(qs, ks, vs, mask)

head_dropout = self.dropout(head)

heads.append(head_dropout)

attns.append(attn)

head = torch.stack(heads, dim=2) if self.n_head > 1 else heads[0]

attn = torch.stack(attns, dim=2)

outputs = torch.mean(head, dim=2) if self.n_head > 1 else head

outputs = self.w_h(outputs)

outputs = self.dropout(outputs)

return outputs, attn

其中注意力机制的计算还是标准的ScaledDotProductAttention,用QK计算出注意力系数,然后再来对V加权一下

A

(

Q

,

K

)

=

S

o

f

t

max

(

Q

K

⊤

/

d

a

t

t

n

)

A\left( Q,K \right) =Soft\max \left( QK^{\top}/\sqrt{d_{attn}} \right)

A(Q,K)=Softmax(QK⊤/dattn)

A

t

t

e

n

t

i

o

n

(

Q

,

K

,

V

)

=

A

(

Q

,

K

)

V

Attention(Q, K, V ) = A(Q , K )V

Attention(Q,K,V)=A(Q,K)V

class ScaledDotProductAttention(nn.Module):

def __init__(self, dropout: float = None, scale: bool = True):

super(ScaledDotProductAttention, self).__init__()

if dropout is not None:

self.dropout = nn.Dropout(p=dropout)

else:

self.dropout = dropout

self.softmax = nn.Softmax(dim=2)

self.scale = scale

def forward(self, q, k, v, mask=None):

attn = torch.bmm(q, k.permute(0, 2, 1)) # query-key overlap

if self.scale:

dimension = torch.as_tensor(k.size(-1), dtype=attn.dtype, device=attn.device).sqrt()

attn = attn / dimension

if mask is not None:

attn = attn.masked_fill(mask, -1e9)

attn = self.softmax(attn)

if self.dropout is not None:

attn = self.dropout(attn)

output = torch.bmm(attn, v)

return output, attn

4.5. Temporal fusion decoder

The temporal fusion decoder uses the series of layers described below to learn temporal relationships present in the dataset:

时间融合解码器使用下面描述的一系列层来学习数据集中存在的时间关系:

给LSTM Encoder喂入过去的一些特征,再给LSTM Decoder喂入未来的一个特征,然后LSTM的编码器和解码器会再次经过Gat又做特征选择的工作,把这些特征都处理完了后会统一流入到这个TFT,所以TFT的输入把过去,未来和现在的信息都整合过来了。

整合进来完了之后会流入内部的三个模块

4.5.1. Locality enhancement with sequence-to-sequence layer

In time series data, points of significance are often identified in relation to their surrounding values – such as anomalies, change-points, or cyclical patterns. Leveraging local context, through the construction of features that utilize pattern information on top of point-wise values, can thus lead to performance improvements in attentionbased architectures. For instance, Li et al (2019) adopts a single convolutional layer for locality enhancement – extracting local patterns using the same filter across all time. However, this might not be suitable for cases when observed inputs exist, due to the differing number of past and future inputs.

在时间序列数据中,重要点通常是根据其周围的值来确定的,例如异常、变化点或周期模式。利用局部上下文,通过在点值之上构建利用模式信息的特性,可以在基于注意力的体系结构中提高性能。例如,Li等人(2019)采用单一卷积层进行局部性增强——在所有时间内使用相同的过滤器提取局部模式。然而,由于过去和未来输入的数量不同,这可能不适用于存在观测到的输入的情况。

As such, we propose the application of a sequenceto-sequence layer to naturally handle these differences – feeding ξ ~ t − k : t \tilde{\xi}_{t-k:t} ξ~t−k:tinto the encoder and ξ ~ t + 1 : t + τ max \tilde{\xi}_{t+1:t+\tau _{\max}} ξ~t+1:t+τmaxinto the decoder. This then generates a set of uniform temporal features which serve as inputs into the temporal fusion decoder itself, denoted by ϕ ( t , n ) ∈ { ϕ ( t , − k ) , … , ϕ ( t , τ m a x ) } {\phi}(t, n)∈\{ {\phi}(t,−k),…,{\phi}(t, τ_{max})\} ϕ(t,n)∈{ϕ(t,−k),…,ϕ(t,τmax)} with n n n being a position index. Inspired by its success in canonical sequential encoding problems, we consider the use of an LSTM encoder–decoder, a commonly-used building block in other multi-horizon forecasting architectures (Fan et al, 2019; Wen et al, 2017), although other designs can potentially be adopted as well. This also serves as a replacement for standard positional encoding, providing an appropriate inductive bias for the time ordering of the inputs. Moreover, to allow static metadata to influence local processing, we use the c c, ch context vectors from the static covariate encoders to initialize the cell state and hidden state respectively for the first LSTM in the layer. We also employ a gated skip connection over this layer: : ϕ ~ ( t , n ) = L a y e r n o r m ( ξ ~ t + n + G L U ϕ ~ ( ϕ ( t , n ) ) ) , (17) \tilde{\phi}\left( t,n \right) =Layernorm\left( \tilde{\xi}_{t+n}+GLU_{\tilde{\phi}}\left( \phi \left( t,n \right) \right) \right) ,\tag{17} ϕ~(t,n)=Layernorm(ξ~t+n+GLUϕ~(ϕ(t,n))),(17) , (17) where n ∈ [ − k , τ m a x ] n∈[−k, τ_{max}] n∈[−k,τmax]is a position index.

因此,我们建议应用一个序列到序列层来自然地处理这些差异-将

ξ

~

t

−

k

:

t

\tilde{\xi}_{t-k:t}

ξ~t−k:t馈入编码器,并将

ξ

~

t

+

1

:

t

+

τ

max

\tilde{\xi}_{t+1:t+\tau _{\max}}

ξ~t+1:t+τmax馈入解码器。然后生成一组统一的时间特征,作为时间融合解码器本身的输入,用

ϕ

(

t

,

n

)

∈

{

ϕ

(

t

,

−

k

)

,

…

,

ϕ

(

t

,

τ

m

a

x

)

}

{\phi}(t, n)∈\{ {\phi}(t,−k),…,{\phi}(t, τ_{max})\}

ϕ(t,n)∈{ϕ(t,−k),…,ϕ(t,τmax)},

n

n

n为位置指数。受其在规范顺序编码问题上成功的启发,我们考虑使用LSTM编码器-解码器,这是其他多水平预测架构中常用的构建块(Fan等人,2019;Wen等人,2017),尽管其他设计也可以被采用。这也可以作为标准位置编码的替代品,为输入的时间顺序提供适当的归纳偏差。此外,为了允许静态元数据影响局部处理,我们使用来自静态协变量编码器的c c, ch上下文向量分别初始化层中第一个LSTM的单元状态和隐藏状态。我们还在这一层上采用门控跳跃连接:

ϕ

~

(

t

,

n

)

=

L

a

y

e

r

n

o

r

m

(

ξ

~

t

+

n

+

G

L

U

ϕ

~

(

ϕ

(

t

,

n

)

)

)

,

(17)

\tilde{\phi}\left( t,n \right) =Layernorm\left( \tilde{\xi}_{t+n}+GLU_{\tilde{\phi}}\left( \phi \left( t,n \right) \right) \right) ,\tag{17}

ϕ~(t,n)=Layernorm(ξ~t+n+GLUϕ~(ϕ(t,n))),(17)

其中

n

∈

[

−

k

,

τ

m

a

x

]

n∈[−k, τ_{max}]

n∈[−k,τmax]是位置指数。

4.5.2. Static enrichment layer

As static covariates often have a significant influence on the temporal dynamics (e.g. genetic information on disease risk), we introduce a static enrichment layer that enhances temporal features with static metadata. For a given position index n, static enrichment takes the form:

θ ( t , n ) = G R N θ ( ϕ ~ ( t , n ) , c c ) , (17) \theta \left( t,n \right) =GRN_{\theta}\left( \tilde{\phi}\left( t,n \right) ,c_c \right) ,\tag{17} θ(t,n)=GRNθ(ϕ~(t,n),cc),(17)

where the weights of G R N ϕ GRN_\phi GRNϕ are shared across the entire layer, and c e is a context vector from a static covariate encoder.

由于静态协变量通常对时间动态(例如疾病风险的遗传信息)有重大影响,我们引入了静态富集层,该层使用静态元数据增强时间特征。对于给定的位置指数n,静态富集的形式为:

θ

(

t

,

n

)

=

G

R

N

θ

(

ϕ

~

(

t

,

n

)

,

c

c

)

,

(18)

\theta \left( t,n \right) =GRN_{\theta}\left( \tilde{\phi}\left( t,n \right) ,c_c \right), \tag{18}

θ(t,n)=GRNθ(ϕ~(t,n),cc),(18)

其中 G R N ϕ GRN_\phi GRNϕ的权重在整个层中共享, c e c_e ce是来自静态协变量编码器的上下文向量。

4.5.3. Temporal self-attention layer

Following static enrichment, we next apply selfattention. All static-enriched temporal features are first grouped into a single matrix – i.e.

Θ ( t ) = [ θ ( t , − k ) , . . . , θ ( t , τ ) ] T \varTheta \left( t \right) =\left[ \theta \left( t,-k \right) ,...,\theta \left( t,\tau \right) \right] ^T Θ(t)=[θ(t,−k),...,θ(t,τ)]T – and interpretable multi-head attention (see Section 4.4) is applied at each forecast time (with N = τ m a x + k + 1 N = τ_{max} + k + 1 N=τmax+k+1):

B ( t ) = I n t e r p r e t a b l e M u t i H e a d ( Θ ( t ) , Θ ( t ) , Θ ( t ) ) , (19) B\left( t \right) =InterpretableMutiHead\left( \varTheta \left( t \right) ,\varTheta \left( t \right) ,\varTheta \left( t \right) \right) , \tag{19} B(t)=InterpretableMutiHead(Θ(t),Θ(t),Θ(t)),(19)

to yield B ( t ) = [ β ( t , − k ) , . . . , β ( t , τ max ) ] . d V = d a t t n = d m o d e l / m H B\left( t \right) =\left[ \beta \left( t,-k \right) ,...,\beta \left( t,\tau _{\max} \right) \right]. d_V=d_{attn}={d_{model}}/{m_H} B(t)=[β(t,−k),...,β(t,τmax)].dV=dattn=dmodel/mH are chosen, where m H m_H mH is the number of heads.

Decoder masking (Li et al, 2019; Vaswani et al, 2017) is applied to the multi-head attention layer to ensure that each temporal dimension can only attend to features preceding it. Besides preserving causal information flow via masking, the self-attention layer allows TFT to pick up long-range dependencies that may be challenging for RNN-based architectures to learn. Following the selfattention layer, an additional gating layer is also applied to facilitate training: δ ( t , n ) = L a y e r N o r m ( θ ( t , n ) + G L U δ ( β ( t , n ) ) ) , (20) δ(t, n) = LayerNorm(θ(t, n) + GLU_δ(β(t, n))),\tag{20} δ(t,n)=LayerNorm(θ(t,n)+GLUδ(β(t,n))),(20)

在静态充实之后,我们接下来应用自我注意。所有静态丰富的时间特征首先被分组到一个单一矩阵-即

Θ

(

t

)

=

[

θ

(

t

,

−

k

)

,

.

.

.

,

θ

(

t

,

τ

)

]

T

\varTheta \left( t \right) =\left[ \theta \left( t,-k \right) ,...,\theta \left( t,\tau \right) \right] ^T

Θ(t)=[θ(t,−k),...,θ(t,τ)]T-并且可解释的多头注意力(见第4.4节)应用于每个预测时间

N

=

τ

m

a

x

+

k

+

1

N = τ_{max} + k + 1

N=τmax+k+1:

B

(

t

)

=

I

n

t

e

r

p

r

e

t

a

b

l

e

M

u

t

i

H

e

a

d

(

Θ

(

t

)

,

Θ

(

t

)

,

Θ

(

t

)

)

,

(19)

B\left( t \right) =InterpretableMutiHead\left( \varTheta \left( t \right) ,\varTheta \left( t \right) ,\varTheta \left( t \right) \right) , \tag{19}

B(t)=InterpretableMutiHead(Θ(t),Θ(t),Θ(t)),(19)

为了产出

B

(

t

)

=

[

β

(

t

,

−

k

)

,

.

.

.

,

β

(

t

,

τ

max

)

]

.

d

V

=

d

a

t

t

n

=

d

m

o

d

e

l

/

m

H

B\left( t \right) =\left[ \beta \left( t,-k \right) ,...,\beta \left( t,\tau _{\max} \right) \right]. d_V=d_{attn}={d_{model}}/{m_H}

B(t)=[β(t,−k),...,β(t,τmax)].dV=dattn=dmodel/mH被选择,其中

m

H

{m_H}

mH为正面数。

解码器掩蔽(Li等人,2019;Vaswani et al, 2017)应用于多头注意层,以确保每个时间维度只能关注它之前的特征。除了通过掩蔽保持因果信息流外,自注意层还允许TFT拾取长期依赖关系,这对于基于rnn的架构来说可能是一个挑战。在自注意层之后,还应用了一个附加的门控层来促进训练:

δ

(

t

,

n

)

=

L

a

y

e

r

N

o

r

m

(

θ

(

t

,

n

)

+

G

L

U

δ

(

β

(

t

,

n

)

)

)

,

(20)

δ(t, n) = LayerNorm(θ(t, n) + GLU_δ(β(t, n))),\tag{20}

δ(t,n)=LayerNorm(θ(t,n)+GLUδ(β(t,n))),(20)

4.5.4. Position-wise feed-forward layer

We apply additional non-linear processing to the outputs of the self-attention layer. Similar to the static enrichment layer, this makes use of GRNs:

ψ ( t , n ) = G R N ψ ( δ ( t , n ) ) , (21) ψ(t, n) = GRN_ψ (δ(t, n)) , \tag{21} ψ(t,n)=GRNψ(δ(t,n)),(21) where the weights of GRNψ are shared across the entire layer. As per Fig. 2, we also apply a gated residual connection which skips over the entire transformer block, providing a direct path to the sequence-to-sequence layer – yielding a simpler model if additional complexity is not required, as shown below:

ψ ~ ( t , n ) = l a y e r N o r m ( ϕ ~ ( t , n ) + G L U ψ ~ ( ψ ( t , n ) ) ) , (22) \tilde{\psi}\left( t,n \right) =layerNorm\left( \tilde{\phi}\left( t,n \right) +GLU_{\tilde{\psi}}\left( \psi \left( t,n \right) \right) \right) ,\tag{22} ψ~(t,n)=layerNorm(ϕ~(t,n)+GLUψ~(ψ(t,n))),(22)

我们对自注意层的输出进行额外的非线性处理。与静态富集层类似,它利用GRNs:

ψ

(

t

,

n

)

=

G

R

N

ψ

(

δ

(

t

,

n

)

)

,

(21)

ψ(t, n) = GRN_ψ (δ(t, n)),\tag{21}

ψ(t,n)=GRNψ(δ(t,n)),(21)

其中GRNψ的权重在整个层中共享。如图2所示,我们还应用了一个门限值剩余连接,跳过整个变压器块,提供了一个到序列到序列层的直接路径-如果不需要额外的复杂性,则产生一个更简单的模型,如下所示:

ψ

~

(

t

,

n

)

=

l

a

y

e

r

N

o

r

m

(

ϕ

~

(

t

,

n

)

+

G

L

U

ψ

~

(

ψ

(

t

,

n

)

)

)

,

(22)

\tilde{\psi}\left( t,n \right) =layerNorm\left( \tilde{\phi}\left( t,n \right) +GLU_{\tilde{\psi}}\left( \psi \left( t,n \right) \right) \right) ,\tag{22}

ψ~(t,n)=layerNorm(ϕ~(t,n)+GLUψ~(ψ(t,n))),(22)

4.6. Quantile outputs

In line with previous work (Wen et al, 2017), TFT also generates prediction intervals on top of point forecasts.

This is achieved by the simultaneous prediction of various percentiles (e.g. 10th, 50th and 90th) at each time step.

Quantile forecasts are generated using a linear transformation of the output from the temporal fusion decoder:

y

^

(

q

,

t

,

τ

)

=

W

q

ψ

~

(

t

,

τ

)

+

b

q

,

(23)

\hat{y}\left( q,t,\tau \right) =W_q\tilde{\psi}\left( t,\tau \right) +b_q ,\tag{23}

y^(q,t,τ)=Wqψ~(t,τ)+bq,(23) where

W

q

∈

R

1

×

d

W_q ∈\mathbb{R}^{1×d}

Wq∈R1×d,

b

q

∈

R

b_q ∈\mathbb{R}

bq∈R are linear coefficients for the specified quantile q. We note that forecasts are only generated for horizons in the future – i.e.

τ

∈

{

1

,

.

.

.

,

τ

m

a

x

}

τ ∈ \{1, . . . , τ_{max}\}

τ∈{1,...,τmax}.

与之前的工作(Wen et al, 2017)一致,TFT还在点预测的基础上生成预测区间。

这是通过在每个时间步同时预测各种百分位数(例如第10、第50和第90)来实现的。分位数预测是使用时间融合解码器输出的线性变换生成的:

y

^

(

q

,

t

,

τ

)

=

W

q

ψ

~

(

t

,

τ

)

+

b

q

,

(23)

\hat{y}\left( q,t,\tau \right) =W_q\tilde{\psi}\left( t,\tau \right) +b_q ,\tag{23}

y^(q,t,τ)=Wqψ~(t,τ)+bq,(23)其中

W

q

∈

R

1

×

d

W_q ∈\mathbb{R}^{1×d}

Wq∈R1×d,

b

q

∈

R

b_q ∈\mathbb{R}

bq∈R 是指定分位数q的线性系数。我们注意到预测仅为未来的水平层生成-即

τ

∈

{

1

,

.

.

.

,

τ

m

a

x

}

τ ∈ \{1, . . . , τ_{max}\}

τ∈{1,...,τmax}。

5. Loss functions

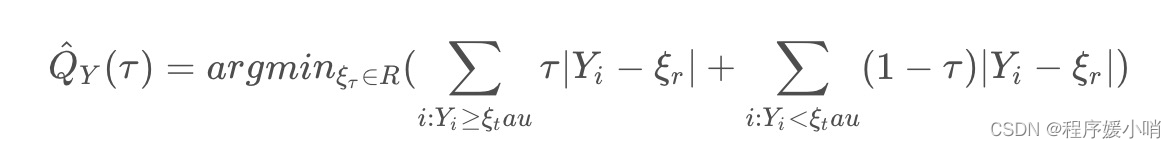

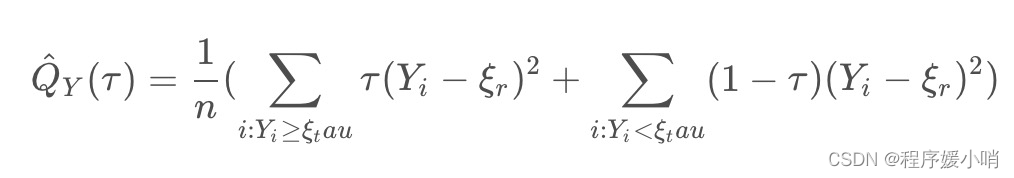

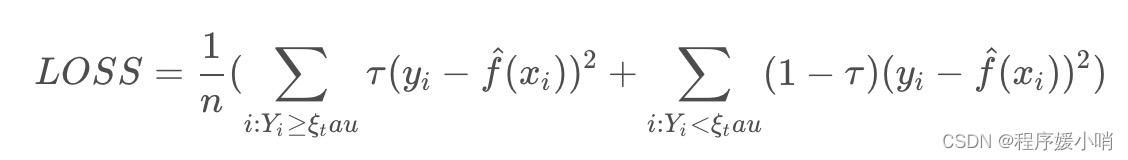

TFT is trained by jointly minimizing the quantile loss (Wen et al, 2017), summed across all quantile outputs:

L ( Ω , W ) = ∑ y t ∈ Ω ∑ q ∈ Q ∑ τ = 1 τ max Q L ( y t , y ^ ( q , t − τ , τ ) , q ) M τ max (24) \mathcal{L}\left( \varOmega ,W \right) =\sum_{y_t\in \varOmega}^{}{\sum_{q\in \mathcal{Q}}^{}{\sum_{\tau =1}^{\tau _{\max}}{\frac{QL\left( y_t,\hat{y}\left( q,t-\tau ,\tau \right) ,q \right)}{M\tau _{\max}}}}} \tag{24} L(Ω,W)=yt∈Ω∑q∈Q∑τ=1∑τmaxMτmaxQL(yt,y^(q,t−τ,τ),q)(24)

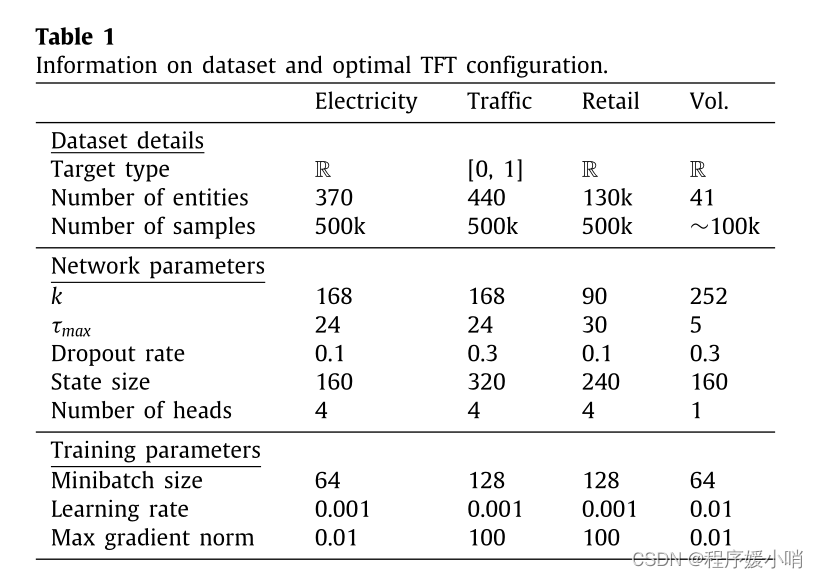

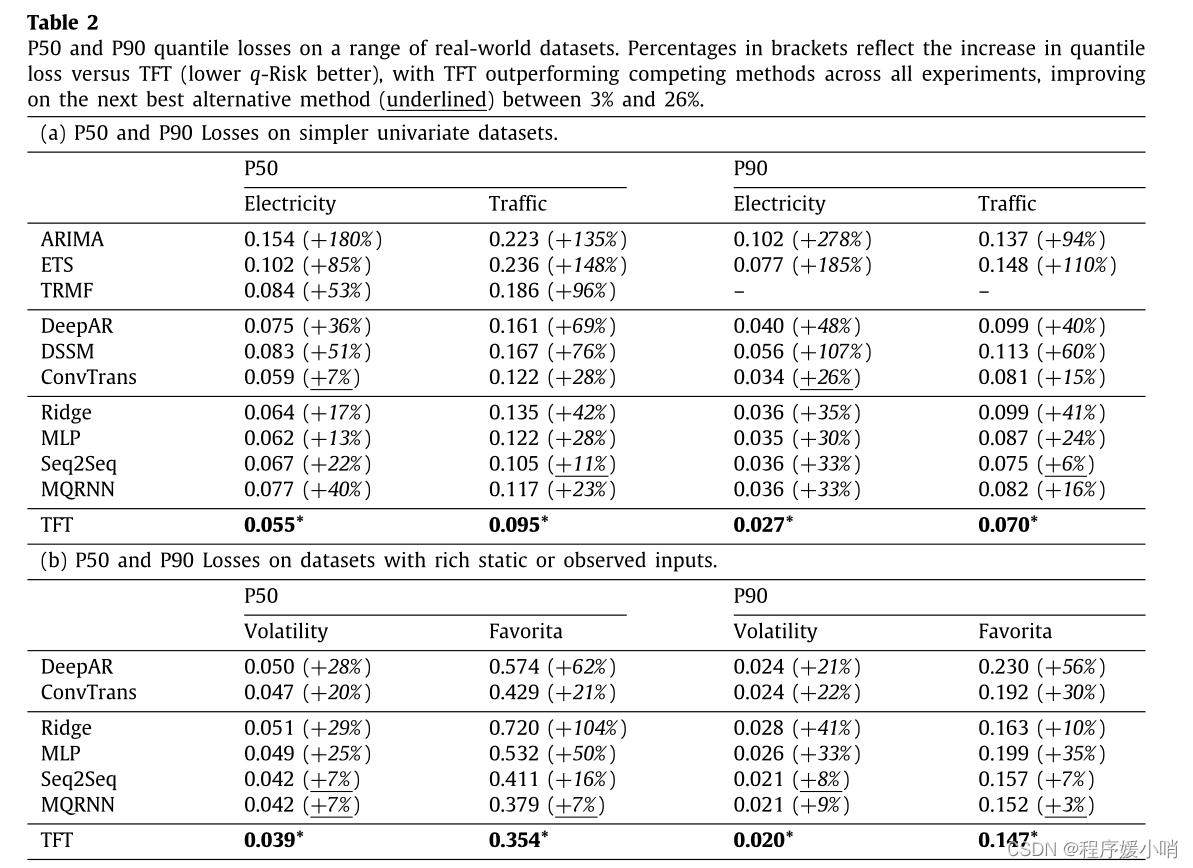

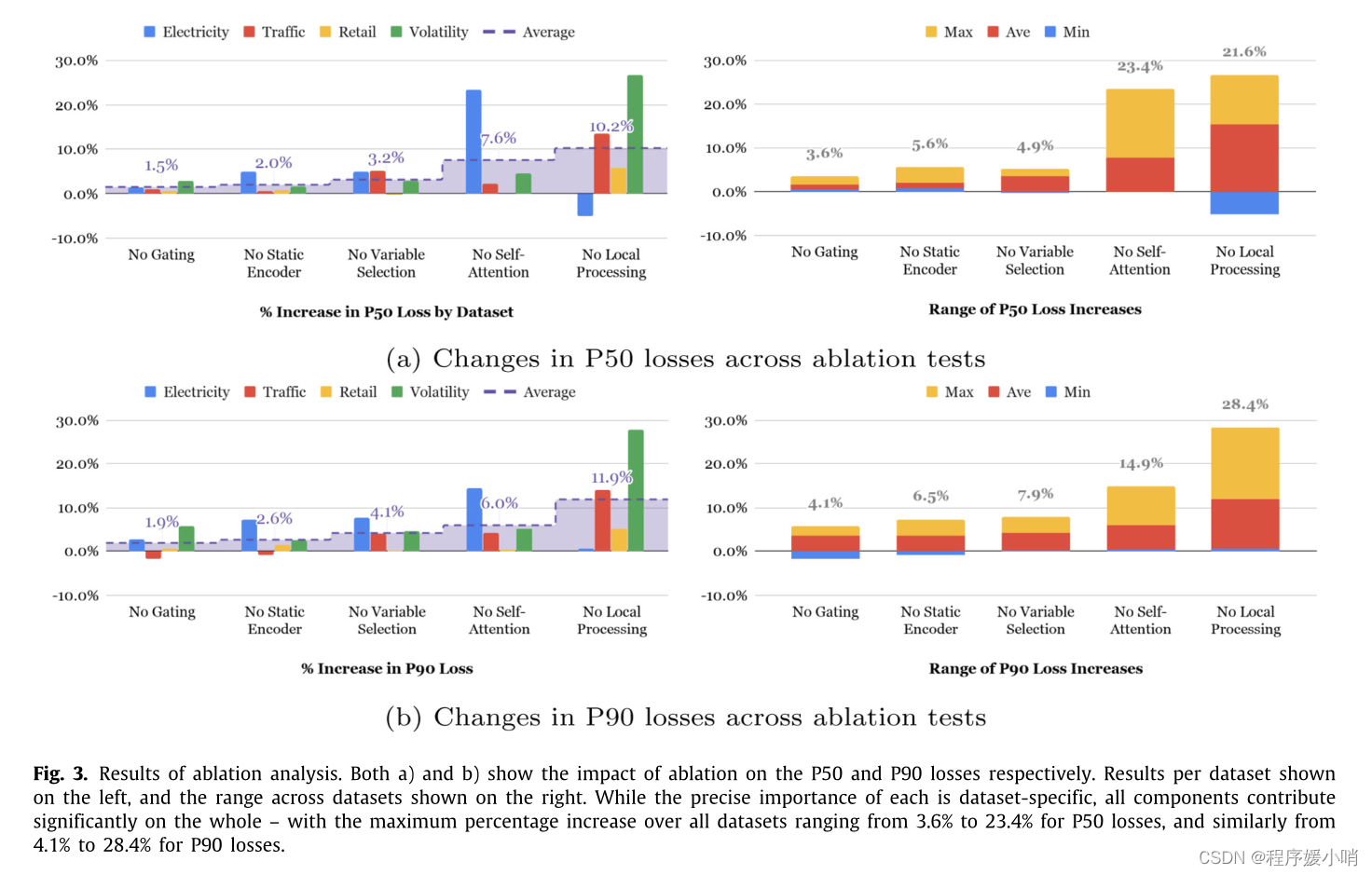

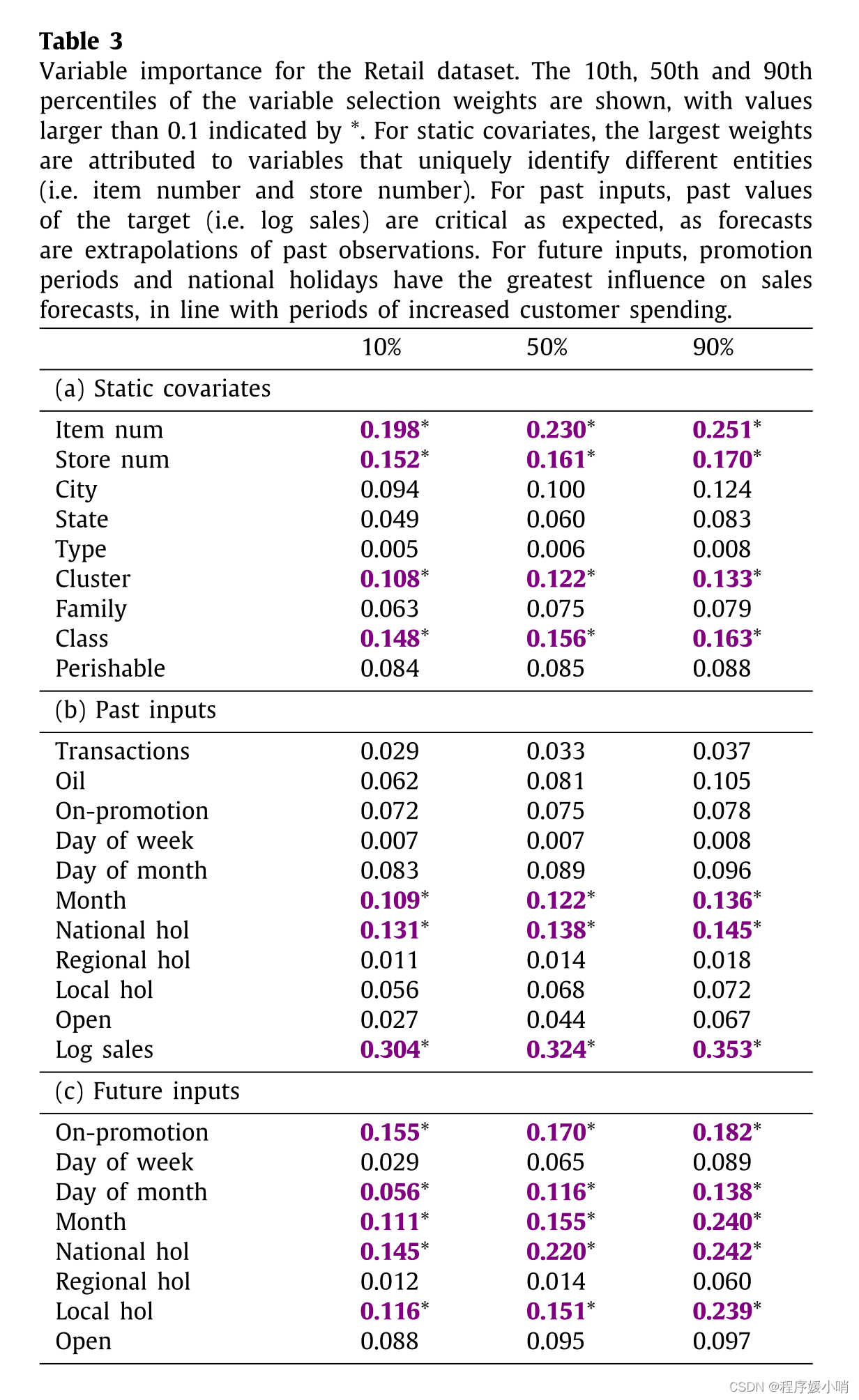

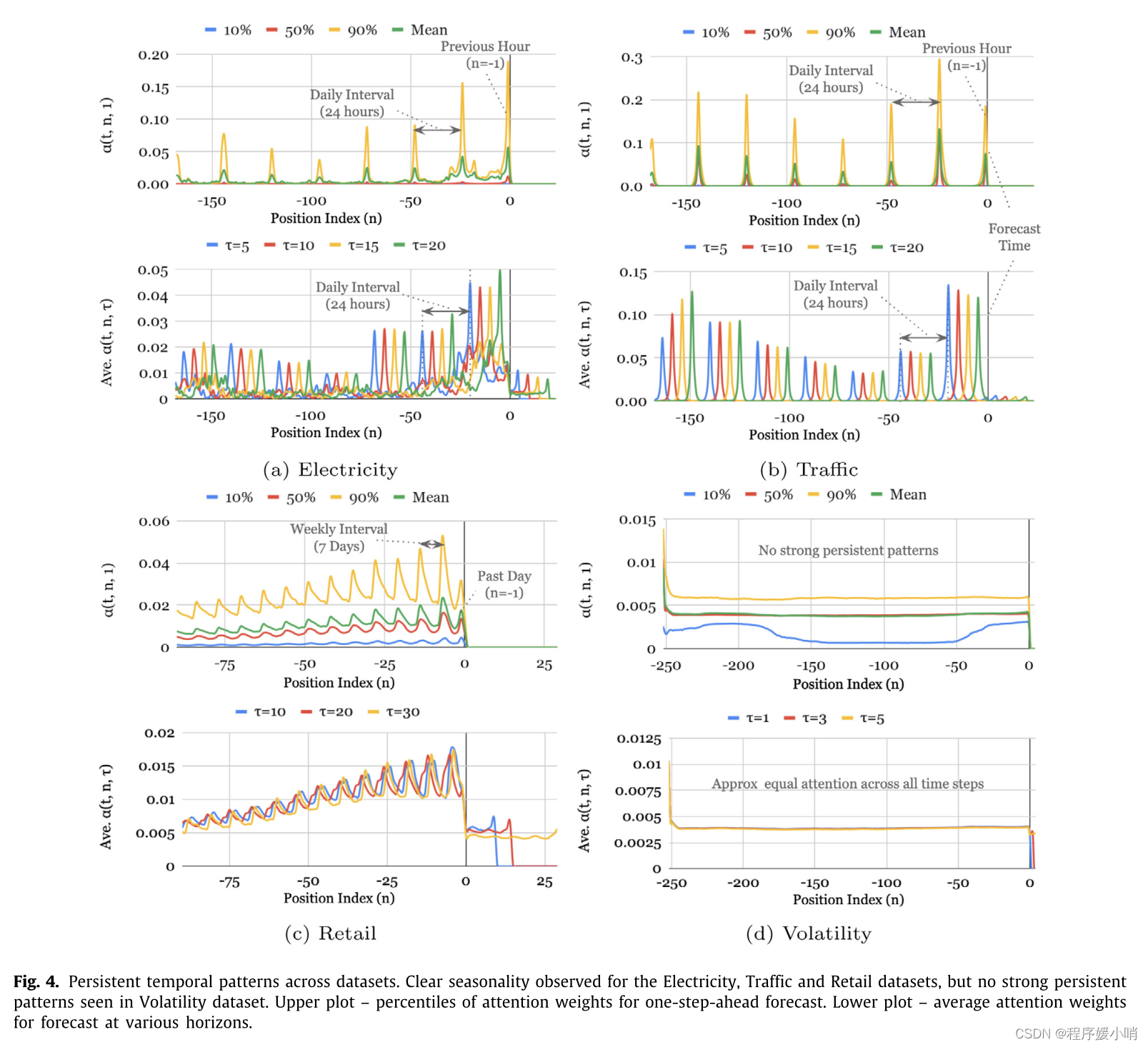

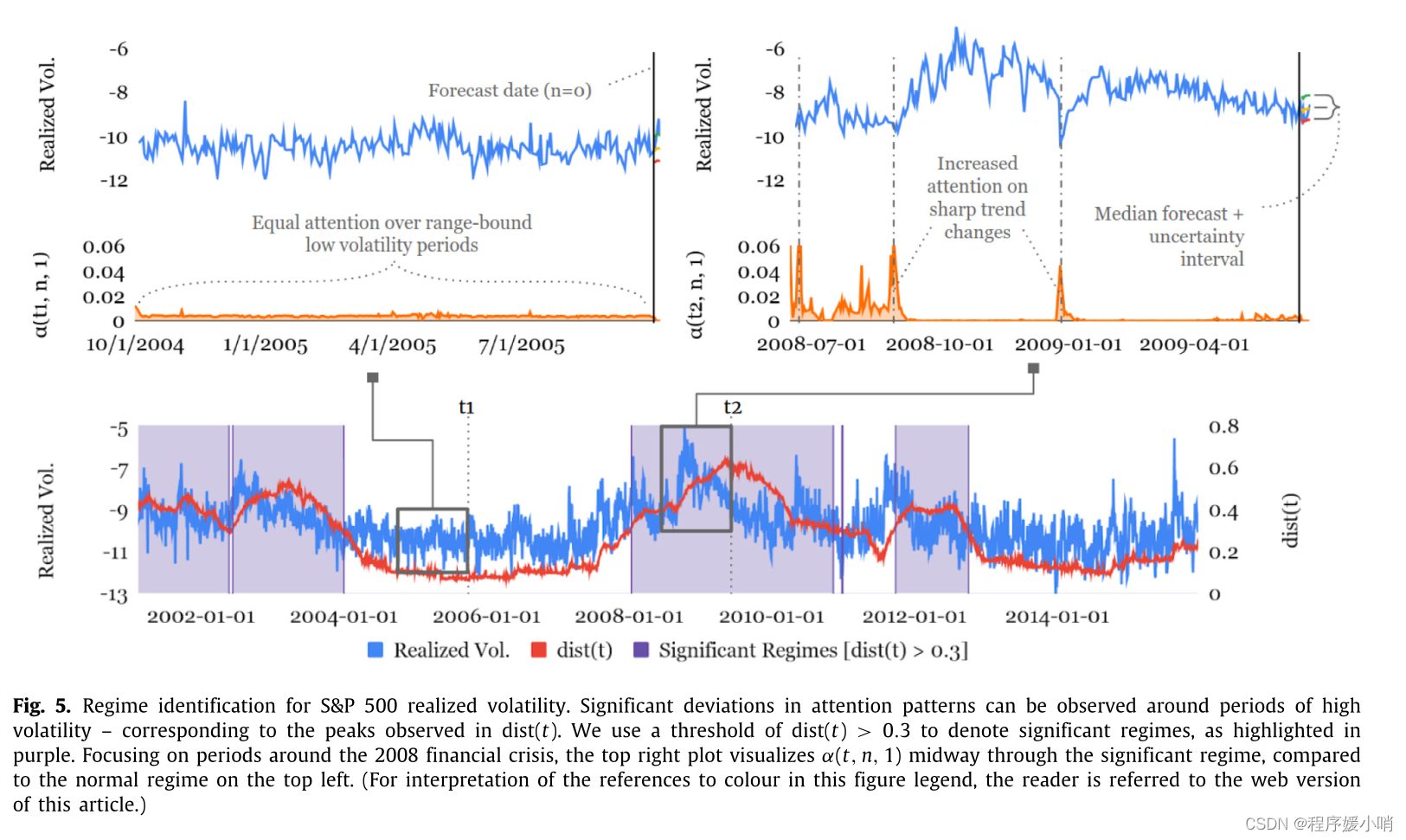

Q L ( y , y ^ , q ) = q ( y − y ^ ) + + ( 1 − q ) ( y ^ − y ) + (25) QL\left( y,\hat{y},q \right) =q\left( y-\hat{y} \right) _++\left( 1-q \right) \left( \hat{y}-y \right) _+\tag{25} QL(y,y^,q)=q(y−y^)++(1−q)(y^−y)+(25)