1. Linear regression

import torch

# Training data: three points on the line y = 2x
x_data = torch.Tensor([[1.0], [2.0], [3.0]])
y_data = torch.Tensor([[2.0], [4.0], [6.0]])


class MyLinear(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(1, 1)  # one input feature, one output

    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred


model = MyLinear()
criterion = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):
    y_pred = model(x_data)  # forward pass
    loss = criterion(y_pred, y_data)
    print('epoch==' + str(epoch), 'loss==' + str(loss.item()))

    optimizer.zero_grad()  # clear gradients left over from the previous step
    loss.backward()  # backpropagate
    optimizer.step()  # update the parameters

print('w=', model.linear.weight.item())
print('b=', model.linear.bias.item())

# Predict for an unseen input
x_test = torch.tensor([[4.0]])
y_test = model(x_test)
print("y_test=", y_test.data)

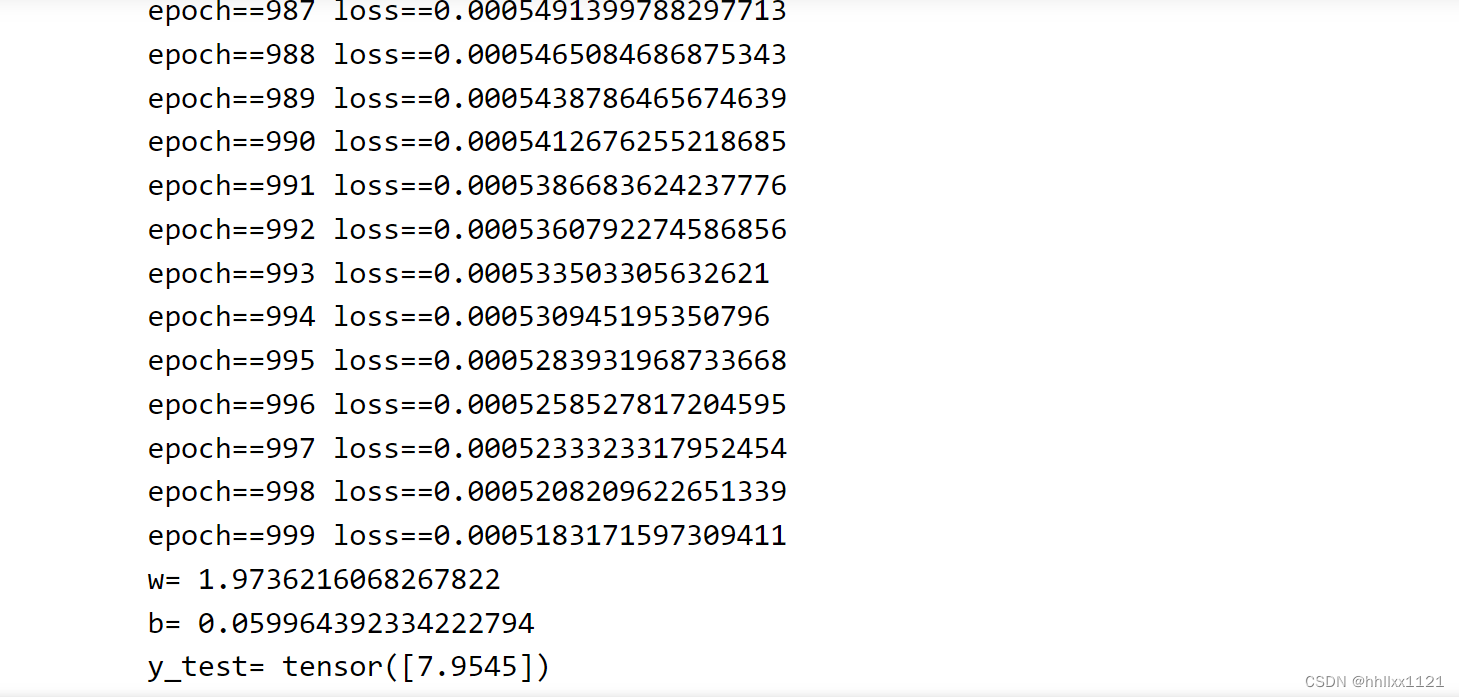

Output
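To make the three calls in the training loop less of a black box, here is a rough pure-Python sketch of the same gradient descent on the same three points. The function name `fit_line` and the zero initialisation are assumptions for illustration (`torch.nn.Linear` starts from random weights), so the numbers will not match the PyTorch run exactly.

```python
# Manual gradient descent for y = w * x + b on the same three points.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]


def fit_line(xs, ys, lr=0.01, epochs=1000):
    """Hypothetical helper: fit w and b by minimising the mean squared error."""
    w, b = 0.0, 0.0  # assumed zero start; nn.Linear initialises randomly
    n = len(xs)
    for _ in range(epochs):
        # forward pass: prediction error for every sample
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # gradients of the mean squared error with respect to w and b
        grad_w = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # parameter update, the role optimizer.step() plays in the loop above
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = fit_line(xs, ys)
print('w=', w, 'b=', b)
```

With this learning rate and epoch count, w should end up near 2 and b near 0, though b converges slowly and may still be visibly off zero.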

2. Logistic regression

import torch

x_data = torch.Tensor([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]])
y_data = torch.Tensor([[0], [1], [0], [1], [0], [1]])  # binary labels


class LogisticRegressionModel(torch.nn.Module):
    def __init__(self):
        super(LogisticRegressionModel, self).__init__()
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        # sigmoid squashes the linear output into a probability in (0, 1)
        y_pred = torch.sigmoid(self.linear(x))
        return y_pred


model = LogisticRegressionModel()
criterion = torch.nn.BCELoss()  # binary cross-entropy
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(f'epoch=={epoch}', loss.item())

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

x_test = torch.Tensor([[8.0]])
y_test = model(x_test)
print("y_test==", y_test.data)

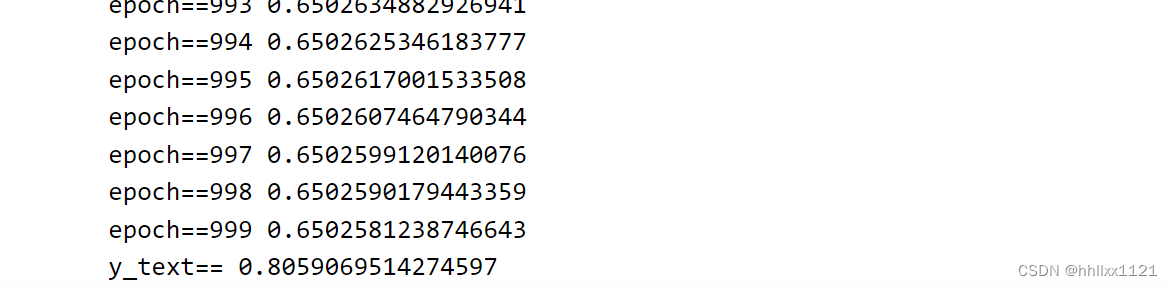

Output
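As a small aside, the two pieces that set this model apart from linear regression, the sigmoid and the binary cross-entropy, can be written out by hand. This is only a pure-Python sketch: the helper names `sigmoid` and `bce_loss` and the sample values are made up for illustration, and `torch.nn.BCELoss` additionally clamps its log terms for numerical stability.

```python
import math


def sigmoid(z):
    """Map a real number to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def bce_loss(probs, targets):
    """Mean binary cross-entropy: -[t*log(p) + (1-t)*log(1-p)] averaged over samples."""
    total = 0.0
    for p, t in zip(probs, targets):
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(probs)


# Hypothetical linear outputs (logits) and their labels
logits = [-2.0, 0.0, 2.0]
targets = [0.0, 1.0, 1.0]

probs = [sigmoid(z) for z in logits]
loss = bce_loss(probs, targets)
print(probs, loss)
```

The loss shrinks as each predicted probability moves toward its label, which is what the training loop above is pushing for.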

3. Softmax multi-class classification

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,))  # MNIST mean and standard deviation
])

# download=False expects the data to already be under root; set it to True on a first run
train_dataset = datasets.MNIST(root='./dataset/mnist/', train=True, download=False, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_dataset = datasets.MNIST(root='./dataset/mnist/', train=False, download=False, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)  # no need to shuffle for evaluation


class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.l1 = torch.nn.Linear(784, 512)
        self.l2 = torch.nn.Linear(512, 256)
        self.l3 = torch.nn.Linear(256, 128)
        self.l4 = torch.nn.Linear(128, 64)
        self.l5 = torch.nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(-1, 784)  # flatten each 28x28 image into a 784-vector
        x = F.relu(self.l1(x))
        x = F.relu(self.l2(x))
        x = F.relu(self.l3(x))
        x = F.relu(self.l4(x))
        return self.l5(x)  # raw logits; CrossEntropyLoss applies log-softmax itself


model = Net()
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:  # report the average loss every 300 batches
            print('[%d,%5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients needed for evaluation
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)  # index of the largest logit
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy on test set:%d %%' % (100 * correct / total))


if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()

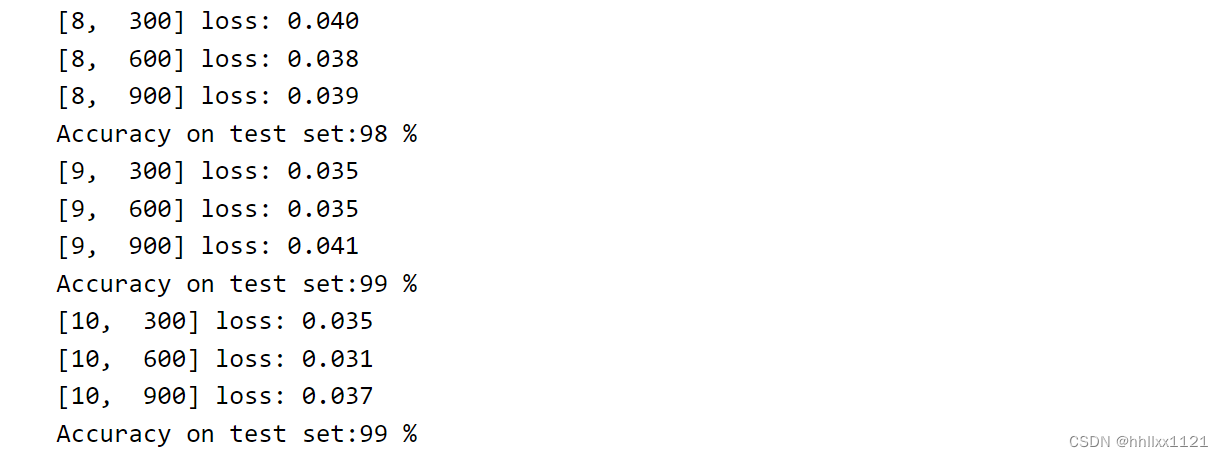

Output
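The last layer above returns raw scores because `torch.nn.CrossEntropyLoss` combines log-softmax and negative log-likelihood internally. The pure-Python sketch below spells out that computation for a single sample; the function names and the example logits are invented for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Negative log-probability assigned to the correct class index."""
    return -math.log(softmax(logits)[target])


# Hypothetical logits for a 3-class problem; the true class is index 0
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)
loss = cross_entropy(logits, 0)
print(probs, loss)
```

Applying a softmax in `forward` and then passing the result to `CrossEntropyLoss` would apply softmax twice, which is why the network stops at the linear layer.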

4. Convolutional neural network

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,))  # MNIST mean and standard deviation
])

train_dataset = datasets.MNIST(root='./dataset/mnist/', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
# train=False selects the held-out test split (the original loaded the training split here)
test_dataset = datasets.MNIST(root='./dataset/mnist/', train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)


class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)  # in_channels, out_channels
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))
        x = F.relu(self.pooling(self.conv2(x)))
        x = x.view(batch_size, -1)  # flatten to (batch_size, 320)
        x = self.fc(x)  # no activation here; CrossEntropyLoss expects raw logits
        return x


model = Net()
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
model = model.to(device)
print(device)

criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        inputs, target = inputs.to(device), target.to(device)  # same device as the model
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:  # report the average loss every 300 batches
            print('[%d,%5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy on test set:%d %%' % (100 * correct / total))


if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()

Output
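The 320 input features of the fully connected layer follow from the layer sizes, and a few lines of arithmetic can confirm it. The sketch below assumes the standard output-size formula for an unpadded, stride-1 convolution and for non-overlapping max pooling; the helper names `conv_out` and `pool_out` are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output side length of non-overlapping max pooling (stride == kernel)."""
    return size // kernel


side = 28                               # MNIST images are 28x28
side = pool_out(conv_out(side, 5), 2)   # conv1 then pooling: 28 -> 24 -> 12
side = pool_out(conv_out(side, 5), 2)   # conv2 then pooling: 12 -> 8 -> 4

out_channels = 20                       # channels produced by conv2
flattened = out_channels * side * side  # features fed into the final linear layer
print(side, flattened)
```

If the kernel sizes or channel counts change, rerunning this arithmetic gives the new value for the first argument of the final `Linear` layer.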

5. Recurrent neural network

import torch

input_size = 4
hidden_size = 4
batch_size = 1
num_layers = 1
seq_len = 5

idx2char = ['e', 'h', 'l', 'o']
x_data = [1, 0, 2, 2, 3]  # hello
y_data = [3, 1, 2, 3, 2]  # ohlol

one_hot_lookup = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1]
]
x_one_hot = [one_hot_lookup[x] for x in x_data]

# torch.nn.RNN expects input of shape (seq_len, batch_size, input_size)
inputs = torch.Tensor(x_one_hot).view(-1, batch_size, input_size)
labels = torch.LongTensor(y_data)


class Model(torch.nn.Module):
    def __init__(self, input_size, hidden_size, batch_size, num_layers=1):
        super().__init__()
        self.num_layers = num_layers
        self.input_size = input_size
        self.batch_size = batch_size
        self.hidden_size = hidden_size
        self.rnn = torch.nn.RNN(input_size=self.input_size, hidden_size=self.hidden_size, num_layers=self.num_layers)

    def forward(self, input):
        # initial hidden state: (num_layers, batch_size, hidden_size)
        hidden = torch.zeros(self.num_layers, self.batch_size, self.hidden_size)
        out, _ = self.rnn(input, hidden)
        # reshape to (seq_len * batch_size, hidden_size) for CrossEntropyLoss
        return out.view(-1, self.hidden_size)


net = Model(input_size, hidden_size, batch_size, num_layers)
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

for epoch in range(15):
    optimizer.zero_grad()
    outputs = net(inputs)
    loss = criterion(outputs, labels)
    loss.backward()
    optimizer.step()

    # decode the most likely character at each time step
    _, idx = outputs.max(dim=1)
    idx = idx.data.numpy()
    print("Predicted:", ''.join([idx2char[x] for x in idx]), end='')
    print(', Epoch [%d/15] loss = %.3f' % (epoch + 1, loss.item()))

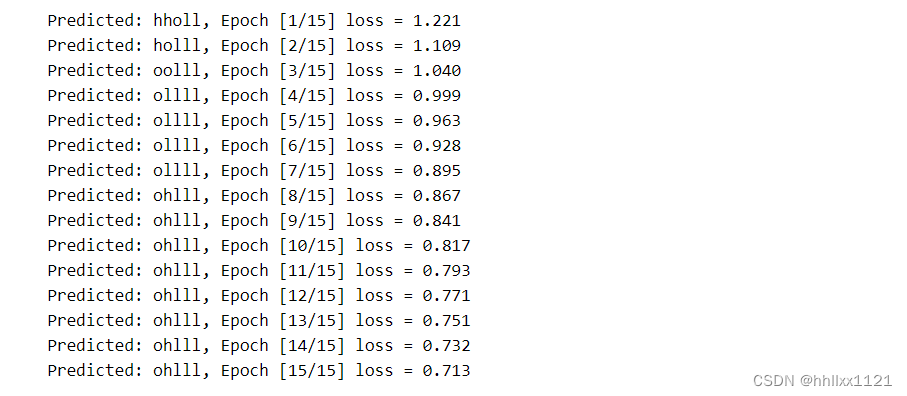

Output
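For a feel of what `torch.nn.RNN` does at each time step, the sketch below unrolls the default tanh recurrence, h_t = tanh(W_ih x_t + b_ih + W_hh h_(t-1) + b_hh), in pure Python over the same "hello" indices. The weights are random placeholders and the helper names are invented, so this shows the data flow only, not the trained model.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def rnn_step(x, h, w_ih, w_hh, b_ih, b_hh):
    """One tanh RNN step: combine the current input with the previous hidden state."""
    zx = matvec(w_ih, x)
    zh = matvec(w_hh, h)
    return [math.tanh(a + b + c + d) for a, b, c, d in zip(zx, zh, b_ih, b_hh)]


random.seed(0)
size = 4  # input_size == hidden_size == 4, as in the model above


def rand_matrix():
    # placeholder weights; a trained model would have learned values here
    return [[random.uniform(-0.5, 0.5) for _ in range(size)] for _ in range(size)]


w_ih, w_hh = rand_matrix(), rand_matrix()
b_ih, b_hh = [0.0] * size, [0.0] * size

one_hot = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
x_data = [1, 0, 2, 2, 3]  # "hello" as indices into ['e', 'h', 'l', 'o']

h = [0.0] * size  # initial hidden state of zeros
outputs = []
for idx in x_data:
    h = rnn_step(one_hot[idx], h, w_ih, w_hh, b_ih, b_hh)
    outputs.append(h)  # one hidden vector per time step

print(len(outputs), len(outputs[0]))
```

Because `hidden_size` equals the number of characters, the model above can feed each hidden vector straight into `CrossEntropyLoss` as class scores without an extra linear layer.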

Additional notes

- Check whether a GPU is available

- Move the model to the GPU: model.to(device)

- Move the data to the GPU
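Put together, the three GPU steps listed above might look like the following minimal sketch, which mirrors the device handling in the convolutional network section. The tiny `Linear` model and the input tensor are stand-ins for illustration only, and the code falls back to the CPU when no GPU is present.

```python
import torch

# 1. Check whether a GPU is available; fall back to the CPU otherwise.
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# 2. Move the model to that device (a stand-in model for illustration).
model = torch.nn.Linear(1, 1).to(device)

# 3. Move each batch of data to the same device before the forward pass.
inputs = torch.tensor([[1.0], [2.0]]).to(device)
outputs = model(inputs)

print(device, outputs.device)
```

The model and every tensor passed to it have to live on the same device, otherwise the forward pass raises a runtime error.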
