For other resources and tools related to this code, see: link

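### Data

The data for this experiment are 39-dimensional MFCC features extracted from English recordings of 11 characters: the digits 0-9 (where 0 is labeled Z, for Zero) and O. Specifically:

* Training data: 330 utterances, 11 characters, 30 utterances per character, located in the train directory.

* Test data: 110 utterances, 11 characters, 10 utterances per character, located in the test directory.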

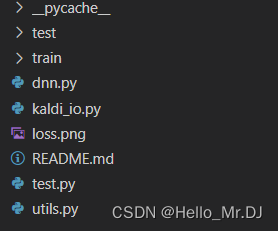

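The train and test directories each contain three files:

* text: the annotation file; in each line the first column is the utterance id and the second column is the label.

* feats.scp: the feature index file; in each line the first column is the utterance id and the second column is the index entry that locates the features.

* feats.ark: the file that actually stores the features; it is a binary file.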
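The two-column layout of `text` can be read with a few lines of Python. This is only a minimal sketch, assuming whitespace-separated columns; the function name `parse_text_lines` and the sample utterance ids are hypothetical. In dnn.py the real files are loaded by `read_feats_and_targets` from `utils`, whose implementation is not shown here.

```python
def parse_text_lines(lines):
    """Map utterance id -> label for lines of the form '<utt_id> <label>'."""
    utt2target = {}
    for line in lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        utt_id, label = parts
        utt2target[utt_id] = label
    return utt2target


# Hypothetical sample lines; the real ids come from train/text or test/text.
sample = ["utt_0001 Z", "utt_0002 7", "", "utt_0003 O"]
utt2target = parse_text_lines(sample)
print(utt2target)
```

The same splitting logic would apply to feats.scp, where the second column is an index entry rather than a label.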

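### Experiment

This experiment implements a simple DNN framework and uses the DNN to train on and recognize the 11 digit classes.

The training and test data described above are used to train and test the DNN, respectively.

Read the code in dnn.py, understand the DNN framework, and complete the forward and backward passes of the ReLU activation function and the FullyConnect fully connected layer.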
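Before filling in the two layers, it can help to check the gradient formulas numerically. The sketch below is not the code from dnn.py: it assumes a plain `[B, N]` layout throughout (no transposes between layers), sums gradients over the batch instead of averaging them, and uses a made-up toy loss. The function names are hypothetical. It compares the analytic gradient of the weights with central finite differences.

```python
import numpy as np


def fc_forward(x, w, b):
    """x: [B, in_dim], w: [out_dim, in_dim], b: [out_dim] -> [B, out_dim]."""
    return x @ w.T + b


def fc_backward(x, w, d_out):
    """Gradients of a scalar loss, given d_out = dLoss/dOutput of shape [B, out_dim]."""
    dw = d_out.T @ x        # [out_dim, in_dim], summed over the batch
    db = d_out.sum(axis=0)  # [out_dim]
    dx = d_out @ w          # [B, in_dim]
    return dx, dw, db


def relu_forward(z):
    return np.maximum(0.0, z)


def relu_backward(z, d_out):
    # The gradient passes through only where the input was positive.
    return d_out * (z > 0)


def toy_loss(x, w, b):
    # Toy scalar loss used only for the check: 0.5 * sum(relu(fc(x))^2)
    return 0.5 * np.sum(relu_forward(fc_forward(x, w, b)) ** 2)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3))
w = rng.standard_normal((2, 3))
b = rng.standard_normal(2)

# Analytic gradients via the backward passes.
z = fc_forward(x, w, b)
a = relu_forward(z)
d_z = relu_backward(z, a)  # dLoss/da equals a for the toy loss
dx, dw, db = fc_backward(x, w, d_z)

# Numerical gradient of the loss with respect to each weight.
eps = 1e-6
num_dw = np.zeros_like(w)
for idx in np.ndindex(*w.shape):
    w_plus, w_minus = w.copy(), w.copy()
    w_plus[idx] += eps
    w_minus[idx] -= eps
    num_dw[idx] = (toy_loss(x, w_plus, b) - toy_loss(x, w_minus, b)) / (2 * eps)

max_err = float(np.max(np.abs(num_dw - dw)))
print("max |numeric - analytic| for dw:", max_err)
```

A small `max_err` suggests the backward formulas match the forward pass; the same loop can be repeated for `b` and `x`.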

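You can refer to the forward and backward implementations of Softmax. The places where code is to be inserted in dnn.py are marked with `# BEGIN_LAB` and `# END_LAB`. The file is as follows:

dnn.py: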

    import math
    import numpy as np
    import kaldi_io
    from utils import *
    import matplotlib
    import matplotlib.pyplot as plt

    # Train for 100 epochs
    epochs = 100
    # Feed 100 samples (frames) per mini-batch
    batch = 100

    targets_list = ['Z', '1', '2', '3', '4', '5', '6', '7', '8', '9', 'O']
    targets_mapping = {}
    for i, x in enumerate(targets_list):
        targets_mapping[x] = i

    def plot_spectrogram(spec, file_name):
        fig = plt.figure(figsize=(20, 10))
        plt.plot(spec)
        plt.xlabel('epochs')
        plt.ylabel('loss')
        plt.savefig(file_name)

    class Layer:
        def forward(self, input):
            ''' Forward function by input
            Args:
                input: input, B * N matrix, B for batch size
            Returns:
                output when applied this layer
            '''
            raise NotImplementedError

        def backward(self, input, output, d_output):
            ''' Compute gradient of this layer's input by (input, output, d_output)
                as well as compute the gradient of the parameter of this layer
            Args:
                input: input of this layer
                output: output of this layer
                d_output: accumulated gradient from final output to this
                          layer's output
            Returns:
                accumulated gradient from final output to this layer's input
            '''
            raise NotImplementedError

        def set_learning_rate(self, lr):
            ''' Set learning rate of this layer'''
            self.learning_rate = lr

        def update(self):
            # The Softmax and ReLU layers are not fully connected layers
            # (they have no parameters), so they need no update
            ''' Update this layer's parameters if it has any, otherwise do nothing
            '''

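    class ReLU(Layer):
        def forward(self, input):
            # BEGIN_LAB
            # input arrives as [N, B] from FullyConnect; the output is returned as [B, N]
            mat = np.maximum(0, input)
            return mat.T
            # END_LAB

        def backward(self, input, output, d_output):
            # BEGIN_LAB
            # Pass the gradient through only where the input was positive
            mat = np.array(d_output, copy=True)
            mat[input <= 0] = 0
            return mat.T
            # END_LAB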

    class FullyConnect(Layer):
        # Models a fully connected layer with weight w and bias b
        def __init__(self, in_dim, out_dim):
            self.w = np.random.randn(out_dim, in_dim) * np.sqrt(2.0 / in_dim)
            self.b = np.zeros((out_dim, 1))
            self.dw = np.zeros((out_dim, in_dim))
            self.db = np.zeros((out_dim, 1))

        def forward(self, input):
            # BEGIN_LAB
            # input: [B, in_dim], e.g. [100, 429] for the first layer; output: [out_dim, B]
            mat = np.dot(self.w, input.T) + self.b
            return mat
            # END_LAB

        def backward(self, input, output, d_output):
            batch_size = input.shape[0]
            in_diff = None
            # BEGIN_LAB, compute in_diff/dw/db here
            # d_output: [out_dim, B], input: [B, in_dim]
            self.dw = np.dot(d_output, input) / batch_size
            self.db = np.sum(d_output, axis=1, keepdims=True) / batch_size
            in_diff = np.dot(self.w.T, d_output).T
            # END_LAB
            # Normalize dw/db by batch size
            # Note: dw/db were already divided by batch_size in the lab section
            # above, so the gradients end up divided by batch_size twice
            # (in effect a smaller learning rate).
            self.dw = self.dw / batch_size
            self.db = self.db / batch_size
            return in_diff

        def update(self):
            self.w = self.w - self.learning_rate * self.dw
            self.b = self.b - self.learning_rate * self.db

    class Softmax(Layer):
        def forward(self, input):
            input = input.T
            row_max = input.max(axis=1).reshape(input.shape[0], 1)
            x = input - row_max
            return np.exp(x) / np.sum(np.exp(x), axis=1).reshape(x.shape[0], 1)

        def backward(self, input, output, d_output):
            ''' Directly return the d_output as we show below, the grad is to
                the activation(input) of softmax
            '''
            return d_output

    class DNN:
        def __init__(self, in_dim, out_dim, hidden_dim, num_hidden):
            self.layers = []
            self.layers.append(FullyConnect(in_dim, hidden_dim))
            self.layers.append(ReLU())
            for i in range(num_hidden):
                # Hidden-layer W has shape [hidden_dim, hidden_dim]; each layer is
                # followed by a ReLU instance, and the network ends with a Softmax instance
                self.layers.append(FullyConnect(hidden_dim, hidden_dim))
                self.layers.append(ReLU())
            self.layers.append(FullyConnect(hidden_dim, out_dim))
            self.layers.append(Softmax())

        def set_learning_rate(self, lr):
            for layer in self.layers:
                layer.set_learning_rate(lr)

        def forward(self, input):
            self.forward_buf = []
            # [100, 429] for a full training batch
            out = input
            self.forward_buf.append(out)
            for i in range(len(self.layers)):
                # FullyConnect returns [layer nodes, 100];
                # ReLU and Softmax return [100, layer nodes]
                out = self.layers[i].forward(out)
                self.forward_buf.append(out)
            assert (len(self.forward_buf) == len(self.layers) + 1)
            # The final output is the softmax probability matrix, [100, n]
            return out

        def backward(self, grad):
            # The back-propagation here still deserves a closer look
            '''
            Args:
                grad: the grad is to the activation before softmax
            '''
            self.backward_buf = [None] * len(self.layers)
            self.backward_buf[len(self.layers) - 1] = grad
            # Start from the last FullyConnect layer: grad is already the
            # gradient with respect to the Softmax input
            for i in range(len(self.layers) - 2, -1, -1):
                grad = self.layers[i].backward(self.forward_buf[i],
                                               self.forward_buf[i + 1],
                                               self.backward_buf[i + 1].T)
                self.backward_buf[i] = grad

        def update(self):
            for layer in self.layers:
                layer.update()

    def one_hot(labels, total_label):
        output = np.zeros((labels.shape[0], total_label))
        for i in range(labels.shape[0]):
            output[i][labels[i]] = 1.0
        return output

    def train(dnn):
        # utt2feat (330 entries): from feats.scp, split on spaces; key is the wav file name, value is the feature index
        # utt2target (330 entries): from text, split on spaces; key is the wav file name, value is the label
        utt2feat, utt2target = read_feats_and_targets('train/feats.scp',
                                                      'train/text')
        # After processing: inputs is [total frames over all utterances, 429],
        # labels holds the label of each frame
        inputs, labels = build_input(targets_mapping, utt2feat, utt2target)
        num_samples = inputs.shape[0]
        # Shuffle data
        # Randomly permute all frames together with their labels
        permute = np.random.permutation(num_samples)
        inputs = inputs[permute]
        labels = labels[permute]
        num_epochs = epochs
        batch_size = batch
        avg_loss = np.zeros(num_epochs)
        for i in range(num_epochs):
            cur = 0
            while cur < num_samples:
                end = min(cur + batch_size, num_samples)
                # [100, 429] for a full batch
                input = inputs[cur:end]
                # One label per frame, 100 in a full batch
                label = labels[cur:end]
                # Step1: forward
                # out: [100, 11], the softmax posteriors of each frame
                out = dnn.forward(input)
                one_hot_label = one_hot(label, out.shape[1])
                # Step2: Compute cross entropy loss and backward
                loss = -np.sum(np.log(out + 1e-20) * one_hot_label) / out.shape[0]
                # The grad is to activation before softmax
                grad = out - one_hot_label
                dnn.backward(grad)
                # Step3: update parameters
                dnn.update()
                avg_loss[i] += loss
                cur += batch_size
            # Prints the loss of the last mini-batch of this epoch
            print('Epoch {} loss {}'.format(i, loss))
            avg_loss[i] /= math.ceil(num_samples / batch_size)
        plot_spectrogram(avg_loss, 'loss.png')

    def test(dnn):
        utt2feat, utt2target = read_feats_and_targets('test/feats.scp',
                                                      'test/text')
        total = len(utt2feat)
        correct = 0
        for utt in utt2feat:
            t = utt2target[utt]
            ark = utt2feat[utt]
            mat = kaldi_io.read_mat(ark)
            mat = splice(mat, 5, 5)
            # Feed all frames of one utterance and compute, for every frame,
            # the softmax probability of each class
            posterior = dnn.forward(mat)
            # Sum the per-class softmax probabilities over all frames and
            # divide by the number of frames (average posterior)
            posterior = np.sum(posterior, axis=0) / float(mat.shape[0])
            # Pick the class with the highest average probability and look it up in targets_list
            predict = targets_list[np.argmax(posterior)]
            if t == predict:
                correct += 1
        print('Test success:{},fault:{}'.format(correct, (total - correct)))
        print('Acc: {}'.format(float(correct) / total))

    def main():
        np.random.seed(777)
        # We splice the raw feat with left 5 frames and right 5 frames
        # So the input here is 39 * (5 + 1 + 5) = 429
        # Initialize the DNN parameters
        dnn = DNN(429, 11, 128, 1)
        # Set the learning rate
        dnn.set_learning_rate(2e-2)
        train(dnn)
        test(dnn)

    if __name__ == '__main__':
        main()

Final output:

Epoch 0 loss 8.39371605283952

Epoch 1 loss 6.430591497496062

Epoch 2 loss 5.392602354073269

Epoch 3 loss 4.718402282291926

Epoch 4 loss 4.197041496797619

Epoch 5 loss 3.7948171284313386

Epoch 6 loss 3.4810500076139177

Epoch 7 loss 3.232500223635363

Epoch 8 loss 3.025948512742329

Epoch 9 loss 2.847784388744921

Epoch 10 loss 2.6886262382327146

Epoch 11 loss 2.5442591200442415

Epoch 12 loss 2.41912589263994

Epoch 13 loss 2.3078869268480173

Epoch 14 loss 2.2061915060292936

Epoch 15 loss 2.1136354295261524

Epoch 16 loss 2.0290296015967186

Epoch 17 loss 1.9492051732387286

Epoch 18 loss 1.8754369154951156

Epoch 19 loss 1.8065863731921954

Epoch 20 loss 1.7433242988636568

Epoch 21 loss 1.6850684607546684

Epoch 22 loss 1.6327207387175333

Epoch 23 loss 1.5833336439295596

Epoch 24 loss 1.5374158281287738

Epoch 25 loss 1.49459231781753

Epoch 26 loss 1.4539046554502038

Epoch 27 loss 1.4149755053024395

Epoch 28 loss 1.3781974608941918

Epoch 29 loss 1.343177551897468

Epoch 30 loss 1.309703635666986

Epoch 31 loss 1.277588582832339

Epoch 32 loss 1.2474548371569074

Epoch 33 loss 1.2193926043833774

Epoch 34 loss 1.1923332130268844

Epoch 35 loss 1.167035532076408

Epoch 36 loss 1.143334378514255

Epoch 37 loss 1.12147990294338

Epoch 38 loss 1.1006671376182853

Epoch 39 loss 1.080747014672373

Epoch 40 loss 1.0617155311103916

Epoch 41 loss 1.0432848193289006

Epoch 42 loss 1.026050388548674

Epoch 43 loss 1.0095483329941846

Epoch 44 loss 0.9927764127306355

Epoch 45 loss 0.9764471937444515

Epoch 46 loss 0.9608742813821497

Epoch 47 loss 0.9454276114725653

Epoch 48 loss 0.9302733172799915

Epoch 49 loss 0.9151429416122426

Epoch 50 loss 0.9005248130345576

Epoch 51 loss 0.886545545461796

Epoch 52 loss 0.8730899677088563

Epoch 53 loss 0.8597942830627889

Epoch 54 loss 0.8469844697095887

Epoch 55 loss 0.8345649565485721

Epoch 56 loss 0.8229685499533995

Epoch 57 loss 0.8119108910662776

Epoch 58 loss 0.801294200761067

Epoch 59 loss 0.7910160039831089

Epoch 60 loss 0.7810150252143205

Epoch 61 loss 0.7710355296691648

Epoch 62 loss 0.7617359168800142

Epoch 63 loss 0.7524741538083125

Epoch 64 loss 0.743385846297439

Epoch 65 loss 0.7344208208761847

Epoch 66 loss 0.7259121793513479

Epoch 67 loss 0.7178935032174829

Epoch 68 loss 0.7100279299980566

Epoch 69 loss 0.7023279459373876

Epoch 70 loss 0.6945042124352947

Epoch 71 loss 0.686638576938571

Epoch 72 loss 0.679000184894466

Epoch 73 loss 0.6715993716799477

Epoch 74 loss 0.664594243476965

Epoch 75 loss 0.6575766908451528

Epoch 76 loss 0.6505749805586865

Epoch 77 loss 0.6438740607885409

Epoch 78 loss 0.637355614109719

Epoch 79 loss 0.6312051568609839

Epoch 80 loss 0.6251122389867056

Epoch 81 loss 0.6190324852112509

Epoch 82 loss 0.6131713114305248

Epoch 83 loss 0.6075953994357652

Epoch 84 loss 0.6021921191965783

Epoch 85 loss 0.5967345477402273

Epoch 86 loss 0.5913652312310598

Epoch 87 loss 0.5860017511215531

Epoch 88 loss 0.5807798071628769

Epoch 89 loss 0.5756116514318202

Epoch 90 loss 0.5705298706978991

Epoch 91 loss 0.5654096080710989

Epoch 92 loss 0.5604412145706512

Epoch 93 loss 0.5553980467211257

Epoch 94 loss 0.5505323487159214

Epoch 95 loss 0.5456167080728114

Epoch 96 loss 0.5408443171318722

Epoch 97 loss 0.5362309103725605

Epoch 98 loss 0.5317224927552567

Epoch 99 loss 0.5271678468351199

Test success:103,fault:7

Acc: 0.9363636363636364

[Done] exited with code=0 in 48.167 seconds
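The training loop passes `out - one_hot_label` to `dnn.backward` as the gradient with respect to the activations before softmax. That identity can be checked numerically. The following is a minimal sketch, independent of dnn.py: it assumes a `[frames, classes]` layout, a cross-entropy loss summed over frames, and randomly generated logits and labels; the helper names are hypothetical.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax with the usual max subtraction for stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(z, one_hot):
    """Cross-entropy of softmax(z) against one-hot targets, summed over frames."""
    return -np.sum(one_hot * np.log(softmax(z)))


rng = np.random.default_rng(0)
num_frames, num_classes = 5, 11
z = rng.standard_normal((num_frames, num_classes))  # made-up pre-softmax activations
labels = rng.integers(0, num_classes, size=num_frames)
one_hot = np.eye(num_classes)[labels]

# Analytic gradient with respect to the pre-softmax activations.
analytic = softmax(z) - one_hot

# Central finite differences, one activation at a time.
eps = 1e-6
numeric = np.zeros_like(z)
for idx in np.ndindex(*z.shape):
    z_plus, z_minus = z.copy(), z.copy()
    z_plus[idx] += eps
    z_minus[idx] -= eps
    numeric[idx] = (cross_entropy(z_plus, one_hot)
                    - cross_entropy(z_minus, one_hot)) / (2 * eps)

max_err = float(np.max(np.abs(numeric - analytic)))
print("max |numeric - analytic|:", max_err)
```

Because the gradient is taken directly with respect to the softmax input, a softmax layer's `backward` can simply return the gradient it receives.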
