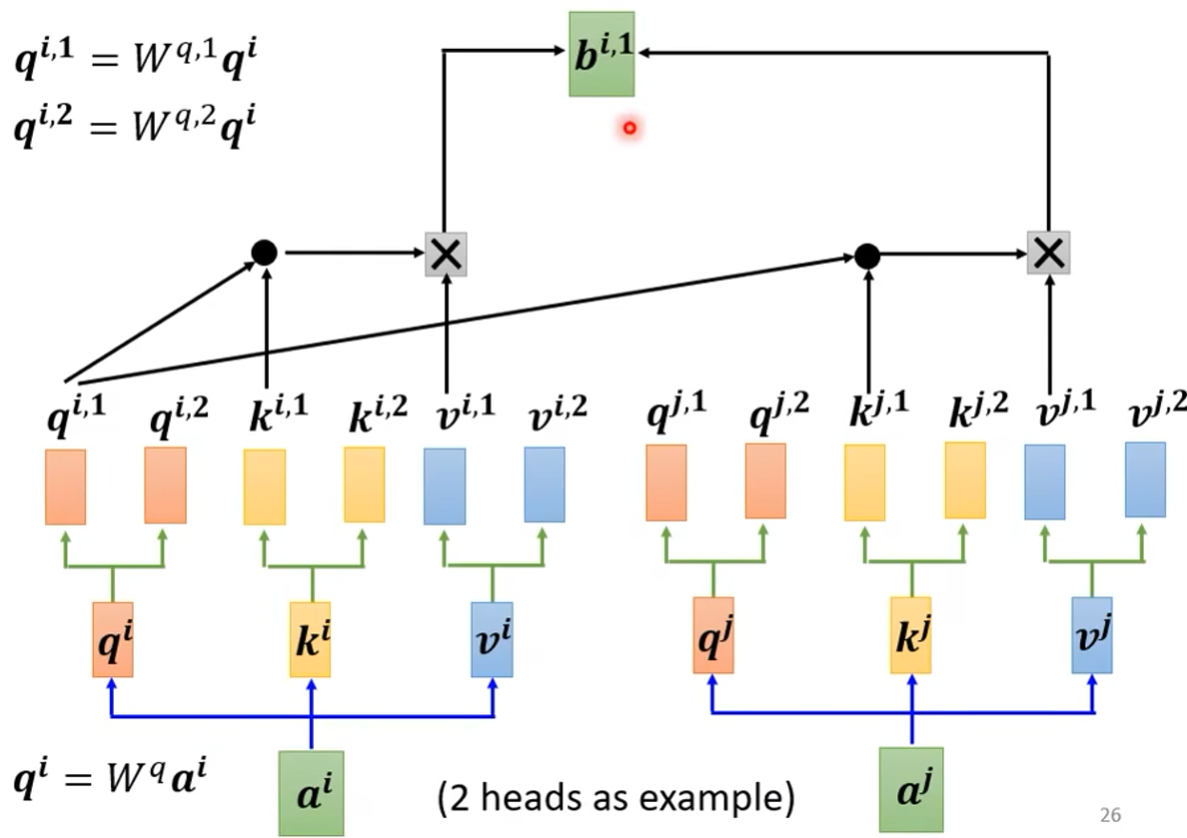

多头注意力机制

class MultiHeadedAttention(nn.Module):

def __init__(self, h, d_model, dropout=0.1):

"Take in model size and number of heads."

super(MultiHeadedAttention, self).__init__()

assert d_model % h == 0

# We assume d_v always equals d_k

self.d_k = d_model // h

self.h = h

self.linears = clones(nn.Linear(d_model, d_model), 4)

self.attn = None

self.dropout = nn.Dropout(p=dropout)

def forward(self, query, key, value, mask=None):

"Implements Figure 2"

if mask is not None:

# Same mask applied to all h heads.

mask = mask.unsqueeze(1)

nbatches = query.size(0)

# 1) Do all the linear projections in batch from d_model => h x d_k

query, key, value = [

lin(x).view(nbatches, -1, self.h, self.d_k).transpose(1, 2)

for lin, x in zip(self.linears, (query, key, value))

]

# 2) Apply attention on all the projected vectors in batch.

x, self.attn = attention(

query, key, value, mask=mask, dropout=self.dropout

)

# 3) "Concat" using a view and apply a final linear.

x = (

x.transpose(1, 2)

.contiguous()

.view(nbatches, -1, self.h * self.d_k)

)

del query

del key

del value

return self.linears[-1](x)

如上图举例所示,qi1在算attention分数时忽略qi2,只计算qi1的attention,qi2也是同样的操作,这即是两头时的操作,多头操作亦如此。

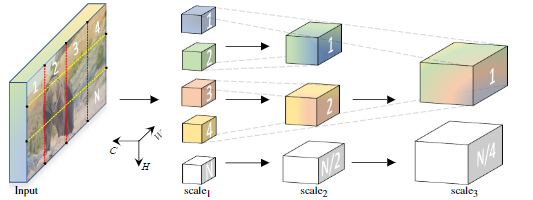

多头池化注意力(Multi Head Pooling Attention)

首先对MHPA作出解释,这是本文的核心,它使得多尺度变换器以逐渐变化的时空分辨率进行操作。与原始的多头注意力(MHA)不同,在原始的多头注意力中,通道维度和时空分辨率保持不变,MHPA将潜在张量序列合并,以减少参与输入的序列长度(分辨率)。如图所示

池化注意力:

def attention_pool(tensor, pool, thw_shape, has_cls_embed=True, norm=None):

if pool is None:

return tensor, thw_shape

tensor_dim = tensor.ndim

if tensor_dim == 4:

pass

elif tensor_dim == 3:

tensor = tensor.unsqueeze(1)

else:

raise NotImplementedError(f"Unsupported input dimension {tensor.shape}")

if has_cls_embed:

cls_tok, tensor = tensor[:, :, :1, :], tensor[:, :, 1:, :]

B, N, L, C = tensor.shape

T, H, W = thw_shape

tensor = (

tensor.reshape(B * N, T, H, W, C).permute(0, 4, 1, 2, 3).contiguous()

)

tensor = pool(tensor)

thw_shape = [tensor.shape[2], tensor.shape[3], tensor.shape[4]]

L_pooled = tensor.shape[2] * tensor.shape[3] * tensor.shape[4]

tensor = tensor.reshape(B, N, C, L_pooled).transpose(2, 3)

if has_cls_embed:

tensor = torch.cat((cls_tok, tensor), dim=2)

if norm is not None:

tensor = norm(tensor)

# Assert tensor_dim in [3, 4]

if tensor_dim == 4:

pass

else: # tensor_dim == 3:

tensor = tensor.squeeze(1)

return tensor, thw_shape多层感知机

class Mlp(nn.Module):

def __init__(

self,

in_features,

hidden_features=None,

out_features=None,

act_layer=nn.GELU,

drop_rate=0.0,

):

super().__init__()

self.drop_rate = drop_rate

out_features = out_features or in_features

hidden_features = hidden_features or in_features

self.fc1 = nn.Linear(in_features, hidden_features)

self.act = act_layer()

self.fc2 = nn.Linear(hidden_features, out_features)

if self.drop_rate > 0.0:

self.drop = nn.Dropout(drop_rate)

def forward(self, x):

x = self.fc1(x)

x = self.act(x)

if self.drop_rate > 0.0:

x = self.drop(x)

x = self.fc2(x)

if self.drop_rate > 0.0:

x = self.drop(x)

return x分类头

class ClassificationHead(nn.Sequential):

def __init__(self, emb_size: int = 768, n_classes: int = 1000):

super().__init__(

Reduce('b n e -> b e', reduction='mean'),

nn.LayerNorm(emb_size),

nn.Linear(emb_size, n_classes))

多尺度变换器模型

逐步增加信道维度,同时降低整个网络的时空分辨率(即序列长度)。MViT在早期层中具有精细的时空分辨率和低信道维度,而在后期层中,变为粗略的时空分辨率和高信道维度。如图所示:

class MultiScaleAttention(nn.Module):

def __init__(

self,

dim,

dim_out,

input_size,

num_heads=8,

qkv_bias=False,

drop_rate=0.0,

kernel_q=(1, 1, 1),

kernel_kv=(1, 1, 1),

stride_q=(1, 1, 1),

stride_kv=(1, 1, 1),

norm_layer=nn.LayerNorm,

has_cls_embed=True,

# Options include `conv`, `avg`, and `max`.

mode="conv",

# If True, perform pool before projection.

pool_first=False,

rel_pos_spatial=False,

rel_pos_temporal=False,

rel_pos_zero_init=False,

residual_pooling=False,

separate_qkv=False,

):

super().__init__()

self.pool_first = pool_first

self.separate_qkv = separate_qkv

self.drop_rate = drop_rate

self.num_heads = num_heads

self.dim_out = dim_out

head_dim = dim_out // num_heads

self.scale = head_dim**-0.5

self.has_cls_embed = has_cls_embed

self.mode = mode

padding_q = [int(q // 2) for q in kernel_q]

padding_kv = [int(kv // 2) for kv in kernel_kv]

if pool_first or separate_qkv:

self.q = nn.Linear(dim, dim_out, bias=qkv_bias)

self.k = nn.Linear(dim, dim_out, bias=qkv_bias)

self.v = nn.Linear(dim, dim_out, bias=qkv_bias)

else:

self.qkv = nn.Linear(dim, dim_out * 3, bias=qkv_bias)

self.proj = nn.Linear(dim_out, dim_out)

if drop_rate > 0.0:

self.proj_drop = nn.Dropout(drop_rate)

# Skip pooling with kernel and stride size of (1, 1, 1).

if numpy.prod(kernel_q) == 1 and numpy.prod(stride_q) == 1:

kernel_q = ()

if numpy.prod(kernel_kv) == 1 and numpy.prod(stride_kv) == 1:

kernel_kv = ()

if mode in ("avg", "max"):

pool_op = nn.MaxPool3d if mode == "max" else nn.AvgPool3d

self.pool_q = (

pool_op(kernel_q, stride_q, padding_q, ceil_mode=False)

if len(kernel_q) > 0

else None

)

self.pool_k = (

pool_op(kernel_kv, stride_kv, padding_kv, ceil_mode=False)

if len(kernel_kv) > 0

else None

)

self.pool_v = (

pool_op(kernel_kv, stride_kv, padding_kv, ceil_mode=False)

if len(kernel_kv) > 0

else None

)

elif mode == "conv" or mode == "conv_unshared":

if pool_first:

dim_conv = dim // num_heads if mode == "conv" else dim

else:

dim_conv = dim_out // num_heads if mode == "conv" else dim_out

self.pool_q = (

nn.Conv3d(

dim_conv,

dim_conv,

kernel_q,

stride=stride_q,

padding=padding_q,

groups=dim_conv,

bias=False,

)

if len(kernel_q) > 0

else None

)

self.norm_q = norm_layer(dim_conv) if len(kernel_q) > 0 else None

self.pool_k = (

nn.Conv3d(

dim_conv,

dim_conv,

kernel_kv,

stride=stride_kv,

padding=padding_kv,

groups=dim_conv,

bias=False,

)

if len(kernel_kv) > 0

else None

)

self.norm_k = norm_layer(dim_conv) if len(kernel_kv) > 0 else None

self.pool_v = (

nn.Conv3d(

dim_conv,

dim_conv,

kernel_kv,

stride=stride_kv,

padding=padding_kv,

groups=dim_conv,

bias=False,

)

if len(kernel_kv) > 0

else None

)

self.norm_v = norm_layer(dim_conv) if len(kernel_kv) > 0 else None

else:

raise NotImplementedError(f"Unsupported model {mode}")

self.rel_pos_spatial = rel_pos_spatial

self.rel_pos_temporal = rel_pos_temporal

if self.rel_pos_spatial:

assert input_size[1] == input_size[2]

size = input_size[1]

q_size = size // stride_q[1] if len(stride_q) > 0 else size

kv_size = size // stride_kv[1] if len(stride_kv) > 0 else size

rel_sp_dim = 2 * max(q_size, kv_size) - 1

self.rel_pos_h = nn.Parameter(torch.zeros(rel_sp_dim, head_dim))

self.rel_pos_w = nn.Parameter(torch.zeros(rel_sp_dim, head_dim))

if not rel_pos_zero_init:

trunc_normal_(self.rel_pos_h, std=0.02)

trunc_normal_(self.rel_pos_w, std=0.02)

if self.rel_pos_temporal:

self.rel_pos_t = nn.Parameter(

torch.zeros(2 * input_size[0] - 1, head_dim)

)

if not rel_pos_zero_init:

trunc_normal_(self.rel_pos_t, std=0.02)

self.residual_pooling = residual_pooling

def forward(self, x, thw_shape):

B, N, _ = x.shape

if self.pool_first:

if self.mode == "conv_unshared":

fold_dim = 1

else:

fold_dim = self.num_heads

x = x.reshape(B, N, fold_dim, -1).permute(0, 2, 1, 3)

q = k = v = x

else:

assert self.mode != "conv_unshared"

if not self.separate_qkv:

qkv = (

self.qkv(x)

.reshape(B, N, 3, self.num_heads, -1)

.permute(2, 0, 3, 1, 4)

)

q, k, v = qkv[0], qkv[1], qkv[2]

else:

q = k = v = x

q = (

self.q(q)

.reshape(B, N, self.num_heads, -1)

.permute(0, 2, 1, 3)

)

k = (

self.k(k)

.reshape(B, N, self.num_heads, -1)

.permute(0, 2, 1, 3)

)

v = (

self.v(v)

.reshape(B, N, self.num_heads, -1)

.permute(0, 2, 1, 3)

)

q, q_shape = attention_pool(

q,

self.pool_q,

thw_shape,

has_cls_embed=self.has_cls_embed,

norm=self.norm_q if hasattr(self, "norm_q") else None,

)

k, k_shape = attention_pool(

k,

self.pool_k,

thw_shape,

has_cls_embed=self.has_cls_embed,

norm=self.norm_k if hasattr(self, "norm_k") else None,

)

v, v_shape = attention_pool(

v,

self.pool_v,

thw_shape,

has_cls_embed=self.has_cls_embed,

norm=self.norm_v if hasattr(self, "norm_v") else None,

)

if self.pool_first:

q_N = (

numpy.prod(q_shape) + 1

if self.has_cls_embed

else numpy.prod(q_shape)

)

k_N = (

numpy.prod(k_shape) + 1

if self.has_cls_embed

else numpy.prod(k_shape)

)

v_N = (

numpy.prod(v_shape) + 1

if self.has_cls_embed

else numpy.prod(v_shape)

)

q = q.permute(0, 2, 1, 3).reshape(B, q_N, -1)

q = (

self.q(q)

.reshape(B, q_N, self.num_heads, -1)

.permute(0, 2, 1, 3)

)

v = v.permute(0, 2, 1, 3).reshape(B, v_N, -1)

v = (

self.v(v)

.reshape(B, v_N, self.num_heads, -1)

.permute(0, 2, 1, 3)

)

k = k.permute(0, 2, 1, 3).reshape(B, k_N, -1)

k = (

self.k(k)

.reshape(B, k_N, self.num_heads, -1)

.permute(0, 2, 1, 3)

)

N = q.shape[2]

attn = (q * self.scale) @ k.transpose(-2, -1)

if self.rel_pos_spatial:

attn = cal_rel_pos_spatial(

attn,

q,

k,

self.has_cls_embed,

q_shape,

k_shape,

self.rel_pos_h,

self.rel_pos_w,

)

if self.rel_pos_temporal:

attn = cal_rel_pos_temporal(

attn,

q,

self.has_cls_embed,

q_shape,

k_shape,

self.rel_pos_t,

)

attn = attn.softmax(dim=-1)

x = attn @ v

if self.residual_pooling:

if self.has_cls_embed:

x[:, :, 1:, :] += q[:, :, 1:, :]

else:

x = x + q

x = x.transpose(1, 2).reshape(B, -1, self.dim_out)

x = self.proj(x)

if self.drop_rate > 0.0:

x = self.proj_drop(x)

return x, q_shape

1610

1610

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?