Deploying a Highly Available Cluster with kubeadm

Environment plan (host IPs):

master1 192.168.25.45

master2 192.168.25.46

master3 192.168.25.47

node1 192.168.25.48

node2 192.168.25.49

node3 192.168.25.50

Planned VIP 192.168.25.100

Tools: kubeadm, docker-ce, calico, iptables

Perform the following steps on every machine!

1 Configure the IP address: vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

BOOTPROTO=static

IPADDR=192.168.25.45

NETMASK=255.255.255.0

GATEWAY=192.168.25.1

DNS1=192.168.30.169

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

DEVICE=ens33

ONBOOT=yes

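After editing, restart the network service: systemctl restart network

2 Set the hostname and name resolution on each machine

hostnamectl set-hostname master1 && bash    # use each machine's own name

Add the following to /etc/hosts: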

192.168.25.45 master1

192.168.25.46 master2

192.168.25.47 master3

192.168.25.48 node1

192.168.25.49 node2

192.168.25.50 node3
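
Since the same six entries go on every machine, appending them can be scripted. The sketch below is one possible way to do it idempotently. The function name add_cluster_hosts is made up for this example, and it takes the hosts file path as an argument so it can be tried on a scratch file before touching /etc/hosts.

```shell
# Append the cluster entries to a hosts file, skipping names that are already present.
# add_cluster_hosts is an illustrative helper, not part of any tool.
add_cluster_hosts() {
  hosts_file="$1"
  while read -r entry; do
    name="${entry##* }"    # text after the last space, i.e. the hostname
    # -w matches the hostname as a whole word, so "node1" does not match "node10"
    if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done <<'EOF'
192.168.25.45 master1
192.168.25.46 master2
192.168.25.47 master3
192.168.25.48 node1
192.168.25.49 node2
192.168.25.50 node3
EOF
}

# On a real node (as root):
#   add_cluster_hosts /etc/hosts
```

Running it a second time adds nothing, so it is safe to include in a setup script that may be re-run.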

3 Disable swap

swapoff -a    # disable temporarily

vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0    # comment out this line to disable swap permanently
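
Editing fstab by hand on six machines is easy to get wrong, so here is a hedged sketch of doing the same thing with sed. The function name disable_swap_in_fstab is an assumption for illustration. It comments out any active line with a swap field and keeps a .bak copy of the file.

```shell
# Comment out every active fstab line that contains a whitespace-delimited "swap" field.
# disable_swap_in_fstab is an illustrative helper; pass the file to edit as $1.
disable_swap_in_fstab() {
  fstab="$1"
  # Lines already starting with '#' are skipped, so re-running changes nothing.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# On a real node (as root):
#   swapoff -a && disable_swap_in_fstab /etc/fstab
```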

4 Adjust kernel parameters

modprobe br_netfilter

lsmod | grep br_netfilter    # check that the module is loaded

echo "modprobe br_netfilter" >> /etc/profile

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf
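
One caveat with the /etc/profile approach: that file only runs when someone opens a login shell, so after a reboot the module may not be loaded until the first login. On systemd-based systems such as CentOS 7/8, a file under /etc/modules-load.d is the usual alternative. A minimal sketch, assuming systemd-modules-load is active; the function name and the k8s.conf file name are choices made for this example:

```shell
# Write a modules-load.d entry so br_netfilter is loaded at boot.
# persist_br_netfilter is an illustrative helper; the directory defaults to the system path.
persist_br_netfilter() {
  conf_dir="${1:-/etc/modules-load.d}"
  mkdir -p "$conf_dir"
  # One module name per line; systemd-modules-load reads *.conf files in this directory.
  printf 'br_netfilter\n' > "$conf_dir/k8s.conf"
}

# On a real node (as root):
#   persist_br_netfilter
```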

5 Disable the firewall and SELinux

systemctl disable firewalld --now

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

6 Configure yum repositories

rm -rf /etc/yum.repos.d/*

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo    # base repo

# Step 1: install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# Step 2: add the Docker repository

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Step 3: point the repository at the Aliyun mirror

sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# Step 4: update and install Docker-CE (the install command is in step 8 below)

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

scp /etc/yum.repos.d/* master2:/etc/yum.repos.d/    # distribute the configured repos to each node (repeat for every other host)

7 Time synchronization

yum install ntpdate -y && ntpdate cn.pool.ntp.org

Add an hourly sync job with crontab -e:

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org > /dev/null 2>&1

systemctl restart crond

8 Install common base packages

yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y

yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

Edit the Docker configuration file

vim /etc/docker/daemon.json

{

"registry-mirrors": [

"https://rsbud4vc.mirror.aliyuncs.com",

"https://registry.docker-cn.com",

"https://docker.mirrors.ustc.edu.cn",

"https://dockerhub.azk8s.cn",

"http://hub-mirror.c.163.com",

"http://qtid6917.mirror.aliyuncs.com",

"https://rncxm540.mirror.aliyuncs.com"

],

"exec-opts": ["native.cgroupdriver=systemd"]

}

systemctl enable docker --now

systemctl enable kubelet --now    # start now and enable at boot

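Perform the following steps on the master machines!

yum install nginx keepalived -y

yum -y install nginx-mod-stream

vim /etc/nginx/nginx.conf

# add this stream block at the top level of nginx.conf, outside the http block

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';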

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.25.45:6443; # Master1 APISERVER IP:PORT

server 192.168.25.46:6443; # Master2 APISERVER IP:PORT

server 192.168.25.47:6443; # Master3 APISERVER IP:PORT

}

server {

listen 16443; # nginx shares the master nodes, so this port must not be 6443 or it will conflict with the apiserver

proxy_pass k8s-apiserver;

}

}

nginx -t    # check the configuration

vim /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

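state MASTER # MASTER on the primary node, BACKUP on the others

interface ens160 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; must be unique per instance

priority 100 # priority; set 90 on the backup servers

advert_int 1 # VRRP advertisement (heartbeat) interval, default 1 second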

authentication {

auth_type PASS

auth_pass 1111

}

# virtual IP

virtual_ipaddress {

192.168.25.100

}

track_script {

check_nginx

}

}

# vrrp_script: the script that checks whether nginx is running (failover is decided from nginx's state)

# virtual_ipaddress: the virtual IP (VIP)

vim /etc/keepalived/check_nginx.sh

#!/bin/bash

A=$(ps -C nginx --no-header | wc -l)

# Check whether nginx is down; if so, try to restart it

if [ "$A" -eq 0 ]; then

    systemctl restart nginx

    # Wait briefly and check again; if nginx still has not started, stop keepalived so a backup node takes over

    sleep 3

    if [ "$(ps -C nginx --no-header | wc -l)" -eq 0 ]; then

        systemctl stop keepalived

    fi

fi

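Make the check script executable and start the services

chmod +x /etc/keepalived/check_nginx.sh

systemctl daemon-reload

systemctl enable nginx keepalived --now

ip addr    # check that the VIP is present on the primary node (ifconfig may not list a VIP that was added without an interface label)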
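
keepalived attaches the VIP as an additional address on the interface, and `ip addr` lists it with a prefix length. A small hedged helper can turn that visual check into something scriptable, for instance to confirm failover after stopping nginx on the primary. The name has_vip is made up for this example, and it reads the `ip` output from stdin so it can be tested without a live interface.

```shell
# Succeeds if the given address appears in `ip -4 addr` output read from stdin.
# has_vip is an illustrative helper, not a keepalived command.
has_vip() {
  # -F treats the address literally, so the dots are not regex wildcards;
  # the trailing "/" stops 192.168.25.100 from matching 192.168.25.1000-style prefixes.
  grep -qF "inet $1/"
}

# On a master node:
#   ip -4 addr show | has_vip 192.168.25.100 && echo "this node holds the VIP"
```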

Initialize the cluster

mkdir -p /root/k8s/init && cd /root/k8s/init

vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: v1.20.6

controlPlaneEndpoint: 192.168.25.100:16443

imageRepository: registry.aliyuncs.com/google_containers

apiServer:

  certSANs:

  - 192.168.25.45

  - 192.168.25.46

  - 192.168.25.47

  - 192.168.25.100

networking:

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.10.0.0/16

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs
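
The controlPlaneEndpoint has to be the VIP plus the nginx listen port, which with the plan at the top of this post is 192.168.25.100:16443. A typo here only shows up later when nodes fail to join, so a quick check before running kubeadm init can save time. This is a rough sketch; check_endpoint is a hypothetical helper, and the awk match assumes the key sits on its own line as in the file above.

```shell
# Compare controlPlaneEndpoint in a kubeadm config file with the expected VIP:port.
# check_endpoint is an illustrative helper; $1 is the config file, $2 the expected value.
check_endpoint() {
  config="$1"
  expected="$2"
  # Take the value after the key and strip optional double quotes.
  actual=$(awk '$1 == "controlPlaneEndpoint:" { gsub(/"/, "", $2); print $2 }' "$config")
  if [ "$actual" = "$expected" ]; then
    echo "ok: controlPlaneEndpoint is $actual"
  else
    echo "mismatch: found '$actual', expected '$expected'" >&2
    return 1
  fi
}

# Before kubeadm init:
#   check_endpoint kubeadm-config.yaml 192.168.25.100:16443
```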

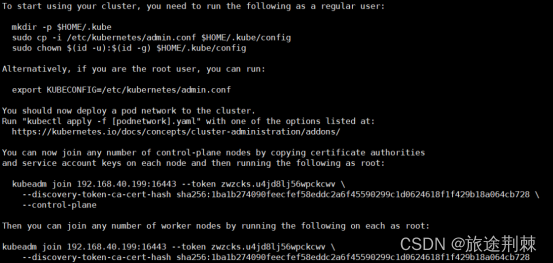

kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification 初始化命令

显示如下,说明安装完成

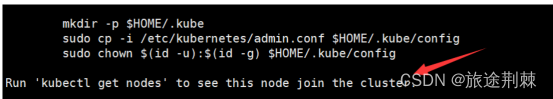

根据提示操作

1 mkdir -p $HOME/.kube

2 sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

3 sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes 查看节点状态

NAME STATUS ROLES AGE VERSION

master1 NotReady control-plane,master 60s v1.20.6

The node is still NotReady at this point because no network plugin has been installed yet.

Adding nodes

On master2 and master3, create the certificate directories:

cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/

On master1, copy the certificates to master2 (repeat with master3 as the target):

scp /etc/kubernetes/pki/ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt master2:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key master2:/etc/kubernetes/pki/etcd/
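
The copy has to be repeated for each extra master, so a loop is less error-prone than pasting the commands twice. Below is a hedged sketch: copy_pki and the DRY_RUN switch are names invented for this example. The file list includes sa.key alongside sa.pub, since kubeadm's manual certificate distribution needs the service-account key pair on every control-plane node. By default it only prints the commands so they can be reviewed first.

```shell
# Files a joining control-plane node needs, relative to /etc/kubernetes/pki.
PKI_FILES="ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key"

# Print (default) or run the scp commands for every host passed as an argument.
# copy_pki and DRY_RUN are illustrative; set DRY_RUN=0 to actually copy.
copy_pki() {
  for host in "$@"; do
    for f in $PKI_FILES; do
      if [ "${DRY_RUN:-1}" = "1" ]; then
        echo scp "/etc/kubernetes/pki/$f" "$host:/etc/kubernetes/pki/$f"
      else
        scp "/etc/kubernetes/pki/$f" "$host:/etc/kubernetes/pki/$f"
      fi
    done
  done
}

# Review first, then copy for real (run on master1):
#   copy_pki master2 master3
#   DRY_RUN=0 copy_pki master2 master3
```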

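# After the certificates are copied, run the join command on master2 and then on master3 to add them to the cluster as control-plane nodes. Use the token and hash from your own cluster, not the ones shown here.

The join command is printed by kubeadm init on master1; for a control-plane node it ends with --control-plane:

kubeadm join 192.168.25.100:16443 --token zwzcks.u4jd8lj56wpckcwv --discovery-token-ca-cert-hash sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728 --control-plane

If you have lost the token, generate a new one with the command below (tokens are valid for 24 hours by default). It prints the worker join command; append --control-plane when joining a master.

kubeadm token create --print-join-command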

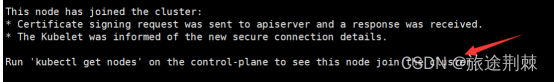

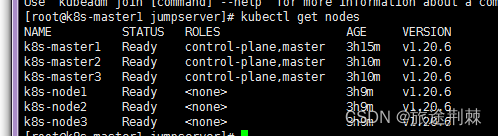

加入完毕后 可以 kubectl get nodes 查看节点信息

添加完master节点添加node节点

kubeadm join 192.168.25.100:16443 --token zwzcks.u4jd8lj56wpckcwv \ --discovery-token-ca-cert-hash\

sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728

#看到上面说明 node1 节点已经加入到集群了,充当工作节点

查看节点信息

正常情况下 你们的状态应该是NotReady 我这边是安装好了网络插件所以是Ready

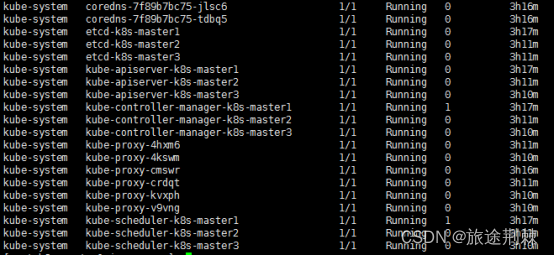

查看POD 信息

Kubectl get pods -A 查看所有pod信息

你们的coredns 是 pending 状态,这是因为还没有安装网络插件,等到下面安装好网络插件

之后这个 cordns 就会变成 running 了

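Install the network plugin

curl -o calico.yaml https://docs.projectcalico.org/manifests/calico.yaml

1 Change the pod network in calico.yaml to the pod subnet used at kubeadm init (podSubnet in kubeadm-config.yaml, the equivalent of the --pod-network-cidr option). Open the file in vim, search for 192, and edit the lines marked below: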

# no effect. This should fall within `--cluster-cidr`.

# - name: CALICO_IPV4POOL_CIDR

# value: "192.168.0.0/16"

# Disable file logging so `kubectl logs` works.

- name: CALICO_DISABLE_FILE_LOGGING

value: "true"

Remove the two # characters and the space after each, and change 192.168.0.0/16 to 10.244.0.0/16

# no effect. This should fall within `--cluster-cidr`

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

# Disable file logging so `kubectl logs` works.

- name: CALICO_DISABLE_FILE_LOGGING
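
The same edit can be made non-interactively. This sketch assumes the manifest still contains the two commented lines exactly as shown in the "before" excerpt (a "# " prefix and the default 192.168.0.0/16). set_calico_pod_cidr is a name invented for this example, and a .bak copy of the manifest is kept.

```shell
# Uncomment CALICO_IPV4POOL_CIDR in a Calico manifest and set it to the given CIDR.
# set_calico_pod_cidr is an illustrative helper; $1 is the manifest, $2 the pod CIDR.
set_calico_pod_cidr() {
  manifest="$1"
  cidr="$2"
  # Each expression drops the "# " after the indentation and keeps the indentation itself;
  # the second also swaps the default 192.168.0.0/16 for the requested CIDR.
  sed -i.bak -E \
    -e 's|^([[:space:]]*)#[[:space:]](- name: CALICO_IPV4POOL_CIDR)|\1\2|' \
    -e 's|^([[:space:]]*)#[[:space:]]([[:space:]]*value: )"192\.168\.0\.0/16"|\1\2"'"$cidr"'"|' \
    "$manifest"
}

# In the directory holding the downloaded manifest:
#   set_calico_pod_cidr calico.yaml 10.244.0.0/16
```

It is worth running grep -n -A1 CALICO_IPV4POOL_CIDR calico.yaml afterwards to confirm that both lines are uncommented and aligned with the neighbouring entries.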

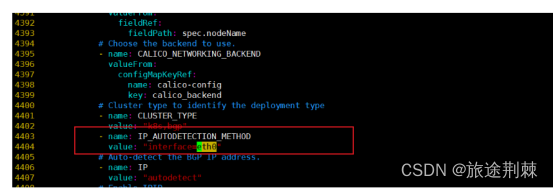

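2 Specify the NIC: edit calico.yaml (vim calico.yaml) and set the interface name to the host's NIC name (in the Calico manifest this is usually done with the IP_AUTODETECTION_METHOD environment variable of calico-node, for example interface=ens33)

kubectl apply -f calico.yaml    # deploy

kubectl get pods -A    # check

READY 1/1 and STATUS Running mean the pods are healthy

Check the cluster again with kubectl get nodes; all nodes should now be Ready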

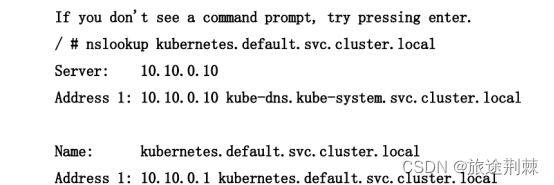

Test

kubectl run busybox --image busybox:1.28 --restart=Never --rm -it -- sh
