目录

1.多层感知机介绍

1.1什么是多层感知机

1.2一个简单的例子

1.3如何使用pytorch实现多层感知机

2.主要实验步骤

2.1 姓氏数据集

2.2 词汇表、向量化器和数据加载器

2.3 姓氏分类器模型

2.4 训练过程

2.5 模型评估和预测

3.完整实验代码

4.补充内容

1.多层感知机的介绍

1.1什么是多层感知机

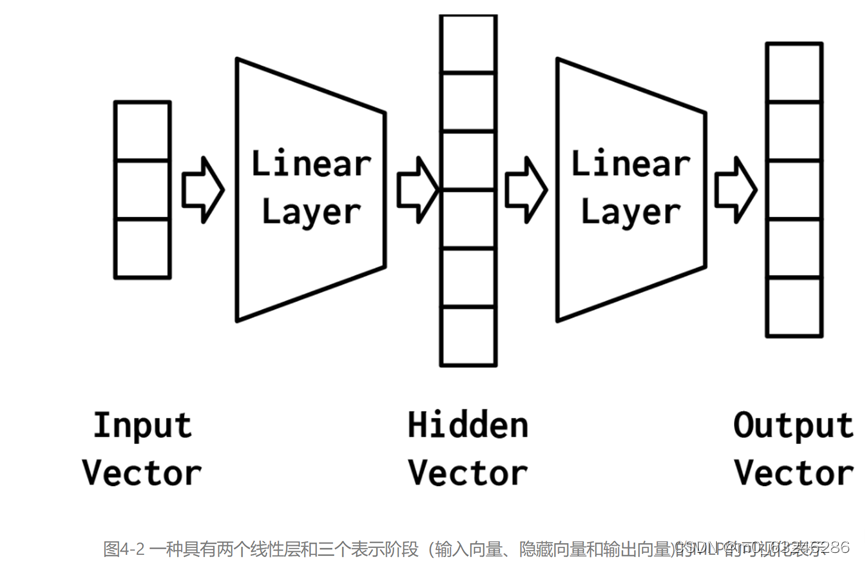

多层感知器(MLP)被认为是最基本的神经网络构建模块之一。在MLP中,许多感知器被分组,以便单个层的输出是一个新的向量,而不是单个输出值。在PyTorch中,正如您稍后将看到的,这只需设置线性层中的输出特性的数量即可完成。MLP的另一个方面是,它将多个层与每个层之间的非线性结合在一起。最简单的MLP,如图4-2所示,由三个表示阶段和两个线性层组成。第一阶段是输入向量。这是给定给模型的向量。在“示例:对餐馆评论的情绪进行分类”中,输入向量是Yelp评论的一个收缩的one-hot表示。给定输入向量,第一个线性层计算一个隐藏向量——表示的第二阶段。隐藏向量之所以这样被调用,是因为它是位于输入和输出之间的层的输出。我们所说的“层的输出”是什么意思?理解这个的一种方法是隐藏向量中的值是组成该层的不同感知器的输出。使用这个隐藏的向量,第二个线性层计算一个输出向量。在像Yelp评论分类这样的二进制任务中,输出向量仍然可以是1。在多类设置中,将在本实验后面的“示例:带有多层感知器的姓氏分类”一节中看到,输出向量是类数量的大小。虽然在这个例子中,我们只展示了一个隐藏的向量,但是有可能有多个中间阶段,每个阶段产生自己的隐藏向量。最终的隐藏向量总是通过线性层和非线性的组合映射到输出向量。

mlp的力量来自于添加第二个线性层和允许模型学习一个线性分割的的中间表示——该属性的能表示一个直线(或更一般的,一个超平面)可以用来区分数据点落在线(或超平面)的哪一边的。学习具有特定属性的中间表示,如分类任务是线性可分的,这是使用神经网络的最深刻后果之一,也是其建模能力的精髓。

1.2一个简单的例子:异或模型(XOR)

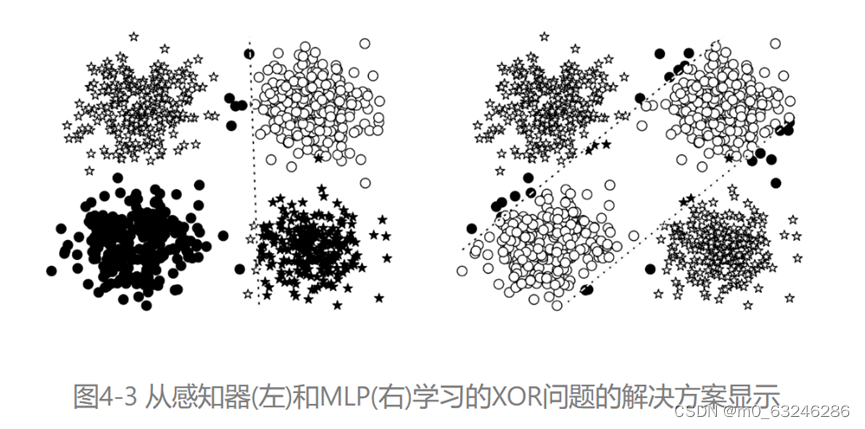

让我们看一下前面描述的XOR示例,看看感知器与MLP之间会发生什么。在这个例子中,我们在一个二元分类任务中训练感知器和MLP:星和圆。每个数据点是一个二维坐标。在不深入研究实现细节的情况下,最终的模型预测如图4-3所示。在这个图中,错误分类的数据点用黑色填充,而正确分类的数据点没有填充。在左边的面板中,从填充的形状可以看出,感知器在学习一个可以将星星和圆分开的决策边界方面有困难。然而,MLP(右面板)学习了一个更精确地对恒星和圆进行分类的决策边界。

图4-3中,每个数据点的真正类是该点的形状:星形或圆形。错误的分类用块填充,正确的分类没有填充。这些线是每个模型的决策边界。在边的面板中,感知器学习—个不能正确地将圆与星分开的决策边界。事实上,没有一条线可以。在右动的面板中,MLP学会了从圆中分离星。

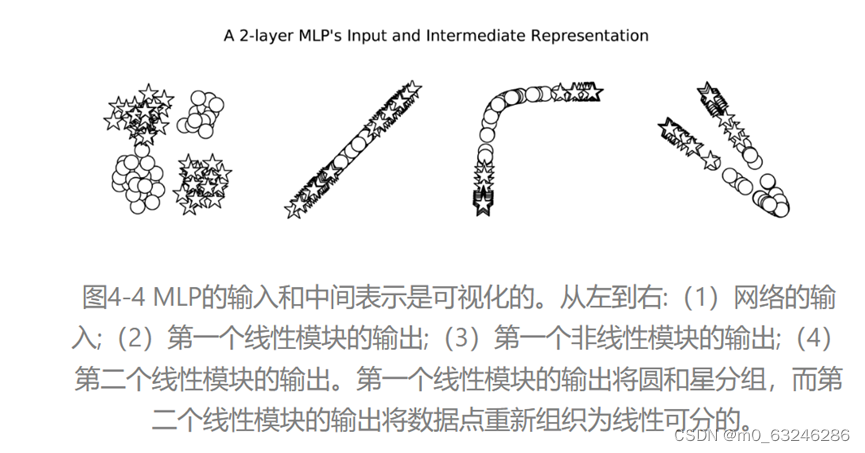

虽然在图中显示MLP有两个决策边界,这是它的优点,但它实际上只是一个决策边界!决策边界就是这样出现的,因为中间表示法改变了空间,使一个超平面同时出现在这两个位置上。在图4-4中,我们可以看到MLP计算的中间值。这些点的形状表示类(星形或圆形)。我们所看到的是,神经网络(本例中为MLP)已经学会了“扭曲”数据所处的空间,以便在数据通过最后一层时,用一线来分割它们。

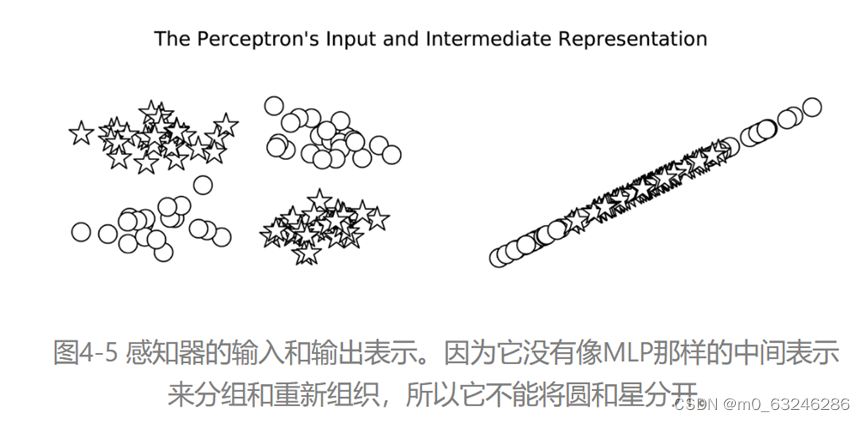

相反,如图4-5所示,感知器没有额外的一层来处理数据的形状,直到数据变成线性可分的。

1.3使用pytorch实现The Multilayer Perceptron(多层感知器)

在我们在例4-1中给出的实现中,我们用PyTorch的两个线性模块实例化了这个想法。线性对象被命名为fc1和fc2,它们遵循一个通用约定,即将线性模块称为“完全连接层”,简称为“fc层”。除了这两个线性层外,还有一个修正的线性单元(ReLU)非线性(在实验3“激活函数”一节中介绍),它在被输入到第二个线性层之前应用于第一个线性层的输出。由于层的顺序性,必须确保层中的输出数量等于下一层的输入数量。使用两个线性层之间的非线性是必要的,因为没有它,两个线性层在数学上等价于一个线性层4,因此不能建模复杂的模式。MLP的实现只实现反向传播的前向传递。这是因为PyTorch根据模型的定义和向前传递的实现,自动计算出如何进行向后传递和梯度更新。

Example 4-1. Multilayer Perceptron

import torch.nn as nn

import torch.nn.functional as F

class MultilayerPerceptron(nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim):

"""

Args:

input_dim (int): the size of the input vectors

hidden_dim (int): the output size of the first Linear layer

output_dim (int): the output size of the second Linear layer

"""

super(MultilayerPerceptron, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x_in, apply_softmax=False):

"""The forward pass of the MLP

Args:

x_in (torch.Tensor): an input data tensor.

x_in.shape should be (batch, input_dim)

apply_softmax (bool): a flag for the softmax activation

should be false if used with the Cross Entropy losses

Returns:

the resulting tensor. tensor.shape should be (batch, output_dim)

"""

intermediate = F.relu(self.fc1(x_in)) # 将输入数据通过第一个全连接层,并使用ReLU激活函数进行非线性变换

output = self.fc2(intermediate) # 将中间结果通过第二个全连接层得到最终输出

if apply_softmax:# 如果apply_softmax为True,则在输出上应用softmax激活函数

output = F.softmax(output, dim=1)

return output

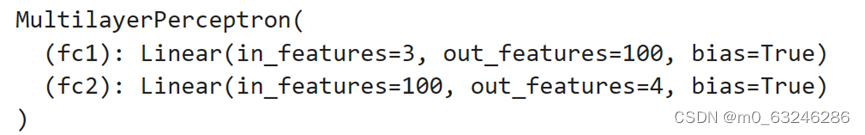

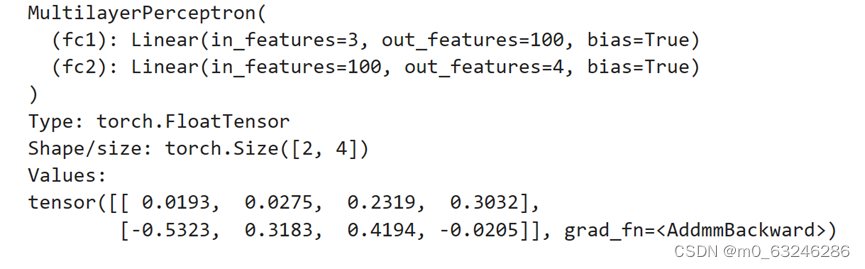

在例4-2中,我们实例化了MLP。由于MLP实现的通用性,可以为任何大小的输入建模。为了演示,我们使用大小为3的输入维度、大小为4的输出维度和大小为100的隐藏维度。请注意,在print语句的输出中,每个层中的单元数很好地排列在一起,以便为维度3的输入生成维度4的输出。

Example 4-2. An example instantiation of an MLP

batch_size = 2 # number of samples input at once

input_dim = 3

hidden_dim = 100

output_dim = 4

# Initialize model

mlp = MultilayerPerceptron(input_dim, hidden_dim, output_dim)

print(mlp)

上述代码运行结果:

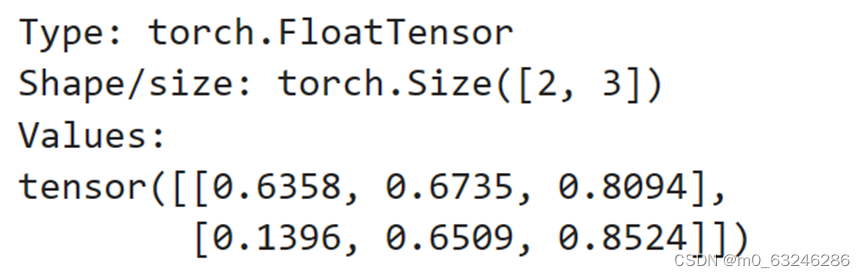

我们可以通过传递一些随机输入来快速测试模型的“连接”,如示例4-3所示。因为模型还没有经过训练,所以输出是随机的。在花费时间训练模型之前,这样做是一个有用的完整性检查。请注意PyTorch的交互性是如何让我们在开发过程中实时完成所有这些工作的,这与使用NumPy或panda没有太大区别:

Example 4-3. Testing the MLP with random inputs

import torch

def describe(x):

print("Type: {}".format(x.type()))

print("Shape/size: {}".format(x.shape))

print("Values: \n{}".format(x))

x_input = torch.rand(batch_size, input_dim)

describe(x_input)

上述代码运行结果:

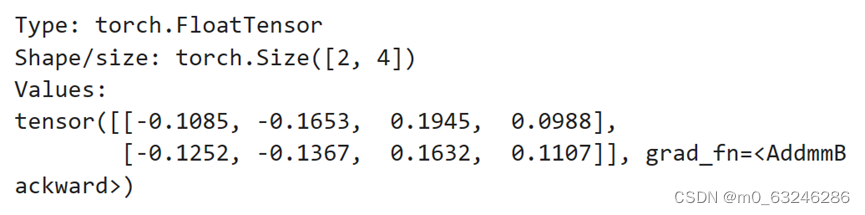

y_output = mlp(x_input, apply_softmax=False)

describe(y_output)

上述代码运行结果:

学习如何读取PyTorch模型的输入和输出非常重要。在前面的例子中,MLP模型的输出是一个有两行四列的张量。这个张量中的行与批处理维数对应,批处理维数是小批处理中的数据点的数量。列是每个数据点的最终特征向量。在某些情况下,例如在分类设置中,特征向量是一个预测向量。名称为“预测向量”表示它对应于一个概率分布。预测向量会发生什么取决于我们当前是在进行训练还是在执行推理。在训练期间,输出按原样使用,带有一个损失函数和目标类标签的表示。我们将在“示例:带有多层感知器的姓氏分类”中对此进行深入介绍。

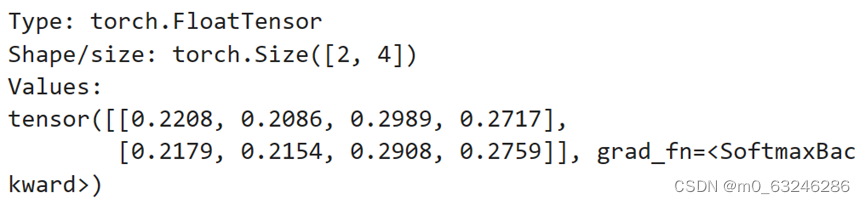

但是,如果想将预测向量转换为概率,则需要额外的步骤。具体来说,需要softmax函数,它用于将一个值向量转换为概率。softmax有许多根。在物理学中,它被称为玻尔兹曼或吉布斯分布;在统计学中,它是多项式逻辑回归;在自然语言处理(NLP)社区,它是最大熵(MaxEnt)分类器。不管叫什么名字,这个函数背后的直觉是,大的正值会导致更高的概率,小的负值会导致更小的概率。在示例4-3中,apply_softmax参数应用了这个额外的步骤。在例4-4中,可以看到相同的输出,但是这次将apply_softmax标志设置为True:

Example 4-4. MLP with apply_softmax=True

y_output = mlp(x_input, apply_softmax=True)

describe(y_output)

上述代码运行结果:

综上所述,mlp是将张量映射到其他张量的线性层。在每一对线性层之间使用非线性来打破线性关系,并允许模型扭曲向量空间。在分类设置中,这种扭曲应该导致类之间的线性可分性。另外,可以使用softmax函数将MLP输出解释为概率,但是不应该将softmax与特定的损失函数一起使用,因为底层实现可以利用高级数学/计算捷径。

2.主要实验步骤

2.1The Surname Dataset

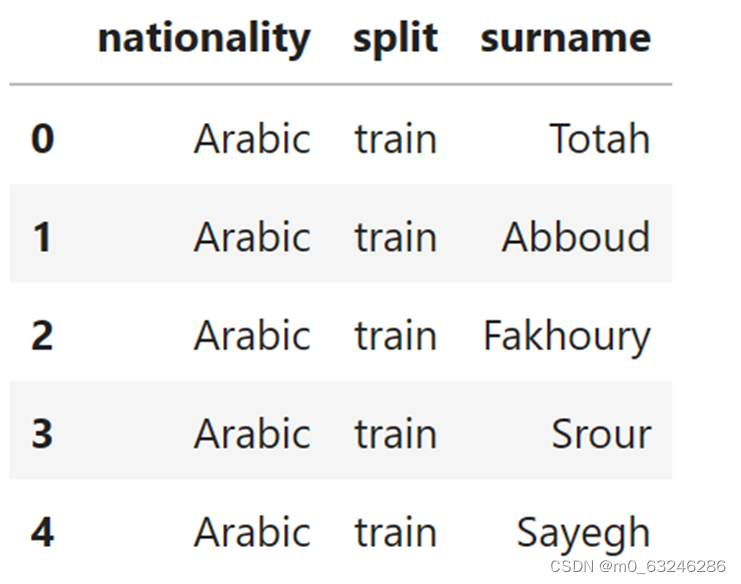

姓氏数据集,它收集了来自18个不同国家的10,000个姓氏,这些姓氏是作者从互联网上不同的姓名来源收集的。该数据集将在本课程实验的几个示例中重用,并具有一些使其有趣的属性。第一个性质是它是相当不平衡的。排名前三的课程占数据的60%以上:27%是英语,21%是俄语,14%是阿拉伯语。剩下的15个民族的频率也在下降——这也是语言特有的特性。第二个特点是,在国籍和姓氏正字法(拼写)之间有一种有效和直观的关系。有些拼写变体与原籍国联系非常紧密(比如“O ‘Neill”、“Antonopoulos”、“Nagasawa”或“Zhu”)。

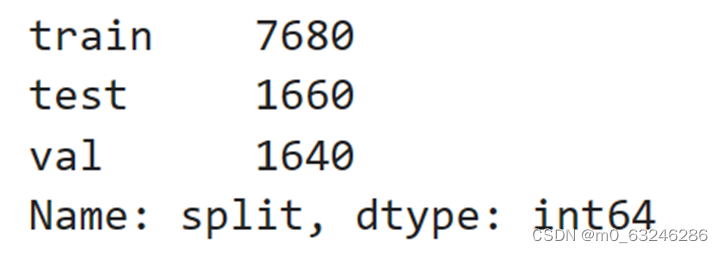

为了创建最终的数据集,我们从一个比课程补充材料中包含的版本处理更少的版本开始,并执行了几个数据集修改操作。第一个目的是减少这种不平衡——原始数据集中70%以上是俄文,这可能是由于抽样偏差或俄文姓氏的增多。为此,我们通过选择标记为俄语的姓氏的随机子集对这个过度代表的类进行子样本。接下来,我们根据国籍对数据集进行分组,并将数据集分为三个部分:70%到训练数据集,15%到验证数据集,最后15%到测试数据集,以便跨这些部分的类标签分布具有可比性。

SurnameDataset的实现与“Example: classification of Sentiment of Restaurant Reviews”中的ReviewDataset几乎相同,只是在getitem方法的实现方式上略有不同。回想一下,本课程中呈现的数据集类继承自PyTorch的数据集类,因此,我们需要实现两个函数:__getitem方法,它在给定索引时返回一个数据点;以及len方法,该方法返回数据集的长度。“示例:餐厅评论的情绪分类”中的示例与本示例的区别在getitem__中,如示例4-5所示。它不像“示例:将餐馆评论的情绪分类”那样返回一个向量化的评论,而是返回一个向量化的姓氏和与其国籍相对应的索引:

Example 4-5. Implementing SurnameDataset.__getitem__()

class SurnameDataset(Dataset):

# Implementation is nearly identical to Section 3.5

def __getitem__(self, index):

row = self._target_df.iloc[index]

surname_vector = \

self._vectorizer.vectorize(row.surname)

nationality_index = \

self._vectorizer.nationality_vocab.lookup_token(row.nationality)

return {'x_surname': surname_vector,

'y_nationality': nationality_index}

2.2 Vocabulary, Vectorizer, and DataLoader

为了使用字符对姓氏进行分类,我们使用词汇表、向量化器和DataLoader将姓氏字符串转换为向量化的minibatches。这些数据结构与“Example: Classifying Sentiment of Restaurant Reviews”中使用的数据结构相同,它们举例说明了一种多态性,这种多态性将姓氏的字符标记与Yelp评论的单词标记相同对待。数据不是通过将字令牌映射到整数来向量化的,而是通过将字符映射到整数来向量化的。

Example 4-6. Implementing SurnameVectorizer

class SurnameVectorizer(object):

""" The Vectorizer which coordinates the Vocabularies and puts them to use"""

def __init__(self, surname_vocab, nationality_vocab):

self.surname_vocab = surname_vocab

self.nationality_vocab = nationality_vocab

def vectorize(self, surname):

"""Vectorize the provided surname

Args:

surname (str): the surname

Returns:

one_hot (np.ndarray): a collapsed one-hot encoding

"""

vocab = self.surname_vocab

one_hot = np.zeros(len(vocab), dtype=np.float32) # 遍历姓氏中的每个字符,并将对应位置的向量元素设为1

for token in surname:

one_hot[vocab.lookup_token(token)] = 1# 使用词汇表的lookup_token方法找到字符对应的索引

return one_hot

@classmethod

def from_dataframe(cls, surname_df):

"""Instantiate the vectorizer from the dataset dataframe

Args:

surname_df (pandas.DataFrame): the surnames dataset

Returns:

an instance of the SurnameVectorizer

"""

surname_vocab = Vocabulary(unk_token="@")

nationality_vocab = Vocabulary(add_unk=False)

for index, row in surname_df.iterrows(): # 遍历数据框的每一行来填充词汇表

for letter in row.surname:

surname_vocab.add_token(letter)# 将姓氏的每个字符添加到姓氏词汇表中

nationality_vocab.add_token(row.nationality) # 将国籍添加到国籍词汇表中

return cls(surname_vocab, nationality_vocab)

2.3The Surname Classifier Model

SurnameClassifier是本实验前面介绍的MLP的实现。第一个线性层将输入向量映射到中间向量,并对该向量应用非线性。第二线性层将中间向量映射到预测向量。

在最后一步中,可选地应用softmax操作,以确保输出和为1;这就是所谓的“概率”。它是可选的原因与我们使用的损失函数的数学公式有关——交叉熵损失。我们研究了“损失函数”中的交叉熵损失。回想一下,交叉熵损失对于多类分类是最理想的,但是在训练过程中软最大值的计算不仅浪费而且在很多情况下并不稳定。

Example 4-7. The SurnameClassifier as an MLP

import torch.nn as nn

import torch.nn.functional as F

class SurnameClassifier(nn.Module):

""" A 2-layer Multilayer Perceptron for classifying surnames """

def __init__(self, input_dim, hidden_dim, output_dim):

"""

Args:

input_dim (int): the size of the input vectors

hidden_dim (int): the output size of the first Linear layer

output_dim (int): the output size of the second Linear layer

"""

super(SurnameClassifier, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim) # 定义第一个全连接层,将输入层连接到隐藏层

self.fc2 = nn.Linear(hidden_dim, output_dim)# 定义第二个全连接层,将隐藏层连接到输出层

def forward(self, x_in, apply_softmax=False):

"""The forward pass of the classifier

Args:

x_in (torch.Tensor): an input data tensor.

x_in.shape should be (batch, input_dim)

apply_softmax (bool): a flag for the softmax activation

should be false if used with the Cross Entropy losses

Returns:

the resulting tensor. tensor.shape should be (batch, output_dim)

"""

intermediate_vector = F.relu(self.fc1(x_in)) # 输入数据通过第一个全连接层后,使用ReLU激活函数

prediction_vector = self.fc2(intermediate_vector) # 中间结果通过第二个全连接层

if apply_softmax:

prediction_vector = F.softmax(prediction_vector, dim=1)

return prediction_vector

2.4 The Training Routine

虽然我们使用了不同的模型、数据集和损失函数,但是训练例程是相同的。因此,在例4-8中,我们只展示了args以及本例中的训练例程与“示例:餐厅评论情绪分类”中的示例之间的主要区别。

Example 4-8. The args for classifying surnames with an MLP

args = Namespace(

# Data and path information

surname_csv="/home/jovyan/surnames_with_splits.csv",

vectorizer_file="vectorizer.json",

model_state_file="model.pth",

save_dir="model_storage/ch4/surname_mlp",

# Model hyper parameters

hidden_dim=300,

# Training hyper parameters

seed=1337,

num_epochs=100,

early_stopping_criteria=5,

learning_rate=0.001,

batch_size=64,

# Runtime options omitted for space

)

训练中最显著的差异与模型中输出的种类和使用的损失函数有关。在这个例子中,输出是一个多类预测向量,可以转换为概率。正如在模型描述中所描述的,这种输出的损失类型仅限于CrossEntropyLoss和NLLLoss。由于它的简化,我们使用了CrossEntropyLoss。

在例4-9中,我们展示了数据集、模型、损失函数和优化器的实例化。这些实例应该看起来与“示例:将餐馆评论的情绪分类”中的实例几乎相同。事实上,在本课程后面的实验中,这种模式将对每个示例进行重复。

Example 4-9. Instantiating the dataset, model, loss, and optimizer

dataset = SurnameDataset.load_dataset_and_make_vectorizer(args.surname_csv)

vectorizer = dataset.get_vectorizer()

classifier = SurnameClassifier(input_dim=len(vectorizer.surname_vocab),

hidden_dim=args.hidden_dim,

output_dim=len(vectorizer.nationality_vocab))

classifier = classifier.to(args.device)

loss_func = nn.CrossEntropyLoss(dataset.class_weights)

optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate)

THE TRAINING LOOP

与“Example: Classifying Sentiment of Restaurant Reviews”中的训练循环相比,本例的训练循环除了变量名以外几乎是相同的。具体来说,示例4-10显示了使用不同的key从batch_dict中获取数据。除了外观上的差异,训练循环的功能保持不变。利用训练数据,计算模型输出、损失和梯度。然后,使用梯度来更新模型。

Example 4-10. A snippet of the training loop

# the training routine is these 5 steps:

# --------------------------------------

# step 1. zero the gradients

optimizer.zero_grad()

# step 2. compute the output

y_pred = classifier(batch_dict['x_surname'])

# step 3. compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_batch = loss.to("cpu").item()

running_loss += (loss_batch - running_loss) / (batch_index + 1)

# step 4. use loss to produce gradients

loss.backward()

# step 5. use optimizer to take gradient step

optimizer.step()

2.5 Model Evaluation and Prediction

要理解模型的性能,应该使用定量和定性方法分析模型。定量测量出的测试数据的误差,决定了分类器能否推广到不可见的例子。定性地说,可以通过查看分类器的top-k预测来为一个新示例开发模型所了解的内容的直觉。

2.5.1 EVALUATING ON THE TEST DATASET

评价SurnameClassifier测试数据,我们执行相同的常规的routine文本分类的例子“餐馆评论的例子:分类情绪”:我们将数据集设置为遍历测试数据,调用classifier.eval()方法,并遍历测试数据以同样的方式与其他数据。在这个例子中,调用classifier.eval()可以防止PyTorch在使用测试/评估数据时更新模型参数。

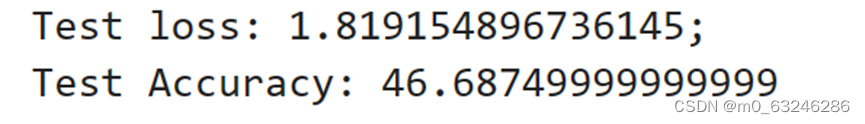

该模型对测试数据的准确性达到50%左右。如果在附带的notebook中运行训练例程,会注意到在训练数据上的性能更高。这是因为模型总是更适合它所训练的数据,所以训练数据的性能并不代表新数据的性能。如果遵循代码,你可以尝试隐藏维度的不同大小,应该注意到性能的提高。然而,这种增长不会很大(尤其是与“用CNN对姓氏进行分类的例子”中的模型相比)。其主要原因是收缩的onehot向量化方法是一种弱表示。虽然它确实简洁地将每个姓氏表示为单个向量,但它丢弃了字符之间的顺序信息,这对于识别起源非常重要。

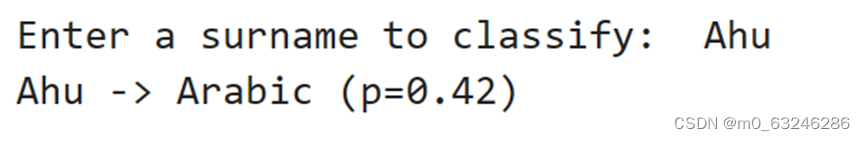

2.5.2 CLASSIFYING A NEW SURNAME

示例4-11显示了分类新姓氏的代码。给定一个姓氏作为字符串,该函数将首先应用向量化过程,然后获得模型预测。注意,我们包含了apply_softmax标志,所以结果包含概率。模型预测,在多项式的情况下,是类概率的列表。我们使用PyTorch张量最大函数来得到由最高预测概率表示的最优类。

Example 4-11. A function for performing nationality prediction

def predict_nationality(name, classifier, vectorizer):

vectorized_name = vectorizer.vectorize(name)

vectorized_name = torch.tensor(vectorized_name).view(1, -1)

result = classifier(vectorized_name, apply_softmax=True)

probability_values, indices = result.max(dim=1)

index = indices.item()

predicted_nationality = vectorizer.nationality_vocab.lookup_index(index)

probability_value = probability_values.item()

return {'nationality': predicted_nationality,

'probability': probability_value}

2.5.3 RETRIEVING THE TOP-K PREDICTIONS FOR A NEW SURNAME

不仅要看最好的预测,还要看更多的预测。例如,NLP中的标准实践是采用k-best预测并使用另一个模型对它们重新排序。PyTorch提供了一个torch.topk函数,它提供了一种方便的方法来获得这些预测,如示例4-12所示。

Example 4-12. Predicting the top-k nationalities

def predict_topk_nationality(name, classifier, vectorizer, k=5):

vectorized_name = vectorizer.vectorize(name)

vectorized_name = torch.tensor(vectorized_name).view(1, -1)

prediction_vector = classifier(vectorized_name, apply_softmax=True)

probability_values, indices = torch.topk(prediction_vector, k=k)

# returned size is 1,k

probability_values = probability_values.detach().numpy()[0]

indices = indices.detach().numpy()[0]

results = []

for prob_value, index in zip(probability_values, indices):

nationality = vectorizer.nationality_vocab.lookup_index(index)

results.append({'nationality': nationality,

'probability': prob_value})

return results

2.5.6 Regularizing MLPs: Weight Regularization and Structural Regularization (or Dropout)

在实验3中,我们解释了正则化是如何解决过拟合问题的,并研究了两种重要的权重正则化类型——L1和L2。这些权值正则化方法也适用于MLPs和卷积神经网络,我们将在本实验后面介绍。除权值正则化外,对于深度模型(即例如本实验讨论的前馈网络,一种称为dropout的结构正则化方法变得非常重要。

DROPOUT

简单地说,在训练过程中,dropout有一定概率使属于两个相邻层的单元之间的连接减弱。这有什么用呢?我们从斯蒂芬•梅里蒂(Stephen Merity)的一段直观(且幽默)的解释开始:“Dropout,简单地说,是指如果你能在喝醉的时候反复学习如何做一件事,那么你应该能够在清醒的时候做得更好。这一见解产生了许多最先进的结果和一个新兴的领域。”

神经网络——尤其是具有大量分层的深层网络——可以在单元之间创建有趣的相互适应。“Coadaptation”是神经科学中的一个术语,但在这里它只是指一种情况,即两个单元之间的联系变得过于紧密,而牺牲了其他单元之间的联系。这通常会导致模型与数据过拟合。通过概率地丢弃单元之间的连接,我们可以确保没有一个单元总是依赖于另一个单元,从而产生健壮的模型。dropout不会向模型中添加额外的参数,但是需要一个超参数——“drop probability”。drop probability,它是单位之间的连接drop的概率。通常将下降概率设置为0.5。例4-13给出了一个带dropout的MLP的重新实现。

Example 4-13. MLP with dropout

import torch.nn as nn

import torch.nn.functional as F

class MultilayerPerceptron(nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim):

"""

Args:

input_dim (int): the size of the input vectors

hidden_dim (int): the output size of the first Linear layer

output_dim (int): the output size of the second Linear layer

"""

super(MultilayerPerceptron, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x_in, apply_softmax=False):

"""The forward pass of the MLP

Args:

x_in (torch.Tensor): an input data tensor.

x_in.shape should be (batch, input_dim)

apply_softmax (bool): a flag for the softmax activation

should be false if used with the Cross Entropy losses

Returns:

the resulting tensor. tensor.shape should be (batch, output_dim)

"""

intermediate = F.relu(self.fc1(x_in))

output = self.fc2(F.dropout(intermediate, p=0.5))

if apply_softmax:

output = F.softmax(output, dim=1)

return output

batch_size = 2 # number of samples input at once

input_dim = 3

hidden_dim = 100

output_dim = 4

# Initialize model

mlp = MultilayerPerceptron(input_dim, hidden_dim, output_dim)

print(mlp)

y_output = mlp(x_input, apply_softmax=False)

describe(y_output)

上述代码运行结果:

请注意,dropout只适用于训练期间,不适用于评估期间。

3.完整实验代码:

from argparse import Namespace

from collections import Counter

import json

import os

import string

import numpy as np

import pandas as pd

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torch.utils.data import Dataset, DataLoader

from tqdm import tqdm_notebook

class Vocabulary(object):

"""Class to process text and extract vocabulary for mapping"""

def __init__(self, token_to_idx=None, add_unk=True, unk_token="<UNK>"):

"""

Args:

token_to_idx (dict): a pre-existing map of tokens to indices

add_unk (bool): a flag that indicates whether to add the UNK token

unk_token (str): the UNK token to add into the Vocabulary

"""

if token_to_idx is None:

token_to_idx = {}

self._token_to_idx = token_to_idx

self._idx_to_token = {idx: token

for token, idx in self._token_to_idx.items()}

self._add_unk = add_unk

self._unk_token = unk_token

self.unk_index = -1

if add_unk:

self.unk_index = self.add_token(unk_token)

def to_serializable(self):

""" returns a dictionary that can be serialized """

return {'token_to_idx': self._token_to_idx,

'add_unk': self._add_unk,

'unk_token': self._unk_token}

@classmethod

def from_serializable(cls, contents):

""" instantiates the Vocabulary from a serialized dictionary """

return cls(**contents)

def add_token(self, token):

"""Update mapping dicts based on the token.

Args:

token (str): the item to add into the Vocabulary

Returns:

index (int): the integer corresponding to the token

"""

try:

index = self._token_to_idx[token]

except KeyError:

index = len(self._token_to_idx)

self._token_to_idx[token] = index

self._idx_to_token[index] = token

return index

def add_many(self, tokens):

"""Add a list of tokens into the Vocabulary

Args:

tokens (list): a list of string tokens

Returns:

indices (list): a list of indices corresponding to the tokens

"""

return [self.add_token(token) for token in tokens]

def lookup_token(self, token):

"""Retrieve the index associated with the token

or the UNK index if token isn't present.

Args:

token (str): the token to look up

Returns:

index (int): the index corresponding to the token

Notes:

`unk_index` needs to be >=0 (having been added into the Vocabulary)

for the UNK functionality

"""

if self.unk_index >= 0:

return self._token_to_idx.get(token, self.unk_index)

else:

return self._token_to_idx[token]

def lookup_index(self, index):

"""Return the token associated with the index

Args:

index (int): the index to look up

Returns:

token (str): the token corresponding to the index

Raises:

KeyError: if the index is not in the Vocabulary

"""

if index not in self._idx_to_token:

raise KeyError("the index (%d) is not in the Vocabulary" % index)

return self._idx_to_token[index]

def __str__(self):

return "<Vocabulary(size=%d)>" % len(self)

def __len__(self):

return len(self._token_to_idx)

class SurnameVectorizer(object):

""" The Vectorizer which coordinates the Vocabularies and puts them to use"""

def __init__(self, surname_vocab, nationality_vocab):

"""

Args:

surname_vocab (Vocabulary): maps characters to integers

nationality_vocab (Vocabulary): maps nationalities to integers

"""

self.surname_vocab = surname_vocab

self.nationality_vocab = nationality_vocab

def vectorize(self, surname):

"""

Args:

surname (str): the surname

Returns:

one_hot (np.ndarray): a collapsed one-hot encoding

"""

vocab = self.surname_vocab

one_hot = np.zeros(len(vocab), dtype=np.float32)

for token in surname:

one_hot[vocab.lookup_token(token)] = 1

return one_hot

@classmethod

def from_dataframe(cls, surname_df):

"""Instantiate the vectorizer from the dataset dataframe

Args:

surname_df (pandas.DataFrame): the surnames dataset

Returns:

an instance of the SurnameVectorizer

"""

surname_vocab = Vocabulary(unk_token="@")

nationality_vocab = Vocabulary(add_unk=False)

for index, row in surname_df.iterrows():

for letter in row.surname:

surname_vocab.add_token(letter)

nationality_vocab.add_token(row.nationality)

return cls(surname_vocab, nationality_vocab)

@classmethod

def from_serializable(cls, contents):

surname_vocab = Vocabulary.from_serializable(contents['surname_vocab'])

nationality_vocab = Vocabulary.from_serializable(contents['nationality_vocab'])

return cls(surname_vocab=surname_vocab, nationality_vocab=nationality_vocab)

def to_serializable(self):

return {'surname_vocab': self.surname_vocab.to_serializable(),

'nationality_vocab': self.nationality_vocab.to_serializable()}

class SurnameDataset(Dataset):

def __init__(self, surname_df, vectorizer):

"""

Args:

surname_df (pandas.DataFrame): the dataset

vectorizer (SurnameVectorizer): vectorizer instatiated from dataset

"""

self.surname_df = surname_df

self._vectorizer = vectorizer

self.train_df = self.surname_df[self.surname_df.split=='train']

self.train_size = len(self.train_df)

self.val_df = self.surname_df[self.surname_df.split=='val']

self.validation_size = len(self.val_df)

self.test_df = self.surname_df[self.surname_df.split=='test']

self.test_size = len(self.test_df)

self._lookup_dict = {'train': (self.train_df, self.train_size),

'val': (self.val_df, self.validation_size),

'test': (self.test_df, self.test_size)}

self.set_split('train')

# Class weights

class_counts = surname_df.nationality.value_counts().to_dict()

def sort_key(item):

return self._vectorizer.nationality_vocab.lookup_token(item[0])

sorted_counts = sorted(class_counts.items(), key=sort_key)

frequencies = [count for _, count in sorted_counts]

self.class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)

@classmethod

def load_dataset_and_make_vectorizer(cls, surname_csv):

"""Load dataset and make a new vectorizer from scratch

Args:

surname_csv (str): location of the dataset

Returns:

an instance of SurnameDataset

"""

surname_df = pd.read_csv(surname_csv)

train_surname_df = surname_df[surname_df.split=='train']

return cls(surname_df, SurnameVectorizer.from_dataframe(train_surname_df))

@classmethod

def load_dataset_and_load_vectorizer(cls, surname_csv, vectorizer_filepath):

"""Load dataset and the corresponding vectorizer.

Used in the case in the vectorizer has been cached for re-use

Args:

surname_csv (str): location of the dataset

vectorizer_filepath (str): location of the saved vectorizer

Returns:

an instance of SurnameDataset

"""

surname_df = pd.read_csv(surname_csv)

vectorizer = cls.load_vectorizer_only(vectorizer_filepath)

return cls(surname_df, vectorizer)

@staticmethod

def load_vectorizer_only(vectorizer_filepath):

"""a static method for loading the vectorizer from file

Args:

vectorizer_filepath (str): the location of the serialized vectorizer

Returns:

an instance of SurnameVectorizer

"""

with open(vectorizer_filepath) as fp:

return SurnameVectorizer.from_serializable(json.load(fp))

def save_vectorizer(self, vectorizer_filepath):

"""saves the vectorizer to disk using json

Args:

vectorizer_filepath (str): the location to save the vectorizer

"""

with open(vectorizer_filepath, "w") as fp:

json.dump(self._vectorizer.to_serializable(), fp)

def get_vectorizer(self):

""" returns the vectorizer """

return self._vectorizer

def set_split(self, split="train"):

""" selects the splits in the dataset using a column in the dataframe """

self._target_split = split

self._target_df, self._target_size = self._lookup_dict[split]

def __len__(self):

return self._target_size

def __getitem__(self, index):

"""the primary entry point method for PyTorch datasets

Args:

index (int): the index to the data point

Returns:

a dictionary holding the data point's:

features (x_surname)

label (y_nationality)

"""

row = self._target_df.iloc[index]

surname_vector = \

self._vectorizer.vectorize(row.surname)

nationality_index = \

self._vectorizer.nationality_vocab.lookup_token(row.nationality)

return {'x_surname': surname_vector,

'y_nationality': nationality_index}

def get_num_batches(self, batch_size):

"""Given a batch size, return the number of batches in the dataset

Args:

batch_size (int)

Returns:

number of batches in the dataset

"""

return len(self) // batch_size

def generate_batches(dataset, batch_size, shuffle=True,

drop_last=True, device="cpu"):

"""

A generator function which wraps the PyTorch DataLoader. It will

ensure each tensor is on the write device location.

"""

dataloader = DataLoader(dataset=dataset, batch_size=batch_size,

shuffle=shuffle, drop_last=drop_last)

for data_dict in dataloader:

out_data_dict = {}

for name, tensor in data_dict.items():

out_data_dict[name] = data_dict[name].to(device)

yield out_data_dict

class SurnameClassifier(nn.Module):

""" A 2-layer Multilayer Perceptron for classifying surnames """

def __init__(self, input_dim, hidden_dim, output_dim):

"""

Args:

input_dim (int): the size of the input vectors

hidden_dim (int): the output size of the first Linear layer

output_dim (int): the output size of the second Linear layer

"""

super(SurnameClassifier, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x_in, apply_softmax=False):

"""The forward pass of the classifier

Args:

x_in (torch.Tensor): an input data tensor.

x_in.shape should be (batch, input_dim)

apply_softmax (bool): a flag for the softmax activation

should be false if used with the Cross Entropy losses

Returns:

the resulting tensor. tensor.shape should be (batch, output_dim)

"""

intermediate_vector = F.relu(self.fc1(x_in))

prediction_vector = self.fc2(intermediate_vector)

if apply_softmax:

prediction_vector = F.softmax(prediction_vector, dim=1)

return prediction_vector

def make_train_state(args):

return {'stop_early': False,

'early_stopping_step': 0,

'early_stopping_best_val': 1e8,

'learning_rate': args.learning_rate,

'epoch_index': 0,

'train_loss': [],

'train_acc': [],

'val_loss': [],

'val_acc': [],

'test_loss': -1,

'test_acc': -1,

'model_filename': args.model_state_file}

def update_train_state(args, model, train_state):

"""Handle the training state updates.

Components:

- Early Stopping: Prevent overfitting.

- Model Checkpoint: Model is saved if the model is better

:param args: main arguments

:param model: model to train

:param train_state: a dictionary representing the training state values

:returns:

a new train_state

"""

# Save one model at least

if train_state['epoch_index'] == 0:

torch.save(model.state_dict(), train_state['model_filename'])

train_state['stop_early'] = False

# Save model if performance improved

elif train_state['epoch_index'] >= 1:

loss_tm1, loss_t = train_state['val_loss'][-2:]

# If loss worsened

if loss_t >= train_state['early_stopping_best_val']:

# Update step

train_state['early_stopping_step'] += 1

# Loss decreased

else:

# Save the best model

if loss_t < train_state['early_stopping_best_val']:

torch.save(model.state_dict(), train_state['model_filename'])

# Reset early stopping step

train_state['early_stopping_step'] = 0

# Stop early ?

train_state['stop_early'] = \

train_state['early_stopping_step'] >= args.early_stopping_criteria

return train_state

def compute_accuracy(y_pred, y_target):

_, y_pred_indices = y_pred.max(dim=1)

n_correct = torch.eq(y_pred_indices, y_target).sum().item()

return n_correct / len(y_pred_indices) * 100

def set_seed_everywhere(seed, cuda):

np.random.seed(seed)

torch.manual_seed(seed)

if cuda:

torch.cuda.manual_seed_all(seed)

def handle_dirs(dirpath):

if not os.path.exists(dirpath):

os.makedirs(dirpath)

args = Namespace(

# Data and path information

surname_csv="/home/jovyan/surnames_with_splits.csv",

vectorizer_file="vectorizer.json",

model_state_file="model.pth",

save_dir="model_storage/ch4/surname_mlp",

# Model hyper parameters

hidden_dim=300,

# Training hyper parameters

seed=1337,

num_epochs=100,

early_stopping_criteria=5,

learning_rate=0.001,

batch_size=64,

# Runtime options

cuda=False,

reload_from_files=False,

expand_filepaths_to_save_dir=True,

)

if args.expand_filepaths_to_save_dir:

args.vectorizer_file = os.path.join(args.save_dir,

args.vectorizer_file)

args.model_state_file = os.path.join(args.save_dir,

args.model_state_file)

print("Expanded filepaths: ")

print("\t{}".format(args.vectorizer_file))

print("\t{}".format(args.model_state_file))

# Check CUDA

if not torch.cuda.is_available():

args.cuda = False

args.device = torch.device("cuda" if args.cuda else "cpu")

print("Using CUDA: {}".format(args.cuda))

# Set seed for reproducibility

set_seed_everywhere(args.seed, args.cuda)

# handle dirs

handle_dirs(args.save_dir)

if args.reload_from_files:

# training from a checkpoint

print("Reloading!")

dataset = SurnameDataset.load_dataset_and_load_vectorizer(args.surname_csv,

args.vectorizer_file)

else:

# create dataset and vectorizer

print("Creating fresh!")

dataset = SurnameDataset.load_dataset_and_make_vectorizer(args.surname_csv)

dataset.save_vectorizer(args.vectorizer_file)

vectorizer = dataset.get_vectorizer()

classifier = SurnameClassifier(input_dim=len(vectorizer.surname_vocab),

hidden_dim=args.hidden_dim,

output_dim=len(vectorizer.nationality_vocab))

classifier = classifier.to(args.device)

dataset.class_weights = dataset.class_weights.to(args.device)

loss_func = nn.CrossEntropyLoss(dataset.class_weights)

optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate)

scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer=optimizer,

mode='min', factor=0.5,

patience=1)

train_state = make_train_state(args)

epoch_bar = tqdm_notebook(desc='training routine',

total=args.num_epochs,

position=0)

dataset.set_split('train')

train_bar = tqdm_notebook(desc='split=train',

total=dataset.get_num_batches(args.batch_size),

position=1,

leave=True)

dataset.set_split('val')

val_bar = tqdm_notebook(desc='split=val',

total=dataset.get_num_batches(args.batch_size),

position=1,

leave=True)

try:

for epoch_index in range(args.num_epochs):

train_state['epoch_index'] = epoch_index

# Iterate over training dataset

# setup: batch generator, set loss and acc to 0, set train mode on

dataset.set_split('train')

batch_generator = generate_batches(dataset,

batch_size=args.batch_size,

device=args.device)

running_loss = 0.0

running_acc = 0.0

classifier.train()

for batch_index, batch_dict in enumerate(batch_generator):

# the training routine is these 5 steps:

# --------------------------------------

# step 1. zero the gradients

optimizer.zero_grad()

# step 2. compute the output

y_pred = classifier(batch_dict['x_surname'])

# step 3. compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# step 4. use loss to produce gradients

loss.backward()

# step 5. use optimizer to take gradient step

optimizer.step()

# -----------------------------------------

# compute the accuracy

acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])

running_acc += (acc_t - running_acc) / (batch_index + 1)

# update bar

train_bar.set_postfix(loss=running_loss, acc=running_acc,

epoch=epoch_index)

train_bar.update()

train_state['train_loss'].append(running_loss)

train_state['train_acc'].append(running_acc)

# Iterate over val dataset

# setup: batch generator, set loss and acc to 0; set eval mode on

dataset.set_split('val')

batch_generator = generate_batches(dataset,

batch_size=args.batch_size,

device=args.device)

running_loss = 0.

running_acc = 0.

classifier.eval()

for batch_index, batch_dict in enumerate(batch_generator):

# compute the output

y_pred = classifier(batch_dict['x_surname'])

# step 3. compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.to("cpu").item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# compute the accuracy

acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])

running_acc += (acc_t - running_acc) / (batch_index + 1)

val_bar.set_postfix(loss=running_loss, acc=running_acc,

epoch=epoch_index)

val_bar.update()

train_state['val_loss'].append(running_loss)

train_state['val_acc'].append(running_acc)

train_state = update_train_state(args=args, model=classifier,

train_state=train_state)

scheduler.step(train_state['val_loss'][-1])

if train_state['stop_early']:

break

train_bar.n = 0

val_bar.n = 0

epoch_bar.update()

except KeyboardInterrupt:

print("Exiting loop")

# compute the loss & accuracy on the test set using the best available model

classifier.load_state_dict(torch.load(train_state['model_filename']))

classifier = classifier.to(args.device)

dataset.class_weights = dataset.class_weights.to(args.device)

loss_func = nn.CrossEntropyLoss(dataset.class_weights)

dataset.set_split('test')

batch_generator = generate_batches(dataset,

batch_size=args.batch_size,

device=args.device)

running_loss = 0.

running_acc = 0.

classifier.eval()

for batch_index, batch_dict in enumerate(batch_generator):

# compute the output

y_pred = classifier(batch_dict['x_surname'])

# compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# compute the accuracy

acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])

running_acc += (acc_t - running_acc) / (batch_index + 1)

train_state['test_loss'] = running_loss

train_state['test_acc'] = running_acc

print("Test loss: {};".format(train_state['test_loss']))

print("Test Accuracy: {}".format(train_state['test_acc']))

输出结果:

def predict_nationality(surname, classifier, vectorizer):

"""Predict the nationality from a new surname

Args:

surname (str): the surname to classifier

classifier (SurnameClassifer): an instance of the classifier

vectorizer (SurnameVectorizer): the corresponding vectorizer

Returns:

a dictionary with the most likely nationality and its probability

"""

vectorized_surname = vectorizer.vectorize(surname)

vectorized_surname = torch.tensor(vectorized_surname).view(1, -1)

result = classifier(vectorized_surname, apply_softmax=True)

probability_values, indices = result.max(dim=1)

index = indices.item()

predicted_nationality = vectorizer.nationality_vocab.lookup_index(index)

probability_value = probability_values.item()

return {'nationality': predicted_nationality, 'probability': probability_value}

new_surname = input("Enter a surname to classify: ")

classifier = classifier.to("cpu")

prediction = predict_nationality(new_surname, classifier, vectorizer)

print("{} -> {} (p={:0.2f})".format(new_surname,

prediction['nationality'],

prediction['probability']))

输出结果:

vectorizer.nationality_vocab.lookup_index(8)

'Irish'

def predict_topk_nationality(name, classifier, vectorizer, k=5):

vectorized_name = vectorizer.vectorize(name) # 使用vectorizer对名字进行向量化

vectorized_name = torch.tensor(vectorized_name).view(1, -1)

prediction_vector = classifier(vectorized_name, apply_softmax=True) # 使用分类器对名字进行预测,得到预测向量,并应用softmax函数得到概率分布

probability_values, indices = torch.topk(prediction_vector, k=k) # 从预测向量中找到概率最高的k个值和对应的索引

# returned size is 1,k

probability_values = probability_values.detach().numpy()[0] # 将结果从PyTorch张量转换为NumPy数组,并调整形状

indices = indices.detach().numpy()[0]

results = []

for prob_value, index in zip(probability_values, indices):

nationality = vectorizer.nationality_vocab.lookup_index(index)

results.append({'nationality': nationality,

'probability': prob_value})# 遍历概率值和索引,查找对应的国籍,并将国籍和概率值添加到结果列表中

return results

new_surname = input("Enter a surname to classify: ")

classifier = classifier.to("cpu")

k = int(input("How many of the top predictions to see? "))

if k > len(vectorizer.nationality_vocab):

print("Sorry! That's more than the # of nationalities we have.. defaulting you to max size :)")

k = len(vectorizer.nationality_vocab)# 如果k大于国籍词汇表的大小,则将k设置为词汇表的大小,并打印警告消息

predictions = predict_topk_nationality(new_surname, classifier, vectorizer, k=k)

print("Top {} predictions:".format(k))

print("===================")

for prediction in predictions:

print("{} -> {} (p={:0.2f})".format(new_surname,

prediction['nationality'],

prediction['probability']))

输出结果:

4.补充:如何得到一个以便用于机器学习模型的训练、验证和测试的姓氏数据集

import collections

import numpy as np

import pandas as pd

import re

from argparse import Namespace

args = Namespace(

raw_dataset_csv="/home/jovyan/surnames.csv",

train_proportion=0.7,

val_proportion=0.15,

test_proportion=0.15,

output_munged_csv="/home/jovyan/surnames_with_splits.csv",

seed=1337

)

# Read raw data

surnames = pd.read_csv(args.raw_dataset_csv, header=0)

surnames.head()

# Unique classes

set(surnames.nationality)

{'Arabic', 'Chinese', 'Czech', 'Dutch', 'English', 'French', 'German', 'Greek', 'Irish', 'Italian', 'Japanese', 'Korean', 'Polish', 'Portuguese', 'Russian', 'Scottish', 'Spanish', 'Vietnamese'}

# Splitting train by nationality

# Create dict

by_nationality = collections.defaultdict(list)

for _, row in surnames.iterrows():

by_nationality[row.nationality].append(row.to_dict())

# Create split data

final_list = []

np.random.seed(args.seed)# 设置随机数种子,以确保每次运行代码时数据的分割方式都是一致的

for _, item_list in sorted(by_nationality.items()):

# 遍历按国籍分类后的数据集

np.random.shuffle(item_list)# 将当前国籍的数据打乱顺序

n = len(item_list)

n_train = int(args.train_proportion*n)

n_val = int(args.val_proportion*n)

n_test = int(args.test_proportion*n)

# Give data point a split attribute

for item in item_list[:n_train]:

item['split'] = 'train'

for item in item_list[n_train:n_train+n_val]:

item['split'] = 'val'

for item in item_list[n_train+n_val:]:

item['split'] = 'test'

# Add to final list

final_list.extend(item_list) # 将当前国籍的所有数据点(包括已分配的子集信息)添加到final_list中

# Write split data to file

final_surnames = pd.DataFrame(final_list)

final_surnames.split.value_counts()

final_surnames.head()

# Write munged data to CSV

final_surnames.to_csv(args.output_munged_csv, index=False)

1559

1559

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?